HDM-xut-340M-Anime

World's smallest, cheapest anime-style T2I base

Introduction

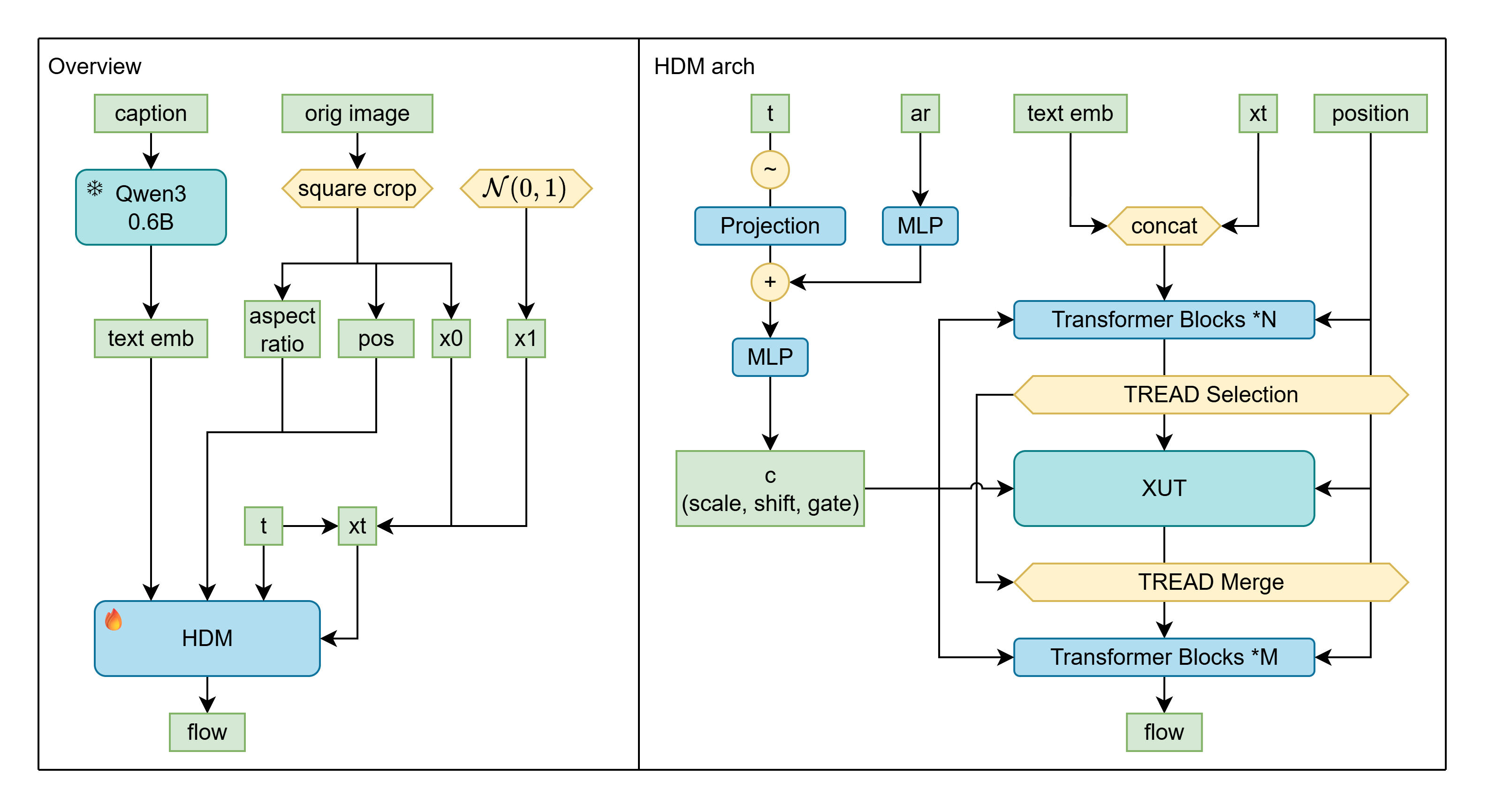

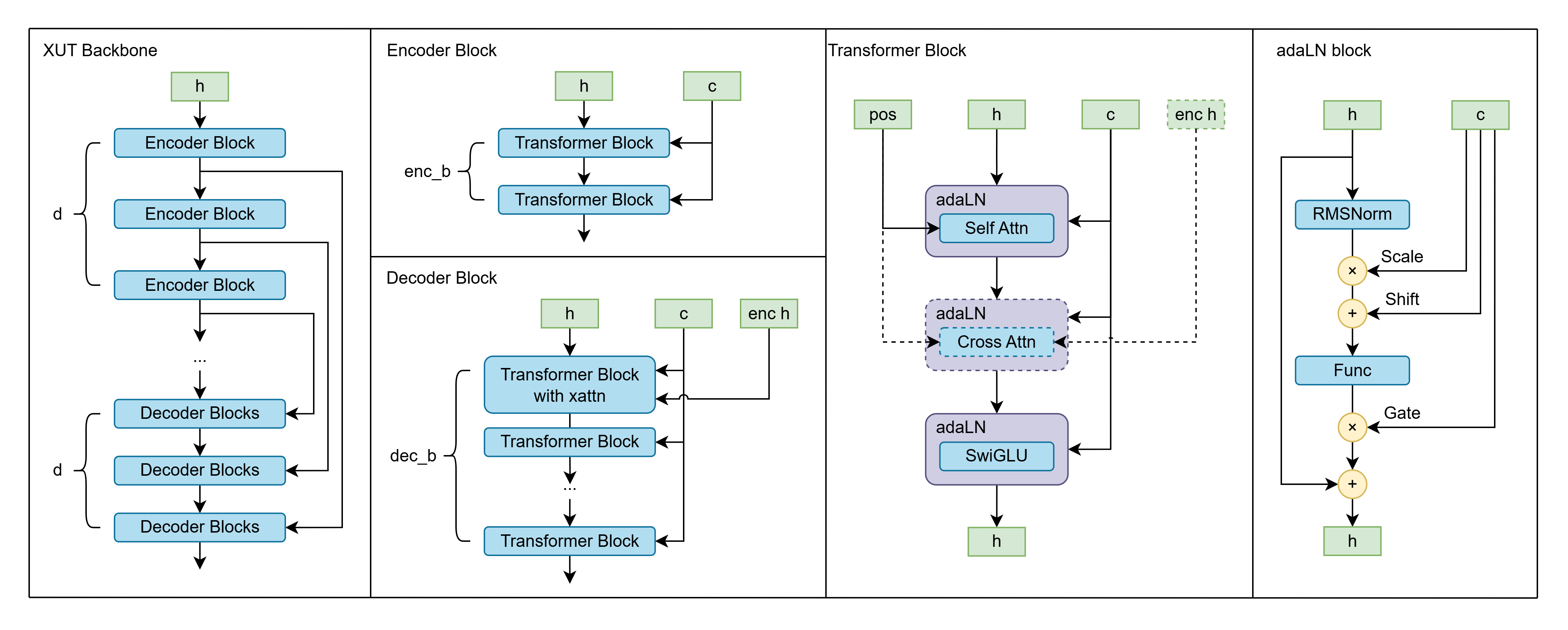

HDM (Home made Diffusion Model) is a project to investigate specialized training recipes/schemes for "pretraining a T2I model at home", which requires that the training setup be executable on consumer-level hardware or sufficiently cheap second-hand server hardware.

Under this constraint, we introduce a new transformer backbone designed for multi-modal (for example, text-to-image) generative models, called "XUT" (Cross-U-Transformer). With a minimal architecture design and the TREAD technique, we can achieve usable performance with compute that costs at most 650 USD (based on pricing from vast.ai).

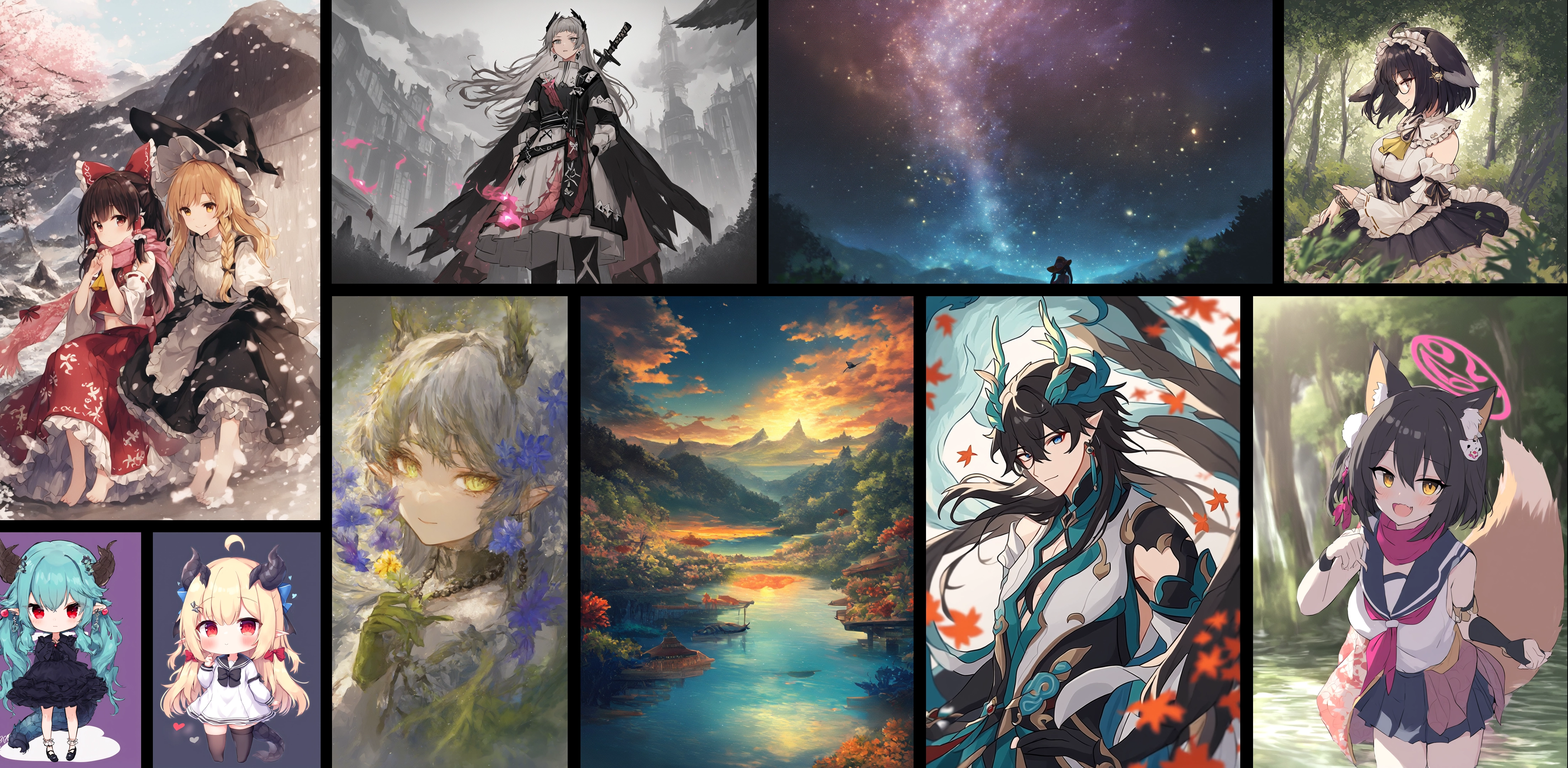

Gallery

youyo (rnna), own hands together, dragon tail, pointy ears, dated, solo, tail, blue tail, dragon girl, black dress, flower, simple background, full body, purple background, artist name, socks, twintails, earrings, closed mouth, signature, aqua hair, dress, mary janes, jewelry, hair flower, horns, shoes, standing, looking at viewer, sleeves past wrists, white background, hair ornament, red eyes, long hair, hair bobbles, black footwear,

A young girl with long blue hair and red eyes. she is wearing a black dress with ruffles on the skirt and sleeves, and black high heels. her hair is styled in two large horns on either side of her head, which are also decorated with small pink flowers. the background is white, making the girl and her outfit stand out.

masterpiece, newest, absurdres

animal, outdoors, black hair, animal ear fluff, tree, wide sleeves, nature, umbrella, yellow eyes, night, long hair, forest, fur-trimmed kimono, snow, oil-paper umbrella, snowing, fur trim, winter, solo, oversized animal, blunt bangs, fox ears, hime cut, wooden bridge, looking at viewer, scenery, red kimono, kimono, fox, long sleeves, fox girl, animal ears, japanese clothes, straight hair, bridge, bare tree,

The image, created by the artist dan-98, depicts a serene winter scene from the Azur Lane series. It features two characters standing on a wooden bridge that spans over a snowy forest. One character is holding an oil-paper umbrella with a red and white pattern, providing shelter to both figures. The backdrop of bare trees and falling snowflakes enhances the tranquil atmosphere of this picturesque winter landscape.

masterpiece, newest, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 913639157822666, Size: 1360x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU
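Each gallery entry ends with a settings line in the `Key: value, Key: value` form shown above. To reproduce an image it can help to read such a line into structured values. The following is a minimal sketch, not part of the HDM codebase: the helper name `parse_generation_settings` is hypothetical, `Size` is assumed to be width x height, and the `Version` field is left out of the example because it appears truncated in this page.

```python
def parse_generation_settings(line: str) -> dict:
    """Split a 'Key: value, Key: value' settings line into a dict.

    Hypothetical helper for illustration; assumes values contain no ', '.
    """
    settings = {}
    for part in line.split(", "):
        key, _, value = part.partition(": ")
        settings[key.strip()] = value.strip()

    # Convert the fields that are numeric in the gallery entries.
    settings["Steps"] = int(settings["Steps"])
    settings["CFG Scale"] = float(settings["CFG Scale"])
    settings["Seed"] = int(settings["Seed"])

    # Assumption: Size is written as <width>x<height>.
    width, height = settings["Size"].split("x")
    settings["Size"] = (int(width), int(height))
    return settings


# Settings copied from the gallery entry above (Version field omitted).
example = (
    "Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, "
    "Seed: 913639157822666, Size: 1360x1024, "
    "Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note"
)

if __name__ == "__main__":
    for key, value in parse_generation_settings(example).items():
        print(f"{key}: {value!r}")
```

The parsed values can then be passed to whichever sampler front end is in use. The mapping from these keys to that front end's own parameter names is not specified here.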

blurry foreground, galaxy, outdoors, magic circle, scenery, standing, blue hat, mountain, long hair, depth of field, bug, black hair, fireflies, starry sky, blurry, hat, night sky, solo, tree, comet, firefly, mountainous horizon, witch hat, sky, star (sky), grass, night, from behind, twintails, witch, wide shot, nature, blue theme, forest, magic, grasslands,

This enchanting image by the artist zi13591 depicts a mystical night scene from the series "Genshin Impact. She is elegantly dressed in a flowing gown and wears a wide-brimmed hat, adding an air of mystery to her persona. The background features a breathtaking starry sky with swirling patterns and celestial elements, creating a dreamlike atmosphere. The scene is further enhanced by the presence of fireflies and a gentle glow that illuminates the forest below, highlighting the depth and vastness of the night.

masterpiece, newest, chinese commentary, commentary, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 77551003993312, Size: 1536x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 303199109673130, Size: 1024x1360, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: low quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 205282829445317, Size: 1024x1536, Model hash: f982ead8e5, Model: hdm-xut-340M-1024px-note, Version: ComfyU

bow, black hat, wide sleeves, tree, scarf, juliet sleeves, scenery, blunt bangs, shared clothes, torii, skirt, barefoot, yellow ascot, white apron, midriff peek, ascot, mountain, ribbon trim, snow, skirt set, puffy sleeves, red skirt, pink scarf, shared scarf, petals, hair bow, black dress, sky, waist apron, sitting, detached sleeves, outdoors, hat, hair tubes, hand up, white bow, red bow, long sleeves, wide shot, witch hat, ribbon-trimmed sleeves, dress, petticoat, blonde hair, looking at viewer, day, brown hair, sweater, brown eyes, sweater dress, apron, cherry blossoms, sitting on torii, snowflake print, floral print, hat bow, long hair,

This image is a beautifully detailed illustration by the artist ke-ta, known for their intricate and expressive style. The artwork features two characters from the series "Hidden Star in Four Seasons" of the Touhou project, set against a picturesque backdrop of cherry blossom trees and snowy mountains. The scene is serene yet captivating, with the cherry blossoms adding a touch of natural beauty to the composition. The characters' interactions and the harmonious setting create a sense of camaraderie and tranquility, showcasing ke-ta's skill in character design and storytelling within the Touhou universe.

masterpiece, newest, doujinshi, scan, translation request, absurdres

bokeh, depth of field, lens flare, (artist:wlop:0.9090909090909091), (artist:WANKE:0.9090909090909091), (artist:ciloranko:0.9090909090909091), backlighting, chiaroscuro, vignetting, caustics, (artist:amashiro.natsuki:0.9090909090909091), smiling, chromatic aberration, white flowers, from above, bird's-eye view, vintage filter, among flowers, limited palette, white, field s of flowers, amazing quality, absurdres

masterpiece of a fantasy world, adding to the whimsical atmosphere of the scene., with a young girl standing in the center of the image surrounded by a field of white flowers. she is wearing a long dress and has blonde hair tied up in a bun. there are also several birds flying around her, green, and yellow., with shades of blue, adding to the whimsical and dreamlike feel of the painting. the overall color palette is soft and muted,

This image is a captivating piece by the artist hiten (hitenkei), known for their whimsical and ethereal style. The composition features a character from the series "Cevio Synthesizer V," standing amidst a field of vibrant flowers that glow with an otherworldly light, creating a dreamlike atmosphere. The scene is further enhanced by the use of color gradients, giving it a surreal quality, making it a visually striking image that blends elements of fantasy and tranquility.

best quality, very aesthetic, best quality, newest

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 162583156957958, Size: 2048x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 753488460583170, Size: 1024x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

year 2025, solo, knight, armor, full armor, plate armor, sitting, sword, weapon, cape, red cape, helmet, medieval, fantasy, rocks, outdoors, sky, clouds, dramatic, melancholy, somber, weathered, battle-worn, resting, contemplative, red light, beam of light, artistic, painting, textured, gritty, atmospheric, moody, warrior, crusader, templar, chainmail, metal armor, exhausted, battlefield, conveying a sense of sadness and despair., post-battle

masterpiece 1902. the overall mood of the image is somber and melancholic,

This image is a captivating piece by the artist Osprey, known for their detailed and emotive style. It depicts a scene from the "Battle of Troy" series, specifically from "The Lords of Chaos" which involves two knights. This artwork beautifully captures the essence of medieval warfare and the somber atmosphere that is characteristic of Osprey's style.

best quality, newest

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 83399343549266, Size: 2048x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 1006466452888165, Size: 1024x2048, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 710459885578623, Size: 1024x2048, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

twilight, indoors, reflection, classroom, school desk, solo, halo, shoes, school chair, water world, hairband, chair, scenery, long sleeves, cloud, on desk, short hair, wide shot, broken wall, sitting, stack, skirt, from behind, bag, desk, blue hair, sneakers, evening, white choker, ocean, serafuku, choker, reflective water, school bag, cloudy sky, pink hair, water, shirt, sailor collar, blunt bangs, colored inner hair, bow hairband, horizon, blue shirt, window, multicolored hair, pleated skirt, sky, backlighting, sitting on desk, school uniform, white skirt, white hairband,

The image, created by the artist onita, depicts a serene and contemplative scene set within an indoor environment. A young girl with blue hair is seated at a desk in front of a large window that offers a breathtaking view of a cloudy sky during what appears to be either sunrise or sunset. The room has a soft, warm lighting that casts gentle shadows, enhancing the tranquil atmosphere. This image is part of the "Blue Archive" series and exemplifies onita's signature style, characterized by its detailed, almost surreal elements and expressive characters.

masterpiece, newest, commentary request, absurdres

hat, holding, planet, indoors, sleeveless, plant, clock, blue eyes, watering can, white hair, vase, blue hair, birdcage, space, cage, cat, earth (planet), sitting, closed eyes, flower, dress, chromatic aberration, smile, window, hair bun, bare shoulders, single hair bun, fish tank, sleeveless dress, looking at viewer, whale shark, air bubble, red flower, closed mouth, short hair, chain, bubble, fish, blue dress, manta ray, potted plant, animal print, arm up, eel, holding watering can, underwater, goldfish, water, starfish, shark print, fish print, bubble blowing,

This vibrant anime-style illustration shows a white-haired character in an ornate red and gold kimono sitting within what appears to be an underwater scene, surrounded by colorful fish, floating bubbles, and aquatic plants. The setting features metal framework structures that create a cage-like environment, all bathed in ethereal blue-green underwater lighting that gives the entire scene a magical, dreamlike quality. The character's expression is calm and serene, adding to the tranquil atmosphere of this underwater-themed environment. This artwork is created by the artist fuzichoco, known for their detailed and imaginative style, capturing a moment of peaceful interaction between the characters in an otherworldly setting.

masterpiece, newest, commentary request, absurdres

herta (puppet) (honkai: star rail), white shirt, brown hair, ..., embarrassed, hair flower, shirt, hair over mouth, flower, purple eyes, very long hair, long hair, solo, sleepwear, scrunchie, blush, doll joints, hand in own hair, strap slip, holding, hair ornament, speech bubble, simple background, purple jacket, purple scrunchie, joints, white background, holding own hair, purple flower, jacket, upper body, hair scrunchie, holding own wrist, light brown hair, sidelocks, wrist scrunchie,

A young girl with long, straight hair. her eyes are closed and she has a peaceful expression on her face. the overall style of the illustration is anime-style.

masterpiece, newest, ai-assisted, commentary, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 684249266742885, Size: 2048x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

branch, own hands together, blunt bangs, pointy ears, very long hair, long hair, gradient hair, dress, tentacle hair, beret, purple hair, dated, white dress, two-tone hair, animal ears, upper body, signature, pink flower, hair bun, mole, own hands clasped, single side bun, light smile, hat, sidelocks, multicolored hair, virtual youtuber, mole under eye, straight hair, orange hair, smile, plaid, flower, solo, alternate costume, blue eyes, looking at viewer, white hat, closed mouth, plaid dre,

a digital illustration by the artist ninomae ina'nis (artist), known for their distinctive and detailed style. The artwork features a character from the Hololive English series, specifically Ninomae Ina'nis, who is depicted with long, dark hair adorned with pink highlights and pointed ears. She wears an alternate costume that includes animal-like ears and a beret, adding to her whimsical appearance. The background is a soft gradient of light colors, subtly accentuating the character's features and creating a serene atmosphere.

masterpiece, newest, commentary, english commentary, self-portrait, absurdres

high contrast, light leak, light particles, depth of field, bokeh, lens flare, portrait, short bangs, solo, floating, curled up, hugging earth, from side, glowing, closed eyes, dark background, simple background, stars, alternative costumes, street style costume, black hoodie, fingerless gloves, black shorts, sneakers, amazing quality, absurdres

best quality, chromatic aberration, no lineart, film grain, hoodie, shoes, gloves, blurry, shorts, hugging object, black footwear, long hair,

a captivating digital illustration by artists Jima Aruki and Toosaka Asagi, showcasing the character Hatsune Miku from the Vocaloid series. She is dressed in a black hoodie and shorts, with her long hair flowing around her as she appears to be floating or dancing. The background is dark, creating a stark contrast that highlights Miku's figure. This piece is part of the "Newest Original" series by Yellow Bullet Vocaloid project.

best quality, very aesthetic, very aesthetic, masterpiece, best quality, newest

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 277890920871419, Size: 2048x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

high contrast, light leak, light particles, depth of field, bokeh, lens flare, green hair, long hair, long neck, single ponytail, hair slicked back, disheveled hair, portrait, no bangs, solo, alternative costumes, black hoodie, fingerless gloves, smile, earrings, sidelighting, amazing quality, chromatic aberration, no lineart, film grain, black gloves, aqua theme, jewelry, looking at viewer, sidelocks, eyebrows hidden by hair, hair between eyes, hood down, hand up, light smile, gloves, close-up, blurry background, green eyes, closed mouth, hood, hoodie, blurry,

In this captivating image by the artist rolua (ciloranko), we see a close-up portrait of Hatsune Miku, a character from the Vocaloid series. She is dressed in her iconic black hoodie with red accents and fingerless gloves, adding a touch of edginess to her appearance. The background is blurred with light particles scattered around, creating a dreamy atmosphere that complements Miku's serene expression. This piece showcases rolua (ciloranko)'s distinctive style, characterized by its detailed and expressive portrayal of characters.

best quality, very aesthetic, masterpiece, best quality, newest

fukamiki kei, tail, halo, skin fang, solo, eyeshadow, medium breasts, short hair, skirt, makeup, hair ornament, pink halo, yellow eyes, fox tail, scarf, pom pom hair ornament, fox girl, river, pleated skirt, leaning forward, animal ears, fang, black hair, black skirt, one side up, nature, pink scarf, sleeveless, miniskirt, open mouth, looking at viewer, fox hair ornament, breasts, animal ear fluff, fox ears, outdoors, wading, pink eyeshadow, forest, pom pom (clothes), smile, waterfall, sunlight, white shirt, sleeveless shirt, sailor collar, shirt, tail raised, partially submerged, blush, water, cowboy shot, blue sailor collar, :d,

This image is a digital illustration by the artist masabodo, known for their detailed and vibrant style. The central character in the artwork is Izuna from the series "Blue Archive," depicted with fox-like features including pointed ears and a fluffy tail. Izuna is portrayed with her characteristic black hair adorned with pink ribbons, wearing a sailor-style outfit that includes a blue collar and skirt. The overall scene exudes a sense of tranquility and grace, capturing a moment of serene beauty in nature.

masterpiece, newest, commentary request, absurdres

scenery, barefoot, toes, long dress, collarbone, bare shoulders, outdoors, solo, sun hat, sundress, soles, animal ears, hat, long hair, closed mouth, hair between eyes, animal ear fluff, cat ears, twin braids, braid, sitting, red eyes, sunlight, dress, cat girl, fountain, plant, extra ears, looking to the side, water, white dress, feet, grey hair, crossed ankles, very long hair, plantar flexion, twintails, sleeveless dress, looking at viewer, dappled sunlight, sleeveless, blush, day, white headwear, from side, legs together, vines, stairs, pink eyewear, bare arms, full body, bare legs, low twintail,

In the image, a young girl with cat ears and fluffy tails sits gracefully in an outdoor setting. She is dressed in a light blue dress that contrasts beautifully with her hair. The background features lush greenery and plants, suggesting a serene garden or park atmosphere. The artwork is characterized by the artist fkey's detailed and vibrant style, capturing a moment of tranquility and charm.

masterpiece, newest, absurdres

walking in flowers, looking back, close-up, chromatic aberration, bokeh, caustics, chromatic aberration abuse, extremely detailed CG, depth of field, WANKE, hat with flower, white jacket, smiling, (artist:amashiro.natsuki:0.9090909090909091), long hair, messy hair, amazing quality, flower, sunlight, blurry, jacket, from behind, long sleeves, looking at viewer, closed mouth, standing, smile, water, solo focus, water drop, blue hair, afloat, hat feather, petals on liquid, white headwear, red eyes, hand up, sidelighting, petals,

a captivating digital art piece created by the artist Mika Pikazo for their "Ayatino" series, also known as "original. She wears a white jacket adorned with red accents and holds her hand near her face in a contemplative pose. The scene is set against a dark backdrop, illuminated by soft light that casts gentle shadows and highlights the floating flowers around her. The overall composition exudes a sense of calm and introspection, characteristic of Mika Pikazo's style.

best quality, very aesthetic, masterpiece, best quality, newest

rorynans, animal, antlers, black hair, blue eyes, cleavage cutout, clothing cutout, detached sleeves, dragon, eastern dragon, elbow gloves, fingerless gloves, gloves, hair over shoulder, horns, leaf, long hair, maple leaf, motion blur, mullet, pectorals, pointy ears, sleeveless robe, toned, toned male, pectoral cleavage, earrings, upper body, green horns, looking at viewer, black gloves, single bare shoulder, jewelry, red eyeliner, makeup, chinese clothes, robe, solo focus,

a stunning digital illustration by the artist ravenpulse, known for their intricate and vivid style. The central figure in this artwork is Dan Heng from the series "Honkai: Star Rail. He is dressed in traditional Chinese-inspired attire, which includes a sleeveless robe with intricate patterns and cutouts that reveal his muscular physique. His outfit also features detached sleeves and fingerless gloves, adding to the overall elegance of his appearance. The background is blurred, creating a sense of motion and focusing attention on Dan Heng as he looks directly at the viewer. The setting appears to be an eastern-themed environment with hints of autumn leaves scattered around, enhancing the visual appeal of the piece. The overall composition highlights Dan Heng's strength and poise, capturing a moment of intense focus and readiness.

masterpiece, best quality, newest

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 1031686041350569, Size: 1024x2048, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

outdoors, scenery, sitting, lens flare, winter, long hair, snow, pond, scarf, solo, porch, sun, snowing, lake, sunset, long sleeves, park bench, knees up, winter clothes, plaid scarf, black pantyhose, sky, water drop, cloud, profile, pantyhose, wooden floor, closed mouth, looking away, plaid, brown footwear, boots, mountainous horizon, from side, blush, red eyes, cloudy sky, sweater, white sweater, bench,

This image by the artist natsu (hottopeppa3390) depicts a serene winter scene at dusk. The artwork showcases a young girl with long, light-colored hair and striking red eyes seated on a wooden bench. She is dressed warmly in a white sweater and black pantyhose, her feet clad in brown boots. Her gaze is directed away from the viewer, adding an air of contemplation to the scene. The setting sun casts a soft glow over the snow-covered landscape, highlighting the tranquil lake and its reflection on the water's surface. The sky above is filled with clouds and light particles, creating a magical atmosphere. A lone figure stands in the distance, adding depth to the composition.

masterpiece, newest, resolution mismatch, md5 mismatch, photoshop (medium), commentary request, source larger, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 1010047888126740, Size: 1536x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 208730707976133, Size: 1536x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

mikoshi (user wvyd4348), fox ears, pink halo, gloves, holding gift, blurry, medium hair, gift, fox hair ornament, holding, partially fingerless gloves, blurry background, fang out, animal ears, smile, pouch, looking at viewer, black hair, one side up, valentine, closed mouth, black gloves, animal ear fluff, blush, fang, yellow eyes, :3, fox girl, halo, upper body, solo, brown hair, gift box, skin fang, fox shadow puppet, shirt, long sleeves, skirt, pink scarf, box, single glove, blurry foreground, white shirt, pleated skirt, hands up, sailor collar, blue skirt, scarf, depth of field, school uniform, heart-shaped box, serafuku, blue sailor collar,

This image is a vibrant and detailed illustration by the artist Kurohikage, showcasing a character from the series "Blue Archive. She has distinctive cat-like features, including pointed ears and fluffy ear tufts, adding to her unique charm. The background is filled with colorful elements, contributing to a lively and dynamic atmosphere that complements the character's energetic demeanor. The overall style of the artwork is highly detailed and expressive, capturing both the essence of the character and the series' aesthetic.

masterpiece, newest, commentary request, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 944013876753649, Size: 1360x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 494986375547008, Size: 1024x1536, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 1122802635229146, Size: 1024x1360, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

people, road, neon palette, city, wet, blurry, coat, limited palette, long hair, bag, umbrella, night, rain, lights, black hair, transparent umbrella, transparent, long sleeves, arm at side, looking back, scenery, blurry foreground, depth of field, dark, backpack, outdoors, crosswalk, looking at viewer, english text, street, hooded coat, bokeh, city lights, solo focus, from behind, standing, building, hood down, hood, purple theme, cowboy shot, black eyes, holding, puddle, holding umbrella,

The image by the artist catzz depicts a solitary figure standing on a rain-soaked street at night, illuminated by neon lights. She holds an umbrella in her right hand, which is raised as if caught mid-stride. Her posture suggests she is walking along the crosswalk, with the wet pavement reflecting the glow of distant traffic signals and the city's ambient light. The overall atmosphere is one of solitude and quiet introspection amidst the urban landscape.

masterpiece, newest, mixed-language commentary, commentary, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 291795535432825, Size: 1024x2048, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 293718624327641, Size: 1024x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

bokeh, depth of field, lens flare, (artist:wlop:0.9090909090909091), (artist:WANKE:0.9090909090909091), (artist:ciloranko:0.9090909090909091), backlighting, (artist:hiten:0.9090909090909091), chiaroscuro, vignetting, caustics, (artist:amashiro.natsuki:0.9090909090909091), smiling, chromatic aberration, white flowers, from above, bird's-eye view, vintage filter, among flowers, limited palette, white, field s of flowers, amazing quality, and it is a digital art piece by hiten (hitenkei), absurdres

masterpiece of the image, creating a vibrant and lively atmosphere., with a bird perched on her shoulder. the background is filled with colorful flowers and plants, making it a perfect hd wallpaper for any fan of the series., adding to the beauty of the scene. the colors are bright and vivid, making it a great choice for any fan.,

a digital artwork created by the artist hiten (hitenkei), showcasing a serene and somewhat surreal scene. At the center of the composition stands a young girl with light-colored hair, dressed in a flowing gown that seems to blend seamlessly with her surroundings. She is positioned on a stone platform, surrounded by an array of vibrant flowers and foliage in various shades of pink, purple, and green. The scene is bathed in soft backlighting, creating a sense of depth and tranquility as the girl appears to be gazing into the distance.

best quality, very aesthetic, best quality, newest

bow, black hat, wide sleeves, tree, scarf, juliet sleeves, scenery, blunt bangs, shared clothes, torii, skirt, barefoot, yellow ascot, white apron, midriff peek, ascot, mountain, ribbon trim, snow, skirt set, puffy sleeves, red skirt, pink scarf, shared scarf, petals, hair bow, black dress, sky, waist apron, sitting, detached sleeves, outdoors, hat, hair tubes, hand up, white bow, red bow, long sleeves, wide shot, witch hat, ribbon-trimmed sleeves, dress, petticoat, blonde hair, looking at viewer, day, brown hair, sweater, brown eyes, sweater dress, apron, cherry blossoms, sitting on torii, snowflake print, floral print, hat bow, winter,

a vibrant illustration by the artist Ke-ta, featuring two characters from the Touhou series. The scene is set outdoors in what appears to be a snowy landscape with cherry blossom trees and mountains in the background. On the right side of the image stands another character wearing a witch's hat adorned with a red bow and a yellow scarf tied around her waist. She is dressed in an elegant outfit, including a black dress decorated with floral patterns, and she gazes towards the viewer with a slight smile on her face.

masterpiece, newest, doujinshi, scan, translation request, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 370116235876126, Size: 1024x1536, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 565463797077543, Size: 1024x2048, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 869967301831594, Size: 1536x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

full body, anniversary, sky, outdoors, wheat, wolf ears, brown hair, smile, fur trim, sleeveless, sun glare, long hair, solo, boots, sunlight, tail, animal ears, pouch, mountainous horizon, cart, wolf tail, wolf girl, sleeveless jacket, skirt, day, scenery, nature, blush, blue sky, mountain, standing, brown footwear, looking at viewer, jacket, wind lift, red eyes, wind, wheat field, very long hair, fur-trimmed boots, cloud, cloudy sky, hand on own hip, thigh boots, looking back, forest, floating island, red skirt, fur-trimmed jacket, floating city, from behind, floating hair, thighhighs,

In this vibrant and whimsical artwork by the artist shoichi (ekakijin), a character from the series "Spice and Wolf" stands prominently in a field of golden wheat. The central figure, Holo, is depicted with her signature long brown hair cascading down her back, and she sports wolf-like ears and a tail, adding an element of fantasy to her appearance. Her red eyes sparkle as she looks back over her shoulder, smiling warmly at the viewer. The sky above is a clear blue, dotted with fluffy white clouds, creating a serene backdrop for this enchanting scene.

masterpiece, newest, official art, commentary request, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 87459800149096, Size: 1024x2048, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

earrings, jewelry, arm up, tassel, solo, bird, pink hair, bare shoulders, parted bangs, detached sleeves, pink flower, hair ornament, cherry blossoms, holding, white pantyhose, looking at viewer, necklace, forehead jewel, long hair, very long hair, hair spread out, hair stick, short sleeves, pantyhose, floating hair, closed mouth, sidelocks, neck tassel, hair rings, flower, tassel hair ornament, dress, standing, white dre, pink eyes,

In this enchanting illustration by the artist yajuu, a character from the series "Honkai: Star Rail" stands amidst a vibrant display of cherry blossoms. The character, known as Fuyu Fu Xuan (Chinese), is depicted with long, flowing pink hair adorned with intricate hair ornaments and tassels. She wears traditional Chinese attire, including a dress with detached sleeves and white pantyhose, complemented by jewelry such as necklaces and earrings. Her eyes are closed, and she has a serene expression on her face, looking directly at the viewer. Fuyu Fu Xuan is surrounded by cherry blossom branches that burst with red flowers, creating a beautiful contrast against her pink attire. The background features a soft gradient of purple hues, further enhancing the ethereal atmosphere. A bird can be seen flying in the distance, adding to the sense of tranquility and harmony with nature. The overall composition is characterized by yajuu's signature style, which combines detailed line work with vivid colors and a dreamy ambiance.

masterpiece, newest, commentary request, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 1051482950769510, Size: 1024x1360, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

full body, short hair, drop-shaped pupils, sky, oil-paper umbrella, day, dress, blue hair, blue theme, hair ornament, sitting, colored bangs, holding, white dress, symbol-shaped pupils, tree, flower, snow, tassel, solo, white hair, pumps, shoes, breasts, wide sleeves, mountain, blue pupils, heterochromia, hair flower, architecture, streaked hair, blue shoes, holding umbrella, alternate costume, blue eyes, looking at viewer, umbrella, high heels, lake, closed mouth, outdoors, bare shoulders, multicolored hair, pine tree, scenery, china dress, bare legs, pavilion, chinese clothes, medium breasts, east asian architecture, blue sky, long sleeves,

In this captivating digital illustration by the artist miao shang san xiao, a character from the series "Genshin Impact" is depicted in an ethereal setting. The central figure, identifiable as Furina (2024), is elegantly dressed in traditional Chinese attire with flowing blue and white garments that blend seamlessly with the surrounding environment. She sits gracefully on a wooden structure amidst a serene landscape of snow-covered mountains and a tranquil lake, creating an atmosphere of calm and contemplation. The scene is further enhanced by delicate cherry blossom petals falling around her, adding to the dreamy ambiance.

masterpiece, newest, safe, commentary request, absurdres

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 960573175128065, Size: 1360x1024, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 4.0, Seed: 545758812501707, Size: 1024x2048, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

red eyes, profile, grey hair, upper body, tassel, painterly, japanese clothes, animal ears, short hair, wolf ears, tokin hat, detached sleeves, hat, simple background, solo, pom pom (clothes), close-up, messy hair, closed mouth, expressionless, breasts, character name, eyelashes, from side, faux traditional media, white background, nose, turtleneck, headphone, medium breasts, looking away, shaded face, bob cut, lips, bare shoulders,

This image is a striking piece of digital art by the artist Matsuda (Matsukichi), known for their distinctive style within the Touhou series. The central figure in this portrait is Inubashiri Momiji, depicted with her signature features including short grey hair adorned with a red tassel and striking red eyes. She wears traditional Japanese attire, including a tokin hat that adds an air of mystery to her appearance. Her expression is serious and introspective as she gazes off to the side, creating a sense of depth and intrigue in the composition.

masterpiece, newest, photoshop (medium), commentary request, absurdres

bow, black hat, wide sleeves, tree, scarf, juliet sleeves, scenery, blunt bangs, shared clothes, torii, skirt, barefoot, yellow ascot, white apron, midriff peek, ascot, mountain, ribbon trim, snow, skirt set, puffy sleeves, red skirt, pink scarf, shared scarf, petals, hair bow, black dress, sky, waist apron, sitting, detached sleeves, outdoors, hat, hair tubes, hand up, white bow, red bow, long sleeves, wide shot, witch hat, ribbon-trimmed sleeves, dress, petticoat, blonde hair, looking at viewer, day, brown hair, sweater, brown eyes, sweater dress, apron, cherry blossoms, sitting on torii, snowflake print, floral print, hat bow,

This image, created by the artist Ke-ta, is a charming illustration that captures a serene winter scene. The artwork features two characters set against a picturesque backdrop of snow and cherry blossoms. The overall composition of the image is balanced, with each character and element contributing to a harmonious narrative. The use of color and detail in the illustration adds depth and warmth to the winter scene.

masterpiece, newest, doujinshi, scan, translation request, absurdres

ba gu ya rou, purple flower, dragon girl, white flower, blue flower, holding, jewelry, grey jacket, green flower, flower, light particles, off shoulder, holding flower, beads, jacket, hair intakes, long hair, horns, long sleeves, hair between eyes, solo, brown pupils, pointy ears, colored extremities, earrings, bare shoulders, dragon horns, grey hair, yellow flower, light smile, necklace, closed mouth, blonde hair, green eyes, looking at viewer,

a digital illustration by the artist yinghua syrena, known for their detailed and vibrant style. The central figure in the artwork is Shu from the series Arknights. She wears earrings and has horns protruding from her head, adding to her mystical appearance. Surrounding her are various flowers and leaves in shades of blue, purple, and green, creating a harmonious and enchanting composition that emphasizes the character's ethereal beauty.

masterpiece, newest, chinese commentary, commentary, absurdres

knight, helmet, slim, topless, mature male, solo, dark skin, muscular, bara, green trousers, cowboy shot, multicolored fire, blue fire, red fire, rainbow fire, flame magic, high contrast, realistic, 3d, faceless, depth of field, lens flare, bokeh, abs, abnormals, rainbow gradient, flaming arm, muscular male, blurry, backlighting, red eyes, faulds, dark-skinned male, fire, fireball, standing, armor,

A man in a futuristic suit with a helmet and armor. he is standing in front of a dark background with a rainbow-colored light shining down on him. the man appears to be in a battle-ready stance, ready for battle. the image has a surreal and dynamic feel to it.

masterpiece, best quality, newest

Negative prompt: unknownlow quality, worst quality, text, signature, jpeg artifacts, bad anatomy, old, early, copyright name, watermark, artist name, signature, weibo username, mosaic censoring, bar censor, censored, text, speech bubbles, doll, character doll, hair intake, realistic, 2girls, 3girls, multiple girls, crop top, cropped head, cropped, large breasts, nsfw

Steps: 35, Sampler: euler_simple, CFG Scale: 1.0, Seed: 734221260669565, Size: 1024x1536, Model hash: f7e7841239, Model: hdm-xut-340M-1024px-9kstep-note, Version: ComfyU

outdoors, scenery, sitting, lens flare, winter, long hair, snow, pond, scarf, solo, porch, sun, snowing, lake, sunset, fox girl, brown skirt, fox ears, tree, stone lantern, white socks, animal ears, japanese clothes, fox tail, kitsune, tail, red eyes, east asian architecture, stairs, full body, sunlight, haori, bare tree, shrine, black hair, from side, cloudy sky, skirt, mountain, socks, black footwear, building, cloud, animal ear fluff, looking away, railing, sky, blue scarf, lantern, architecture, closed mouth, blunt bang, long sleeve,

A traditional japanese house with a wooden porch and stairs leading up to it. the sky is filled with fluffy white clouds, creating a peaceful and serene atmosphere. on the right side of the image, there are two foxes sitting on the porch steps, facing each other. the overall color palette is soft and muted, giving the image a dreamy and whimsical feel.

masterpiece, newest, resolution mismatch, md5 mismatch, photoshop (medium), commentary request, source larger, absurdres

hat, holding, planet, indoors, sleeveless, plant, clock, blue eyes, watering can, white hair, vase, blue hair, birdcage, space, cage, cat, earth (planet), sitting, closed eyes, flower, dress, chromatic aberration, smile, window, hair bun, bare shoulders, open mouth, globe, star (symbol), potted plant, :o, cleavage, bookshelf, thighhighs, blunt bangs, single side bun, from behind, looking back, blue dress, witch hat, detached sleeves, holding flower, frills, long hair, witch, sleeveless dress, breasts, white legwear, book, blue headwear, medium breasts, bird,

In the whimsical and detailed style characteristic of the artist fuzichoco, this image portrays a fantastical scene within an ornate room. A young woman with long, flowing blue hair and matching eyes sits gracefully on the floor, her posture relaxed yet poised. She is dressed in a light-colored outfit that contrasts beautifully with the rich hues of the room around her. Above her, an intricately designed birdcage hangs from the ceiling, adding an element of absurdity and commentary to the scene. The overall composition exudes a sense of tranquility and charm, blending elements of fantasy with everyday life in fuzichoco's distinctive style.

masterpiece, newest, commentary request, absurdres

dragon-chan (momozu komamochi), bow, black bow, black thighhighs, red bow, sailor dress, ahoge, tail, black bowtie, hair bow, long sleeves, white background, blue bow, solo, simple background, blush, blonde hair, hair ornament, thighhighs, dragon girl, no shoes, heart, chibi only, asymmetrical legwear, dragon horns, dragon wings, wings, black horns, white dress, closed mouth, long hair, horns, dragon tail, pointy ears, uneven legwear, chibi, dress, bowtie, hairclip, red eye,

a charming piece of artwork created by the artist momozu komamochi, known for their whimsical and detailed style. It features an original character with long blonde hair adorned with a black bow and small horns on her head, giving her an otherworldly appearance reminiscent of a dragon girl. She is dressed in a white sailor dress that contrasts beautifully with her red eyes and the dark background behind her. The overall composition is balanced and pleasing, showcasing the artist's skill in creating endearing and visually appealing characters.

masterpiece, newest, commentary request, absurdres

sunlight, black hair, cloud, house, scenery, looking at viewer, solo, retaining wall, two side up, barefoot, pleated skirt, day, smile, alley, school uniform, skirt, blue sky, stairs, outdoors, architecture, sky, dappled sunlight, ocean, sitting, yellow eyes, bare legs, blush, long hair, tree shade, book, shade, shirt, water, tree, serafuku, blouse,

In this image by the artist Kantoku, a young girl with long black hair tied into twin ponytails is captured in an alleyway. Her gaze is directed downwards, and she has a slight on her cheeks, suggesting a moment of contemplation or shyness. The setting appears to be a quaint, somewhat cluttered street lined with old buildings, some of which show signs of age and wear. The overall atmosphere of the image is serene yet slightly melancholic, highlighting the girl's introspective mood amidst an urban environment.

masterpiece, newest, scan, photoshop (medium), absurdres

Quick Start

Read the [Usage Hint] section after installing the model.

READ IT READ IT READ IT

- Use official gradio ui: https://github.com/KohakuBlueleaf/HDM

  - Follow the README in that repository

- Use comfyui loader node: https://github.com/KohakuBlueleaf/HDM-ext

  - Install that repository as a comfyui custom node

  - Use the HDM-loader node with the provided safetensors file

- Use diffusers pipeline:

  - Install the hdm library from the github repo

    ```bash
    git clone https://github.com/KohakuBlueleaf/HDM
    cd HDM
    # fused: xformers fused swiglu/attention
    # liger: liger-kernel fused swiglu
    # tipo : tipo prompt gen system for official UI
    # win : windows specific (all windows users should add this)
    # finetune: install lycoris-lora for finetune with lycoris
    pip install -e .[tipo]
    ## For windows user: pip install -e .[win,tipo]
    ```

  - Then use the pipeline from hdm

    ```python
    import torch
    from hdm.pipeline import HDMXUTPipeline

    # Allow faster (TF32) float32 matmuls on supported GPUs
    torch.set_float32_matmul_precision("high")

    # Load the pipeline, move it to the GPU, and cast it to half precision
    pipeline = (
        HDMXUTPipeline.from_pretrained(
            "KBlueLeaf/HDM-xut-340M-anime", trust_remote_code=True
        )
        .to("cuda")
        .to(torch.float16)
    )
    images = pipeline("1girl....", "").images
    ```

Usage Hint

- Prompting:

  - Use Danbooru tags + a natural language description

    - For "tags", use Danbooru tags ONLY.

    - Write tags as a comma-separated sequence, without underscores

      - "1girl long_hair" -> "1girl, long hair"

  - Make the prompt as detailed as possible

    - TIPO is highly recommended here!

- Sampling settings:

  - CFG scale: 2~4

  - Inference steps: at least 8, works best in 16~32

  - For the 1024px model, the following resolutions (WxH) are recommended:

    - 1024xK where K >= 1024

    - WxH where W > H, W*H >= 1024*1024, H <= 1024

    - For example: 1024x1536 (2:3), 1440x800 (16:9), 1472x736 (2:1), 1280x960 (4:3)

- Don't know where to start? New to Danbooru-tag style prompting?

  - Check danbooru.donmai.us, find an image you like, and copy its tags!

  - Remember to apply the transformation mentioned above.
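The tag transformation and the resolution rules above can be sketched as two small helpers. This is a minimal illustration, not part of the HDM library: the function names `normalize_tags` and `is_recommended_resolution` are hypothetical, and the input is assumed to be a space-separated tag string as copied from Danbooru.

```python
def normalize_tags(raw: str) -> str:
    """Convert space-separated, underscore-joined tags into the
    comma-separated, underscore-free form described above.

    Caveat: tags that legitimately contain underscores (for example
    emoticon-style tags) would need special handling.
    """
    tags = [tag.replace("_", " ") for tag in raw.split()]
    return ", ".join(tags)


def is_recommended_resolution(width: int, height: int, base: int = 1024) -> bool:
    """Check a WxH size against the two rules listed for the 1024px model."""
    # Rule 1: 1024xK where K >= 1024
    if width == base and height >= base:
        return True
    # Rule 2: W > H, W*H >= 1024*1024, H <= 1024
    return width > height and width * height >= base * base and height <= base


print(normalize_tags("1girl long_hair"))
for w, h in [(1024, 1536), (1440, 800), (1472, 736), (1280, 960), (800, 800)]:
    print(f"{w}x{h}:", is_recommended_resolution(w, h))
```

All four example resolutions from the list pass the check, while a size such as 800x800 does not, because it matches neither rule.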

Example prompt format

1girl,

izuna (blue archive), blue archive,

fukamiki kei, tail, halo, skin fang, solo, eyeshadow, medium breasts, short hair, skirt, makeup, hair ornament, pink halo, yellow eyes, fox tail, scarf, pom pom hair ornament, fox girl, river, pleated skirt, leaning forward, animal ears, fang, black hair, black skirt, one side up, nature, pink scarf, sleeveless, miniskirt, open mouth, looking at viewer, fox hair ornament, breasts, animal ear fluff, fox ears, outdoors, wading, pink eyeshadow, forest, pom pom (clothes), smile, waterfall, sunlight, white shirt, sleeveless shirt, sailor collar, shirt, tail raised, partially submerged, blush, water, cowboy shot, blue sailor collar, :d,

This image is a digital illustration by the artist masabodo, known for their detailed and vibrant style. The central character in the artwork is Izuna from the series "Blue Archive," depicted with fox-like features including pointed ears and a fluffy tail. Izuna is portrayed with her characteristic black hair adorned with pink ribbons, wearing a sailor-style outfit that includes a blue collar and skirt. The overall scene exudes a sense of tranquility and grace, capturing a moment of serene beauty in nature.

masterpiece, newest, absurdres

Or with placeholder:

<|special|>,

<|characters|>, <|series|>,

<|artist|>,

<|general content tags|>,

<|natural language|>.

<|quality|>, <|rating|>, <|meta|>

NOTE: In ComfyUI, you SHOULD escape ALL brackets with a backslash, for example izuna (blue archive) -> izuna \(blue archive\). This ensures that TIPO works correctly and that the brackets are sent to the model.
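As a rough sketch of how the placeholder layout and the ComfyUI escaping rule fit together, the helper below fills the template section by section and optionally escapes brackets. The names `build_prompt`, `escape_brackets` and `for_comfyui` are hypothetical, joining sections with newlines is an assumption about the intended layout, and which slot each trailing tag belongs to in the usage example is a guess.

```python
import re


def escape_brackets(text: str) -> str:
    """Backslash-escape every '(' and ')' that is not already escaped."""
    return re.sub(r"(?<!\\)([()])", r"\\\1", text)


def build_prompt(special=(), characters=(), series=(), artist=(), general=(),
                 natural_language="", quality=(), rating=(), meta=(),
                 for_comfyui=False):
    """Fill the placeholder layout section by section, skipping empty ones."""
    def join(tags):
        return ", ".join(tag for tag in tags if tag)

    lines = []
    # Tag sections end with a trailing comma, as in the example prompt.
    for group in (special, [*characters, *series], artist, general):
        text = join(group)
        if text:
            lines.append(text + ",")
    if natural_language:
        lines.append(natural_language)
    # Quality, rating and meta tags share the final line.
    tail = join([*quality, *rating, *meta])
    if tail:
        lines.append(tail)
    prompt = "\n".join(lines)
    return escape_brackets(prompt) if for_comfyui else prompt


print(build_prompt(
    special=["1girl"],
    characters=["izuna (blue archive)"],
    series=["blue archive"],
    artist=["fukamiki kei"],
    general=["tail", "halo", "fox girl"],
    natural_language="A fox girl wading in a river.",
    quality=["masterpiece"],
    meta=["absurdres"],
    for_comfyui=True,
))
```

Because this helper escapes every bracket, it should only be given tag and description text. ComfyUI weighting syntax such as `(tag:0.9)` would be escaped as well and lose its meaning.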

Model Spec

Model Setup

- 343M XUT diffusion

- 596M Qwen3 Text Encoder (qwen3-0.6B)

- EQ-SDXL-VAE

- Support 1024x1024 or higher resolution

- 512px/768px checkpoints provided

- Sampling method/Training Objective: Flow Matching

- Inference Steps: 16~32

- Hardware Recommendations: any Nvidia GPU with tensor core and >=6GB vram

- Minimal Requirements: x86-64 computer with more than 16GB ram

- 512 and 768px can achieve reasonable speed on CPU
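To give a feel for what flow-matching sampling with an Euler sampler and a CFG scale involves, here is a toy sketch in plain Python. It is not the HDM implementation: `toy_velocity` stands in for the real network, the time convention (integrating from t=0 to t=1) is an assumption, and all function names are hypothetical.

```python
def cfg_velocity(v_cond, v_uncond, scale):
    """Classifier-free guidance: extrapolate from the unconditional
    prediction towards the conditional one."""
    return [u + scale * (c - u) for c, u in zip(v_cond, v_uncond)]


def euler_sample(x, velocity_fn, steps, cfg_scale):
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with fixed-size Euler steps."""
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt
        v_cond = velocity_fn(x, t, conditioned=True)
        v_uncond = velocity_fn(x, t, conditioned=False)
        v = cfg_velocity(v_cond, v_uncond, cfg_scale)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


def toy_velocity(x, t, conditioned):
    """Stand-in for the model: a straight-line velocity that would carry x
    to a fixed target at t=1 (a different target with and without condition)."""
    target = [1.0, -1.0] if conditioned else [0.0, 0.0]
    return [(g - xi) / (1.0 - t) for g, xi in zip(target, x)]


start = [0.3, 0.7]  # fixed stand-in for the initial noise
print(euler_sample(start, toy_velocity, steps=16, cfg_scale=1.0))
print(euler_sample(start, toy_velocity, steps=16, cfg_scale=4.0))
```

In this toy setup a CFG scale of 1.0 lands on the conditional target, while larger scales push the result further away from the unconditional target. Each step calls the model twice, so the step count directly drives inference cost.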

Dataset

- Danbooru2023 (around 7.6M images, latest id around 8.2M)

- Curated Pixiv set (around 700k images)

- Private pvc figure photos. (less than 50k images)

Training

| Stage | 256² | 512² | 768² | 1024² |
|---|---|---|---|---|
| Dataset | Danbooru 2023 | Danbooru2023 + extra* | - | curated** |
| Image Count | 7.624M | 8.469M | - | 3.649M |
| Epochs | 20 | 5 | 1 | 1 |
| Samples Seen | 152.5M | 42.34M | 8.469M | 3.649M |
| Patches Seen | 39B | 43.5B | 19.5B | 15B |
| Learning Rate (muP, base_dim=1) | 0.5 | 0.1 | 0.05 | 0.02 |
| Batch Size (per GPU) | 128 | 64 | 64 | 16 |
| Gradient Checkpointing | No | Yes | Yes | Yes |
| Gradient Accumulation | 4 | 2 | 2 | 4 |
| Global Batch Size | 2048 | 512 | 512 | 256 |
| TREAD Selection Rate | 0.5 | 0.5 | 0.5 | 0.0 |
| Context Length | 256 | 256 | 256 | 512 |
| Training Wall Time | 174h | 120h | 42h | 49h |
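The figures in the table can be cross-checked with a few lines of arithmetic. The sketch below copies the values from the table and relies on two assumptions that the table does not state: that one patch covers a 16x16 pixel area, and that the global batch size equals per-GPU batch size times gradient accumulation times GPU count.

```python
# (resolution, samples seen, per-GPU batch, grad accumulation,
#  global batch, patches seen per table, wall time in hours)
stages = [
    (256, 152.5e6, 128, 4, 2048, 39e9, 174),
    (512, 42.34e6, 64, 2, 512, 43.5e9, 120),
    (768, 8.469e6, 64, 2, 512, 19.5e9, 42),
    (1024, 3.649e6, 16, 4, 256, 15e9, 49),
]

PATCH_PIXELS = 16  # assumption: one patch per 16x16 pixel area (inferred)

results = []
for res, samples, per_gpu, accum, global_batch, patches_table, hours in stages:
    # GPU count implied by: global = per_gpu * accum * gpus
    implied_gpus = global_batch / (per_gpu * accum)
    patches_per_image = (res // PATCH_PIXELS) ** 2
    patches = samples * patches_per_image
    results.append((res, implied_gpus, patches, patches_table))
    print(f"{res}px: implied GPUs={implied_gpus:g}, "
          f"patches={patches / 1e9:.2f}B (table: {patches_table / 1e9:g}B)")

total_hours = sum(stage[-1] for stage in stages)
print("total wall time (h):", total_hours)
```

Under these assumptions every stage implies the same GPU count, and the computed patch counts agree with the "Patches Seen" row to within rounding.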

Limitations

- Due to constrained training budget and model size:

  - This model requires highly detailed, well-structured prompts to work well.

    - Users are highly recommended to use TIPO.

  - This model is not good at generating fine structural details or poorly annotated concepts (such as hands or special ornaments).

- This model can only generate anime-style images for now due to dataset choice.

  - A more general T2I model is under training, stay tuned.

- This model is capable of generating many different anime-like styles, but it is hard to trigger a specific art style reliably. It is recommended to try different combinations of content, meta, and artist tags to achieve different art styles.

License

This project is still under development. Therefore, all the models, source code, text, documents, and any other media in this project are licensed under CC-BY-NC-SA 4.0 until development is finished.

For any usage that may require a standalone or specialized license, please contact [email protected] directly.
