Swahili-English Speech-to-Text (STT) Model

This model is a fine-tuned version of openai/whisper-medium optimized for Swahili and English speech recognition. It was trained on the Common Voice 17.0 dataset and reaches a word error rate (WER) of 14.7% on the evaluation set, with clear gains over the base model on Swahili audio (see Model Comparison).

Model Performance

The model achieves the following results on the evaluation set:

- Loss: 0.3390 (the lowest validation loss in the training results table, at step 1500)

- WER: 14.7% (at step 8000)
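For reference, WER is the word-level edit distance (substitutions, deletions, and insertions) between the reference and the hypothesis, divided by the number of reference words. The sketch below is a minimal pure-Python illustration, not the evaluation code used for this model. The function name `word_error_rate` and the lowercase, whitespace-based tokenization are assumptions, and the reported figures may rely on a different text normalizer.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return the word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] is the edit distance between ref[:i-1] and hyp[:j]
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]  # deleting all i reference words so far
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


# One substitution plus one deletion over 6 reference words
wer = word_error_rate(
    "Panya wengi huishi kati ya wanadamu.",
    "Wanyawengi huishi kati ya wanadamu.",
)
print(f"WER: {wer:.3f}")
```

Multiplying the result by 100 gives the percentage form used in this card.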

Usage

Installation

First, install the required dependencies:

pip install transformers torch librosa

Basic Usage

from transformers import AutoModelForSpeechSeq2Seq, AutoProcessor
import torch
import librosa

# Load the model and processor
processor = AutoProcessor.from_pretrained("Jacaranda-Health/ASR-STT")
model = AutoModelForSpeechSeq2Seq.from_pretrained("Jacaranda-Health/ASR-STT")
model.generation_config.forced_decoder_ids = None


def transcribe(filepath):
    """
    Transcribe audio file to text

    Args:
        filepath (str): Path to audio file

    Returns:
        str: Transcribed text
    """
    # Load audio file, resampled to 16 kHz
    audio, sr = librosa.load(filepath, sr=16000)

    # Process audio
    inputs = processor(audio, sampling_rate=sr, return_tensors="pt")

    # Generate transcription
    with torch.no_grad():
        generated_ids = model.generate(inputs["input_features"])

    # Decode the transcription
    transcription = processor.batch_decode(generated_ids, skip_special_tokens=True)[0]
    return transcription


# Example usage
transcription = transcribe("path/to/your/audio.wav")
print(f"Transcription: {transcription}")

Batch Processing

def transcribe_batch(audio_files):
    """
    Transcribe multiple audio files

    Args:
        audio_files (list): List of audio file paths

    Returns:
        list: List of transcriptions
    """
    transcriptions = []
    for filepath in audio_files:
        try:
            transcription = transcribe(filepath)
            transcriptions.append({
                'file': filepath,
                'transcription': transcription
            })
        except Exception as e:
            transcriptions.append({
                'file': filepath,
                'error': str(e)
            })
    return transcriptions


# Example usage
audio_files = ["audio1.wav", "audio2.wav", "audio3.wav"]
results = transcribe_batch(audio_files)
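Because `transcribe_batch` records failures as `error` entries instead of raising, callers need to separate the two kinds of result. The sketch below shows one way to do that. The helper name `split_results` and the sample data are hypothetical and only mirror the dictionary shape returned above.

```python
def split_results(results):
    """Separate successful transcriptions from failed ones."""
    succeeded = [r for r in results if "transcription" in r]
    failed = [r for r in results if "error" in r]
    return succeeded, failed


# Hypothetical output in the shape returned by transcribe_batch
sample_results = [
    {"file": "audio1.wav", "transcription": "Nchi ya maajabu."},
    {"file": "audio2.wav", "error": "file not found"},
]

succeeded, failed = split_results(sample_results)
print(f"{len(succeeded)} succeeded, {len(failed)} failed")
for item in failed:
    print(f"{item['file']}: {item['error']}")
```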

Model Comparison

The fine-tuned model shows large improvements over the base Whisper model, particularly in Swahili accuracy. In the comparison examples below, the base model produced output in the wrong language or script, or garbled Swahili, while the fine-tuned model reproduced the reference exactly or nearly so:

Example 1: Complete Language Confusion

Ground Truth: "Panya wengi huishi kati ya wanadamu."

Base Model: "本来我以为是个铁网来的" (Chinese characters)

Fine-tuned Model: "Wanyawengi huishi kati ya wanadamu." (near match: "Panya wengi" is transcribed as "Wanyawengi")

Ground Truth: "Mji ulianzishwa kwenye kisiwa kilichopo karibu sana na bara."

Base Model: "Nguni unia nzisho kwenye kisiwa kilichopo kariwu sana nabara"

Fine-tuned Model: "Mji ulianzishwa kwenye kisiwa kilichopo karibu sana na bara." ✓

Ground Truth: "Nchi ya maajabu."

Base Model: "Um dia mais, diabo!" (Portuguese/Spanish)

Fine-tuned Model: "Nchi ya maajabu." ✓

Example 2: Arabic Script Mix

- Ground Truth: "Alama yake ni µm."

- Base Model: "الله معاكي لأم" (Arabic script)

- Fine-tuned Model: "Alama yake ni µm." ✓

Example 3: English Instead of Swahili

- Ground Truth: "Ni msimamizi wa mtandao na wa wanafunzi."

- Base Model: "You don't see no music on Tyndale? No, I don't see no music on Tyndale."

- Fine-tuned Model: "Ni msimamizi wa mtandao na wa wanafunzi." ✓
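Wrong-script failures such as the Chinese and Arabic outputs above can be caught automatically when the expected language uses the Latin alphabet. The following is a minimal sketch using only the standard library. The function names and the 0.5 threshold are assumptions chosen for illustration. It cannot catch wrong-language output that is still in Latin script, as in the English and Portuguese cases.

```python
import unicodedata


def non_latin_ratio(text: str) -> float:
    """Fraction of alphabetic characters whose Unicode name is not LATIN."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    non_latin = [
        ch for ch in letters
        if not unicodedata.name(ch, "").startswith("LATIN")
    ]
    return len(non_latin) / len(letters)


def looks_like_wrong_script(text: str, threshold: float = 0.5) -> bool:
    """Flag transcriptions that are mostly written in a non-Latin script."""
    return non_latin_ratio(text) > threshold


for sample in ["本来我以为是个铁网来的", "الله معاكي لأم", "Alama yake ni µm."]:
    print(sample, looks_like_wrong_script(sample))
```

A ratio is used instead of a strict all-Latin check so that legitimate symbols such as "µ" in "µm" do not trigger a false alarm.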

Key Improvements

The fine-tuned model demonstrates superior performance in:

- Swahili Grammar: Better handling of Swahili sentence structure and grammar

- Word Recognition: More accurate recognition of Swahili vocabulary

- Context Understanding: Improved contextual understanding across different domains

- Pronunciation Variants: Better handling of different Swahili pronunciation patterns

- Mixed Language: Enhanced performance on code-switched Swahili-English content

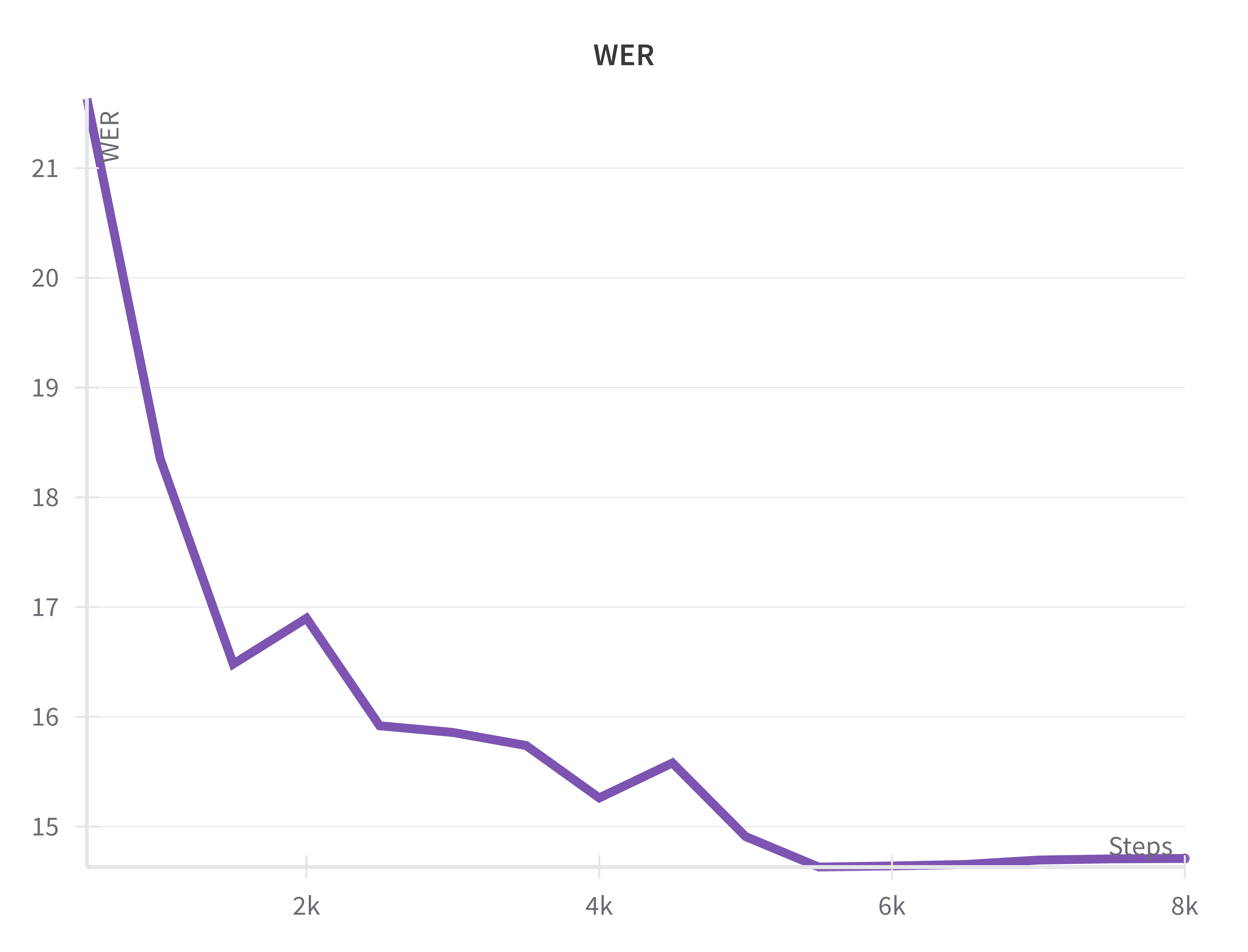

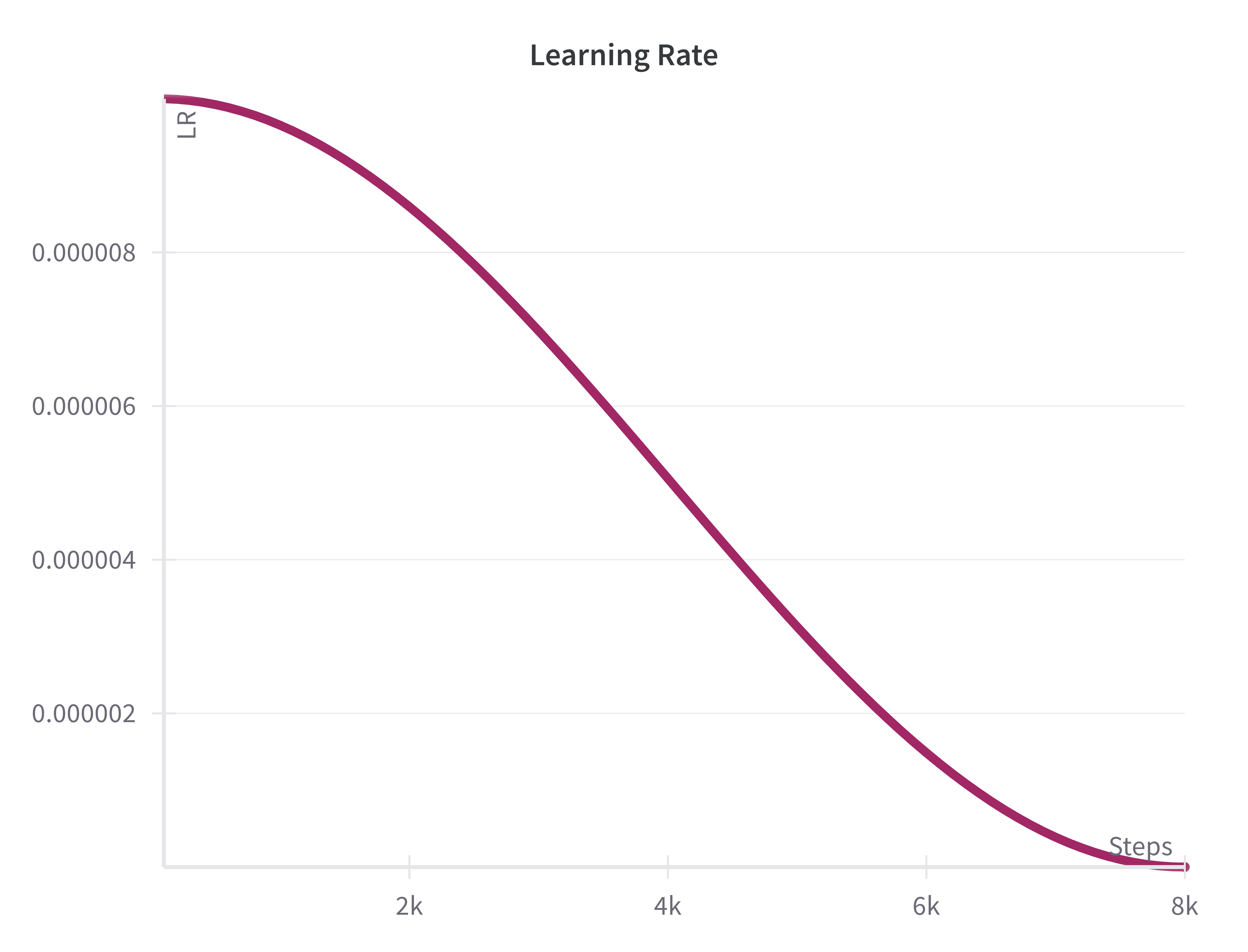

Training Visualizations

The following charts illustrate the model's training progress and performance improvements:

Word Error Rate (WER) Progress

The WER chart shows transcription accuracy improving over the course of training, with small fluctuations between checkpoints. Starting from approximately 21.6% WER at step 500, the model reaches its lowest WER of about 14.6% at step 5500 and then plateaus at roughly 14.7% through step 8000, indicating that training has converged.

Learning Rate Schedule

The learning rate follows a cosine schedule: after a 50-step warmup it reaches its peak of 1e-05 and then decays gradually over the remaining steps, for 8000 training steps in total. Progressively smaller updates late in training help keep fine-tuning stable.
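As an illustration, the schedule can be written as a small function. This sketch assumes the common form of linear warmup from zero followed by a half-cosine decay to zero. The card only states the scheduler type, the peak rate, the warmup steps, and the total steps, so the exact shape used in training is an assumption, as is the function name `cosine_lr`.

```python
import math


def cosine_lr(step, peak_lr=1e-5, warmup_steps=50, total_steps=8000):
    """Linear warmup to peak_lr, then half-cosine decay to zero at total_steps."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * min(progress, 1.0)))


# Start of warmup, peak, midpoint of the decay, and final step
for step in (0, 50, 4025, 8000):
    print(step, f"{cosine_lr(step):.2e}")
```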

Training Details

Training Procedure

The model was fine-tuned using the following approach:

- Base Model: OpenAI Whisper Medium

- Dataset: Mozilla Common Voice 17.0 (Swahili and English)

- Training Steps: 8,000

- Learning Rate: 1e-05 with cosine scheduler

- Batch Size: 16 (train and eval)

Training Hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: AdamW with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 50

- training_steps: 8000

- mixed_precision_training: Native AMP
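The values above correspond to keyword arguments of `Seq2SeqTrainingArguments` in Transformers. The training script is not part of this card, so the mapping below is an assumption: in particular, it treats the batch sizes as per-device values and "Native AMP" as `fp16`. The dictionary name `training_kwargs` and the output directory in the usage comment are placeholders.

```python
# Hyperparameters from the list above, keyed by the matching
# Seq2SeqTrainingArguments argument names (the mapping is an assumption).
training_kwargs = {
    "learning_rate": 1e-5,
    "per_device_train_batch_size": 16,
    "per_device_eval_batch_size": 16,
    "seed": 42,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-8,
    "lr_scheduler_type": "cosine",
    "warmup_steps": 50,
    "max_steps": 8000,
    "fp16": True,  # assumed reading of "Native AMP"; the card does not say fp16 vs bf16
}

# Usage sketch (requires transformers; output_dir is a placeholder):
# from transformers import Seq2SeqTrainingArguments
# args = Seq2SeqTrainingArguments(output_dir="./whisper-sw-en", **training_kwargs)
```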

Training Results

| Training Loss | Epoch | Step | Validation Loss | WER Ortho (%) | WER (%) |
|---|---|---|---|---|---|
| 0.4135 | 0.6180 | 500 | 0.4069 | 29.9115 | 21.6319 |
| 0.2036 | 1.2361 | 1000 | 0.3584 | 25.8738 | 18.3552 |
| 0.1899 | 1.8541 | 1500 | 0.3390 | 24.0940 | 16.4814 |
| 0.0978 | 2.4722 | 2000 | 0.3406 | 24.1957 | 16.8982 |
| 0.0584 | 3.0902 | 2500 | 0.3589 | 22.7718 | 15.9189 |
| 0.0457 | 3.7083 | 3000 | 0.3660 | 23.3075 | 15.8580 |
| 0.0203 | 4.3263 | 3500 | 0.3762 | 22.9108 | 15.7394 |
| 0.0193 | 4.9444 | 4000 | 0.3683 | 22.0192 | 15.2616 |
| 0.0073 | 5.5624 | 4500 | 0.3926 | 22.5447 | 15.5801 |
| 0.0022 | 6.1805 | 5000 | 0.4065 | 21.5649 | 14.9092 |
| 0.0022 | 6.7985 | 5500 | 0.4080 | 21.2835 | 14.6313 |
| 0.0009 | 7.4166 | 6000 | 0.4180 | 21.2564 | 14.6415 |
| 0.0007 | 8.0346 | 6500 | 0.4244 | 21.2361 | 14.6551 |
| 0.0006 | 8.6527 | 7000 | 0.4283 | 21.3276 | 14.6957 |
| 0.0006 | 9.2707 | 7500 | 0.4297 | 21.3378 | 14.7059 |
| 0.0006 | 9.8888 | 8000 | 0.4300 | 21.3276 | 14.7093 |
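The table can be read in two ways, since validation loss and WER do not reach their minimum at the same checkpoint. The short sketch below copies the step, validation loss, and WER columns from the table and picks the best row under each criterion; the variable names are illustrative only.

```python
# (step, validation_loss, wer) rows copied from the table above
rows = [
    (500, 0.4069, 21.6319), (1000, 0.3584, 18.3552), (1500, 0.3390, 16.4814),
    (2000, 0.3406, 16.8982), (2500, 0.3589, 15.9189), (3000, 0.3660, 15.8580),
    (3500, 0.3762, 15.7394), (4000, 0.3683, 15.2616), (4500, 0.3926, 15.5801),
    (5000, 0.4065, 14.9092), (5500, 0.4080, 14.6313), (6000, 0.4180, 14.6415),
    (6500, 0.4244, 14.6551), (7000, 0.4283, 14.6957), (7500, 0.4297, 14.7059),
    (8000, 0.4300, 14.7093),
]

best_by_loss = min(rows, key=lambda r: r[1])
best_by_wer = min(rows, key=lambda r: r[2])

# Relative WER reduction between the first and last evaluated checkpoints
first_wer, last_wer = rows[0][2], rows[-1][2]
relative_reduction = (first_wer - last_wer) / first_wer

print(f"Lowest validation loss: step {best_by_loss[0]} ({best_by_loss[1]:.4f})")
print(f"Lowest WER: step {best_by_wer[0]} ({best_by_wer[2]:.4f})")
print(f"Relative WER reduction, step 500 to 8000: {relative_reduction:.1%}")
```

Validation loss is lowest at step 1500, while WER keeps falling until step 5500, so the choice of checkpoint depends on which metric matters for the application.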

Supported Languages

- Primary: Swahili (sw)

- Secondary: English (en)

Out-of-Scope Use

This speech-to-text (automatic speech recognition, ASR) model is intended for research, social good, and internal use only. For commercial use and distribution, organizations and individuals are encouraged to contact Jacaranda Health. To support ethical and responsible use of the model, the guidelines below group activities and practices into three areas: prohibited actions, high-risk activities, and deceptive practices. Understanding and adhering to them contributes to a safer and more trustworthy environment.

1. Prohibited Actions:

- Illegal Activities: Do not use the model to transcribe content that promotes violence, child exploitation, human trafficking, or other crimes.

- Harassment and Discrimination: Do not use transcription to facilitate bullying, threats, or discriminatory practices.

- Unauthorized Surveillance: Do not monitor or record individuals without proper authorization and consent.

- Data Misuse: Handle audio data and transcriptions only with proper consent and privacy protections.

- Rights Violations: Respect third-party intellectual property and privacy rights in audio content.

- Malicious Content Creation: Do not transcribe content intended for harmful software or other malicious purposes.

2. High-Risk Activities:

- Sensitive Industries: Exercise extreme caution when using the model in military, nuclear, or intelligence domains.

- Legal Proceedings: Do not rely on transcriptions as sole evidence in critical legal or judicial processes without proper validation.

- Critical Systems: Do not deploy the model in safety-critical infrastructure or transport technologies without extensive testing.

- Medical Diagnosis: Avoid using transcriptions for direct medical diagnosis or treatment decisions.

- Emergency Services: The model is not suitable as the primary tool for emergency response systems.

3. Deceptive Practices:

- Misinformation: Do not use transcriptions to create or promote fraudulent or misleading audio content.

- Deepfake Audio: Do not use transcriptions to facilitate the creation of deceptive synthetic audio.

- Impersonation: Do not transcribe content in order to impersonate individuals without authorization.

- Misrepresentation: Do not make false claims about transcription accuracy or model capabilities.

- Fake Content Generation: Do not promote false audio-text pairs or fabricated conversations.

Bias, Risks, and Limitations

This speech-to-text model has significant potential, but it carries inherent risks and limitations. Testing has focused predominantly on Swahili and English, so many linguistic variations and acoustic conditions remain unevaluated.

Key Limitations:

- Language and Dialect Variations: The model's performance may vary significantly across different Swahili dialects, regional accents, and code-switching patterns not represented in the training data.

- Audio Quality Sensitivity: Performance degrades with poor audio quality, background noise, multiple speakers, or non-standard recording conditions.

- Domain Specificity: The model may struggle with highly technical terminology, proper names, or domain-specific vocabulary outside its training scope.

- Contextual Understanding: While improved over the base model, contextual interpretation limitations may lead to incorrect transcriptions in ambiguous scenarios.

- Bias Considerations: Like other AI models, this ASR system may exhibit biases present in the training data, potentially affecting transcription quality for underrepresented speaker groups or topics.

Responsible Deployment:

Like other ASR systems, this model's output is not fully predictable, and it may occasionally generate transcriptions that are inaccurate, culturally insensitive, or otherwise problematic for certain audio inputs.

Before deploying this model in any production application, developers must carry out thorough safety testing and evaluation tailored to their specific use case. This includes testing across the speaker demographics, audio conditions, and content types relevant to the intended application.
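One way to structure such testing is to report WER separately for each speaker group or recording condition, so that weak spots are not hidden by a single aggregate number. The sketch below aggregates per-utterance error counts by group. The function name `wer_by_group`, the record fields, and the sample counts are all hypothetical and stand in for the output of a real evaluation run.

```python
from collections import defaultdict


def wer_by_group(records):
    """Aggregate WER per group as total word errors / total reference words."""
    errors = defaultdict(int)
    words = defaultdict(int)
    for rec in records:
        errors[rec["group"]] += rec["errors"]
        words[rec["group"]] += rec["ref_words"]
    return {group: errors[group] / words[group] for group in words if words[group] > 0}


# Hypothetical per-utterance counts, labeled by recording condition
records = [
    {"group": "clean", "errors": 1, "ref_words": 10},
    {"group": "clean", "errors": 0, "ref_words": 10},
    {"group": "noisy", "errors": 4, "ref_words": 10},
]

for group, wer in wer_by_group(records).items():
    print(f"{group}: {wer:.1%}")
```

Pooling errors and reference words before dividing weights long utterances more heavily than averaging per-utterance WER would.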

Contact Us

For any questions, feedback, or commercial inquiries, please reach out at [email protected]

Framework Versions

- Transformers 4.51.3

- PyTorch 2.5.1+cu121

- Datasets 3.6.0

- Tokenizers 0.21.1

Citation

If you use this model in your research, please cite:

@misc{jacaranda_asr_stt_2025,

title={Swahili-English Speech-to-Text Model},

author={Jacaranda Health},

year={2025},

howpublished={\url{https://huggingface.co/Jacaranda-Health/ASR-STT}}

}
