FaceLLM-38B

Multimodal large language models (MLLMs) have shown remarkable performance in vision-language tasks. However, existing MLLMs are primarily trained on generic datasets, which limits their ability to reason about domain-specific visual cues such as those in facial images. In particular, tasks that require a detailed understanding of facial structure, expression, emotion, and demographic features remain underexplored by MLLMs due to the lack of large-scale annotated face image-text datasets. In this work, we introduce FaceLLM, a multimodal large language model trained specifically for facial image understanding. Our experiments demonstrate that FaceLLM achieves state-of-the-art performance among MLLMs on various face-centric tasks. Project page: https://www.idiap.ch/paper/facellm

Overview

- Model: FaceLLM, a Multimodal Large Language Model for Face Understanding

- Backbone: InternVL3-38B

- Parameters: ~38B

- Tasks: face understanding, including:
  - Bias and fairness: age estimation, gender prediction, race estimation
  - Face recognition: high-resolution face recognition, low-resolution face recognition, celebrity identification
  - Face authentication: face anti-spoofing, deepfake detection
  - Face analysis: attribute prediction, facial expression recognition, head pose estimation
  - Face localization: crowd counting, face parsing
  - Other tasks, such as face tools retrieval

- Framework: PyTorch / Hugging Face Transformers
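Because the weights of a model this size dominate memory use, a quick estimate before loading can save a failed attempt. The sketch below assumes roughly 38 billion parameters (taken from the "38B" in the model name) and counts weights only; activations, the KV cache, and framework overhead come on top. `BYTES_PER_PARAM` and `weight_memory_gib` are illustrative names, not part of FaceLLM or Transformers.

```python
# Back-of-envelope estimate of the memory needed just to hold model weights.
# Assumption: ~38e9 parameters (inferred from the "38B" in the model name).
# Activations, the KV cache, and framework overhead are NOT included.

BYTES_PER_PARAM = {
    "float32": 4,
    "bfloat16": 2,
    "float16": 2,
    "int8": 1,
}


def weight_memory_gib(num_params: float, dtype: str = "bfloat16") -> float:
    """Return the approximate weight footprint in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    for dtype in ("float32", "bfloat16"):
        print(f"{dtype:>9}: ~{weight_memory_gib(38e9, dtype):.1f} GiB")
```

With 38e9 parameters this gives roughly 71 GiB in bfloat16 and about 142 GiB in float32, which is one reason to load the model with `torch_dtype=torch.bfloat16`.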

Evaluation of Models

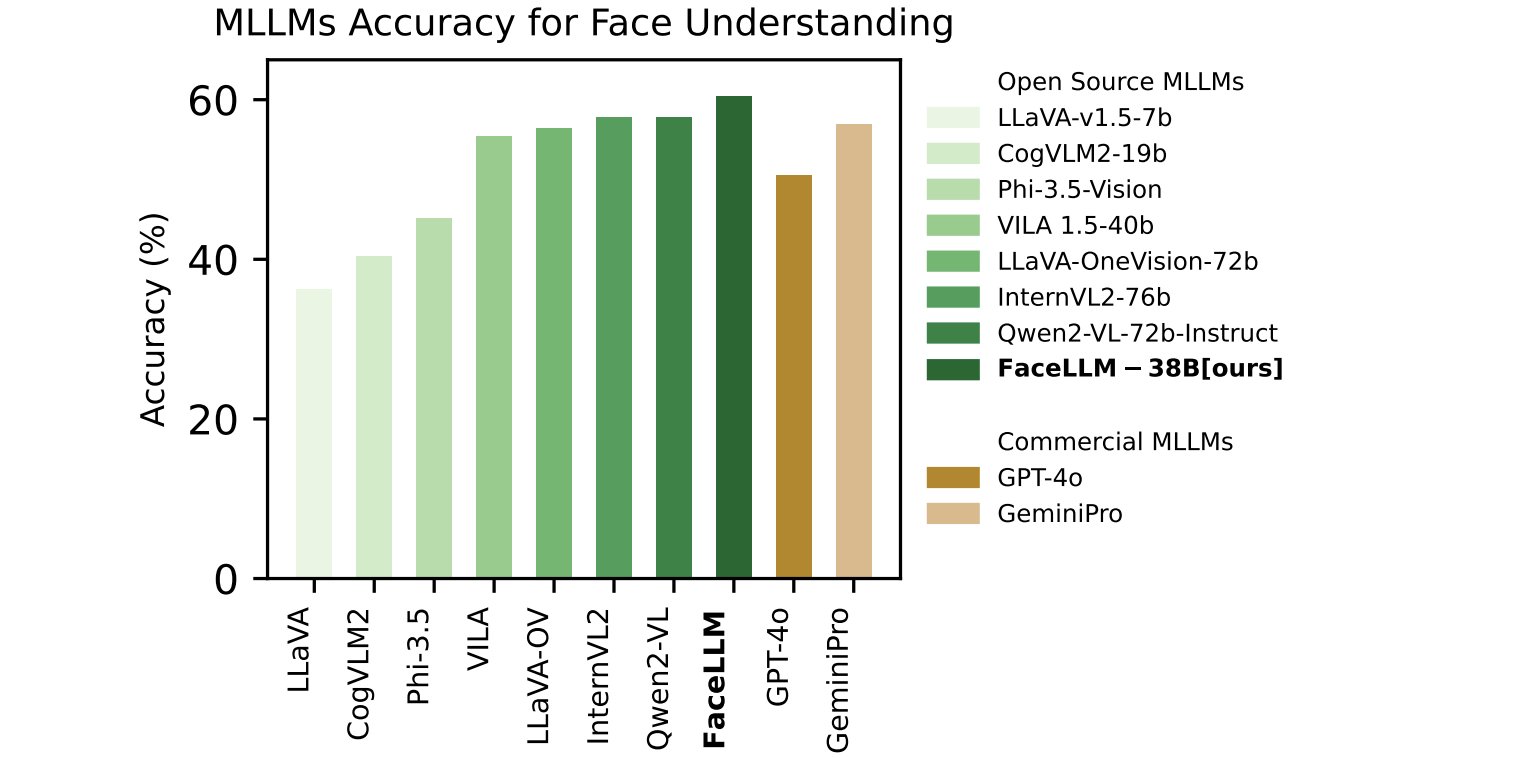

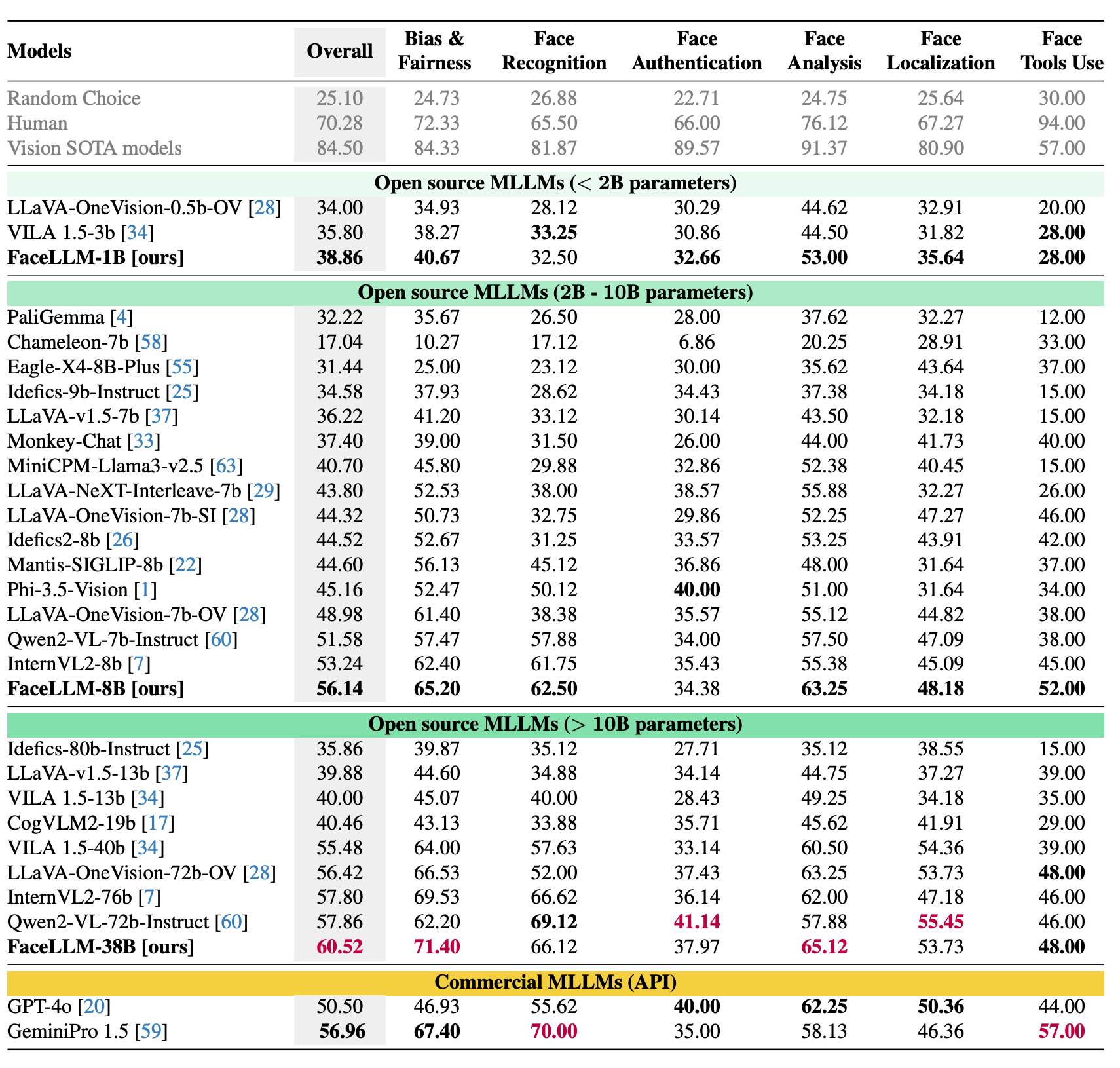

Comparison with MLLMs on the FaceXBench benchmark. The best-performing model in each category is shown in bold, and the best model among all MLLMs is highlighted in purple.

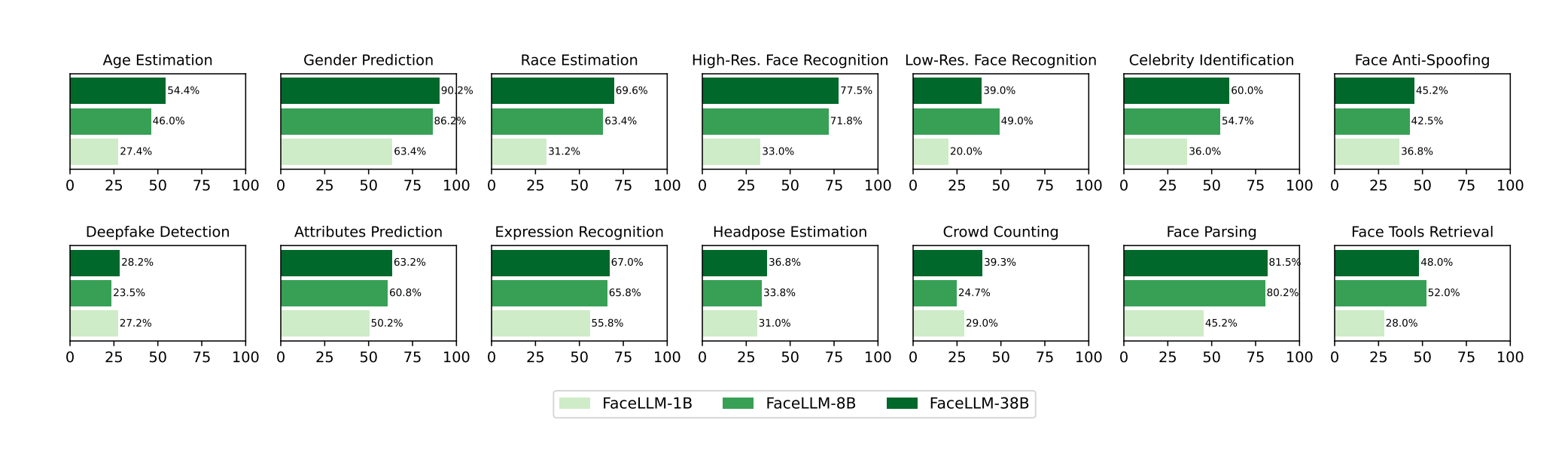

Performance of FaceLLM models (FaceLLM-1B, FaceLLM-8B, and FaceLLM-38B) on different sub-tasks, including age estimation, gender prediction, race estimation, high-resolution face recognition, low-resolution face recognition, celebrity identification, face anti-spoofing, deepfake detection, attribute prediction, facial expression recognition, head pose estimation, crowd counting, face parsing, and face tools retrieval.
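Per-sub-task scores like these are typically computed by grouping evaluated questions by sub-task. The following is a minimal sketch, assuming accuracy is the metric and that each record holds a sub-task name, the model's chosen option letter, and the ground-truth letter; the `Prediction` layout and the `per_task_accuracy` helper are hypothetical and are not the official FaceXBench evaluation code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Prediction:
    """One evaluated question (hypothetical record layout)."""
    task: str       # sub-task name, e.g. "age estimation"
    predicted: str  # option letter produced by the model, e.g. "B"
    answer: str     # ground-truth option letter


def per_task_accuracy(preds: list[Prediction]) -> dict[str, float]:
    """Return accuracy in percent for each sub-task, plus an overall score."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for p in preds:
        total[p.task] += 1
        # Compare letters case-insensitively and ignore surrounding spaces.
        if p.predicted.strip().upper() == p.answer.strip().upper():
            correct[p.task] += 1
    scores = {task: 100.0 * correct[task] / total[task] for task in total}
    # Overall accuracy is micro-averaged over all questions (an assumption;
    # a benchmark may instead average the per-task scores).
    n = sum(total.values())
    scores["overall"] = 100.0 * sum(correct.values()) / n if n else 0.0
    return scores


if __name__ == "__main__":
    demo = [
        Prediction("age estimation", "B", "B"),
        Prediction("age estimation", "a", "C"),
        Prediction("deepfake detection", "D", "D"),
    ]
    print(per_task_accuracy(demo))
```

Micro-averaging lets every question count equally; averaging the per-task scores instead would give small sub-tasks more weight in the overall number.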

Running Code

Minimal code to instantiate the model and perform inference:

```python
from transformers import AutoProcessor, AutoModelForImageTextToText
import torch

# Path to the FaceLLM model folder
model_path = "./FaceLLM-38B/model"

# Load processor and model
processor = AutoProcessor.from_pretrained(model_path)
model = AutoModelForImageTextToText.from_pretrained(
    model_path,
    torch_dtype=torch.bfloat16,
    low_cpu_mem_usage=True,
    trust_remote_code=True,
    # Place weights on available GPUs (falling back to CPU); needs `accelerate`
    device_map="auto",
)
model.eval()  # Set model to evaluation mode

# Example with an image and a text prompt
messages = [
    {
        "role": "user",
        "content": [
            {"type": "image", "image": "image.jpg"},
            {"type": "text", "text": "Describe this image."},
        ],
    },
]

# Apply the chat template and tokenize; only floating-point tensors
# (the pixel values) are cast to bfloat16, token ids stay integers
inputs = processor.apply_chat_template(
    messages,
    add_generation_prompt=True,
    tokenize=True,
    return_dict=True,
    return_tensors="pt",
).to(model.device, dtype=torch.bfloat16)

# Generate output
generate_ids = model.generate(**inputs, max_new_tokens=500)

# Decode only the newly generated tokens (skip the prompt tokens)
decoded_output = processor.decode(
    generate_ids[0, inputs["input_ids"].shape[1]:],
    skip_special_tokens=True,
)
print(decoded_output)
```
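For multiple-choice style questions, the same `messages` structure can carry lettered options in the text prompt, and the chosen letter can then be pulled out of the decoded output. The prompt wording, the `build_mcq_messages` and `extract_choice` helpers, and the regular expressions are illustrative assumptions, not FaceLLM's or FaceXBench's official prompting or parsing code.

```python
import re
from typing import Optional

OPTION_LETTERS = "ABCDEFGH"


def build_mcq_messages(image_path: str, question: str, options: list[str]) -> list[dict]:
    """Build a single-image chat message with lettered options (hypothetical prompt format)."""
    if not 2 <= len(options) <= len(OPTION_LETTERS):
        raise ValueError(f"expected 2-{len(OPTION_LETTERS)} options, got {len(options)}")
    lines = [question]
    lines += [f"{OPTION_LETTERS[i]}. {opt}" for i, opt in enumerate(options)]
    lines.append("Answer with the letter of the correct option only.")
    return [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": image_path},
                {"type": "text", "text": "\n".join(lines)},
            ],
        }
    ]


def extract_choice(text: str, num_options: int) -> Optional[str]:
    """Return the option letter found in the model output, or None if unclear."""
    if not 1 <= num_options <= len(OPTION_LETTERS):
        raise ValueError("num_options out of range")
    valid = OPTION_LETTERS[:num_options]
    text = text.strip()
    # Case 1: the reply starts with the letter, e.g. "B", "B.", "(B) 20-29".
    # A letter followed by a space is rejected, so "A woman ..." is not misread.
    m = re.match(rf"\(?([{valid}])(?=[.):]|$)", text)
    if m:
        return m.group(1)
    # Case 2: a phrase such as "The answer is C" or "Answer: (C)".
    m = re.search(rf"(?i:answer)\s*(?:(?i:is)\s*)?[:\-]?\s*\(?([{valid}])(?![A-Za-z])", text)
    return m.group(1) if m else None
```

In the inference code above, `messages` could be replaced by the output of `build_mcq_messages(...)`, and `extract_choice(decoded_output, len(options))` applied to the decoded text. Replies that match neither pattern return `None`, so they can be counted as unanswered rather than guessed.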

License

This project is released under the MIT License. It includes the pre-trained Qwen2.5 model as a component; Qwen2.5 is licensed under the Qwen License.

Copyright

Copyright (c) 2025, Hatef Otroshi Shahreza, Sébastien Marcel, Idiap Research Institute, Martigny 1920, Switzerland.

https://www.idiap.ch/paper/facellm/

Please refer to the link above for the full license and copyright terms and conditions.

Citation

If you find our work useful, please cite the following publication:

```bibtex
@article{facellm2025,
  author  = {Hatef Otroshi Shahreza and S{\'e}bastien Marcel},
  title   = {FaceLLM: A Multimodal Large Language Model for Face Understanding},
  journal = {arXiv preprint arXiv:2507.10300},
  year    = {2025}
}
```