MARA AGENTS

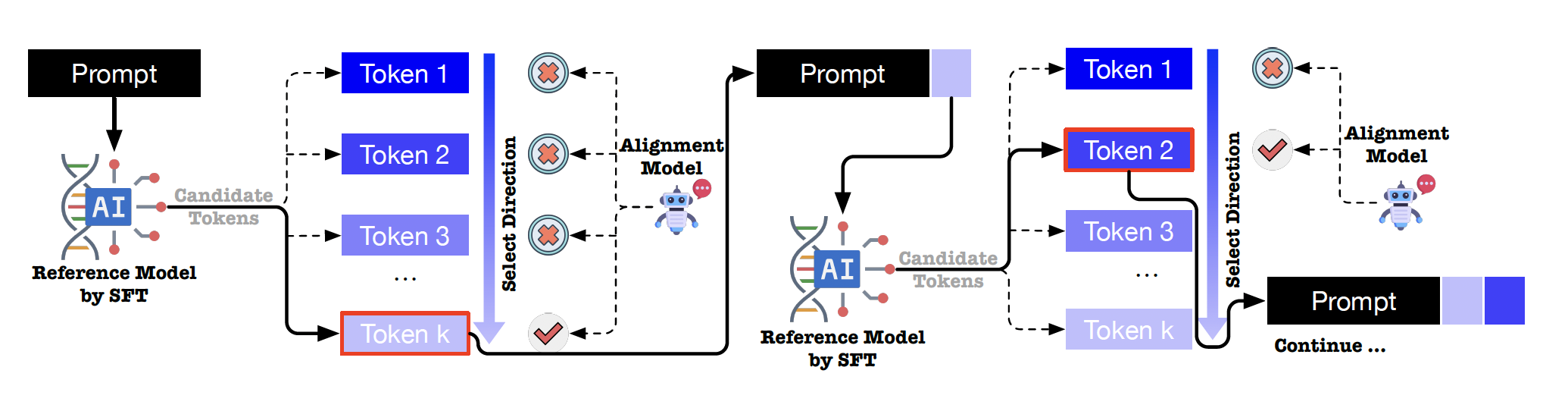

MARA (Micro token-level Accept-Reject Alignment) simplifies alignment by decomposing sentence-level preference learning into fine-grained, token-level binary classification. The MARA agent, a lightweight multi-layer perceptron (MLP), acts as the alignment model: during LLM text generation it evaluates each candidate token and classifies it as either Accepted or Rejected.
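To make the accept/reject idea concrete, here is a minimal, dependency-free sketch of a decoding step gated by a small MLP. It is an illustration under stated assumptions, not the released implementation: the two-dimensional feature vectors, the hand-picked weights, the 0.5 threshold, and the rule of falling back to the top candidate when everything is rejected are all hypothetical. The names `mlp_accept_probability` and `pick_token` are made up for this example.

```python
import math
from typing import Callable, List, Sequence, Tuple

# (token text, base-model probability, feature vector); all values are made up.
Candidate = Tuple[str, float, Sequence[float]]


def mlp_accept_probability(features: Sequence[float]) -> float:
    """Tiny one-hidden-layer MLP (ReLU + sigmoid) with hand-picked toy weights."""
    w1 = [[1.0, 0.0], [0.0, 1.0]]  # hidden-layer weights (hypothetical)
    b1 = [0.0, 0.0]
    w2 = [-6.0, 2.0]               # output weights: penalise feature 0, reward feature 1
    b2 = 0.0
    hidden = [max(0.0, sum(w * x for w, x in zip(row, features)) + b)
              for row, b in zip(w1, b1)]
    logit = sum(w * h for w, h in zip(w2, hidden)) + b2
    return 1.0 / (1.0 + math.exp(-logit))


def pick_token(candidates: List[Candidate],
               accept_probability: Callable[[Sequence[float]], float],
               threshold: float = 0.5) -> str:
    """Walk candidates from most to least likely and return the first accepted one.

    If every candidate is rejected, fall back to the most likely token
    (an assumption made for this sketch).
    """
    ranked = sorted(candidates, key=lambda c: c[1], reverse=True)
    for token, _prob, features in ranked:
        if accept_probability(features) >= threshold:
            return token
    return ranked[0][0]


candidates = [
    ("Sure", 0.6, [1.0, 0.2]),   # most likely, but scored as undesirable
    ("Sorry", 0.3, [0.0, 0.9]),
    ("The", 0.1, [0.0, 0.1]),
]
chosen = pick_token(candidates, mlp_accept_probability)
print(chosen)
```

In this toy setup the most probable token is rejected and the next candidate is emitted instead, which is the behaviour the Accepted/Rejected classification is meant to produce.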

💫 Get the MARA Agent's Aligned Result

    from mara_generator import MARAGenerator

    # Path to the trained MARA agent weights and to the base LLM.
    agent_path = "mistral_v3_2_1_actor.pth"
    base_model_path = "path2model/Mistral-7B-Instruct-v0.3"

    mara_generator = MARAGenerator(agent_path, base_model_path)

    instruction = "Please introduce yourself."

    # Answer from the base model alone (greedy decoding).
    raw_result = mara_generator.get_raw_output(instruction, do_sample=False)
    print("base model answer: ")
    print(raw_result["answer"])

    # Answer generated with the MARA agent accepting or rejecting candidate tokens.
    align_result = mara_generator.get_proxy_output(instruction)
    print("mara agent align answer: ")
    print(align_result["answer"])

🔨 Train Your MARA Agent

The source code and implementation details are open-sourced at MARA. You can train a custom alignment model by following the instructions provided there.

📊 Experiment Results

Performance improvements of MARA across the PKU-SafeRLHF, BeaverTails, and HarmfulQA datasets. Each entry shows the percentage improvement in preference rate achieved by applying MARA compared to using the original LLM alone.

Compatibility analysis of MARA: an alignment model trained with one LLM is combined with a different inference LLM. Each cell shows the percentage improvement in preference rate of our algorithm over the upstream model, i.e., the inference model.

Performance comparison of MARA against RLHF, DPO, and Aligner, measured by percentage improvement in preference rate.

More details and analyses about experimental results can be found in our paper.
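The results in this section are reported as percentage improvements in preference rate. This page does not give the exact formula, so the sketch below assumes one common convention for pairwise evaluation: each aligned answer is judged as a win, tie, or loss against the upstream model's answer, and the improvement is (wins − losses) / total × 100. The function name and the counts are hypothetical; consult the paper for the definition actually used.

```python
def preference_rate_improvement(wins: int, ties: int, losses: int) -> float:
    """Percentage improvement under the assumed (wins - losses) / total convention."""
    total = wins + ties + losses
    if total == 0:
        raise ValueError("need at least one pairwise comparison")
    return 100.0 * (wins - losses) / total


# Made-up counts: 60 wins, 25 ties, 15 losses out of 100 comparisons.
improvement = preference_rate_improvement(wins=60, ties=25, losses=15)
print(f"{improvement:.1f}%")
```

Under this convention a positive value means the aligned answers were preferred more often than they were dispreferred, and ties dilute the score without changing its sign.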

✍️ Citation

If the code or the paper has been useful in your research, please add a citation to our work:

    @article{zhang2025tokenlevelacceptrejectmicro,
      title={Token-level Accept or Reject: A Micro Alignment Approach for Large Language Models},
      author={Yang Zhang and Yu Yu and Bo Tang and Yu Zhu and Chuxiong Sun and Wenqiang Wei and Jie Hu and Zipeng Xie and Zhiyu Li and Feiyu Xiong and Edward Chung},
      journal={arXiv preprint arXiv:2505.19743},
      year={2025}
    }

Model tree for IAAR-Shanghai/MARA_AGENTS

Base model: meta-llama/Llama-3.1-8B

Finetuned: meta-llama/Llama-3.1-8B-Instruct