Accelerating Vision Diffusion Transformers with Skip Branches

This repository contains all the model checkpoints for the paper "Accelerating Vision Diffusion Transformers with Skip Branches". In this work, we enhance standard DiT models by introducing Skip-DiT, which adds skip branches to improve feature smoothness. We also propose Skip-Cache, a method that uses these skip branches to cache DiT features across timesteps during inference. We validate the approach on several DiT backbones for both video and image generation, showing that skip branches preserve generation quality while delivering significant speedups. Experimental results show that Skip-Cache achieves a 1.5x speedup with negligible quality loss and a 2.2x speedup with only a slight reduction in quantitative metrics. All code and checkpoints are publicly available on Hugging Face and GitHub. More visualizations can be found here.
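A simple block-count cost model shows where a caching speedup comes from. This is a back-of-the-envelope sketch of our own, not the paper's analysis: it assumes a full forward pass is refreshed once every `cache_interval` steps and that each cached step evaluates only `blocks_per_cached_step` transformer blocks (two in the example, an assumed value). The function name is hypothetical, and the resulting ratios are idealized upper bounds that are not expected to match the measured 1.5x and 2.2x figures, because end-to-end latency also includes work this model ignores.

```python
def ideal_speedup(num_blocks: int, blocks_per_cached_step: int, cache_interval: int) -> float:
    """Speedup of interval caching over running all blocks at every step.

    Within each window of `cache_interval` steps, one step runs the full stack
    (num_blocks blocks) and the remaining steps run only blocks_per_cached_step
    blocks. Costs outside the transformer blocks are ignored.
    """
    if cache_interval < 1 or not 0 < blocks_per_cached_step <= num_blocks:
        raise ValueError("invalid configuration")
    baseline = num_blocks * cache_interval
    with_cache = num_blocks + (cache_interval - 1) * blocks_per_cached_step
    return baseline / with_cache


# Example: a 28-block backbone (the depth of DiT-XL/2) that, by assumption,
# recomputes 2 blocks on every cached step.
for interval in (2, 3, 4):
    print(interval, f"{ideal_speedup(28, 2, interval):.2f}x")
```

Longer cache intervals raise the ideal ratio, but each cached step reuses features computed further in the past, which is where the quality/speed trade-off reported above comes from.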

Pipeline

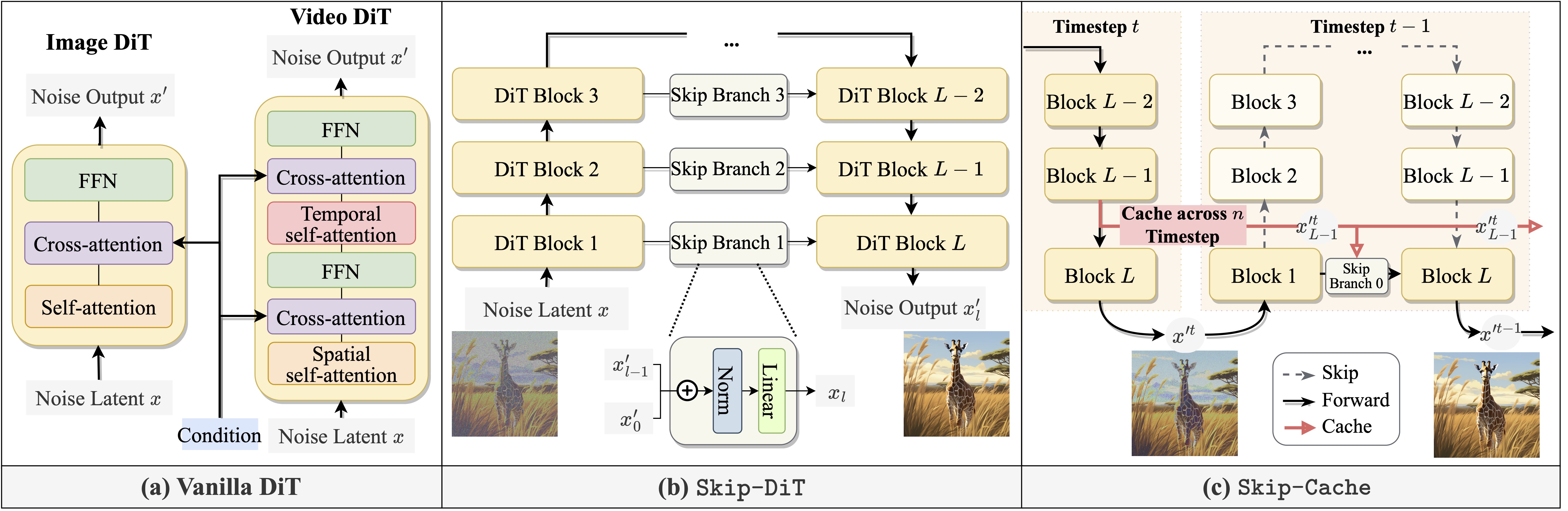

Illustration of Skip-DiT and Skip-Cache for DiT visual generation caching. (a) The vanilla DiT block for image and video generation. (b) Skip-DiT modifies the vanilla DiT model using skip branches to connect shallow and deep DiT blocks. (c) Pipeline of Skip-Cache.
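The skip-branch layout in (b) and the caching step in (c) can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not the released implementation: blocks are plain Python functions on lists of floats, the hypothetical `fuse` (an element-wise average) stands in for the learned skip-fusion layer, and we assume that Skip-Cache reuses the deep feature entering the outermost skip branch and recomputes only the first and last blocks on cached steps. `ToySkipDiT` and all other names are illustrative.

```python
from typing import Callable, List, Optional

Vector = List[float]
Block = Callable[[Vector], Vector]


def fuse(deep: Vector, skip: Vector) -> Vector:
    # Hypothetical skip fusion: element-wise average. A real model would apply
    # a learned linear layer to the concatenated features instead.
    return [(d + s) / 2.0 for d, s in zip(deep, skip)]


class ToySkipDiT:
    """N blocks; the output of shallow block i feeds a skip branch into deep block N-1-i."""

    def __init__(self, blocks: List[Block]) -> None:
        if len(blocks) < 2:
            raise ValueError("need at least two blocks")
        self.blocks = blocks
        self.cached_deep: Optional[Vector] = None  # feature reused on cached steps
        self.blocks_run = 0  # block evaluations, a stand-in for compute cost

    def _run(self, i: int, h: Vector) -> Vector:
        self.blocks_run += 1
        return self.blocks[i](h)

    def forward(self, x: Vector, reuse_cache: bool = False) -> Vector:
        n, half = len(self.blocks), len(self.blocks) // 2
        if reuse_cache and self.cached_deep is not None:
            # Cached step (assumed Skip-Cache behaviour): recompute only the first
            # and last blocks; the deep feature comes from the cache.
            skip0 = self._run(0, x)
            return self._run(n - 1, fuse(self.cached_deep, skip0))

        skips: List[Vector] = []
        h = x
        for i in range(n):
            if i >= n - half:
                if i == n - 1:
                    self.cached_deep = h  # deep feature entering the outermost skip
                h = fuse(h, skips[n - 1 - i])  # skip from shallow block n-1-i
            h = self._run(i, h)
            if i < half:
                skips.append(h)  # shallow output kept for its skip branch
        return h


# Usage: six toy blocks that each add a constant.
blocks: List[Block] = [lambda v, k=k: [e + k for e in v] for k in range(1, 7)]
model = ToySkipDiT(blocks)
full = model.forward([0.0, 1.0])  # full pass: 6 block evaluations
cached = model.forward([0.0, 1.0], reuse_cache=True)  # cached pass: 2 more
print(cached == full, model.blocks_run)  # True 8
```

With an unchanged input, the cached step reproduces the full pass exactly. Across real timesteps the input changes, so reusing the deep feature is only an approximation, and how good it is depends on how smoothly the deep features vary.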

Feature Smoothness

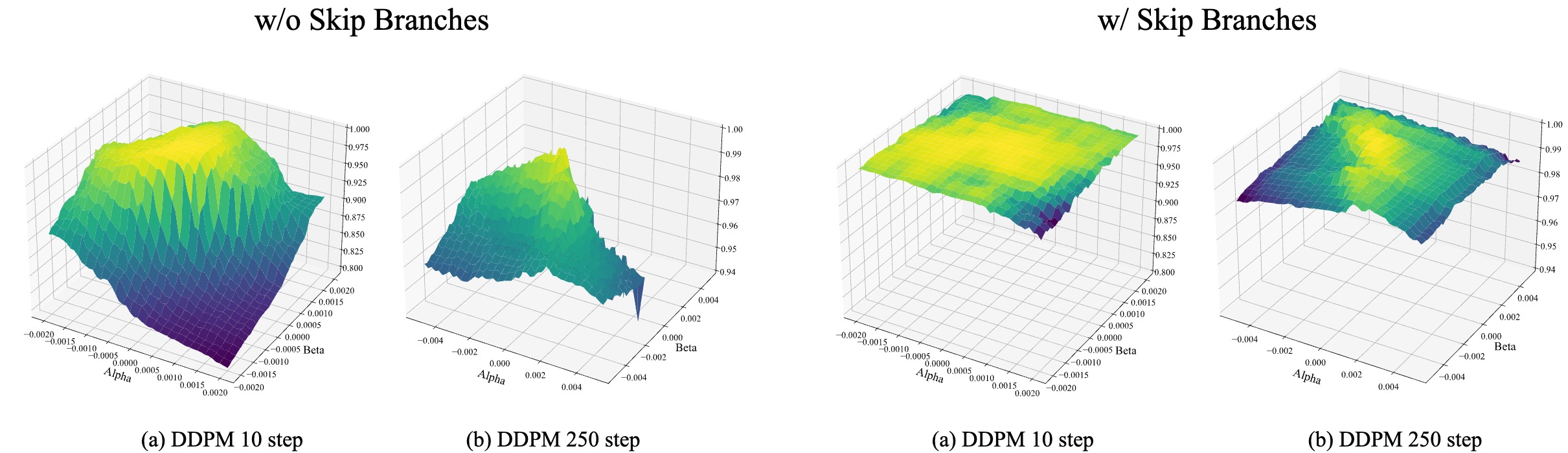

Feature smoothness analysis of DiT on the class-to-video generation task using DDPM. Normalized disturbances, controlled by strength coefficients $\alpha$ and $\beta$, are introduced into the model with and without skip connections, and we compare the similarity between the original and perturbed features. The feature difference surfaces of Latte, with and without skip connections, are visualized at DDPM steps 10 and 250. The results show significantly better feature smoothness for Skip-DiT. Furthermore, we identify feature smoothness as a critical factor limiting the effectiveness of cross-timestep feature caching in DiT, which helps explain when caching is efficient and how it affects generation quality.
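A surface of this kind can be computed by perturbing the input along two random directions and measuring how far the output moves. This is an assumption-laden sketch, not the paper's evaluation script: the model is any Python function on a flat feature vector, the two directions are rescaled to the norm of the input (our reading of "normalized disturbances"), and the plotted quantity is the relative L2 distance between perturbed and original outputs rather than a specific similarity metric. All names are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]


def l2_norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(e * e for e in v))


def normalized_direction(x: Vector, rng: random.Random) -> Vector:
    # Random Gaussian direction rescaled to the norm of x, so that alpha and
    # beta act as relative perturbation strengths (assumed normalization).
    d = [rng.gauss(0.0, 1.0) for _ in x]
    scale = l2_norm(x) / l2_norm(d)
    return [e * scale for e in d]


def difference_surface(
    f: Callable[[Vector], Vector],
    x: Vector,
    alphas: Sequence[float],
    betas: Sequence[float],
    seed: int = 0,
) -> List[List[float]]:
    """Relative L2 difference between f(x + alpha*d1 + beta*d2) and f(x) on a grid."""
    rng = random.Random(seed)
    d1 = normalized_direction(x, rng)
    d2 = normalized_direction(x, rng)
    base = f(x)
    base_norm = l2_norm(base)
    surface = []
    for a in alphas:
        row = []
        for b in betas:
            x_pert = [xi + a * u + b * w for xi, u, w in zip(x, d1, d2)]
            diff = [p - q for p, q in zip(f(x_pert), base)]
            row.append(l2_norm(diff) / base_norm)
        surface.append(row)
    return surface


def toy_block(v: Vector) -> Vector:
    # Stand-in for a DiT feature map; any deterministic function works here.
    return [math.tanh(2.0 * e) for e in v]


grid = [-0.1, 0.0, 0.1]
surface = difference_surface(toy_block, [0.3, -0.7, 1.1], grid, grid)
print(surface[1][1])  # 0.0: no perturbation at alpha = beta = 0
```

A smoother model produces a flatter surface (smaller values away from the center), which is the property the figure compares between Latte with and without skip connections.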

Pretrained Models

| Model | Task | Training Data | Backbone | Size (GB) | Skip-Cache |
|---|---|---|---|---|---|
| Latte-skip | text-to-video | Vimeo | Latte | 8.76 | ✅ |
| DiT-XL/2-skip | class-to-image | ImageNet | DiT-XL/2 | 11.40 | ✅ |
| ucf101-skip | class-to-video | UCF101 | Latte | 2.77 | ✅ |
| taichi-skip | class-to-video | Taichi-HD | Latte | 2.77 | ✅ |
| skytimelapse-skip | class-to-video | SkyTimelapse | Latte | 2.77 | ✅ |
| ffs-skip | class-to-video | FaceForensics | Latte | 2.77 | ✅ |

The pretrained text-to-image model based on HunYuan-DiT is available on Hugging Face and Tencent Cloud.

Demo

(Results of Latte with skip branches on text-to-video and class-to-video tasks. Left: text-to-video with 1.7x and 2.0x speedups. Right: class-to-video with 2.2x and 2.5x speedups. Latency is measured on a single A100 GPU.)

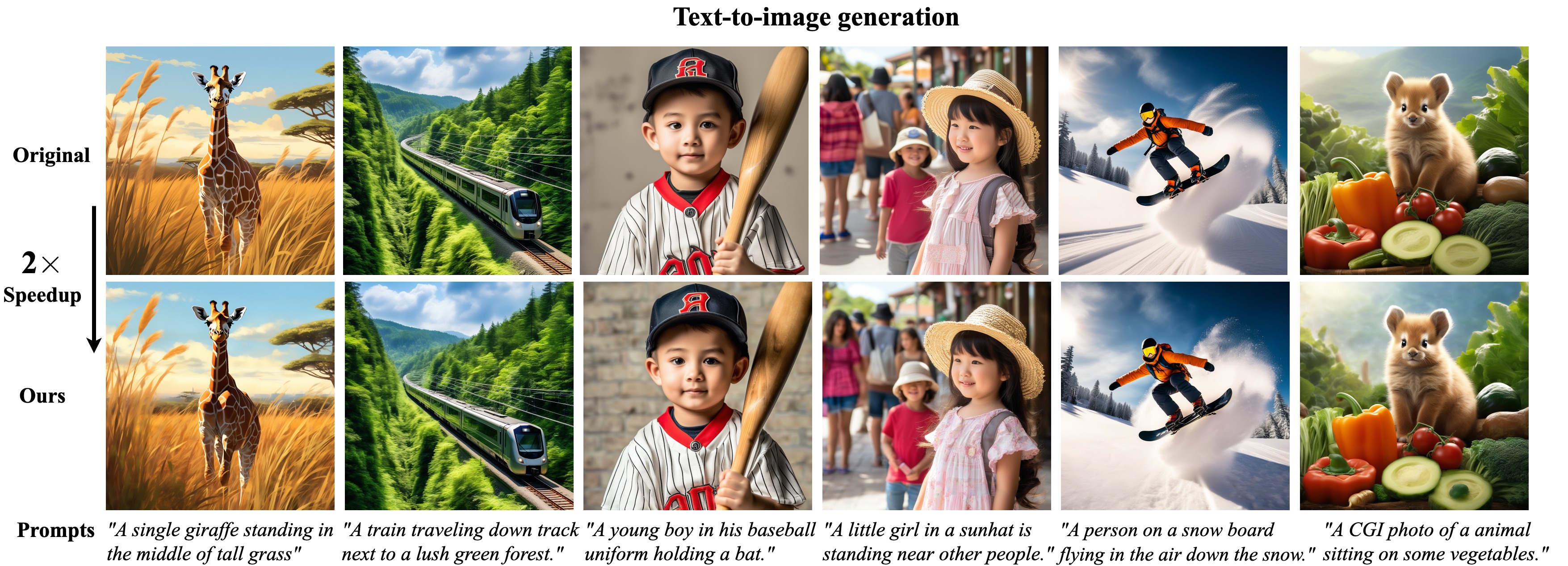

(Results of HunYuan-DiT with skip branches on the text-to-image task. Latency is measured on a single A100 GPU.)

Acknowledgement

Skip-DiT has been greatly inspired by the following amazing works and teams: DeepCache, Latte, DiT, and HunYuan-DiT. We thank all the contributors for open-sourcing their work.

Visualization

Text-to-Video

Class-to-Video

Text-to-Image

Class-to-Image

Model tree for GuanjieChen/Skip-DiT

Base model

Tencent-Hunyuan/HunyuanDiT