TinyDeepSeek: Reproduction of DeepSeek-R1 and Beyond

📃 Paper • 🤗 TinyDeepSeek-0.5B-base • 🤗 TinyDeepSeek-3.3B-base

🤗 TinyDeepSeek-3.3B-checkpoints • 🤗 TinyDeepSeek-0.5B-checkpoints

Innovation and open source are the best tributes to, and reproductions of, DeepSeek.

The open-source ethos, rooted in technological equity, upholds two key principles: free access for all developers and the opportunity for technical contributions. While DeepSeek exemplifies the first principle, the second principle is hindered by restrictive training strategies, unclear data sources, and the high costs of model training. These constraints limit the open-source community's capacity to contribute and impede technological progress.

To overcome these challenges, we launched a comprehensive reproduction project of DeepSeek. This initiative involves training models from scratch while replicating DeepSeek's architecture and algorithms. We will fully open-source the training code, datasets, and models, offering a code framework, a reference solution, and base models for low-cost continued exploration.

🌈 Update

- [2025.03.11] TinyDeepSeek repo is published!🎉

Reproduction of DeepSeek-R1

Architecture

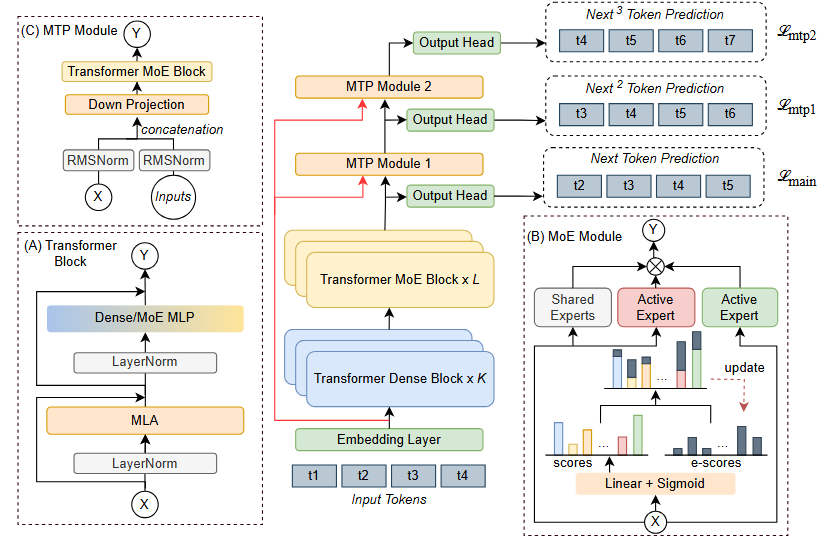

As shown in the figure above, the DeepSeek technical report introduces three architectural designs:

A. Multi-Head Latent Attention (MLA)

B. Load Balancing Strategy without Auxiliary Loss: Please refer to the code for the implementation.

C. Multi-Token Prediction: Please refer to the code for the implementation.
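To make design B more concrete, here is a minimal sketch of load balancing without an auxiliary loss. It assumes the scheme in which each expert carries a routing bias that affects only which experts are selected, and the bias is nudged after each batch according to the observed load. The function names, the update step, and the toy scores are all illustrative assumptions, not this repository's implementation; please refer to the code for the actual version.

```python
from typing import List


def route_top_k(scores: List[float], bias: List[float], k: int) -> List[int]:
    """Pick k experts by biased score; the bias influences selection only."""
    biased = [s + b for s, b in zip(scores, bias)]
    order = sorted(range(len(scores)), key=lambda i: biased[i], reverse=True)
    return sorted(order[:k])


def count_load(batch_scores: List[List[float]], bias: List[float], k: int) -> List[int]:
    """Count how many tokens in the batch are routed to each expert."""
    load = [0] * len(bias)
    for scores in batch_scores:
        for expert in route_top_k(scores, bias, k):
            load[expert] += 1
    return load


def update_bias(bias: List[float], load: List[int], step: float) -> List[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step)
        elif n < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias


# Toy batch (hypothetical scores): expert 0 wins every token at first.
batch = [
    [0.9, 0.5, 0.1, 0.1],
    [0.8, 0.6, 0.2, 0.1],
    [0.7, 0.6, 0.1, 0.3],
    [0.9, 0.4, 0.2, 0.2],
]
bias = [0.0, 0.0, 0.0, 0.0]
load = count_load(batch, bias, k=1)
bias = update_bias(bias, load, step=0.1)
print(load, bias)
```

Because the correction is applied through the bias rather than through an extra loss term, the language-modeling gradient is left untouched. In a real mixture-of-experts layer the gating weights would still be computed from the unbiased scores.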

Our detailed architectural parameters are shown in the table below.

Data Construction

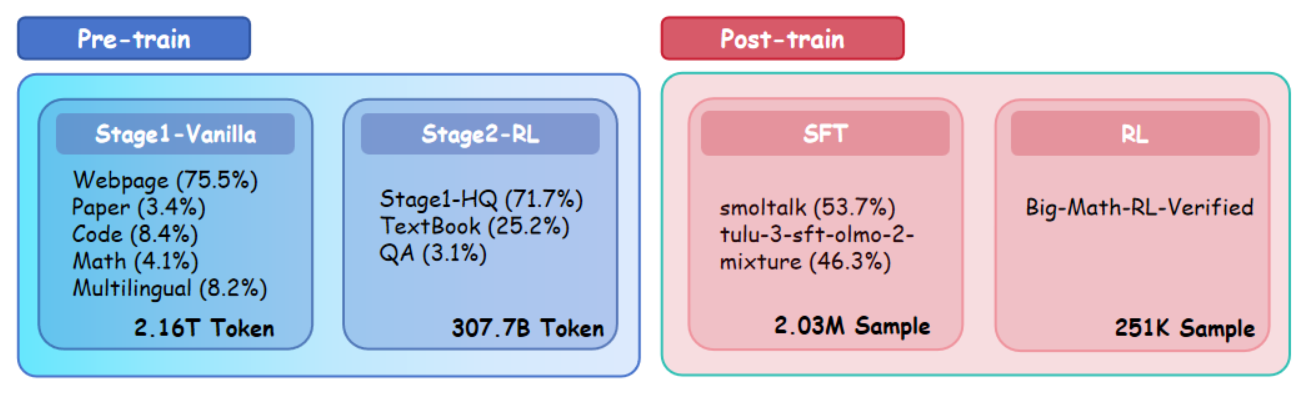

- Pretrain:

  - Meta & Labeled Data: TBD

  - Process Code: Please refer to Link for implementation.

- SFT:

- RL:

Model Training

bash examples/pretrainStage1.sh

bash examples/pretrainStage2.sh

bash examples/sft.sh

bash examples/rl.sh (TBD)

Results

TBD

Beyond Reproduction

Scale Up RL to Pretrain

RWO: Reward Weighted Optimization

To train with RWO, prepare the data as described below and include the following flags in the training command.

- For Pretrain, prepare each data item with the key 'text_evaluation', e.g. 'text_evaluation':{"knowledge":4, "reasoning":3, ...}.

- For SFT, provide the data file 'General.json' and the reward file 'General_reward.json' in the same directory.

--reward_weighted_optimization True \

--remove_unused_columns False \
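The data requirements above can be sketched as follows. This is only an illustration: the helper names `make_pretrain_item` and `reward_path_for` and the `"text"` field are assumptions, and the `<name>_reward.json` pattern is inferred from the single 'General.json' / 'General_reward.json' example. Only the 'text_evaluation' key and the two file names come from this README.

```python
import json
from pathlib import Path
from typing import Dict


def make_pretrain_item(text: str, scores: Dict[str, int]) -> dict:
    """Attach quality scores to a pretraining sample under 'text_evaluation'.

    The "text" field name is an assumption; only 'text_evaluation' is
    specified by the README.
    """
    if not scores:
        raise ValueError("at least one score is required")
    return {"text": text, "text_evaluation": dict(scores)}


def reward_path_for(data_path: str) -> Path:
    """Map e.g. 'General.json' to 'General_reward.json' in the same directory."""
    path = Path(data_path)
    return path.with_name(f"{path.stem}_reward{path.suffix}")


item = make_pretrain_item(
    "Water boils at 100 degrees Celsius at sea level.",
    {"knowledge": 4, "reasoning": 3},
)
print(json.dumps(item))
print(reward_path_for("data/General.json").name)
```

Checking that the reward file exists before launching a run (for example with `reward_path_for(...).exists()`) is a cheap way to catch a misplaced file early.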

📃 To do

- Release Evaluation Results

- Release RL Training Code and Model

- Release Pretrain Data Quality Annotation Labels

- Release TinyDeepSeek Technical Report

Acknowledgment

Citation

Please use the following citation if you intend to use our dataset for training or evaluation:

@misc{tinydeepseek,
  title={TinyDeepSeek},
  author={FreedomIntelligence Team},
  year={2025},
  publisher={GitHub},
  journal={GitHub repository},
  howpublished={\url{https://github.com/FreedomIntelligence/TinyDeepSeek}},
}