---
base_model:
- meta-llama/Llama-3.1-8B-Instruct
datasets:
- DongkiKim/Mol-LLaMA-Instruct
language:
- en
license: apache-2.0
tags:
- biology
- chemistry
- medical
pipeline_tag: text-generation
library_name: transformers
---

Mol-Llama-3.1-8B-Instruct

[Project Page] [Paper] [GitHub]

This repository contains the weights of Mol-LLaMA, including the LoRA weights and projectors. The model is built on meta-llama/Llama-3.1-8B-Instruct.

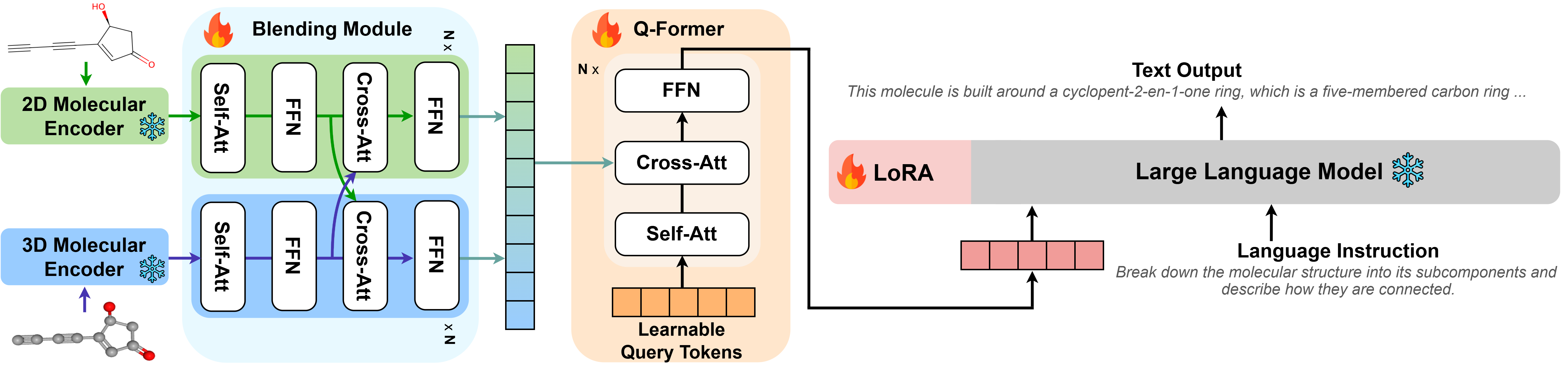

Architecture

- Molecular encoders: A pretrained 2D encoder (MoleculeSTM) and a pretrained 3D encoder (Uni-Mol)

- Blending Module: Combines complementary information from the 2D and 3D encoders via cross-attention

- Q-Former: A SciBERT-based module that embeds molecular representations into query tokens

- LoRA: Low-rank adapters for fine-tuning the LLM
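The flow from the two encoders through the blending module and a Q-Former-style readout can be sketched in plain Python. This is a minimal illustrative sketch, not Mol-LLaMA's implementation, and it rests on several assumptions: the per-atom features from both encoders are taken to be already mapped to a shared width, attention is single-head with no learned projections, and the helper names `blend` and `qformer_readout`, the choice of 2D features attending to 3D features, and the residual connection are all hypothetical. The point it shows is that a fixed set of query tokens cross-attending to the molecule yields the same number of output tokens regardless of how many atoms the molecule has.

```python
import math
from typing import List

Matrix = List[List[float]]  # rows = tokens (atoms or queries), columns = feature dims


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Single-head scaled dot-product attention of `queries` over `keys`/`values`.

    Learned query/key/value projections are omitted (treated as identity).
    """
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output row is an attention-weighted average of the value rows.
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def blend(h2d: Matrix, h3d: Matrix) -> Matrix:
    """Hypothetical blending step: 2D atom features attend to 3D atom features, plus a residual."""
    attended = cross_attention(h2d, h3d, h3d)
    return [[a + b for a, b in zip(r2, ra)] for r2, ra in zip(h2d, attended)]


def qformer_readout(query_tokens: Matrix, blended: Matrix) -> Matrix:
    """Q-Former-style readout: a fixed set of query tokens cross-attends to the molecule.

    A real Q-Former also has self-attention and feed-forward layers; they are omitted here.
    """
    return cross_attention(query_tokens, blended, blended)


# Toy inputs (hypothetical): 3 atom features from the 2D encoder and 5 from the
# 3D encoder, both already mapped to a shared width of 4.
h2d = [[0.1 * (i + j) for j in range(4)] for i in range(3)]
h3d = [[0.05 * (i - j) for j in range(4)] for i in range(5)]
queries = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]  # 2 query tokens

tokens = qformer_readout(queries, blend(h2d, h3d))
print(len(tokens), len(tokens[0]))  # 2 4
```

Presumably the resulting query tokens are then mapped by the projectors into the LLM's embedding space; the GitHub repo contains the actual modules.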

Training Dataset

Mol-LLaMA is trained on Mol-LLaMA-Instruct to learn the fundamental characteristics of molecules, along with reasoning ability and explainability.

How to Use

See the example inference code in the GitHub repository.

Citation

If you find our model useful, please consider citing our work.

@misc{kim2025molllama,
  title={Mol-LLaMA: Towards General Understanding of Molecules in Large Molecular Language Model},
  author={Dongki Kim and Wonbin Lee and Sung Ju Hwang},
  year={2025},
  eprint={2502.13449},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}

Acknowledgements

We thank the authors of LLaMA, 3D-MoLM, MoleculeSTM, Uni-Mol, and SciBERT for their open-source contributions.