Add files using upload-large-folder tool

- .gitattributes +1 -0

- README.md +218 -0

- config.json +53 -0

- configuration_harmon.py +9 -0

- diffloss.py +249 -0

- diffusion_utils.py +73 -0

- gaussian_diffusion.py +877 -0

- mar.py +470 -0

- method.png +3 -0

- misc.py +383 -0

- model-00001-of-00002.safetensors +3 -0

- model-00002-of-00002.safetensors +3 -0

- model.safetensors.index.json +0 -0

- modeling_harmon.py +288 -0

- respace.py +129 -0

- tokenizer.json +0 -0

- tokenizer_config.json +207 -0

- vae.py +522 -0

- vocab.json +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+method.png filter=lfs diff=lfs merge=lfs -text

README.md

ADDED

@@ -0,0 +1,218 @@

| 1 |

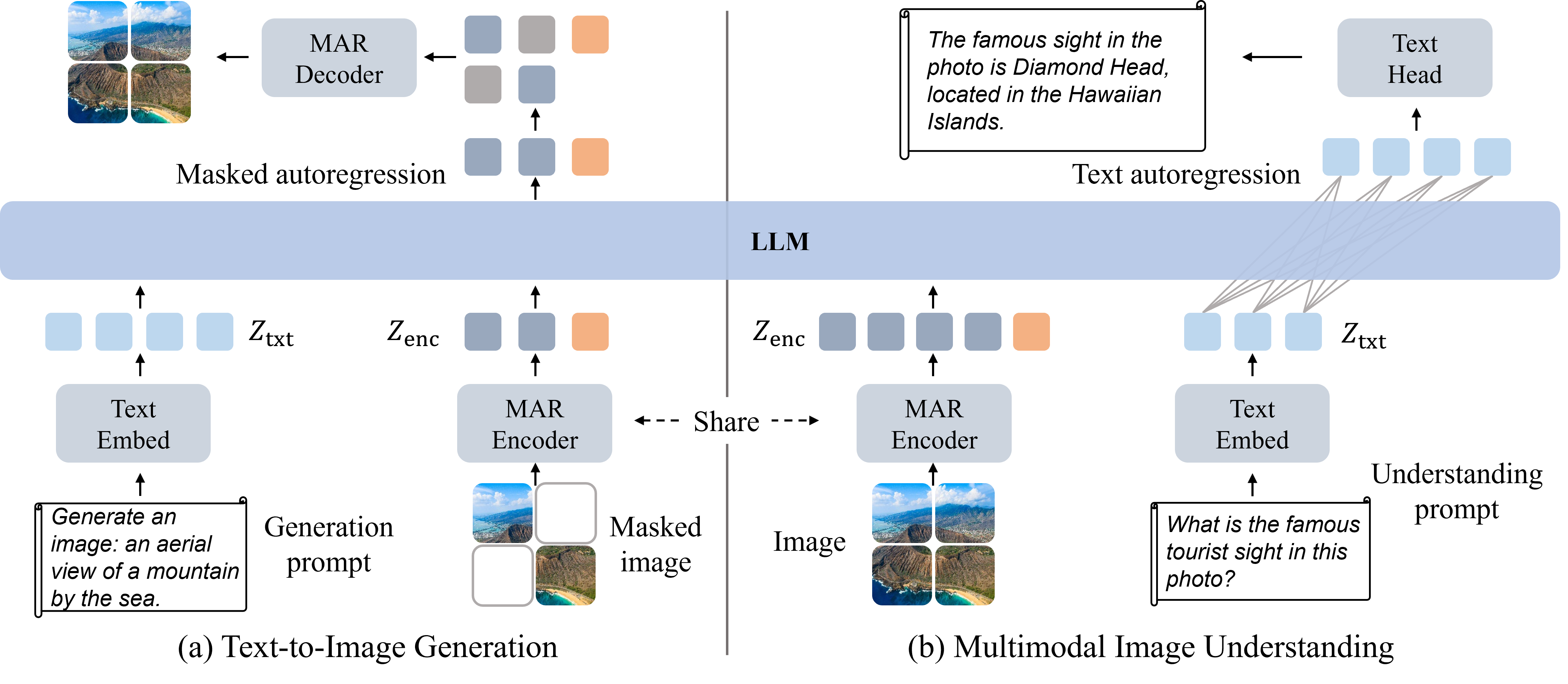

+

# Harmon: Harmonizing Visual Representations for Unified Multimodal Understanding and Generation

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

> **[Harmonizing Visual Representations for Unified Multimodal Understanding and Generation](https://arxiv.org/abs/2406.05821)**

|

| 6 |

+

>

|

| 7 |

+

> Size Wu, Wenwei Zhang, Lumin Xu, Sheng Jin, Zhonghua Wu, Qingyi Tao, Wentao Liu, Wei Li, Chen Change Loy

|

| 8 |

+

>

|

| 9 |

+

> [](https://arxiv.org/abs/2406.05821)

|

| 10 |

+

> [](https://wusize.github.io/projects/Harmon)

|

| 11 |

+

> [](https://github.com/wusize/Harmon#citation)

|

| 12 |

+

|

| 13 |

+

## Introduction

|

| 14 |

+

|

| 15 |

+

**Harmon** is a novel unified framework for multimodal understanding and generation. Unlike existing state-of-the-art

|

| 16 |

+

architectures that disentangle visual understanding and generation with different encoder models, the proposed framework harmonizes

|

| 17 |

+

the visual presentations of understanding and generation via a shared MAR encoder. Harmon achieves advanced generation

|

| 18 |

+

performance on mainstream text-to-image generation benchmarks, and exhibits competitive results on multimodal understanding

|

| 19 |

+

tasks. In this repo, we provide inference code to run Harmon for image understanding (image-to-text) and text-to-image

|

| 20 |

+

generation, with two model variants Harmon-0.5B and Harmon-1.5B.

|

| 21 |

+

|

| 22 |

+

## Usage

|

| 23 |

+

|

| 24 |

+

### 🖌️ Image-to-text Generation

|

| 25 |

+

|

+```python
+import torch
+import numpy as np
+from transformers import AutoTokenizer, AutoModel
+from einops import rearrange
+from PIL import Image
+import requests
+
+
+PROMPT_TEMPLATE = dict(
+    SYSTEM='<|im_start|>system\n{system}<|im_end|>\n',
+    INSTRUCTION='<|im_start|>user\n{input}<|im_end|>\n<|im_start|>assistant\n',
+    SUFFIX='<|im_end|>',
+    SUFFIX_AS_EOS=True,
+    SEP='\n',
+    STOP_WORDS=['<|im_end|>', '<|endoftext|>'])
+
+
+def expand2square(pil_img, background_color):
+    width, height = pil_img.size
+    if width == height:
+        return pil_img
+    elif width > height:
+        result = Image.new(pil_img.mode, (width, width), background_color)
+        result.paste(pil_img, (0, (width - height) // 2))
+        return result
+    else:
+        result = Image.new(pil_img.mode, (height, height), background_color)
+        result.paste(pil_img, ((height - width) // 2, 0))
+        return result
+
+
+@torch.no_grad()
+def question_answer(question,
+                    image,
+                    model,
+                    tokenizer,
+                    max_new_tokens=512,
+                    image_size=512
+                    ):
+    assert image_size == 512
+    image = expand2square(
+        image, (127, 127, 127))
+    image = image.resize(size=(image_size, image_size))
+    image = torch.from_numpy(np.array(image)).to(dtype=model.dtype, device=model.device)
+    image = rearrange(image, 'h w c -> c h w')[None]
+    image = 2 * (image / 255) - 1
+
+    prompt = PROMPT_TEMPLATE['INSTRUCTION'].format(input="<image>\n" + question)
+    assert '<image>' in prompt
+    image_length = (image_size // 16) ** 2 + model.mar.buffer_size
+    prompt = prompt.replace('<image>', '<image>'*image_length)
+    input_ids = tokenizer.encode(
+        prompt, add_special_tokens=True, return_tensors='pt').cuda()
+    _, z_enc = model.extract_visual_feature(model.encode(image))
+    inputs_embeds = z_enc.new_zeros(*input_ids.shape, model.llm.config.hidden_size)
+    inputs_embeds[input_ids == image_token_idx] = z_enc.flatten(0, 1)
+    inputs_embeds[input_ids != image_token_idx] = model.llm.get_input_embeddings()(
+        input_ids[input_ids != image_token_idx]
+    )
+    output = model.llm.generate(inputs_embeds=inputs_embeds,
+                                use_cache=True,
+                                do_sample=False,
+                                max_new_tokens=max_new_tokens,
+                                eos_token_id=tokenizer.eos_token_id,
+                                pad_token_id=tokenizer.pad_token_id
+                                if tokenizer.pad_token_id is not None else
+                                tokenizer.eos_token_id
+                                )
+    return tokenizer.decode(output[0])
+
+
+harmon_tokenizer = AutoTokenizer.from_pretrained("wusize/Harmon-1_5B",
+                                                 trust_remote_code=True)
+harmon_model = AutoModel.from_pretrained("wusize/Harmon-1_5B",
+                                         trust_remote_code=True).eval().cuda().bfloat16()
+
+special_tokens_dict = {'additional_special_tokens': ["<image>", ]}
+num_added_toks = harmon_tokenizer.add_special_tokens(special_tokens_dict)
+assert num_added_toks == 1
+
+image_token_idx = harmon_tokenizer.encode("<image>", add_special_tokens=False)[-1]
+print(f"Image token: {harmon_tokenizer.decode(image_token_idx)}")
+
+image_file = "http://images.cocodataset.org/val2017/000000039769.jpg"
+raw_image = Image.open(requests.get(image_file, stream=True).raw).convert('RGB')
+
+output_text = question_answer(question='Describe the image in detail.',
+                              image=raw_image,
+                              model=harmon_model,
+                              tokenizer=harmon_tokenizer,
+                              )
+
+print(output_text)
+
+```
+
+
+### 🖼️ Text-to-image Generation
+```python
+import os
+import torch
+from transformers import AutoTokenizer, AutoModel
+from einops import rearrange
+from PIL import Image
+
+
+PROMPT_TEMPLATE = dict(
+    SYSTEM='<|im_start|>system\n{system}<|im_end|>\n',
+    INSTRUCTION='<|im_start|>user\n{input}<|im_end|>\n<|im_start|>assistant\n',
+    SUFFIX='<|im_end|>',
+    SUFFIX_AS_EOS=True,
+    SEP='\n',
+    STOP_WORDS=['<|im_end|>', '<|endoftext|>'])
+
+GENERATION_TEMPLATE = "Generate an image: {text}"
+
+
+@torch.no_grad()
+def generate_images(prompts,
+                    negative_prompt,
+                    tokenizer,
+                    model,
+                    output,
+                    grid_size=2, # will produce 2 x 2 images per prompt
+                    num_steps=64, cfg_scale=3.0, temperature=1.0, image_size=512):
+    assert image_size == 512
+    m = n = image_size // 16
+
+    prompts = [
+        PROMPT_TEMPLATE['INSTRUCTION'].format(input=prompt)
+        for prompt in prompts
+    ] * (grid_size ** 2)
+
+    if cfg_scale != 1.0:
+        prompts += [PROMPT_TEMPLATE['INSTRUCTION'].format(input=negative_prompt)] * len(prompts)
+
+    inputs = tokenizer(
+        prompts, add_special_tokens=True, return_tensors='pt', padding=True).to(model.device)
+
+    images = model.sample(**inputs, num_iter=num_steps, cfg=cfg_scale, cfg_schedule="constant",
+                          temperature=temperature, progress=True, image_shape=(m, n))
+    images = rearrange(images, '(m n b) c h w -> b (m h) (n w) c', m=grid_size, n=grid_size)
+
+    images = torch.clamp(
+        127.5 * images + 128.0, 0, 255).to("cpu", dtype=torch.uint8).numpy()
+
+    os.makedirs(output, exist_ok=True)
+    for idx, image in enumerate(images):
+        Image.fromarray(image).save(f"{output}/{idx:08d}.jpg")
+
+
+harmon_tokenizer = AutoTokenizer.from_pretrained("wusize/Harmon-1_5B",
+                                                 trust_remote_code=True)
+harmon_model = AutoModel.from_pretrained("wusize/Harmon-1_5B",
+                                         trust_remote_code=True).cuda().bfloat16().eval()
+
+
+texts = ['a dog on the left and a cat on the right.',
+         'a photo of a pink stop sign.']
+pos_prompts = [GENERATION_TEMPLATE.format(text=text) for text in texts]
+neg_prompt = 'Generate an image.' # for classifier-free guidance
+
+
+generate_images(prompts=pos_prompts,
+                negative_prompt=neg_prompt,
+                tokenizer=harmon_tokenizer,
+                model=harmon_model,
+                output='output',)
+
+```
+
+
+
+## 📚 Citation
+
+If you find Harmon useful for your research or applications, please cite our paper using the following BibTeX:
+
+```bibtex
+@misc{wu2025harmon,
+      title={Harmonizing Visual Representations for Unified Multimodal Understanding and
+      Generation},
+      author={Size Wu and Wenwei Zhang and Lumin Xu and Sheng Jin and Zhonghua Wu and
+      Qingyi Tao and Wentao Liu and Wei Li and Chen Change Loy},
+      year={2025},
+      eprint={2405.xxxxx},
+      archivePrefix={arXiv},
+      primaryClass={cs.CV}
+}
+```
+
+## 📜 License
+This project is licensed under [NTU S-Lab License 1.0](LICENSE).
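
The text-to-image example in the README repeats the prompt list `grid_size ** 2` times and later tiles the outputs with the pattern `'(m n b) c h w -> b (m h) (n w) c'`. The sketch below works through the index arithmetic that this grouping implies, in plain Python without einops. The helper names `flat_index` and `tile_positions` are hypothetical, and the sketch assumes the sampler returns one image per positive prompt in input order, which the diff shown here does not confirm.

```python
def flat_index(m_i, n_i, b_i, grid_size, num_prompts):
    """Flat batch position of the tile at grid row m_i, column n_i for prompt b_i.

    Mirrors the '(m n b)' grouping: m varies slowest, b varies fastest.
    """
    return (m_i * grid_size + n_i) * num_prompts + b_i


def tile_positions(grid_size, num_prompts):
    """Map each prompt index to the flat batch indices of its tiles, row by row."""
    return {
        b_i: [[flat_index(m_i, n_i, b_i, grid_size, num_prompts)
               for n_i in range(grid_size)]
              for m_i in range(grid_size)]
        for b_i in range(num_prompts)
    }


# Two prompts repeated grid_size ** 2 = 4 times, as in the README:
# [p0, p1, p0, p1, p0, p1, p0, p1]
prompts = ["p0", "p1"] * (2 ** 2)
layout = tile_positions(grid_size=2, num_prompts=2)

# Every tile in prompt 0's grid was generated from "p0", and likewise for "p1".
assert all(prompts[i] == "p0" for row in layout[0] for i in row)
assert all(prompts[i] == "p1" for row in layout[1] for i in row)
```

Because the prompt index is the fastest-varying axis, repeating the whole prompt list (rather than repeating each prompt in place) is what keeps each output grid tied to a single prompt.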

config.json

ADDED

@@ -0,0 +1,53 @@

+{
+  "architectures": [
+    "HarmonModel"
+  ],
+  "auto_map": {
+    "AutoConfig": "configuration_harmon.HarmonConfig",
+    "AutoModel": "modeling_harmon.HarmonModel"
+  },
+  "llm": {
+    "_attn_implementation": "flash_attention_2",
+    "attention_dropout": 0.0,
+    "attn_implementation": null,
+    "bos_token_id": 151643,
+    "eos_token_id": 151645,
+    "hidden_act": "silu",
+    "hidden_size": 1536,
+    "initializer_range": 0.02,
+    "intermediate_size": 8960,
+    "max_position_embeddings": 32768,
+    "max_window_layers": 21,
+    "model_type": "qwen2",
+    "num_attention_heads": 12,
+    "num_hidden_layers": 28,
+    "num_key_value_heads": 2,
+    "rms_norm_eps": 1e-06,
+    "rope_theta": 1000000.0,
+    "sliding_window": 32768,
+    "tie_word_embeddings": false,
+    "use_cache": true,
+    "use_sliding_window": false,
+    "vocab_size": 151936
+  },
+  "mar": {
+    "attn_dropout": 0.1,
+    "buffer_size": 64,
+    "class_num": 1000,
+    "diffloss_d": 12,
+    "diffloss_w": 1536,
+    "diffusion_batch_mul": 4,
+    "grad_checkpointing": false,
+    "img_size": 256,
+    "label_drop_prob": 0.1,
+    "mask_ratio_min": 0.7,
+    "num_sampling_steps": "100",
+    "patch_size": 1,
+    "proj_dropout": 0.1,
+    "type": "mar_huge",
+    "vae_embed_dim": 16,
+    "vae_stride": 16
+  },
+  "torch_dtype": "bfloat16",
+  "transformers_version": "4.45.2"
+}
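
The README computes the number of `<image>` placeholder tokens as `(image_size // 16) ** 2 + model.mar.buffer_size`, and the `mar` block of config.json sets `buffer_size` to 64, `vae_stride` to 16 and `patch_size` to 1. A small sketch of that arithmetic follows. The function name `image_token_count` is hypothetical, and reading the README's literal `16` as `vae_stride * patch_size` is an assumption rather than something the diff states.

```python
def image_token_count(image_size, vae_stride=16, patch_size=1, buffer_size=64):
    """Number of <image> placeholders for a square input of side image_size.

    Assumption: the README's hard-coded 16 equals vae_stride * patch_size.
    """
    side = image_size // (vae_stride * patch_size)  # latent grid side length
    return side * side + buffer_size                # grid tokens plus buffer tokens


# For the 512 x 512 inputs that the README asserts: 32 * 32 + 64
print(image_token_count(512))
```

With the values in this config, a 512 x 512 image expands to 1088 placeholder tokens in the prompt.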

configuration_harmon.py

ADDED

@@ -0,0 +1,9 @@

+from transformers import PretrainedConfig
+
+
+class HarmonConfig(PretrainedConfig):
+    model_type = "harmon"
+    def __init__(self, llm=None, mar=None, **kwargs):
+        super().__init__(**kwargs)
+        self.llm = llm
+        self.mar = mar

diffloss.py

ADDED

@@ -0,0 +1,249 @@

+import torch
+import torch.nn as nn
+from torch.utils.checkpoint import checkpoint
+import math
+
+from .misc import create_diffusion
+
+
+class DiffLoss(nn.Module):
+    """Diffusion Loss"""
+    def __init__(self, target_channels, z_channels, depth, width, num_sampling_steps, grad_checkpointing=False):
+        super(DiffLoss, self).__init__()
+        self.in_channels = target_channels
+        self.net = SimpleMLPAdaLN(
+            in_channels=target_channels,
+            model_channels=width,
+            out_channels=target_channels * 2, # for vlb loss
+            z_channels=z_channels,
+            num_res_blocks=depth,
+            grad_checkpointing=grad_checkpointing
+        )
+
+        self.train_diffusion = create_diffusion(timestep_respacing="", noise_schedule="cosine")
+        self.gen_diffusion = create_diffusion(timestep_respacing=num_sampling_steps, noise_schedule="cosine")
+
+    def forward(self, target, z, mask=None):
+        t = torch.randint(0, self.train_diffusion.num_timesteps, (target.shape[0],), device=target.device)
+        model_kwargs = dict(c=z)
+        loss_dict = self.train_diffusion.training_losses(self.net, target, t, model_kwargs)
+        loss = loss_dict["loss"]
+        if mask is not None:
+            loss = (loss * mask).sum() / mask.sum()
+        return loss.mean()
+
+    def sample(self, z, temperature=1.0, cfg=1.0):
+        # diffusion loss sampling
+        if not cfg == 1.0:
+            noise = torch.randn(z.shape[0] // 2, self.in_channels).cuda()
+            noise = torch.cat([noise, noise], dim=0)
+            model_kwargs = dict(c=z, cfg_scale=cfg)
+            sample_fn = self.net.forward_with_cfg
+        else:
+            noise = torch.randn(z.shape[0], self.in_channels).cuda()
+            model_kwargs = dict(c=z)
+            sample_fn = self.net.forward
+
+        sampled_token_latent = self.gen_diffusion.p_sample_loop(
+            sample_fn, noise.shape, noise, clip_denoised=False, model_kwargs=model_kwargs, progress=False,
+            temperature=temperature
+        )
+
+        return sampled_token_latent
+
+
+def modulate(x, shift, scale):
+    return x * (1 + scale) + shift
+
+
+class TimestepEmbedder(nn.Module):
+    """
+    Embeds scalar timesteps into vector representations.
+    """
+    def __init__(self, hidden_size, frequency_embedding_size=256):
+        super().__init__()
+        self.mlp = nn.Sequential(
+            nn.Linear(frequency_embedding_size, hidden_size, bias=True),
+            nn.SiLU(),
+            nn.Linear(hidden_size, hidden_size, bias=True),
+        )
+        self.frequency_embedding_size = frequency_embedding_size
+
+    @staticmethod
+    def timestep_embedding(t, dim, max_period=10000):
+        """
+        Create sinusoidal timestep embeddings.
+        :param t: a 1-D Tensor of N indices, one per batch element.
+                  These may be fractional.
+        :param dim: the dimension of the output.
+        :param max_period: controls the minimum frequency of the embeddings.
+        :return: an (N, D) Tensor of positional embeddings.
+        """
+        # https://github.com/openai/glide-text2im/blob/main/glide_text2im/nn.py
+        half = dim // 2
+        freqs = torch.exp(
+            -math.log(max_period) * torch.arange(start=0, end=half, dtype=torch.float32) / half
+        ).to(device=t.device)
+        args = t[:, None].float() * freqs[None]
+        embedding = torch.cat([torch.cos(args), torch.sin(args)], dim=-1)
+        if dim % 2:
+            embedding = torch.cat([embedding, torch.zeros_like(embedding[:, :1])], dim=-1)
+        return embedding
+
+    def forward(self, t):
+        t_freq = self.timestep_embedding(t, self.frequency_embedding_size)
+        t_emb = self.mlp(t_freq.to(self.mlp[0].weight.data.dtype))
+        return t_emb
+
+
+class ResBlock(nn.Module):
+    """
+    A residual block that can optionally change the number of channels.
+    :param channels: the number of input channels.
+    """
+
+    def __init__(
+        self,
+        channels
+    ):
+        super().__init__()
+        self.channels = channels
+
+        self.in_ln = nn.LayerNorm(channels, eps=1e-6)
+        self.mlp = nn.Sequential(
+            nn.Linear(channels, channels, bias=True),
+            nn.SiLU(),
+            nn.Linear(channels, channels, bias=True),
+        )
+
+        self.adaLN_modulation = nn.Sequential(
+            nn.SiLU(),
+            nn.Linear(channels, 3 * channels, bias=True)
+        )
+
+    def forward(self, x, y):
+        shift_mlp, scale_mlp, gate_mlp = self.adaLN_modulation(y).chunk(3, dim=-1)
+        h = modulate(self.in_ln(x), shift_mlp, scale_mlp)
+        h = self.mlp(h)
+        return x + gate_mlp * h
+
+
+class FinalLayer(nn.Module):
+    """
+    The final layer adopted from DiT.
+    """
+    def __init__(self, model_channels, out_channels):
+        super().__init__()
+        self.norm_final = nn.LayerNorm(model_channels, elementwise_affine=False, eps=1e-6)
+        self.linear = nn.Linear(model_channels, out_channels, bias=True)
+        self.adaLN_modulation = nn.Sequential(
+            nn.SiLU(),
+            nn.Linear(model_channels, 2 * model_channels, bias=True)
+        )
+
+    def forward(self, x, c):
+        shift, scale = self.adaLN_modulation(c).chunk(2, dim=-1)
+        x = modulate(self.norm_final(x), shift, scale)
+        x = self.linear(x)
+        return x
+
+
+class SimpleMLPAdaLN(nn.Module):
+    """
+    The MLP for Diffusion Loss.
+    :param in_channels: channels in the input Tensor.
+    :param model_channels: base channel count for the model.
+    :param out_channels: channels in the output Tensor.
+    :param z_channels: channels in the condition.
+    :param num_res_blocks: number of residual blocks per downsample.
+    """
+
+    def __init__(
+        self,
+        in_channels,
+        model_channels,
+        out_channels,
+        z_channels,
+        num_res_blocks,
+        grad_checkpointing=False
+    ):
+        super().__init__()
+
+        self.in_channels = in_channels
+        self.model_channels = model_channels
+        self.out_channels = out_channels
+        self.num_res_blocks = num_res_blocks
+        self.grad_checkpointing = grad_checkpointing
+
+        self.time_embed = TimestepEmbedder(model_channels)
+        self.cond_embed = nn.Linear(z_channels, model_channels)
+
+        self.input_proj = nn.Linear(in_channels, model_channels)
+
+        res_blocks = []
+        for i in range(num_res_blocks):
+            res_blocks.append(ResBlock(
+                model_channels,
+            ))
+
+        self.res_blocks = nn.ModuleList(res_blocks)
+        self.final_layer = FinalLayer(model_channels, out_channels)
+
+        self.initialize_weights()
+
+    def initialize_weights(self):
+        def _basic_init(module):
+            if isinstance(module, nn.Linear):
+                torch.nn.init.xavier_uniform_(module.weight)
+                if module.bias is not None:
+                    nn.init.constant_(module.bias, 0)
+        self.apply(_basic_init)
+
+        # Initialize timestep embedding MLP
+        nn.init.normal_(self.time_embed.mlp[0].weight, std=0.02)
+        nn.init.normal_(self.time_embed.mlp[2].weight, std=0.02)
+
+        # Zero-out adaLN modulation layers
+        for block in self.res_blocks:
+            nn.init.constant_(block.adaLN_modulation[-1].weight, 0)
+            nn.init.constant_(block.adaLN_modulation[-1].bias, 0)
+
+        # Zero-out output layers
+        nn.init.constant_(self.final_layer.adaLN_modulation[-1].weight, 0)
+        nn.init.constant_(self.final_layer.adaLN_modulation[-1].bias, 0)
+        nn.init.constant_(self.final_layer.linear.weight, 0)
+        nn.init.constant_(self.final_layer.linear.bias, 0)
+
+    def forward(self, x, t, c):
+        """
+        Apply the model to an input batch.
+        :param x: an [N x C] Tensor of inputs.
+        :param t: a 1-D batch of timesteps.
+        :param c: conditioning from AR transformer.
+        :return: an [N x C] Tensor of outputs.
+        """
+        # import pdb; pdb.set_trace()
+        x = self.input_proj(x.to(self.input_proj.weight.data.dtype))
+        t = self.time_embed(t)
+        c = self.cond_embed(c.to(self.cond_embed.weight.data.dtype))
+
+        y = t + c
+
+        if self.grad_checkpointing and not torch.jit.is_scripting():
+            for block in self.res_blocks:
+                x = checkpoint(block, x, y)
+        else:
+            for block in self.res_blocks:
+                x = block(x, y)
+
+        return self.final_layer(x, y)
+
+    def forward_with_cfg(self, x, t, c, cfg_scale):
+        half = x[: len(x) // 2]
+        combined = torch.cat([half, half], dim=0)
+        model_out = self.forward(combined, t, c)
+        eps, rest = model_out[:, :self.in_channels], model_out[:, self.in_channels:]
+        cond_eps, uncond_eps = torch.split(eps, len(eps) // 2, dim=0)
+        half_eps = uncond_eps + cfg_scale * (cond_eps - uncond_eps)
+        eps = torch.cat([half_eps, half_eps], dim=0)
+        return torch.cat([eps, rest], dim=1)
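
Two pieces of arithmetic in diffloss.py are easy to check by hand: the sinusoidal embedding in `TimestepEmbedder.timestep_embedding` and the guidance mix in `forward_with_cfg`. The sketch below restates both for a single scalar timestep and plain Python lists, so it runs without torch. It is an illustration of the same formulas, not the code from the commit, and the name `cfg_combine` is hypothetical.

```python
import math


def timestep_embedding(t, dim, max_period=10000):
    """Sinusoidal embedding of one scalar timestep t as a list of length dim."""
    half = dim // 2
    # Frequencies decay geometrically from 1 down towards 1 / max_period.
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    args = [t * f for f in freqs]
    emb = [math.cos(a) for a in args] + [math.sin(a) for a in args]
    if dim % 2:
        emb.append(0.0)  # zero-pad odd dimensions, as in the original
    return emb


def cfg_combine(cond_eps, uncond_eps, cfg_scale):
    """Classifier-free guidance: uncond + scale * (cond - uncond), elementwise."""
    return [u + cfg_scale * (c - u) for c, u in zip(cond_eps, uncond_eps)]


# At t = 0 every cosine term is 1 and every sine term is 0.
print(timestep_embedding(0, 4))
# A scale of 1.0 returns the conditional prediction unchanged.
print(cfg_combine([2.0, 4.0], [1.0, 1.0], 1.0))
```

A scale of 1.0 reduces to the conditional prediction, which is consistent with `DiffLoss.sample` skipping the guidance path entirely when `cfg == 1.0`.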

diffusion_utils.py

ADDED

@@ -0,0 +1,73 @@

+# Modified from OpenAI's diffusion repos
+# GLIDE: https://github.com/openai/glide-text2im/blob/main/glide_text2im/gaussian_diffusion.py
+# ADM: https://github.com/openai/guided-diffusion/blob/main/guided_diffusion
+# IDDPM: https://github.com/openai/improved-diffusion/blob/main/improved_diffusion/gaussian_diffusion.py
+
+import torch as th
+import numpy as np
+
+
+def normal_kl(mean1, logvar1, mean2, logvar2):
+    """
+    Compute the KL divergence between two gaussians.
+    Shapes are automatically broadcasted, so batches can be compared to
+    scalars, among other use cases.
+    """
+    tensor = None
+    for obj in (mean1, logvar1, mean2, logvar2):
+        if isinstance(obj, th.Tensor):
+            tensor = obj
+            break
+    assert tensor is not None, "at least one argument must be a Tensor"
+
+    # Force variances to be Tensors. Broadcasting helps convert scalars to
+    # Tensors, but it does not work for th.exp().
+    logvar1, logvar2 = [
+        x if isinstance(x, th.Tensor) else th.tensor(x).to(tensor)
+        for x in (logvar1, logvar2)
+    ]
+
+    return 0.5 * (
+        -1.0
+        + logvar2
+        - logvar1
+        + th.exp(logvar1 - logvar2)
+        + ((mean1 - mean2) ** 2) * th.exp(-logvar2)
+    )
+
+
+def approx_standard_normal_cdf(x):
+    """
+    A fast approximation of the cumulative distribution function of the
+    standard normal.
+    """
+    return 0.5 * (1.0 + th.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * th.pow(x, 3))))
+
+
+def discretized_gaussian_log_likelihood(x, *, means, log_scales):
+    """
+    Compute the log-likelihood of a Gaussian distribution discretizing to a
+    given image.
+    :param x: the target images. It is assumed that this was uint8 values,
+              rescaled to the range [-1, 1].
+    :param means: the Gaussian mean Tensor.
+    :param log_scales: the Gaussian log stddev Tensor.
+    :return: a tensor like x of log probabilities (in nats).
+    """
+    assert x.shape == means.shape == log_scales.shape
+    centered_x = x - means
+    inv_stdv = th.exp(-log_scales)
+    plus_in = inv_stdv * (centered_x + 1.0 / 255.0)
+    cdf_plus = approx_standard_normal_cdf(plus_in)
+    min_in = inv_stdv * (centered_x - 1.0 / 255.0)
+    cdf_min = approx_standard_normal_cdf(min_in)
+    log_cdf_plus = th.log(cdf_plus.clamp(min=1e-12))
+    log_one_minus_cdf_min = th.log((1.0 - cdf_min).clamp(min=1e-12))
+    cdf_delta = cdf_plus - cdf_min
+    log_probs = th.where(
+        x < -0.999,
+        log_cdf_plus,
+        th.where(x > 0.999, log_one_minus_cdf_min, th.log(cdf_delta.clamp(min=1e-12))),
+    )
+    assert log_probs.shape == x.shape
+    return log_probs
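A quick sanity check of the two closed-form pieces in diffusion_utils.py, written as a minimal standard-library sketch rather than as part of the commit. The helper names `normal_kl_scalar`, `approx_cdf`, and `exact_cdf` are hypothetical; they mirror the formulas in `normal_kl` and `approx_standard_normal_cdf` for plain floats so the check runs without PyTorch.

```python
import math


def normal_kl_scalar(mean1, logvar1, mean2, logvar2):
    """Scalar form of the KL(N1 || N2) expression used in normal_kl (hypothetical helper)."""
    return 0.5 * (
        -1.0
        + logvar2
        - logvar1
        + math.exp(logvar1 - logvar2)
        + ((mean1 - mean2) ** 2) * math.exp(-logvar2)
    )


def approx_cdf(x):
    """Tanh approximation with the same constants as approx_standard_normal_cdf."""
    return 0.5 * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def exact_cdf(x):
    """Exact standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


# KL of a Gaussian with itself should vanish.
kl_same = normal_kl_scalar(0.3, -1.0, 0.3, -1.0)

# Unit-variance Gaussians whose means differ by 1: KL = 0.5 * (mean gap)^2 = 0.5.
kl_shift = normal_kl_scalar(1.0, 0.0, 0.0, 0.0)

# Largest gap between the tanh approximation and the exact CDF on [-5, 5].
max_err = max(abs(approx_cdf(i / 100) - exact_cdf(i / 100)) for i in range(-500, 501))

print(f"kl_same={kl_same}, kl_shift={kl_shift}, max_err={max_err:.2e}")
```

The KL helper takes log-variances, matching the signature in the diff, so a unit variance is passed as `0.0`. The CDF comparison only checks a loose agreement on a fixed grid; it does not say anything about how the approximation behaves inside `discretized_gaussian_log_likelihood`, where the results are additionally clamped at `1e-12` before the logarithm.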

gaussian_diffusion.py

ADDED

|

@@ -0,0 +1,877 @@
