Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

- .gitattributes +2 -0

- README.md +282 -0

- chat_template.jinja +123 -0

- config.json +37 -0

- images/README +1 -0

- images/cogito-v2-405b-benchmarks.png +3 -0

- images/deep-cogito-logo.png +0 -0

- model-00001-of-00191.safetensors +3 -0

- model-00002-of-00191.safetensors +3 -0

- model-00003-of-00191.safetensors +3 -0

- model-00004-of-00191.safetensors +3 -0

- model-00005-of-00191.safetensors +3 -0

- model-00006-of-00191.safetensors +3 -0

- model-00007-of-00191.safetensors +3 -0

- model-00008-of-00191.safetensors +3 -0

- model-00009-of-00191.safetensors +3 -0

- model-00010-of-00191.safetensors +3 -0

- model-00011-of-00191.safetensors +3 -0

- model-00012-of-00191.safetensors +3 -0

- model-00013-of-00191.safetensors +3 -0

- model-00014-of-00191.safetensors +3 -0

- model-00015-of-00191.safetensors +3 -0

- model-00016-of-00191.safetensors +3 -0

- model-00017-of-00191.safetensors +3 -0

- model-00018-of-00191.safetensors +3 -0

- model-00019-of-00191.safetensors +3 -0

- model-00020-of-00191.safetensors +3 -0

- model-00021-of-00191.safetensors +3 -0

- model-00022-of-00191.safetensors +3 -0

- model-00023-of-00191.safetensors +3 -0

- model-00024-of-00191.safetensors +3 -0

- model-00025-of-00191.safetensors +3 -0

- model-00026-of-00191.safetensors +3 -0

- model-00027-of-00191.safetensors +3 -0

- model-00028-of-00191.safetensors +3 -0

- model-00029-of-00191.safetensors +3 -0

- model-00030-of-00191.safetensors +3 -0

- model-00031-of-00191.safetensors +3 -0

- model-00032-of-00191.safetensors +3 -0

- model-00033-of-00191.safetensors +3 -0

- model-00034-of-00191.safetensors +3 -0

- model-00035-of-00191.safetensors +3 -0

- model-00036-of-00191.safetensors +3 -0

- model-00037-of-00191.safetensors +3 -0

- model-00038-of-00191.safetensors +3 -0

- model-00039-of-00191.safetensors +3 -0

- model-00040-of-00191.safetensors +3 -0

- model-00041-of-00191.safetensors +3 -0

- model-00042-of-00191.safetensors +3 -0

- model-00043-of-00191.safetensors +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text


| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

images/cogito-v2-405b-benchmarks.png filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,282 @@


| 1 |

+

---

|

| 2 |

+

tags:

|

| 3 |

+

- unsloth

|

| 4 |

+

license: llama3.1

|

| 5 |

+

library_name: transformers

|

| 6 |

+

base_model:

|

| 7 |

+

- deepcogito/cogito-v2-preview-llama-405B

|

| 8 |

+

pipeline_tag: text-generation

|

| 9 |

+

---

|

| 10 |

+

> [!NOTE]

|

| 11 |

+

> Includes Unsloth **chat template fixes**! <br> For `llama.cpp`, use `--jinja`

|

| 12 |

+

>

|

| 13 |

+

|

| 14 |

+

<div>

|

| 15 |

+

<p style="margin-top: 0;margin-bottom: 0;">

|

| 16 |

+

<em><a href="https://docs.unsloth.ai/basics/unsloth-dynamic-v2.0-gguf">Unsloth Dynamic 2.0</a> achieves superior accuracy & outperforms other leading quants.</em>

|

| 17 |

+

</p>

|

| 18 |

+

<div style="display: flex; gap: 5px; align-items: center; ">

|

| 19 |

+

<a href="https://github.com/unslothai/unsloth/">

|

| 20 |

+

<img src="https://github.com/unslothai/unsloth/raw/main/images/unsloth%20new%20logo.png" width="133">

|

| 21 |

+

</a>

|

| 22 |

+

<a href="https://discord.gg/unsloth">

|

| 23 |

+

<img src="https://github.com/unslothai/unsloth/raw/main/images/Discord%20button.png" width="173">

|

| 24 |

+

</a>

|

| 25 |

+

<a href="https://docs.unsloth.ai/">

|

| 26 |

+

<img src="https://raw.githubusercontent.com/unslothai/unsloth/refs/heads/main/images/documentation%20green%20button.png" width="143">

|

| 27 |

+

</a>

|

| 28 |

+

</div>

|

| 29 |

+

</div>

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

<p align="center">

|

| 33 |

+

<img src="images/deep-cogito-logo.png" alt="Logo" width="40%">

|

| 34 |

+

</p>

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

# Cogito v2 preview - 405B

|

| 38 |

+

|

| 39 |

+

[Blog Post](https://www.deepcogito.com/research/cogito-v2-preview)

|

| 40 |

+

|

| 41 |

+

The Cogito v2 LLMs are instruction tuned generative models. All models are released under an open license for commercial use.

|

| 42 |

+

|

| 43 |

+

- Cogito v2 models are hybrid reasoning models. Each model can answer directly (standard LLM), or self-reflect before answering (like reasoning models).

|

| 44 |

+

- The LLMs are trained using **Iterated Distillation and Amplification (IDA)** - a scalable and efficient alignment strategy for superintelligence using iterative self-improvement.

|

| 45 |

+

- The models have been optimized for coding, STEM, instruction following and general helpfulness, and have significantly higher multilingual, coding and tool calling capabilities than size equivalent counterparts.

|

| 46 |

+

- In both standard and reasoning modes, Cogito v2-preview models outperform their size equivalent counterparts on common industry benchmarks.

|

| 47 |

+

- This model is trained in over 30 languages and supports a context length of 128k.

|

| 48 |

+

|

| 49 |

+

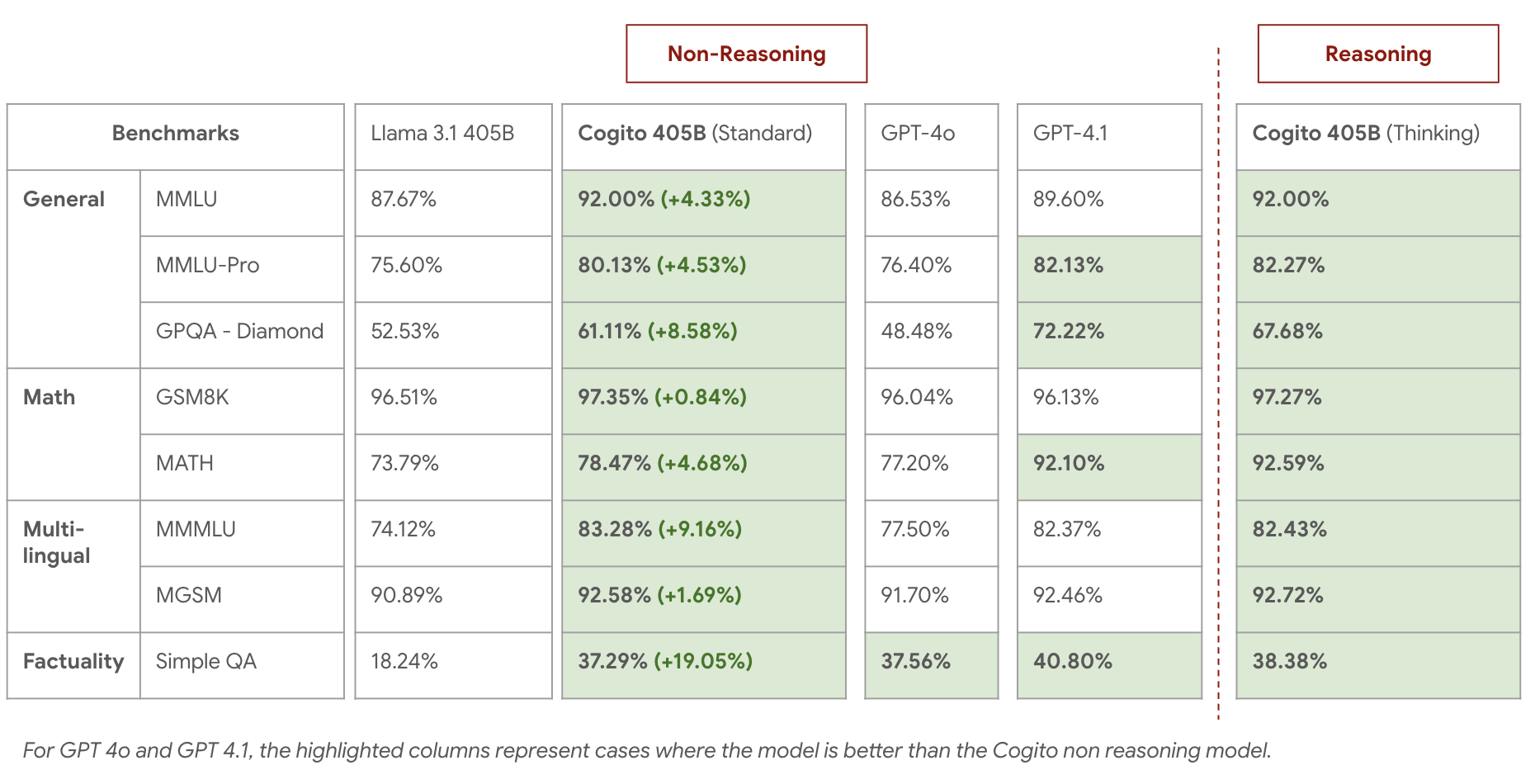

# Evaluations

|

| 50 |

+

Here is the model performance on some standard industry benchmarks:

|

| 51 |

+

|

| 52 |

+

<p align="left">

|

| 53 |

+

<img src="images/cogito-v2-405b-benchmarks.png" alt="Logo" width="90%">

|

| 54 |

+

</p>

|

| 55 |

+

|

| 56 |

+

For detailed evaluations, please refer to the [Blog Post](https://www.deepcogito.com/research/cogito-v2-preview).

|

| 57 |

+

|

| 58 |

+

# Usage

|

| 59 |

+

Here is a snippet for usage with Transformers:

|

| 60 |

+

|

| 61 |

+

```python

|

| 62 |

+

import transformers

|

| 63 |

+

import torch

|

| 64 |

+

|

| 65 |

+

model_id = "deepcogito/cogito-v2-preview-llama-405B"

|

| 66 |

+

|

| 67 |

+

pipeline = transformers.pipeline(

|

| 68 |

+

"text-generation",

|

| 69 |

+

model=model_id,

|

| 70 |

+

model_kwargs={"torch_dtype": torch.bfloat16},

|

| 71 |

+

device_map="auto",

|

| 72 |

+

)

|

| 73 |

+

|

| 74 |

+

messages = [

|

| 75 |

+

{"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},

|

| 76 |

+

{"role": "user", "content": "Give me a short introduction to LLMs."},

|

| 77 |

+

]

|

| 78 |

+

|

| 79 |

+

outputs = pipeline(

|

| 80 |

+

messages,

|

| 81 |

+

max_new_tokens=512,

|

| 82 |

+

)

|

| 83 |

+

|

| 84 |

+

print(outputs[0]["generated_text"][-1])

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

## Implementing extended thinking

|

| 90 |

+

- By default, the model will answer in the standard mode.

|

| 91 |

+

- To enable thinking, use either of the following two methods:

|

| 92 |

+

- Set `enable_thinking=True` while applying the chat template.

|

| 93 |

+

- Add a specific system prompt, along with prefilling the response with "\<think\>\n".

|

| 94 |

+

|

| 95 |

+

**NOTE: Unlike Cogito v1 models, we initiate the response with "\<think\>\n" at the beginning of every output when reasoning is enabled. This is because hybrid models can be brittle at times (<0.1% of the cases), and adding a "\<think\>\n" ensures that the model does indeed respect thinking.**

|

| 96 |

+

|

| 97 |

+

### Method 1 - Set enable_thinking=True in the tokenizer

|

| 98 |

+

If you are using Hugging Face tokenizers, you can simply add the argument `enable_thinking=True` when applying the chat template (this option is built into the chat template).

|

| 99 |

+

|

| 100 |

+

Here is an example -

|

| 101 |

+

```python

|

| 102 |

+

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 103 |

+

|

| 104 |

+

model_name = "deepcogito/cogito-v2-preview-llama-405B"

|

| 105 |

+

|

| 106 |

+

model = AutoModelForCausalLM.from_pretrained(

|

| 107 |

+

model_name,

|

| 108 |

+

torch_dtype="auto",

|

| 109 |

+

device_map="auto"

|

| 110 |

+

)

|

| 111 |

+

tokenizer = AutoTokenizer.from_pretrained(model_name)

|

| 112 |

+

|

| 113 |

+

prompt = "Give me a short introduction to LLMs."

|

| 114 |

+

messages = [

|

| 115 |

+

{"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},

|

| 116 |

+

{"role": "user", "content": prompt}

|

| 117 |

+

]

|

| 118 |

+

|

| 119 |

+

text = tokenizer.apply_chat_template(

|

| 120 |

+

messages,

|

| 121 |

+

tokenize=False,

|

| 122 |

+

add_generation_prompt=True,

|

| 123 |

+

enable_thinking=True

|

| 124 |

+

)

|

| 125 |

+

model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

|

| 126 |

+

|

| 127 |

+

generated_ids = model.generate(

|

| 128 |

+

**model_inputs,

|

| 129 |

+

max_new_tokens=512

|

| 130 |

+

)

|

| 131 |

+

generated_ids = [

|

| 132 |

+

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

|

| 133 |

+

]

|

| 134 |

+

|

| 135 |

+

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

|

| 136 |

+

print(response)

|

| 137 |

+

```

|
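With thinking enabled, the decoded response holds the reasoning followed by the final answer. The following is a minimal sketch for separating the two. It assumes the model closes its reasoning with a `</think>` tag; the README only confirms the opening `<think>\n` prefill, so the closing tag and the helper name `split_thinking` are assumptions, not part of the model card.

```python
def split_thinking(response: str, close_tag: str = "</think>") -> tuple[str, str]:
    """Split a decoded response into (reasoning, answer).

    Assumption: the prompt already ended with "<think>\n", so the response
    starts inside the reasoning block and the model emits `close_tag` once
    it is done reasoning. If the tag is absent, the whole response is
    treated as the answer.
    """
    reasoning, separator, answer = response.partition(close_tag)
    if not separator:
        return "", response.strip()
    return reasoning.strip(), answer.strip()


# Hypothetical decoded output, for illustration only.
example = "The user wants pirate speak.\n</think>\nArr, LLMs be mighty big models!"
reasoning, answer = split_thinking(example)
print(answer)
```

If the tokenizer treats the thinking tags as special tokens, decoding with `skip_special_tokens=True` may drop them, in which case the split has to happen on token IDs instead.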

| 138 |

+

|

| 139 |

+

### Method 2 - Add a specific system prompt, along with prefilling the response with "\<think\>\n".

|

| 140 |

+

To enable thinking using this method, you need to do two things -

|

| 141 |

+

|

| 142 |

+

|

| 143 |

+

Step 1 - Simply use this in the system prompt `system_instruction = 'Enable deep thinking subroutine.'`

|

| 144 |

+

|

| 145 |

+

If you already have a system_instruction, then use `system_instruction = 'Enable deep thinking subroutine.' + '\n\n' + system_instruction`.

|

| 146 |

+

|

| 147 |

+

Step 2 - Prefill the response with the tokens `"<think>\n"`.

|

| 148 |

+

|

| 149 |

+

Here is an example -

|

| 150 |

+

|

| 151 |

+

```python

|

| 152 |

+

from transformers import AutoModelForCausalLM, AutoTokenizer

|

| 153 |

+

import torch

|

| 154 |

+

|

| 155 |

+

model_name = "deepcogito/cogito-v2-preview-llama-405B"

|

| 156 |

+

|

| 157 |

+

model = AutoModelForCausalLM.from_pretrained(

|

| 158 |

+

model_name,

|

| 159 |

+

torch_dtype="auto",

|

| 160 |

+

device_map="auto"

|

| 161 |

+

)

|

| 162 |

+

tokenizer = AutoTokenizer.from_pretrained(model_name)

|

| 163 |

+

|

| 164 |

+

# Step 1 - Add deep thinking instruction.

|

| 165 |

+

DEEP_THINKING_INSTRUCTION = "Enable deep thinking subroutine."

|

| 166 |

+

|

| 167 |

+

messages = [

|

| 168 |

+

{"role": "system", "content": DEEP_THINKING_INSTRUCTION},

|

| 169 |

+

{"role": "user", "content": "Write a bash script that takes a matrix represented as a string with format '[1,2],[3,4],[5,6]' and prints the transpose in the same format."},

|

| 170 |

+

]

|

| 171 |

+

|

| 172 |

+

text = tokenizer.apply_chat_template(

|

| 173 |

+

messages,

|

| 174 |

+

tokenize=False,

|

| 175 |

+

add_generation_prompt=True

|

| 176 |

+

)

|

| 177 |

+

|

| 178 |

+

# Step 2 - Prefill response with "<think>\n".

|

| 179 |

+

text += "<think>\n"

|

| 180 |

+

|

| 181 |

+

# Now, continue as usual.

|

| 182 |

+

model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

|

| 183 |

+

|

| 184 |

+

generated_ids = model.generate(

|

| 185 |

+

**model_inputs,

|

| 186 |

+

max_new_tokens=512

|

| 187 |

+

)

|

| 188 |

+

generated_ids = [

|

| 189 |

+

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

|

| 190 |

+

]

|

| 191 |

+

|

| 192 |

+

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

|

| 193 |

+

print(response)

|

| 194 |

+

```

|

| 195 |

+

|

| 196 |

+

|

| 197 |

+

Similarly, if you have a system prompt, you can prepend the `DEEP_THINKING_INSTRUCTION` to it in this way -

|

| 198 |

+

|

| 199 |

+

```python

|

| 200 |

+

DEEP_THINKING_INSTRUCTION = "Enable deep thinking subroutine."

|

| 201 |

+

|

| 202 |

+

system_prompt = "Reply to each prompt with only the actual code - no explanations."

|

| 203 |

+

prompt = "Write a bash script that takes a matrix represented as a string with format '[1,2],[3,4],[5,6]' and prints the transpose in the same format."

|

| 204 |

+

|

| 205 |

+

messages = [

|

| 206 |

+

{"role": "system", "content": DEEP_THINKING_INSTRUCTION + '\n\n' + system_prompt},

|

| 207 |

+

{"role": "user", "content": prompt}

|

| 208 |

+

]

|

| 209 |

+

```

|
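The two cases above (with and without an existing system prompt) can be folded into one small helper. This is only a sketch: the function name `build_thinking_messages` is hypothetical, while the instruction string and the `'\n\n'` separator are taken from the README. Remember that Method 2 still requires appending `"<think>\n"` to the templated text afterwards.

```python
DEEP_THINKING_INSTRUCTION = "Enable deep thinking subroutine."


def build_thinking_messages(prompt, system_prompt=None):
    """Return a chat message list with the deep thinking instruction in the system turn.

    Hypothetical helper: if a system prompt is given, the instruction is
    prepended to it, separated by a blank line; otherwise the instruction
    is the whole system message.
    """
    if system_prompt:
        system_content = DEEP_THINKING_INSTRUCTION + "\n\n" + system_prompt
    else:
        system_content = DEEP_THINKING_INSTRUCTION
    return [
        {"role": "system", "content": system_content},
        {"role": "user", "content": prompt},
    ]


messages = build_thinking_messages(
    "Write a bash script that transposes a matrix.",
    system_prompt="Reply to each prompt with only the actual code - no explanations.",
)
print(messages[0]["content"])
```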

| 210 |

+

|

| 211 |

+

|

| 212 |

+

# Tool Calling

|

| 213 |

+

Cogito models support tool calling (single, parallel, multiple and parallel_multiple) both in standard and extended thinking mode.

|

| 214 |

+

|

| 215 |

+

Here is a snippet -

|

| 216 |

+

|

| 217 |

+

```python

|

| 218 |

+

# First, define a tool

|

| 219 |

+

def get_current_temperature(location: str) -> float:

|

| 220 |

+

    """

|

| 221 |

+

    Get the current temperature at a location.

|

| 222 |

+

|

| 223 |

+

    Args:

|

| 224 |

+

        location: The location to get the temperature for, in the format "City, Country"

|

| 225 |

+

    Returns:

|

| 226 |

+

        The current temperature at the specified location, as a float.

|

| 227 |

+

    """

|

| 228 |

+

    return 22.  # A real function should probably actually get the temperature!

|

| 229 |

+

|

| 230 |

+

# Next, create a chat and apply the chat template

|

| 231 |

+

messages = [

|

| 232 |

+

{"role": "user", "content": "Hey, what's the temperature in Paris right now?"}

|

| 233 |

+

]

|

| 234 |

+

|

| 235 |

+

model_inputs = tokenizer.apply_chat_template(messages, tools=[get_current_temperature], add_generation_prompt=True)

|

| 236 |

+

|

| 237 |

+

text = tokenizer.apply_chat_template(messages, tools=[get_current_temperature], add_generation_prompt=True, tokenize=False)

|

| 238 |

+

inputs = tokenizer(text, return_tensors="pt", add_special_tokens=False).to(model.device)

|

| 239 |

+

outputs = model.generate(**inputs, max_new_tokens=512)

|

| 240 |

+

output_text = tokenizer.batch_decode(outputs)[0][len(text):]

|

| 241 |

+

print(output_text)

|

| 242 |

+

```

|

| 243 |

+

|

| 244 |

+

This will result in the output -

|

| 245 |

+

```

|

| 246 |

+

<tool_call>

|

| 247 |

+

{"name": "get_current_temperature", "arguments": {"location": "Paris, France"}}

|

| 248 |

+

</tool_call><|eot_id|>

|

| 249 |

+

```

|
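To act on output like the above, the JSON payload has to be pulled out of the `<tool_call>` tags and routed to the matching Python function. The following is a rough sketch of that step, assuming the output format shown above; the names `extract_tool_calls` and `TOOL_REGISTRY` are hypothetical, and the tool is the same stub as in the README.

```python
import json
import re

# Matches one JSON object wrapped in <tool_call>...</tool_call> tags.
TOOL_CALL_PATTERN = re.compile(r"<tool_call>\s*(\{.*?\})\s*</tool_call>", re.DOTALL)


def extract_tool_calls(output_text):
    """Return every tool call found in the generated text as a list of dicts."""
    return [json.loads(m.group(1)) for m in TOOL_CALL_PATTERN.finditer(output_text)]


def get_current_temperature(location: str) -> float:
    """Stub tool, as in the README; a real one would query a weather service."""
    return 22.0


# Hypothetical registry mapping tool names to callables.
TOOL_REGISTRY = {"get_current_temperature": get_current_temperature}

output_text = (
    "<tool_call>\n"
    '{"name": "get_current_temperature", "arguments": {"location": "Paris, France"}}\n'
    "</tool_call><|eot_id|>"
)

for call in extract_tool_calls(output_text):
    result = TOOL_REGISTRY[call["name"]](**call["arguments"])
    print(call["name"], "->", result)
```

Because the pattern uses `finditer`, the same loop handles the parallel case where the model emits several `<tool_call>` blocks in one turn.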

| 250 |

+

|

| 251 |

+

You can then generate text from this input as normal. If the model generates a tool call, you should add it to the chat like so:

|

| 252 |

+

|

| 253 |

+

```python

|

| 254 |

+

tool_call = {"name": "get_current_temperature", "arguments": {"location": "Paris, France"}}

|

| 255 |

+

messages.append({"role": "assistant", "tool_calls": [{"type": "function", "function": tool_call}]})

|

| 256 |

+

```

|

| 257 |

+

|

| 258 |

+

and then call the tool and append the result, with the `tool` role, like so:

|

| 259 |

+

|

| 260 |

+

```python

|

| 261 |

+

messages.append({"role": "tool", "name": "get_current_temperature", "content": "22.0"})

|

| 262 |

+

```

|

| 263 |

+

|

| 264 |

+

After that, you can `generate()` again to let the model use the tool result in the chat:

|

| 265 |

+

|

| 266 |

+

```python

|

| 267 |

+

text = tokenizer.apply_chat_template(messages, tools=[get_current_temperature], add_generation_prompt=True, tokenize=False)

|

| 268 |

+

inputs = tokenizer(text, return_tensors="pt", add_special_tokens=False).to(model.device)

|

| 269 |

+

outputs = model.generate(**inputs, max_new_tokens=512)

|

| 270 |

+

output_text = tokenizer.batch_decode(outputs)[0][len(text):]

|

| 271 |

+

```

|

| 272 |

+

|

| 273 |

+

This should result in the string -

|

| 274 |

+

```

|

| 275 |

+

'The current temperature in Paris is 22.0 degrees.<|eot_id|>'

|

| 276 |

+

```

|

| 277 |

+

|

| 278 |

+

## License

|

| 279 |

+

This repository and the model weights are licensed under the [Llama 3.1 Community License Agreement](https://github.com/meta-llama/llama-models/blob/main/models/llama3_1/LICENSE) (Llama models' default license agreement).

|

| 280 |

+

|

| 281 |

+

## Contact

|

| 282 |

+

If you would like to reach out to our team, send an email to [[email protected]]([email protected]).

|

chat_template.jinja

ADDED

|

@@ -0,0 +1,123 @@


| 1 |

+

{{- bos_token }}

|

| 2 |

+

{%- if not tools is defined %}

|

| 3 |

+

{%- set tools = none %}

|

| 4 |

+

{%- endif %}

|

| 5 |

+

{%- if not enable_thinking is defined %}

|

| 6 |

+

{%- set enable_thinking = false %}

|

| 7 |

+

{%- endif %}

|

| 8 |

+

{#- This block extracts the system message, so we can slot it into the right place. #}

|

| 9 |

+

{%- if messages[0]['role'] == 'system' %}

|

| 10 |

+

{%- set system_message = messages[0]['content']|trim %}

|

| 11 |

+

{%- set messages = messages[1:] %}

|

| 12 |

+

{%- else %}

|

| 13 |

+

{%- set system_message = "" %}

|

| 14 |

+

{%- endif %}

|

| 15 |

+

{#- Set the system message. If enable_thinking is true, add the "Enable deep thinking subroutine." #}

|

| 16 |

+

{%- if enable_thinking %}

|

| 17 |

+

{%- if system_message != "" %}

|

| 18 |

+

{%- set system_message = "Enable deep thinking subroutine.

|

| 19 |

+

|

| 20 |

+

" ~ system_message %}

|

| 21 |

+

{%- else %}

|

| 22 |

+

{%- set system_message = "Enable deep thinking subroutine." %}

|

| 23 |

+

{%- endif %}

|

| 24 |

+

{%- endif %}

|

| 25 |

+

{#- Set the system message. In case there are tools present, add them to the system message. #}

|

| 26 |

+

{%- if tools is not none or system_message != '' %}

|

| 27 |

+

{{- "<|start_header_id|>system<|end_header_id|>

|

| 28 |

+

|

| 29 |

+

" }}

|

| 30 |

+

{{- system_message }}

|

| 31 |

+

{%- if tools is not none %}

|

| 32 |

+

{%- if system_message != "" %}

|

| 33 |

+

{{- "

|

| 34 |

+

|

| 35 |

+

" }}

|

| 36 |

+

{%- endif %}

|

| 37 |

+

{{- "Available Tools:

|

| 38 |

+

" }}

|

| 39 |

+

{%- for t in tools %}

|

| 40 |

+

{{- t | tojson(indent=4) }}

|

| 41 |

+

{{- "

|

| 42 |

+

|

| 43 |

+

" }}

|

| 44 |

+

{%- endfor %}

|

| 45 |

+

{%- endif %}

|

| 46 |

+

{{- "<|eot_id|>" }}

|

| 47 |

+

{%- endif %}

|

| 48 |

+

|

| 49 |

+

{#- Rest of the messages #}

|

| 50 |

+

{%- for message in messages %}

|

| 51 |

+

{#- The special cases are when the message is from a tool (via role ipython/tool/tool_results) or when the message is from the assistant, but has "tool_calls". If not, we add the message directly as usual. #}

|

| 52 |

+

{#- Case 1 - Usual, non tool related message. #}

|

| 53 |

+

{%- if not (message.role == "ipython" or message.role == "tool" or message.role == "tool_results" or (message.tool_calls is defined and message.tool_calls is not none)) %}

|

| 54 |

+

{{- '<|start_header_id|>' + message['role'] + '<|end_header_id|>

|

| 55 |

+

|

| 56 |

+

' }}

|

| 57 |

+

{%- if message['content'] is string %}

|

| 58 |

+

{{- message['content'] | trim }}

|

| 59 |

+

{%- else %}

|

| 60 |

+

{%- for item in message['content'] %}

|

| 61 |

+

{%- if item.type == 'text' %}

|

| 62 |

+

{{- item.text | trim }}

|

| 63 |

+

{%- endif %}

|

| 64 |

+

{%- endfor %}

|

| 65 |

+

{%- endif %}

|

| 66 |

+

{{- '<|eot_id|>' }}

|

| 67 |

+

|

| 68 |

+

{#- Case 2 - the response is from the assistant, but has a tool call returned. The assistant may also have returned some content along with the tool call. #}

|

| 69 |

+

{%- elif message.tool_calls is defined and message.tool_calls is not none %}

|

| 70 |

+

{{- "<|start_header_id|>assistant<|end_header_id|>

|

| 71 |

+

|

| 72 |

+

" }}

|

| 73 |

+

{%- if message['content'] is string %}

|

| 74 |

+

{{- message['content'] | trim }}

|

| 75 |

+

{%- else %}

|

| 76 |

+

{%- for item in message['content'] %}

|

| 77 |

+

{%- if item.type == 'text' %}

|

| 78 |

+

{{- item.text | trim }}

|

| 79 |

+

{%- if item.text | trim != "" %}

|

| 80 |

+

{{- "

|

| 81 |

+

|

| 82 |

+

" }}

|

| 83 |

+

{%- endif %}

|

| 84 |

+

{%- endif %}

|

| 85 |

+

{%- endfor %}

|

| 86 |

+

{%- endif %}

|

| 87 |

+

{{- "[" }}

|

| 88 |

+

{%- for tool_call in message.tool_calls %}

|

| 89 |

+

{%- set out = tool_call.function|tojson %}

|

| 90 |

+

{%- if not tool_call.id is defined %}

|

| 91 |

+

{{- out }}

|

| 92 |

+

{%- else %}

|

| 93 |

+

{{- out[:-1] }}

|

| 94 |

+

{{- ', "id": "' + tool_call.id + '"}' }}

|

| 95 |

+

{%- endif %}

|

| 96 |

+

{%- if not loop.last %}

|

| 97 |

+

{{- ", " }}

|

| 98 |

+

{%- else %}

|

| 99 |

+

{{- "]<|eot_id|>" }}

|

| 100 |

+

{%- endif %}

|

| 101 |

+

{%- endfor %}

|

| 102 |

+

|

| 103 |

+

{#- Case 3 - the response is from a tool call. The tool call may have an id associated with it as well. If it does, we add it to the prompt. #}

|

| 104 |

+

{%- elif message.role == "ipython" or message["role"] == "tool_results" or message["role"] == "tool" %}

|

| 105 |

+

{{- "<|start_header_id|>ipython<|end_header_id|>

|

| 106 |

+

|

| 107 |

+

" }}

|

| 108 |

+

{%- if message.tool_call_id is defined and message.tool_call_id != '' %}

|

| 109 |

+

{{- '{"content": ' + (message.content | tojson) + ', "call_id": "' + message.tool_call_id + '"}' }}

|

| 110 |

+

{%- else %}

|

| 111 |

+

{{- '{"content": ' + (message.content | tojson) + '}' }}

|

| 112 |

+

{%- endif %}

|

| 113 |

+

{{- "<|eot_id|>" }}

|

| 114 |

+

{%- endif %}

|

| 115 |

+

{%- endfor %}

|

| 116 |

+

{%- if add_generation_prompt %}

|

| 117 |

+

{{- '<|start_header_id|>assistant<|end_header_id|>

|

| 118 |

+

|

| 119 |

+

' }}

|

| 120 |

+

{%- if enable_thinking %}

|

| 121 |

+

{{- '<think>\n' }}

|

| 122 |

+

{%- endif %}

|

| 123 |

+

{%- endif %}

|
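Cases 2 and 3 of the template above build their payloads by string concatenation around `tojson`. The plain-Python sketch below mirrors that logic to make the resulting shapes easier to see. It is an approximation, not the template itself: the function names are hypothetical, the role headers are omitted, `json.dumps` may differ from Jinja's `tojson` in escaping details, and at least one tool call is assumed to be present.

```python
import json


def render_tool_calls(tool_calls):
    """Mirror Case 2: serialize assistant tool calls as a bracketed list."""
    parts = []
    for call in tool_calls:
        out = json.dumps(call["function"])
        if "id" in call:
            # Drop the closing brace and splice the id in, as the template does.
            out = out[:-1] + ', "id": "' + call["id"] + '"}'
        parts.append(out)
    return "[" + ", ".join(parts) + "]<|eot_id|>"


def render_tool_result(message):
    """Mirror Case 3: wrap a tool result, adding call_id when one is given."""
    body = '{"content": ' + json.dumps(message["content"])
    if message.get("tool_call_id"):
        body += ', "call_id": "' + message["tool_call_id"] + '"'
    return body + "}<|eot_id|>"


tool_call = {"name": "get_current_temperature", "arguments": {"location": "Paris, France"}}
print(render_tool_calls([{"type": "function", "function": tool_call}]))
print(render_tool_result({"role": "tool", "content": "22.0"}))
```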

config.json

ADDED

|

@@ -0,0 +1,37 @@


| 1 |

+

{

|

| 2 |

+

"architectures": [

|

| 3 |

+

"LlamaForCausalLM"

|

| 4 |

+

],

|

| 5 |

+

"attention_bias": false,

|

| 6 |

+

"attention_dropout": 0.0,

|

| 7 |

+

"bos_token_id": 128000,

|

| 8 |

+

"eos_token_id": 128001,

|

| 9 |

+

"head_dim": 128,

|

| 10 |

+

"hidden_act": "silu",

|

| 11 |

+

"hidden_size": 16384,

|

| 12 |

+

"initializer_range": 0.02,

|

| 13 |

+

"intermediate_size": 53248,

|

| 14 |

+

"max_position_embeddings": 131072,

|

| 15 |

+

"mlp_bias": false,

|

| 16 |

+

"model_type": "llama",

|

| 17 |

+

"num_attention_heads": 128,

|

| 18 |

+

"num_hidden_layers": 126,

|

| 19 |

+

"num_key_value_heads": 8,

|

| 20 |

+

"pad_token_id": 128004,

|

| 21 |

+

"pretraining_tp": 1,

|

| 22 |

+

"rms_norm_eps": 1e-05,

|

| 23 |

+

"rope_scaling": {

|

| 24 |

+

"factor": 8.0,

|

| 25 |

+

"high_freq_factor": 4.0,

|

| 26 |

+

"low_freq_factor": 1.0,

|

| 27 |

+

"original_max_position_embeddings": 8192,

|

| 28 |

+

"rope_type": "llama3"

|

| 29 |

+

},

|

| 30 |

+

"rope_theta": 500000.0,

|

| 31 |

+

"tie_word_embeddings": false,

|

| 32 |

+

"torch_dtype": "bfloat16",

|

| 33 |

+

"transformers_version": "4.54.1",

|

| 34 |

+

"unsloth_fixed": true,

|

| 35 |

+

"use_cache": true,

|

| 36 |

+

"vocab_size": 128256

|

| 37 |

+

}

|
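The values in this config are enough for a back-of-the-envelope check of the model size and KV-cache cost. The sketch below assumes the standard Llama layer layout (query/key/value/output projections, gate/up/down MLP projections, two RMSNorm weights per layer, no biases, untied input and output embeddings); that layout is an assumption based on `model_type`, `attention_bias`, `mlp_bias`, and `tie_word_embeddings`, not something the config spells out. The function names are hypothetical.

```python
# Values copied from config.json above.
cfg = {
    "hidden_size": 16384,
    "intermediate_size": 53248,
    "num_hidden_layers": 126,
    "num_attention_heads": 128,
    "num_key_value_heads": 8,
    "head_dim": 128,
    "vocab_size": 128256,
    "max_position_embeddings": 131072,
}


def count_parameters(cfg):
    """Estimate total weights, assuming the standard Llama layout."""
    h = cfg["hidden_size"]
    q_dim = cfg["num_attention_heads"] * cfg["head_dim"]
    kv_dim = cfg["num_key_value_heads"] * cfg["head_dim"]
    attention = h * q_dim + 2 * h * kv_dim + q_dim * h  # q, k, v, o projections
    mlp = 3 * h * cfg["intermediate_size"]              # gate, up, down projections
    norms = 2 * h                                       # two RMSNorm weights per layer
    per_layer = attention + mlp + norms
    embeddings = 2 * cfg["vocab_size"] * h              # untied input and output embeddings
    return cfg["num_hidden_layers"] * per_layer + embeddings + h  # plus final norm


def kv_cache_bytes_per_token(cfg, bytes_per_value=2):
    """Bytes of key/value cache per token (2 bytes per value for bfloat16)."""
    return (2 * cfg["num_hidden_layers"] * cfg["num_key_value_heads"]
            * cfg["head_dim"] * bytes_per_value)


total = count_parameters(cfg)
per_token = kv_cache_bytes_per_token(cfg)
print(f"parameters: {total / 1e9:.2f}B")
print(f"KV cache at full context: {per_token * cfg['max_position_embeddings'] / 2**30:.0f} GiB")
```

With 8 key/value heads shared across 128 attention heads, the cache is 16 times smaller than it would be with one key/value head per attention head.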

images/README

ADDED

|

@@ -0,0 +1 @@


| 1 |

+

Directory for images associated with the model.

|

images/cogito-v2-405b-benchmarks.png

ADDED

|

Git LFS Details

|

images/deep-cogito-logo.png

ADDED

|

model-00001-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:da4fcf2c07107d219e61518b3554b8b8487e60c4f72769cc1c0c35ce36df6e92

|

| 3 |

+

size 4202692736

|
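Each shard entry in this diff is a three-line Git LFS pointer rather than the weights themselves. The sketch below parses such a pointer into its fields, using the first shard's pointer text from above. The helper name `parse_lfs_pointer` is hypothetical, and the parser only handles the three keys that appear here.

```python
def parse_lfs_pointer(text):
    """Parse a Git LFS pointer file into version, hash algorithm, digest, and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algorithm, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algorithm": algorithm,
        "digest": digest,
        "size": int(fields["size"]),
    }


pointer_text = """\
version https://git-lfs.github.com/spec/v1
oid sha256:da4fcf2c07107d219e61518b3554b8b8487e60c4f72769cc1c0c35ce36df6e92
size 4202692736
"""

pointer = parse_lfs_pointer(pointer_text)
print(pointer["hash_algorithm"], pointer["size"] / 2**30, "GiB")
```

The parsed `size` is the byte length of the real shard, so summing it over all pointers gives the download size without fetching any weights.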

model-00002-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:c3a135fe37c739441ac99f987561094c602676dcd35ba5b40eacc7210dd85304

|

| 3 |

+

size 4202725632

|

model-00003-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2f7e4aac30dc96813431d601a4c790205d595da3d34f199f670c7ddcd21e4519

|

| 3 |

+

size 3489661192

|

model-00004-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:fb4dd8714572e5385993288d53d360c84bf03b0ac815e1f29da1a999a8bcf7b3

|

| 3 |

+

size 4630578120

|

model-00005-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b4b27d8bed5e0bdac8446319a447f85cc4cb35135dc7409bb1e31c3fbacf1bef

|

| 3 |

+

size 4630578128

|

model-00006-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:ec7117f7539c3e627aa56c26436de62cafd82b75abd058e097bc7b1207c7e664

|

| 3 |

+

size 3489661200

|

model-00007-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:dc9f428e34fb33a8be29b5571284db6c4e8541bcc11bf483b95ce26f72687175

|

| 3 |

+

size 4630578136

|

model-00008-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:bb6f9bc2c61a29c08a53ebebce2d70a06b52b8b2054bb064ee60491cf4a3fbca

|

| 3 |

+

size 4630578136

|

model-00009-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:baf7597d17b5846f1d8160ef7ec8c80653d4a4a4649da93ddf2aba772c25057c

|

| 3 |

+

size 3489661200

|

model-00010-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f1493a5ba226da3a0d2fce9bbc74d9b2884ce4815d1930d71652677c755951f5

|

| 3 |

+

size 4630578136

|

model-00011-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:5b1ebe3a8c4ee0e721b9fbdcdd54b21c57b0b809343fad7bc31c61ea1e00251b

|

| 3 |

+

size 4630578136

|

model-00012-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:366926d3047c0bacd272ed15c55ce86be399cf99dba82f0eb302b588ce3f747f

|

| 3 |

+

size 3489661200

|

model-00013-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:586114ddd75056f4792edec1ee75c7af39079178bbb90e0b27798c2e1f63524f

|

| 3 |

+

size 4630578136

|

model-00014-of-00191.safetensors

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:40390d7018375855a1a04d5e562cab5ca0bb68d3bd51a0e86e367f2c423e3e0b

|

| 3 |

+

size 4630578136

|

model-00015-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:96b773560e39bbaf4f9c5d55843ec8ef84ff986fe5a73c9e7a98a0509a980c2a
+size 3489661200

model-00016-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:341a98443418b77676c3131a96b756f3bb428d032d61067a13d36cf6c52994a2
+size 4630578136

model-00017-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:5d33df86c8ebefee0c39ed1f96c26db509ddcd85ae505d70e16a34d6adf2c3d7
+size 4630578136

model-00018-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ac83bd51c6d077e4ab0794e02c7ae9f4621766f0b519b6c6e085e7fe65a71603
+size 3489661200

model-00019-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:139a7491200398b94dcdbae5f8058ace5c108d3a63934d89b6f1738ce277a52e
+size 4630578136

model-00020-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:7885e2bddfd7bbd3a434b51275738063c3d5c12376c8b187a92c7398875e8111
+size 4630578136

model-00021-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:14cff14385de974fa593d585556da391e5167a52110464d6f8db24b72743b6ac
+size 3489661200

model-00022-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c7e6d59503b8b46f8b673a7d7f50bdcd3e918edeec5fd65d841364f3736896e5
+size 4630578136

model-00023-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:fb1e1bd040ff1f02c985928efb5a489624c8b1ab7519140cca774df299417c03
+size 4630578136

model-00024-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d9d4af519dc268f65973d6dd9c05bd807aaab947f974aa034503a23908129d68
+size 3489661200

model-00025-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d89f8867a6197b799c8071f92fdc299835f0d6433024ebdfde5d2348c2823c5f
+size 4630578136

model-00026-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:fee4fa1fa8acf4e0858ef233a9e7e2401cb363c05b1cae531b737fcdf5cb7ed9
+size 4630578136

model-00027-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d09202cd8a34ad2ab2f69a1b3db10735cea24db8df88a139f0a843873f5b07b0
+size 3489661200

model-00028-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:b7c15d87433374725722796f0f958c255cda51239f9f101a1f29fdefdda454e0
+size 4630578136

model-00029-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ec264e604a8521068743454b72c55e0c64e3ce3c0a82162a6c4505ce1c440c43
+size 4630578136

model-00030-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:672f353bbdabbac38030b599f352dd54f3bc5a60582d1d6849e5f41cba6651e5
+size 3489661200

model-00031-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:5e6a99410a0a731ac150fca9bbb9784d17bd725624a682fd8ffe20a881e9af2d
+size 4630578136

model-00032-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:da98f02616eb867d3a7b488690ff8503f3a4f345f5dd809f1a4647ecd2221340
+size 4630578136

model-00033-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ea0e4d1677e17bdb346d43621098849236f0bfd96461ca75a5b7fb419a6f01b1
+size 3489661200

model-00034-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:30110887b9c9fda6bac9d47f05de8527395c6fa7e9d6b4486bebbe60cd3aecac
+size 4630578136

model-00035-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:5c37b39aa057388f2903d1f56fe3f2f092ed1f76158cdfd05b1da762784acfe0
+size 4630578136

model-00036-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:74b73f7094ea241f79df6119880291776df1e4ab76820bf630a13ddad568868a
+size 3489661200

model-00037-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:b5239e1e4e6386dbb6209738fbd8d5b02840a1997cec1ec0bb9ca466033779f3
+size 4630578136

model-00038-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:09af40136899dfdce4cab00f74cb48aa244c5bac99fd457bede59ce360292bb2
+size 4630578136

model-00039-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:4c23206fa7c9c051ed121b4031b7c78b33d3f29c9828e783013ac24652ea547e
+size 3489661200

model-00040-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:283766bd72e27d35bf79650202c55ff7e97e9050796f95cc6007cce1404e5d21
+size 4630578136

model-00041-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:42c47881d517142e961761bf78483fd496f65db4a0142ba4feaa35479f2ff8c1
+size 4630578136

model-00042-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:088b827b5beb35b1d61c38156695db8ee2063250beeaaa390a139f48142dab4c
+size 3489661200

model-00043-of-00191.safetensors

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:5e5c09d07dccda230b7f51873b4f27c74ff5fe83d609f4bc907b40391184185b
+size 4630578136