Add IQ2_KL

- README.md +43 -0
- images/perplexity.png +2 -2

README.md

CHANGED


@@ -217,6 +217,49 @@ numactl -N 0 -m 0 \

</details>

|

| 219 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 220 |

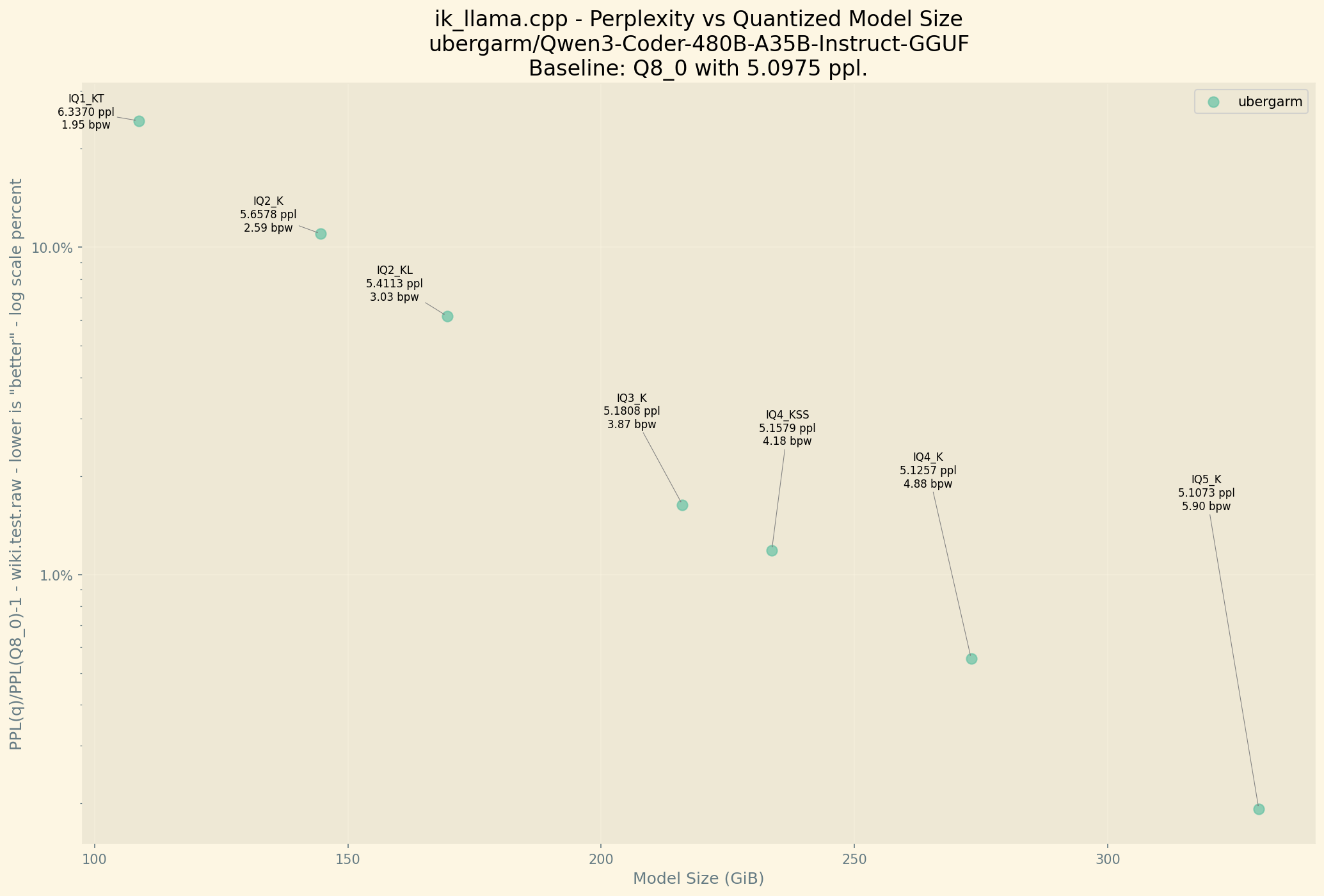

## `IQ2_K` 144.640 GiB (2.588 BPW)

|

| 221 |

Final estimate: PPL = 5.6578 +/- 0.03697

|

| 222 |

|

|

|

|

| 217 |

|

| 218 |

</details>

|

| 219 |

|

| 220 |

+

## `IQ2_KL` 169.597 GiB (3.034 BPW)


Final estimate: PPL = 5.4113 +/- 0.03516
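As a rough sanity check on the heading above, a file size and a bits-per-weight (BPW) figure together imply a weight count. The sketch below assumes that GiB means 2^30 bytes and that BPW is total file bits divided by total weights; the helper name `implied_weights_b` is made up for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: weights (in billions) implied by a size in GiB and a BPW figure.
# Assumes 1 GiB = 2^30 bytes and BPW = (file bits) / (number of weights).
implied_weights_b() {
  awk -v gib="$1" -v bpw="$2" 'BEGIN { printf "%.0f\n", gib * 2^30 * 8 / bpw / 1e9 }'
}

implied_weights_b 169.597 3.034   # IQ2_KL figures from the heading above
```

This prints 480, which lines up with the 480B in the model name.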


<details>


<summary>👈 Secret Recipe</summary>

Originally this had issues with NaNs, but got it working with this PR: https://github.com/ikawrakow/ik_llama.cpp/pull/735


```bash

#!/usr/bin/env bash

custom="
# Attention
blk\..*\.attn_q.*=iq6_k
blk\..*\.attn_k.*=q8_0
blk\..*\.attn_v.*=q8_0
blk\..*\.attn_output.*=iq6_k

# Routed Experts
blk\..*\.ffn_down_exps\.weight=iq3_k
blk\..*\.ffn_(gate|up)_exps\.weight=iq2_kl

# Non-Repeating Layers
token_embd\.weight=iq4_k
output\.weight=iq6_k
"

# Drop the comment lines and join the remaining rules with commas for --custom-q.
custom=$(
  echo "$custom" | grep -v '^#' | \
  sed -Ez 's:\n+:,:g;s:,$::;s:^,::'
)

# Positional arguments: input GGUF, output GGUF, quant type, thread count.
numactl -N 0 -m 0 \
./build/bin/llama-quantize \
    --custom-q "$custom" \
    --imatrix /mnt/raid/models/ubergarm/Qwen3-Coder-480B-A35B-Instruct-GGUF/imatrix-Qwen3-Coder-480B-A35B-Instruct-Q8_0.dat \
    /mnt/raid/models/ubergarm/Qwen3-Coder-480B-A35B-Instruct-GGUF/Qwen3-Coder-480B-A35B-Instruct-BF16-00001-of-00021.gguf \
    /mnt/raid/models/ubergarm/Qwen3-Coder-480B-A35B-Instruct-GGUF/Qwen3-Coder-480B-A35B-Instruct-IQ2_KL.gguf \
    IQ2_KL \
    192

```
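To see what actually reaches `--custom-q`, the flattening step from the recipe can be run on its own. This is a minimal sketch that reuses the same `grep`/`sed` pipeline on a shortened rule list. The function names `flatten_rules` and `quant_for` are hypothetical, `sed -z` assumes GNU sed, and `quant_for` uses `grep -E` with first-match-wins purely as a stand-in: the regex engine and rule precedence inside `llama-quantize` may differ.

```bash
#!/usr/bin/env bash
# Hypothetical helper wrapping the recipe's pipeline: drop comment lines, then turn
# runs of newlines into single commas and trim stray commas at both ends.
# Requires GNU sed for -z (treat the whole input as one record).
flatten_rules() {
  echo "$1" | grep -v '^#' | sed -Ez 's:\n+:,:g;s:,$::;s:^,::'
}

# Hypothetical helper: print the quant type of the first rule whose regex matches
# a tensor name. grep -E is only an approximation of the real matching logic.
quant_for() {
  local name="$1" rule
  local -a rules
  IFS=',' read -ra rules <<< "$2"
  for rule in "${rules[@]}"; do
    # Everything before the last '=' is the regex, everything after is the type.
    if grep -Eq -- "${rule%=*}" <<< "$name"; then
      echo "${rule##*=}"
      return 0
    fi
  done
  return 1
}

rules="
# Attention
blk\..*\.attn_k.*=q8_0

# Routed Experts
blk\..*\.ffn_(gate|up)_exps\.weight=iq2_kl
"

flat=$(flatten_rules "$rules")
echo "$flat"
quant_for 'blk.3.ffn_up_exps.weight' "$flat"
```

With GNU sed, the first `echo` prints the two rules joined by a single comma with no leading or trailing comma, and the lookup for `blk.3.ffn_up_exps.weight` prints `iq2_kl`.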


## `IQ2_K` 144.640 GiB (2.588 BPW)

|

| 264 |

Final estimate: PPL = 5.6578 +/- 0.03697
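For a quick feel of the trade-off between the two quants listed here, the relative change in size and perplexity can be computed from the reported figures. The helper name `pct_change` is made up for illustration, and the result ignores the `+/-` uncertainty on each perplexity estimate.

```bash
#!/usr/bin/env bash
# Hypothetical helper: percent change going from value $1 to value $2.
pct_change() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%+.1f\n", (b - a) / a * 100 }'
}

echo "size: $(pct_change 144.640 169.597)%"   # IQ2_K -> IQ2_KL file size in GiB
echo "PPL:  $(pct_change 5.6578 5.4113)%"     # IQ2_K -> IQ2_KL perplexity
```

On these numbers, IQ2_KL is about 17.3% larger than IQ2_K and its perplexity is about 4.4% lower.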


images/perplexity.png

CHANGED

Git LFS Details