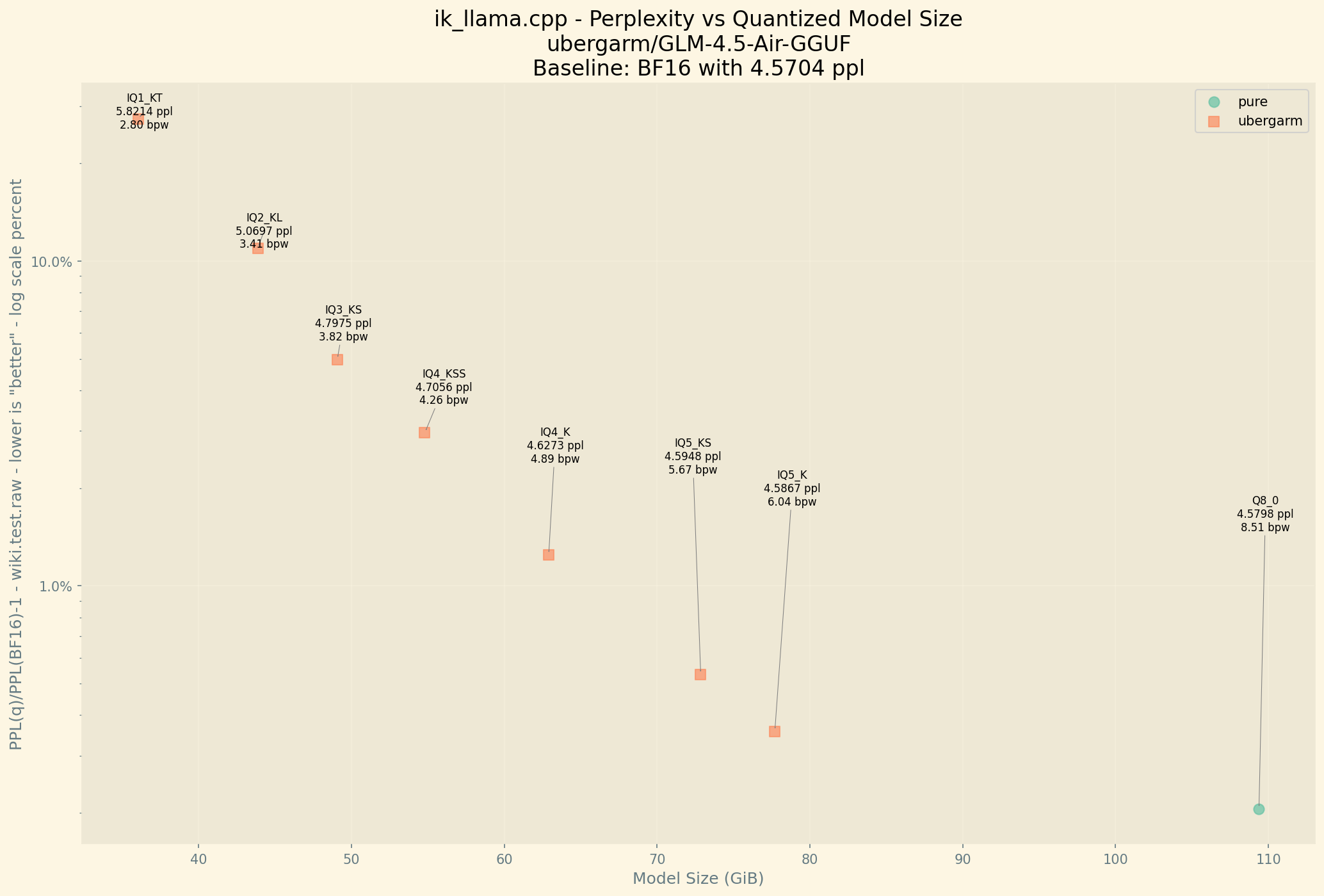

Release IQ3_KS with perplexity info

- README.md +68 -0
- images/perplexity.png +2 -2

README.md

CHANGED

@@ -283,6 +283,74 @@ numactl -N 0 -m 0 \
 
 </details>
 
+## IQ3_KS 49.072 GiB (3.816 BPW)
+Final estimate: PPL = 4.7975 +/- 0.02972
+
+<details>
+
+<summary>👈 Secret Recipe</summary>
+
+```bash
+#!/usr/bin/env bash
+
+custom="
+# 47 Repeating Layers [0-46]
+# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.
+
+# Attention
+blk\.(0|1)\.attn_q.*=q8_0
+blk\.(0|1)\.attn_k.*=q8_0
+blk\.(0|1)\.attn_v.*=q8_0
+blk\.(0|1)\.attn_output.*=q8_0
+
+blk\..*\.attn_q.*=iq5_ks
+blk\..*\.attn_k.*=iq5_ks
+blk\..*\.attn_v.*=iq5_ks
+blk\..*\.attn_output.*=iq5_ks
+
+# First 1 Dense Layers [0]
+blk\..*\.ffn_down\.weight=q6_0
+blk\..*\.ffn_(gate|up)\.weight=iq5_ks
+
+# Shared Expert Layers [1-46]
+blk\..*\.ffn_down_shexp\.weight=q6_0
+blk\..*\.ffn_(gate|up)_shexp\.weight=iq5_ks
+
+# Routed Experts Layers [1-46]
+blk\.(1)\.ffn_down_exps\.weight=q6_0
+blk\.(1)\.ffn_(gate|up)_exps\.weight=iq5_ks
+
+blk\..*\.ffn_down_exps\.weight=iq4_nl
+blk\..*\.ffn_(gate|up)_exps\.weight=iq3_ks
+
+# Non-Repeating Layers
+token_embd\.weight=iq4_k
+output\.weight=iq6_k
+
+# NextN MTP Layer [46]
+blk\..*\.nextn\.embed_tokens\.weight=iq5_ks
+blk\..*\.nextn\.shared_head_head\.weight=iq5_ks
+blk\..*\.nextn\.eh_proj\.weight=q8_0
+"
+
+custom=$(
+echo "$custom" | grep -v '^#' | \
+sed -Ez 's:\n+:,:g;s:,$::;s:^,::'
+)
+
+numactl -N 0 -m 0 \
+./build/bin/llama-quantize \
+--custom-q "$custom" \
+--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \
+/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \
+/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-PR624-IQ3_KS.gguf \
+IQ3_KS \
+192
+```
+
+</details>
+
+
 ## IQ2_KL 43.870 GiB (3.411 BPW)
 Final estimate: PPL = 5.0697 +/- 0.03166
 
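The `grep`/`sed` step in the recipe collapses the multi-line rule list into the single comma-separated string that is passed to `--custom-q`. The following is a minimal sketch of that step on a toy three-rule recipe. The variable names `recipe` and `joined` are illustrative only, `printf` stands in for the recipe's `echo`, and `sed -z` assumes GNU sed.

```bash
#!/usr/bin/env bash
# Toy recipe: comment lines, blank lines, and three regex=type rules.
recipe="
# Attention
blk\.(0|1)\.attn_q.*=q8_0

blk\..*\.attn_q.*=iq5_ks

# Non-Repeating Layers
token_embd\.weight=iq4_k
"

joined=$(
  # grep -v '^#' drops comment lines.
  # sed -z treats the whole input as one record, so runs of newlines
  # can be replaced by a single comma; the last two substitutions trim
  # the trailing and leading comma left by the surrounding blank lines.
  printf '%s\n' "$recipe" | grep -v '^#' | \
  sed -Ez 's:\n+:,:g;s:,$::;s:^,::'
)

echo "$joined"
# prints: blk\.(0|1)\.attn_q.*=q8_0,blk\..*\.attn_q.*=iq5_ks,token_embd\.weight=iq4_k
```

The rules keep their original order in the joined string, which matters if the quantizer applies the first matching pattern, as the layout of the recipe (specific `blk\.(0|1)` rules before the generic `blk\..*` ones) suggests.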

images/perplexity.png

CHANGED

Git LFS Details
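To put the two README headings side by side, the short sketch below computes the size and perplexity difference between IQ3_KS and IQ2_KL. The four numbers are copied from the headings in the diff; the variable names and the output format are illustrative assumptions, not part of the repository.

```bash
#!/usr/bin/env bash
# Figures copied from the README headings in this commit.
iq3_ks_gib=49.072; iq3_ks_ppl=4.7975
iq2_kl_gib=43.870; iq2_kl_ppl=5.0697

# awk does the floating-point arithmetic; bash only handles integers.
summary=$(awk -v s3="$iq3_ks_gib" -v p3="$iq3_ks_ppl" \
              -v s2="$iq2_kl_gib" -v p2="$iq2_kl_ppl" 'BEGIN {
  printf "size +%.3f GiB, PPL -%.4f (%.1f%% lower)",
         s3 - s2, p2 - p3, 100 * (p2 - p3) / p2
}')

echo "$summary"
# prints: size +5.202 GiB, PPL -0.2722 (5.4% lower)
```

The perplexity gap of 0.2722 is several times larger than either reported uncertainty (+/- 0.02972 and +/- 0.03166).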