add recipes and perplexity graph

- README.md +148 -3
- images/perplexity.png +3 -0

README.md CHANGED

@@ -34,6 +34,7 @@ Perplexity computed against *wiki.test.raw*.

|

|

| 34 |

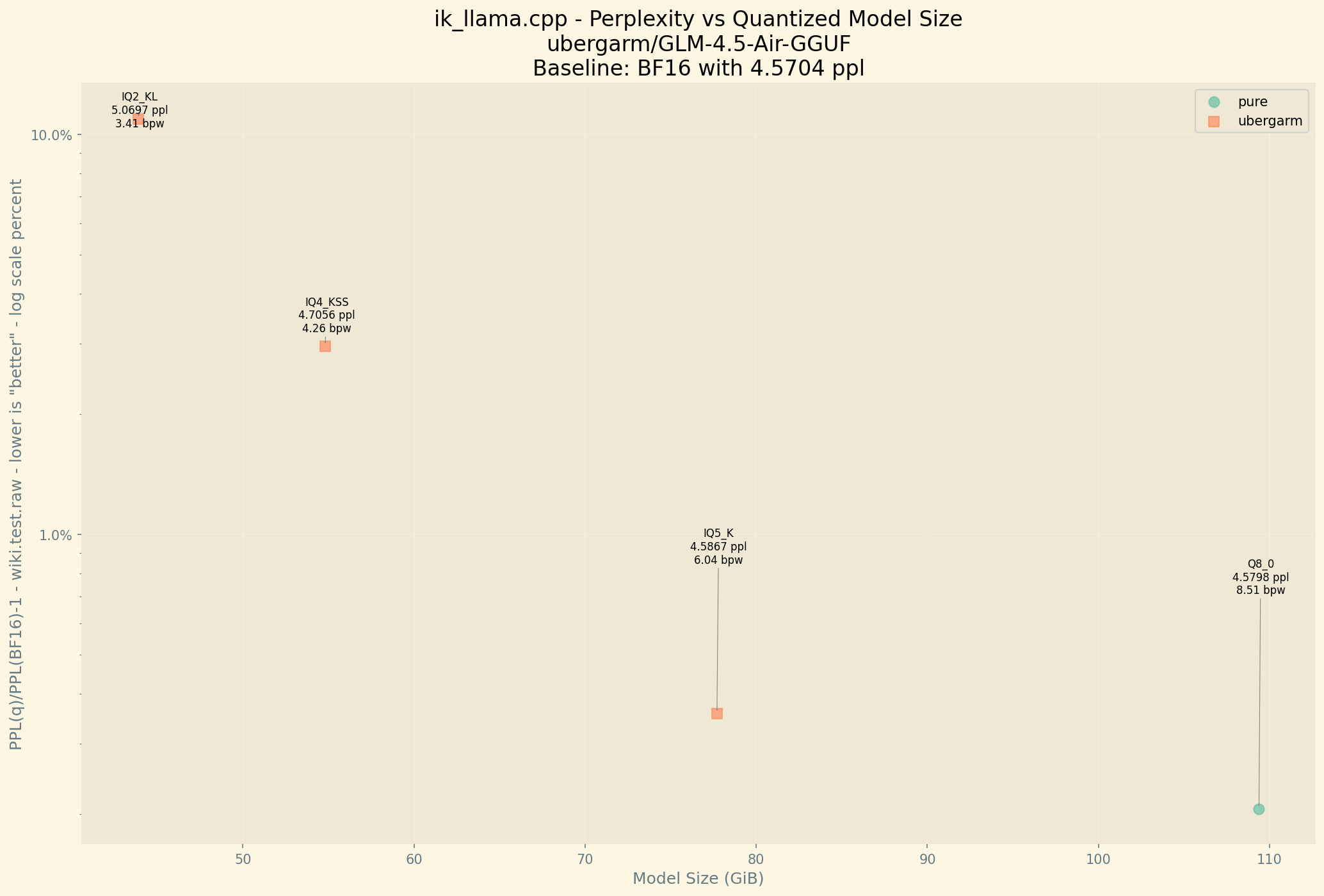

These first two are just test quants for baseline perplexity comparison:

|

| 35 |

* `BF16` 205.811 GiB (16.004 BPW)

|

| 36 |

- Final estimate: PPL = 4.5704 +/- 0.02796

|

|

|

|

| 37 |

* `Q8_0` 109.381 GiB (8.505 BPW)

|

| 38 |

- Final estimate: PPL = 4.5798 +/- 0.02804

|

| 39 |

|

|
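The two baseline lines can be cross-checked against each other. If BPW is the average number of bits per weight across the whole file, then size in bytes times 8 divided by BPW should give the same weight count for `BF16` and `Q8_0`. A minimal Python sketch, assuming GiB means 2^30 bytes:

```python
# Sketch: cross-check the size/BPW figures quoted for the BF16 and Q8_0 test quants.
GIB = 2**30  # assumption: "GiB" in the README means 2^30 bytes


def implied_weights(size_gib: float, bpw: float) -> float:
    """Number of weights implied by a file size and an average bits-per-weight."""
    return size_gib * GIB * 8 / bpw


def ppl_increase_pct(ppl: float, baseline: float) -> float:
    """Perplexity increase relative to a baseline, in percent."""
    return (ppl / baseline - 1.0) * 100.0


bf16 = implied_weights(205.811, 16.004)
q8_0 = implied_weights(109.381, 8.505)
print(f"BF16 -> {bf16 / 1e9:.2f}B weights, Q8_0 -> {q8_0 / 1e9:.2f}B weights")
print(f"Q8_0 PPL increase vs BF16: {ppl_increase_pct(4.5798, 4.5704):.3f}%")
```

Both files work out to roughly 110.47 billion weights, and the `Q8_0` perplexity is about 0.21% above the `BF16` baseline (a difference of 0.0094, smaller than either quoted +/- value).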

@@ -45,7 +46,56 @@ Final estimate: PPL = 4.5867 +/- 0.02806
 <summary>👈 Secret Recipe</summary>
 
 ```bash
-
+#!/usr/bin/env bash
+
+custom="
+# 47 Repeating Layers [0-46]
+# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.
+
+# Attention
+blk\..*\.attn_q.*=q8_0
+blk\..*\.attn_k.*=q8_0
+blk\..*\.attn_v.*=q8_0
+blk\..*\.attn_output.*=q8_0
+
+# First 1 Dense Layers [0]
+blk\..*\.ffn_down\.weight=q8_0
+blk\..*\.ffn_(gate|up)\.weight=q8_0
+
+# Shared Expert Layers [1-46]
+blk\..*\.ffn_down_shexp\.weight=q8_0
+blk\..*\.ffn_(gate|up)_shexp\.weight=q8_0
+
+# Routed Experts Layers [1-46]
+blk\.(1)\.ffn_down_exps\.weight=q8_0
+blk\.(1)\.ffn_(gate|up)_exps\.weight=q8_0
+
+blk\..*\.ffn_down_exps\.weight=q6_0
+blk\..*\.ffn_(gate|up)_exps\.weight=iq5_k
+
+# NextN MTP Layer [46]
+blk\..*\.nextn\.embed_tokens\.weight=iq5_ks
+blk\..*\.nextn\.shared_head_head\.weight=iq5_ks
+blk\..*\.nextn\.eh_proj\.weight=q8_0
+
+# Non-Repeating Layers
+token_embd\.weight=iq6_k
+output\.weight=iq6_k
+"
+
+custom=$(
+echo "$custom" | grep -v '^#' | \
+sed -Ez 's:\n+:,:g;s:,$::;s:^,::'
+)
+
+numactl -N 0 -m 0 \
+./build/bin/llama-quantize \
+--custom-q "$custom" \
+--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \
+/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \
+/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ5_K.gguf \
+IQ5_K \
+192
 ```
 
 </details>
@@ -59,7 +109,53 @@ Final estimate: PPL = 4.7056 +/- 0.02909
 <summary>👈 Secret Recipe</summary>
 
 ```bash
-
+#!/usr/bin/env bash
+
+custom="
+# 47 Repeating Layers [0-46]
+# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.
+
+# Attention
+blk\.(0|1)\.attn_q.*=q8_0
+blk\.(0|1)\.attn_k.*=q8_0
+blk\.(0|1)\.attn_v.*=q8_0
+blk\.(0|1)\.attn_output.*=q8_0
+
+blk\..*\.attn_q.*=iq5_ks
+blk\..*\.attn_k.*=iq5_ks
+blk\..*\.attn_v.*=iq5_ks
+blk\..*\.attn_output.*=iq5_ks
+
+# First 1 Dense Layers [0]
+blk\..*\.ffn_down\.weight=q6_0
+blk\..*\.ffn_(gate|up)\.weight=iq5_ks
+
+# Shared Expert Layers [1-46]
+blk\..*\.ffn_down_shexp\.weight=q6_0
+blk\..*\.ffn_(gate|up)_shexp\.weight=iq5_ks
+
+# Routed Experts Layers [1-46]
+blk\..*\.ffn_down_exps\.weight=iq4_xs
+blk\..*\.ffn_(gate|up)_exps\.weight=iq4_kss
+
+# Non-Repeating Layers
+token_embd\.weight=iq4_k
+output\.weight=iq6_k
+"
+
+custom=$(
+echo "$custom" | grep -v '^#' | \
+sed -Ez 's:\n+:,:g;s:,$::;s:^,::'
+)
+
+numactl -N 1 -m 1 \
+./build/bin/llama-quantize \
+--custom-q "$custom" \
+--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \
+/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x8.1B-BF16-00001-of-00005.gguf \
+/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ4_KSS.gguf \
+IQ4_KSS \
+192
 ```
 
 </details>
@@ -72,7 +168,56 @@ Final estimate: PPL = 5.0697 +/- 0.03166
 <summary>👈 Secret Recipe</summary>
 
 ```bash
-
+#!/usr/bin/env bash
+
+custom="
+# 47 Repeating Layers [0-46]
+# Note: All ffn_down.* layers are not divisible by 256 so have limited quantization options.
+
+# Attention
+blk\..*\.attn_q.*=iq4_ks
+blk\..*\.attn_k.*=iq5_ks
+blk\..*\.attn_v.*=iq5_ks
+blk\..*\.attn_output.*=iq4_ks
+
+# First 1 Dense Layers [0]
+blk\..*\.ffn_down\.weight=iq4_nl
+blk\..*\.ffn_(gate|up)\.weight=iq4_kss
+
+# Shared Expert Layers [1-46]
+blk\..*\.ffn_down_shexp\.weight=iq4_nl
+blk\..*\.ffn_(gate|up)_shexp\.weight=iq4_kss
+
+# Routed Experts Layers [1-46]
+blk\.(1)\.ffn_down_exps\.weight=iq4_nl
+blk\.(1)\.ffn_(gate|up)_exps\.weight=iq4_kss
+
+blk\..*\.ffn_down_exps\.weight=iq4_nl
+blk\..*\.ffn_(gate|up)_exps\.weight=iq2_kl
+
+# NextN MTP Layer [46]
+blk\..*\.nextn\.embed_tokens\.weight=iq4_ks
+blk\..*\.nextn\.shared_head_head\.weight=iq4_ks
+blk\..*\.nextn\.eh_proj\.weight=q6_0
+
+# Non-Repeating Layers
+token_embd\.weight=iq4_k
+output\.weight=iq6_k
+"
+
+custom=$(
+echo "$custom" | grep -v '^#' | \
+sed -Ez 's:\n+:,:g;s:,$::;s:^,::'
+)
+
+numactl -N 0 -m 0 \
+./build/bin/llama-quantize \
+--custom-q "$custom" \
+--imatrix /mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/imatrix-GLM-4.5-Air-BF16.dat \
+/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-128x9.4B-BF16-00001-of-00005.gguf \
+/mnt/raid/models/ubergarm/GLM-4.5-Air-GGUF/GLM-4.5-Air-IQ2_KL.gguf \
+IQ2_KL \
+192
 ```
 
 </details>
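The `custom` string in each recipe above is a list of `regex=type` rules, one per line, which the `grep`/`sed` pipeline flattens into a single comma-separated value for `--custom-q`. The IQ5_K and IQ2_KL recipes also place the `blk\.(1)\.` expert rules ahead of the catch-all `blk\..*\.` rules, which suggests that the first matching rule wins. The Python sketch below reproduces the flattening and then resolves a few tensor names under two assumptions the README does not state: rules are tried in order with an unanchored regex search, and an unmatched tensor falls back to a `default` type (a hypothetical stand-in for whatever llama-quantize picks on its own).

```python
import re

# Excerpt of the routed-expert rules from the IQ2_KL recipe above, copied verbatim.
RECIPE = r"""
# Routed Experts Layers [1-46]
blk\.(1)\.ffn_down_exps\.weight=iq4_nl
blk\.(1)\.ffn_(gate|up)_exps\.weight=iq4_kss

blk\..*\.ffn_down_exps\.weight=iq4_nl
blk\..*\.ffn_(gate|up)_exps\.weight=iq2_kl
"""


def flatten(recipe: str) -> str:
    """Python equivalent of the recipes' grep/sed pipeline: drop comment
    lines, drop blank lines, join the remaining rules with commas."""
    rules = [ln for ln in recipe.split("\n") if ln and not ln.startswith("#")]
    return ",".join(rules)


def parse(custom_q: str) -> list[tuple[re.Pattern, str]]:
    """Split 'regex=type,regex=type,...' into (compiled regex, type) pairs."""
    pairs = []
    for item in custom_q.split(","):
        pattern, qtype = item.rsplit("=", 1)
        pairs.append((re.compile(pattern), qtype))
    return pairs


def resolve(name: str, rules: list[tuple[re.Pattern, str]], default: str) -> str:
    """ASSUMPTION: rules are tried in order and the first regex search hit wins;
    unmatched tensors fall back to `default` (hypothetical stand-in)."""
    for pattern, qtype in rules:
        if pattern.search(name):
            return qtype
    return default


rules = parse(flatten(RECIPE))
for name in (
    "blk.1.ffn_down_exps.weight",
    "blk.1.ffn_up_exps.weight",
    "blk.10.ffn_up_exps.weight",
    "blk.23.ffn_down_exps.weight",
):
    print(f"{name:30} -> {resolve(name, rules, default='iq2_kl')}")
```

Under these assumptions, `blk.10.ffn_up_exps.weight` falls through to the catch-all `iq2_kl` rule, because `blk\.(1)\.` requires a literal dot immediately after the `1`.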

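Every recipe carries the note that the `ffn_down.*` tensors are not divisible by 256. One way to see what that means is to check each tensor family's row length against each quant type's block size. This is a sketch only: the row lengths (hidden size 4096, dense FFN size 10944, expert FFN size 1408) are taken from the upstream GLM-4.5-Air config rather than from this README, and the block sizes (32 for `q8_0`, `q6_0`, `iq4_nl`; 256 for the other types listed) are assumptions about the quantizer.

```python
# ASSUMED values, not stated in the README: GLM-4.5-Air hidden size 4096,
# dense FFN size 10944, expert FFN size 1408; block sizes as listed below.
BLOCK_SIZE = {
    "q8_0": 32,
    "q6_0": 32,
    "iq4_nl": 32,
    "iq2_kl": 256,
    "iq4_kss": 256,
    "iq5_ks": 256,
}

# Row length (elements along which quant blocks are formed) per tensor family.
ROW_LENGTH = {
    "ffn_(gate|up)*": 4096,  # rows span the hidden size
    "ffn_down (dense, blk.0)": 10944,  # rows span the dense FFN size
    "ffn_down_shexp / ffn_down_exps": 1408,  # rows span the expert FFN size
}


def usable_types(row_len: int) -> list[str]:
    """Quant types whose block size divides the row length evenly."""
    return sorted(t for t, bs in BLOCK_SIZE.items() if row_len % bs == 0)


for family, n in ROW_LENGTH.items():
    print(f"{family:32} n={n:6} n%256={n % 256:3} -> {usable_types(n)}")
```

With these numbers, only the 32-element types divide the `ffn_down` row lengths evenly, which lines up with the `q8_0`, `q6_0`, and `iq4_nl` choices used for most `ffn_down` rules above.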

images/perplexity.png ADDED
