Upload folder using huggingface_hub

- .gitattributes +2 -0

- README.md +59 -9

- framework.png +3 -0

- performance.png +3 -0

.gitattributes

CHANGED

@@ -34,3 +34,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
 tokenizer.json filter=lfs diff=lfs merge=lfs -text
+framework.png filter=lfs diff=lfs merge=lfs -text
+performance.png filter=lfs diff=lfs merge=lfs -text

README.md

CHANGED


@@ -1,9 +1,59 @@

---
license: llama3.1
datasets:
- BAAI/Infinity-Instruct
base_model:
- meta-llama/Llama-3.1-8B-Instruct
tags:
- Instruct_Tuning
---


# Shadow-FT

<a href="https://arxiv.org/pdf/2505.12716"><b>[📜 Paper]</b></a> •
<a href="https://huggingface.co/collections/taki555/shadow-ft-683288b49e1e5e1edcf03135"><b>[🤗 HF Models]</b></a> •
<a href="https://github.com/wutaiqiang/Shadow-FT"><b>[🐱 GitHub]</b></a>

This repo contains the weights from our paper <a href="https://arxiv.org/abs/2505.12716" target="_blank">Shadow-FT: Tuning Instruct via Base</a> by <a href="https://wutaiqiang.github.io" target="_blank">Taiqiang Wu*</a>, <a href="https://rummyyang.github.io/" target="_blank">Runming Yang*</a>, Jiayi Li, Pengfei Hu, Ngai Wong, and Yujiu Yang.

\* Equal contribution.


## Overview


<img src="framework.png" width="100%" />

|

| 26 |

+

|

| 27 |

+

Observation:

|

| 28 |

+

|

| 29 |

+

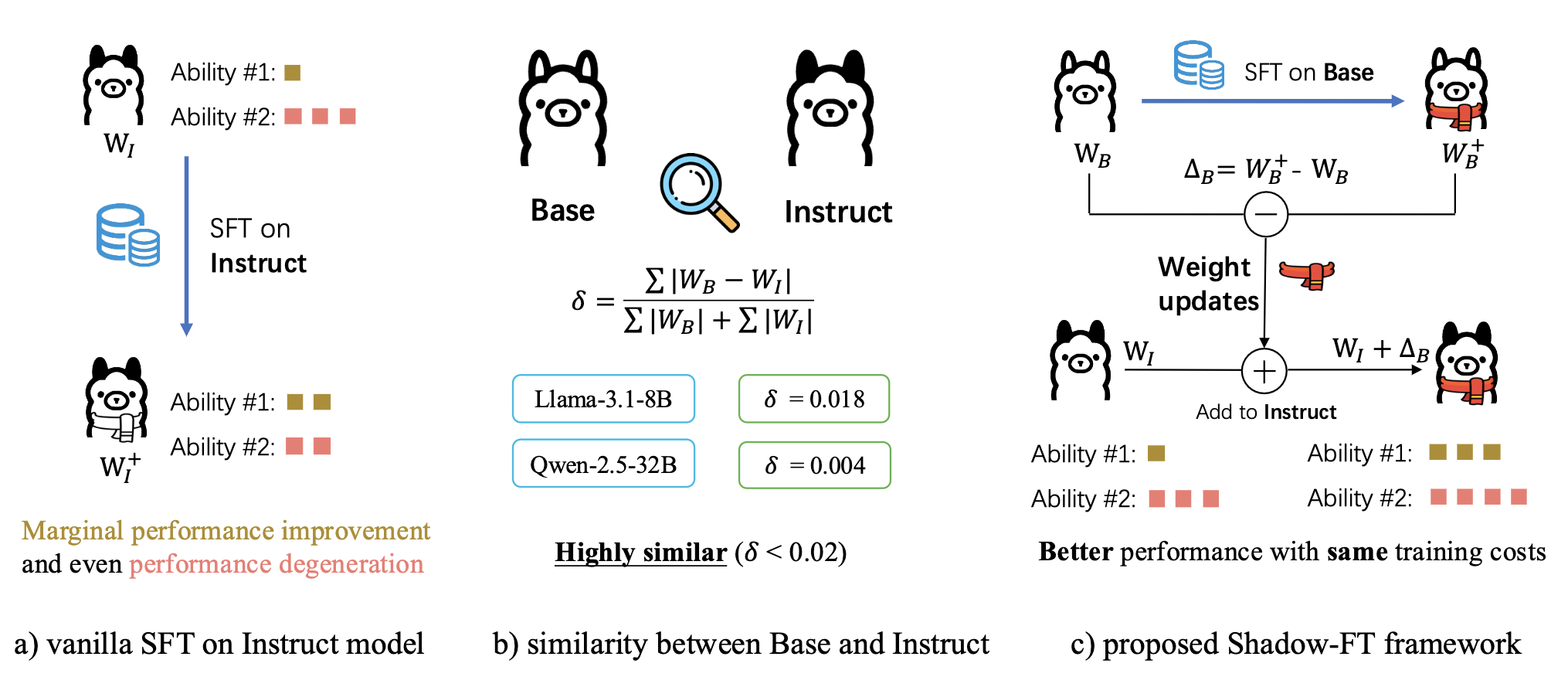

- Directly tuning the INSTRUCT (i.e., instruction tuned) models often leads to marginal improvements and even performance degeneration.

- The paired BASE models, from which these INSTRUCT variants are derived, have highly similar weights (e.g., the weights differ by less than 2% on average for Llama 3.1 8B).
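To make the "less than 2%" figure concrete, here is a minimal sketch of one plausible similarity measure: for each weight matrix, the relative Frobenius-norm gap $\lVert W_I - W_B \rVert_F / \lVert W_B \rVert_F$, averaged over all shared parameters. This definition is an assumption and may not match the paper's exact metric. Plain Python lists stand in for real tensors, and the helper names, parameter names, and values are hypothetical.

```python
import math
from typing import Dict, List

# Hypothetical stand-in for a checkpoint: parameter name -> flat list of weights.
StateDict = Dict[str, List[float]]


def relative_gap(w_instruct: List[float], w_base: List[float]) -> float:
    """Relative Frobenius-norm gap ||W_I - W_B|| / ||W_B|| for one parameter."""
    if len(w_instruct) != len(w_base):
        raise ValueError("parameter shapes differ")
    diff_norm = math.sqrt(sum((a - b) ** 2 for a, b in zip(w_instruct, w_base)))
    base_norm = math.sqrt(sum(b * b for b in w_base))
    if base_norm == 0.0:
        raise ValueError("base weights are all zero")
    return diff_norm / base_norm


def mean_relative_gap(instruct: StateDict, base: StateDict) -> float:
    """Average the per-parameter relative gap over parameter names present in both."""
    names = sorted(instruct.keys() & base.keys())
    gaps = [relative_gap(instruct[n], base[n]) for n in names]
    return sum(gaps) / len(gaps)


if __name__ == "__main__":
    # Toy weights (hypothetical values, not taken from Llama 3.1 8B).
    base = {"layer0.q_proj": [1.0, -2.0, 0.5], "layer0.v_proj": [0.3, 0.4, 1.2]}
    instruct = {"layer0.q_proj": [1.01, -1.98, 0.5], "layer0.v_proj": [0.3, 0.41, 1.19]}
    print(f"mean relative gap: {mean_relative_gap(instruct, base):.4f}")
```

A per-matrix norm ratio is used here because element-wise relative differences blow up for weights close to zero; whether the paper averages per matrix or over the whole model is an open assumption in this sketch.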

$\Rightarrow$ We propose the Shadow-FT framework, which tunes INSTRUCT models by leveraging their corresponding BASE models. The key insight is to fine-tune the BASE model and then _directly_ graft the learned weight updates onto the INSTRUCT model.
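The grafting step can be written as $W_I^{+} = W_I + (W_B^{+} - W_B)$, where $W_B^{+}$ denotes the BASE weights after fine-tuning. Below is a minimal sketch, assuming full-parameter fine-tuning and identical parameter names and shapes across the three checkpoints. Plain Python lists stand in for tensors, and `graft_updates` is a hypothetical helper, not part of the authors' released code.

```python
from typing import Dict, List

# Hypothetical stand-in for a checkpoint: parameter name -> flat list of weights.
StateDict = Dict[str, List[float]]


def graft_updates(base: StateDict, base_tuned: StateDict, instruct: StateDict) -> StateDict:
    """Return INSTRUCT weights plus the update learned on BASE: W_I + (W_B+ - W_B)."""
    grafted: StateDict = {}
    for name, w_instruct in instruct.items():
        # Raises KeyError if a parameter exists in INSTRUCT but not in BASE.
        w_base, w_base_tuned = base[name], base_tuned[name]
        if not (len(w_base) == len(w_base_tuned) == len(w_instruct)):
            raise ValueError(f"shape mismatch for {name}")
        # Element-wise: add the BASE delta to the corresponding INSTRUCT weight.
        grafted[name] = [wi + (wt - wb) for wi, wt, wb in zip(w_instruct, w_base_tuned, w_base)]
    return grafted


if __name__ == "__main__":
    base = {"w": [1.0, 2.0]}
    base_tuned = {"w": [1.5, 1.0]}  # BASE after (toy) fine-tuning
    instruct = {"w": [1.1, 2.1]}
    print(graft_updates(base, base_tuned, instruct))
```

With real checkpoints the same loop would run over PyTorch `state_dict()` tensors instead of lists; the list comprehension here only keeps the sketch dependency-free.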


## Performance

|

| 37 |

+

|

| 38 |

+

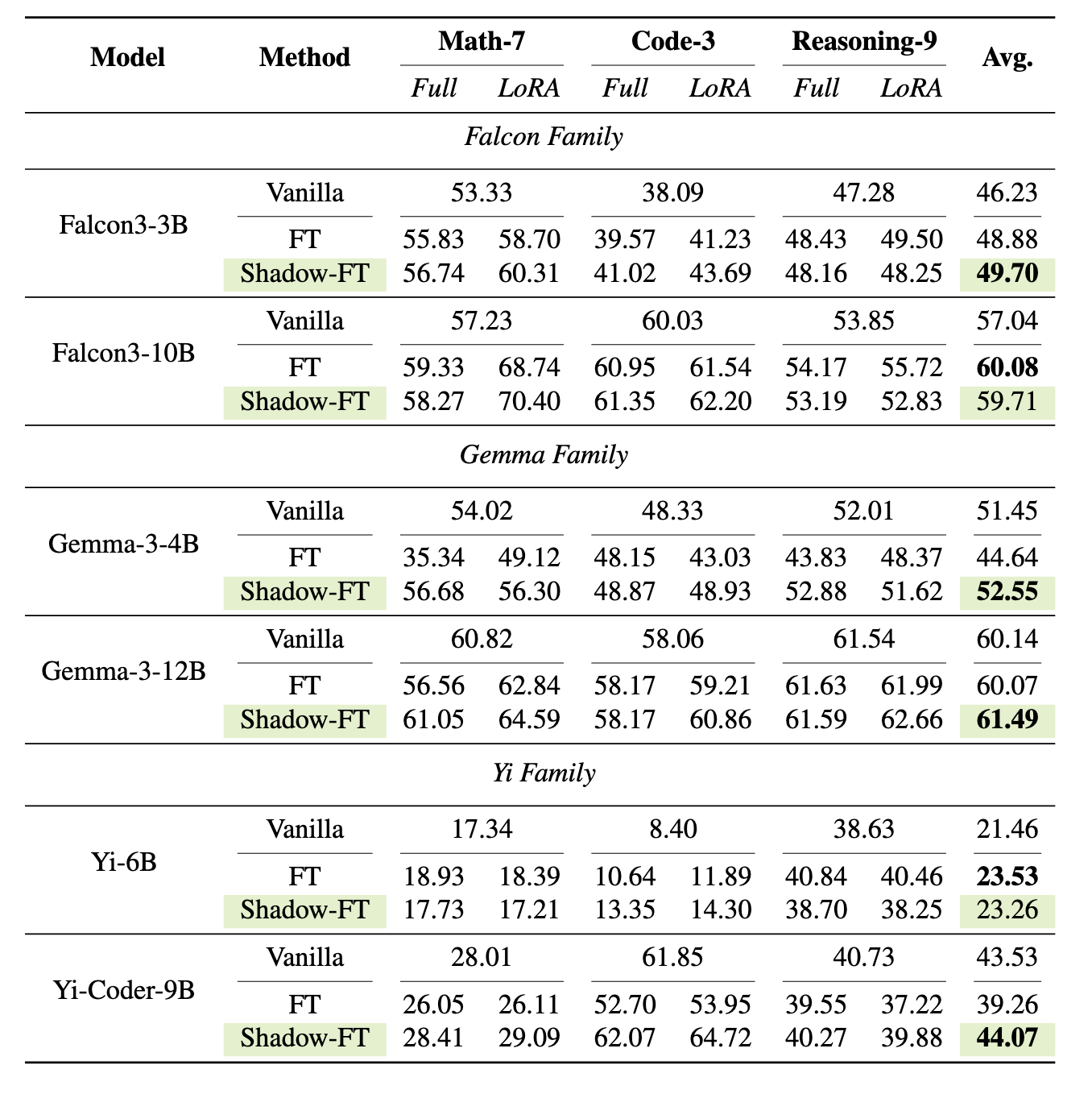

This repository contains the Llama-3.1-8B tuned on BAAI-2k subsets using Shadow-FT.


<img src="performance.png" width="100%" />

Please refer to [our paper](https://arxiv.org/pdf/2505.12716) for details.


## ☕️ Citation


If you find this repository helpful, please consider citing our paper:

```bibtex
@article{wu2025shadow,
  title={Shadow-FT: Tuning Instruct via Base},
  author={Wu, Taiqiang and Yang, Runming and Li, Jiayi and Hu, Pengfei and Wong, Ngai and Yang, Yujiu},
  journal={arXiv preprint arXiv:2505.12716},
  year={2025}
}
```

For any questions, please open an issue or email `[email protected]`.


framework.png

ADDED


performance.png

ADDED
