diff --git a/elia/LICENSE b/LICENSE

similarity index 100%

rename from elia/LICENSE

rename to LICENSE

diff --git a/README.md b/README.md

index 8511de7bfbbc4a731c11381bbf702111eea7d132..869de8afb5569dca5c730028fe17228f54198bef 100644

--- a/README.md

+++ b/README.md

@@ -1,12 +1,222 @@

----

-title: Elia

-emoji: 📈

-colorFrom: green

-colorTo: red

-sdk: gradio

-sdk_version: 3.33.1

-app_file: app.py

-pinned: false

----

-

-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

+# LAVT: Language-Aware Vision Transformer for Referring Image Segmentation

+Welcome to the official repository for the method presented in

+"LAVT: Language-Aware Vision Transformer for Referring Image Segmentation."

+

+

+

+

+Code in this repository is written using [PyTorch](https://pytorch.org/) and is organized in the following way (assuming the working directory is the root directory of this repository):

+* `./lib` contains files implementing the main network.

+* Inside `./lib`, `_utils.py` defines the highest-level model, which incorporates the backbone network

+defined in `backbone.py` and the simple mask decoder defined in `mask_predictor.py`.

+`segmentation.py` provides the model interface and initialization functions.

+* `./bert` contains files migrated from [Hugging Face Transformers v3.0.2](https://huggingface.co/transformers/v3.0.2/quicktour.html),

+which implement the BERT language model.

+We used Transformers v3.0.2 during development, but that version has a bug that appears when using `DistributedDataParallel`.

+We therefore maintain a copy of the relevant source files in this repository.

+This way, the bug is fixed and the code in this repository is self-contained.

+* `./train.py` is invoked to train the model.

+* `./test.py` is invoked to run inference on the evaluation subsets after training.

+* `./refer` contains data pre-processing code and is also where data should be placed, including the images and all annotations.

+It is cloned from [refer](https://github.com/lichengunc/refer).

+* `./data/dataset_refer_bert.py` is where the dataset class is defined.

+* `./utils.py` defines functions that track training statistics and functions that set up

+`DistributedDataParallel`.

+

+

+## Updates

+**June 21st, 2022**. Uploaded the training logs and trained

+model weights of lavt_one.

+

+**June 9th, 2022**.

+Added a more efficient implementation of LAVT.

+* To train this new model, specify `--model` as `lavt_one`

+(and `lavt` is still valid for specifying the old model).

+The rest of the configuration stays unchanged.

+* The difference between this version and the previous one

+is that the language model has been moved inside the overall model,

+so that `DistributedDataParallel` needs to be applied only once.

+Applying it twice (on the standalone language model and the main branch)

+as done in the old implementation led to low GPU utilization,

+which prevented training from speeding up as more GPUs were added.

+We recommend training this model on 8 GPUs

+(with the same total batch size of 32 as before).
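
The released `lavt` training commands in the Training section launch 4 processes with `--batch-size 8`, i.e. 32 images per step, which matches the total batch size of 32 mentioned above. The small sketch below shows the arithmetic for keeping that total fixed on a different GPU count. It assumes `--batch-size` is a per-process value (each `DistributedDataParallel` process loads its own mini-batch); that is inferred from the numbers in this README rather than confirmed from `train.py`, and `per_gpu_batch_size` is a hypothetical helper.

```python
def per_gpu_batch_size(total_batch_size: int, num_gpus: int) -> int:
    """Value to pass as --batch-size so that num_gpus processes together
    see total_batch_size images per step.

    Hypothetical helper: assumes --batch-size is per process.
    """
    if num_gpus <= 0 or total_batch_size % num_gpus != 0:
        raise ValueError("total batch size must be divisible by a positive GPU count")
    return total_batch_size // num_gpus


# Released `lavt` run: 4 processes x --batch-size 8 = 32 images per step.
print(per_gpu_batch_size(32, 4))  # 8
# Recommended `lavt_one` run on 8 GPUs with the same total of 32.
print(per_gpu_batch_size(32, 8))  # 4
```

Under that assumption, an 8-GPU `lavt_one` run would use `--nproc_per_node 8 --batch-size 4`.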

+

+## Setting Up

+### Preliminaries

+The code has been verified to work with PyTorch v1.7.1 and Python 3.7.

+1. Clone this repository.

+2. Change directory to the root of this repository.

+### Package Dependencies

+1. Create a new Conda environment with Python 3.7 then activate it:

+```shell

+conda create -n lavt python==3.7

+conda activate lavt

+```

+

+2. Install PyTorch v1.7.1 with a CUDA version that works on your cluster/machine (CUDA 10.2 is used in this example):

+```shell

+conda install pytorch==1.7.1 torchvision==0.8.2 torchaudio==0.7.2 cudatoolkit=10.2 -c pytorch

+```

+

+3. Install the packages in `requirements.txt` via `pip`:

+```shell

+pip install -r requirements.txt

+```

+

+### Datasets

+1. Follow the instructions in the `./refer` directory to set up subdirectories

+and download annotations.

+This directory is a git clone (minus two data files that we do not need)

+from the [refer](https://github.com/lichengunc/refer) public API.

+

+2. Download images from [COCO](https://cocodataset.org/#download).

+Use the first download link, *2014 Train images [83K/13GB]*, and extract

+the downloaded `train2014.zip` file to `./refer/data/images/mscoco/images`.

+

+### The Initialization Weights for Training

+1. Create the `./pretrained_weights` directory where we will be storing the weights.

+```shell

+mkdir ./pretrained_weights

+```

+2. Download [pre-trained classification weights of

+the Swin Transformer](https://github.com/SwinTransformer/storage/releases/download/v1.0.0/swin_base_patch4_window12_384_22k.pth),

+and put the `pth` file in `./pretrained_weights`.

+These weights are needed for training to initialize the model.

+

+### Trained Weights of LAVT for Testing

+1. Create the `./checkpoints` directory where we will be storing the weights.

+```shell

+mkdir ./checkpoints

+```

+2. Download LAVT model weights (which are stored on Google Drive) using links below and put them in `./checkpoints`.

+

+| [RefCOCO](https://drive.google.com/file/d/13D-OeEOijV8KTC3BkFP-gOJymc6DLwVT/view?usp=sharing) | [RefCOCO+](https://drive.google.com/file/d/1B8Q44ZWsc8Pva2xD_M-KFh7-LgzeH2-2/view?usp=sharing) | [G-Ref (UMD)](https://drive.google.com/file/d/1BjUnPVpALurkGl7RXXvQiAHhA-gQYKvK/view?usp=sharing) | [G-Ref (Google)](https://drive.google.com/file/d/1weiw5UjbPfo3tCBPfB8tu6xFXCUG16yS/view?usp=sharing) |

+|---|---|---|---|

+

+3. Model weights and training logs of the new `lavt_one` implementation are below.

+

+| RefCOCO | RefCOCO+ | G-Ref (UMD) | G-Ref (Google) |

+|:-----:|:-----:|:-----:|:-----:|

+| [log](https://drive.google.com/file/d/1YIojIHqe3bxxsWOltifa2U9jH67hPHLM/view?usp=sharing) / [weights](https://drive.google.com/file/d/1xFMEXr6AGU97Ypj1yr8oo00uObbeIQvJ/view?usp=sharing) | [log](https://drive.google.com/file/d/1Z34T4gEnWlvcSUQya7txOuM0zdLK7MRT/view?usp=sharing) / [weights](https://drive.google.com/file/d/1HS8ZnGaiPJr-OmoUn4-4LVnVtD_zHY6w/view?usp=sharing) | [log](https://drive.google.com/file/d/14VAgahngOV8NA6noLZCqDoqaUrlW14v8/view?usp=sharing) / [weights](https://drive.google.com/file/d/14g8NzgZn6HzC6tP_bsQuWmh5LnOcovsE/view?usp=sharing) | [log](https://drive.google.com/file/d/1JBXfmlwemWSvs92Rky0TlHcVuuLpt4Da/view?usp=sharing) / [weights](https://drive.google.com/file/d/1IJeahFVLgKxu_BVmWacZs3oUzgTCeWcz/view?usp=sharing) |

+

+* The Prec@K, overall IoU and mean IoU numbers in the training logs will differ

+from the final results obtained by running `test.py`,

+because only one out of multiple annotated expressions is

+randomly selected and evaluated for each object during training.

+Nonetheless, these numbers are a good indicator of test performance;

+the two should be fairly close.

+

+

+## Training

+We use `DistributedDataParallel` from PyTorch.

+The released `lavt` weights were trained using 4 x 32G V100 cards (peak memory on each card was about 26G).

+The released `lavt_one` weights were trained using 8 x 32G V100 cards (peak memory on each card was about 13G).

+More cards were used to accelerate training.

+To run on 4 GPUs (with IDs 0, 1, 2, and 3) on a single node:

+```shell

+mkdir ./models

+

+mkdir ./models/refcoco

+CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node 4 --master_port 12345 train.py --model lavt --dataset refcoco --model_id refcoco --batch-size 8 --lr 0.00005 --wd 1e-2 --swin_type base --pretrained_swin_weights ./pretrained_weights/swin_base_patch4_window12_384_22k.pth --epochs 40 --img_size 480 2>&1 | tee ./models/refcoco/output

+

+mkdir ./models/refcoco+

+CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node 4 --master_port 12345 train.py --model lavt --dataset refcoco+ --model_id refcoco+ --batch-size 8 --lr 0.00005 --wd 1e-2 --swin_type base --pretrained_swin_weights ./pretrained_weights/swin_base_patch4_window12_384_22k.pth --epochs 40 --img_size 480 2>&1 | tee ./models/refcoco+/output

+

+mkdir ./models/gref_umd

+CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node 4 --master_port 12345 train.py --model lavt --dataset refcocog --splitBy umd --model_id gref_umd --batch-size 8 --lr 0.00005 --wd 1e-2 --swin_type base --pretrained_swin_weights ./pretrained_weights/swin_base_patch4_window12_384_22k.pth --epochs 40 --img_size 480 2>&1 | tee ./models/gref_umd/output

+

+mkdir ./models/gref_google

+CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nproc_per_node 4 --master_port 12345 train.py --model lavt --dataset refcocog --splitBy google --model_id gref_google --batch-size 8 --lr 0.00005 --wd 1e-2 --swin_type base --pretrained_swin_weights ./pretrained_weights/swin_base_patch4_window12_384_22k.pth --epochs 40 --img_size 480 2>&1 | tee ./models/gref_google/output

+```

+* *--model* is a pre-defined model name. Options include `lavt` and `lavt_one`. See [Updates](#updates).

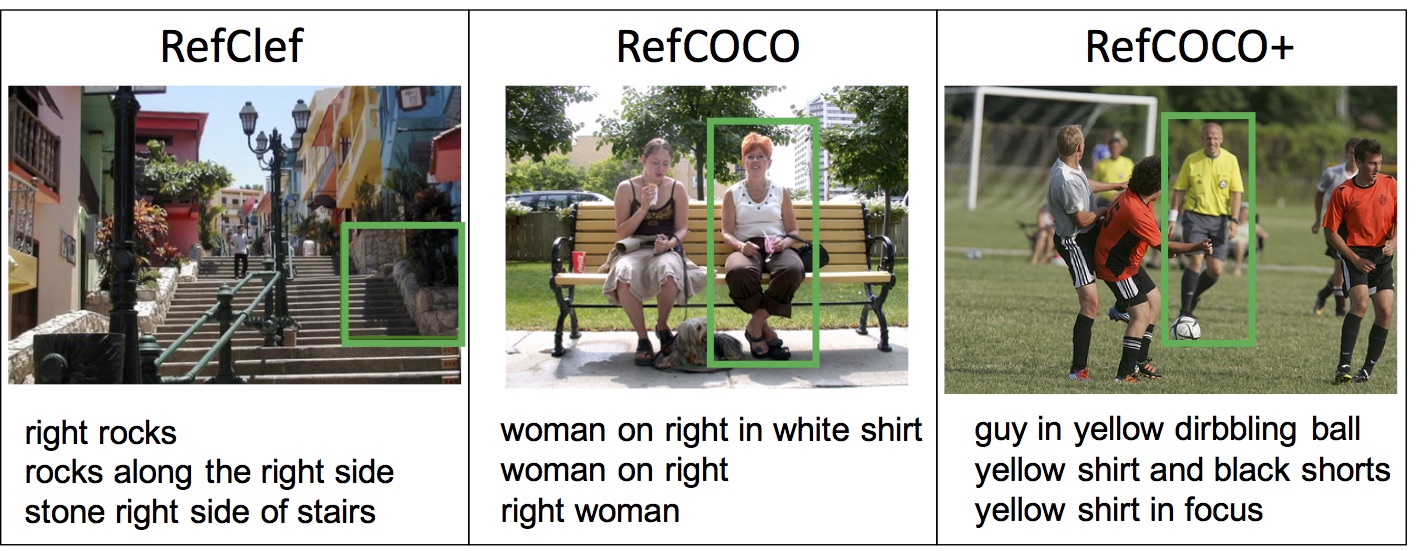

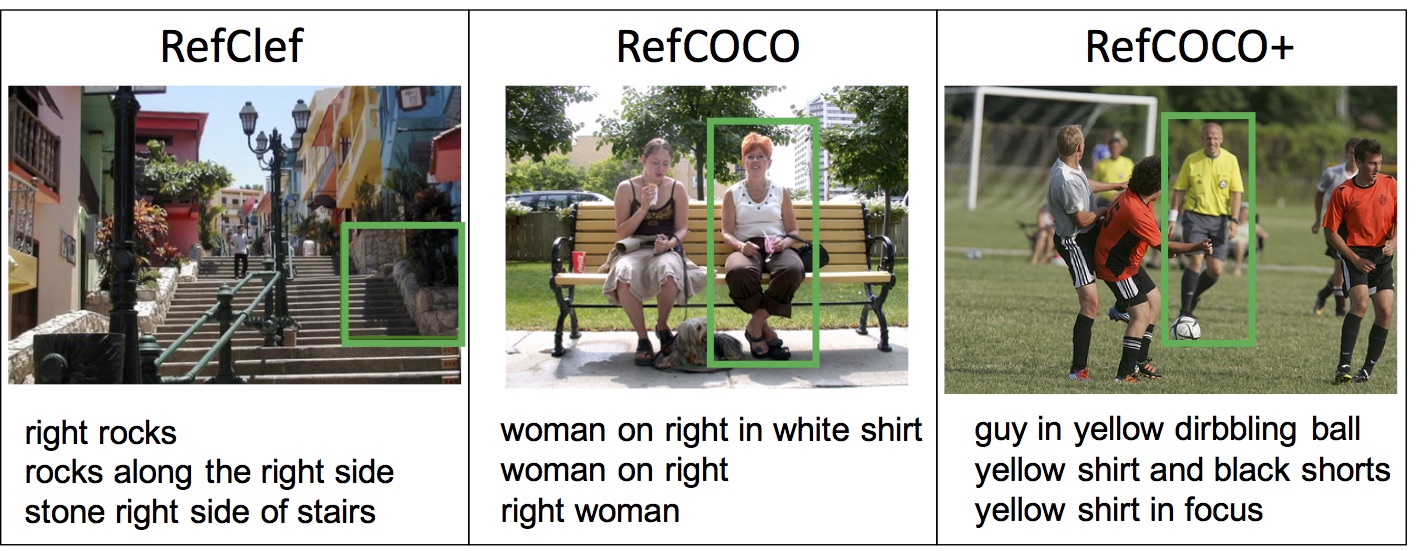

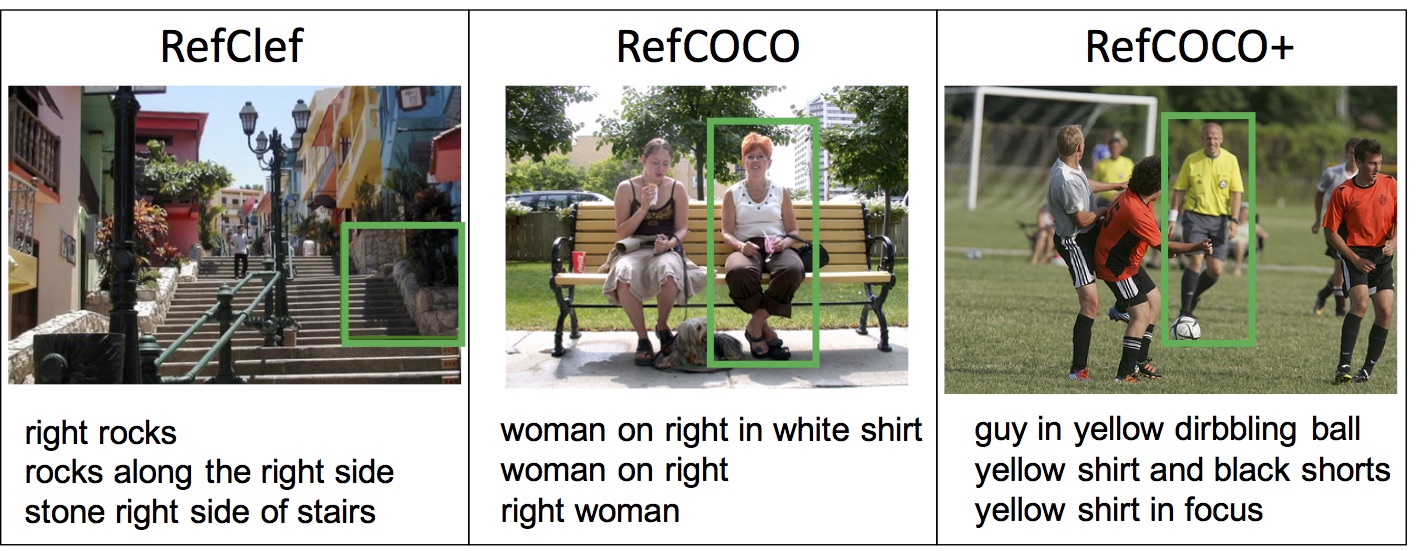

+* *--dataset* is the dataset name. One can choose from `refcoco`, `refcoco+`, and `refcocog`.

+* *--splitBy* needs to be specified if and only if the dataset is G-Ref (which is also called RefCOCOg).

+`umd` identifies the UMD partition and `google` identifies the Google partition.

+* *--model_id* is the model name one should define oneself (*e.g.*, customize it to contain training/model configurations, dataset information, experiment IDs, *etc*.).

+It is used in two ways: the training log will be saved as `./models/[args.model_id]/output`, and the best checkpoint will be saved as `./checkpoints/model_best_[args.model_id].pth`.

+* *--swin_type* specifies the version of the Swin Transformer.

+One can choose from `tiny`, `small`, `base`, and `large`. The default is `base`.

+* *--pretrained_swin_weights* specifies the path to pre-trained Swin Transformer weights used for model initialization.

+* Note that currently we need to manually create the `./models/[args.model_id]` directory via `mkdir` before running `train.py`.

+This is because we use `tee` to redirect `stdout` and `stderr` to `./models/[args.model_id]/output` for logging.

+This is a nuisance and should be resolved in the future, *e.g.*, by using a proper logger or a bash script for initiating training.
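
Until that is resolved, the directory can be created idempotently before launching, e.g. `mkdir -p ./models/refcoco` in the shell, or from Python as sketched below. The sketch also derives the two paths that the `--model_id` bullet above says are written. `prepare_run_dirs` is a hypothetical helper, not part of `train.py`, and it does not create `./checkpoints`, which the setup steps create separately.

```python
import os
import tempfile


def prepare_run_dirs(model_id: str, root: str = ".") -> dict:
    """Create <root>/models/<model_id> so that `tee` can write the log there,
    and return the log and best-checkpoint paths described in this README.

    Hypothetical helper, not part of train.py.
    """
    log_dir = os.path.join(root, "models", model_id)
    os.makedirs(log_dir, exist_ok=True)  # like `mkdir -p`: no error if it already exists
    return {
        "log": os.path.join(log_dir, "output"),
        "best_checkpoint": os.path.join(root, "checkpoints", f"model_best_{model_id}.pth"),
    }


# Demo in a throwaway directory so nothing is left behind in the repository.
with tempfile.TemporaryDirectory() as tmp:
    paths = prepare_run_dirs("gref_umd", root=tmp)
    print(os.path.isdir(os.path.dirname(paths["log"])))  # True
    print(os.path.relpath(paths["best_checkpoint"], tmp))
```

Calling it with `root="."` before running the training command has the same effect as the manual `mkdir` step.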

+

+## Testing

+For RefCOCO/RefCOCO+, run one of

+```shell

+python test.py --model lavt --swin_type base --dataset refcoco --split val --resume ./checkpoints/refcoco.pth --workers 4 --ddp_trained_weights --window12 --img_size 480

+python test.py --model lavt --swin_type base --dataset refcoco+ --split val --resume ./checkpoints/refcoco+.pth --workers 4 --ddp_trained_weights --window12 --img_size 480

+```

+* *--split* is the subset to evaluate, and one can choose from `val`, `testA`, and `testB`.

+* *--resume* is the path to the weights of a trained model.

+

+For G-Ref (UMD)/G-Ref (Google), run one of

+```shell

+python test.py --model lavt --swin_type base --dataset refcocog --splitBy umd --split val --resume ./checkpoints/gref_umd.pth --workers 4 --ddp_trained_weights --window12 --img_size 480

+python test.py --model lavt --swin_type base --dataset refcocog --splitBy google --split val --resume ./checkpoints/gref_google.pth --workers 4 --ddp_trained_weights --window12 --img_size 480

+```

+* *--splitBy* specifies the partition to evaluate.

+One can choose either `umd` or `google`.

+* *--split* is the subset (within the specified partition) to evaluate: one can choose `val` or `test` for the UMD partition, and only `val` for the Google partition.

+* *--resume* is the path to the weights of a trained model.
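
The valid `--dataset` / `--splitBy` / `--split` combinations listed above can be summarized as a lookup table. The sketch below is a hypothetical pre-flight check that encodes only the rules stated in this README; `None` stands for "`--splitBy` not given", and it does not reproduce the defaults that `args.py` may actually assign.

```python
# (dataset, splitBy) -> subsets that can be passed as --split, per this README.
VALID_SPLITS = {
    ("refcoco", None): {"val", "testA", "testB"},
    ("refcoco+", None): {"val", "testA", "testB"},
    ("refcocog", "umd"): {"val", "test"},
    ("refcocog", "google"): {"val"},
}


def check_test_args(dataset, split, split_by=None):
    """Raise ValueError for flag combinations the README lists as unavailable.

    Hypothetical helper, not part of test.py.
    """
    key = (dataset, split_by)
    if key not in VALID_SPLITS:
        raise ValueError(f"unsupported dataset/splitBy combination: {key}")
    if split not in VALID_SPLITS[key]:
        raise ValueError(f"--split {split} is not available for {key}")


check_test_args("refcocog", "test", split_by="umd")  # accepted
try:
    check_test_args("refcocog", "test", split_by="google")
except ValueError as err:
    print(err)
```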

+

+## Results

+The complete test results of the released LAVT models are summarized as follows:

+

+| Dataset | P@0.5 | P@0.6 | P@0.7 | P@0.8 | P@0.9 | Overall IoU | Mean IoU |

+|:---------------:|:-----:|:-----:|:-----:|:-----:|:-----:|:-----------:|:--------:|

+| RefCOCO val | 84.46 | 80.90 | 75.28 | 64.71 | 34.30 | 72.73 | 74.46 |

+| RefCOCO test A | 88.07 | 85.17 | 79.90 | 68.52 | 35.69 | 75.82 | 76.89 |

+| RefCOCO test B | 79.12 | 74.94 | 69.17 | 59.37 | 34.45 | 68.79 | 70.94 |

+| RefCOCO+ val | 74.44 | 70.91 | 65.58 | 56.34 | 30.23 | 62.14 | 65.81 |

+| RefCOCO+ test A | 80.68 | 77.96 | 72.90 | 62.21 | 32.36 | 68.38 | 70.97 |

+| RefCOCO+ test B | 65.66 | 61.85 | 55.94 | 47.56 | 27.24 | 55.10 | 59.23 |

+| G-Ref val (UMD) | 70.81 | 65.28 | 58.60 | 47.49 | 22.73 | 61.24 | 63.34 |

+| G-Ref test (UMD)| 71.54 | 66.38 | 59.00 | 48.21 | 23.10 | 62.09 | 63.62 |

+|G-Ref val (Goog.)| 71.16 | 67.21 | 61.76 | 51.98 | 27.30 | 60.50 | 63.66 |

+

+We have validated LAVT on RefCOCO with multiple runs.

+The overall IoU on the val set generally lies in the range of 72.73±0.5%.
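
The table reports Prec@K, overall IoU, and mean IoU. A minimal sketch of the usual definitions of these metrics follows, in plain Python over flattened 0/1 masks. It is not taken from `test.py`; counting a sample as correct when its IoU ≥ K (rather than > K) and scoring an empty union as IoU 0 are assumptions.

```python
def evaluate(pairs, thresholds=(0.5, 0.6, 0.7, 0.8, 0.9)):
    """pairs: iterable of (pred, gt) binary masks given as flat 0/1 sequences.

    Returns (prec, overall_iou, mean_iou), all in percent:
    - prec[k]: share of samples whose IoU is >= k
    - overall_iou: cumulative intersection / cumulative union over all samples
    - mean_iou: average of the per-sample IoUs
    """
    cum_i = cum_u = 0
    ious = []
    for pred, gt in pairs:
        inter = sum(1 for p, g in zip(pred, gt) if p and g)
        union = sum(1 for p, g in zip(pred, gt) if p or g)
        # Assumption: if both masks are empty, the sample counts as IoU 0.
        ious.append(inter / union if union else 0.0)
        cum_i += inter
        cum_u += union
    n = len(ious)
    prec = {k: 100.0 * sum(iou >= k for iou in ious) / n for k in thresholds}
    overall_iou = 100.0 * cum_i / cum_u
    mean_iou = 100.0 * sum(ious) / n
    return prec, overall_iou, mean_iou


# Two toy samples: IoU 1/2 and IoU 4/4.
prec, overall, mean = evaluate([([1, 1, 0, 0], [1, 0, 0, 0]),
                                ([1, 1, 1, 1], [1, 1, 1, 1])])
print(prec[0.5], prec[0.6], round(overall, 2), mean)
```

Overall IoU pools pixels across the whole subset, so large objects weigh more; mean IoU gives every expression equal weight. This is why the two columns in the table differ.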

+

+

+## Demo: Try LAVT on Your Own Image-text Pairs!

+One can run inference on a custom image-text pair

+and visualize the result by running the script `./demo_inference.py`.

+Choose your photos and expressions and have fun.

+

+

+## Citing LAVT

+```

+@inproceedings{yang2022lavt,

+ title={LAVT: Language-Aware Vision Transformer for Referring Image Segmentation},

+ author={Yang, Zhao and Wang, Jiaqi and Tang, Yansong and Chen, Kai and Zhao, Hengshuang and Torr, Philip HS},

+ booktitle={CVPR},

+ year={2022}

+}

+```

+

+

+## Contributing

+We appreciate all contributions.

+It helps the project if you could

+- report issues you are facing,

+- give a :+1: on issues reported by others that are relevant to you,

+- answer issues reported by others for which you have found solutions,

+- and implement helpful new features or improve the code otherwise with pull requests.

+

+## Acknowledgements

+Code in this repository is built upon several public repositories.

+Specifically,

+* data pre-processing leverages the [refer](https://github.com/lichengunc/refer) repository,

+* the backbone model is implemented based on code from [Swin Transformer for Semantic Segmentation](https://github.com/SwinTransformer/Swin-Transformer-Semantic-Segmentation),

+* the training and testing pipelines are adapted from [RefVOS](https://github.com/miriambellver/refvos),

+* and implementation of the BERT model (files in the bert directory) is from [Hugging Face Transformers v3.0.2](https://github.com/huggingface/transformers/tree/v3.0.2)

+(we migrated over the relevant code to fix a bug and simplify the installation process).

+

+Some of these repositories in turn adapt code from [OpenMMLab](https://github.com/open-mmlab) and [TorchVision](https://github.com/pytorch/vision).

+We'd like to thank the authors/organizations of these repositories for open sourcing their projects.

+

+

+## License

+GNU GPLv3

diff --git a/elia/__pycache__/args.cpython-37.pyc b/__pycache__/args.cpython-37.pyc

similarity index 100%

rename from elia/__pycache__/args.cpython-37.pyc

rename to __pycache__/args.cpython-37.pyc

diff --git a/elia/__pycache__/args.cpython-38.pyc b/__pycache__/args.cpython-38.pyc

similarity index 100%

rename from elia/__pycache__/args.cpython-38.pyc

rename to __pycache__/args.cpython-38.pyc

diff --git a/elia/__pycache__/transforms.cpython-37.pyc b/__pycache__/transforms.cpython-37.pyc

similarity index 100%

rename from elia/__pycache__/transforms.cpython-37.pyc

rename to __pycache__/transforms.cpython-37.pyc

diff --git a/elia/__pycache__/transforms.cpython-38.pyc b/__pycache__/transforms.cpython-38.pyc

similarity index 100%

rename from elia/__pycache__/transforms.cpython-38.pyc

rename to __pycache__/transforms.cpython-38.pyc

diff --git a/elia/__pycache__/utils.cpython-37.pyc b/__pycache__/utils.cpython-37.pyc

similarity index 100%

rename from elia/__pycache__/utils.cpython-37.pyc

rename to __pycache__/utils.cpython-37.pyc

diff --git a/elia/__pycache__/utils.cpython-38.pyc b/__pycache__/utils.cpython-38.pyc

similarity index 99%

rename from elia/__pycache__/utils.cpython-38.pyc

rename to __pycache__/utils.cpython-38.pyc

index 59c425f22032f4435c681238cd31a83bd8fb1061..4d9a65a30ce4b04c63946a7156066658f76ad96f 100644

Binary files a/elia/__pycache__/utils.cpython-38.pyc and b/__pycache__/utils.cpython-38.pyc differ

diff --git a/elia/app.py b/app.py

similarity index 98%

rename from elia/app.py

rename to app.py

index 03b11a42bb61ff9a4b2dc51fd6d8e4fe106de893..9e8a0eed302489afa274220157d47411d4ed5022 100644

--- a/elia/app.py

+++ b/app.py

@@ -2,7 +2,7 @@ import gradio as gr

image_path = './image001.png'

sentence = 'spoon on the dish'

-weights = './checkpoints/model_best_refcoco_0508.pth'

+weights = './checkpoints/gradio.pth'

device = 'cpu'

# pre-process the input image

@@ -185,7 +185,7 @@ model = WrapperModel(single_model.backbone, single_bert_model, maskformer_head)

checkpoint = torch.load(weights, map_location='cpu')

-model.load_state_dict(checkpoint['model'], strict=False)

+model.load_state_dict(checkpoint, strict=False)

model.to(device)

model.eval()

#single_bert_model.load_state_dict(checkpoint['bert_model'])

diff --git a/elia/args.py b/args.py

similarity index 100%

rename from elia/args.py

rename to args.py

diff --git a/elia/bert/__pycache__/activations.cpython-37.pyc b/bert/__pycache__/activations.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/activations.cpython-37.pyc

rename to bert/__pycache__/activations.cpython-37.pyc

diff --git a/elia/bert/__pycache__/activations.cpython-38.pyc b/bert/__pycache__/activations.cpython-38.pyc

similarity index 96%

rename from elia/bert/__pycache__/activations.cpython-38.pyc

rename to bert/__pycache__/activations.cpython-38.pyc

index 3d3804e94dab5c36f8cdda958065b4e32dc4aabd..95d7574b27f87ba4a183070e0b28ac76c34cd93e 100644

Binary files a/elia/bert/__pycache__/activations.cpython-38.pyc and b/bert/__pycache__/activations.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/configuration_bert.cpython-37.pyc b/bert/__pycache__/configuration_bert.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/configuration_bert.cpython-37.pyc

rename to bert/__pycache__/configuration_bert.cpython-37.pyc

diff --git a/elia/bert/__pycache__/configuration_bert.cpython-38.pyc b/bert/__pycache__/configuration_bert.cpython-38.pyc

similarity index 99%

rename from elia/bert/__pycache__/configuration_bert.cpython-38.pyc

rename to bert/__pycache__/configuration_bert.cpython-38.pyc

index 9eb473e317fe8fb5c667a3dbe69bace5c29e8986..473602c33e886e9796ad1bde3f63d1091177de1d 100644

Binary files a/elia/bert/__pycache__/configuration_bert.cpython-38.pyc and b/bert/__pycache__/configuration_bert.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/configuration_utils.cpython-37.pyc b/bert/__pycache__/configuration_utils.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/configuration_utils.cpython-37.pyc

rename to bert/__pycache__/configuration_utils.cpython-37.pyc

diff --git a/elia/bert/__pycache__/configuration_utils.cpython-38.pyc b/bert/__pycache__/configuration_utils.cpython-38.pyc

similarity index 94%

rename from elia/bert/__pycache__/configuration_utils.cpython-38.pyc

rename to bert/__pycache__/configuration_utils.cpython-38.pyc

index eefb7f8062db85c760031b0999814cf84c2f992b..babcf78a077ee51e54ffc97893dd5ab0bc3e2fb3 100644

Binary files a/elia/bert/__pycache__/configuration_utils.cpython-38.pyc and b/bert/__pycache__/configuration_utils.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/file_utils.cpython-37.pyc b/bert/__pycache__/file_utils.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/file_utils.cpython-37.pyc

rename to bert/__pycache__/file_utils.cpython-37.pyc

diff --git a/elia/bert/__pycache__/file_utils.cpython-38.pyc b/bert/__pycache__/file_utils.cpython-38.pyc

similarity index 74%

rename from elia/bert/__pycache__/file_utils.cpython-38.pyc

rename to bert/__pycache__/file_utils.cpython-38.pyc

index 29ede2127f22edebb5409a5bfcbec143227b7d01..941c87d2e2a9c7e584c0e2730c06328c59727806 100644

Binary files a/elia/bert/__pycache__/file_utils.cpython-38.pyc and b/bert/__pycache__/file_utils.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/generation_utils.cpython-37.pyc b/bert/__pycache__/generation_utils.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/generation_utils.cpython-37.pyc

rename to bert/__pycache__/generation_utils.cpython-37.pyc

diff --git a/elia/bert/__pycache__/generation_utils.cpython-38.pyc b/bert/__pycache__/generation_utils.cpython-38.pyc

similarity index 99%

rename from elia/bert/__pycache__/generation_utils.cpython-38.pyc

rename to bert/__pycache__/generation_utils.cpython-38.pyc

index c36afeb132be1ff8b5cca362f0911bf211e1b1e3..211707b3b2fbfe3f02d064184ab0ab5ab698b961 100644

Binary files a/elia/bert/__pycache__/generation_utils.cpython-38.pyc and b/bert/__pycache__/generation_utils.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/modeling_bert.cpython-37.pyc b/bert/__pycache__/modeling_bert.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/modeling_bert.cpython-37.pyc

rename to bert/__pycache__/modeling_bert.cpython-37.pyc

diff --git a/elia/bert/__pycache__/modeling_bert.cpython-38.pyc b/bert/__pycache__/modeling_bert.cpython-38.pyc

similarity index 75%

rename from elia/bert/__pycache__/modeling_bert.cpython-38.pyc

rename to bert/__pycache__/modeling_bert.cpython-38.pyc

index 3e4f837799032e0940315dd0506de477acb1acf1..c728a7f70ea9ad1d20bdfd85ed5ac6cfb874f90a 100644

Binary files a/elia/bert/__pycache__/modeling_bert.cpython-38.pyc and b/bert/__pycache__/modeling_bert.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/modeling_utils.cpython-37.pyc b/bert/__pycache__/modeling_utils.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/modeling_utils.cpython-37.pyc

rename to bert/__pycache__/modeling_utils.cpython-37.pyc

diff --git a/elia/bert/__pycache__/modeling_utils.cpython-38.pyc b/bert/__pycache__/modeling_utils.cpython-38.pyc

similarity index 83%

rename from elia/bert/__pycache__/modeling_utils.cpython-38.pyc

rename to bert/__pycache__/modeling_utils.cpython-38.pyc

index 52806021ea6d36cc4fad71c04b02165b2e14f4e7..d750c965c11520180b7fb4369d436f2fc98f459b 100644

Binary files a/elia/bert/__pycache__/modeling_utils.cpython-38.pyc and b/bert/__pycache__/modeling_utils.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/multimodal_bert.cpython-37.pyc b/bert/__pycache__/multimodal_bert.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/multimodal_bert.cpython-37.pyc

rename to bert/__pycache__/multimodal_bert.cpython-37.pyc

diff --git a/elia/bert/__pycache__/multimodal_bert.cpython-38.pyc b/bert/__pycache__/multimodal_bert.cpython-38.pyc

similarity index 98%

rename from elia/bert/__pycache__/multimodal_bert.cpython-38.pyc

rename to bert/__pycache__/multimodal_bert.cpython-38.pyc

index 0919175640605c3d91aa56da62a3a916bca08648..c36ada98c9cae9d0318b7b03e248cb42e60ee0e4 100644

Binary files a/elia/bert/__pycache__/multimodal_bert.cpython-38.pyc and b/bert/__pycache__/multimodal_bert.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/tokenization_bert.cpython-37.pyc b/bert/__pycache__/tokenization_bert.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/tokenization_bert.cpython-37.pyc

rename to bert/__pycache__/tokenization_bert.cpython-37.pyc

diff --git a/elia/bert/__pycache__/tokenization_bert.cpython-38.pyc b/bert/__pycache__/tokenization_bert.cpython-38.pyc

similarity index 99%

rename from elia/bert/__pycache__/tokenization_bert.cpython-38.pyc

rename to bert/__pycache__/tokenization_bert.cpython-38.pyc

index 46e86bdfb72fd99e3a12e8304d1a87da8874af31..e9c35a24fb91108ac59b0627df02952d75b38e55 100644

Binary files a/elia/bert/__pycache__/tokenization_bert.cpython-38.pyc and b/bert/__pycache__/tokenization_bert.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/tokenization_utils.cpython-37.pyc b/bert/__pycache__/tokenization_utils.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/tokenization_utils.cpython-37.pyc

rename to bert/__pycache__/tokenization_utils.cpython-37.pyc

diff --git a/elia/bert/__pycache__/tokenization_utils.cpython-38.pyc b/bert/__pycache__/tokenization_utils.cpython-38.pyc

similarity index 99%

rename from elia/bert/__pycache__/tokenization_utils.cpython-38.pyc

rename to bert/__pycache__/tokenization_utils.cpython-38.pyc

index d0c7b8e10c8c808b0d8466184f2107f6efa0261c..e14dd5fad83a5a010883c13379281d78e5075523 100644

Binary files a/elia/bert/__pycache__/tokenization_utils.cpython-38.pyc and b/bert/__pycache__/tokenization_utils.cpython-38.pyc differ

diff --git a/elia/bert/__pycache__/tokenization_utils_base.cpython-37.pyc b/bert/__pycache__/tokenization_utils_base.cpython-37.pyc

similarity index 100%

rename from elia/bert/__pycache__/tokenization_utils_base.cpython-37.pyc

rename to bert/__pycache__/tokenization_utils_base.cpython-37.pyc

diff --git a/elia/bert/__pycache__/tokenization_utils_base.cpython-38.pyc b/bert/__pycache__/tokenization_utils_base.cpython-38.pyc

similarity index 99%

rename from elia/bert/__pycache__/tokenization_utils_base.cpython-38.pyc

rename to bert/__pycache__/tokenization_utils_base.cpython-38.pyc

index c9d46a9416d56f3d4ed2ae4473434002b42eb68c..322088ddf84ea2465f20d9e423c6d973b27b504b 100644

Binary files a/elia/bert/__pycache__/tokenization_utils_base.cpython-38.pyc and b/bert/__pycache__/tokenization_utils_base.cpython-38.pyc differ

diff --git a/elia/bert/activations.py b/bert/activations.py

similarity index 100%

rename from elia/bert/activations.py

rename to bert/activations.py

diff --git a/elia/bert/configuration_bert.py b/bert/configuration_bert.py

similarity index 100%

rename from elia/bert/configuration_bert.py

rename to bert/configuration_bert.py

diff --git a/elia/bert/configuration_utils.py b/bert/configuration_utils.py

similarity index 100%

rename from elia/bert/configuration_utils.py

rename to bert/configuration_utils.py

diff --git a/elia/bert/file_utils.py b/bert/file_utils.py

similarity index 100%

rename from elia/bert/file_utils.py

rename to bert/file_utils.py

diff --git a/elia/bert/generation_utils.py b/bert/generation_utils.py

similarity index 100%

rename from elia/bert/generation_utils.py

rename to bert/generation_utils.py

diff --git a/elia/bert/modeling_bert.py b/bert/modeling_bert.py

similarity index 100%

rename from elia/bert/modeling_bert.py

rename to bert/modeling_bert.py

diff --git a/elia/bert/modeling_utils.py b/bert/modeling_utils.py

similarity index 100%

rename from elia/bert/modeling_utils.py

rename to bert/modeling_utils.py

diff --git a/elia/bert/multimodal_bert.py b/bert/multimodal_bert.py

similarity index 100%

rename from elia/bert/multimodal_bert.py

rename to bert/multimodal_bert.py

diff --git a/elia/bert/tokenization_bert.py b/bert/tokenization_bert.py

similarity index 100%

rename from elia/bert/tokenization_bert.py

rename to bert/tokenization_bert.py

diff --git a/elia/bert/tokenization_utils.py b/bert/tokenization_utils.py

similarity index 100%

rename from elia/bert/tokenization_utils.py

rename to bert/tokenization_utils.py

diff --git a/elia/bert/tokenization_utils_base.py b/bert/tokenization_utils_base.py

similarity index 100%

rename from elia/bert/tokenization_utils_base.py

rename to bert/tokenization_utils_base.py

diff --git a/checkpoints/.test.py.swp b/checkpoints/.test.py.swp

new file mode 100644

index 0000000000000000000000000000000000000000..94ccf4c542156d44239742ea75e7168372cf8085

Binary files /dev/null and b/checkpoints/.test.py.swp differ

diff --git a/checkpoints/test.py b/checkpoints/test.py

new file mode 100644

index 0000000000000000000000000000000000000000..e782c9988784a4cd8c1d5d3fd8724bed14f95ac7

--- /dev/null

+++ b/checkpoints/test.py

@@ -0,0 +1,14 @@

+

+import torch

+

+model = torch.load('model_best_refcoco_0508.pth', map_location='cpu')

+

+print(model['model'].keys())

+

+new_dict = {}

+for k in model['model'].keys():

+    if 'image_model' in k or 'language_model' in k or 'classifier' in k:

+        new_dict[k] = model['model'][k]

+

+#torch.save('gradio.pth', new_dict)

+torch.save(new_dict, 'gradio.pth')
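
The script above keeps only the `image_model`, `language_model`, and `classifier` entries of the checkpoint's `'model'` dict and saves them as a bare dict, which is why `app.py` now calls `model.load_state_dict(checkpoint, strict=False)` instead of indexing `checkpoint['model']`. The filtering step is sketched below as a standalone function over a plain dict; the function name and the example keys are hypothetical. With `strict=False`, PyTorch skips missing and unexpected keys instead of raising, although tensor shape mismatches still raise an error.

```python
def filter_state_dict(state_dict, keep=("image_model", "language_model", "classifier")):
    """Return only the entries whose key contains one of the given substrings,
    mirroring the loop in checkpoints/test.py (hypothetical helper)."""
    return {k: v for k, v in state_dict.items() if any(s in k for s in keep)}


# Stand-in for torch.load(...)['model']; values would be tensors in practice.
ckpt = {"model": {
    "image_model.layers.0.weight": 1,
    "language_model.embeddings.weight": 2,
    "classifier.conv.weight": 3,
    "extra_head.weight": 4,  # hypothetical key that gets dropped
}}
small = filter_state_dict(ckpt["model"])
print(sorted(small))
# ['classifier.conv.weight', 'image_model.layers.0.weight', 'language_model.embeddings.weight']
```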

diff --git a/data/__pycache__/dataset_refer_bert.cpython-37.pyc b/data/__pycache__/dataset_refer_bert.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..fc1cd4c769860d1f40ade461f36d9d7cb0e68a7f

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert.cpython-37.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert.cpython-38.pyc b/data/__pycache__/dataset_refer_bert.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..f7c69b7ce42b267eea273fc1eed1bd1baba31653

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_aug.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_aug.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..c76576c13163642f5bdca1fa1dc9ff8b9c786c14

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_aug.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_cl.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_cl.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..35c1ddade26303f024905642489100c7aa35a3ff

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_cl.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_concat.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_concat.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..bc94a15ce0fb8be199291661477716432bd4c559

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_concat.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_hfai.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_hfai.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..0338b11ce23efd96087ee848dbad8c26fc9be5ea

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_hfai.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_mixed.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_mixed.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..fad17e438b67dccada5925f126c86af2f4ffead5

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_mixed.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_mlm.cpython-37.pyc b/data/__pycache__/dataset_refer_bert_mlm.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..4e12b0ad4971f62085b505faa69da499fad0ac3c

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_mlm.cpython-37.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_mlm.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_mlm.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..1d85c3f3ed484413e83a84414185f40ee5bc8d30

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_mlm.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_refcoco.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_refcoco.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..fb6e8336d1021eb13894249b7f924a6a5e1cb40d

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_refcoco.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_refcocog_umd.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_refcocog_umd.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..4ac6a230e8b755eb83830429ec50c57a31ee6fd9

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_refcocog_umd.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_refcocoplus.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_refcocoplus.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..75cf2b3415ee9512982f0e4bd4f2a80d4c9199f1

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_refcocoplus.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_returnidx.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_returnidx.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..8bc6d75c2086b44e16dd3999b3e73117cafc2d68

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_returnidx.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_save.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_save.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..dd7d80e53edc0ea1e51b4c334dd5752e1af5d8e8

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_save.cpython-38.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_vis.cpython-37.pyc b/data/__pycache__/dataset_refer_bert_vis.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..47ff74d240b445fc293224f4d3dd589ac74bf266

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_vis.cpython-37.pyc differ

diff --git a/data/__pycache__/dataset_refer_bert_vpd.cpython-38.pyc b/data/__pycache__/dataset_refer_bert_vpd.cpython-38.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..9153c2bb3b4296e9ec6fb48a07f63836881e0df0

Binary files /dev/null and b/data/__pycache__/dataset_refer_bert_vpd.cpython-38.pyc differ

diff --git a/data/dataset_refer_bert.py b/data/dataset_refer_bert.py

new file mode 100644

index 0000000000000000000000000000000000000000..a8465d326d933d3c46df17de7d45dd0eb0de8516

--- /dev/null

+++ b/data/dataset_refer_bert.py

@@ -0,0 +1,121 @@

+import os

+import sys

+import torch.utils.data as data

+import torch

+from torchvision import transforms

+from torch.autograd import Variable

+import numpy as np

+from PIL import Image

+import torchvision.transforms.functional as TF

+import random

+

+from bert.tokenization_bert import BertTokenizer

+

+import h5py

+from refer.refer import REFER

+

+from args import get_parser

+

+# Dataset configuration initialization

+parser = get_parser()

+args = parser.parse_args()

+

+

+class ReferDataset(data.Dataset):

+

+ def __init__(self,

+ args,

+ image_transforms=None,

+ target_transforms=None,

+ split='train',

+ eval_mode=False):

+

+ self.classes = []

+ self.image_transforms = image_transforms

+ self.target_transform = target_transforms

+ self.split = split

+ self.refer = REFER(args.refer_data_root, args.dataset, args.splitBy)

+

+ self.max_tokens = 20

+

+ ref_ids = self.refer.getRefIds(split=self.split)

+ img_ids = self.refer.getImgIds(ref_ids)

+

+ all_imgs = self.refer.Imgs

+ self.imgs = list(all_imgs[i] for i in img_ids)

+ self.ref_ids = ref_ids

+

+ self.input_ids = []

+ self.attention_masks = []

+ self.tokenizer = BertTokenizer.from_pretrained(args.bert_tokenizer)

+

+ self.eval_mode = eval_mode

+ # if we are testing on a dataset, test all sentences of an object;

+ # o/w, we are validating during training, randomly sample one sentence for efficiency

+ for r in ref_ids:

+ ref = self.refer.Refs[r]

+

+ sentences_for_ref = []

+ attentions_for_ref = []

+

+ for i, (el, sent_id) in enumerate(zip(ref['sentences'], ref['sent_ids'])):

+ sentence_raw = el['raw']

+ attention_mask = [0] * self.max_tokens

+ padded_input_ids = [0] * self.max_tokens

+

+ input_ids = self.tokenizer.encode(text=sentence_raw, add_special_tokens=True)

+

+ # truncation of tokens

+ input_ids = input_ids[:self.max_tokens]

+

+ padded_input_ids[:len(input_ids)] = input_ids

+ attention_mask[:len(input_ids)] = [1]*len(input_ids)

+

+ sentences_for_ref.append(torch.tensor(padded_input_ids).unsqueeze(0))

+ attentions_for_ref.append(torch.tensor(attention_mask).unsqueeze(0))

+

+ self.input_ids.append(sentences_for_ref)

+ self.attention_masks.append(attentions_for_ref)

+

+ def get_classes(self):

+ return self.classes

+

+ def __len__(self):

+ return len(self.ref_ids)

+

+ def __getitem__(self, index):

+ this_ref_id = self.ref_ids[index]

+ this_img_id = self.refer.getImgIds(this_ref_id)

+ this_img = self.refer.Imgs[this_img_id[0]]

+

+ img = Image.open(os.path.join(self.refer.IMAGE_DIR, this_img['file_name'])).convert("RGB")

+

+ ref = self.refer.loadRefs(this_ref_id)

+

+ ref_mask = np.array(self.refer.getMask(ref[0])['mask'])

+ annot = np.zeros(ref_mask.shape)

+ annot[ref_mask == 1] = 1

+

+ annot = Image.fromarray(annot.astype(np.uint8), mode="P")

+

+ if self.image_transforms is not None:

+ # resize, from PIL to tensor, and mean and std normalization

+ img, target = self.image_transforms(img, annot)

+

+ if self.eval_mode:

+ embedding = []

+ att = []

+ for s in range(len(self.input_ids[index])):

+ e = self.input_ids[index][s]

+ a = self.attention_masks[index][s]

+ embedding.append(e.unsqueeze(-1))

+ att.append(a.unsqueeze(-1))

+

+ tensor_embeddings = torch.cat(embedding, dim=-1)

+ attention_mask = torch.cat(att, dim=-1)

+ else:

+ choice_sent = np.random.choice(len(self.input_ids[index]))

+ tensor_embeddings = self.input_ids[index][choice_sent]

+ attention_mask = self.attention_masks[index][choice_sent]

+

+ return img, target, tensor_embeddings, attention_mask
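The sentence encoding in `ReferDataset.__init__` (truncate, zero-pad to `max_tokens`, build a 0/1 attention mask) and the eval-mode stacking in `__getitem__` can be sketched without BERT or torch. This is an illustration under stated assumptions, not the repository's code: `pad_and_mask` is a hypothetical helper, the token ids are made up (except 101/102, which are `[CLS]`/`[SEP]` if the tokenizer is bert-base-uncased), and numpy stands in for the `torch.tensor(...).unsqueeze(0)` and `torch.cat(..., dim=-1)` calls.

```python
import numpy as np

MAX_TOKENS = 20  # same value as self.max_tokens in dataset_refer_bert.py


def pad_and_mask(token_ids, max_tokens=MAX_TOKENS):
    """Hypothetical helper: truncate to max_tokens, right-pad with 0, build a 0/1 mask."""
    ids = token_ids[:max_tokens]
    padded = ids + [0] * (max_tokens - len(ids))
    mask = [1] * len(ids) + [0] * (max_tokens - len(ids))
    # [None, :] adds a leading axis, like .unsqueeze(0) -> shape (1, max_tokens)
    return np.array(padded)[None, :], np.array(mask)[None, :]


# Made-up token ids for two expressions referring to the same object.
sentences = [[101, 2158, 2006, 1996, 2187, 102], [101, 2304, 3899, 102]]
encoded = [pad_and_mask(s) for s in sentences]

# Eval mode: every sentence is stacked along a new last axis,
# giving shape (1, max_tokens, n_sentences).
eval_ids = np.concatenate([ids[..., None] for ids, _ in encoded], axis=-1)
eval_mask = np.concatenate([m[..., None] for _, m in encoded], axis=-1)
print(eval_ids.shape)  # (1, 20, 2)
```

Truncation happens after `add_special_tokens=True`, so a sentence longer than `max_tokens` loses its trailing `[SEP]`. In training mode, `__getitem__` instead returns a single randomly chosen `(1, max_tokens)` row per object.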

diff --git a/data/dataset_refer_bert_aug.py b/data/dataset_refer_bert_aug.py

new file mode 100644

index 0000000000000000000000000000000000000000..9ccd7d82d1ee3f7c2cf190517103ddbf5f50c68e

--- /dev/null

+++ b/data/dataset_refer_bert_aug.py

@@ -0,0 +1,225 @@

+import os

+import sys

+import torch.utils.data as data

+import torch

+from torchvision import transforms

+from torch.autograd import Variable

+import numpy as np

+from PIL import Image

+import torchvision.transforms.functional as TF

+import random

+

+from bert.tokenization_bert import BertTokenizer

+

+import h5py

+from refer.refer import REFER

+

+from args import get_parser

+

+# Dataset configuration initialization

+parser = get_parser()

+args = parser.parse_args()

+

+from hfai.datasets import CocoDetection

+

+from PIL import Image

+import numpy as np

+#from ffrecord.torch import DataLoader,Dataset

+import ffrecord

+

+_EXIF_ORIENT = 274

+def _apply_exif_orientation(image):

+ """

+ Applies the exif orientation correctly.

+

+ This code exists per the bug:

+ https://github.com/python-pillow/Pillow/issues/3973

+ with the function `ImageOps.exif_transpose`. The Pillow source raises errors with

+ various methods, especially `tobytes`

+

+ Function based on:

+ https://github.com/wkentaro/labelme/blob/v4.5.4/labelme/utils/image.py#L59

+ https://github.com/python-pillow/Pillow/blob/7.1.2/src/PIL/ImageOps.py#L527

+

+ Args:

+ image (PIL.Image): a PIL image

+

+ Returns:

+ (PIL.Image): the PIL image with exif orientation applied, if applicable

+ """

+ if not hasattr(image, "getexif"):

+ return image

+

+ try:

+ exif = image.getexif()

+ except Exception: # https://github.com/facebookresearch/detectron2/issues/1885

+ exif = None

+

+ if exif is None:

+ return image

+

+ orientation = exif.get(_EXIF_ORIENT)

+

+ method = {

+ 2: Image.FLIP_LEFT_RIGHT,

+ 3: Image.ROTATE_180,

+ 4: Image.FLIP_TOP_BOTTOM,

+ 5: Image.TRANSPOSE,

+ 6: Image.ROTATE_270,

+ 7: Image.TRANSVERSE,

+ 8: Image.ROTATE_90,

+ }.get(orientation)

+

+ if method is not None:

+ return image.transpose(method)

+ return image

+

+def convert_PIL_to_numpy(image, format):

+ """

+ Convert PIL image to numpy array of target format.

+

+ Args:

+ image (PIL.Image): a PIL image

+ format (str): the format of output image

+

+ Returns:

+ (np.ndarray): also see `read_image`

+ """

+ if format is not None:

+ # PIL only supports RGB, so convert to RGB and flip channels over below

+ conversion_format = format

+ if format in ["BGR", "YUV-BT.601"]:

+ conversion_format = "RGB"

+ image = image.convert(conversion_format)

+ image = np.asarray(image)

+ # PIL squeezes out the channel dimension for "L", so make it HWC

+ if format == "L":

+ image = np.expand_dims(image, -1)

+

+ # handle formats not supported by PIL

+ elif format == "BGR":

+ # flip channels if needed

+ image = image[:, :, ::-1]

+ elif format == "YUV-BT.601":

+ image = image / 255.0

+ image = np.dot(image, np.array([[0.299, 0.587, 0.114], [-0.14713, -0.28886, 0.436], [0.615, -0.51499, -0.10001]]).T)  # BT.601 RGB -> YUV matrix

+

+ return image

+

+class ReferDataset(data.Dataset):

+#class ReferDataset(ffrecord.torch.Dataset):

+

+ def __init__(self,

+ args,

+ image_transforms=None,

+ target_transforms=None,

+ split='train',

+ eval_mode=False):

+

+ self.classes = []

+ self.image_transforms = image_transforms

+ self.target_transform = target_transforms

+ self.split = split

+ self.refer = REFER(args.refer_data_root, args.dataset, args.splitBy)

+

+ self.max_tokens = 40

+

+ ref_ids = self.refer.getRefIds(split=self.split)

+ img_ids = self.refer.getImgIds(ref_ids)

+

+ all_imgs = self.refer.Imgs

+ self.imgs = list(all_imgs[i] for i in img_ids)

+ self.ref_ids = ref_ids

+

+ self.input_ids = []

+ self.attention_masks = []

+ self.tokenizer = BertTokenizer.from_pretrained(args.bert_tokenizer)

+

+ self.eval_mode = eval_mode

+ # if we are testing on a dataset, test all sentences of an object;

+ # o/w, we are validating during training, randomly sample one sentence for efficiency

+ for r in ref_ids:

+ ref = self.refer.Refs[r]

+

+ sentences_for_ref = []

+ attentions_for_ref = []

+

+ for i, (el, sent_id) in enumerate(zip(ref['sentences'], ref['sent_ids'])):

+ sentence_raw = el['raw']

+ attention_mask = [0] * self.max_tokens

+ padded_input_ids = [0] * self.max_tokens

+

+ input_ids = self.tokenizer.encode(text=sentence_raw, add_special_tokens=True)

+

+ # truncation of tokens

+ input_ids = input_ids[:self.max_tokens]

+

+ padded_input_ids[:len(input_ids)] = input_ids

+ attention_mask[:len(input_ids)] = [1]*len(input_ids)

+

+ sentences_for_ref.append(torch.tensor(padded_input_ids).unsqueeze(0))

+ attentions_for_ref.append(torch.tensor(attention_mask).unsqueeze(0))

+

+ self.input_ids.append(sentences_for_ref)

+ self.attention_masks.append(attentions_for_ref)

+

+ split = 'train'

+ print(split)

+ self.hfai_dataset = CocoDetection(split, transform=None)

+ self.keys = {}

+ for i in range(len(self.hfai_dataset.reader.ids)):

+ self.keys[self.hfai_dataset.reader.ids[i]] = i

+

+ def get_classes(self):

+ return self.classes

+

+ def __len__(self):

+ return len(self.ref_ids)

+

+ def __getitem__(self, index):

+ #print(index)

+ #index = index[0]

+ this_ref_id = self.ref_ids[index]

+ this_img_id = self.refer.getImgIds(this_ref_id)

+ this_img = self.refer.Imgs[this_img_id[0]]

+

+ #print("this_ref_id", this_ref_id)

+ #print("this_img_id", this_img_id)

+ #print("this_img", this_img)

+ #img = Image.open(os.path.join(self.refer.IMAGE_DIR, this_img['file_name'])).convert("RGB")

+ img = self.hfai_dataset.reader.read_imgs([self.keys[this_img_id[0]]])[0]

+ img = _apply_exif_orientation(img)

+ img = convert_PIL_to_numpy(img, 'RGB')

+ #print(img.shape)

+ img = Image.fromarray(img)

+

+ ref = self.refer.loadRefs(this_ref_id)

+

+ ref_mask = np.array(self.refer.getMask(ref[0])['mask'])

+ annot = np.zeros(ref_mask.shape)

+ annot[ref_mask == 1] = 1

+

+ annot = Image.fromarray(annot.astype(np.uint8), mode="P")

+

+ if self.image_transforms is not None:

+ # resize, from PIL to tensor, and mean and std normalization

+ img, target = self.image_transforms(img, annot)

+

+ if self.eval_mode:

+ embedding = []

+ att = []

+ for s in range(len(self.input_ids[index])):

+ e = self.input_ids[index][s]

+ a = self.attention_masks[index][s]

+ embedding.append(e.unsqueeze(-1))

+ att.append(a.unsqueeze(-1))

+

+ tensor_embeddings = torch.cat(embedding, dim=-1)

+ attention_mask = torch.cat(att, dim=-1)

+ else:

+ choice_sent = np.random.choice(len(self.input_ids[index]))

+ tensor_embeddings = self.input_ids[index][choice_sent]

+ attention_mask = self.attention_masks[index][choice_sent]

+

+ #print(img.shape)

+ return img, target, tensor_embeddings, attention_mask
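`_apply_exif_orientation` maps the EXIF Orientation tag (274) to one of seven PIL transposes. As a rough illustration only, the sketch below re-expresses that lookup on numpy arrays of shape H x W (x C). `apply_orientation` and the numpy equivalent chosen for each PIL constant are our own reading, not code from this repository.

```python
import numpy as np

# EXIF orientation value -> numpy operation assumed equivalent to the PIL transpose
# used in _apply_exif_orientation (PIL's ROTATE_* constants rotate counter-clockwise).
_EXIF_TO_NUMPY = {
    2: lambda a: a[:, ::-1],                          # Image.FLIP_LEFT_RIGHT
    3: lambda a: np.rot90(a, 2),                      # Image.ROTATE_180
    4: lambda a: a[::-1, :],                          # Image.FLIP_TOP_BOTTOM
    5: lambda a: np.swapaxes(a, 0, 1),                # Image.TRANSPOSE (main diagonal)
    6: lambda a: np.rot90(a, 3),                      # Image.ROTATE_270 (90 deg clockwise)
    7: lambda a: np.swapaxes(np.rot90(a, 2), 0, 1),   # Image.TRANSVERSE (anti-diagonal)
    8: lambda a: np.rot90(a, 1),                      # Image.ROTATE_90 (90 deg counter-clockwise)
}


def apply_orientation(arr, orientation):
    """Re-orient arr for an EXIF orientation value; 1, None or unknown values are a no-op."""
    fn = _EXIF_TO_NUMPY.get(orientation)
    return arr if fn is None else fn(arr)


img = np.arange(6).reshape(2, 3)  # a tiny 2 x 3 "image"
print(apply_orientation(img, 6).shape)  # (3, 2)
```

In `__getitem__`, the PIL version runs before `convert_PIL_to_numpy(img, 'RGB')`, so only the RGB branch of that helper is exercised by these datasets.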

diff --git a/data/dataset_refer_bert_cl.py b/data/dataset_refer_bert_cl.py

new file mode 100644

index 0000000000000000000000000000000000000000..28fa672bd3ef6f4d8edbc28de51df9cca0d564f2

--- /dev/null

+++ b/data/dataset_refer_bert_cl.py

@@ -0,0 +1,233 @@

+import os

+import sys

+import torch.utils.data as data

+import torch

+from torchvision import transforms

+from torch.autograd import Variable

+import numpy as np

+from PIL import Image

+import torchvision.transforms.functional as TF

+import random

+

+from bert.tokenization_bert import BertTokenizer

+

+import h5py

+from refer.refer import REFER

+

+from args import get_parser

+

+# Dataset configuration initialization

+parser = get_parser()

+args = parser.parse_args()

+

+from hfai.datasets import CocoDetection

+

+from PIL import Image

+import numpy as np

+#from ffrecord.torch import DataLoader,Dataset

+import ffrecord

+import pickle

+

+_EXIF_ORIENT = 274

+def _apply_exif_orientation(image):

+ """

+ Applies the exif orientation correctly.

+

+ This code exists per the bug:

+ https://github.com/python-pillow/Pillow/issues/3973

+ with the function `ImageOps.exif_transpose`. The Pillow source raises errors with

+ various methods, especially `tobytes`

+

+ Function based on:

+ https://github.com/wkentaro/labelme/blob/v4.5.4/labelme/utils/image.py#L59

+ https://github.com/python-pillow/Pillow/blob/7.1.2/src/PIL/ImageOps.py#L527

+

+ Args:

+ image (PIL.Image): a PIL image

+

+ Returns:

+ (PIL.Image): the PIL image with exif orientation applied, if applicable

+ """

+ if not hasattr(image, "getexif"):

+ return image

+

+ try:

+ exif = image.getexif()

+ except Exception: # https://github.com/facebookresearch/detectron2/issues/1885

+ exif = None

+

+ if exif is None:

+ return image

+

+ orientation = exif.get(_EXIF_ORIENT)

+

+ method = {

+ 2: Image.FLIP_LEFT_RIGHT,

+ 3: Image.ROTATE_180,

+ 4: Image.FLIP_TOP_BOTTOM,

+ 5: Image.TRANSPOSE,

+ 6: Image.ROTATE_270,

+ 7: Image.TRANSVERSE,

+ 8: Image.ROTATE_90,

+ }.get(orientation)

+

+ if method is not None:

+ return image.transpose(method)

+ return image

+

+def convert_PIL_to_numpy(image, format):

+ """

+ Convert PIL image to numpy array of target format.

+

+ Args:

+ image (PIL.Image): a PIL image

+ format (str): the format of output image

+

+ Returns:

+ (np.ndarray): also see `read_image`

+ """

+ if format is not None:

+ # PIL only supports RGB, so convert to RGB and flip channels over below

+ conversion_format = format

+ if format in ["BGR", "YUV-BT.601"]:

+ conversion_format = "RGB"

+ image = image.convert(conversion_format)

+ image = np.asarray(image)

+ # PIL squeezes out the channel dimension for "L", so make it HWC

+ if format == "L":

+ image = np.expand_dims(image, -1)

+

+ # handle formats not supported by PIL

+ elif format == "BGR":

+ # flip channels if needed

+ image = image[:, :, ::-1]

+ elif format == "YUV-BT.601":

+ image = image / 255.0

+ image = np.dot(image, np.array([[0.299, 0.587, 0.114], [-0.14713, -0.28886, 0.436], [0.615, -0.51499, -0.10001]]).T)  # BT.601 RGB -> YUV matrix

+

+ return image

+

+class ReferDataset(data.Dataset):

+#class ReferDataset(ffrecord.torch.Dataset):

+

+ def __init__(self,

+ args,

+ image_transforms=None,

+ target_transforms=None,

+ split='train',

+ eval_mode=False):

+

+ self.classes = []

+ self.image_transforms = image_transforms

+ self.target_transform = target_transforms

+ self.split = split

+ self.refer = REFER(args.refer_data_root, args.dataset, args.splitBy)

+

+ self.max_tokens = 20

+

+ ref_ids = self.refer.getRefIds(split=self.split)

+ img_ids = self.refer.getImgIds(ref_ids)

+

+ all_imgs = self.refer.Imgs

+ self.imgs = list(all_imgs[i] for i in img_ids)

+ self.ref_ids = ref_ids

+

+ self.input_ids = []

+ self.attention_masks = []

+ self.tokenizer = BertTokenizer.from_pretrained(args.bert_tokenizer)

+

+ self.eval_mode = eval_mode

+ # if we are testing on a dataset, test all sentences of an object;

+ # o/w, we are validating during training, randomly sample one sentence for efficiency

+ for r in ref_ids:

+ ref = self.refer.Refs[r]

+

+ sentences_for_ref = []

+ attentions_for_ref = []

+

+ for i, (el, sent_id) in enumerate(zip(ref['sentences'], ref['sent_ids'])):

+ sentence_raw = el['raw']

+ attention_mask = [0] * self.max_tokens

+ padded_input_ids = [0] * self.max_tokens

+

+ input_ids = self.tokenizer.encode(text=sentence_raw, add_special_tokens=True)

+

+ # truncation of tokens

+ input_ids = input_ids[:self.max_tokens]

+

+ padded_input_ids[:len(input_ids)] = input_ids

+ attention_mask[:len(input_ids)] = [1]*len(input_ids)

+

+ sentences_for_ref.append(torch.tensor(padded_input_ids).unsqueeze(0))

+ attentions_for_ref.append(torch.tensor(attention_mask).unsqueeze(0))

+

+ self.input_ids.append(sentences_for_ref)

+ self.attention_masks.append(attentions_for_ref)

+

+ split = 'train'

+ print(split)

+ self.hfai_dataset = CocoDetection(split, transform=None)

+ self.keys = {}

+ for i in range(len(self.hfai_dataset.reader.ids)):

+ self.keys[self.hfai_dataset.reader.ids[i]] = i

+

+ with open('/ceph-jd/pub/jupyter/zhuangrongxian/notebooks/LAVT-RIS-bidirectional-refactor-mask2former/LAVT-RIS-fuckddp/refcoco_int8.pkl', 'rb') as handle:

+ self.mixed_masks = pickle.load(handle)

+

+ def get_classes(self):

+ return self.classes

+

+ def __len__(self):

+ return len(self.ref_ids)

+

+ def __getitem__(self, index):

+ #print(index)

+ #index = index[0]

+ this_ref_id = self.ref_ids[index]

+ this_img_id = self.refer.getImgIds(this_ref_id)

+ this_img = self.refer.Imgs[this_img_id[0]]

+

+ #print("this_ref_id", this_ref_id)

+ #print("this_img_id", this_img_id)

+ #print("this_img", this_img)

+ #img = Image.open(os.path.join(self.refer.IMAGE_DIR, this_img['file_name'])).convert("RGB")

+ img = self.hfai_dataset.reader.read_imgs([self.keys[this_img_id[0]]])[0]

+ img = _apply_exif_orientation(img)

+ img = convert_PIL_to_numpy(img, 'RGB')

+ #print(img.shape)

+ img = Image.fromarray(img)

+

+ ref = self.refer.loadRefs(this_ref_id)

+

+ ref_mask = np.array(self.refer.getMask(ref[0])['mask'])

+ annot = np.zeros(ref_mask.shape)

+ annot[ref_mask == 1] = 1

+

+ annot = Image.fromarray(annot.astype(np.uint8), mode="P")

+

+ if self.image_transforms is not None:

+ # resize, from PIL to tensor, and mean and std normalization

+ img, target = self.image_transforms(img, annot)

+

+ if self.eval_mode:

+ embedding = []

+ att = []

+ for s in range(len(self.input_ids[index])):

+ e = self.input_ids[index][s]

+ a = self.attention_masks[index][s]

+ embedding.append(e.unsqueeze(-1))

+ att.append(a.unsqueeze(-1))

+

+ tensor_embeddings = torch.cat(embedding, dim=-1)

+ attention_mask = torch.cat(att, dim=-1)

+ return img, target, tensor_embeddings, attention_mask

+ else:

+ choice_sent = np.random.choice(len(self.input_ids[index]))

+ tensor_embeddings = self.input_ids[index][choice_sent]

+ attention_mask = self.attention_masks[index][choice_sent]

+

+ #print(img.shape)

+ if self.split == 'val':

+ return img, target, tensor_embeddings, attention_mask

+ else:

+ return img, target, tensor_embeddings, attention_mask, torch.tensor(self.mixed_masks[this_img_id[0]]['masks'])

diff --git a/data/dataset_refer_bert_concat.py b/data/dataset_refer_bert_concat.py

new file mode 100644

index 0000000000000000000000000000000000000000..6ae1cca1f14346a5877650894cc53f58026b56db

--- /dev/null

+++ b/data/dataset_refer_bert_concat.py

@@ -0,0 +1,253 @@

+import os

+import sys

+import torch.utils.data as data

+import torch

+from torchvision import transforms

+from torch.autograd import Variable

+import numpy as np

+from PIL import Image

+import torchvision.transforms.functional as TF

+import random

+

+from bert.tokenization_bert import BertTokenizer

+

+import h5py

+from refer.refer import REFER

+

+from args import get_parser

+

+# Dataset configuration initialization

+parser = get_parser()

+args = parser.parse_args()

+

+from hfai.datasets import CocoDetection

+

+from PIL import Image

+import numpy as np

+#from ffrecord.torch import DataLoader,Dataset

+import ffrecord

+

+_EXIF_ORIENT = 274

+

+

+from itertools import permutations

+

+def _apply_exif_orientation(image):

+ """

+ Applies the exif orientation correctly.

+

+ This code exists per the bug:

+ https://github.com/python-pillow/Pillow/issues/3973

+ with the function `ImageOps.exif_transpose`. The Pillow source raises errors with

+ various methods, especially `tobytes`

+

+ Function based on:

+ https://github.com/wkentaro/labelme/blob/v4.5.4/labelme/utils/image.py#L59

+ https://github.com/python-pillow/Pillow/blob/7.1.2/src/PIL/ImageOps.py#L527

+

+ Args:

+ image (PIL.Image): a PIL image

+

+ Returns:

+ (PIL.Image): the PIL image with exif orientation applied, if applicable

+ """

+ if not hasattr(image, "getexif"):

+ return image

+

+ try:

+ exif = image.getexif()

+ except Exception: # https://github.com/facebookresearch/detectron2/issues/1885

+ exif = None

+

+ if exif is None:

+ return image

+

+ orientation = exif.get(_EXIF_ORIENT)

+

+ method = {

+ 2: Image.FLIP_LEFT_RIGHT,

+ 3: Image.ROTATE_180,

+ 4: Image.FLIP_TOP_BOTTOM,

+ 5: Image.TRANSPOSE,

+ 6: Image.ROTATE_270,

+ 7: Image.TRANSVERSE,

+ 8: Image.ROTATE_90,

+ }.get(orientation)

+

+ if method is not None:

+ return image.transpose(method)

+ return image

+

+def convert_PIL_to_numpy(image, format):

+ """

+ Convert PIL image to numpy array of target format.

+

+ Args:

+ image (PIL.Image): a PIL image

+ format (str): the format of output image

+

+ Returns:

+ (np.ndarray): also see `read_image`

+ """

+ if format is not None:

+ # PIL only supports RGB, so convert to RGB and flip channels over below

+ conversion_format = format

+ if format in ["BGR", "YUV-BT.601"]:

+ conversion_format = "RGB"

+ image = image.convert(conversion_format)

+ image = np.asarray(image)

+ # PIL squeezes out the channel dimension for "L", so make it HWC

+ if format == "L":

+ image = np.expand_dims(image, -1)

+

+ # handle formats not supported by PIL

+ elif format == "BGR":

+ # flip channels if needed

+ image = image[:, :, ::-1]

+ elif format == "YUV-BT.601":

+ image = image / 255.0

+ image = np.dot(image, np.array([[0.299, 0.587, 0.114], [-0.14713, -0.28886, 0.436], [0.615, -0.51499, -0.10001]]).T)  # BT.601 RGB -> YUV matrix

+

+ return image

+

+class ReferDataset(data.Dataset):

+#class ReferDataset(ffrecord.torch.Dataset):

+

+ def __init__(self,

+ args,

+ image_transforms=None,

+ target_transforms=None,

+ split='train',

+ eval_mode=False):

+

+ self.classes = []

+ self.image_transforms = image_transforms

+ self.target_transform = target_transforms

+ self.split = split

+ self.refer = REFER(args.refer_data_root, args.dataset, args.splitBy)

+

+ #self.max_tokens = 20

+ self.max_tokens = 40

+

+ ref_ids = self.refer.getRefIds(split=self.split)

+ img_ids = self.refer.getImgIds(ref_ids)

+

+ all_imgs = self.refer.Imgs

+ self.imgs = list(all_imgs[i] for i in img_ids)

+ self.ref_ids = ref_ids

+

+ self.input_ids = []

+ self.attention_masks = []

+ self.tokenizer = BertTokenizer.from_pretrained(args.bert_tokenizer)

+

+ self.eval_mode = eval_mode

+ # if we are testing on a dataset, test all sentences of an object;

+ # o/w, we are validating during training, randomly sample one sentence for efficiency

+ for r in ref_ids:

+ ref = self.refer.Refs[r]

+

+ sentences_for_ref = []

+ attentions_for_ref = []

+

+ # initializing list

+ test_list = ref['sentences']

+

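+ # All ordered pairs of sentences (each sentence also paired with itself), joined as "a, b";

+ # built with a nested loop (the permutations()-based variant is left commented out below)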

+ if not self.eval_mode:

+ test_list = [el['raw'] for el in test_list]

+ res = []

+

+ for k in test_list:

+ for l in test_list:

+ res.append(k + ', ' + l)

+ #for idx in range(1, len(test_list) + 1):

+ # temp.extend(list(permutations(test_list, idx)))

+ #res = []

+ #for ele in temp:

+ # res.append(", ".join(ele))

+ else:

+ print("eval mode")

+ test_list = [el['raw'] + ', ' + el['raw'] for el in test_list]

+ res = test_list

+

+ #for i, (el, sent_id) in enumerate(zip(ref['sentences'], ref['sent_ids'])):

+ for i, el in enumerate(res):

+ sentence_raw = el

+ attention_mask = [0] * self.max_tokens

+ padded_input_ids = [0] * self.max_tokens

+

+ input_ids = self.tokenizer.encode(text=sentence_raw, add_special_tokens=True)

+

+ # truncation of tokens

+ input_ids = input_ids[:self.max_tokens]

+

+ padded_input_ids[:len(input_ids)] = input_ids

+ attention_mask[:len(input_ids)] = [1]*len(input_ids)

+

+ sentences_for_ref.append(torch.tensor(padded_input_ids).unsqueeze(0))

+ attentions_for_ref.append(torch.tensor(attention_mask).unsqueeze(0))

+

+ self.input_ids.append(sentences_for_ref)

+ self.attention_masks.append(attentions_for_ref)

+

+ split = 'train'

+ print(split)

+ self.hfai_dataset = CocoDetection(split, transform=None)

+ self.keys = {}

+ for i in range(len(self.hfai_dataset.reader.ids)):

+ self.keys[self.hfai_dataset.reader.ids[i]] = i

+

+ def get_classes(self):

+ return self.classes

+

+ def __len__(self):

+ return len(self.ref_ids)

+

+ def __getitem__(self, index):

+ #print(index)

+ #index = index[0]

+ this_ref_id = self.ref_ids[index]

+ this_img_id = self.refer.getImgIds(this_ref_id)

+ this_img = self.refer.Imgs[this_img_id[0]]

+

+ #print("this_ref_id", this_ref_id)

+ #print("this_img_id", this_img_id)

+ #print("this_img", this_img)

+ #img = Image.open(os.path.join(self.refer.IMAGE_DIR, this_img['file_name'])).convert("RGB")

+ img = self.hfai_dataset.reader.read_imgs([self.keys[this_img_id[0]]])[0]

+ img = _apply_exif_orientation(img)

+ img = convert_PIL_to_numpy(img, 'RGB')

+ #print(img.shape)

+ img = Image.fromarray(img)

+

+ ref = self.refer.loadRefs(this_ref_id)

+

+ ref_mask = np.array(self.refer.getMask(ref[0])['mask'])

+ annot = np.zeros(ref_mask.shape)

+ annot[ref_mask == 1] = 1

+

+ annot = Image.fromarray(annot.astype(np.uint8), mode="P")

+

+ if self.image_transforms is not None:

+ # resize, from PIL to tensor, and mean and std normalization

+ img, target = self.image_transforms(img, annot)

+

+ if self.eval_mode:

+ embedding = []

+ att = []

+ for s in range(len(self.input_ids[index])):

+ e = self.input_ids[index][s]

+ a = self.attention_masks[index][s]

+ embedding.append(e.unsqueeze(-1))

+ att.append(a.unsqueeze(-1))

+

+ tensor_embeddings = torch.cat(embedding, dim=-1)

+ attention_mask = torch.cat(att, dim=-1)

+ else:

+ choice_sent = np.random.choice(len(self.input_ids[index]))

+ tensor_embeddings = self.input_ids[index][choice_sent]

+ attention_mask = self.attention_masks[index][choice_sent]

+

+ #print(img.shape)

+ return img, target, tensor_embeddings, attention_mask
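The sentence construction in `dataset_refer_bert_concat.py` can be isolated as below. `build_concat_sentences` is a hypothetical helper and the example sentences are invented; the joining logic follows the nested loop (training) and the self-concatenation (eval) in `__init__` above.

```python
def build_concat_sentences(raw_sentences, eval_mode):
    """Hypothetical helper mirroring the concat dataset's sentence construction.

    Training: every ordered pair (a, b) of an object's sentences, including a == b,
    joined as "a, b", giving n * n strings for n sentences.
    Eval: each sentence concatenated with itself, giving n strings.
    """
    if not eval_mode:
        return [a + ', ' + b for a in raw_sentences for b in raw_sentences]
    return [s + ', ' + s for s in raw_sentences]


sents = ["man on left", "guy in red shirt", "leftmost person"]
print(len(build_concat_sentences(sents, eval_mode=False)))  # 9
print(build_concat_sentences(sents, eval_mode=True)[0])     # man on left, man on left
```

For an object with n expressions, training therefore tokenizes and stores n² strings up front in `__init__` (9 for 3 sentences). `max_tokens` is raised to 40 in this file, presumably to fit two sentences; longer pairs are still truncated.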

diff --git a/data/dataset_refer_bert_hfai.py b/data/dataset_refer_bert_hfai.py

new file mode 100644

index 0000000000000000000000000000000000000000..2c0602a43b87e0e12480ad773691aa6d08940c5a

--- /dev/null

+++ b/data/dataset_refer_bert_hfai.py

@@ -0,0 +1,240 @@

+import os

+import sys

+import torch.utils.data as data

+import torch

+from torchvision import transforms

+from torch.autograd import Variable

+import numpy as np

+from PIL import Image

+import torchvision.transforms.functional as TF

+import random

+

+from bert.tokenization_bert import BertTokenizer

+

+import h5py

+from refer.refer import REFER

+

+from args import get_parser

+

+# Dataset configuration initialization

+parser = get_parser()

+args = parser.parse_args()

+

+from hfai.datasets import CocoDetection

+

+from PIL import Image

+import numpy as np

+#from ffrecord.torch import DataLoader,Dataset

+import ffrecord

+

+_EXIF_ORIENT = 274

+def _apply_exif_orientation(image):

+ """

+ Applies the exif orientation correctly.

+

+ This code exists per the bug:

+ https://github.com/python-pillow/Pillow/issues/3973

+ with the function `ImageOps.exif_transpose`. The Pillow source raises errors with

+ various methods, especially `tobytes`

+

+ Function based on:

+ https://github.com/wkentaro/labelme/blob/v4.5.4/labelme/utils/image.py#L59

+ https://github.com/python-pillow/Pillow/blob/7.1.2/src/PIL/ImageOps.py#L527

+

+ Args:

+ image (PIL.Image): a PIL image

+

+ Returns:

+ (PIL.Image): the PIL image with exif orientation applied, if applicable

+ """

+ if not hasattr(image, "getexif"):

+ return image

+

+ try:

+ exif = image.getexif()

+ except Exception: # https://github.com/facebookresearch/detectron2/issues/1885

+ exif = None

+

+ if exif is None:

+ return image

+

+ orientation = exif.get(_EXIF_ORIENT)

+

+ method = {

+ 2: Image.FLIP_LEFT_RIGHT,

+ 3: Image.ROTATE_180,

+ 4: Image.FLIP_TOP_BOTTOM,

+ 5: Image.TRANSPOSE,

+ 6: Image.ROTATE_270,

+ 7: Image.TRANSVERSE,

+ 8: Image.ROTATE_90,

+ }.get(orientation)

+

+ if method is not None:

+ return image.transpose(method)

+ return image

+

+def convert_PIL_to_numpy(image, format):

+ """

+ Convert PIL image to numpy array of target format.

+

+ Args:

+ image (PIL.Image): a PIL image

+ format (str): the format of output image

+

+ Returns:

+ (np.ndarray): also see `read_image`

+ """

+ if format is not None:

+ # PIL only supports RGB, so convert to RGB and flip channels over below

+ conversion_format = format

+ if format in ["BGR", "YUV-BT.601"]:

+ conversion_format = "RGB"

+ image = image.convert(conversion_format)

+ image = np.asarray(image)

+ # PIL squeezes out the channel dimension for "L", so make it HWC

+ if format == "L":

+ image = np.expand_dims(image, -1)

+

+ # handle formats not supported by PIL

+ elif format == "BGR":

+ # flip channels if needed

+ image = image[:, :, ::-1]

+ elif format == "YUV-BT.601":

+ image = image / 255.0

+ image = np.dot(image, np.array([[0.299, 0.587, 0.114], [-0.14713, -0.28886, 0.436], [0.615, -0.51499, -0.10001]]).T)  # BT.601 RGB -> YUV matrix

+

+ return image

+

+#class ReferDataset(data.Dataset):

+class ReferDataset(ffrecord.torch.Dataset):

+

+ def __init__(self,

+ args,

+ image_transforms=None,

+ target_transforms=None,

+ split='train',

+ eval_mode=False):

+

+ self.classes = []

+ self.image_transforms = image_transforms

+ self.target_transform = target_transforms

+ self.split = split

+ self.refer = REFER(args.refer_data_root, args.dataset, args.splitBy)

+

+ self.max_tokens = 20

+

+ ref_ids = self.refer.getRefIds(split=self.split)

+ img_ids = self.refer.getImgIds(ref_ids)

+

+ all_imgs = self.refer.Imgs

+ self.imgs = list(all_imgs[i] for i in img_ids)

+ self.ref_ids = ref_ids

+

+ self.input_ids = []

+ self.attention_masks = []

+ self.tokenizer = BertTokenizer.from_pretrained(args.bert_tokenizer)

+

+ self.eval_mode = eval_mode

+ # if we are testing on a dataset, test all sentences of an object;

+ # o/w, we are validating during training, randomly sample one sentence for efficiency

+ for r in ref_ids:

+ ref = self.refer.Refs[r]

+

+ sentences_for_ref = []

+ attentions_for_ref = []

+

+ for i, (el, sent_id) in enumerate(zip(ref['sentences'], ref['sent_ids'])):

+ sentence_raw = el['raw']

+ attention_mask = [0] * self.max_tokens

+ padded_input_ids = [0] * self.max_tokens

+

+ input_ids = self.tokenizer.encode(text=sentence_raw, add_special_tokens=True)

+

+ # truncation of tokens

+ input_ids = input_ids[:self.max_tokens]

+

+ padded_input_ids[:len(input_ids)] = input_ids

+ attention_mask[:len(input_ids)] = [1]*len(input_ids)

+

+ sentences_for_ref.append(torch.tensor(padded_input_ids).unsqueeze(0))

+ attentions_for_ref.append(torch.tensor(attention_mask).unsqueeze(0))

+

+ self.input_ids.append(sentences_for_ref)

+ self.attention_masks.append(attentions_for_ref)

+

+ split = 'train'

+ print(split)

+ self.hfai_dataset = CocoDetection(split, transform=None)

+ self.keys = {}

+ for i in range(len(self.hfai_dataset.reader.ids)):

+ self.keys[self.hfai_dataset.reader.ids[i]] = i

+

+ def get_classes(self):

+ return self.classes

+

+ def __len__(self):

+ return len(self.ref_ids)

+

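+    def __getitem__(self, indices):

+        # `indices` is a list of indices: each call builds a whole batch and stacks every output along dim 0,

+        # so image_transforms must produce equally sized tensors (e.g. a fixed resize)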

+        img_list = []

+        target_list = []

+        tensor_embedding_list = []

+        attention_mask_list = []

+        for index in indices:

+            this_ref_id = self.ref_ids[index]

+            this_img_id = self.refer.getImgIds(this_ref_id)

+            this_img = self.refer.Imgs[this_img_id[0]]

+

+            #print("this_ref_id", this_ref_id)

+            #print("this_img_id", this_img_id)

+            #print("this_img", this_img)

+            #img = Image.open(os.path.join(self.refer.IMAGE_DIR, this_img['file_name'])).convert("RGB")

+            img = self.hfai_dataset.reader.read_imgs([self.keys[this_img_id[0]]])[0]

+            img = _apply_exif_orientation(img)

+            img = convert_PIL_to_numpy(img, 'RGB')

+            #print(img.shape)

+            img = Image.fromarray(img)

+

+            ref = self.refer.loadRefs(this_ref_id)

+

+            ref_mask = np.array(self.refer.getMask(ref[0])['mask'])

+            annot = np.zeros(ref_mask.shape)

+            annot[ref_mask == 1] = 1

+

+            annot = Image.fromarray(annot.astype(np.uint8), mode="P")

+

+            assert self.image_transforms is not None, "image_transforms is required: img and target must be tensors below"

+            # resize, from PIL to tensor, and mean and std normalization

+            img, target = self.image_transforms(img, annot)

+

+            if self.eval_mode:

+                embedding = []

+                att = []

+                for s in range(len(self.input_ids[index])):

+                    e = self.input_ids[index][s]

+                    a = self.attention_masks[index][s]

+                    embedding.append(e.unsqueeze(-1))

+                    att.append(a.unsqueeze(-1))

+

+                tensor_embeddings = torch.cat(embedding, dim=-1)

+                attention_mask = torch.cat(att, dim=-1)

+            else:

+                choice_sent = np.random.choice(len(self.input_ids[index]))

+                tensor_embeddings = self.input_ids[index][choice_sent]

+                attention_mask = self.attention_masks[index][choice_sent]

+            img_list.append(img.unsqueeze(0))

+            target_list.append(target.unsqueeze(0))

+            tensor_embedding_list.append(tensor_embeddings.unsqueeze(0))

+            attention_mask_list.append(attention_mask.unsqueeze(0))

+

+        img_list = torch.cat(img_list, dim=0)

+        target_list = torch.cat(target_list, dim=0)

+        tensor_embedding_list = torch.cat(tensor_embedding_list, dim=0)

+        attention_mask_list = torch.cat(attention_mask_list, dim=0)

+        #print(img_list.shape, target_list.shape, tensor_embedding_list.shape, attention_mask_list.shape)

+        return img_list, target_list, tensor_embedding_list, attention_mask_list

+        #print(img.shape)

+        #return img, target, tensor_embeddings, attention_mask
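The sentence encoding and batching performed by the ReferDataset above can be sketched in isolation. This is a minimal NumPy sketch, not the repository's code: it assumes the token ids have already been produced by `BertTokenizer.encode` (the ids below are made up, apart from 101/102), and the helper names `pad_and_mask`, `stack_all_sentences` and `stack_batch` are hypothetical. It reproduces the truncate-then-zero-pad scheme with a matching attention mask, the `eval_mode` layout that keeps every sentence along a trailing axis, and the batched `__getitem__` layout that stacks items along dim 0.

```python
import numpy as np

MAX_TOKENS = 20  # matches self.max_tokens in ReferDataset


def pad_and_mask(token_ids, max_tokens=MAX_TOKENS):
    """Truncate/zero-pad one sentence's token ids; return (ids, mask), each shaped (1, max_tokens).

    As in the loop in __init__, truncation simply cuts the list, so a long
    sentence loses its final [SEP] id.
    """
    token_ids = list(token_ids)[:max_tokens]
    padded = [0] * max_tokens  # 0 is the padding id
    mask = [0] * max_tokens    # 1 = real token, 0 = padding
    padded[:len(token_ids)] = token_ids
    mask[:len(token_ids)] = [1] * len(token_ids)
    return np.array(padded)[None, :], np.array(mask)[None, :]


def stack_all_sentences(per_sentence):
    """eval_mode layout: (1, max_tokens) per sentence -> (1, max_tokens, num_sentences)."""
    return np.concatenate([x[..., None] for x in per_sentence], axis=-1)


def stack_batch(per_item):
    """Batched __getitem__ layout: add a leading axis to each item and concatenate along it."""
    return np.concatenate([x[None] for x in per_item], axis=0)


# Hypothetical token ids; 101 and 102 are [CLS] and [SEP] in the bert-base-uncased vocabulary.
ids_a, mask_a = pad_and_mask([101, 2158, 1999, 2417, 102])
ids_b, mask_b = pad_and_mask([101] + [2000] * 30 + [102])  # 32 ids, longer than MAX_TOKENS

print(mask_a.sum(), mask_b.sum())                 # 5 20
print(stack_all_sentences([ids_a, ids_b]).shape)  # (1, 20, 2)
print(stack_batch([ids_a, ids_b]).shape)          # (2, 1, 20)
```

Because `eval_mode` keeps all sentences of a referent, stacking a batch along dim 0 (as the batched `__getitem__` does with `torch.cat`) only succeeds when every referent in the batch has the same number of sentences; training mode sidesteps this by sampling a single sentence per item.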

diff --git a/data/dataset_refer_bert_mixed.py b/data/dataset_refer_bert_mixed.py

new file mode 100644

index 0000000000000000000000000000000000000000..a1c365a82333d96392c28ecdc112b0ac488bef9c

--- /dev/null

+++ b/data/dataset_refer_bert_mixed.py

@@ -0,0 +1,242 @@

+import os

+import sys

+import torch.utils.data as data

+import torch

+from torchvision import transforms

+from torch.autograd import Variable

+import numpy as np

+from PIL import Image

+import torchvision.transforms.functional as TF

+import random

+

+from bert.tokenization_bert import BertTokenizer

+

+import h5py

+from refer.refer import REFER

+

+from args import get_parser

+

+# Dataset configuration initialization

+parser = get_parser()

+args = parser.parse_args()

+

+from hfai.datasets import CocoDetection

+

+from PIL import Image

+import numpy as np

+#from ffrecord.torch import DataLoader,Dataset

+import ffrecord

+import pickle

+

+_EXIF_ORIENT = 274

+def _apply_exif_orientation(image):

+    """

+    Applies the exif orientation correctly.

+

+    This code exists per the bug:

+      https://github.com/python-pillow/Pillow/issues/3973

+    with the function `ImageOps.exif_transpose`. The Pillow source raises errors with

+    various methods, especially `tobytes`

+

+    Function based on:

+      https://github.com/wkentaro/labelme/blob/v4.5.4/labelme/utils/image.py#L59

+      https://github.com/python-pillow/Pillow/blob/7.1.2/src/PIL/ImageOps.py#L527

+

+    Args:

+        image (PIL.Image): a PIL image

+

+    Returns:

+        (PIL.Image): the PIL image with exif orientation applied, if applicable

+    """

+    if not hasattr(image, "getexif"):

+        return image

+

+    try:

+        exif = image.getexif()

+    except Exception:  # https://github.com/facebookresearch/detectron2/issues/1885

+        exif = None

+

+    if exif is None:

+        return image

+

+    orientation = exif.get(_EXIF_ORIENT)

+

+    method = {

+        2: Image.FLIP_LEFT_RIGHT,

+        3: Image.ROTATE_180,

+        4: Image.FLIP_TOP_BOTTOM,

+        5: Image.TRANSPOSE,

+        6: Image.ROTATE_270,

+        7: Image.TRANSVERSE,

+        8: Image.ROTATE_90,

+    }.get(orientation)

+

+    if method is not None:

+        return image.transpose(method)

+    return image

+

+def convert_PIL_to_numpy(image, format):

+    """

+    Convert PIL image to numpy array of target format.

+

+    Args:

+        image (PIL.Image): a PIL image

+        format (str): the format of output image

+

+    Returns:

+        (np.ndarray): also see `read_image`

+    """

+    if format is not None:

+        # PIL only supports RGB, so convert to RGB and flip channels over below

+        conversion_format = format

+        if format in ["BGR", "YUV-BT.601"]:

+            conversion_format = "RGB"

+        image = image.convert(conversion_format)

+    image = np.asarray(image)

+    # PIL squeezes out the channel dimension for "L", so make it HWC

+    if format == "L":

+        image = np.expand_dims(image, -1)

+

+    # handle formats not supported by PIL

+    elif format == "BGR":

+        # flip channels if needed

+        image = image[:, :, ::-1]

+    elif format == "YUV-BT.601":

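+        image = image / 255.0

+        image = np.dot(image, np.array([[0.299, 0.587, 0.114], [-0.14713, -0.28886, 0.436], [0.615, -0.51499, -0.10001]]).T)  # BT.601 RGB -> YUV matrix (detectron2's _M_RGB2YUV)

+

+    return image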

+

+class ReferDataset(data.Dataset):

+#class ReferDataset(ffrecord.torch.Dataset):

+

+    def __init__(self,

+                 args,

+                 image_transforms=None,

+                 target_transforms=None,

+                 split='train',

+                 eval_mode=False):

+

+        self.classes = []

+        self.image_transforms = image_transforms

+        self.target_transform = target_transforms

+        self.split = split

+        self.refer = REFER(args.refer_data_root, args.dataset, args.splitBy)

+

+        self.max_tokens = 20

+

+        ref_ids = self.refer.getRefIds(split=self.split)

+        img_ids = self.refer.getImgIds(ref_ids)

+

+        all_imgs = self.refer.Imgs

+        self.imgs = list(all_imgs[i] for i in img_ids)

+        self.ref_ids = ref_ids

+

+        self.input_ids = []

+        self.attention_masks = []

+        self.tokenizer = BertTokenizer.from_pretrained(args.bert_tokenizer)

+

+        self.eval_mode = eval_mode

+        # if we are testing on a dataset, test all sentences of an object;

+        # o/w, we are validating during training, randomly sample one sentence for efficiency

+        for r in ref_ids:

+            ref = self.refer.Refs[r]

+

+            sentences_for_ref = []