Commit c6faeb4 by SirlyDreamer

Parent(s): c4f49df

VLM

Files changed:

- .gitattributes +12 -0

- LICENSE +21 -0

- README.md +152 -2

- asset/images/人脸.jpg +3 -0

- asset/images/会员卡.jpg +3 -0

- asset/images/会员登记表.jpg +3 -0

- asset/images/前台工作人员.jpg +3 -0

- asset/images/双节棍.jpg +3 -0

- asset/images/手串.jpg +3 -0

- asset/images/手机.jpg +3 -0

- asset/images/油漆桶.jpg +3 -0

- asset/images/烟头.jpg +3 -0

- asset/images/鲸娱秘境1.jpg +3 -0

- asset/images/鲸娱秘境2.jpg +3 -0

- asset/images/鲸娱秘境3.jpg +3 -0

- config/police.yaml +42 -0

- config/taoist.yaml +63 -0

- demo_info.md +0 -0

- gradio_with_state.py +182 -0

- requirements.txt +4 -0

- src/GameMaster.py +256 -0

- src/__init__.py +6 -0

- src/fishTTS.py +165 -0

- src/llm_response.py +60 -0

- src/parse_json.py +68 -0

- src/recognize_from_image_glm.py +128 -0

- src/resize_img.py +22 -0

- test/0630discuss_prompt.txt +18 -0

- test/gradio_interface.py +207 -0

- test/pyproject.toml +15 -0

- test/test_gradio_state.py +16 -0

- test/test_playground.py +28 -0

- test/test_vlm.py +61 -0

- test/trans_image2html.py +14 -0

- uv.lock +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,15 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+asset/images/会员登记表.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/手机.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/油漆桶.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/鲸娱秘境1.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/鲸娱秘境3.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/鲸娱秘境2.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/人脸.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/会员卡.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/前台工作人员.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/双节棍.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/手串.jpg filter=lfs diff=lfs merge=lfs -text
+asset/images/烟头.jpg filter=lfs diff=lfs merge=lfs -text

LICENSE

ADDED

@@ -0,0 +1,21 @@
+MIT License
+
+Copyright (c) 2025 Cheng Li @ SenseTime
+
+Permission is hereby granted, free of charge, to any person obtaining a copy
+of this software and associated documentation files (the "Software"), to deal
+in the Software without restriction, including without limitation the rights
+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
+copies of the Software, and to permit persons to whom the Software is
+furnished to do so, subject to the following conditions:
+
+The above copyright notice and this permission notice shall be included in all
+copies or substantial portions of the Software.
+
+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
+SOFTWARE.

README.md

CHANGED

|

@@ -5,9 +5,159 @@ colorFrom: pink

|

|

| 5 |

colorTo: indigo

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.35.0

|

| 8 |

-

app_file:

|

| 9 |

pinned: false

|

| 10 |

license: gpl-3.0

|

| 11 |

---

|

| 12 |

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 5 |

colorTo: indigo

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.35.0

|

| 8 |

+

app_file: gradio_with_state.py

|

| 9 |

pinned: false

|

| 10 |

license: gpl-3.0

|

| 11 |

---

|

| 12 |

|

| 13 |

+

# 鲸娱秘境-实景AI游戏

|

| 14 |

+

|

| 15 |

+

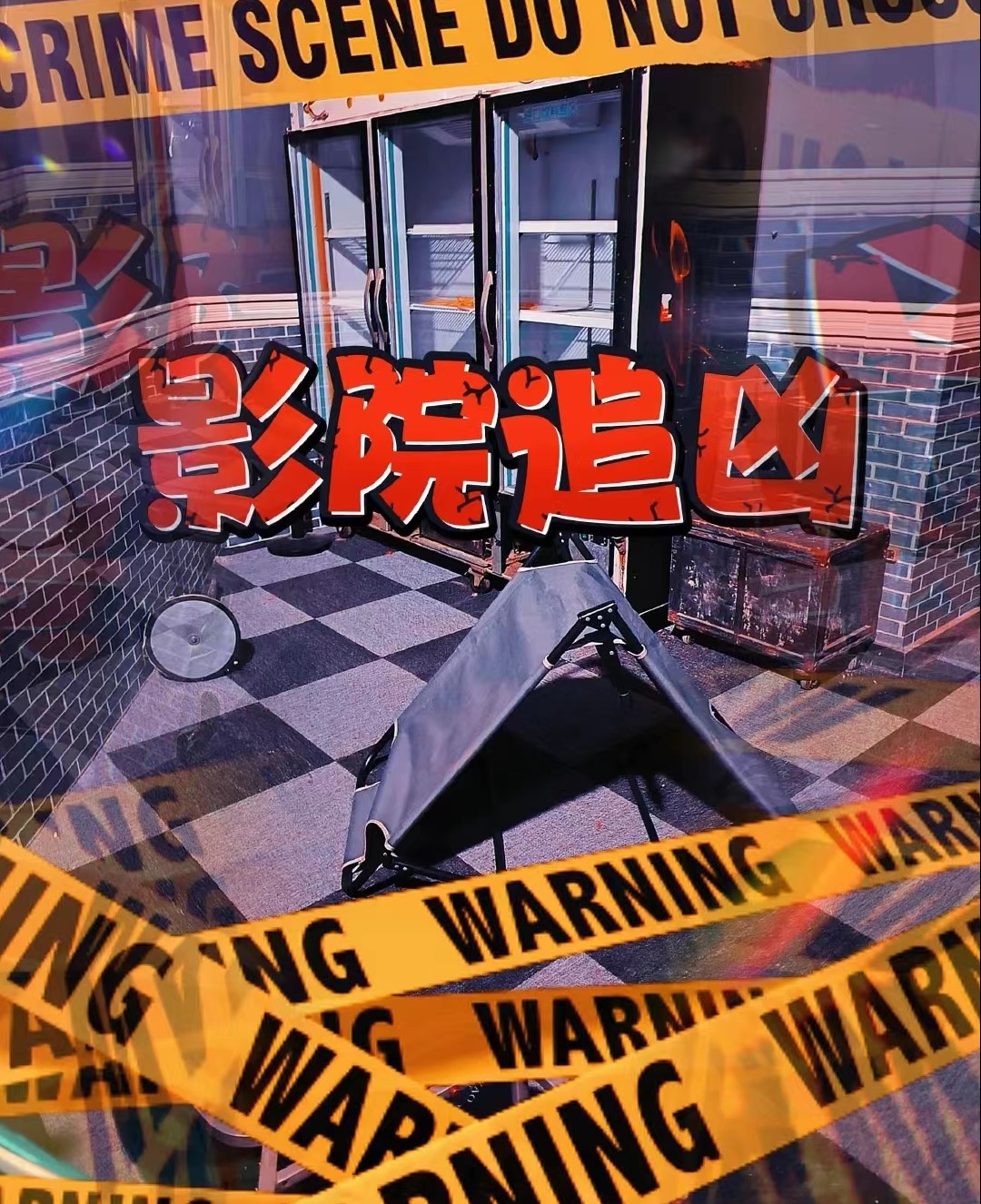

李鲁鲁老师指导的鲸娱秘境队伍在Intel2025创新大赛中的提交,鲸娱秘境是刘济帆经营的在北京望京的AI线下实体密室逃脱

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

<div style="display: flex; align-items: center;">

|

| 19 |

+

<div style="flex: 0 0 300px;">

|

| 20 |

+

<img src="asset/images/鲸娱秘境1.jpg" style="width: 300px;">

|

| 21 |

+

</div>

|

| 22 |

+

<div style="flex: 1; padding-left: 20px;">

|

| 23 |

+

<!-- 在这里添加右侧文本内容 -->

|

| 24 |

+

鲸娱密境AI实景游戏,是由清华-中戏跨学科团队打造的沉浸式娱乐解决方案。项目基于生成式AI技术,通过智能体(AIAgent)重构传统的线下密室与剧本杀产业,已在实际商业场景实现4000+玩家验证。我们试图使用最新的语言模型技术以及创新的运营模式,解决行业痛点:内容生产成本高、人力运营成本高、空间利用率低。鲸娱密境在游戏流程中,使用大量的角色扮演Agent,来代替玩家阅读剧本的方式,向玩家提供信息。

|

| 25 |

+

</div>

|

| 26 |

+

</div>

|

| 27 |

+

|

| 28 |

+

<details>

|

| 29 |

+

<summary>demo开发者: 李鲁鲁, 王莹莹, sirly(黄泓森), 刘济帆</summary>

|

| 30 |

+

- 李鲁鲁负责了gradio大部分的交互和api连接

|

| 31 |

+

- 王莹莹实现了从vlm中抽取物体 并且根据物体生成角色台词

|

| 32 |

+

- 刘济帆提供了角色的剧情设计

|

| 33 |

+

- Sirly完成了OpenVINO的部署

|

| 34 |

+

</details>

|

| 35 |

+

|

| 36 |

+

# 原型项目的动机

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

https://github.com/user-attachments/assets/e2b707b6-dcdf-44de-b43d-e6765945ac38

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

在传统的线下密室中,往往需要玩家通过将特定的物品放到特定的位置来推动剧情。这时如果使用射频装置来进行验证,玩家往往会摸索检查道具中RFID的芯片以及寻找芯片的感应区,这一行为会造成严重的“出戏”。并且,对于错误的道具感应,往往由于主题设计的人力原因,没有过多的反馈。而如果使用人力来进行检验,往往会极大程度地拉高密室的运营成本。在这次比赛的项目中,我们希望借助VLM的泛化能力,能够实现对任意场景中的物品都能够触发对应的反馈。并且,当玩家将任意场景中的物品展示到场景区域的时候,会先由VLM确定物品,然后再触发对应的AIGC的文本。如果物品命中剧情需要的物品列表时,则会进一步推进剧情。借助语言模型的多样化文本的生成能力,可以为场景中的所有道具,都设计匹配的感应语音,以增加游戏的趣味性。项目计划最终也支持在具有OpenVINO的Intel AIPC上运行,以期待可以最终以较小的终端设备形式,加入到实际运营的线下场馆中。

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

# 运行说明

|

| 48 |

+

|

| 49 |

+

在运行之前需要参照.env.example的方式部署.env,对于在线端可以这么设置

|

| 50 |

+

|

| 51 |

+

```bash

|

| 52 |

+

LLM_BACKEND = zhipu

|

| 53 |

+

MODEL_NAME = glm-4-air

|

| 54 |

+

```

|

| 55 |

+

|

| 56 |

+

对于使用openvino本地模型的,使用"openvino",并且需要设置模型名称,在提交视频中使用了Qwen2.5-7B-Instruct-fp16-ov。同时你需要在本地建立openai形式的fastapi,使用8000端口。

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

```bash

|

| 60 |

+

LLM_BACKEND = openvino

|

| 61 |

+

MODEL_NAME = Qwen2.5-7B-Instruct-fp16-ov

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

同时LLM_BACKEND额外还支持openai和siliconflow

|

| 65 |

+

|

| 66 |

+

配置好之后直接运行gradio_with_state.py就可以

|
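The configuration described above could be validated before the app starts. The following is a minimal sketch, not the project's actual loader (src/llm_response.py is not shown in this part of the diff): the function name `read_llm_config`, the returned dict layout, and the `http://localhost:8000/v1` URL are assumptions made for illustration. Only the backend names, the two variable names, and port 8000 come from the README.

```python
import os

# Backend names listed in the README; everything else here is illustrative.
SUPPORTED_BACKENDS = {"zhipu", "openvino", "openai", "siliconflow"}


def read_llm_config(env=None):
    """Validate LLM_BACKEND / MODEL_NAME and return them as a dict."""
    env = os.environ if env is None else env
    backend = env.get("LLM_BACKEND", "").strip().lower()
    model = env.get("MODEL_NAME", "").strip()
    if backend not in SUPPORTED_BACKENDS:
        raise ValueError(f"unsupported LLM_BACKEND: {backend!r}")
    if not model:
        raise ValueError("MODEL_NAME must be set")
    config = {"backend": backend, "model": model}
    if backend == "openvino":
        # Assumption: the local OpenAI-style server is reachable at this URL.
        config["base_url"] = "http://localhost:8000/v1"
    return config
```

Passing a plain dict instead of `os.environ` keeps the check easy to exercise without touching the real environment.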

| 67 |

+

|

| 68 |

+

# 使用VLM和显式COT对广泛物体进行识别

|

| 69 |

+

|

| 70 |

+

在剧本杀场景中,物品识别的挑战在于需要处理高度多样化的物品类型——包括剧情相关的关键道具、环境装饰物品以及玩家携带的意外物品(如手机、个人配饰等)。为解决这一问题,我们创新性地采用了视觉语言模型(VLM)结合显式思维链(Chain-of-Thought, CoT)的技术方案,其核心设计如下:

|

| 71 |

+

|

| 72 |

+

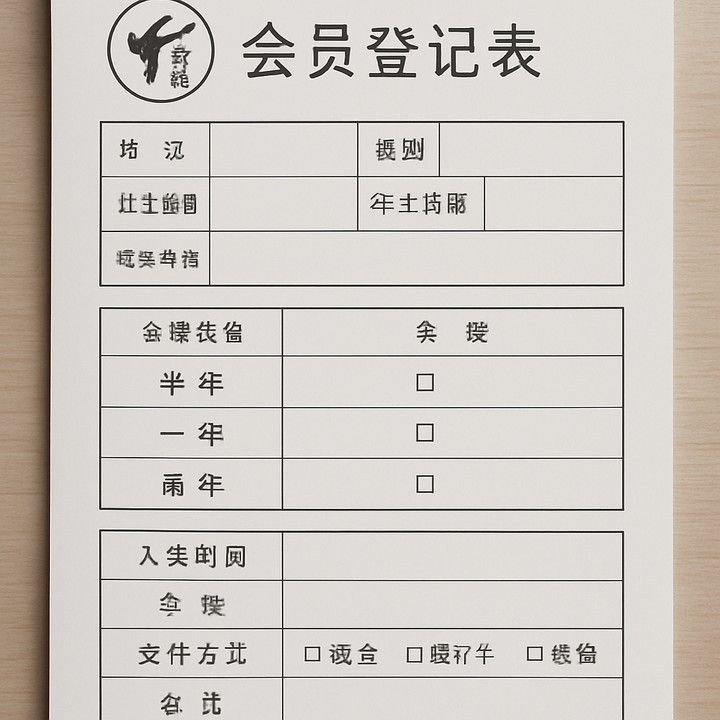

1. **覆盖长尾物品**:传统CV模型难以覆盖剧本杀中可能出现的非常规物品(如"会员登记表"、"烟头"、"双节棍"等)

|

| 73 |

+

2. **语义灵活性**:同一物品可能有多种名称(如"会员卡" vs "VIP卡"),需要动态匹配候选词

|

| 74 |

+

3. **推理可解释性**:通过显式CoT确保模型决策过程透明可追溯

|

| 75 |

+

|

| 76 |

+

我们的核心prompt设计如下

|

| 77 |

+

|

| 78 |

+

```

|

| 79 |

+

请帮助我抽取图片中的主要物体,如果命中candidates中的物品,则按照candidates输出,否则,输出主要物品的名字

|

| 80 |

+

candidates: {candidates}

|

| 81 |

+

Let's think step by step and output in json format, 包括以下字段:

|

| 82 |

+

- caption 详细描述图像

|

| 83 |

+

- major_object 物品名称

|

| 84 |

+

- echo 重复字符串: 我将检查candidates中的物品,如果major_object有同义词在candidates中,则修正为candidate对应的名字,不然则保留major_object

|

| 85 |

+

- fixed_object_name: 检查candidates后修正(如果命中)的名词,如果不命中则重复输出major_object

|

| 86 |

+

```

|

| 87 |

+

|

| 88 |

+

这一段核心代码部分在src/recognize.py中。

|
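To make the prompt's contract concrete, here is a small sketch of how the template might be filled in and how the VLM's JSON reply might be consumed. It is not the code in src/recognize_from_image_glm.py, which is not shown here: `build_prompt`, `pick_object`, and the comma-joined candidate formatting are hypothetical. Only the prompt text and the four JSON field names come from the README.

```python
import json

# Prompt text copied from the README; {candidates} is the only placeholder.
PROMPT_TEMPLATE = """请帮助我抽取图片中的主要物体,如果命中candidates中的物品,则按照candidates输出,否则,输出主要物品的名字
candidates: {candidates}
Let's think step by step and output in json format, 包括以下字段:
- caption 详细描述图像
- major_object 物品名称
- echo 重复字符串: 我将检查candidates中的物品,如果major_object有同义词在candidates中,则修正为candidate对应的名字,不然则保留major_object
- fixed_object_name: 检查candidates后修正(如果命中)的名词,如果不命中则重复输出major_object"""


def build_prompt(candidates):
    """Fill the template; joining with ', ' is an assumption about the format."""
    return PROMPT_TEMPLATE.format(candidates=", ".join(candidates))


def pick_object(reply, candidates):
    """Return (object name, whether it matches a candidate) from a JSON reply."""
    data = json.loads(reply)
    # Prefer the corrected name; fall back to the raw detection if it is missing.
    name = data.get("fixed_object_name") or data.get("major_object", "")
    return name, name in candidates
```

The `echo` field carries no information for the caller; in the prompt it only forces the model to restate the matching rule before it emits `fixed_object_name`.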

+
+# Generating lines for specific items with explicit CoT
+
+We also use an explicit CoT to generate the character's lines for a specific item.
+
+This part lives in the generate_item_response function in GameMaster.py. It uses the following prompt:
+
+```
+该游戏阶段的背景设定:{background}
+对于道具 {item_i} 的回复是 {response_i}
+
+你的剧情设定如下: {current_prompt}
+
+Let's think it step-by-step and output into JSON format,包括下列关键字
+"item_name" - 要针对输出的物品名称{item_name}
+"analysis" - 结合剧情判断剧情中的人物应该进行怎样的输出
+"echo" - 重复下列字符串: 我认为在剧情设定的人物眼里,看到物品 {item_name}时,会说
+"character_response" - 根据人物性格和剧情设定,输出人物对物品 {item_name} 的反应
+```
+
+For example, when the input item is 手机 (phone), the LLM replies:
+
+```json
+{
+    "item_name": "手机",
+    "analysis": "在剧情中,手机作为一个可能的线索,可能会含有凶手的通讯记录或者与受害者最后的联系信息。队长李伟会指示队员们检查手机,以寻找可能的线索,如通话记录、短信、社交媒体应用等。",
+    "echo": "我认为在剧情设定的人物眼里,看到物品 手机时,会说",
+    "character_response": "队长李伟可能会说:'这手机可能是死者最后的通讯工具,检查一下有没有未接电话或者最近的通话记录,看看能否找到凶手的线索。'"
+}
+```

|
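A reply like the one above still has to be turned into the single line the NPC speaks. The sketch below shows one tolerant way to do that, assuming the model may wrap its JSON in a Markdown fence or add text around it. It is an illustration only and not the contents of src/parse_json.py, which is not shown here; `extract_character_response` is a hypothetical name.

```python
import json


def extract_character_response(reply: str) -> str:
    """Pull the character_response string out of an LLM reply.

    The reply may contain extra text or a code fence around the JSON,
    so only the span from the first '{' to the last '}' is parsed.
    """
    start = reply.find("{")
    end = reply.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("no JSON object found in reply")
    data = json.loads(reply[start:end + 1])
    return data["character_response"]
```

Note that `json.loads` rejects a raw line break inside a string value, so a reply whose `analysis` text is wrapped across lines would need cleaning before this step.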

+
+
+# 鲸娱秘境
+
+**鲸娱秘境·现实游戏** Address: 3rd floor, 新辰里, 酒仙桥路 (next to 米瑞酷影城)
+
+鲸娱秘境·现实游戏 was founded in May 2023. The team works on combining games with real-world locations and uses AIGC to build fully immersive game experiences in the real world.
+
+<img src="asset/images/鲸娱秘境1.jpg" style="height: 300px;">
+
+Unlike the enclosed spaces of traditional escape rooms or immersive experiences, every 鲸娱秘境 theme has an open, real-world map. In 《影院追凶》, for example, players go into a real cinema to look for clues and visit nearby shops. 《朝阳浮生记》 moves a business war onto an entire floor of a shopping mall: every shop in the mall is an NPC, and players buy land, trade stocks, and outwit each other inside a real mall.
+
+<img src="asset/images/鲸娱秘境2.jpg" style="height: 300px;">
+
+We also use a range of AI techniques to deepen immersion: for example, an AI plays a witness who talks with players, and a vision model analyzes players' actions and gives timely feedback that moves the plot forward.
+
+<img src="asset/images/鲸娱秘境3.jpg" style="height: 300px;">
+
+This "real-world game" design gives players a more realistic and immersive experience as they explore freely.
+
+
+
+## Detailed TODO
+
+The developers of this project were recruited from the open-source community; the TODO list below records each person's contribution.
+
+- [x] (DONE by 鲁叔, based on 王莹莹's original code) Get an OpenAI-style response working
+- [x] (DONE by 鲁叔) Add config support to GameMaster (one GameMaster loads one YAML file)
+- [x] (DONE by 鲁叔) Prepare a set of item photos and settle the item loading format for GameMaster
+- [x] (DONE by 鲁叔) Add an image upload interface to Gradio and the GM
+- [x] (DONE by 鲁叔) In-story items: debug item submission in the chatbot
+- [x] Submission of out-of-story items in the chatbot
+- [x] (DONE by 鲁叔) Define the item-to-line mapping in YAML
+- [x] (DONE by 王莹莹; prompt and parsing fixes by 鲁叔) Implement the function that generates lines from the prompt and the item
+- [x] (DONE by 鲁叔) Wire up chat history
+- [x] (DONE by 王莹莹; input type fix by 鲁叔) Object recognition through the VLM interface
+- [x] Get speech generation working
+- [x] (DONE by sirly) Connect the LLM to the OpenVINO backend
+- [x] (DONE by sirly) Connect the VLM to the OpenVINO backend
+- [ ] (DONE by sirly) Deploy the Gradio app to ModelScope (魔搭) and Hugging Face
+- [x] (DONE by 鲁叔) Polish the UI
+- [ ] All items are visible in every stage, which feels cluttered; we could restrict each stage to its own set of visible items
+- [ ] Each item currently has a single line that does not depend on the stage; this could later be extended to per-stage, per-item lines (stage-specific responses)
+

asset/images/人脸.jpg

ADDED

|

Git LFS Details

|

asset/images/会员卡.jpg

ADDED

|

Git LFS Details

|

asset/images/会员登记表.jpg

ADDED

|

Git LFS Details

|

asset/images/前台工作人员.jpg

ADDED

|

Git LFS Details

|

asset/images/双节棍.jpg

ADDED

|

Git LFS Details

|

asset/images/手串.jpg

ADDED

|

Git LFS Details

|

asset/images/手机.jpg

ADDED

|

Git LFS Details

|

asset/images/油漆桶.jpg

ADDED

|

Git LFS Details

|

asset/images/烟头.jpg

ADDED

|

Git LFS Details

|

asset/images/鲸娱秘境1.jpg

ADDED

|

Git LFS Details

|

asset/images/鲸娱秘境2.jpg

ADDED

|

Git LFS Details

|

asset/images/鲸娱秘境3.jpg

ADDED

|

Git LFS Details

|

config/police.yaml

ADDED

@@ -0,0 +1,42 @@
+prompt_steps:
+  - prompt: 你是朝阳市刑侦大队第一支队的队长李伟,最近,你正在调查一起发生在朝阳市内,针对于二次元女生的连环凶杀案。就在前天,又有一名喜欢二次元的女生死在了"北投潮街"的一间仓库里。现在,你的队员正在案发现场仓库进行调查。而你,正通过监控远程查看队员们的调查情况。队员们会把一些他们认为重要的,在案发现场找到的物品线索展示给你,你可以根据这些物品线索推测凶手的作案方式,以及凶手究竟是什么样的人,也可以单纯对线索进行描述。
+    conds:
+      - - 烟头
+      - - 会员卡
+      - - 手串
+    welcome_info: 各位调查员你们好,我是朝阳市刑侦大队第一支队的队长李伟。近期,我市发生一系列针对于二次元女生的连环凶杀案,性质极其恶劣。你们现在所在的位置,就是最近一位受害者被发现的案发现场。今天早上,保洁阿姨张芳丽向我们报案。目前,尸体已经被移交到法医这里,尸检结果随后也会发给你们。现在,请你们对案发现场进行调查,我们通过摄像头远程对你们进行协助。如果你们找到觉得可疑的物品或线索,可以把它放到摄像头下,让我更清楚地看到它。但也请注意,对证物要轻拿轻放,千万不要破坏现场证物。
+  - prompt: 你是朝阳市刑侦大队第一支队的队长李伟,最近,你正在调查一起发生在朝阳市内,针对于二次元女生的连环凶杀案。就在前天,又有一名喜欢二次元的女生死在了"北投潮街"的一间仓库里。你的队员们也对案发现场进行了勘察。在案发现场,队员们发现了半支烟头与一个手串,还找到了一张"正心馆"的会员卡。现在,你的队员们正在正心馆跆拳道馆进行调查。而你,正通过监控远程查看队员们的调查情况。队员们会把一些他们认为重要的,在正心馆找到的物品线索展示给你,你可以根据这些物品线索推测凶手的作案方式,以及凶手究竟是什么样的人,也可以单纯对线索进行描述。
+    conds:
+      - - 前台工作人员
+      - - 双节棍
+      - - 会员登记表
+    welcome_info: 各位调查员,看来,你们已经对现场进行了仔细的勘察,你们找到了很重要的线索。凶手可能吸烟,可能在现场遗落了自己的手串,正心馆这个地方也很可疑。接下来,请各位前往正心馆调查,并让我看到正心馆里有哪些可疑的线索。
+  - prompt: 你是朝阳市刑侦大队第一支队的队长李伟,最近,你正在调查一起发生在朝阳市内,针对于二次元女生的连环凶杀案。就在前天,又有一名喜欢二次元的女生死在了"北投潮街"的一间仓库里。你的队员们也对案发现场进行了勘察,又发现了前台工作人员,双节棍,会员登记表这三个线索,现在,你需要根据这些线索,推断凶手的作案方式以及凶手的具体情况。
+    conds: []
+    welcome_info: 如果你们已经对正心馆调查的差不多了,可以回到调查室。根据你们刚刚调查的结果,指认你们认为最有可能是凶手的人!
+
+items:
+  - name: 烟头
+    img_path: asset/images/烟头.jpg
+    text: 现场找到了烟头...? 你们先收好,随后把它交给助理警员,我们会对这个烟头进行检查,看看上面是否存在嫌疑人的DNA。
+  - name: 油漆桶
+    img_path: asset/images/油漆桶.jpg
+    text: 根据商场提供的信息,这个油漆桶已经放在这里很久了,是之前装修时遗留的。
+  - name: 会员卡
+    img_path: asset/images/会员卡.jpg
+    text: 这是"正心馆"的会员卡?据调查,死者并没有办过正心馆的会员,难道,这是凶手行凶时不小心掉落的?这是个值得调查的突破口。
+  - name: 手串
+    img_path: asset/images/手串.jpg
+    text: 这个手串看起来有点年头了,被害人是个小姑娘,肯定不是被害人的。如果它属于凶手,那凶手一定是个上了年纪的男人。
+  - name: 一张人脸
+    img_path: asset/images/人脸.jpg
+    text: 这是案发现场的尸体吗?不对啊,我们已经将尸体带到法医这里了... 啊不好意思,我看错了,原来这是你们的脸,我的调查员们,面色略显苍白啊。
+  - name: 前台工作人员
+    img_path: asset/images/前台工作人员.jpg
+    text: 这位应该就是正心馆的工作人员了,请您配合我们的调查。各位队员,你们也可以向他询问案发时的情况,了解更多线索。
+  - name: 双节棍
+    img_path: asset/images/双节棍.jpg
+    text: 经过法医鉴定,被害的女生也是被钝器砸死的,但现在还没有找到凶器。不知这双节棍是否可以成为凶器?
+  - name: 会员登记表
+    img_path: asset/images/会员登记表.jpg
+    text: 你们可以仔细研究一下这个会员登记表,并询问一下前台工作人员,这里面有没有喜欢带手串,吸烟的中年男人。

config/taoist.yaml

ADDED

@@ -0,0 +1,63 @@
+
+prompt_steps:
+  - prompt: 你在扮演一个在路边摆摊算命的道士,当前阶段主要为路人提供基础命理咨询
+    conds:
+      - - 修仙界的物品 # 示例:储物袋、符纸、罗盘等
+        - 储物袋
+        - 符纸
+        - 罗盘
+        - 玄铁剑
+        - 青木枝
+        - 灵竹芯
+        - 寒潭水
+        - 玉露瓶
+    welcome_info: 小道在此算命,不知阁下是问姻缘、前程?
+  - prompt: 你在扮演已传授《五行锻体诀》的道士,当前阶段需要引导弟子收集金属性灵物(土属性灵玉本道已备)
+    conds:
+      - - 菜刀 # 凡铁打造的普通金属器物
+        - 金锭 # 蕴含金行灵气的灵金属
+        - 玄铁剑 # 高阶金属性法宝
+    welcome_info: 既然这样,我也可以指导你修炼,这是《五行锻体诀》入门篇,先去寻来金属性灵物,本道助你引气入体
+  - prompt: 你在扮演等待弟子凑齐五行灵物的传道者,当前阶段需确认木、水属性灵物已备
+    conds:
+      - - 青木枝 # 百年古木精华
+        - 灵竹芯 # 竹中凝结的木行灵气
+      - - 寒潭水 # 深山寒潭的灵水
+        - 玉露瓶 # 收集晨露的法器(含水性灵气)
+    welcome_info: 不错,金属性灵物找到了!现在需要找木属性和水属性灵物,凑齐了帮你进一步炼制。
+  - prompt: 准备帮你炼制筑基丹,并解答修仙问题
+    conds: []
+    welcome_info: 木属性和水属性灵物都齐了!这就为你炼制筑基丹,有任何修仙问题尽管问。
+
+items:
+  - name: 菜刀
+    img_path: lcoal_data/images/菜刀.jpg
+    text: 根据菜刀反馈算命信息
+  - name: 符纸
+    img_path: lcoal_data/images/符纸.jpg
+    text: 这符纸。。。?你是从哪里得到的?莫非你与我道门有缘?
+  - name: 罗盘
+    img_path: lcoal_data/images/罗盘.jpg
+    text: 这罗盘。。。?莫非你与我道门有缘?
+  - name: 储物袋
+    img_path: lcoal_data/images/储物袋.jpg
+    text: 这储物袋...看来你有奇遇?莫非与我道门有缘?
+  - name: 金锭
+    img_path: lcoal_data/images/金锭.jpg
+    text: 此金锭有灵气,是炼制的好材料!
+  - name: 玄铁剑
+    img_path: lcoal_data/images/玄铁剑.jpg
+    text: 这玄铁剑...竟有高阶金行之气!
+  - name: 青木枝
+    img_path: lcoal_data/images/青木枝.jpg
+    text: 百年古木精华,木属性灵物难得!
+  - name: 灵竹芯
+    img_path: lcoal_data/images/灵竹芯.jpg
+    text: 竹中灵气凝结,木属性正好合用。
+  - name: 寒潭水
+    img_path: lcoal_data/images/寒潭水.jpg
+    text: 深山寒潭之水,水属性灵物已备。
+  - name: 玉露瓶
+    img_path: lcoal_data/images/玉露瓶.jpg
+    text: 晨露法器含水性灵气,甚好!
+

|
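The `conds` field in both YAML files is a list of lists, and the stage texts suggest how it might be read: in the taoist config the third stage asks for one wood item and one water item, and lists two alternatives for each. The sketch below encodes that reading, where a stage is complete when every inner group has at least one submitted item. This is an assumption drawn from the config alone; GameMaster's real stage logic is not shown in this part of the diff, and `step_complete` and `advance` are hypothetical names.

```python
from typing import Iterable


def step_complete(conds: list[list[str]], submitted: Iterable[str]) -> bool:
    """True when every group in conds has at least one submitted item."""
    seen = set(submitted)
    return all(any(item in seen for item in group) for group in conds)


def advance(current_index: int, prompt_steps: list[dict], submitted: Iterable[str]) -> int:
    """Return the index of the stage to play next.

    The last stage has an empty conds list, so it is treated as terminal
    rather than as trivially complete.
    """
    is_last = current_index == len(prompt_steps) - 1
    step = prompt_steps[current_index]
    if not is_last and step_complete(step["conds"], submitted):
        return current_index + 1
    return current_index
```

Under this reading the police config, where each group holds a single item, requires all three clues of a stage before the story moves on.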

demo_info.md

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

gradio_with_state.py

ADDED

@@ -0,0 +1,182 @@
+import gradio as gr
+from src.GameMaster import GameMaster
+import os
+from src.resize_img import resize_image, get_img_html
+from src.fishTTS import get_audio
+
+yaml_path = "config/police.yaml"
+
+def create_game_master():
+    return GameMaster(yaml_path)
+
+class SessionState:
+    def __init__(self):
+        self.game_master = create_game_master()
+        self.item_str_list = self.game_master.get_item_names()
+        self.welcome_info = self.game_master.get_welcome_info()
+
+def callback_generate_audio(chatbot):
+    if len(chatbot) == 0:
+        return None
+    response_message = chatbot[-1][1]
+    audio_path = get_audio(response_message)
+    return gr.update(value=audio_path, autoplay=True)
+
+def chat_submit_callback(user_message, chat_history, state: SessionState):
+    if user_message.strip():
+        user_input, bot_response = state.game_master.submit_chat(user_message)
+        chat_history.append((user_input, bot_response))
+    return chat_history, ""
+
+def item_submit_callback(item_name, chat_history, state: SessionState):
+    if not item_name.strip():
+        return chat_history, ""
+    user_info, response_info = state.game_master.submit_item(item_name)
+    img_path = state.game_master.name2img_path(item_name)
+    if img_path and os.path.exists(img_path):
+        resized_img = resize_image(img_path, max_height=200)
+        img_html = get_img_html(resized_img)
+        user_info = gr.HTML(img_html)
+    chat_history.append((user_info, response_info))
+    return chat_history, ""
+
+def img_submit_callback(image_input, chatbot, state: SessionState):
+    if image_input:
+        resized_img_to_rec = resize_image(image_input, max_height=400)
+        resized_img = resize_image(image_input, max_height=200)
+        img_html = get_img_html(resized_img)
+        user_info, response = state.game_master.submit_image(resized_img_to_rec)
+        chatbot.append((gr.HTML(img_html), response))
+    return chatbot
+
+def update_status_show(state: SessionState):
+    return state.game_master.get_status()
+
+def reload_game(state: SessionState):
+    state.game_master = create_game_master()
+    return [(None, state.game_master.get_welcome_info())], state.game_master.get_status()
+
+css = """
+.chatbot img {
+    max-height: 200px !important;
+    width: auto !important;
+}"""
+
+with gr.Blocks(title="鲸娱秘境-Intel参赛", css=css) as demo:
+    state = gr.State(SessionState())
+
+    with gr.Tabs() as tabs:
+        with gr.TabItem("demo"):
+            gr.Markdown("# 鲸娱秘境-英特尔人工智能创新应用")
+            gr.Markdown('欢迎大家在点评搜索"鲸娱秘境",线上demo为游戏环节一部分,并加入多模态元素')
+
+            with gr.Row():
+                with gr.Column(scale=2):
+
+                    chatbot = gr.Chatbot(label="对话窗口", height=800, value=lambda: [(None, state.value.welcome_info)] if hasattr(state, 'value') else [(None, "")])
+                    user_input = gr.Textbox(label="输入消息", placeholder="请输入您的消息...", interactive=True)
+                    send_btn = gr.Button("发送", variant="primary")
+
+                with gr.Column(scale=1):
+                    with gr.Row():
+                        radio_choices = gr.Radio(label="向NPC提交场景中的物品",
+                                                 choices=[],
+                                                 value="生成描述", interactive=True)
+
+                    with gr.Row():
+                        item_submit_btn = gr.Button("提交场景内的物品", variant="primary")
+
+                    image_input = gr.Image(type="filepath", label="上传图片")
+
+                    with gr.Row():
+                        img_submit_btn = gr.Button("提交图片中的物品", variant="primary")
+
+                    with gr.Row():
+                        reload_btn = gr.Button("重置剧情", variant="primary")
+
+                    with gr.Row():
+                        audio_player = gr.Audio()
+
+            with gr.Accordion("For debug", open=False):
+                with gr.Row():
+                    item_text_to_submit = gr.Textbox(label="直接输入物品名", value="", interactive=True, scale=20)
+                    item_text_submit_btn = gr.Button("提交", variant="primary", scale=1)
+
+                status_display = gr.Textbox(label="agent状态显示", interactive=False, max_lines=3)
+
+            send_btn.click(chat_submit_callback, [user_input, chatbot, state], [chatbot, user_input])
+            user_input.submit(chat_submit_callback, [user_input, chatbot, state], [chatbot, user_input])
+
+            img_submit_btn.click(
+                fn=img_submit_callback,
+                inputs=[image_input, chatbot, state],
+                outputs=[chatbot]
+            ).then(
+                fn=update_status_show,
+                inputs=[state],
+                outputs=[status_display]
+            ).then(
+                fn=callback_generate_audio,
+                inputs=[chatbot],
+                outputs=[audio_player]
+            )
+
+            item_submit_btn.click(
+                fn=item_submit_callback,
+                inputs=[radio_choices, chatbot, state],
+                outputs=[chatbot, radio_choices]
+            ).then(
+                fn=update_status_show,
+                inputs=[state],
+                outputs=[status_display]
+            ).then(
+                fn=callback_generate_audio,
+                inputs=[chatbot],
+                outputs=[audio_player]
+            )
+
+            item_text_submit_btn.click(
+                fn=item_submit_callback,
+                inputs=[item_text_to_submit, chatbot, state],
+                outputs=[chatbot, item_text_to_submit]
+            ).then(
+                fn=update_status_show,
+                inputs=[state],
+                outputs=[status_display]
+            ).then(
+                fn=callback_generate_audio,
+                inputs=[chatbot],
+                outputs=[audio_player]
+            )
+
+            reload_btn.click(
+                fn=reload_game,
+                inputs=[state],
+                outputs=[chatbot, status_display]
+            )
+
+            def update_radio_choices(state: SessionState):
+                return gr.update(choices=state.item_str_list)
+
+            demo.load(
+                fn=update_radio_choices,
+                inputs=[state],
+                outputs=[radio_choices]
+            )
+
+            def update_chatbot(state: SessionState):
+                return gr.update(value=[(None, state.welcome_info)])
+
+            demo.load(
+                fn=update_chatbot,
+                inputs=[state],
+                outputs=[chatbot]
+            )
+
+        with gr.TabItem("Readme"):
+            with open("demo_info.md", "r", encoding="utf-8") as f:
+                readme_content = f.read()
+            gr.Markdown(readme_content)
+
+if __name__ == "__main__":
+    demo.launch(share=True)

requirements.txt

ADDED

@@ -0,0 +1,4 @@
+gradio
+dotenv
+openai
+zhipuai

src/GameMaster.py

ADDED

|

@@ -0,0 +1,256 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

+from .llm_response import get_llm_response
+from .parse_json import parse_json
+# from .recognize_from_image_glm import get_vlm_response
+from .recognize_from_image_glm import get_vlm_response_cot
+class GameMaster:
+
+    def __init__(self, yaml_file_path = None):
+        self.status = set()
+        self.history = []
+
+        self.items = []
+        self.prompt_steps = []
+
+        self.history_messages = []  # past conversation history, stored as text
+
+        self.item_expand_name2name = {}
+
+        self.item2cache_text = {}
+
+        self.current_step = {
+            # default welcome info
+            "welcome_info": "欢迎来到游戏,快来和我一起探索吧",
+            "prompt": "",
+            "conds": []
+        }
+
+        if yaml_file_path is not None:
+            self.prompt_steps, self.items = self.load_yaml(yaml_file_path)
+            if len(self.prompt_steps) > 0:
+                self.current_step = self.prompt_steps[0]
+                self.current_index = 0
+            else:
+                print("没有成功从yaml载入关卡 使用了默认的example NPC")
+            self.item2text = self.load_default_item_text_map( self.items )
+            welcome_message = {
+                "role": "assistant",
+                "content": self.current_step["welcome_info"]
+            }
+            self.history_messages.append(welcome_message)
+        else:
+            self.item2text = self.load_default_item_text_map()
+

+    def load_yaml(self, yaml_file_path):
+        '''
+        Read prompt_steps and items from the YAML file and return them.
+        '''
+        import yaml
+        with open(yaml_file_path, 'r', encoding='utf-8') as f:
+            data = yaml.safe_load(f)
+
+        prompt_steps = data['prompt_steps']
+        items = []
+        for item in data['items']:
+            items.append({
+                'name': item['name'],
+                'text': item['text'],
+                'img_path' : item['img_path']
+            })
+
+        return prompt_steps, items
+
+    def name2img_path(self, name):
+        for item in self.items:
+            if item['name'] == name:
+                return item['img_path']
+        return None
+
+
+    def load_default_item_text_map(self, items = None):
+
+        item2text = {}
+        # Official items should have a standard item-to-text map
+        if items is None:
+            for i in range(10):
+                _key = "物品_" + str(i)
+                _text = "物品_" + str(i) + "提交之后反馈的台词"
+                item2text[_key] = _text
+            return item2text
+        else:
+            for item in items:
+                name = item["name"]
+                text = item["text"]
+                item2text[name] = text
+            return item2text
+

+    def check_conditions(self):
+        current_conditions = self.current_step['conds']
+        if len(current_conditions) == 0:
+            return False
+
+        ans = True
+
+        for condition in current_conditions:
+            condition_flag = False
+            for item in condition:
+                if item in self.status:
+                    condition_flag = True
+            if not condition_flag:
+                return False
+
+        return ans
+
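`check_conditions` treats `conds` as a list of groups: the step advances only when every group has at least one member in `self.status` (an AND of ORs), and an empty `conds` list never advances. A minimal standalone sketch of that rule follows; `conditions_met` and the item names are hypothetical and not part of this commit.

```python
def conditions_met(conds, status):
    """Return True when every group in conds has at least one member in status."""
    if not conds:
        # Mirrors check_conditions: a step without conditions never advances.
        return False
    return all(any(item in status for item in group) for group in conds)


# Hypothetical step: needs (phone OR member_card) AND cigarette_butt.
conds = [["phone", "member_card"], ["cigarette_butt"]]
print(conditions_met(conds, {"phone"}))                          # False
print(conditions_met(conds, {"member_card", "cigarette_butt"}))  # True
```

Writing the rule with `all`/`any` stops at the first failing group, which matches the early `return False` in the method.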

+    def get_item_response(self, item_name):
+        if item_name in self.item_expand_name2name:
+            item_name = self.item_expand_name2name[item_name]
+
+        if item_name not in self.status:
+            self.status.add(item_name)
+
+        next_status_info = ""
+
+        if self.check_conditions():
+            print("进入下一阶段")
+            next_index = self.current_index + 1
+            if next_index < len(self.prompt_steps):
+                self.current_index = next_index
+                self.current_step = self.prompt_steps[self.current_index]
+                next_status_info = "\n" + self.current_step["welcome_info"]
+                self.status = set()
+
+        if item_name in self.item2text:
+            return self.item2text[item_name] + next_status_info
+        elif item_name in self.item2cache_text:
+            return self.item2cache_text[item_name] + next_status_info
+        else:
+            return self.generate_item_response(item_name) + next_status_info
+

+    def generate_item_response(self, item_name):
+        # generate( current_system_prompt, examples_current_conditions, related_words(Rag), random_example )
+        # 1. related_words:
+        background_info = ""
+        for step in self.prompt_steps:
+            background_info += f"该游戏阶段的背景设定: {step['prompt']}\n"
+            background_info += f"该阶段的欢迎语: {step['welcome_info']}\n"
+        for item in self.items:
+            background_info += f"对于该游戏阶段中的关键道具'{item['name']}'的回复是: {item['text']}\n"
+
+        # 2. get current_system_prompt :
+
+        current_system_prompt = self.get_system_prompt()
+
+        system_prompt = f"""
+        你的剧情设定如下:{current_system_prompt}\n
+        这是游戏的背景信息和对剧情推动有关键作用的道具信息:{background_info}
+        """
+        user_prompt = f"""
+        Let's think it step-by-step and output into JSON format,包括下列关键字
+        "item_name" - 要针对输出的物品名称{item_name}
+        "analysis" - 结合剧情判断剧情中的人物应该进行怎样的输出
+        "echo" - 重复下列字符串: 我认为在剧情设定的人物眼里,看到物品 {item_name}时,会说
+        "character_response" - 根据人物性格和剧情设定,输出人物对物品 {item_name} 的反应
+        """
+
+        messages = [
+            {"role": "system", "content": system_prompt},
+            {"role": "user", "content": user_prompt},
+        ]
+        response_text = get_llm_response(messages)
+
+        response_in_dict = parse_json(response_text, forced_keywords=["character_response"])
+
+        if response_in_dict is not None and "character_response" in response_in_dict:
+            response_text = response_in_dict["character_response"]
+        else:
+            response_text = "这是什么?一张不知所云的图片。"
+
+        return response_text
+
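`get_item_response` reads `self.item2cache_text`, but nothing in this diff writes to it, so every unknown item triggers a fresh LLM call. If the intent is to reuse generated replies (an assumption, not something the commit states), a small memoizing wrapper along these lines might work. `ResponseCache` and `fake_generate` are hypothetical names; the real generator would be `generate_item_response`.

```python
class ResponseCache:
    """Memoize per-item replies so each item name is generated at most once."""

    def __init__(self, generate):
        self._generate = generate  # callable: item_name -> reply text
        self._cache = {}

    def get(self, item_name):
        if item_name not in self._cache:
            self._cache[item_name] = self._generate(item_name)
        return self._cache[item_name]


calls = []


def fake_generate(item_name):
    # Hypothetical stand-in for the LLM-backed generate_item_response.
    calls.append(item_name)
    return f"reply for {item_name}"


cache = ResponseCache(fake_generate)
cache.get("phone")
cache.get("phone")
print(len(calls))  # 1
```

One caveat with this design: the fallback string returned when JSON parsing fails probably should not be cached, otherwise a transient failure would stick for the rest of the session.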

+    def get_item_names(self):
+        ans = []
+        for item in self.items:
+            ans.append(item["name"])
+        return ans
+
+    def get_welcome_info(self):
+        return self.current_step["welcome_info"]
+        # return "欢迎来到游戏,这是一个默认信息,之后应该随着GameMaster指定不同的游戏而改变。"
+
+    def extract_object_from_image(self,resized_img):
+        # resized_img is the path to the image
+        candidate_object_list_names = self.get_item_names()
+        str_response = get_vlm_response_cot(resized_img, candidate_object_list_names)
+        # response = get_vlm_response(img_name, candidate_object_list_names)
+        dict_response = parse_json(str_response, forced_keywords=["fixed_object_name","major_object"])
+        print(dict_response)
+        if dict_response is not None and "fixed_object_name" in dict_response:
+            response_text = dict_response["fixed_object_name"]
+        elif dict_response is not None and "major_object" in dict_response:
+            response_text = dict_response["major_object"]
+        else:
+            response_text = "一张不知所云的图片。"
+
+        return response_text
+
+
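`extract_object_from_image` resolves the object name through a fixed priority: `fixed_object_name` first, then `major_object`, then a default string. The same fallback chain can be sketched as a loop over keys; `pick_object_name` is a hypothetical helper, and the sample dictionaries are invented for illustration.

```python
DEFAULT_NAME = "一张不知所云的图片。"


def pick_object_name(parsed, default=DEFAULT_NAME):
    """Return the first available key in priority order, else the default."""
    if parsed is not None:
        for key in ("fixed_object_name", "major_object"):
            if key in parsed:
                return parsed[key]
    return default


print(pick_object_name({"fixed_object_name": "手机", "major_object": "phone"}))  # 手机
print(pick_object_name({"major_object": "phone"}))                               # phone
print(pick_object_name(None))                                                    # 一张不知所云的图片。
```

Keeping the priority order in one tuple makes it easy to add a further fallback key later without another `elif` branch.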

+    def submit_image( self, img_name ):
+        # the img submitted here is an image path
+        object_name = self.extract_object_from_image(img_name)
+        return self.submit_item( object_name )
+
+
+    def submit_item(self, item_name):
+        user_info = "用户提交了物品:" + item_name
+        response_info = self.get_item_response(item_name)
+        self.history.append( {"role": "user", "content": user_info} )
+        self.history.append( {"role": "assistant", "content": response_info} )
+        return user_info, response_info
+
+    def get_chat_response(self, system_prompt, user_input):
+        messages = [
+            {"role": "system", "content": system_prompt}
+        ]
+        max_history_len = min(6, len(self.history))
+        for i in range( max_history_len):
+            messages.append( self.history[-(max_history_len-i)] )
+
+        messages.append({"role": "user", "content": user_input})
+        response = get_llm_response(messages, max_tokens=400)
+        self.history.append( {"role": "user", "content": user_input} )
+        self.history.append( {"role": "assistant", "content": response} )
+        return response
+
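`get_chat_response` sends the system prompt, at most the last six history entries in their original order, and then the new user input. The index arithmetic in the loop is equivalent to a tail slice, as this standalone sketch suggests; `build_messages` and the sample history are hypothetical and not part of this commit.

```python
def build_messages(system_prompt, history, user_input, window=6):
    """Assemble system prompt + last `window` history entries + new user turn."""
    recent = history[-window:] if window > 0 else []
    return (
        [{"role": "system", "content": system_prompt}]
        + recent
        + [{"role": "user", "content": user_input}]
    )


# Hypothetical history of 8 alternating turns.
history = [
    {"role": "user" if i % 2 == 0 else "assistant", "content": f"turn {i}"}
    for i in range(8)
]
messages = build_messages("system text", history, "hello")
print(len(messages))           # 8
print(messages[1]["content"])  # turn 2
```

A slice also handles a history shorter than the window without the explicit `min`, since slicing past the start of a list simply returns the whole list.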

+    def submit_chat(self, user_input):
+        system_prompt = self.get_system_prompt()
+        response = self.get_chat_response(system_prompt, user_input)
+        return user_input, response
+
+    def get_system_prompt(self, status = None):
+        if status is None:
+            status = self.status
+        # In our design, status is a set
+        # Ideally, the status-to-prompt logic should later be configurable from config
+        # return "你是一个助手"
+        return self.current_step["prompt"]
+
+    # def get_chat_response(self, status, user_input):
+    #     # In our design, status is a set
+    #     # Ideally, the status-to-prompt logic should later be configurable from config
+    #     return "你是一个助手"
+
+
+    def get_status(self):
+        # Convert self.status to a string and return it
+        if len(self.status) > 0:
+            return "当前状态:" + ", ".join(self.status)
+        else:
+            return "当前状态:null"
+
+if __name__ == '__main__':
+    yaml_path = "config/police.yaml"
+    gm = GameMaster(yaml_path)
+    print("GameMaster初始化成功!")
+    print("Prompt steps:", gm.prompt_steps)
+    print("Items:", gm.items)
+    print(gm.name2img_path('双节棍'))
+    print(gm.generate_item_response("手机"))

src/__init__.py

ADDED

|

@@ -0,0 +1,6 @@

+from .resize_img import resize_image, get_img_html
+from .llm_response import get_llm_response
+from .parse_json import parse_json
+from .fishTTS import get_audio
+from .GameMaster import GameMaster
+from .recognize_from_image_glm import get_vlm_response_cot

src/fishTTS.py

ADDED

|

@@ -0,0 +1,165 @@

+from pathlib import Path
+from dotenv import load_dotenv
+import os
+import json
+from openai import OpenAI
+import time
+
+class FishTTS:
+    def __init__(self,
+                 model="fishaudio/fish-speech-1.5",
+                 voice="fishaudio/fish-speech-1.5:david",
+                 speed=1.0,
+                 output_format="mp3"):
+        """
+        Initialize the FishTTS instance
+
+        Args:
+            model (str): The model to use for TTS
+            voice (str): The voice to use
+            speed (float): Speech speed (0.5-2.0)
+            output_format (str): Audio format (mp3/wav/pcm/opus)
+        """
+        load_dotenv()
+
+        # Set proxy if needed
+        # os.environ['HTTP_PROXY'] = 'http://localhost:8234'
+        # os.environ['HTTPS_PROXY'] = 'http://localhost:8234'
+
+        # Initialize OpenAI client
+        self.client = OpenAI(
+            api_key=os.getenv('SILICONFLOW_API_KEY'),
+            base_url="https://api.siliconflow.cn/v1"
+        )
+
+        # Store parameters
+        self.model = model
+        self.voice = voice
+        self.speed = speed
+        self.output_format = output_format