Upload 52 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +2 -0

- DEPLOYMENT.md +37 -0

- DEPLOYMENT_en.md +42 -0

- MODEL_LICENSE +65 -0

- PROMPT.md +198 -0

- PROMPT_en.md +198 -0

- README.md +240 -13

- README_en.md +243 -0

- app.py +70 -0

- cli_demo.py +61 -0

- composite_demo/.streamlit/config.toml +2 -0

- composite_demo/README.md +85 -0

- composite_demo/README_en.md +85 -0

- composite_demo/assets/demo.png +0 -0

- composite_demo/assets/emojis.png +0 -0

- composite_demo/assets/heart.png +0 -0

- composite_demo/assets/tool.png +0 -0

- composite_demo/client.py +137 -0

- composite_demo/conversation.py +119 -0

- composite_demo/demo_chat.py +77 -0

- composite_demo/demo_ci.py +327 -0

- composite_demo/demo_tool.py +191 -0

- composite_demo/main.py +56 -0

- composite_demo/requirements.txt +12 -0

- composite_demo/tool_registry.py +109 -0

- langchain_demo/ChatGLM3.py +123 -0

- langchain_demo/README.md +54 -0

- langchain_demo/Tool/Calculator.py +24 -0

- langchain_demo/Tool/Calculator.yaml +10 -0

- langchain_demo/Tool/Weather.py +35 -0

- langchain_demo/Tool/arxiv_example.yaml +10 -0

- langchain_demo/Tool/weather.yaml +10 -0

- langchain_demo/main.py +57 -0

- langchain_demo/requirements.txt +2 -0

- langchain_demo/utils.py +12 -0

- openai_api.py +229 -0

- requirements.txt +14 -0

- resources/WECHAT.md +7 -0

- resources/cli-demo.png +0 -0

- resources/heart.png +0 -0

- resources/tool.png +0 -0

- resources/web-demo.gif +3 -0

- resources/web-demo2.gif +3 -0

- resources/web-demo2.png +0 -0

- resources/wechat.jpg +0 -0

- tool_using/README.md +74 -0

- tool_using/README_en.md +75 -0

- tool_using/cli_demo_tool.py +60 -0

- tool_using/openai_api_demo.py +57 -0

- tool_using/tool_register.py +115 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

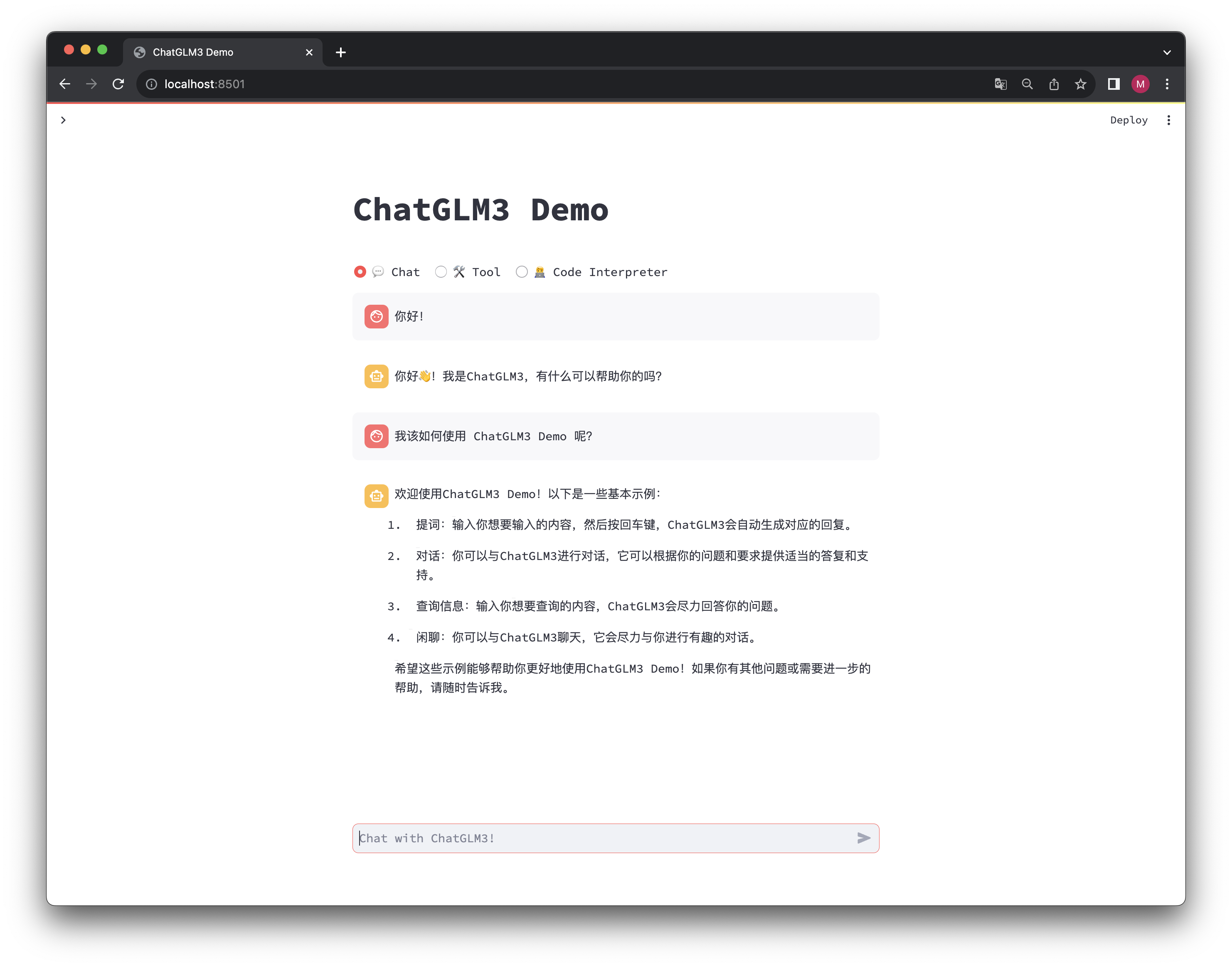

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

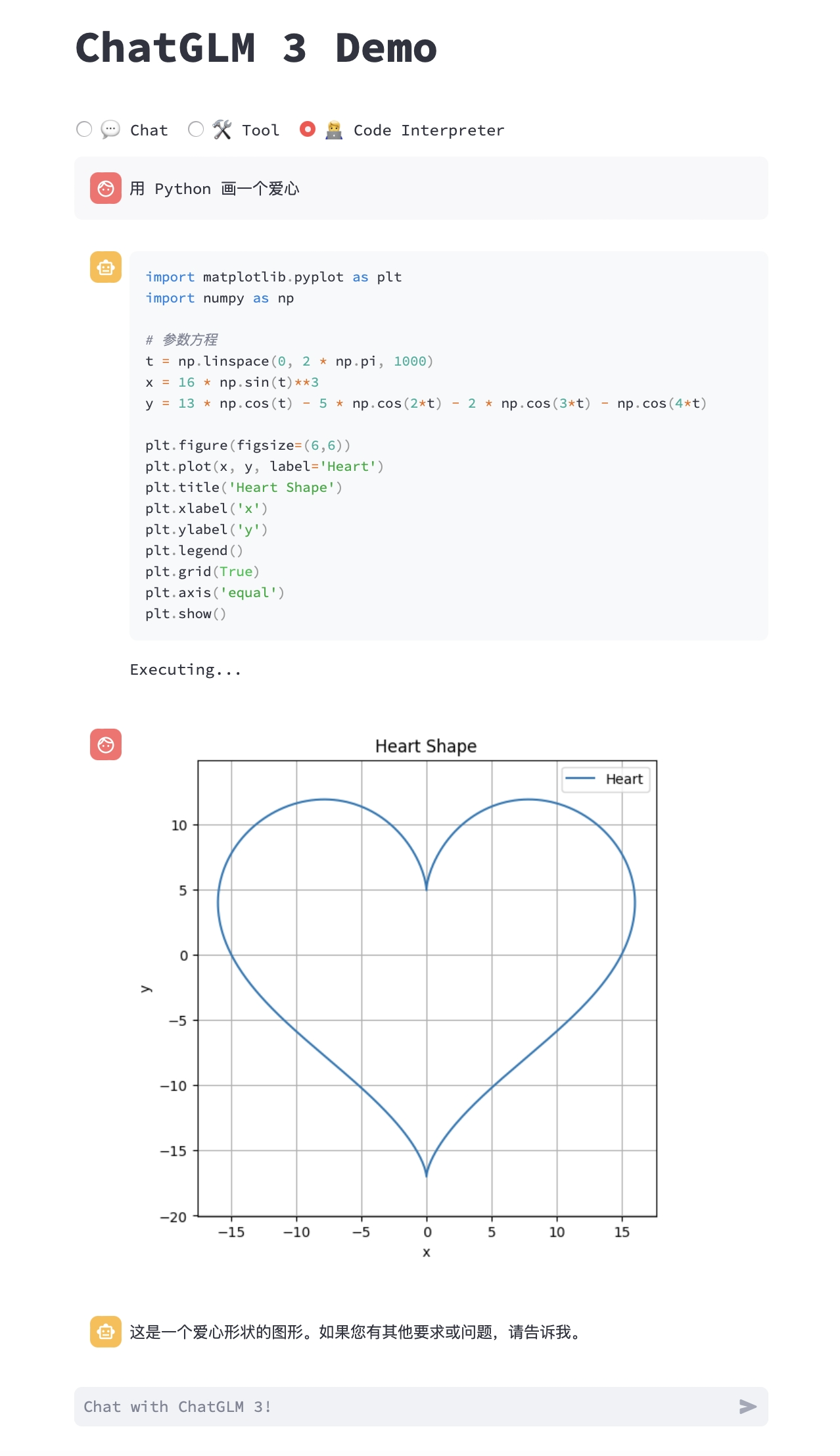

+

resources/web-demo.gif filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

resources/web-demo2.gif filter=lfs diff=lfs merge=lfs -text

|

DEPLOYMENT.md

ADDED

@@ -0,0 +1,37 @@

## Low-Cost Deployment

### Model Quantization

By default, the model is loaded in FP16 precision, and running the code above requires roughly 13GB of GPU memory. If your GPU memory is limited, you can try loading the model in quantized form, as follows:

```python
from transformers import AutoModel

model = AutoModel.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True).quantize(4).cuda()
```

Quantization causes some loss in model quality. In our tests, ChatGLM3-6B still generates natural, fluent text under 4-bit quantization.
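
The memory figures in this document follow roughly from the number of bytes stored per parameter. The sketch below estimates the memory taken by the weights alone, assuming about 6.2 billion parameters for ChatGLM3-6B (an assumed figure, not stated in this document). It ignores activations, the KV cache, framework overhead, and any layers that may stay unquantized, so real usage is higher.

```python
# Approximate bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,   # e.g. the .float() CPU path
    "fp16": 2.0,   # the default GPU load
    "int8": 1.0,
    "int4": 0.5,   # e.g. .quantize(4)
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the memory (decimal GB) occupied by the weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Assumption: ChatGLM3-6B has roughly 6.2 billion parameters.
NUM_PARAMS = 6.2e9

for precision in ("fp32", "fp16", "int8", "int4"):
    print(f"{precision}: ~{weight_memory_gb(NUM_PARAMS, precision):.1f} GB")
```

Under that assumption, FP16 weights come to about 12.4 GB, consistent with the roughly 13GB quoted above once runtime overhead is added, while 4-bit weights come to about 3.1 GB before overhead.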

### CPU Deployment

If you have no GPU, you can also run inference on the CPU, though it will be slower. Usage is as follows (requires roughly 32GB of RAM):

```python
from transformers import AutoModel

model = AutoModel.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True).float()
```

### Mac Deployment

On Macs with Apple Silicon or an AMD GPU, you can use the MPS backend to run ChatGLM3-6B on the GPU. Install PyTorch-Nightly by following Apple's [official instructions](https://developer.apple.com/metal/pytorch) (the correct version number should look like 2.x.x.dev2023xxxx, not 2.x.x).

Currently, only [loading the model locally](README.md#从本地加载模型) is supported on macOS. Change the model loading in the code to load from a local path and use the MPS backend:

```python
from transformers import AutoModel

model = AutoModel.from_pretrained("your local path", trust_remote_code=True).to('mps')
```

Loading the half-precision ChatGLM3-6B model requires roughly 13GB of memory. On machines with less memory (such as a MacBook Pro with 16GB of RAM), the system falls back to virtual memory on disk when free memory runs short, which slows inference down severely.

### Multi-GPU Deployment

If you have multiple GPUs but none of them has enough memory to hold the complete model, you can split the model across several GPUs. First install accelerate with `pip install accelerate`, then load the model as follows:

```python
from utils import load_model_on_gpus

model = load_model_on_gpus("THUDM/chatglm3-6b", num_gpus=2)
```

This deploys the model across two GPUs for inference. You can change `num_gpus` to the number of GPUs you want to use. By default the model is split evenly; you can also pass the `device_map` argument to specify the placement yourself.
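
An even split amounts to assigning the transformer layers to GPUs in contiguous blocks, which is the kind of mapping a `device_map` expresses. The sketch below builds such a dictionary. The module names (`transformer.encoder.layers.{i}` and the others) and the layer count of 28 are illustrative assumptions, not taken from the repository's `load_model_on_gpus`; check the real module names of the loaded model (for example with `model.named_modules()`) before passing a map like this.

```python
from collections import Counter
from typing import Dict


def even_device_map(num_layers: int, num_gpus: int) -> Dict[str, int]:
    """Spread transformer layers over GPUs in contiguous, near-equal blocks.

    Module names are hypothetical; adapt them to the actual model.
    """
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    # Assumption: embedding and output modules live on GPU 0.
    device_map = {
        "transformer.embedding": 0,
        "transformer.rotary_pos_emb": 0,
        "transformer.encoder.final_layernorm": 0,
        "transformer.output_layer": 0,
    }
    base, extra = divmod(num_layers, num_gpus)
    layer = 0
    for gpu in range(num_gpus):
        # The last `extra` GPUs take one more layer, since GPU 0 already holds the embeddings.
        count = base + (1 if gpu >= num_gpus - extra else 0)
        for _ in range(count):
            device_map[f"transformer.encoder.layers.{layer}"] = gpu
            layer += 1
    return device_map


dm = even_device_map(num_layers=28, num_gpus=3)
layers_per_gpu = Counter(v for k, v in dm.items() if k.startswith("transformer.encoder.layers."))
print(dict(layers_per_gpu))  # {0: 9, 1: 9, 2: 10}
```

A map of this shape is what the `device_map` argument mentioned above would take, assuming the module names match.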

DEPLOYMENT_en.md

ADDED

@@ -0,0 +1,42 @@

## Low-Cost Deployment

### Model Quantization

By default, the model is loaded with FP16 precision, and running the code above requires about 13GB of VRAM. If your GPU's VRAM is limited, you can try loading the model in quantized form, as follows:

```python
from transformers import AutoModel

model = AutoModel.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True).quantize(4).cuda()
```

Quantization causes some loss in model quality. In testing, ChatGLM3-6B still generates natural, fluent text under 4-bit quantization.

### CPU Deployment

If you don't have GPU hardware, you can also run inference on the CPU, but it will be slower. Usage is as follows (requires about 32GB of memory):

```python
from transformers import AutoModel

model = AutoModel.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True).float()
```

### Mac Deployment

For Macs equipped with Apple Silicon or AMD GPUs, the MPS backend can be used to run ChatGLM3-6B on the GPU. Refer to Apple's [official instructions](https://developer.apple.com/metal/pytorch) to install PyTorch-Nightly (the correct version number should be 2.x.x.dev2023xxxx, not 2.x.x).

Currently, only [loading the model locally](README_en.md#load-model-locally) is supported on macOS. Change the model loading in the code to load from a local path and use the MPS backend:

```python
from transformers import AutoModel

model = AutoModel.from_pretrained("your local path", trust_remote_code=True).to('mps')
```

Loading the half-precision ChatGLM3-6B model requires about 13GB of memory. Machines with less memory (such as a MacBook Pro with 16GB) fall back to virtual memory on disk when free memory runs short, which slows inference down significantly.
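
The deployment options above differ mainly in where the model goes and at what precision: FP16 on a CUDA GPU, half precision with `.to('mps')` on Apple hardware, and `.float()` (FP32) on the CPU. The helper below sketches that choice in plain Python. It is hypothetical and not part of the repository; in real code the two flags would typically come from `torch.cuda.is_available()` and `torch.backends.mps.is_available()`.

```python
from typing import NamedTuple


class Placement(NamedTuple):
    device: str  # target device string, e.g. for .to(...)
    dtype: str   # precision the weights should use


def choose_placement(cuda_available: bool, mps_available: bool) -> Placement:
    """Pick a device/precision pair in the order CUDA, then MPS, then CPU."""
    if cuda_available:
        return Placement("cuda", "float16")  # default FP16 GPU load (~13GB of VRAM)
    if mps_available:
        return Placement("mps", "float16")   # half precision on Apple GPUs (~13GB of memory)
    return Placement("cpu", "float32")       # .float() on the CPU (~32GB of RAM)


print(choose_placement(cuda_available=False, mps_available=True))
```

Keeping the decision in one function makes the fallback order explicit and easy to test without a GPU.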

### Multi-GPU Deployment

If you have multiple GPUs but none of them has enough VRAM to hold the complete model, you can split the model across several GPUs. First install accelerate with `pip install accelerate`, then load the model as follows:

```python
from utils import load_model_on_gpus

model = load_model_on_gpus("THUDM/chatglm3-6b", num_gpus=2)
```

This deploys the model on two GPUs for inference. You can change `num_gpus` to the number of GPUs you want to use. By default the model is split evenly, but you can also pass the `device_map` parameter to specify the placement yourself.

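MODEL_LICENSE

ADDED

@@ -0,0 +1,65 @@

The ChatGLM3-6B License

1. Definitions

"Licensor" means the ChatGLM3-6B model team that distributes its Software.

"Software" means the ChatGLM3-6B model parameters made available under this license.

2. License Grant

Subject to the terms and conditions of this license, the Licensor hereby grants you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable, royalty-free copyright license.

The above copyright notice and this license notice shall be included in all copies or substantial portions of the Software.

3. Restrictions

You may not use, copy, modify, merge, publish, distribute, reproduce, or create derivative works of the Software, in whole or in part, for any military or illegal purpose.

You may not use the Software for any act that endangers national security and national unity, harms the public interest of society, or infringes upon personal rights and interests.

4. Disclaimer

The Software is provided "as is", without warranty of any kind, express or implied, including but not limited to the warranties of merchantability, fitness for a particular purpose, and non-infringement. In no event shall the authors or copyright holders be liable for any claim, damages, or other liability, whether in an action of contract, tort, or otherwise, arising from, out of, or in connection with the Software or the use of or other dealings in the Software.

5. Limitation of Liability

Except to the extent prohibited by applicable law, in no event and under no legal theory, whether based in tort, negligence, contract, liability, or otherwise, shall any Licensor be liable to you for any direct, indirect, special, incidental, exemplary, or consequential damages, or any other commercial losses, even if the Licensor has been advised of the possibility of such damages.

6. Dispute Resolution

This license shall be governed by and construed in accordance with the laws of the People's Republic of China. Any dispute arising from or in connection with this license shall be submitted to the Haidian District People's Court in Beijing.

Please note that the license may be updated to a more comprehensive version. For any questions regarding the license and copyright, please contact us at [email protected].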

1. Definitions

“Licensor” means the ChatGLM3-6B Model Team that distributes its Software.

“Software” means the ChatGLM3-6B model parameters made available under this license.

2. License Grant

Subject to the terms and conditions of this License, the Licensor hereby grants to you a non-exclusive, worldwide, non-transferable, non-sublicensable, revocable, royalty-free copyright license to use the Software.

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

3. Restriction

You will not use, copy, modify, merge, publish, distribute, reproduce, or create derivative works of the Software, in whole or in part, for any military, or illegal purposes.

You will not use the Software for any act that may undermine China's national security and national unity, harm the public interest of society, or infringe upon the rights and interests of human beings.

4. Disclaimer

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

5. Limitation of Liability

EXCEPT TO THE EXTENT PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL THEORY, WHETHER BASED IN TORT, NEGLIGENCE, CONTRACT, LIABILITY, OR OTHERWISE WILL ANY LICENSOR BE LIABLE TO YOU FOR ANY DIRECT, INDIRECT, SPECIAL, INCIDENTAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES, OR ANY OTHER COMMERCIAL LOSSES, EVEN IF THE LICENSOR HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

6. Dispute Resolution

This license shall be governed and construed in accordance with the laws of People’s Republic of China. Any dispute arising from or in connection with this License shall be submitted to Haidian District People's Court in Beijing.

Note that the license is subject to update to a more comprehensive version. For any questions related to the license and copyright, please contact us at [email protected].

PROMPT.md

ADDED

@@ -0,0 +1,198 @@

## ChatGLM3 Dialogue Format

To prevent injection attacks through user input, and to unify the input of tasks such as Code Interpreter and Tool & Agent, ChatGLM3 adopts a new dialogue format.

### Conventions

#### Overall Structure

A ChatGLM3 dialogue consists of several messages, each made up of a header and content. A typical multi-turn dialogue is structured as follows:

```text
<|system|>
You are ChatGLM3, a large language model trained by Zhipu.AI. Follow the user's instructions carefully. Respond using markdown.
<|user|>
Hello
<|assistant|>
Hello, I'm ChatGLM3. What can I assist you today?
```
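
To make the layout concrete, the sketch below renders a list of messages into the text form shown above: one header line per message, followed by its content. It only builds a human-readable string. In the model, the role markers are special tokens that cannot be produced by encoding plain text, so this string is for illustration and should not be passed to the tokenizer as-is. The `render_dialogue` name and the message dict shape are assumptions.

```python
from typing import Dict, List


def render_dialogue(messages: List[Dict[str, str]]) -> str:
    """Render messages as text: a `<|role|>{metadata}` header line, then the content."""
    lines = []
    for message in messages:
        # Header line: role marker plus optional plain-text metadata (empty if absent).
        lines.append(f"<|{message['role']}|>{message.get('metadata', '')}")
        lines.append(message["content"])
    return "\n".join(lines)


dialogue = [
    {"role": "system", "content": "You are ChatGLM3, a large language model trained by Zhipu.AI. "
                                  "Follow the user's instructions carefully. Respond using markdown."},
    {"role": "user", "content": "Hello"},
    {"role": "assistant", "content": "Hello, I'm ChatGLM3. What can I assist you today?"},
]
print(render_dialogue(dialogue))
```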

#### Chat Header

A chat header occupies one complete line, formatted as:

```text
<|role|>{metadata}
```

Here, `<|role|>` is represented by a special token, which the tokenizer cannot produce by encoding plain text; this prevents injection. The metadata part is plain text and optional.

* `<|system|>`: system information. By design it can be interspersed throughout the dialogue, **but it is currently only allowed at the beginning**
* `<|user|>`: the user
  - Multiple consecutive messages from `<|user|>` never occur
* `<|assistant|>`: the AI assistant
  - Must be preceded by a message from `<|user|>`
* `<|observation|>`: a result returned from an external source
  - Must come after a message from `<|assistant|>`
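
The ordering rules above can be checked mechanically. The sketch below is a hypothetical validator, not part of the ChatGLM3 code. It takes the sequence of roles in a dialogue and reports the first rule that is broken. It interprets "preceded by a user message" as "some earlier message came from the user", and "after an assistant message" as "immediately after", which matches the examples in this file but is an assumption.

```python
from typing import List, Optional

KNOWN_ROLES = {"system", "user", "assistant", "observation"}


def check_roles(roles: List[str]) -> Optional[str]:
    """Return a description of the first violated rule, or None if the sequence is valid."""
    seen_user = False
    for i, role in enumerate(roles):
        prev = roles[i - 1] if i > 0 else None
        if role not in KNOWN_ROLES:
            return f"message {i}: unknown role {role!r}"
        if role == "system" and i != 0:
            return f"message {i}: system may only appear at the beginning"
        if role == "user" and prev == "user":
            return f"message {i}: consecutive user messages"
        if role == "assistant" and not seen_user:
            return f"message {i}: assistant before any user message"
        if role == "observation" and prev != "assistant":
            return f"message {i}: observation must follow an assistant message"
        seen_user = seen_user or role == "user"
    return None


# Role sequence of the tool-calling example in this file; prints None (valid).
print(check_roles(["system", "user", "assistant", "assistant", "observation", "assistant"]))
```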

### Example Scenarios

#### Multi-turn Dialogue

* Only three roles are used: `<|user|>`, `<|assistant|>`, and `<|system|>`.

```text
<|system|>
You are ChatGLM3, a large language model trained by Zhipu.AI. Follow the user's instructions carefully. Respond using markdown.
<|user|>
Hello
<|assistant|>
Hello, I'm ChatGLM3. What can I assist you today?
```

#### Tool Calling

````
<|system|>
Answer the following questions as best as you can. You have access to the following tools:
[
    {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The city and state, e.g. San Francisco, CA",
                },
                "unit": {"type": "string"},
            },
            "required": ["location"],
        },
    }
]
<|user|>
What's the weather like in Beijing today?
<|assistant|>
Sure, let's check today's weather.
<|assistant|>get_current_weather
```python
tool_call(location="beijing", unit="celsius")
```
<|observation|>
{"temperature": 22}
<|assistant|>
According to the query result, the temperature in Beijing today is 22 degrees Celsius.
````
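
In the tool-calling example, the model puts the tool name in the `<|assistant|>` metadata and the arguments in a Python `tool_call(...)` expression. One way to recover the arguments without executing model output is to parse the expression with Python's `ast` module and accept only literal keyword arguments, as sketched below. The function name and the keyword-only restriction are assumptions; this is not necessarily how the ChatGLM3 demos handle it.

```python
import ast
from typing import Any, Dict


def parse_tool_call(code: str) -> Dict[str, Any]:
    """Extract keyword arguments from a single `tool_call(...)` expression without running it."""
    call = ast.parse(code.strip(), mode="eval").body
    if not (isinstance(call, ast.Call)
            and isinstance(call.func, ast.Name)
            and call.func.id == "tool_call"):
        raise ValueError("expected a single tool_call(...) expression")
    if call.args:
        raise ValueError("positional arguments are not supported")
    # literal_eval only accepts literals (strings, numbers, lists, dicts, ...), so no code runs.
    return {kw.arg: ast.literal_eval(kw.value) for kw in call.keywords}


args = parse_tool_call('tool_call(location="beijing", unit="celsius")')
print(args)  # {'location': 'beijing', 'unit': 'celsius'}
```

Rejecting anything but a plain `tool_call` with literal keywords keeps a malformed or malicious completion from turning into executed code.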

#### Code Execution

* Four roles are used: `<|user|>`, `<|assistant|>`, `<|system|>`, and `<|observation|>`. The only metadata used with `<|assistant|>` is `interpreter`.

`````text
<|system|>
You are an intelligent AI assistant named ChatGLM3. You are connected to a computer, but note that you cannot access the internet. When using Python to solve tasks, you can run code and get the results; if the results contain errors, you should improve the code as much as possible. You can process files that users upload to the computer; the default storage path for files is /mnt/data/.
<|user|>
#File: /mnt/data/metadata.jsonl
#Size: 35380
#File uploaded
Are there any missing values or outliers in the file?
<|assistant|>
First, I will read the file you provided and look at its contents to determine whether there are any missing values or outliers.

Let's start by loading the file:
<|assistant|>interpreter
```python
import json

# Load the uploaded file
data = []
with open("/mnt/data/metadata.jsonl", 'r') as file:
    for line in file:
        data.append(json.loads(line))

# Display the first few records to get a sense of the data
data[:5]
```
<|observation|>
```result
[{'file_name': 'aceinthehole.png',
  'name': 'Ace in the Hole',
  'type': 'survivor',
  'description': 'Lady Luck always seems to be throwing something good your way.'},
 {'file_name': 'adrenaline.png',
  'name': 'Adrenaline',
  'type': 'survivor',
  'description': 'You are fuelled by unexpected energy when on the verge of escape.'},
 {'file_name': 'aftercare.png',
  'name': 'Aftercare',
  'type': 'survivor',
  'description': "Unlocks potential in one's aura reading ability."},
 {'file_name': 'agitation.png',
  'name': 'Agitation',
  'type': 'killer',
  'description': 'You get excited in anticipation of hooking your prey.'},
 {'file_name': 'alert.png',
  'name': 'Alert',
  'type': 'survivor',
  'description': 'Your acute senses are on high alert.'}]
```
<|assistant|>
The file appears to contain metadata about a set of entries. Each entry has the following fields:

- `file_name`: the file name
- `name`: the name
- `type`: the type (e.g. "survivor" or "killer")
- `description`: the description

Next, I will check these fields for missing values.
<|assistant|>interpreter
```python
# Check for missing values in each column
missing_values = {key: sum(1 for item in data if key not in item) for key in data[0].keys()}

missing_values
```
<|observation|>
```result
{'file_name': 0, 'name': 0, 'type': 0, 'description': 0}
```
<|assistant|>
None of the fields has missing values, which means every entry is complete.

Next, we can check for potential outliers. This usually requires deeper analysis and knowledge of the data, but I can start with some basic statistics and distributions. To do this, we can look at the value distribution of the "type" field to see whether there are any unexpected categories.
<|assistant|>interpreter
```python
# Check the distribution of values in the 'type' column
type_distribution = {key: sum(1 for item in data if item['type'] == key) for key in set(item['type'] for item in data)}

type_distribution
```
<|observation|>
```result
{'survivor': 116, 'killer': 103}
```
<|assistant|>
The "type" field has two values: "survivor" and "killer". "survivor" has 116 entries, while "killer" has 103.

From this preliminary check, we found no obvious outliers.

If you have specific criteria or other fields you want checked, I can provide a more detailed analysis. What else would you like me to check?
<|user|>
Draw a heart for me
<|assistant|>interpreter
```python
import numpy as np
import matplotlib.pyplot as plt

# Parametric equations for a heart shape
def heart(t):
    x = 16 * np.sin(t) ** 3
    y = 13 * np.cos(t) - 5 * np.cos(2 * t) - 2 * np.cos(3 * t) - np.cos(4 * t)
    return x, y

t = np.linspace(0, 2 * np.pi, 1000)
x, y = heart(t)

plt.figure(figsize=(6, 6))
plt.plot(x, y, color='red')
plt.axis('equal')
plt.axis('off')
plt.show()
```
<|observation|>
```result
【image】
```
<|assistant|>
This is a heart shape. I described it with parametric equations and drew it with matplotlib. If you have any other needs or questions, feel free to let me know.
<|user|> # End
`````
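
In the code-execution format, each `<|assistant|>interpreter` message carries its program in a fenced block tagged `python`, and the execution output comes back in an `<|observation|>` message as a fenced block tagged `result`. Below is a minimal sketch of pulling the code out of such a message with a regular expression. The helper name and the exact pattern are assumptions, and a real runner would also need sandboxing before executing anything.

```python
import re
from typing import List

# Captures the body of each python-tagged fence (non-greedy, spanning lines).
FENCE_RE = re.compile(r"```python\n(.*?)\n```", re.DOTALL)


def extract_code_blocks(message: str) -> List[str]:
    """Return the bodies of all python-tagged fenced blocks in an interpreter message."""
    return FENCE_RE.findall(message)


message = "```python\nimport json\n\ndata = []\n```"
print(extract_code_blocks(message))
```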

PROMPT_en.md

ADDED

@@ -0,0 +1,198 @@

## ChatGLM3 Chat Format

To prevent injection attacks through user input, and to unify the input of tasks such as Code Interpreter and Tool & Agent, ChatGLM3 adopts a new dialogue format.

### Conventions

#### Overall Structure

A ChatGLM3 dialogue consists of several messages, each of which contains a header and content. A typical multi-turn dialogue is structured as follows:

```text
<|system|>
You are ChatGLM3, a large language model trained by Zhipu.AI. Follow the user's instructions carefully. Respond using markdown.
<|user|>
Hello
<|assistant|>
Hello, I'm ChatGLM3. What can I assist you today?
```

#### Chat Header

The chat header occupies one complete line, formatted as:

```text
<|role|>{metadata}
```

Here, the `<|role|>` part is represented by a special token, which the tokenizer cannot produce by encoding plain text; this prevents injection attacks. The `metadata` part is plain text and optional.

* `<|system|>`: system information. By design it can be interspersed throughout the dialogue, **but it currently may only appear at the beginning**
* `<|user|>`: the user
  - Two or more consecutive messages from `<|user|>` never occur
* `<|assistant|>`: the AI assistant
  - Must be preceded by a message from `<|user|>`
* `<|observation|>`: a result returned from an external source
  - Must follow a message from `<|assistant|>`
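
Because each header is a role marker followed by optional plain-text metadata on a single line, splitting a rendered header back into its parts is straightforward. The regex-based sketch below works on the text rendering used in this document; in the model itself the role is a special token, so this is only useful for tooling that processes transcripts like the examples here. The `parse_header` name is hypothetical.

```python
import re
from typing import Optional, Tuple

# A role marker at the start of the line; the rest of the line is metadata.
HEADER_RE = re.compile(r"^<\|(system|user|assistant|observation)\|>(.*)$")


def parse_header(line: str) -> Optional[Tuple[str, str]]:
    """Return (role, metadata) for a header line, or None if the line is content."""
    match = HEADER_RE.match(line)
    if match is None:
        return None
    role, metadata = match.groups()
    return role, metadata.strip()


for line in ["<|assistant|>interpreter", "<|user|>", "Hello"]:
    print(repr(line), "->", parse_header(line))
```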

### Example Scenarios

#### Multi-turn Dialogue

* There are only three roles: `<|user|>`, `<|assistant|>`, and `<|system|>`.

```text
<|system|>
You are ChatGLM3, a large language model trained by Zhipu.AI. Follow the user's instructions carefully. Respond using markdown.
<|user|>
Hello
<|assistant|>
Hello, I'm ChatGLM3. What can I assist you today?
```

#### Tool Calling

````
<|system|>
Answer the following questions as best as you can. You have access to the following tools:
[
    {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {
                    "type": "string",
                    "description": "The city and state, e.g. San Francisco, CA",
                },
                "unit": {"type": "string"},
            },
            "required": ["location"],
        },
    }
]
<|user|>
What's the weather in Beijing today?
<|assistant|>
Okay, let's look up the weather in Beijing today.
<|assistant|>get_current_weather
```python
tool_call(location="beijing", unit="celsius")
```
<|observation|>
{"temperature": 22}
<|assistant|>
According to the query results, the temperature in Beijing today is 22 degrees Celsius.
````
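
The system message in the tool-calling example is an instruction line followed by the list of available tools, each described by a JSON-Schema-style object. The sketch below assembles such a message from Python data with `json.dumps`. The helper name and the four-space indentation are assumptions. `json.dumps` emits strict JSON without trailing commas, so its output differs cosmetically from the listing above.

```python
import json
from typing import Any, Dict, List

INSTRUCTION = ("Answer the following questions as best as you can. "
               "You have access to the following tools:")


def build_tool_system_prompt(tools: List[Dict[str, Any]]) -> str:
    """Join the instruction line and a pretty-printed JSON list of tool schemas."""
    return INSTRUCTION + "\n" + json.dumps(tools, indent=4, ensure_ascii=False)


weather_tool = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "description": "The city and state, e.g. San Francisco, CA"},
            "unit": {"type": "string"},
        },
        "required": ["location"],
    },
}

print(build_tool_system_prompt([weather_tool]))
```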

#### Code Execution

* Four roles are used: `<|user|>`, `<|assistant|>`, `<|system|>`, and `<|observation|>`. The only `metadata` value for `<|assistant|>` is `interpreter`.

`````text
<|system|>
You are an intelligent AI assistant, named ChatGLM3. You are connected to a computer, but please note that you cannot connect to the internet. When using Python to solve tasks, you can run the code and get the results. If there are errors in the running results, you need to improve the code as much as possible. You can process files uploaded by users to your computer, and the default storage path for files is /mnt/data/.
<|user|>
#File: /mnt/data/metadata.jsonl
#Size: 35380
#File uploaded
Are there missing or abnormal values in the file?
<|assistant|>
First, I will read the file you provided and review its contents to determine whether there are missing or abnormal values.

We start by loading the file:
<|assistant|>interpreter
```python
import json

# Load the uploaded file
data = []
with open("/mnt/data/metadata.jsonl", 'r') as file:
    for line in file:
        data.append(json.loads(line))

# Display the first few records to get a sense of the data
data[:5]
```
<|observation|>
```result
[{'file_name': 'aceinthehole.png',
  'name': 'Ace in the Hole',
  'type': 'survivor',
  'description': 'Lady Luck always seems to be throwing something good your way.'},
 {'file_name': 'adrenaline.png',
  'name': 'Adrenaline',
  'type': 'survivor',
  'description': 'You are fuelled by unexpected energy when on the verge of escape.'},
 {'file_name': 'aftercare.png',
  'name': 'Aftercare',
  'type': 'survivor',
  'description': "Unlocks potential in one's aura reading ability."},
 {'file_name': 'agitation.png',
  'name': 'Agitation',
  'type': 'killer',
  'description': 'You get excited in anticipation of hooking your prey.'},
 {'file_name': 'alert.png',
  'name': 'Alert',
  'type': 'survivor',
  'description': 'Your acute senses are on high alert.'}]
```
<|assistant|>
This file appears to contain metadata about certain items, each with the following fields:

- `file_name`
- `name`
- `type` (e.g. "survivor" or "killer")
- `description`

Next, I will check for missing values in these fields.
<|assistant|>interpreter
```python
# Check for missing values in each column
missing_values = {key: sum(1 for item in data if key not in item) for key in data[0].keys()}

missing_values
```
<|observation|>
```result
{'file_name': 0, 'name': 0, 'type': 0, 'description': 0}
```
<|assistant|>
No field has missing values, which means every entry is complete.

Next, we can check for any potential outliers. This usually requires deeper analysis and understanding of the data, but I can start by examining some basic statistics and distributions. To do this, we can check the value distribution of the "type" field to see if there are any unexpected categories.
<|assistant|>interpreter
```python
# Check the distribution of values in the 'type' column
type_distribution = {key: sum(1 for item in data if item['type'] == key) for key in set(item['type'] for item in data)}

type_distribution
```
<|observation|>
```result
{'survivor': 116, 'killer': 103}
```
<|assistant|>
The 'type' field has two values: 'survivor' and 'killer'. 'survivor' has 116 entries, while 'killer' has 103.

From this preliminary examination, we did not find any obvious abnormal values.

If you have any specific criteria or other fields that need to be checked, I can provide a more detailed analysis. What else would you like me to check?
<|user|>
Help me draw a heart
<|assistant|>interpreter
```python
import numpy as np
import matplotlib.pyplot as plt

# The parametric equation for the shape of a heart
def heart(t):
    x = 16 * np.sin(t) ** 3
    y = 13 * np.cos(t) - 5 * np.cos(2 * t) - 2 * np.cos(3 * t) - np.cos(4 * t)
    return x, y

t = np.linspace(0, 2 * np.pi, 1000)
x, y = heart(t)

plt.figure(figsize=(6, 6))
plt.plot(x, y, color='red')
plt.axis('equal')
plt.axis('off')
plt.show()
```
<|observation|>
```result
【image】
```
<|assistant|>
This is a heart shape. I used parametric equations to describe it and drew it with Matplotlib. If you have any other needs or questions, please feel free to let me know.
<|user|> # End
`````

|

README.md

CHANGED


@@ -1,13 +1,240 @@


# ChatGLM3

<p align="center">
🤗 <a href="https://huggingface.co/THUDM/chatglm3-6b" target="_blank">HF Repo</a> • 🤖 <a href="https://modelscope.cn/models/ZhipuAI/chatglm3-6b" target="_blank">ModelScope</a> • 🐦 <a href="https://twitter.com/thukeg" target="_blank">Twitter</a> • 📃 <a href="https://arxiv.org/abs/2103.10360" target="_blank">[GLM@ACL 22]</a> <a href="https://github.com/THUDM/GLM" target="_blank">[GitHub]</a> • 📃 <a href="https://arxiv.org/abs/2210.02414" target="_blank">[GLM-130B@ICLR 23]</a> <a href="https://github.com/THUDM/GLM-130B" target="_blank">[GitHub]</a> <br>
</p>
<p align="center">
👋 Join our <a href="https://join.slack.com/t/chatglm/shared_invite/zt-25ti5uohv-A_hs~am_D3Q8XPZMpj7wwQ" target="_blank">Slack</a> and <a href="resources/WECHAT.md" target="_blank">WeChat</a>
</p>
<p align="center">
📍 Try the larger-scale ChatGLM models at <a href="https://www.chatglm.cn">chatglm.cn</a>.
</p>

[Read this in English.](./README_en.md)

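## Introduction

ChatGLM3 is a new-generation conversational pre-trained model jointly released by Zhipu AI and the KEG Lab of Tsinghua University. ChatGLM3-6B is the open-source model in the ChatGLM3 series. While retaining many excellent features of the previous two generations, such as fluent dialogue and a low deployment threshold, ChatGLM3-6B introduces the following features:

1. **A stronger base model:** ChatGLM3-6B-Base, the base model of ChatGLM3-6B, uses more diverse training data, more training steps, and a more reasonable training strategy. Evaluations on datasets covering semantics, mathematics, reasoning, code, and knowledge show that **ChatGLM3-6B-Base has the strongest performance among base models with fewer than 10B parameters**.
2. **More complete feature support:** ChatGLM3-6B adopts a newly designed [prompt format](PROMPT.md). In addition to normal multi-turn dialogue, it natively supports complex scenarios such as [tool calling](tool_using/README.md) (Function Call), code execution (Code Interpreter), and Agent tasks.
3. **A more comprehensive open-source series:** In addition to the dialogue model [ChatGLM3-6B](https://huggingface.co/THUDM/chatglm3-6b), the base model [ChatGLM3-6B-Base](https://huggingface.co/THUDM/chatglm3-6b-base) and the long-text dialogue model [ChatGLM3-6B-32K](https://huggingface.co/THUDM/chatglm3-6b-32k) are also open-sourced. All of the above weights are **fully open** for academic research, and **free commercial use is also permitted** after registering via the [questionnaire](https://open.bigmodel.cn/mla/form).

-----

The ChatGLM3 open-source models aim to advance large-model technology together with the open-source community. We ask developers and all users to abide by the [open-source license](MODEL_LICENSE) and not to use the open-source models, the code, or any derivatives of the open-source project for any purpose that may harm the nation or society, or for any service that has not undergone safety assessment and filing. At present, the project team has not developed any application based on the **ChatGLM3 open-source models**, including web, Android, Apple iOS, and Windows apps.

Although every effort has been made to ensure the compliance and accuracy of the data at every stage of training, ChatGLM3-6B is a small model and its output is affected by probabilistic randomness, so the accuracy of its output cannot be guaranteed. The model's output is also easily misled by user input. **This project assumes no responsibility for data security or public-opinion risks arising from the open-source models and code, or for any risks and liabilities arising from the model being misled, abused, disseminated, or improperly used.**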

## Model List

| Model | Seq Length | Download |
| :---: | :---: | :---: |
| ChatGLM3-6B | 8k | [HuggingFace](https://huggingface.co/THUDM/chatglm3-6b) \| [ModelScope](https://modelscope.cn/models/ZhipuAI/chatglm3-6b) |
| ChatGLM3-6B-Base | 8k | [HuggingFace](https://huggingface.co/THUDM/chatglm3-6b-base) \| [ModelScope](https://modelscope.cn/models/ZhipuAI/chatglm3-6b-base) |
| ChatGLM3-6B-32K | 32k | [HuggingFace](https://huggingface.co/THUDM/chatglm3-6b-32k) \| [ModelScope](https://modelscope.cn/models/ZhipuAI/chatglm3-6b-32k) |

## Friendly Links

Open-source projects that accelerate ChatGLM3:

* [chatglm.cpp](https://github.com/li-plus/chatglm.cpp): a quantized, accelerated inference solution similar to llama.cpp, enabling real-time chat on a laptop

## Evaluation Results

### Typical Tasks

We selected 8 typical Chinese and English datasets and ran performance tests on the ChatGLM3-6B (base) version.

| Model | GSM8K | MATH | BBH | MMLU | C-Eval | CMMLU | MBPP | AGIEval |
|------------------|:-----:|:----:|:----:|:----:|:------:|:-----:|:----:|:-------:|
| ChatGLM2-6B-Base | 32.4 | 6.5 | 33.7 | 47.9 | 51.7 | 50.0 | - | - |
| Best Baseline | 52.1 | 13.1 | 45.0 | 60.1 | 63.5 | 62.2 | 47.5 | 45.8 |
| ChatGLM3-6B-Base | 72.3 | 25.7 | 66.1 | 61.4 | 69.0 | 67.5 | 52.4 | 53.7 |

> Best Baseline refers to the best-performing pre-trained model on the corresponding dataset as of October 27, 2023, among models with fewer than 10B parameters, excluding models trained for a single task without retaining general capabilities.

> In the tests of ChatGLM3-6B-Base, BBH uses 3-shot evaluation; GSM8K and MATH, which require reasoning, use 0-shot CoT evaluation; MBPP uses 0-shot generation followed by running the test cases to compute Pass@1; all other multiple-choice datasets use 0-shot evaluation.

We conducted human evaluation of ChatGLM3-6B-32K in several long-text application scenarios. Compared with the second-generation model, its performance improved by more than 50% on average, with especially notable gains in applications such as paper reading, document summarization, and financial report analysis. We also evaluated the model on the LongBench benchmark; the results are shown in the table below.

| Model | Average | Summary | Single-Doc QA | Multi-Doc QA | Code | Few-shot | Synthetic |
|----------------------|:-----:|:----:|:----:|:----:|:------:|:-----:|:-----:|
| ChatGLM2-6B-32K | 41.5 | 24.8 | 37.6 | 34.7 | 52.8 | 51.3 | 47.7 |
| ChatGLM3-6B-32K | 50.2 | 26.6 | 45.8 | 46.1 | 56.2 | 61.2 | 65 |
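
The "Average" column in the LongBench table appears to be the unweighted mean of the six category scores. The short check below recomputes it from the table values; reading the column this way is an inference from the numbers, not something the text states.

```python
# Category scores copied from the LongBench table:
# Summary, Single-Doc QA, Multi-Doc QA, Code, Few-shot, Synthetic.
SCORES = {
    "ChatGLM2-6B-32K": [24.8, 37.6, 34.7, 52.8, 51.3, 47.7],
    "ChatGLM3-6B-32K": [26.6, 45.8, 46.1, 56.2, 61.2, 65.0],
}


def unweighted_mean(values):
    """Return the arithmetic mean of a non-empty list of scores."""
    return sum(values) / len(values)


if __name__ == "__main__":
    for model, scores in SCORES.items():
        print(f"{model}: {unweighted_mean(scores):.2f}")
```

Both recomputed means (about 41.48 and 50.15) agree with the reported 41.5 and 50.2 to within rounding.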

## Usage

### Environment Setup

First, clone this repository:
```shell
git clone https://github.com/THUDM/ChatGLM3
cd ChatGLM3
```

Then install the dependencies with pip:
```shell
pip install -r requirements.txt
```
The recommended `transformers` version is `4.30.2`, and `torch` 2.0 or later is recommended for the best inference performance.
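
To see whether an environment matches these recommendations, a check along the following lines could be run. It is a hypothetical sketch, not part of the repository: it relies only on the standard library's `importlib.metadata`, and its simple numeric comparison is an assumption that ignores pre-release tags such as `dev` or `rc` suffixes.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions taken from the recommendations above (hypothetical check).
RECOMMENDED_EXACT = {"transformers": "4.30.2"}
RECOMMENDED_MINIMUM = {"torch": "2.0"}


def numeric_parts(ver: str) -> tuple:
    """Reduce a version string such as '2.1.0+cu118' to its leading numbers, e.g. (2, 1, 0)."""
    parts = []
    for piece in ver.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break  # stop at components such as 'dev20231010'
        parts.append(int(digits))
    return tuple(parts)


def at_least(installed: str, minimum: str) -> bool:
    """True if `installed` >= `minimum`, padding missing components with zeros."""
    a, b = numeric_parts(installed), numeric_parts(minimum)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def report() -> None:
    """Print how the installed packages compare with the recommendations."""
    for name, wanted in {**RECOMMENDED_EXACT, **RECOMMENDED_MINIMUM}.items():
        try:
            found = version(name)
        except PackageNotFoundError:
            print(f"{name}: not installed")
            continue
        if name in RECOMMENDED_MINIMUM:
            status = "OK" if at_least(found, wanted) else f"older than {wanted}"
        else:
            same = numeric_parts(found) == numeric_parts(wanted)
            status = "matches" if same else f"differs from {wanted}"
        print(f"{name} {found}: {status}")


if __name__ == "__main__":
    report()
```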

### Composite Demo

We provide a composite demo that integrates the following three modes. For how to run it, see [Composite Demo](composite_demo/README.md).

- Chat: dialogue mode, in which you can converse with the model.
- Tool: tool mode, in which the model can, in addition to chatting, perform other operations through tools.
<img src="resources/tool.png" width="400">
- Code Interpreter: code interpreter mode, in which the model can execute code in a Jupyter environment and obtain the results to complete complex tasks.
<img src="resources/heart.png" width="400">

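### Calling from Code

You can call the ChatGLM model to generate a conversation with the following code:

```python
>>> from transformers import AutoTokenizer, AutoModel
>>> tokenizer = AutoTokenizer.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True)
>>> model = AutoModel.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True, device='cuda')
>>> model = model.eval()
>>> response, history = model.chat(tokenizer, "Hello", history=[])
>>> print(response)
Hello 👋! I am the AI assistant ChatGLM3-6B. Nice to meet you, feel free to ask me any questions.
>>> response, history = model.chat(tokenizer, "What should I do if I can't sleep at night?", history=history)
>>> print(response)
Not being able to sleep at night may make you feel anxious or uncomfortable, but here are some methods that can help you fall asleep:

1. Keep a regular sleep schedule: a regular schedule helps you build healthy sleep habits and makes it easier to fall asleep. Try to go to bed and get up at the same times every day.
2. Create a comfortable sleep environment: make sure your sleeping environment is comfortable, quiet, dark, and at a suitable temperature. Use comfortable bedding and keep the room well ventilated.
3. Relax your body and mind: doing something relaxing before bed, such as taking a hot bath, listening to soft music, or reading an interesting book, helps relieve tension and anxiety and makes it easier to fall asleep.
4. Avoid caffeinated drinks: caffeine is a stimulant that affects the quality of your sleep. Try to avoid caffeinated drinks such as coffee, tea, and cola before bed.
5. Avoid doing things unrelated to sleep in bed: activities such as watching movies, playing games, or working in bed may interfere with your sleep.
6. Try breathing techniques: deep breathing is a relaxation technique that can help relieve tension and anxiety and make it easier to fall asleep. Try inhaling slowly, holding your breath for a few seconds, and then exhaling slowly.

If these methods do not help you fall asleep, consider consulting a doctor or a sleep specialist for further advice.
```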

#### Load the Model Locally

The code above automatically downloads the model implementation and weights through `transformers`. The complete model implementation is available on the [Hugging Face Hub](https://huggingface.co/THUDM/chatglm3-6b). If your network connection is poor, downloading the weights may take a long time or even fail. In that case, you can first download the model to a local directory and then load it from there.

To download the model from the Hugging Face Hub, first [install Git LFS](https://docs.github.com/zh/repositories/working-with-files/managing-large-files/installing-git-large-file-storage), then run
```shell
git clone https://huggingface.co/THUDM/chatglm3-6b
```

If downloading from Hugging Face is slow, you can also download the model from [ModelScope](https://modelscope.cn/models/ZhipuAI/chatglm3-6b).
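
When the weights may or may not have been downloaded yet, a small helper can choose between a local directory and the Hub ID before calling `from_pretrained`. `resolve_model_source` is a hypothetical helper, not part of the repository, and treating the presence of a `config.json` file as "the download is usable" is a simplifying assumption rather than a real completeness check.

```python
from pathlib import Path

HUB_ID = "THUDM/chatglm3-6b"


def resolve_model_source(local_dir: str, hub_id: str = HUB_ID) -> str:
    """Return `local_dir` if it looks like a downloaded model, else the Hub ID.

    Hypothetical helper: a directory counts as usable when it contains a
    config.json file, which is only a rough check.
    """
    path = Path(local_dir).expanduser()
    if path.is_dir() and (path / "config.json").is_file():
        return str(path)
    return hub_id
```

The returned string can be passed as the first argument to both `AutoTokenizer.from_pretrained` and `AutoModel.from_pretrained`, together with `trust_remote_code=True` as in the example above.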

### Web Demo

You can launch the Gradio-based web demo with the following command:
```shell
python web_demo.py
```

You can launch the Streamlit-based web demo with the following command:
```shell
streamlit run web_demo2.py
```

The web demo starts a web server and prints its address; open that address in a browser to use it. In our tests, the Streamlit-based web demo runs more smoothly.

### Command-Line Demo

Run [cli_demo.py](cli_demo.py) from the repository:

```shell
python cli_demo.py
```

The program holds an interactive conversation in the command line. Type an instruction and press Enter to generate a reply; type `clear` to clear the conversation history, or `stop` to exit the program.
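
The interaction described above (one reply per input, `clear` resets the history, `stop` exits) can be sketched as the loop below. It is not the repository's `cli_demo.py`: `chat_fn` is a hypothetical stand-in for a call such as `model.chat(tokenizer, query, history=history)` from the earlier example, injected so the loop can be exercised without loading the model, and `echo_chat` is a toy replacement for the model.

```python
from typing import Any, Callable, Iterable, List, Tuple

History = List[Any]
# Hypothetical stand-in for `model.chat(tokenizer, query, history=history)`.
ChatFn = Callable[[str, History], Tuple[str, History]]


def run_cli(chat_fn: ChatFn, inputs: Iterable[str], out: Callable[[str], Any] = print) -> History:
    """Run the chat loop: 'clear' empties the history, 'stop' ends the session."""
    history: History = []
    for raw in inputs:
        query = raw.strip()
        if query == "stop":
            break
        if query == "clear":
            history = []
            out("History cleared.")
            continue
        if not query:
            continue  # ignore empty lines
        response, history = chat_fn(query, history)
        out(f"ChatGLM: {response}")
    return history


def echo_chat(query: str, history: History) -> Tuple[str, History]:
    """Toy chat function used instead of the real model for demonstration."""
    reply = f"echo: {query}"
    return reply, history + [(query, reply)]


if __name__ == "__main__":
    run_cli(echo_chat, ["hi", "clear", "again", "stop", "ignored"])
```

For interactive use, `inputs` could be `iter(lambda: input("User: "), None)`, which keeps prompting until `stop` is typed.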

### LangChain Demo
Please refer to [Tool-Calling Demo Based on LangChain](langchain_demo/README.md).

### Tool Calling
For how to use tool calling, please refer to [Tool Calling](tool_using/README.md).

### API Deployment
Thanks to [@xusenlinzy](https://github.com/xusenlinzy) for implementing a streaming API deployment in the OpenAI format, which can serve as the backend for any ChatGPT-based application, such as [ChatGPT-Next-Web](https://github.com/Yidadaa/ChatGPT-Next-Web). You can deploy it by running [openai_api.py](openai_api.py) from the repository:
```shell
python openai_api.py
```
Example code for calling the API:
```python
import openai

# Uses the pre-1.0 `openai` Python SDK interface (openai.api_base, openai.ChatCompletion),
# which was removed in openai>=1.0.
if __name__ == "__main__":
    openai.api_base = "http://localhost:8000/v1"
    openai.api_key = "none"
    for chunk in openai.ChatCompletion.create(
        model="chatglm3-6b",
        messages=[
            {"role": "user", "content": "Hello"}
        ],
        stream=True
    ):
        # Each streamed chunk carries an incremental piece of the reply.
        if hasattr(chunk.choices[0].delta, "content"):
            print(chunk.choices[0].delta.content, end="", flush=True)
```
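
Without the `openai` SDK, the same streaming endpoint can in principle be consumed with the standard library alone. The sketch below assumes that `openai_api.py` follows the usual OpenAI streaming convention of server-sent events (lines of the form `data: {json}`, terminated by `data: [DONE]`, with text in `choices[0].delta.content`); verify this against the running server before relying on it. `build_request` and `iter_stream_text` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def build_request(base_url: str, prompt: str, model: str = "chatglm3-6b") -> urllib.request.Request:
    """Build a streaming POST request for an OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def iter_stream_text(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text deltas from server-sent-event lines such as b'data: {...}'."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        chunk = json.loads(payload)
        content = chunk["choices"][0].get("delta", {}).get("content")
        if content:
            yield content
```

Against a running server, the response returned by `urllib.request.urlopen(build_request("http://localhost:8000/v1", "Hello"))` can be passed straight to `iter_stream_text`, since iterating over it yields raw lines.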

## Low-Cost Deployment

### Model Quantization

By default, the model is loaded in FP16 precision, and running the code above requires about 13GB of GPU memory. If your GPU memory is limited, you can try loading the model with quantization as follows:

```python
model = AutoModel.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True).quantize(4).cuda()
```

Quantization incurs some loss in model quality. In our tests, ChatGLM3-6B can still generate natural and fluent text under 4-bit quantization.
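
The memory figures in this section follow roughly from parameter count times bytes per parameter. The back-of-the-envelope sketch below estimates weight storage only; it ignores activations, the KV cache, and framework overhead (one reason the quoted 13GB is above the raw FP16 figure), and the 6e9 parameter count is a round-number assumption rather than the exact size of ChatGLM3-6B. Real 4-bit usage is likely higher than the estimate, since some tensors may stay in higher precision.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    n = 6e9  # assumed round-number parameter count
    for label, bits in [("FP32 (.float())", 32), ("FP16 (default)", 16), ("INT4 (.quantize(4))", 4)]:
        print(f"{label}: ~{weight_memory_gb(n, bits):.1f} GB")
```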

### CPU Deployment

If you do not have GPU hardware, you can also run inference on the CPU, although it will be slower. Load the model as follows (requires about 32GB of RAM):
```python
model = AutoModel.from_pretrained("THUDM/chatglm3-6b", trust_remote_code=True).float()
```

### Mac Deployment

For Macs with Apple Silicon or an AMD GPU, you can use the MPS backend to run ChatGLM3-6B on the GPU. Install PyTorch-Nightly by following Apple's [official instructions](https://developer.apple.com/metal/pytorch) (the version number should look like 2.x.x.dev2023xxxx, not 2.x.x).

Currently, only [loading the model locally](README.md#从本地加载模型) is supported on macOS. Change the model loading in the code to load from a local path, and use the mps backend:
```python
model = AutoModel.from_pretrained("your local path", trust_remote_code=True).to('mps')
```

Loading the half-precision ChatGLM3-6B model requires about 13GB of memory. On machines with less memory (such as a MacBook Pro with 16GB), the system falls back to virtual memory on disk when free memory runs short, which severely slows down inference.
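
The single-device setups in this section (CUDA GPU, Apple MPS, CPU) can be chosen automatically. The sketch below keeps the decision as a plain function so it can be tested without PyTorch; the preference order CUDA, then MPS, then CPU, and the pairing of `.float()` with the CPU path, mirror the snippets above, while `pick_device` itself is a hypothetical helper.

```python
from typing import Tuple


def pick_device(cuda_available: bool, mps_available: bool) -> Tuple[str, str]:
    """Return (device, precision) following the deployment options above.

    CUDA GPUs run the default half-precision model, Macs with Apple Silicon or
    an AMD GPU use the 'mps' backend, and everything else falls back to full
    precision on the CPU.
    """
    if cuda_available:
        return "cuda", "half"
    if mps_available:
        return "mps", "half"
    return "cpu", "float"
```

With PyTorch installed, the two flags would come from `torch.cuda.is_available()` and `torch.backends.mps.is_available()`; on the CPU path the model is then converted with `.float()`, as in the CPU example.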

### Multi-GPU Deployment

If you have several GPUs but none of them has enough memory to hold the full model, you can split the model across multiple GPUs. First install accelerate with `pip install accelerate`, then load the model as follows:
```python
from utils import load_model_on_gpus
model = load_model_on_gpus("THUDM/chatglm3-6b", num_gpus=2)
```
This deploys the model on two GPUs for inference. You can change `num_gpus` to the number of GPUs you want to use. By default the model is split evenly; you can also pass the `device_map` parameter to specify the placement yourself.
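
To write a custom `device_map`, one option is to spread the transformer layers evenly across the GPUs and pin the remaining modules to GPU 0. The sketch below only builds the dictionary. The module names (`transformer.encoder.layers.{i}` and the others listed) and the layer count of 28 are assumptions about ChatGLM3-6B's internals that should be checked against `model.named_modules()` before use, and `even_device_map` is a hypothetical name.

```python
from typing import Dict

# Assumed module names for ChatGLM3-6B; check them with model.named_modules() first.
PINNED_TO_GPU0 = [
    "transformer.embedding.word_embeddings",
    "transformer.rotary_pos_emb",
    "transformer.encoder.final_layernorm",
    "transformer.output_layer",
]


def even_device_map(num_gpus: int, num_layers: int = 28) -> Dict[str, int]:
    """Map contiguous blocks of transformer layers to GPUs as evenly as possible."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    device_map = {name: 0 for name in PINNED_TO_GPU0}
    base, extra = divmod(num_layers, num_gpus)
    layer = 0
    for gpu in range(num_gpus):
        # GPU 0 already holds the embedding and output layers, so any
        # leftover layers are given to the last `extra` GPUs instead.
        count = base + (1 if gpu >= num_gpus - extra else 0)
        for _ in range(count):
            device_map[f"transformer.encoder.layers.{layer}"] = gpu
            layer += 1
    return device_map
```

Since the text says `load_model_on_gpus` accepts a `device_map` parameter, the resulting dictionary could presumably be passed there, e.g. `load_model_on_gpus("THUDM/chatglm3-6b", num_gpus=3, device_map=even_device_map(3))`.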

## Citation

If you find our work helpful, please consider citing the following papers.

```
@article{zeng2022glm,
  title={Glm-130b: An open bilingual pre-trained model},
  author={Zeng, Aohan and Liu, Xiao and Du, Zhengxiao and Wang, Zihan and Lai, Hanyu and Ding, Ming and Yang, Zhuoyi and Xu, Yifan and Zheng, Wendi and Xia, Xiao and others},
  journal={arXiv preprint arXiv:2210.02414},
  year={2022}
}
```
```
@inproceedings{du2022glm,
  title={GLM: General Language Model Pretraining with Autoregressive Blank Infilling},
  author={Du, Zhengxiao and Qian, Yujie and Liu, Xiao and Ding, Ming and Qiu, Jiezhong and Yang, Zhilin and Tang, Jie},
  booktitle={Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)},
  pages={320--335},