Spaces:

Sleeping

Sleeping

Commit

·

be5548b

0

Parent(s):

Cleaned old git history

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .dockerignore +23 -0

- .gitignore +28 -0

- LICENSE.txt +8 -0

- README-rsrc/doorkey.png +0 -0

- README-rsrc/evaluate-terminal-logs.png +0 -0

- README-rsrc/model.png +0 -0

- README-rsrc/model.xml +1 -0

- README-rsrc/train-tensorboard.png +0 -0

- README-rsrc/train-terminal-logs.png +0 -0

- README.md +164 -0

- README_old.md +215 -0

- autocrop.sh +14 -0

- campain_continuer.py +282 -0

- campain_launcher.py +488 -0

- data_analysis.ipynb +0 -0

- data_analysis.py +1650 -0

- data_analysis_neurips.py +570 -0

- data_visualize.py +1436 -0

- display_LLM_evaluations.py +45 -0

- draw_tree.py +104 -0

- draw_trees.sh +19 -0

- dummy_run.sh +109 -0

- eval_LLMs.sh +42 -0

- gpuh.py +99 -0

- gym-minigrid/.gitignore +9 -0

- gym-minigrid/.travis.yml +10 -0

- gym-minigrid/LICENSE +201 -0

- gym-minigrid/README.md +511 -0

- gym-minigrid/benchmark.py +53 -0

- gym-minigrid/gym_minigrid/__init__.py +6 -0

- gym-minigrid/gym_minigrid/backup_envs/bobo.py +301 -0

- gym-minigrid/gym_minigrid/backup_envs/cointhief.py +431 -0

- gym-minigrid/gym_minigrid/backup_envs/dancewithonenpc.py +344 -0

- gym-minigrid/gym_minigrid/backup_envs/diverseexit.py +584 -0

- gym-minigrid/gym_minigrid/backup_envs/exiter.py +347 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoorpolite.py +292 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoorsesame.py +165 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoortalk.py +189 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoortalkhard.py +199 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoortalkhardnpc.py +283 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoortalkhardsesame.py +204 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoortalkhardsesamnpc.py +294 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoortalkhardsesamnpcguides.py +384 -0

- gym-minigrid/gym_minigrid/backup_envs/gotodoorwizard.py +209 -0

- gym-minigrid/gym_minigrid/backup_envs/guidethief.py +416 -0

- gym-minigrid/gym_minigrid/backup_envs/helper.py +295 -0

- gym-minigrid/gym_minigrid/backup_envs/showme.py +525 -0

- gym-minigrid/gym_minigrid/backup_envs/socialenv.py +194 -0

- gym-minigrid/gym_minigrid/backup_envs/spying.py +429 -0

- gym-minigrid/gym_minigrid/backup_envs/talkitout.py +385 -0

.dockerignore

ADDED

|

@@ -0,0 +1,23 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

storage/*

|

| 2 |

+

__pycache__/*

|

| 3 |

+

campain_logs/*

|

| 4 |

+

llm_log/*

|

| 5 |

+

*egg-info

|

| 6 |

+

.vscode

|

| 7 |

+

*.idea*

|

| 8 |

+

retrieve_plafrim_data.sh

|

| 9 |

+

sync_plafrim.sh

|

| 10 |

+

retrieve_remy.sh

|

| 11 |

+

sync_remy.sh

|

| 12 |

+

*.gif

|

| 13 |

+

viz/*

|

| 14 |

+

graphics/*

|

| 15 |

+

retrieve_graphics.sh

|

| 16 |

+

retrieve_grg.sh

|

| 17 |

+

run_seeds.sh

|

| 18 |

+

sync_grg.sh

|

| 19 |

+

get_node.sh

|

| 20 |

+

llm_log/

|

| 21 |

+

.git*

|

| 22 |

+

.cache*

|

| 23 |

+

storage_old_2021.tar.gz

|

.gitignore

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

*__pycache__

|

| 2 |

+

storage/*

|

| 3 |

+

graphics/*

|

| 4 |

+

storage_old_2021.tar.gz

|

| 5 |

+

*egg-info

|

| 6 |

+

.vscode

|

| 7 |

+

*.idea*

|

| 8 |

+

retrieve_plafrim_data.sh

|

| 9 |

+

sync_plafrim.sh

|

| 10 |

+

retrieve_remy.sh

|

| 11 |

+

sync_remy.sh

|

| 12 |

+

*.gif

|

| 13 |

+

viz/*

|

| 14 |

+

retrieve_graphics.sh

|

| 15 |

+

retrieve_grg.sh

|

| 16 |

+

run_seeds.sh

|

| 17 |

+

sync_grg.sh

|

| 18 |

+

get_node.sh

|

| 19 |

+

llm_log/

|

| 20 |

+

.cache/

|

| 21 |

+

.ipynb_checkpoints/*

|

| 22 |

+

campain_logs

|

| 23 |

+

llm_data/backup

|

| 24 |

+

saved_logs_LLMs/*

|

| 25 |

+

plots/*

|

| 26 |

+

retrieve_viz_and_graphics.sh

|

| 27 |

+

retrieve_llm_log.sh

|

| 28 |

+

gym-minigrid/figures/*

|

LICENSE.txt

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

The MIT License (MIT)

|

| 2 |

+

Copyright © 2021 Flowers Team

|

| 3 |

+

|

| 4 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

|

| 5 |

+

|

| 6 |

+

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

|

| 7 |

+

|

| 8 |

+

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

|

README-rsrc/doorkey.png

ADDED

|

README-rsrc/evaluate-terminal-logs.png

ADDED

|

README-rsrc/model.png

ADDED

|

README-rsrc/model.xml

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

<mxfile userAgent="Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) draw.io/9.3.1 Chrome/66.0.3359.181 Electron/3.0.6 Safari/537.36" version="9.3.4" editor="www.draw.io" type="device"><diagram id="81a450cc-4610-c693-ab1c-18701f2b71dc" name="Page-1">7Vtbj5s4FP41SLsPW8UYSHhMssnsQytVnV3t9tEBh9ACjohz66+vCXYwNpkyEweS0Y40Gvv4fs53bsZjwWl6eMrRevWJhDix7EF4sOCflm0De2izPwXlWFJcb1ASojwOeaeK8Bz/wJwoum3jEG9qHSkhCY3XdWJAsgwHtEZDeU729W5LktRXXaOIrzioCM8BSrDW7d84pKuSOnKl3n/hOFqJlcGAtyxQ8D3KyTbj61k2XJ5+yuYUibl4/80KhWQvkeDMgtOcEFqW0sMUJwVvBdvKcfMLred95zijbQaIERt6FGfHIWMFr5KcrkhEMpTMKurkdD5czDBgtRVNE1YErIgPMf2vIH9wee0r78S2kx+lpqL6lY/6hik9cgygLSWMVK37kZA176efTWyebPOA754Dj6I8wmfplLTiYNI4zpAnTFLMdsM65DhBNN7VMYA4lKJzv4qdrMA5eoG75RQ7lGz5pFOS7RhF53qdp/tVTPHzGp1OtWc6VufzRU7scE7x4cVD8lZbIJZrKBSI3Fd4B4K2krAOB9fzxdH4oqMwC8eFIrNakKDNJg4UqL0AqIvskVFhtwSFxA+3gR2C1ho7fIXPJGabq8ThKeIYKWwuMc5HyQqtTjT8xUQlD7SJTiI7H7uVFF1NinFa2FXbSxhzJpvtghWjokgFjU0pkQV1kVcUBQYMzbQu+A3NyXc8JQnJGSUjWWGRlnGSKCSUxFFWoIeBADP6pNCNmJn4MW9I4zA8mbMmZaurowl9cxS5+Lq+OQ34sg2om/Cqj2DkZUky5xkiPFoGmthZy9ifOrNJW7fg6QbA78sreJrezNIFDsM4i65zCwZ51x7YfwAF2KClI3EMIHuom6CMnfYdmqAlyagkQW84nvhzMxKE/Vmm0W0DAYMKIVsO765CB9dQ5KA4KMe9WeDgdJ902PfmkHwdVmBo2iOdhjLtQUepw7qQ4KY1noA3eBE2aiKh9GeFcgNvBYuvmYinL/+8Az955nMXfrLJrt5rBNhKfUCDWQZtUzrjEZ3YjZ7o63zvO9E/J4idJPqPm3ksRwEOGq3CHPhT32+N1KYbKac3pF68koL3h9Qur6SAaxSpZzzKaCzB2RaPAu1AxvpphlshFXaB1OYoAqhRh+PXp7gQxb4hoBDHlFRg9ZqE8RHSw9nYm3ieGbX0FckMXU0rb5Ue2jdxH7pCtVXJb9t0LRaPTlJ4SdFvpaaOrqZ2R2oKlWtq4Cpqai5JhH7nocPw7mKHBlGbzxJbu0j9s83H578/XRc3GOTc2yMN0DYmtv3r2WhfD2zdDt1ZdjZsMFFtb82M4xZe70UekuFub4ZCv5ZnSUaAKM7Y73X2woD2A+UTKWibZxjRfj3P6gCMv7oo7/kytDHzgH3Bt+F5woUEYQL+TxIuBIYNWcL5kZTpNAFen7vfu4EXxlzWENjbfWfD04/5NkmOvZt29eOF16Flh977R2HDMwrY3+s6/SHFfaDQ7xGE+pcIazq3im/C3IGtUaY+fpA913RmTUashtKCL9lis5Y6Nr+dKKjSrL+x5T9YQ7bVAXnNvVox4BUXcb/fqZM1EaCq6anfoS+1NQDtOsTOjRHzII99THhCNSBrwBAwgyFWrR6slzd91X8FwNlP</diagram></mxfile>

|

README-rsrc/train-tensorboard.png

ADDED

|

README-rsrc/train-terminal-logs.png

ADDED

|

README.md

ADDED

|

@@ -0,0 +1,164 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: SocialAI School Demo

|

| 3 |

+

emoji: 🧙🏻♂️

|

| 4 |

+

colorFrom: gray

|

| 5 |

+

colorTo: indigo

|

| 6 |

+

sdk: docker

|

| 7 |

+

app_port: 7860

|

| 8 |

+

---

|

| 9 |

+

|

| 10 |

+

# SocialAI

|

| 11 |

+

|

| 12 |

+

[comment]: <> (This repository is the official implementation of [My Paper Title](https://arxiv.org/abs/2030.12345). )

|

| 13 |

+

|

| 14 |

+

[comment]: <> (TODO: add arxiv link later)

|

| 15 |

+

This repository is the official implementation of SocialAI: Benchmarking Socio-Cognitive Abilities inDeep Reinforcement Learning Agents.

|

| 16 |

+

|

| 17 |

+

The website of the project is [here](https://sites.google.com/view/socialai)

|

| 18 |

+

|

| 19 |

+

The code is based on:

|

| 20 |

+

[minigrid](https://github.com/maximecb/gym-minigrid)

|

| 21 |

+

|

| 22 |

+

Additional repositories used:

|

| 23 |

+

[BabyAI](https://github.com/mila-iqia/babyai)

|

| 24 |

+

[RIDE](https://github.com/facebookresearch/impact-driven-exploration)

|

| 25 |

+

[astar](https://github.com/jrialland/python-astar)

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

## Installation

|

| 29 |

+

|

| 30 |

+

[comment]: <> (Clone the repo)

|

| 31 |

+

|

| 32 |

+

[comment]: <> (```)

|

| 33 |

+

|

| 34 |

+

[comment]: <> (git clone https://gitlab.inria.fr/gkovac/act-and-speak.git)

|

| 35 |

+

|

| 36 |

+

[comment]: <> (```)

|

| 37 |

+

|

| 38 |

+

Create and activate your conda env

|

| 39 |

+

```

|

| 40 |

+

conda create --name social_ai python=3.7

|

| 41 |

+

conda activate social_ai

|

| 42 |

+

conda install -c anaconda graphviz

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

Install the required packages

|

| 46 |

+

```

|

| 47 |

+

pip install -r requirements.txt

|

| 48 |

+

pip install -e torch-ac

|

| 49 |

+

pip install -e gym-minigrid

|

| 50 |

+

conda install pytorch torchvision torchaudio pytorch-cuda=11.6 -c pytorch -c nvidia

|

| 51 |

+

```

|

| 52 |

+

|

| 53 |

+

## Interactive policy

|

| 54 |

+

|

| 55 |

+

To run an enviroment in the interactive mode run:

|

| 56 |

+

```

|

| 57 |

+

python -m scripts.manual_control.py

|

| 58 |

+

```

|

| 59 |

+

|

| 60 |

+

You can test different enviroments with the ```--env``` parameter.

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

# RL experiments

|

| 66 |

+

|

| 67 |

+

## Training

|

| 68 |

+

|

| 69 |

+

### Minimal example

|

| 70 |

+

|

| 71 |

+

To train a policy, run:

|

| 72 |

+

```train

|

| 73 |

+

python -m scripts.train --model test_model_name --seed 1 --compact-save --algo ppo --env SocialAI-AsocialBoxInformationSeekingParamEnv-v1 --dialogue --save-interval 1 --log-interval 1 --frames 5000000 --multi-modal-babyai11-agent --arch original_endpool_res --custom-ppo-2

|

| 74 |

+

`````

|

| 75 |

+

|

| 76 |

+

The policy should be above 0.95 success rate after the first 2M environment interactions.

|

| 77 |

+

|

| 78 |

+

### Recreating all the experiments

|

| 79 |

+

|

| 80 |

+

See ```run_SAI_final_case_studies.txt``` for the experiments in the paper.

|

| 81 |

+

|

| 82 |

+

#### Regular machine

|

| 83 |

+

|

| 84 |

+

To run the experiments on a regular machine `run_SAI_final_case_studies.txt` contains all the bash commands running the RL experiments.

|

| 85 |

+

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

#### Slurm based cluster (todo:)

|

| 89 |

+

|

| 90 |

+

To recreate all the experiments from the paper on a slurm based server configure the `campaign_launcher.py` script and run:

|

| 91 |

+

|

| 92 |

+

```

|

| 93 |

+

python campaign_launcher.py run_NeurIPS.txt

|

| 94 |

+

```

|

| 95 |

+

|

| 96 |

+

[//]: # (The list of all the experiments and their parameters can be seen in run_NeurIPS.txt)

|

| 97 |

+

|

| 98 |

+

[//]: # ()

|

| 99 |

+

[//]: # (For example the bash equivalent of the following configuration:)

|

| 100 |

+

|

| 101 |

+

[//]: # (```)

|

| 102 |

+

|

| 103 |

+

[//]: # (--slurm_conf jz_long_2gpus_32g --nb_seeds 16 --model NeurIPS_Help_NoSocial_NO_BONUS_ABL --compact-save --algo ppo --*env MiniGrid-AblationExiter-8x8-v0 --*env_args hidden_npc True --dialogue --save-interval 10 --frames 5000000 --*multi-modal-babyai11-agent --*arch original_endpool_res --*custom-ppo-2)

|

| 104 |

+

|

| 105 |

+

[//]: # (```)

|

| 106 |

+

|

| 107 |

+

[//]: # (is:)

|

| 108 |

+

|

| 109 |

+

[//]: # (```)

|

| 110 |

+

|

| 111 |

+

[//]: # (for SEED in {1..16})

|

| 112 |

+

|

| 113 |

+

[//]: # (do)

|

| 114 |

+

|

| 115 |

+

[//]: # ( python -m scripts.train --model NeurIPS_Help_NoSocial_NO_BONUS_ABL --compact-save --algo ppo --*env MiniGrid-AblationExiter-8x8-v0 --*env_args hidden_npc True --dialogue --save-interval 10 --frames 5000000 --*multi-modal-babyai11-agent --*arch original_endpool_res --*custom-ppo-2 --seed $SEED & )

|

| 116 |

+

|

| 117 |

+

[//]: # (done)

|

| 118 |

+

|

| 119 |

+

[//]: # (```)

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

|

| 123 |

+

## Evaluation

|

| 124 |

+

|

| 125 |

+

To evaluate a policy, run:

|

| 126 |

+

|

| 127 |

+

```eval

|

| 128 |

+

python -m scripts.evaluate_new --episodes 500 --test-set-seed 1 --model-label test_model --eval-env SocialAI-TestLanguageFeedbackSwitchesInformationSeekingParamEnv-v1 --model-to-evaluate storage/test/ --n-seeds 8

|

| 129 |

+

````

|

| 130 |

+

|

| 131 |

+

To visualize a policy, run:

|

| 132 |

+

```

|

| 133 |

+

python -m scripts.visualize --model storage/test_model_name/1/ --pause 0.1 --seed $RANDOM --episodes 20 --gif viz/test

|

| 134 |

+

```

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

# LLM experiments

|

| 138 |

+

|

| 139 |

+

For LLMs set your ```OPENAI_API_KEY``` (and ```HF_TOKEN```) variable in ```~/.bashrc``` or wherever you want.

|

| 140 |

+

|

| 141 |

+

### Creating in-context examples

|

| 142 |

+

To create in_context examples you can use the ```create_LLM_examples.py``` script.

|

| 143 |

+

|

| 144 |

+

This script will open an interactive window, where you can manually control the agent.

|

| 145 |

+

By default, nothing is saved.

|

| 146 |

+

The general procedure is to press 'enter' to skip over environments which you don't like.

|

| 147 |

+

When you see a wanted enviroment, move the agent in the wanted position and start recording (press 'r'). The current and the following steps in the episode will be recorded.

|

| 148 |

+

Then control the agent and finish the episode. The new episode will start and recording will be turned off again.

|

| 149 |

+

|

| 150 |

+

If you already like some of the previously collected examples and want to append to them you can use the ```--load``` argument.

|

| 151 |

+

|

| 152 |

+

### Evaluating LLM-based agents

|

| 153 |

+

|

| 154 |

+

The script ```eval_LLMs.sh``` contains the bash commands to run all the experiments in the paper.

|

| 155 |

+

|

| 156 |

+

Here is an example of running evaluation on the ```text-ada-001``` model on the AsocialBox environment:

|

| 157 |

+

```

|

| 158 |

+

python -m scripts.LLM_test --episodes 10 --max-steps 15 --model text-ada-001 --env-args size 7 --env-name SocialAI-AsocialBoxInformationSeekingParamEnv-v1 --in-context-path llm_data/in_context_examples/in_context_asocialbox_SocialAI-AsocialBoxInformationSeekingParamEnv-v1_2023_07_19_19_28_48/episodes.pkl

|

| 159 |

+

```

|

| 160 |

+

|

| 161 |

+

If you want to control the agent yourself you can set the model to ```interactive```.

|

| 162 |

+

```dummy``` agent just executes the move forward action, and ```random``` executes a random action. These agent are usefull for testing.

|

| 163 |

+

|

| 164 |

+

|

README_old.md

ADDED

|

@@ -0,0 +1,215 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Embodied acting and speaking

|

| 2 |

+

|

| 3 |

+

This code was based on these repositories:

|

| 4 |

+

|

| 5 |

+

[`gym-minigrid`](https://github.com/maximecb/gym-minigrid)

|

| 6 |

+

|

| 7 |

+

[`torch-ac`](https://github.com/lcswillems/torch-ac)

|

| 8 |

+

|

| 9 |

+

[`rl-starter-files`](add_url)

|

| 10 |

+

|

| 11 |

+

## Features

|

| 12 |

+

|

| 13 |

+

- **Script to train**, including:

|

| 14 |

+

- Log in txt, CSV and Tensorboard

|

| 15 |

+

- Save model

|

| 16 |

+

- Stop and restart training

|

| 17 |

+

- Use A2C or PPO algorithms

|

| 18 |

+

- **Script to visualize**, including:

|

| 19 |

+

- Act by sampling or argmax

|

| 20 |

+

- Save as Gif

|

| 21 |

+

- **Script to evaluate**, including:

|

| 22 |

+

- Act by sampling or argmax

|

| 23 |

+

- List the worst performed episodes

|

| 24 |

+

|

| 25 |

+

## Installation

|

| 26 |

+

|

| 27 |

+

### Option 1

|

| 28 |

+

|

| 29 |

+

[comment]: <> todo: add this part

|

| 30 |

+

[comment]: <> (Clone the repo)

|

| 31 |

+

|

| 32 |

+

[comment]: <> (```)

|

| 33 |

+

|

| 34 |

+

[comment]: <> (git clone https://gitlab.inria.fr/gkovac/act-and-speak.git)

|

| 35 |

+

|

| 36 |

+

[comment]: <> (```)

|

| 37 |

+

Create and activate your conda env

|

| 38 |

+

```

|

| 39 |

+

conda create --name act_and_speak python=3.6

|

| 40 |

+

conda activate act_and_speak

|

| 41 |

+

```

|

| 42 |

+

Install the required packages

|

| 43 |

+

```

|

| 44 |

+

pip install -r requirements.txt

|

| 45 |

+

pip install -e torch-ac

|

| 46 |

+

pip install -e gym-minigrid --use-feature=2020-resolver

|

| 47 |

+

```

|

| 48 |

+

|

| 49 |

+

### Option 2

|

| 50 |

+

Alternative use the conda yaml file:

|

| 51 |

+

```

|

| 52 |

+

TODO:

|

| 53 |

+

```

|

| 54 |

+

|

| 55 |

+

## Example of use

|

| 56 |

+

|

| 57 |

+

Train, visualize and evaluate an agent on the `MiniGrid-DoorKey-5x5-v0` environment:

|

| 58 |

+

|

| 59 |

+

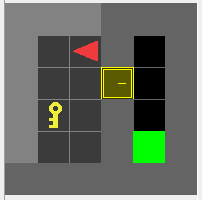

<p align="center"><img src="README-rsrc/doorkey.png"></p>

|

| 60 |

+

|

| 61 |

+

1. Train the agent on the `MiniGrid-DoorKey-5x5-v0` environment with PPO algorithm:

|

| 62 |

+

|

| 63 |

+

```

|

| 64 |

+

python3 -m scripts.train --algo ppo --env MiniGrid-DoorKey-5x5-v0 --model DoorKey --save-interval 10 --frames 80000

|

| 65 |

+

```

|

| 66 |

+

|

| 67 |

+

<p align="center"><img src="README-rsrc/train-terminal-logs.png"></p>

|

| 68 |

+

|

| 69 |

+

2. Visualize agent's behavior:

|

| 70 |

+

|

| 71 |

+

```

|

| 72 |

+

python3 -m scripts.visualize --env MiniGrid-DoorKey-5x5-v0 --model DoorKey

|

| 73 |

+

```

|

| 74 |

+

|

| 75 |

+

<p align="center"><img src="README-rsrc/visualize-doorkey.gif"></p>

|

| 76 |

+

|

| 77 |

+

3. Evaluate agent's performance:

|

| 78 |

+

|

| 79 |

+

```

|

| 80 |

+

python3 -m scripts.evaluate --env MiniGrid-DoorKey-5x5-v0 --model DoorKey

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

<p align="center"><img src="README-rsrc/evaluate-terminal-logs.png"></p>

|

| 84 |

+

|

| 85 |

+

**Note:** More details on the commands are given below.

|

| 86 |

+

|

| 87 |

+

## Other examples

|

| 88 |

+

|

| 89 |

+

### Handle textual instructions

|

| 90 |

+

|

| 91 |

+

In the `GoToDoor` environment, the agent receives an image along with a textual instruction. To handle the latter, add `--text` to the command:

|

| 92 |

+

|

| 93 |

+

```

|

| 94 |

+

python3 -m scripts.train --algo ppo --env MiniGrid-GoToDoor-5x5-v0 --model GoToDoor --text --save-interval 10 --frames 1000000

|

| 95 |

+

```

|

| 96 |

+

|

| 97 |

+

<p align="center"><img src="README-rsrc/visualize-gotodoor.gif"></p>

|

| 98 |

+

|

| 99 |

+

### Handle dialogue with multi a multi headed agent

|

| 100 |

+

|

| 101 |

+

In the `GoToDoorTalk` environment, the agent receives an image along with the dialogue. To handle the latter, add `--dialogue` and, to use the multi headed agent, add `--multi-headed-agent` to the command:

|

| 102 |

+

|

| 103 |

+

```

|

| 104 |

+

python3 -m scripts.train --algo ppo --env MiniGrid-GoToDoorTalk-5x5-v0 --model GoToDoorMultiHead --dialogue --multi-headed-agent --save-interval 10 --frames 1000000

|

| 105 |

+

```

|

| 106 |

+

|

| 107 |

+

### Add memory

|

| 108 |

+

|

| 109 |

+

In the `RedBlueDoors` environment, the agent has to open the red door then the blue one. To solve it efficiently, when it opens the red door, it has to remember it. To add memory to the agent, add `--recurrence X` to the command:

|

| 110 |

+

|

| 111 |

+

```

|

| 112 |

+

python3 -m scripts.train --algo ppo --env MiniGrid-RedBlueDoors-6x6-v0 --model RedBlueDoors --recurrence 4 --save-interval 10 --frames 1000000

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

<p align="center"><img src="README-rsrc/visualize-redbluedoors.gif"></p>

|

| 116 |

+

|

| 117 |

+

## Files

|

| 118 |

+

|

| 119 |

+

This package contains:

|

| 120 |

+

- scripts to:

|

| 121 |

+

- train an agent \

|

| 122 |

+

in `script/train.py` ([more details](#scripts-train))

|

| 123 |

+

- visualize agent's behavior \

|

| 124 |

+

in `script/visualize.py` ([more details](#scripts-visualize))

|

| 125 |

+

- evaluate agent's performances \

|

| 126 |

+

in `script/evaluate.py` ([more details](#scripts-evaluate))

|

| 127 |

+

- a default agent's model \

|

| 128 |

+

in `model.py` ([more details](#model))

|

| 129 |

+

- utilitarian classes and functions used by the scripts \

|

| 130 |

+

in `utils`

|

| 131 |

+

|

| 132 |

+

These files are suited for [`gym-minigrid`](https://github.com/maximecb/gym-minigrid) environments and [`torch-ac`](https://github.com/lcswillems/torch-ac) RL algorithms. They are easy to adapt to other environments and RL algorithms by modifying:

|

| 133 |

+

- `model.py`

|

| 134 |

+

- `utils/format.py`

|

| 135 |

+

|

| 136 |

+

<h2 id="scripts-train">scripts/train.py</h2>

|

| 137 |

+

|

| 138 |

+

An example of use:

|

| 139 |

+

|

| 140 |

+

```bash

|

| 141 |

+

python3 -m scripts.train --algo ppo --env MiniGrid-DoorKey-5x5-v0 --model DoorKey --save-interval 10 --frames 80000

|

| 142 |

+

```

|

| 143 |

+

|

| 144 |

+

The script loads the model in `storage/DoorKey` or creates it if it doesn't exist, then trains it with the PPO algorithm on the MiniGrid DoorKey environment, and saves it every 10 updates in `storage/DoorKey`. It stops after 80 000 frames.

|

| 145 |

+

|

| 146 |

+

**Note:** You can define a different storage location in the environment variable `PROJECT_STORAGE`.

|

| 147 |

+

|

| 148 |

+

More generally, the script has 2 required arguments:

|

| 149 |

+

- `--algo ALGO`: name of the RL algorithm used to train

|

| 150 |

+

- `--env ENV`: name of the environment to train on

|

| 151 |

+

|

| 152 |

+

and a bunch of optional arguments among which:

|

| 153 |

+

- `--recurrence N`: gradient will be backpropagated over N timesteps. By default, N = 1. If N > 1, a LSTM is added to the model to have memory.

|

| 154 |

+

- `--text`: a GRU is added to the model to handle text input.

|

| 155 |

+

- ... (see more using `--help`)

|

| 156 |

+

|

| 157 |

+

During training, logs are printed in your terminal (and saved in text and CSV format):

|

| 158 |

+

|

| 159 |

+

<p align="center"><img src="README-rsrc/train-terminal-logs.png"></p>

|

| 160 |

+

|

| 161 |

+

**Note:** `U` gives the update number, `F` the total number of frames, `FPS` the number of frames per second, `D` the total duration, `rR:μσmM` the mean, std, min and max reshaped return per episode, `F:μσmM` the mean, std, min and max number of frames per episode, `H` the entropy, `V` the value, `pL` the policy loss, `vL` the value loss and `∇` the gradient norm.

|

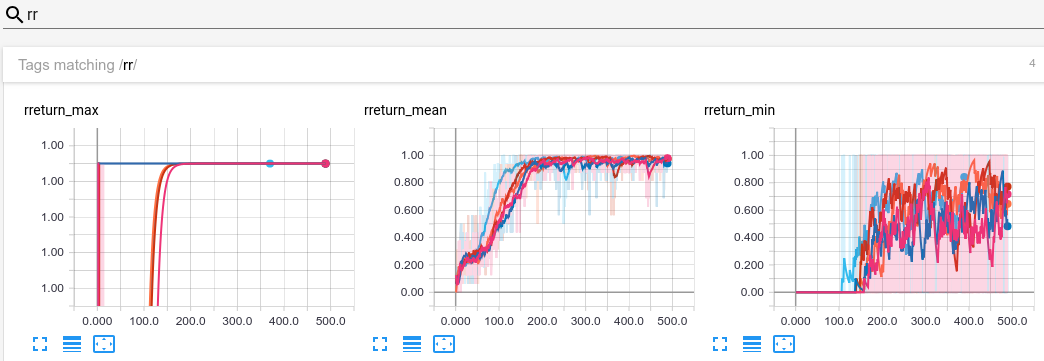

| 162 |

+

|

| 163 |

+

During training, logs are also plotted in Tensorboard:

|

| 164 |

+

|

| 165 |

+

<p><img src="README-rsrc/train-tensorboard.png"></p>

|

| 166 |

+

|

| 167 |

+

<h2 id="scripts-visualize">scripts/visualize.py</h2>

|

| 168 |

+

|

| 169 |

+

An example of use:

|

| 170 |

+

|

| 171 |

+

```

|

| 172 |

+

python3 -m scripts.visualize --env MiniGrid-DoorKey-5x5-v0 --model DoorKey

|

| 173 |

+

```

|

| 174 |

+

|

| 175 |

+

<p align="center"><img src="README-rsrc/visualize-doorkey.gif"></p>

|

| 176 |

+

|

| 177 |

+

In this use case, the script displays how the model in `storage/DoorKey` behaves on the MiniGrid DoorKey environment.

|

| 178 |

+

|

| 179 |

+

More generally, the script has 2 required arguments:

|

| 180 |

+

- `--env ENV`: name of the environment to act on.

|

| 181 |

+

- `--model MODEL`: name of the trained model.

|

| 182 |

+

|

| 183 |

+

and a bunch of optional arguments among which:

|

| 184 |

+

- `--argmax`: select the action with highest probability

|

| 185 |

+

- ... (see more using `--help`)

|

| 186 |

+

|

| 187 |

+

<h2 id="scripts-evaluate">scripts/evaluate.py</h2>

|

| 188 |

+

|

| 189 |

+

An example of use:

|

| 190 |

+

|

| 191 |

+

```

|

| 192 |

+

python3 -m scripts.evaluate --env MiniGrid-DoorKey-5x5-v0 --model DoorKey

|

| 193 |

+

```

|

| 194 |

+

|

| 195 |

+

<p align="center"><img src="README-rsrc/evaluate-terminal-logs.png"></p>

|

| 196 |

+

|

| 197 |

+

In this use case, the script prints in the terminal the performance among 100 episodes of the model in `storage/DoorKey`.

|

| 198 |

+

|

| 199 |

+

More generally, the script has 2 required arguments:

|

| 200 |

+

- `--env ENV`: name of the environment to act on.

|

| 201 |

+

- `--model MODEL`: name of the trained model.

|

| 202 |

+

|

| 203 |

+

and a bunch of optional arguments among which:

|

| 204 |

+

- `--episodes N`: number of episodes of evaluation. By default, N = 100.

|

| 205 |

+

- ... (see more using `--help`)

|

| 206 |

+

|

| 207 |

+

<h2 id="model">model.py</h2>

|

| 208 |

+

|

| 209 |

+

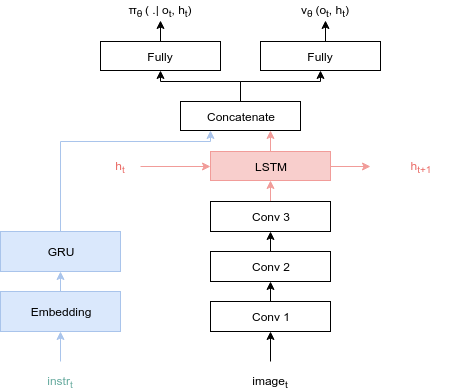

The default model is discribed by the following schema:

|

| 210 |

+

|

| 211 |

+

<p align="center"><img src="README-rsrc/model.png"></p>

|

| 212 |

+

|

| 213 |

+

By default, the memory part (in red) and the langage part (in blue) are disabled. They can be enabled by setting to `True` the `use_memory` and `use_text` parameters of the model constructor.

|

| 214 |

+

|

| 215 |

+

This model can be easily adapted to your needs.

|

autocrop.sh

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

# Loop through all files in the specified directory

|

| 5 |

+

for file in "$@"

|

| 6 |

+

do

|

| 7 |

+

# Check if the file is an image

|

| 8 |

+

if [[ $file == *.jpg || $file == *.png ]]

|

| 9 |

+

then

|

| 10 |

+

# Crop the image using the `convert` command from the ImageMagick suite

|

| 11 |

+

echo "Cropping $file"

|

| 12 |

+

convert $file -trim +repage $file

|

| 13 |

+

fi

|

| 14 |

+

done

|

campain_continuer.py

ADDED

|

@@ -0,0 +1,282 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import sys

|

| 2 |

+

from pathlib import Path

|

| 3 |

+

from datetime import date

|

| 4 |

+

import subprocess

|

| 5 |

+

import shutil

|

| 6 |

+

import os

|

| 7 |

+

import stat

|

| 8 |

+

import getpass

|

| 9 |

+

import re

|

| 10 |

+

import glob

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

def process_arg_string(expe_args): # function to extract flagged (with a *) arguments as details for experience name

|

| 14 |

+

details_string = ''

|

| 15 |

+

processed_arg_string = expe_args.replace('*', '') # keep a version of args cleaned from exp name related flags

|

| 16 |

+

# args = [arg_chunk.split(' -') for arg_chunk in expe_args.split(' --')]

|

| 17 |

+

arg_chunks = [arg_chunk for arg_chunk in expe_args.split(' --')]

|

| 18 |

+

args_list = []

|

| 19 |

+

for arg in arg_chunks:

|

| 20 |

+

if ' -' in arg and arg.split(' -')[1].isalpha():

|

| 21 |

+

args_list.extend(arg.split(' -'))

|

| 22 |

+

else:

|

| 23 |

+

args_list.append(arg)

|

| 24 |

+

# args_list = [item for sublist in args for item in sublist] # flatten

|

| 25 |

+

for arg in args_list:

|

| 26 |

+

if arg == '':

|

| 27 |

+

continue

|

| 28 |

+

if arg[0] == '*':

|

| 29 |

+

if arg[-1] == ' ':

|

| 30 |

+

arg = arg[:-1]

|

| 31 |

+

details_string += '_' + arg[1:].replace(' ', '_').replace('/', '-')

|

| 32 |

+

return details_string, processed_arg_string

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

slurm_confs = {'curta_extra_long': "#SBATCH -p inria\n"

|

| 36 |

+

"#SBATCH -t 119:00:00\n",

|

| 37 |

+

'curta_long': "#SBATCH -p inria\n"

|

| 38 |

+

"#SBATCH -t 72:00:00\n",

|

| 39 |

+

'curta_medium': "#SBATCH -p inria\n"

|

| 40 |

+

"#SBATCH -t 48:00:00\n",

|

| 41 |

+

'curta_short': "#SBATCH -p inria\n"

|

| 42 |

+

"#SBATCH -t 24:00:00\n",

|

| 43 |

+

'jz_super_short_gpu':

|

| 44 |

+

'#SBATCH -A imi@v100\n'

|

| 45 |

+

'#SBATCH --gres=gpu:1\n'

|

| 46 |

+

"#SBATCH -t 9:59:00\n"

|

| 47 |

+

"#SBATCH --qos=qos_gpu-t3\n",

|

| 48 |

+

'jz_short_gpu': '#SBATCH -A imi@v100\n'

|

| 49 |

+

'#SBATCH --gres=gpu:1\n'

|

| 50 |

+

"#SBATCH -t 19:59:00\n"

|

| 51 |

+

"#SBATCH --qos=qos_gpu-t3\n",

|

| 52 |

+

'jz_short_gpu_chained': '#SBATCH -A imi@v100\n'

|

| 53 |

+

'#SBATCH --gres=gpu:1\n'

|

| 54 |

+

"#SBATCH -t 19:59:00\n"

|

| 55 |

+

"#SBATCH --qos=qos_gpu-t3\n",

|

| 56 |

+

'jz_short_2gpus_chained': '#SBATCH -A imi@v100\n'

|

| 57 |

+

'#SBATCH --gres=gpu:2\n'

|

| 58 |

+

"#SBATCH -t 19:59:00\n"

|

| 59 |

+

"#SBATCH --qos=qos_gpu-t3\n",

|

| 60 |

+

'jz_medium_gpu': '#SBATCH -A imi@v100\n'

|

| 61 |

+

'#SBATCH --gres=gpu:1\n'

|

| 62 |

+

"#SBATCH -t 48:00:00\n"

|

| 63 |

+

"#SBATCH --qos=qos_gpu-t4\n",

|

| 64 |

+

'jz_super_short_2gpus': '#SBATCH -A imi@v100\n'

|

| 65 |

+

'#SBATCH --gres=gpu:2\n'

|

| 66 |

+

"#SBATCH -t 14:59:00\n"

|

| 67 |

+

"#SBATCH --qos=qos_gpu-t3\n",

|

| 68 |

+

'jz_short_2gpus': '#SBATCH -A imi@v100\n'

|

| 69 |

+

'#SBATCH --gres=gpu:2\n'

|

| 70 |

+

"#SBATCH -t 19:59:00\n"

|

| 71 |

+

"#SBATCH --qos=qos_gpu-t3\n",

|

| 72 |

+

'jz_short_2gpus_32g': '#SBATCH -A imi@v100\n'

|

| 73 |

+

'#SBATCH -C v100-32g\n'

|

| 74 |

+

'#SBATCH --gres=gpu:2\n'

|

| 75 |

+

"#SBATCH -t 19:59:00\n"

|

| 76 |

+

"#SBATCH --qos=qos_gpu-t3\n",

|

| 77 |

+

'jz_medium_2gpus': '#SBATCH -A imi@v100\n'

|

| 78 |

+

'#SBATCH --gres=gpu:2\n'

|

| 79 |

+

"#SBATCH -t 48:00:00\n"

|

| 80 |

+

"#SBATCH --qos=qos_gpu-t4\n",

|

| 81 |

+

'jz_medium_2gpus_32g': '#SBATCH -A imi@v100\n'

|

| 82 |

+

'#SBATCH -C v100-32g\n'

|

| 83 |

+

'#SBATCH --gres=gpu:2\n'

|

| 84 |

+

"#SBATCH -t 48:00:00\n"

|

| 85 |

+

"#SBATCH --qos=qos_gpu-t4\n",

|

| 86 |

+

'jz_long_gpu': '#SBATCH -A imi@v100\n'

|

| 87 |

+

'#SBATCH --gres=gpu:1\n'

|

| 88 |

+

"#SBATCH -t 72:00:00\n"

|

| 89 |

+

"#SBATCH --qos=qos_gpu-t4\n",

|

| 90 |

+

'jz_long_2gpus': '#SBATCH -A imi@v100\n'

|

| 91 |

+

'#SBATCH --gres=gpu:2\n'

|

| 92 |

+

'#SBATCH -t 72:00:00\n'

|

| 93 |

+

'#SBATCH --qos=qos_gpu-t4\n',

|

| 94 |

+

'jz_long_2gpus_32g': '#SBATCH -A imi@v100\n'

|

| 95 |

+

'#SBATCH -C v100-32g\n'

|

| 96 |

+

'#SBATCH --gres=gpu:2\n'

|

| 97 |

+

"#SBATCH -t 72:00:00\n"

|

| 98 |

+

"#SBATCH --qos=qos_gpu-t4\n",

|

| 99 |

+

'jz_super_long_2gpus_32g': '#SBATCH -A imi@v100\n'

|

| 100 |

+

'#SBATCH -C v100-32g\n'

|

| 101 |

+

'#SBATCH --gres=gpu:2\n'

|

| 102 |

+

"#SBATCH -t 99:00:00\n"

|

| 103 |

+

"#SBATCH --qos=qos_gpu-t4\n",

|

| 104 |

+

'jz_short_cpu': '#SBATCH -A imi@cpu\n'

|

| 105 |

+

"#SBATCH -t 19:59:00\n"

|

| 106 |

+

"#SBATCH --qos=qos_cpu-t3\n",

|

| 107 |

+

'jz_medium_cpu': '#SBATCH -A imi@cpu\n'

|

| 108 |

+

"#SBATCH -t 48:00:00\n"

|

| 109 |

+

"#SBATCH --qos=qos_cpu-t4\n",

|

| 110 |

+

'jz_long_cpu': '#SBATCH -A imi@cpu\n'

|

| 111 |

+

"#SBATCH -t 72:00:00\n"

|

| 112 |

+

"#SBATCH --qos=qos_cpu-t4\n",

|

| 113 |

+

'plafrim_cpu_medium': "#SBATCH -t 48:00:00\n",

|

| 114 |

+

'plafrim_cpu_long': "#SBATCH -t 72:00:00\n",

|

| 115 |

+

'plafrim_gpu_medium': '#SBATCH -p long_sirocco\n'

|

| 116 |

+

"#SBATCH -t 48:00:00\n"

|

| 117 |

+

'#SBATCH --gres=gpu:1\n'

|

| 118 |

+

}

|

| 119 |

+

|

| 120 |

+

cur_path = str(Path.cwd())

|

| 121 |

+

date = date.today().strftime("%d-%m")

|

| 122 |

+

# create campain log dir if not already done

|

| 123 |

+

Path(cur_path + "/campain_logs/jobouts/").mkdir(parents=True, exist_ok=True)

|

| 124 |

+

Path(cur_path + "/campain_logs/scripts/").mkdir(parents=True, exist_ok=True)

|

| 125 |

+

# Load txt file containing experiments to run (give it as argument to this script)

|

| 126 |

+

filename = 'to_run.txt'

|

| 127 |

+

if len(sys.argv) >= 2:

|

| 128 |

+

filename = sys.argv[1]

|

| 129 |

+

launch = True

|

| 130 |

+

# Save a copy of txt file

|

| 131 |

+

shutil.copyfile(cur_path + "/" + filename, cur_path + '/campain_logs/scripts/' + date + '_' + filename)

|

| 132 |

+

|

| 133 |

+

# one_launch_per_n_seeds = 8

|

| 134 |

+

one_launch_per_n_seeds = 4

|

| 135 |

+

|

| 136 |

+

global_seed_offset = 0

|

| 137 |

+

incremental = False

|

| 138 |

+

if len(sys.argv) >= 3:

|

| 139 |

+

if sys.argv[2] == 'nolaunch':

|

| 140 |

+

launch = False

|

| 141 |

+

if sys.argv[2] == 'seed_offset':

|

| 142 |

+

global_seed_offset = int(sys.argv[3])

|

| 143 |

+

if sys.argv[2] == 'incremental_seed_offset':

|

| 144 |

+

global_seed_offset = int(sys.argv[3])

|

| 145 |

+

incremental = True

|

| 146 |

+

if launch:

|

| 147 |

+

print('Creating and Launching slurm scripts given arguments from {}'.format(filename))

|

| 148 |

+

# time.sleep(1.0)

|

| 149 |

+

expe_list = []

|

| 150 |

+

with open(filename, 'r') as f:

|

| 151 |

+

expe_list = [line.rstrip() for line in f]

|

| 152 |

+

|

| 153 |

+

exp_names = set()

|

| 154 |

+

for expe_args in expe_list:

|

| 155 |

+

seed_offset_to_use = global_seed_offset

|

| 156 |

+

|

| 157 |

+

if len(expe_args) == 0:

|

| 158 |

+

# empty line

|

| 159 |

+

continue

|

| 160 |

+

|

| 161 |

+

if expe_args[0] == '#':

|

| 162 |

+

# comment line

|

| 163 |

+

continue

|

| 164 |

+

|

| 165 |

+

exp_config = expe_args.split('--')[1:5]

|

| 166 |

+

|

| 167 |

+

if not [arg.split(' ')[0] for arg in exp_config] == ['slurm_conf', 'nb_seeds', 'frames', 'model']:

|

| 168 |

+

raise ValueError("Arguments must be in the following order {}".format(

|

| 169 |

+

['slurm_conf', 'nb_seeds', 'frames', 'model']))

|

| 170 |

+

|

| 171 |

+

slurm_conf_name, nb_seeds, frames, exp_name = [arg.split(' ')[1] for arg in exp_config]

|

| 172 |

+

|

| 173 |

+

user = getpass.getuser()

|

| 174 |

+

if 'curta' in slurm_conf_name:

|

| 175 |

+

gpu = ''

|

| 176 |

+

PYTHON_INTERP = "$HOME/anaconda3/envs/act_and_speak/bin/python"

|

| 177 |

+

n_cpus = 1

|

| 178 |

+

elif 'plafrim' in slurm_conf_name:

|

| 179 |

+

gpu = ''

|

| 180 |

+

PYTHON_INTERP = '/home/{}/USER/conda/envs/act_and_speak/bin/python'.format(user)

|

| 181 |

+

n_cpus = 1

|

| 182 |

+

elif 'jz' in slurm_conf_name:

|

| 183 |

+

|

| 184 |

+

if user == "utu57ed":

|

| 185 |

+

PYTHON_INTERP='/gpfsscratch/rech/imi/{}/miniconda3/envs/social_ai/bin/python'.format(user)

|

| 186 |

+

elif user == "uxo14qj":

|

| 187 |

+

PYTHON_INTERP='/gpfswork/rech/imi/{}/miniconda3/envs/act_and_speak/bin/python'.format(user)

|

| 188 |

+

else:

|

| 189 |

+

if user != "flowers":

|

| 190 |

+

raise ValueError("Who are you? User {} unknown.".format(user))

|

| 191 |

+

|

| 192 |

+

gpu = '' # '--gpu_id 0'

|

| 193 |

+

n_cpus = 2

|

| 194 |

+

|

| 195 |

+

n_cpus = 4

|

| 196 |

+

assert n_cpus*one_launch_per_n_seeds == 16 # cpus_per_task is 8 will result in 16 cpus

|

| 197 |

+

else:

|

| 198 |

+

raise Exception("Unrecognized conf name: {} ".format(slurm_conf_name))

|

| 199 |

+

|

| 200 |

+

# assert ((int(nb_seeds) % 8) == 0), 'number of seeds should be divisible by 8'

|

| 201 |

+

assert ((int(nb_seeds) % 4) == 0), 'number of seeds should be divisible by 8'

|

| 202 |

+

run_args = expe_args.split(exp_name, 1)[

|

| 203 |

+

1] # WARNING: assumes that exp_name comes after slurm_conf and nb_seeds and frames in txt

|

| 204 |

+

|

| 205 |

+

# prepare experiment name formatting (use --* or -* instead of -- or - to use argument in experiment name

|

| 206 |

+

# print(expe_args.split(exp_name))

|

| 207 |

+

exp_details, run_args = process_arg_string(run_args)

|

| 208 |

+

exp_name = date + '_' + exp_name + exp_details

|

| 209 |

+

|

| 210 |

+

# no two trains are to be put in the same dir

|

| 211 |

+

assert exp_names not in exp_names

|

| 212 |

+

exp_names.add(exp_name)

|

| 213 |

+

|

| 214 |

+

slurm_script_fullname = cur_path + "/campain_logs/scripts/{}".format(exp_name) + ".sh"

|

| 215 |

+

# create corresponding slurm script

|

| 216 |

+

|

| 217 |

+

# calculate how many chained jobs we need

|

| 218 |

+

chained_training = "chained" in slurm_conf_name

|

| 219 |

+

frames = int(frames)

|

| 220 |

+

|

| 221 |

+

if chained_training:

|

| 222 |

+

# assume 10M frames per 20h (fps 140 - very conservative)

|

| 223 |

+

timelimit = slurm_confs[slurm_conf_name].split("-t ")[-1].split("\n")[0]

|

| 224 |

+

assert timelimit == '19:59:00'

|

| 225 |

+

one_script_frames = 10000000

|

| 226 |

+

print(f"One script frames: {one_script_frames}")

|

| 227 |

+

|

| 228 |

+

num_chained_jobs = frames // one_script_frames + bool(frames % one_script_frames)

|

| 229 |

+

|

| 230 |

+

else:

|

| 231 |

+

one_script_frames = frames

|

| 232 |

+

num_chained_jobs = 1 # no chaining

|

| 233 |

+

|

| 234 |

+

assert "--frames " not in run_args

|

| 235 |

+

|

| 236 |

+

current_script_frames = min(one_script_frames, frames)

|

| 237 |

+

|

| 238 |

+

# launch scripts (1 launch per 4 seeds)

|

| 239 |

+

if launch:

|

| 240 |

+

for i in range(int(nb_seeds) // one_launch_per_n_seeds):

|

| 241 |

+

|

| 242 |

+

# continue jobs

|

| 243 |

+

cont_job_i = num_chained_jobs # last job

|

| 244 |

+

|

| 245 |

+

exp_name_no_date = exp_name[5:]

|

| 246 |

+

continue_slurm_script_fullname = cur_path + "/campain_logs/scripts/*{}_continue_{}".format(exp_name_no_date, "*")

|

| 247 |

+

matched_scripts = glob.glob(continue_slurm_script_fullname)

|

| 248 |

+

matched_scripts.sort(key=os.path.getctime)

|

| 249 |

+

|

| 250 |

+

for last_script in reversed(matched_scripts):

|

| 251 |

+

# start from the latest written script and start the first encountered that has a err file (that was ran)

|

| 252 |

+

|

| 253 |

+

p = re.compile("continue_(.*).sh")

|

| 254 |

+

last_job_id = int(p.search(last_script).group(1))

|

| 255 |

+

|

| 256 |

+

last_script_name = os.path.basename(last_script)[:-3].replace("_continue_", "_cont_")

|

| 257 |

+

if len(glob.glob(cur_path + "/campain_logs/jobouts/"+last_script_name+"*.sh.err")) == 1:

|

| 258 |

+

# error file found -> script was ran -> this is the script that crashed

|

| 259 |

+

break

|

| 260 |

+

|

| 261 |

+

print(f"Continuing job id: {last_job_id}")

|

| 262 |

+

# last_err_log = glob.glob(cur_path + "/campain_logs/jobouts/"+last_script_name+"*.sh.err")[0]

|

| 263 |

+

#

|

| 264 |

+

# print("Then ended with:\n")

|

| 265 |

+

# print('"""\n')

|

| 266 |

+

# for l in open(last_err_log).readlines():

|

| 267 |

+

# print("\t"+l, end='')

|

| 268 |

+

# print('"""\n')

|

| 269 |

+

|

| 270 |

+

# write continue script

|

| 271 |

+

cont_script_name = "{}_continue_{}.sh".format(exp_name, last_job_id)

|

| 272 |

+

continue_slurm_script_fullname = cur_path + "/campain_logs/scripts/"+cont_script_name

|

| 273 |

+

|

| 274 |

+

current_script_frames = min(one_script_frames*(2+cont_job_i), frames)

|

| 275 |

+

# run continue job

|

| 276 |

+

sbatch_pipe = subprocess.Popen(

|

| 277 |

+

['sbatch', 'campain_logs/scripts/{}'.format(os.path.basename(last_script)), str((i * one_launch_per_n_seeds) + seed_offset_to_use)], # 0 4 8 12

|

| 278 |

+

stdout=subprocess.PIPE

|

| 279 |

+

)

|

| 280 |

+

|

| 281 |

+

if incremental:

|

| 282 |

+

global_seed_offset += int(nb_seeds)

|

campain_launcher.py

ADDED

|

@@ -0,0 +1,488 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|