Spaces:

Running

Running

Migrated from GitHub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +12 -0

- INSTALL.md +54 -0

- LICENSE.txt +201 -0

- ORIGINAL_README.md +663 -0

- assets/comp_effic.png +3 -0

- assets/data_for_diff_stage.jpg +3 -0

- assets/i2v_res.png +3 -0

- assets/logo.png +0 -0

- assets/t2v_res.jpg +3 -0

- assets/vben_vs_sota.png +3 -0

- assets/video_dit_arch.jpg +3 -0

- assets/video_vae_res.jpg +3 -0

- examples/flf2v_input_first_frame.png +3 -0

- examples/flf2v_input_last_frame.png +3 -0

- examples/girl.png +3 -0

- examples/i2v_input.JPG +3 -0

- examples/snake.png +3 -0

- generate.py +572 -0

- gradio/fl2v_14B_singleGPU.py +252 -0

- gradio/i2v_14B_singleGPU.py +287 -0

- gradio/t2i_14B_singleGPU.py +205 -0

- gradio/t2v_1.3B_singleGPU.py +207 -0

- gradio/t2v_14B_singleGPU.py +205 -0

- gradio/vace.py +295 -0

- pyproject.toml +67 -0

- requirements.txt +16 -0

- tests/README.md +6 -0

- tests/test.sh +120 -0

- wan/__init__.py +5 -0

- wan/configs/__init__.py +53 -0

- wan/configs/shared_config.py +19 -0

- wan/configs/wan_i2v_14B.py +36 -0

- wan/configs/wan_t2v_14B.py +29 -0

- wan/configs/wan_t2v_1_3B.py +29 -0

- wan/distributed/__init__.py +0 -0

- wan/distributed/fsdp.py +41 -0

- wan/distributed/xdit_context_parallel.py +230 -0

- wan/first_last_frame2video.py +370 -0

- wan/image2video.py +347 -0

- wan/modules/__init__.py +18 -0

- wan/modules/attention.py +179 -0

- wan/modules/clip.py +542 -0

- wan/modules/model.py +630 -0

- wan/modules/t5.py +513 -0

- wan/modules/tokenizers.py +82 -0

- wan/modules/vace_model.py +233 -0

- wan/modules/vae.py +663 -0

- wan/modules/xlm_roberta.py +170 -0

- wan/text2video.py +267 -0

- wan/utils/__init__.py +10 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,15 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

assets/comp_effic.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

assets/data_for_diff_stage.jpg filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

assets/i2v_res.png filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

assets/t2v_res.jpg filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

assets/vben_vs_sota.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

assets/video_dit_arch.jpg filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

assets/video_vae_res.jpg filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

examples/flf2v_input_first_frame.png filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

examples/flf2v_input_last_frame.png filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

examples/girl.png filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

examples/i2v_input.JPG filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

examples/snake.png filter=lfs diff=lfs merge=lfs -text

|

INSTALL.md

ADDED

|

@@ -0,0 +1,54 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Installation Guide

|

| 2 |

+

|

| 3 |

+

## Install with pip

|

| 4 |

+

|

| 5 |

+

```bash

|

| 6 |

+

pip install .

|

| 7 |

+

pip install .[dev] # Installe aussi les outils de dev

|

| 8 |

+

```

|

| 9 |

+

|

| 10 |

+

## Install with Poetry

|

| 11 |

+

|

| 12 |

+

Ensure you have [Poetry](https://python-poetry.org/docs/#installation) installed on your system.

|

| 13 |

+

|

| 14 |

+

To install all dependencies:

|

| 15 |

+

|

| 16 |

+

```bash

|

| 17 |

+

poetry install

|

| 18 |

+

```

|

| 19 |

+

|

| 20 |

+

### Handling `flash-attn` Installation Issues

|

| 21 |

+

|

| 22 |

+

If `flash-attn` fails due to **PEP 517 build issues**, you can try one of the following fixes.

|

| 23 |

+

|

| 24 |

+

#### No-Build-Isolation Installation (Recommended)

|

| 25 |

+

```bash

|

| 26 |

+

poetry run pip install --upgrade pip setuptools wheel

|

| 27 |

+

poetry run pip install flash-attn --no-build-isolation

|

| 28 |

+

poetry install

|

| 29 |

+

```

|

| 30 |

+

|

| 31 |

+

#### Install from Git (Alternative)

|

| 32 |

+

```bash

|

| 33 |

+

poetry run pip install git+https://github.com/Dao-AILab/flash-attention.git

|

| 34 |

+

```

|

| 35 |

+

|

| 36 |

+

---

|

| 37 |

+

|

| 38 |

+

### Running the Model

|

| 39 |

+

|

| 40 |

+

Once the installation is complete, you can run **Wan2.1** using:

|

| 41 |

+

|

| 42 |

+

```bash

|

| 43 |

+

poetry run python generate.py --task t2v-14B --size '1280x720' --ckpt_dir ./Wan2.1-T2V-14B --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

|

| 44 |

+

```

|

| 45 |

+

|

| 46 |

+

#### Test

|

| 47 |

+

```bash

|

| 48 |

+

pytest tests/

|

| 49 |

+

```

|

| 50 |

+

#### Format

|

| 51 |

+

```bash

|

| 52 |

+

black .

|

| 53 |

+

isort .

|

| 54 |

+

```

|

LICENSE.txt

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

ORIGINAL_README.md

ADDED

|

@@ -0,0 +1,663 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Wan2.1

|

| 2 |

+

|

| 3 |

+

<p align="center">

|

| 4 |

+

<img src="assets/logo.png" width="400"/>

|

| 5 |

+

<p>

|

| 6 |

+

|

| 7 |

+

<p align="center">

|

| 8 |

+

💜 <a href="https://wan.video"><b>Wan</b></a>    |    🖥️ <a href="https://github.com/Wan-Video/Wan2.1">GitHub</a>    |   🤗 <a href="https://huggingface.co/Wan-AI/">Hugging Face</a>   |   🤖 <a href="https://modelscope.cn/organization/Wan-AI">ModelScope</a>   |    📑 <a href="https://arxiv.org/abs/2503.20314">Technical Report</a>    |    📑 <a href="https://wan.video/welcome?spm=a2ty_o02.30011076.0.0.6c9ee41eCcluqg">Blog</a>    |   💬 <a href="https://gw.alicdn.com/imgextra/i2/O1CN01tqjWFi1ByuyehkTSB_!!6000000000015-0-tps-611-1279.jpg">WeChat Group</a>   |    📖 <a href="https://discord.gg/AKNgpMK4Yj">Discord</a>

|

| 9 |

+

<br>

|

| 10 |

+

|

| 11 |

+

-----

|

| 12 |

+

|

| 13 |

+

[**Wan: Open and Advanced Large-Scale Video Generative Models**](https://arxiv.org/abs/2503.20314) <be>

|

| 14 |

+

|

| 15 |

+

In this repository, we present **Wan2.1**, a comprehensive and open suite of video foundation models that pushes the boundaries of video generation. **Wan2.1** offers these key features:

|

| 16 |

+

- 👍 **SOTA Performance**: **Wan2.1** consistently outperforms existing open-source models and state-of-the-art commercial solutions across multiple benchmarks.

|

| 17 |

+

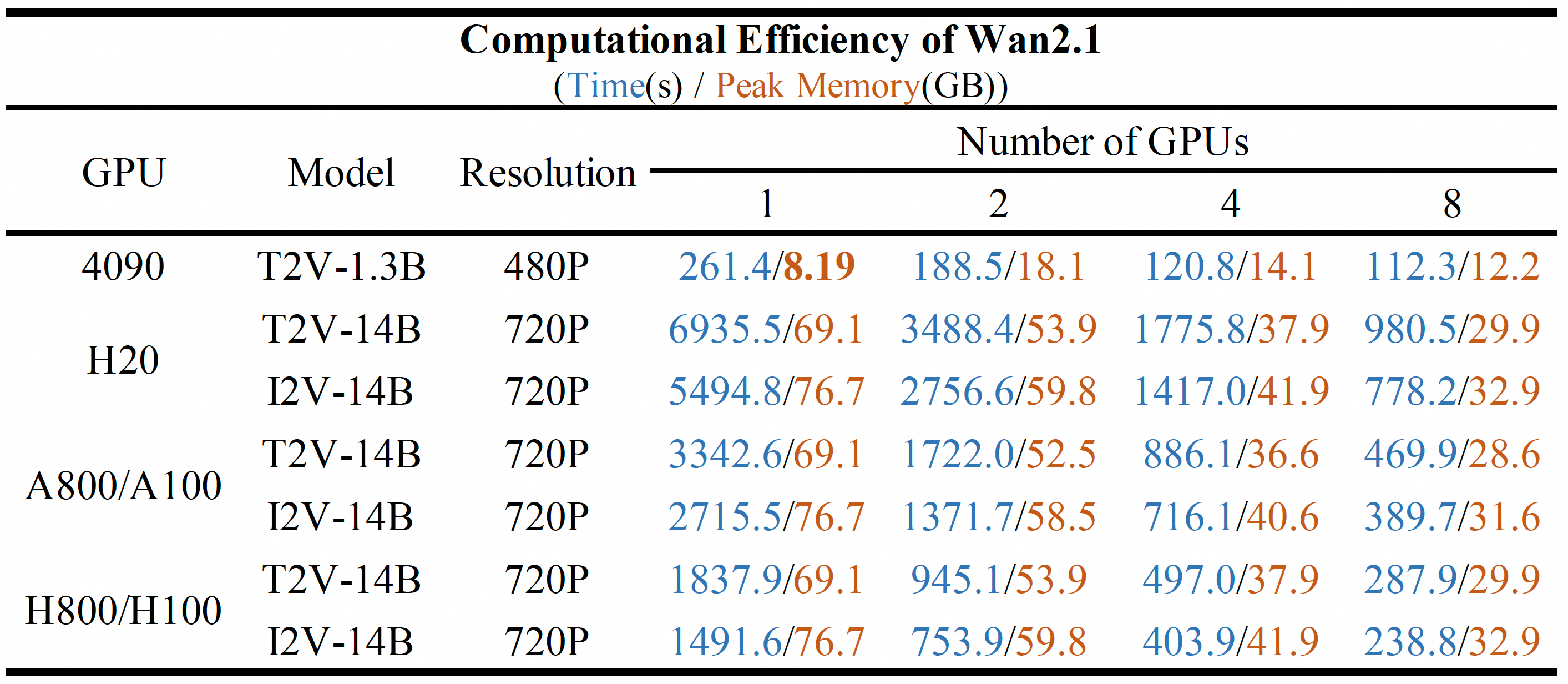

- 👍 **Supports Consumer-grade GPUs**: The T2V-1.3B model requires only 8.19 GB VRAM, making it compatible with almost all consumer-grade GPUs. It can generate a 5-second 480P video on an RTX 4090 in about 4 minutes (without optimization techniques like quantization). Its performance is even comparable to some closed-source models.

|

| 18 |

+

- 👍 **Multiple Tasks**: **Wan2.1** excels in Text-to-Video, Image-to-Video, Video Editing, Text-to-Image, and Video-to-Audio, advancing the field of video generation.

|

| 19 |

+

- 👍 **Visual Text Generation**: **Wan2.1** is the first video model capable of generating both Chinese and English text, featuring robust text generation that enhances its practical applications.

|

| 20 |

+

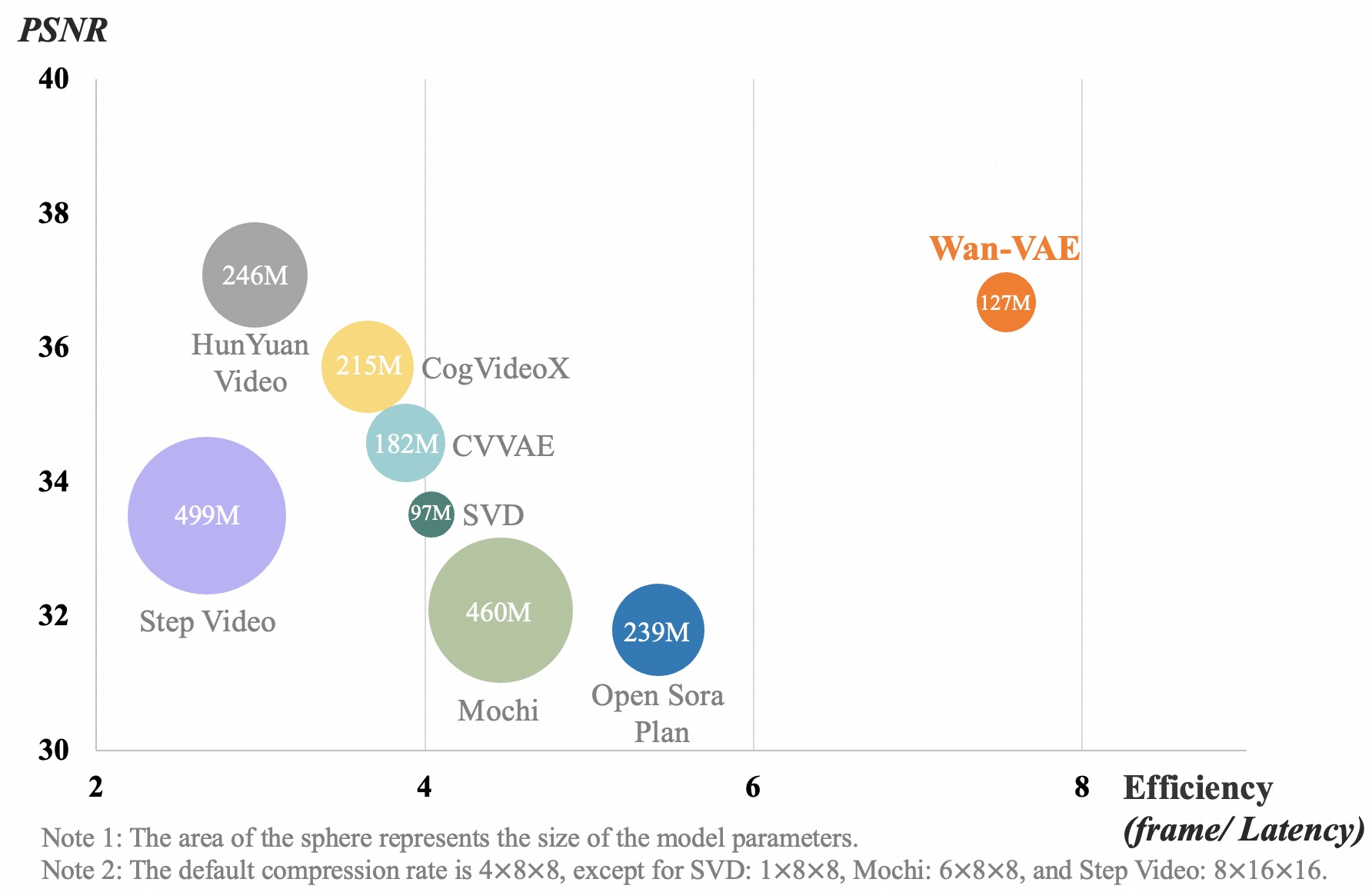

- 👍 **Powerful Video VAE**: **Wan-VAE** delivers exceptional efficiency and performance, encoding and decoding 1080P videos of any length while preserving temporal information, making it an ideal foundation for video and image generation.

|

| 21 |

+

|

| 22 |

+

## Video Demos

|

| 23 |

+

|

| 24 |

+

<div align="center">

|

| 25 |

+

<video src="https://github.com/user-attachments/assets/4aca6063-60bf-4953-bfb7-e265053f49ef" width="70%" poster=""> </video>

|

| 26 |

+

</div>

|

| 27 |

+

|

| 28 |

+

## 🔥 Latest News!!

|

| 29 |

+

|

| 30 |

+

* May 14, 2025: 👋 We introduce **Wan2.1** [VACE](https://github.com/ali-vilab/VACE), an all-in-one model for video creation and editing, along with its [inference code](#run-vace), [weights](#model-download), and [technical report](https://arxiv.org/abs/2503.07598)!

|

| 31 |

+

* Apr 17, 2025: 👋 We introduce **Wan2.1** [FLF2V](#run-first-last-frame-to-video-generation) with its inference code and weights!

|

| 32 |

+

* Mar 21, 2025: 👋 We are excited to announce the release of the **Wan2.1** [technical report](https://files.alicdn.com/tpsservice/5c9de1c74de03972b7aa657e5a54756b.pdf). We welcome discussions and feedback!

|

| 33 |

+

* Mar 3, 2025: 👋 **Wan2.1**'s T2V and I2V have been integrated into Diffusers ([T2V](https://huggingface.co/docs/diffusers/main/en/api/pipelines/wan#diffusers.WanPipeline) | [I2V](https://huggingface.co/docs/diffusers/main/en/api/pipelines/wan#diffusers.WanImageToVideoPipeline)). Feel free to give it a try!

|

| 34 |

+

* Feb 27, 2025: 👋 **Wan2.1** has been integrated into [ComfyUI](https://comfyanonymous.github.io/ComfyUI_examples/wan/). Enjoy!

|

| 35 |

+

* Feb 25, 2025: 👋 We've released the inference code and weights of **Wan2.1**.

|

| 36 |

+

|

| 37 |

+

## Community Works

|

| 38 |

+

If your work has improved **Wan2.1** and you would like more people to see it, please inform us.

|

| 39 |

+

- [Phantom](https://github.com/Phantom-video/Phantom) has developed a unified video generation framework for single and multi-subject references based on **Wan2.1-T2V-1.3B**. Please refer to [their examples](https://github.com/Phantom-video/Phantom).

|

| 40 |

+

- [UniAnimate-DiT](https://github.com/ali-vilab/UniAnimate-DiT), based on **Wan2.1-14B-I2V**, has trained a Human image animation model and has open-sourced the inference and training code. Feel free to enjoy it!

|

| 41 |

+

- [CFG-Zero](https://github.com/WeichenFan/CFG-Zero-star) enhances **Wan2.1** (covering both T2V and I2V models) from the perspective of CFG.

|

| 42 |

+

- [TeaCache](https://github.com/ali-vilab/TeaCache) now supports **Wan2.1** acceleration, capable of increasing speed by approximately 2x. Feel free to give it a try!

|

| 43 |

+

- [DiffSynth-Studio](https://github.com/modelscope/DiffSynth-Studio) provides more support for **Wan2.1**, including video-to-video, FP8 quantization, VRAM optimization, LoRA training, and more. Please refer to [their examples](https://github.com/modelscope/DiffSynth-Studio/tree/main/examples/wanvideo).

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

## 📑 Todo List

|

| 47 |

+

- Wan2.1 Text-to-Video

|

| 48 |

+

- [x] Multi-GPU Inference code of the 14B and 1.3B models

|

| 49 |

+

- [x] Checkpoints of the 14B and 1.3B models

|

| 50 |

+

- [x] Gradio demo

|

| 51 |

+

- [x] ComfyUI integration

|

| 52 |

+

- [x] Diffusers integration

|

| 53 |

+

- [ ] Diffusers + Multi-GPU Inference

|

| 54 |

+

- Wan2.1 Image-to-Video

|

| 55 |

+

- [x] Multi-GPU Inference code of the 14B model

|

| 56 |

+

- [x] Checkpoints of the 14B model

|

| 57 |

+

- [x] Gradio demo

|

| 58 |

+

- [x] ComfyUI integration

|

| 59 |

+

- [x] Diffusers integration

|

| 60 |

+

- [ ] Diffusers + Multi-GPU Inference

|

| 61 |

+

- Wan2.1 First-Last-Frame-to-Video

|

| 62 |

+

- [x] Multi-GPU Inference code of the 14B model

|

| 63 |

+

- [x] Checkpoints of the 14B model

|

| 64 |

+

- [x] Gradio demo

|

| 65 |

+

- [ ] ComfyUI integration

|

| 66 |

+

- [ ] Diffusers integration

|

| 67 |

+

- [ ] Diffusers + Multi-GPU Inference

|

| 68 |

+

- Wan2.1 VACE

|

| 69 |

+

- [x] Multi-GPU Inference code of the 14B and 1.3B models

|

| 70 |

+

- [x] Checkpoints of the 14B and 1.3B models

|

| 71 |

+

- [x] Gradio demo

|

| 72 |

+

- [x] ComfyUI integration

|

| 73 |

+

- [ ] Diffusers integration

|

| 74 |

+

- [ ] Diffusers + Multi-GPU Inference

|

| 75 |

+

|

| 76 |

+

## Quickstart

|

| 77 |

+

|

| 78 |

+

#### Installation

|

| 79 |

+

Clone the repo:

|

| 80 |

+

```sh

|

| 81 |

+

git clone https://github.com/Wan-Video/Wan2.1.git

|

| 82 |

+

cd Wan2.1

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

Install dependencies:

|

| 86 |

+

```sh

|

| 87 |

+

# Ensure torch >= 2.4.0

|

| 88 |

+

pip install -r requirements.txt

|

| 89 |

+

```

|

| 90 |

+

|

| 91 |

+

|

| 92 |

+

#### Model Download

|

| 93 |

+

|

| 94 |

+

| Models | Download Link | Notes |

|

| 95 |

+

|--------------|---------------------------------------------------------------------------------------------------------------------------------------------------------|-------------------------------|

|

| 96 |

+

| T2V-14B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.1-T2V-14B) 🤖 [ModelScope](https://www.modelscope.cn/models/Wan-AI/Wan2.1-T2V-14B) | Supports both 480P and 720P

|

| 97 |

+

| I2V-14B-720P | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.1-I2V-14B-720P) 🤖 [ModelScope](https://www.modelscope.cn/models/Wan-AI/Wan2.1-I2V-14B-720P) | Supports 720P

|

| 98 |

+

| I2V-14B-480P | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.1-I2V-14B-480P) 🤖 [ModelScope](https://www.modelscope.cn/models/Wan-AI/Wan2.1-I2V-14B-480P) | Supports 480P

|

| 99 |

+

| T2V-1.3B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.1-T2V-1.3B) 🤖 [ModelScope](https://www.modelscope.cn/models/Wan-AI/Wan2.1-T2V-1.3B) | Supports 480P

|

| 100 |

+

| FLF2V-14B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.1-FLF2V-14B-720P) 🤖 [ModelScope](https://www.modelscope.cn/models/Wan-AI/Wan2.1-FLF2V-14B-720P) | Supports 720P

|

| 101 |

+

| VACE-1.3B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.1-VACE-1.3B) 🤖 [ModelScope](https://www.modelscope.cn/models/Wan-AI/Wan2.1-VACE-1.3B) | Supports 480P

|

| 102 |

+

| VACE-14B | 🤗 [Huggingface](https://huggingface.co/Wan-AI/Wan2.1-VACE-14B) 🤖 [ModelScope](https://www.modelscope.cn/models/Wan-AI/Wan2.1-VACE-14B) | Supports both 480P and 720P

|

| 103 |

+

|

| 104 |

+

> 💡Note:

|

| 105 |

+

> * The 1.3B model is capable of generating videos at 720P resolution. However, due to limited training at this resolution, the results are generally less stable compared to 480P. For optimal performance, we recommend using 480P resolution.

|

| 106 |

+

> * For the first-last frame to video generation, we train our model primarily on Chinese text-video pairs. Therefore, we recommend using Chinese prompt to achieve better results.

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

Download models using huggingface-cli:

|

| 110 |

+

``` sh

|

| 111 |

+

pip install "huggingface_hub[cli]"

|

| 112 |

+

huggingface-cli download Wan-AI/Wan2.1-T2V-14B --local-dir ./Wan2.1-T2V-14B

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

Download models using modelscope-cli:

|

| 116 |

+

``` sh

|

| 117 |

+

pip install modelscope

|

| 118 |

+

modelscope download Wan-AI/Wan2.1-T2V-14B --local_dir ./Wan2.1-T2V-14B

|

| 119 |

+

```

|

| 120 |

+

#### Run Text-to-Video Generation

|

| 121 |

+

|

| 122 |

+

This repository supports two Text-to-Video models (1.3B and 14B) and two resolutions (480P and 720P). The parameters and configurations for these models are as follows:

|

| 123 |

+

|

| 124 |

+

<table>

|

| 125 |

+

<thead>

|

| 126 |

+

<tr>

|

| 127 |

+

<th rowspan="2">Task</th>

|

| 128 |

+

<th colspan="2">Resolution</th>

|

| 129 |

+

<th rowspan="2">Model</th>

|

| 130 |

+

</tr>

|

| 131 |

+

<tr>

|

| 132 |

+

<th>480P</th>

|

| 133 |

+

<th>720P</th>

|

| 134 |

+

</tr>

|

| 135 |

+

</thead>

|

| 136 |

+

<tbody>

|

| 137 |

+

<tr>

|

| 138 |

+

<td>t2v-14B</td>

|

| 139 |

+

<td style="color: green;">✔️</td>

|

| 140 |

+

<td style="color: green;">✔️</td>

|

| 141 |

+

<td>Wan2.1-T2V-14B</td>

|

| 142 |

+

</tr>

|

| 143 |

+

<tr>

|

| 144 |

+

<td>t2v-1.3B</td>

|

| 145 |

+

<td style="color: green;">✔️</td>

|

| 146 |

+

<td style="color: red;">❌</td>

|

| 147 |

+

<td>Wan2.1-T2V-1.3B</td>

|

| 148 |

+

</tr>

|

| 149 |

+

</tbody>

|

| 150 |

+

</table>

|

| 151 |

+

|

| 152 |

+

|

| 153 |

+

##### (1) Without Prompt Extension

|

| 154 |

+

|

| 155 |

+

To facilitate implementation, we will start with a basic version of the inference process that skips the [prompt extension](#2-using-prompt-extention) step.

|

| 156 |

+

|

| 157 |

+

- Single-GPU inference

|

| 158 |

+

|

| 159 |

+

``` sh

|

| 160 |

+

python generate.py --task t2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-T2V-14B --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

|

| 161 |

+

```

|

| 162 |

+

|

| 163 |

+

If you encounter OOM (Out-of-Memory) issues, you can use the `--offload_model True` and `--t5_cpu` options to reduce GPU memory usage. For example, on an RTX 4090 GPU:

|

| 164 |

+

|

| 165 |

+

``` sh

|

| 166 |

+

python generate.py --task t2v-1.3B --size 832*480 --ckpt_dir ./Wan2.1-T2V-1.3B --offload_model True --t5_cpu --sample_shift 8 --sample_guide_scale 6 --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

|

| 167 |

+

```

|

| 168 |

+

|

| 169 |

+

> 💡Note: If you are using the `T2V-1.3B` model, we recommend setting the parameter `--sample_guide_scale 6`. The `--sample_shift parameter` can be adjusted within the range of 8 to 12 based on the performance.

|

| 170 |

+

|

| 171 |

+

|

| 172 |

+

- Multi-GPU inference using FSDP + xDiT USP

|

| 173 |

+

|

| 174 |

+

We use FSDP and [xDiT](https://github.com/xdit-project/xDiT) USP to accelerate inference.

|

| 175 |

+

|

| 176 |

+

* Ulysess Strategy

|

| 177 |

+

|

| 178 |

+

If you want to use [`Ulysses`](https://arxiv.org/abs/2309.14509) strategy, you should set `--ulysses_size $GPU_NUMS`. Note that the `num_heads` should be divisible by `ulysses_size` if you wish to use `Ulysess` strategy. For the 1.3B model, the `num_heads` is `12` which can't be divided by 8 (as most multi-GPU machines have 8 GPUs). Therefore, it is recommended to use `Ring Strategy` instead.

|

| 179 |

+

|

| 180 |

+

* Ring Strategy

|

| 181 |

+

|

| 182 |

+

If you want to use [`Ring`](https://arxiv.org/pdf/2310.01889) strategy, you should set `--ring_size $GPU_NUMS`. Note that the `sequence length` should be divisible by `ring_size` when using the `Ring` strategy.

|

| 183 |

+

|

| 184 |

+

Of course, you can also combine the use of `Ulysses` and `Ring` strategies.

|

| 185 |

+

|

| 186 |

+

|

| 187 |

+

``` sh

|

| 188 |

+

pip install "xfuser>=0.4.1"

|

| 189 |

+

torchrun --nproc_per_node=8 generate.py --task t2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-T2V-14B --dit_fsdp --t5_fsdp --ulysses_size 8 --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

|

| 190 |

+

```

|

| 191 |

+

|

| 192 |

+

|

| 193 |

+

##### (2) Using Prompt Extension

|

| 194 |

+

|

| 195 |

+

Extending the prompts can effectively enrich the details in the generated videos, further enhancing the video quality. Therefore, we recommend enabling prompt extension. We provide the following two methods for prompt extension:

|

| 196 |

+

|

| 197 |

+

- Use the Dashscope API for extension.

|

| 198 |

+

- Apply for a `dashscope.api_key` in advance ([EN](https://www.alibabacloud.com/help/en/model-studio/getting-started/first-api-call-to-qwen) | [CN](https://help.aliyun.com/zh/model-studio/getting-started/first-api-call-to-qwen)).

|

| 199 |

+

- Configure the environment variable `DASH_API_KEY` to specify the Dashscope API key. For users of Alibaba Cloud's international site, you also need to set the environment variable `DASH_API_URL` to 'https://dashscope-intl.aliyuncs.com/api/v1'. For more detailed instructions, please refer to the [dashscope document](https://www.alibabacloud.com/help/en/model-studio/developer-reference/use-qwen-by-calling-api?spm=a2c63.p38356.0.i1).

|

| 200 |

+

- Use the `qwen-plus` model for text-to-video tasks and `qwen-vl-max` for image-to-video tasks.

|

| 201 |

+

- You can modify the model used for extension with the parameter `--prompt_extend_model`. For example:

|

| 202 |

+

```sh

|

| 203 |

+

DASH_API_KEY=your_key python generate.py --task t2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-T2V-14B --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage" --use_prompt_extend --prompt_extend_method 'dashscope' --prompt_extend_target_lang 'zh'

|

| 204 |

+

```

|

| 205 |

+

|

| 206 |

+

- Using a local model for extension.

|

| 207 |

+

|

| 208 |

+

- By default, the Qwen model on HuggingFace is used for this extension. Users can choose Qwen models or other models based on the available GPU memory size.

|

| 209 |

+

- For text-to-video tasks, you can use models like `Qwen/Qwen2.5-14B-Instruct`, `Qwen/Qwen2.5-7B-Instruct` and `Qwen/Qwen2.5-3B-Instruct`.

|

| 210 |

+

- For image-to-video or first-last-frame-to-video tasks, you can use models like `Qwen/Qwen2.5-VL-7B-Instruct` and `Qwen/Qwen2.5-VL-3B-Instruct`.

|

| 211 |

+

- Larger models generally provide better extension results but require more GPU memory.

|

| 212 |

+

- You can modify the model used for extension with the parameter `--prompt_extend_model` , allowing you to specify either a local model path or a Hugging Face model. For example:

|

| 213 |

+

|

| 214 |

+

``` sh

|

| 215 |

+

python generate.py --task t2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-T2V-14B --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage" --use_prompt_extend --prompt_extend_method 'local_qwen' --prompt_extend_target_lang 'zh'

|

| 216 |

+

```

|

| 217 |

+

|

| 218 |

+

|

| 219 |

+

##### (3) Running with Diffusers

|

| 220 |

+

|

| 221 |

+

You can easily inference **Wan2.1**-T2V using Diffusers with the following command:

|

| 222 |

+

``` python

|

| 223 |

+

import torch

|

| 224 |

+

from diffusers.utils import export_to_video

|

| 225 |

+

from diffusers import AutoencoderKLWan, WanPipeline

|

| 226 |

+

from diffusers.schedulers.scheduling_unipc_multistep import UniPCMultistepScheduler

|

| 227 |

+

|

| 228 |

+

# Available models: Wan-AI/Wan2.1-T2V-14B-Diffusers, Wan-AI/Wan2.1-T2V-1.3B-Diffusers

|

| 229 |

+

model_id = "Wan-AI/Wan2.1-T2V-14B-Diffusers"

|

| 230 |

+

vae = AutoencoderKLWan.from_pretrained(model_id, subfolder="vae", torch_dtype=torch.float32)

|

| 231 |

+

flow_shift = 5.0 # 5.0 for 720P, 3.0 for 480P

|

| 232 |

+

scheduler = UniPCMultistepScheduler(prediction_type='flow_prediction', use_flow_sigmas=True, num_train_timesteps=1000, flow_shift=flow_shift)

|

| 233 |

+

pipe = WanPipeline.from_pretrained(model_id, vae=vae, torch_dtype=torch.bfloat16)

|

| 234 |

+

pipe.scheduler = scheduler

|

| 235 |

+

pipe.to("cuda")

|

| 236 |

+

|

| 237 |

+

prompt = "A cat and a dog baking a cake together in a kitchen. The cat is carefully measuring flour, while the dog is stirring the batter with a wooden spoon. The kitchen is cozy, with sunlight streaming through the window."

|

| 238 |

+

negative_prompt = "Bright tones, overexposed, static, blurred details, subtitles, style, works, paintings, images, static, overall gray, worst quality, low quality, JPEG compression residue, ugly, incomplete, extra fingers, poorly drawn hands, poorly drawn faces, deformed, disfigured, misshapen limbs, fused fingers, still picture, messy background, three legs, many people in the background, walking backwards"

|

| 239 |

+

|

| 240 |

+

output = pipe(

|

| 241 |

+

prompt=prompt,

|

| 242 |

+

negative_prompt=negative_prompt,

|

| 243 |

+

height=720,

|

| 244 |

+

width=1280,

|

| 245 |

+

num_frames=81,

|

| 246 |

+

guidance_scale=5.0,

|

| 247 |

+

).frames[0]

|

| 248 |

+

export_to_video(output, "output.mp4", fps=16)

|

| 249 |

+

```

|

| 250 |

+

> 💡Note: Please note that this example does not integrate Prompt Extension and distributed inference. We will soon update with the integrated prompt extension and multi-GPU version of Diffusers.

|

| 251 |

+

|

| 252 |

+

|

| 253 |

+

##### (4) Running local gradio

|

| 254 |

+

|

| 255 |

+

``` sh

|

| 256 |

+

cd gradio

|

| 257 |

+

# if one uses dashscope’s API for prompt extension

|

| 258 |

+

DASH_API_KEY=your_key python t2v_14B_singleGPU.py --prompt_extend_method 'dashscope' --ckpt_dir ./Wan2.1-T2V-14B

|

| 259 |

+

|

| 260 |

+

# if one uses a local model for prompt extension

|

| 261 |

+

python t2v_14B_singleGPU.py --prompt_extend_method 'local_qwen' --ckpt_dir ./Wan2.1-T2V-14B

|

| 262 |

+

```

|

| 263 |

+

|

| 264 |

+

|

| 265 |

+

|

| 266 |

+

#### Run Image-to-Video Generation

|

| 267 |

+

|

| 268 |

+

Similar to Text-to-Video, Image-to-Video is also divided into processes with and without the prompt extension step. The specific parameters and their corresponding settings are as follows:

|

| 269 |

+

<table>

|

| 270 |

+

<thead>

|

| 271 |

+

<tr>

|

| 272 |

+

<th rowspan="2">Task</th>

|

| 273 |

+

<th colspan="2">Resolution</th>

|

| 274 |

+

<th rowspan="2">Model</th>

|

| 275 |

+

</tr>

|

| 276 |

+

<tr>

|

| 277 |

+

<th>480P</th>

|

| 278 |

+

<th>720P</th>

|

| 279 |

+

</tr>

|

| 280 |

+

</thead>

|

| 281 |

+

<tbody>

|

| 282 |

+

<tr>

|

| 283 |

+

<td>i2v-14B</td>

|

| 284 |

+

<td style="color: green;">❌</td>

|

| 285 |

+

<td style="color: green;">✔️</td>

|

| 286 |

+

<td>Wan2.1-I2V-14B-720P</td>

|

| 287 |

+

</tr>

|

| 288 |

+

<tr>

|

| 289 |

+

<td>i2v-14B</td>

|

| 290 |

+

<td style="color: green;">✔️</td>

|

| 291 |

+

<td style="color: red;">❌</td>

|

| 292 |

+

<td>Wan2.1-T2V-14B-480P</td>

|

| 293 |

+

</tr>

|

| 294 |

+

</tbody>

|

| 295 |

+

</table>

|

| 296 |

+

|

| 297 |

+

|

| 298 |

+

##### (1) Without Prompt Extension

|

| 299 |

+

|

| 300 |

+

- Single-GPU inference

|

| 301 |

+

```sh

|

| 302 |

+

python generate.py --task i2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-I2V-14B-720P --image examples/i2v_input.JPG --prompt "Summer beach vacation style, a white cat wearing sunglasses sits on a surfboard. The fluffy-furred feline gazes directly at the camera with a relaxed expression. Blurred beach scenery forms the background featuring crystal-clear waters, distant green hills, and a blue sky dotted with white clouds. The cat assumes a naturally relaxed posture, as if savoring the sea breeze and warm sunlight. A close-up shot highlights the feline's intricate details and the refreshing atmosphere of the seaside."

|

| 303 |

+

```

|

| 304 |

+

|

| 305 |

+

> 💡For the Image-to-Video task, the `size` parameter represents the area of the generated video, with the aspect ratio following that of the original input image.

|

| 306 |

+

|

| 307 |

+

|

| 308 |

+

- Multi-GPU inference using FSDP + xDiT USP

|

| 309 |

+

|

| 310 |

+

```sh

|

| 311 |

+

pip install "xfuser>=0.4.1"

|

| 312 |

+

torchrun --nproc_per_node=8 generate.py --task i2v-14B --size 1280*720 --ckpt_dir ./Wan2.1-I2V-14B-720P --image examples/i2v_input.JPG --dit_fsdp --t5_fsdp --ulysses_size 8 --prompt "Summer beach vacation style, a white cat wearing sunglasses sits on a surfboard. The fluffy-furred feline gazes directly at the camera with a relaxed expression. Blurred beach scenery forms the background featuring crystal-clear waters, distant green hills, and a blue sky dotted with white clouds. The cat assumes a naturally relaxed posture, as if savoring the sea breeze and warm sunlight. A close-up shot highlights the feline's intricate details and the refreshing atmosphere of the seaside."

|

| 313 |

+

```

|

| 314 |

+

|

| 315 |

+

##### (2) Using Prompt Extension

|

| 316 |

+

|

| 317 |

+

|

| 318 |

+

The process of prompt extension can be referenced [here](#2-using-prompt-extention).

|

| 319 |

+

|

| 320 |

+

Run with local prompt extension using `Qwen/Qwen2.5-VL-7B-Instruct`:

|

| 321 |

+

```

|

| 322 |

+