Spaces:

Running

Running

Commit

·

7d7f295

1

Parent(s):

f825473

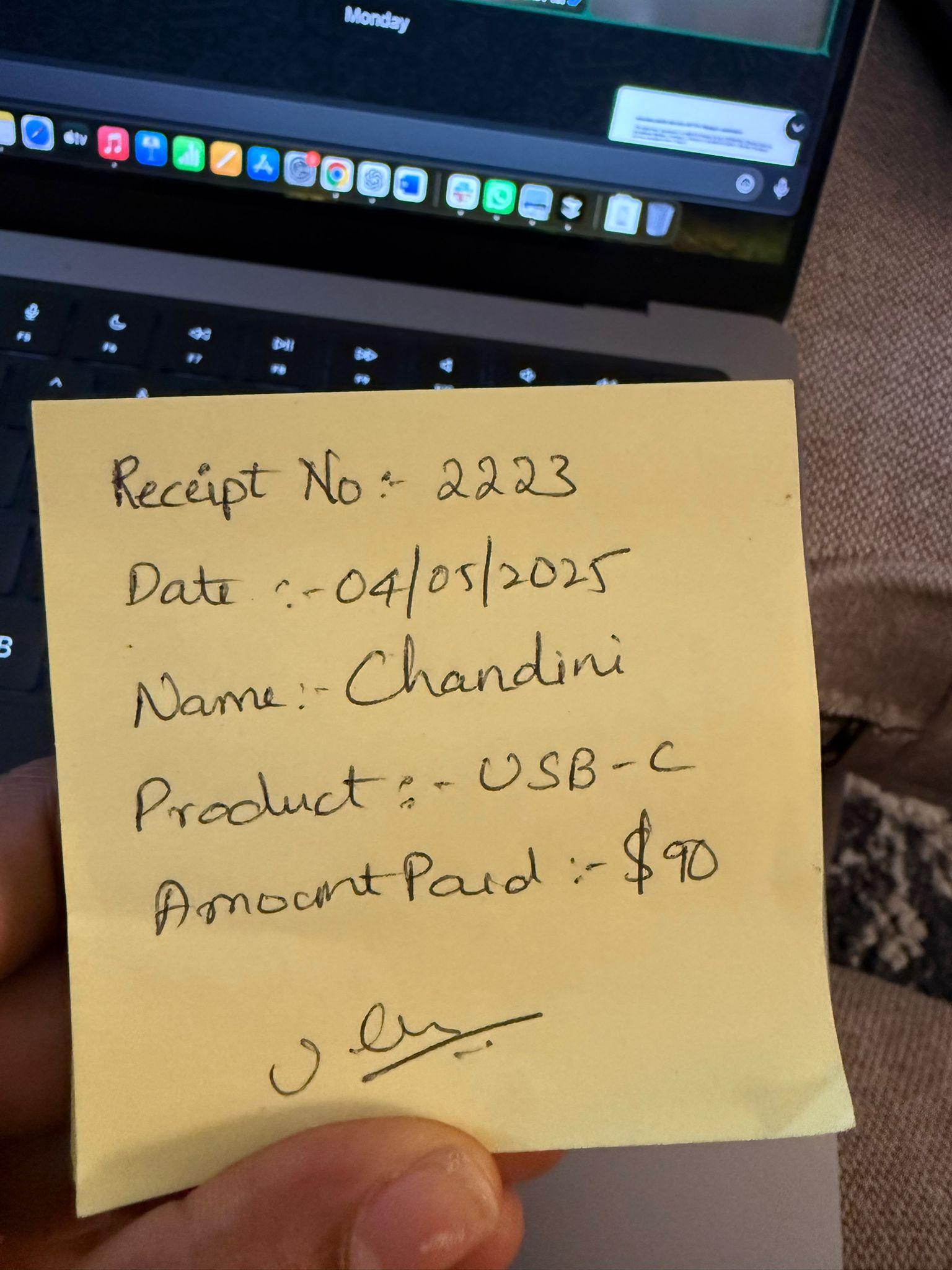

Added handwritten

Browse files- app.py +50 -49

- requirements.txt +2 -0

- temp_uploaded_image_paddle.jpg +3 -0

app.py

CHANGED

@@ -25,7 +25,7 @@ import matplotlib

| Old | New | +/- | Code |
|---|---|---|---|
| 25 | 25 | | `import boto3` |
| 26 | 26 | | `from decimal import Decimal` |
| 27 | 27 | | `import uuid` |
| 28 | | - | |
| | 28 | + | `from paddleocr import PaddleOCR` |
| 29 | 29 | | |
| 30 | 30 | | `# Configure logging` |
| 31 | 31 | | `logging.basicConfig(level=logging.INFO)` |

@@ -193,6 +193,27 @@ def merge_extractions(regex_fields, llm_fields):

| Old | New | +/- | Code |
|---|---|---|---|
| 193 | 193 | | `merged["products"] = llm_fields.get("products") or regex_fields.get("products")` |
| 194 | 194 | | `return merged` |
| 195 | 195 | | |
| | 196 | + | `def extract_handwritten_text(image):` |
| | 197 | + | `processor = TrOCRProcessor.from_pretrained('microsoft/trocr-base-handwritten')` |
| | 198 | + | `model = VisionEncoderDecoderModel.from_pretrained('microsoft/trocr-base-handwritten')` |
| | 199 | + | `pixel_values = processor(images=image, return_tensors="pt").pixel_values` |
| | 200 | + | `generated_ids = model.generate(pixel_values)` |
| | 201 | + | `generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)[0]` |
| | 202 | + | `return generated_text` |
| | 203 | + | |
| | 204 | + | `@st.cache_resource` |
| | 205 | + | `def get_paddle_ocr():` |
| | 206 | + | `return PaddleOCR(use_angle_cls=True, lang='en', show_log=False)` |
| | 207 | + | |
| | 208 | + | `def extract_handwritten_text_paddle(image):` |
| | 209 | + | `ocr = get_paddle_ocr()` |
| | 210 | + | `# Save PIL image to a temporary file` |
| | 211 | + | `temp_path = 'temp_uploaded_image_paddle.jpg'` |
| | 212 | + | `image.save(temp_path)` |
| | 213 | + | `result = ocr.ocr(temp_path, cls=True)` |
| | 214 | + | `lines = [line[1][0] for line in result[0]]` |
| | 215 | + | `return '\n'.join(lines)` |
| | 216 | + | |
| 196 | 217 | | `def main():` |
| 197 | 218 | | `st.set_page_config(` |
| 198 | 219 | | `page_title="FormIQ - Intelligent Document Parser",` |

@@ -246,49 +267,43 @@ def main():

| Old | New | +/- | Code |
|---|---|---|---|
| 246 | 267 | | `)` |
| 247 | 268 | | |
| 248 | 269 | | `if uploaded_file is not None:` |
| 249 | | - | |
| 250 | | - | `if uploaded_file.type == "application/pdf":` |
| 251 | | - | `images = convert_from_bytes(uploaded_file.read())` |
| 252 | | - | `image = images[0] # Use the first page` |
| 253 | | - | `else:` |
| 254 | | - | `image = Image.open(uploaded_file)` |
| | 270 | + | `image = Image.open(uploaded_file).convert("RGB")` |
| 255 | 271 | | `st.image(image, caption="Uploaded Document", width=600)` |
| 256 | 272 | | |
| | 273 | + | `handwritten_text = None` |
| | 274 | + | `# Option to extract handwritten text with PaddleOCR` |
| | 275 | + | `if st.checkbox("Extract handwritten text (PaddleOCR)?"):` |
| | 276 | + | `with st.spinner("Extracting handwritten text with PaddleOCR..."):` |
| | 277 | + | `handwritten_text = extract_handwritten_text_paddle(image)` |
| | 278 | + | `st.subheader("Handwritten Text Extracted (PaddleOCR)")` |
| | 279 | + | `st.write(handwritten_text)` |
| | 280 | + | |
| 257 | 281 | | `# Process button` |
| 258 | 282 | | `if st.button("Process Document"):` |
| 259 | 283 | | `with st.spinner("Processing document..."):` |
| 260 | 284 | | `try:` |
| 261 | | - | `# Save the uploaded file to a temporary location` |
| 262 | 285 | | `temp_path = "temp_uploaded_image.jpg"` |
| 263 | 286 | | `image.save(temp_path)` |
| 264 | 287 | | |
| 265 | | - | `#` |
| 266-283 | | - | |
| 284 | | - | `st.error(f"Failed to parse LLM output as JSON: {e}")` |
| 285 | | - | `else:` |
| 286 | | - | `st.warning("No valid JSON found in LLM output.")` |
| 287 | | - | |
| 288 | | - | `# Display extracted products if present` |
| 289 | | - | `if "products" in llm_data and llm_data["products"]:` |
| 290 | | - | `st.subheader("Products (LLM Extracted)")` |
| 291 | | - | `st.dataframe(pd.DataFrame(llm_data["products"]))` |
| | 288 | + | `# Use handwritten text if available, else fallback to pytesseract` |
| | 289 | + | `if handwritten_text:` |
| | 290 | + | `llm_input_text = handwritten_text` |
| | 291 | + | `else:` |
| | 292 | + | `llm_input_text = pytesseract.image_to_string(Image.open(temp_path))` |
| | 293 | + | |
| | 294 | + | `llm_result = extract_with_perplexity_llm(llm_input_text)` |
| | 295 | + | `llm_json = extract_json_from_llm_output(llm_result)` |
| | 296 | + | `st.subheader("Structured Data (Perplexity LLM)")` |
| | 297 | + | `if llm_json:` |
| | 298 | + | `try:` |
| | 299 | + | `llm_data = json.loads(llm_json)` |
| | 300 | + | `st.json(llm_data)` |
| | 301 | + | `save_to_dynamodb(llm_data)` |
| | 302 | + | `st.success("Saved to DynamoDB!")` |
| | 303 | + | `except Exception as e:` |
| | 304 | + | `st.error(f"Failed to parse LLM output as JSON: {e}")` |
| | 305 | + | `else:` |
| | 306 | + | `st.warning("No valid JSON found in LLM output.")` |
| 292 | 307 | | |
| 293 | 308 | | `except Exception as e:` |
| 294 | 309 | | `logger.error(f"Error processing document: {str(e)}")` |

@@ -351,19 +366,5 @@ def main():

| Old | New | +/- | Code |
|---|---|---|---|
| 351 | 366 | | `else:` |
| 352 | 367 | | `st.info("Confusion matrix not found.")` |
| 353 | 368 | | |
| 354 | | - | `# Load model and processor` |
| 355 | | - | `processor = TrOCRProcessor.from_pretrained('microsoft/trocr-base-handwritten')` |
| 356 | | - | `model = VisionEncoderDecoderModel.from_pretrained('microsoft/trocr-base-handwritten')` |
| 357 | | - | |
| 358 | | - | `# Load your image (crop to handwritten region if possible)` |
| 359 | | - | `image = Image.open('handwritten_sample.jpg').convert("RGB")` |
| 360 | | - | |
| 361 | | - | `# Preprocess and predict` |
| 362 | | - | `pixel_values = processor(images=image, return_tensors="pt").pixel_values` |
| 363 | | - | `generated_ids = model.generate(pixel_values)` |
| 364 | | - | `generated_text = processor.batch_decode(generated_ids, skip_special_tokens=True)[0]` |
| 365 | | - | |
| 366 | | - | `print("Handwritten text:", generated_text)` |
| 367 | | - | |
| 368 | 369 | | `if __name__ == "__main__":` |
| 369 | 370 | | `main()` |
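A note on `extract_handwritten_text_paddle`: it indexes `result[0]` directly, and some PaddleOCR releases return `None` in that slot when no text is detected on the image, in which case the list comprehension would raise a `TypeError`. The following is a minimal sketch of a more defensive parser, not the author's code. The function name `ocr_result_to_text` and the `min_confidence` parameter are hypothetical, and the result shape is assumed from the indexing used in the diff (`line[1][0]` is the text, `line[1][1]` the confidence).

```python
from typing import Any, List, Optional, Sequence


def ocr_result_to_text(
    result: Optional[Sequence[Any]],
    min_confidence: float = 0.0,  # hypothetical knob, not part of the commit
) -> str:
    """Join recognized lines from a PaddleOCR-style result into one string.

    Assumed shape: result[0] is a list of [box, (text, confidence)] entries
    for the first image, or None when nothing was detected.
    """
    # Covers result being None, an empty list, or [None].
    if not result or result[0] is None:
        return ""

    lines: List[str] = []
    for entry in result[0]:
        text, confidence = entry[1][0], entry[1][1]
        if confidence >= min_confidence:
            lines.append(text)
    return "\n".join(lines)
```

With a helper like this, the last two lines of `extract_handwritten_text_paddle` could become `return ocr_result_to_text(result)`. An empty string is falsy, so the existing `if handwritten_text:` check in `main()` would still fall back to pytesseract.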

requirements.txt

CHANGED

@@ -34,3 +34,5 @@ plotly==5.18.0

| Old | New | +/- | Code |
|---|---|---|---|
| 34 | 34 | | `matplotlib` |
| 35 | 35 | | `scikit-learn` |
| 36 | 36 | | `pdf2image` |
| | 37 | + | `paddleocr` |
| | 38 | + | `paddlepaddle` |

temp_uploaded_image_paddle.jpg

ADDED

Stored with Git LFS.
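The added `temp_uploaded_image_paddle.jpg` has the same name as the `temp_path` written by `extract_handwritten_text_paddle`, so it appears to be a runtime artifact that was committed along with the code. A fixed filename in the working directory can also be overwritten when two sessions run at once. One way to avoid both is a unique temporary file that is removed after use. The sketch below uses only the standard library. The helper name `temporary_image_path` is hypothetical and not part of the commit.

```python
import os
import tempfile
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def temporary_image_path(suffix: str = ".jpg") -> Iterator[str]:
    """Yield a unique temporary file path and delete the file afterwards."""
    fd, path = tempfile.mkstemp(suffix=suffix)
    os.close(fd)  # only the path is needed; the caller writes the file
    try:
        yield path
    finally:
        # Clean up even if the caller raised while using the file.
        if os.path.exists(path):
            os.remove(path)


# Intended use inside extract_handwritten_text_paddle (shown as comments
# because PIL and PaddleOCR are not imported in this sketch):
#
#     with temporary_image_path() as temp_path:
#         image.save(temp_path)
#         result = ocr.ocr(temp_path, cls=True)
```

Because the file lives in the system temp directory and is deleted on exit, nothing is left in the repository checkout to be committed by accident.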