Spaces:

Sleeping

Sleeping

Commit

·

d934fdc

1

Parent(s):

313787c

manual commit

Browse files- .gitattributes +2 -0

- README.md +1 -1

- app.py +57 -0

- data/data_sample_wikipedia.csv +3 -0

- data/topics_info.csv +3 -0

- images/logo.png +0 -0

- images/map.png +0 -0

- images/pipeline.png +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

data/data_sample_wikipedia.csv filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

data/topics_info.csv filter=lfs diff=lfs merge=lfs -text

|

README.md

CHANGED

|

@@ -9,4 +9,4 @@ app_file: app.py

|

|

| 9 |

pinned: false

|

| 10 |

---

|

| 11 |

|

| 12 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 9 |

pinned: false

|

| 10 |

---

|

| 11 |

|

| 12 |

+

Check out the configuration reference at <https://huggingface.co/docs/hub/spaces-config-reference>

|

app.py

ADDED

|

@@ -0,0 +1,57 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

import pandas as pd

|

| 3 |

+

|

| 4 |

+

st.sidebar.image("images/logo.png", use_column_width=True)

|

| 5 |

+

st.sidebar.write("Bunka Summarizes & Visualizes Information as Maps using LLMs.")

|

| 6 |

+

st.sidebar.title("Github Page")

|

| 7 |

+

st.sidebar.write(

|

| 8 |

+

"Have a look at the following package on GitHub: https://github.com/charlesdedampierre/BunkaTopics"

|

| 9 |

+

)

|

| 10 |

+

st.sidebar.title("Dataset")

|

| 11 |

+

st.sidebar.write(

|

| 12 |

+

"We used a subset of Wikipedia dataset: https://huggingface.co/datasets/wikimedia/wikipedia"

|

| 13 |

+

)

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

st.title("How to understand large textual datasets?")

|

| 17 |

+

st.info(

|

| 18 |

+

"We randomly sampled 40,000 articles from the English subset 20231101.en of the Wikipedia dataset. We then took the first 500 words of each articles in order to generate an abstract that will be used for topic modeling."

|

| 19 |

+

)

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

df = pd.read_csv("data/data_sample_wikipedia.csv", index_col=[0])

|

| 23 |

+

df = df[["text", "url"]]

|

| 24 |

+

st.dataframe(df, use_container_width=True)

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

st.title("Inside the Wikipedia dataset")

|

| 28 |

+

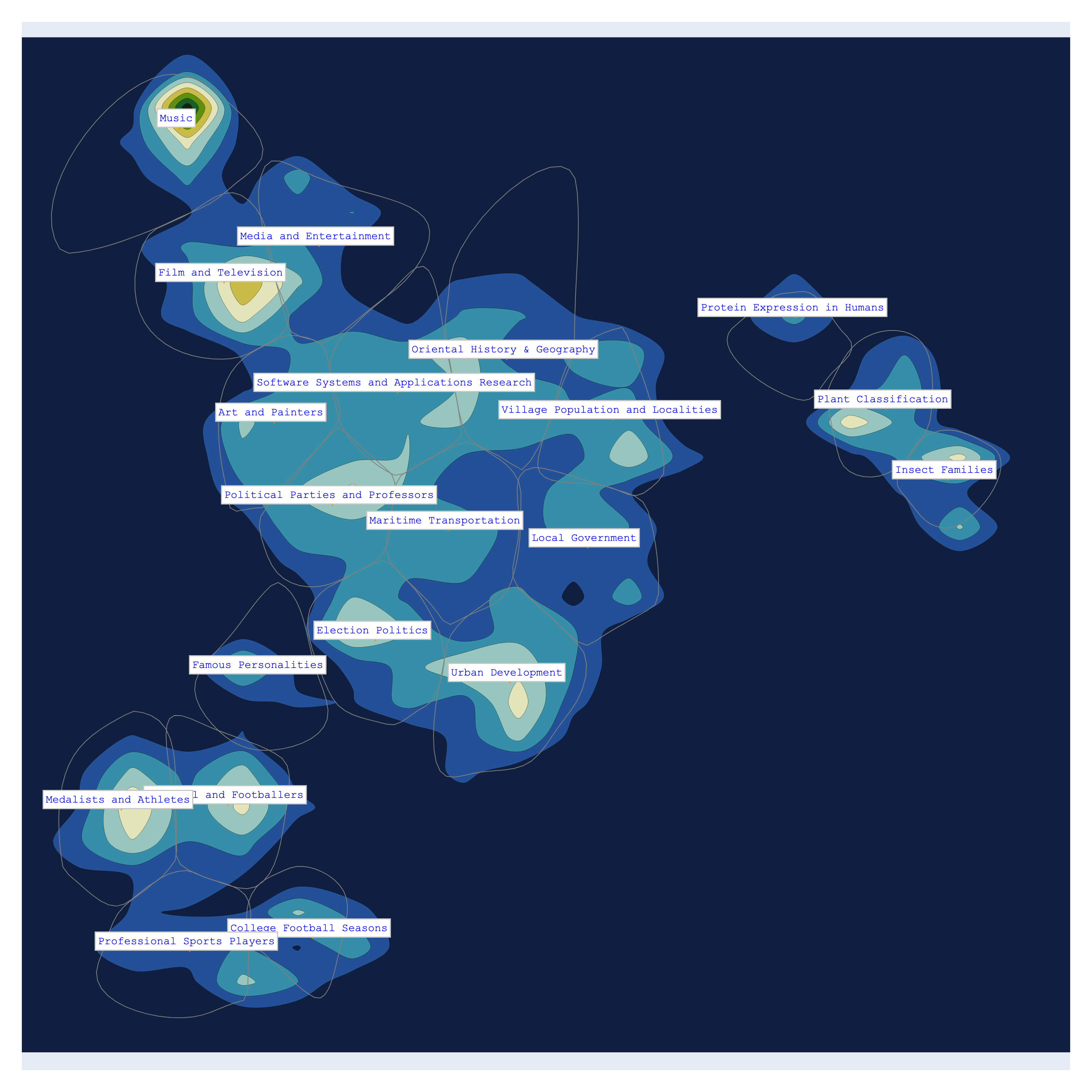

st.image(

|

| 29 |

+

"images/map.png",

|

| 30 |

+

use_column_width=True,

|

| 31 |

+

caption="This mapping can be done for each subset of the Wikipedia dataset, and the articles can be selected on a topic basis through the python package, allowing to filter and curate the data.",

|

| 32 |

+

)

|

| 33 |

+

|

| 34 |

+

st.markdown(

|

| 35 |

+

'<div align="center"><a href="https://charlesdedampierre.github.io/wikipedia-bunka-map"><h2 style="color: #0066ff;">Full Interactive Map</h2></a></div>',

|

| 36 |

+

unsafe_allow_html=True,

|

| 37 |

+

)

|

| 38 |

+

|

| 39 |

+

st.info(

|

| 40 |

+

"This interactive map explores each datapoint to get a more precise overview of the contents (it takes 10 seconds to load)"

|

| 41 |

+

)

|

| 42 |

+

|

| 43 |

+

st.title("Some insights by territory")

|

| 44 |

+

|

| 45 |

+

df_info = pd.read_csv("data/topics_info.csv", index_col=[0])

|

| 46 |

+

df_info = df_info[["name", "size", "percent"]]

|

| 47 |

+

df_info["percent"] = round(df_info["percent"] * 100, 3)

|

| 48 |

+

df_info["percent"] = df_info["percent"].apply(lambda x: str(int(x)) + "%")

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

st.dataframe(df_info, use_container_width=True)

|

| 52 |

+

|

| 53 |

+

st.title("Bunka Exploration Engine")

|

| 54 |

+

st.image(

|

| 55 |

+

"images/pipeline.png",

|

| 56 |

+

use_column_width=True,

|

| 57 |

+

)

|

data/data_sample_wikipedia.csv

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a176b6210f012dda75179d92af0a3471558db0f9a1569d7198338cc6915d6aaf

|

| 3 |

+

size 148888677

|

data/topics_info.csv

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:7ac108f2e0ec494838a5fbb5b57cb36ded798f5bdd17fb9f64996bd5fdae230a

|

| 3 |

+

size 36270189

|

images/logo.png

ADDED

|

images/map.png

ADDED

|

images/pipeline.png

ADDED

|