Spaces:

Running

ryan

committed on

Commit

·

c68e323

1

Parent(s):

a064f48

Initial commit

- .gitattributes +7 -0

- README.md +38 -5

- app.py +381 -0

- images/dark_mode_logo.png +3 -0

- images/examples/tryoff/outputs/1.webp +3 -0

- images/examples/tryoff/outputs/2.webp +3 -0

- images/examples/tryoff/outputs/3.webp +3 -0

- images/examples/tryoff/persons/1.jpg +3 -0

- images/examples/tryoff/persons/2.jpg +3 -0

- images/examples/tryoff/persons/3.jpg +3 -0

- images/examples/tryon/garments/1.jpg +3 -0

- images/examples/tryon/garments/2.jpg +3 -0

- images/examples/tryon/garments/3.jpg +3 -0

- images/examples/tryon/outputs/1.webp +3 -0

- images/examples/tryon/outputs/2.webp +3 -0

- images/examples/tryon/outputs/3.webp +3 -0

- images/examples/tryon/persons/1.jpg +3 -0

- images/examples/tryon/persons/2.jpg +3 -0

- images/examples/tryon/persons/3.jpg +3 -0

- images/garments/01.jpg +3 -0

- images/garments/02.jpg +3 -0

- images/garments/03.jpg +3 -0

- images/garments/04.jpg +3 -0

- images/garments/full_1.jpg +3 -0

- images/garments/full_3.jpg +3 -0

- images/garments/lower_1.jpg +3 -0

- images/garments/lower_2.png +3 -0

- images/garments/upper_1.png +3 -0

- images/garments/upper_2.jpg +3 -0

- images/garments/upper_3.jpg +3 -0

- images/garments/upper_5.jpg +3 -0

- images/nxn_logo_transparent.png +3 -0

- images/persons/01.jpg +3 -0

- images/persons/02.jpg +3 -0

- images/persons/03.jpg +3 -0

- images/persons/04.webp +3 -0

- images/persons/05.jpg +3 -0

- images/persons/06.jpg +3 -0

- images/persons/07.webp +3 -0

- images/persons/08.jpg +3 -0

- images/persons/09.jpg +3 -0

- images/persons/10.jpg +3 -0

- images/persons/11.jpg +3 -0

- images/persons/12.jpg +3 -0

- requirements.txt +4 -0

- styles.css +180 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,10 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

*.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

*.jpg filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

*.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

*.webp filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

*.gif filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

*.bmp filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

*.tiff filter=lfs diff=lfs merge=lfs -text

|
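The seven patterns added above route common image formats through Git LFS. As a rough illustration only, the sketch below checks which paths those patterns would catch. It assumes that `fnmatch` on the file's base name is a close enough stand-in for gitattributes matching of slash-free patterns such as `*.png`; the helper name `is_lfs_tracked` is hypothetical and not part of the commit.

```python
from fnmatch import fnmatch

# Patterns added to .gitattributes in this commit (lines 36-42 of the new file).
LFS_PATTERNS = ["*.png", "*.jpg", "*.jpeg", "*.webp", "*.gif", "*.bmp", "*.tiff"]


def is_lfs_tracked(path: str) -> bool:
    """Approximate check: does the base name match one of the new LFS patterns?

    Hypothetical helper; real gitattributes matching has more rules than fnmatch.
    """
    name = path.rsplit("/", 1)[-1]  # slash-free patterns apply to the base name
    return any(fnmatch(name, pattern) for pattern in LFS_PATTERNS)


if __name__ == "__main__":
    for candidate in ["images/persons/04.webp", "images/garments/upper_1.png", "app.py"]:
        print(candidate, is_lfs_tracked(candidate))
```

This is consistent with the file list in this commit, where every image shows `+3 -0` (the size of an LFS pointer file) while `app.py` and `styles.css` show their real line counts.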

README.md

CHANGED

|

@@ -1,13 +1,46 @@

|

|

| 1 |

---

|

| 2 |

-

title: Voost

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom:

|

| 5 |

-

colorTo:

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.42.0

|

| 8 |

app_file: app.py

|

| 9 |

pinned: false

|

| 10 |

license: cc-by-nc-sa-4.0

|

| 11 |

---

|

| 12 |

|

| 13 |

-

|

| 1 |

---

|

| 2 |

+

title: Voost - Virtual Try-On/Off

|

| 3 |

+

emoji: 👕

|

| 4 |

+

colorFrom: yellow

|

| 5 |

+

colorTo: yellow

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.42.0

|

| 8 |

app_file: app.py

|

| 9 |

pinned: false

|

| 10 |

license: cc-by-nc-sa-4.0

|

| 11 |

+

short_description: Virtual Try-On&Off model from NXN Labs (https://nxn.ai/)

|

| 12 |

---

|

| 13 |

|

| 14 |

+

# Virtual Try-On Demo

|

| 15 |

+

|

| 16 |

+

This demo utilizes the Virtual Try-On and Try-Off models introduced in [Voost](https://arxiv.org/abs/2508.04825).

|

| 17 |

+

|

| 18 |

+

## Overview

|

| 19 |

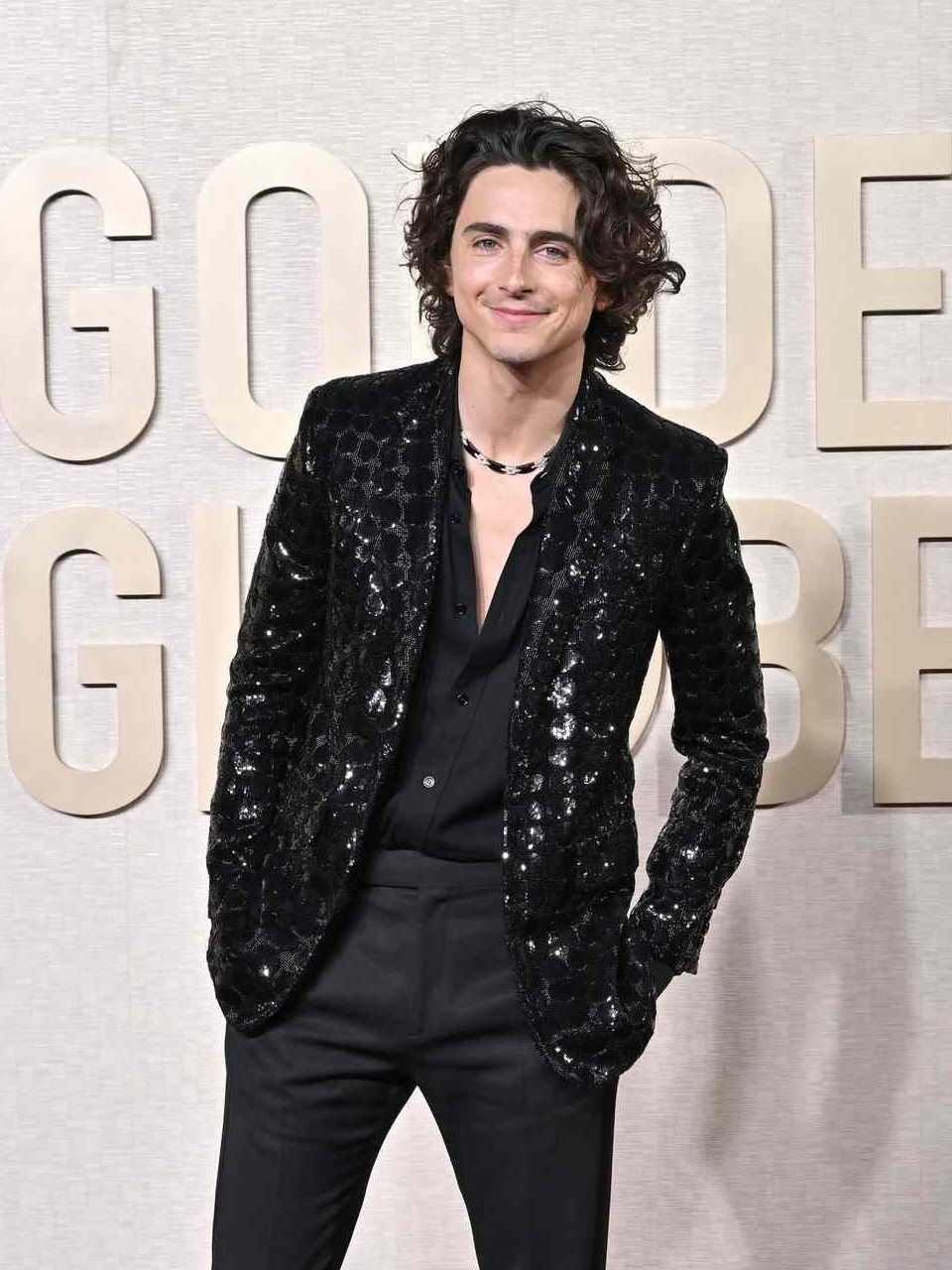

+

|

| 20 |

+

This application provides a live demo, allowing users to virtually try on and try off clothing items using advanced AI models.

|

| 21 |

+

|

| 22 |

+

## Commercial Use

|

| 23 |

+

|

| 24 |

+

If you are planning to use this for commercial purposes or need more advanced commercial-level services, please contact us through our website: [https://nxn.ai/contact](https://nxn.ai/contact)

|

| 25 |

+

|

| 26 |

+

## Citation

|

| 27 |

+

|

| 28 |

+

If you use this work in your research, please cite:

|

| 29 |

+

```bibtex

|

| 30 |

+

@article{lee2025voost,

|

| 31 |

+

author = {Seungyong Lee and Jeong-gi Kwak},

|

| 32 |

+

title = {Voost: A Unified and Scalable Diffusion Transformer for Bidirectional Virtual Try-On and Try-Off},

|

| 33 |

+

journal = {arXiv preprint arXiv:2508.04825},

|

| 34 |

+

year = {2025}

|

| 35 |

+

}

|

| 36 |

+

```

|

| 37 |

+

|

| 38 |

+

## Contact

|

| 39 |

+

|

| 40 |

+

For commercial inquiries and advanced services, visit: [https://nxn.ai](https://nxn.ai)

|

| 41 |

+

|

| 42 |

+

---

|

| 43 |

+

Most models and clothing images used are from the internet and public datasets (VITON, DressCode).

|

| 44 |

+

All images and brands are the property of their respective owners.

|

| 45 |

+

|

| 46 |

+

This demo is licensed under a [Creative Commons Attribution-ShareAlike 4.0 International License](http://creativecommons.org/licenses/by-sa/4.0/).

|

app.py

ADDED

|

@@ -0,0 +1,381 @@

|

| 1 |

+

from io import BytesIO

|

| 2 |

+

import gradio as gr

|

| 3 |

+

from PIL import Image

|

| 4 |

+

import httpx

|

| 5 |

+

from gradio_toggle import Toggle

|

| 6 |

+

from pathlib import Path

|

| 7 |

+

import numpy as np

|

| 8 |

+

import os

|

| 9 |

+

|

| 10 |

+

api_server = os.environ["NXN_API_SERVER"]

|

| 11 |

+

tryon_endpoint = os.environ["NXN_TRYON_ENDPOINT"]

|

| 12 |

+

tryoff_endpoint = os.environ["NXN_TRYOFF_ENDPOINT"]

|

| 13 |

+

|

| 14 |

+

MAX_DIM = 2048

|

| 15 |

+

MIN_DIM = 500

|

| 16 |

+

|

| 17 |

+

def encode_bytes(image: Image.Image, format="PNG"):

|

| 18 |

+

buffered = BytesIO()

|

| 19 |

+

image.save(buffered, format=format)

|

| 20 |

+

buffered.seek(0)

|

| 21 |

+

return buffered

|

| 22 |

+

|

| 23 |

+

# str to int

|

| 24 |

+

def garment_type_to_int(garment_type: str):

|

| 25 |

+

garment_dict = {"Upper": 0, "Lower": 1, "Full": 2}

|

| 26 |

+

if garment_dict.get(garment_type) is None:

|

| 27 |

+

raise gr.Error("Unexpected garment condition error")

|

| 28 |

+

else:

|

| 29 |

+

return garment_dict[garment_type]

|

| 30 |

+

|

| 31 |

+

def extract_image_from_input(image_data):

|

| 32 |

+

if isinstance(image_data, dict) and "background" in image_data:

|

| 33 |

+

return image_data["background"].convert("RGB")

|

| 34 |

+

else:

|

| 35 |

+

return image_data.convert("RGB")

|

| 36 |

+

|

| 37 |

+

def resize_image_if_needed(image: Image.Image):

|

| 38 |

+

if image is None:

|

| 39 |

+

return None, False

|

| 40 |

+

|

| 41 |

+

original_width, original_height = image.size

|

| 42 |

+

|

| 43 |

+

if original_width > MAX_DIM or original_height > MAX_DIM:

|

| 44 |

+

gr.Warning("A provided image is too large and has been resized")

|

| 45 |

+

scale_factor = min(MAX_DIM / original_width, MAX_DIM / original_height)

|

| 46 |

+

new_width = int(original_width * scale_factor)

|

| 47 |

+

new_height = int(original_height * scale_factor)

|

| 48 |

+

return image.resize((new_width, new_height), Image.Resampling.LANCZOS), True

|

| 49 |

+

elif original_width < MIN_DIM or original_height < MIN_DIM:

|

| 50 |

+

gr.Warning("A provided image is too small and has been resized")

|

| 51 |

+

scale_factor = max(MIN_DIM / original_width, MIN_DIM / original_height)

|

| 52 |

+

new_width = int(original_width * scale_factor)

|

| 53 |

+

new_height = int(original_height * scale_factor)

|

| 54 |

+

return image.resize((new_width, new_height), Image.Resampling.LANCZOS), True

|

| 55 |

+

|

| 56 |

+

return image, False

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

# API Helpers

|

| 60 |

+

async def _call_api(url: str, files: dict, data: dict):

|

| 61 |

+

try:

|

| 62 |

+

async with httpx.AsyncClient(timeout=3600) as client:

|

| 63 |

+

response = await client.post(url, data=data, files=files)

|

| 64 |

+

response.raise_for_status()

|

| 65 |

+

return Image.open(BytesIO(response.content))

|

| 66 |

+

except httpx.RequestError as e:

|

| 67 |

+

print(f"API request failed: {e}")

|

| 68 |

+

raise gr.Error("Network error: Could not connect to the model API. Please try again later.")

|

| 69 |

+

except Exception as e:

|

| 70 |

+

print(f"An unexpected error occurred: {e}")

|

| 71 |

+

# No bare re-raise here, so the user-facing gr.Error on the next line is reachable

|

| 72 |

+

raise gr.Error("An unexpected error occurred. The model may have failed to process the images.")

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

async def call_tryon_api(model_image: Image.Image, garment_image: Image.Image, garment_type: int, mask: Image.Image=None, seed: int=1234):

|

| 76 |

+

files = [

|

| 77 |

+

("images", ("target.png", encode_bytes(model_image), "image/png")),

|

| 78 |

+

("images", ("garment.png", encode_bytes(garment_image), "image/png"))

|

| 79 |

+

]

|

| 80 |

+

if mask is not None:

|

| 81 |

+

files.append(("images", ("mask.png", encode_bytes(mask, format="PNG"), "image/png")))

|

| 82 |

+

data = {'garment_type': garment_type, 'seed': seed}

|

| 83 |

+

return await _call_api(f"{api_server}/{tryon_endpoint}", files=files, data=data)

|

| 84 |

+

|

| 85 |

+

async def call_tryoff_api(model_image: Image.Image, garment_type: int, seed: int=1234):

|

| 86 |

+

files = [ ("images", ("target.png", encode_bytes(model_image), "image/png")) ]

|

| 87 |

+

data = {'garment_type': garment_type, 'seed': seed}

|

| 88 |

+

return await _call_api(f"{api_server}/{tryoff_endpoint}", files=files, data=data)

|

| 89 |

+

|

| 90 |

+

async def api_helper(model_image_dict: dict, garment_image: Image.Image, garment_type: str, is_tryoff: bool, seed: int):

|

| 91 |

+

if model_image_dict is None:

|

| 92 |

+

raise gr.Error("Missing model image")

|

| 93 |

+

elif not is_tryoff and garment_image is None:

|

| 94 |

+

raise gr.Error("Missing garment image for Try-On")

|

| 95 |

+

|

| 96 |

+

# Because Gradio ImageEditor can return a dict

|

| 97 |

+

model_image = extract_image_from_input(model_image_dict)

|

| 98 |

+

model_image, model_resized = resize_image_if_needed(model_image)

|

| 99 |

+

|

| 100 |

+

garment_image, _ = resize_image_if_needed(garment_image)

|

| 101 |

+

|

| 102 |

+

garment_type_int = garment_type_to_int(garment_type)

|

| 103 |

+

|

| 104 |

+

if is_tryoff:

|

| 105 |

+

return await call_tryoff_api(model_image, garment_type_int, seed)

|

| 106 |

+

else:

|

| 107 |

+

mask_image = None

|

| 108 |

+

if isinstance(model_image_dict, dict) and model_image_dict.get("layers"):

|

| 109 |

+

mask = model_image_dict["layers"][0]

|

| 110 |

+

mask_array = np.array(mask)

|

| 111 |

+

if not np.all(mask_array < 10):

|

| 112 |

+

is_black = np.all(mask_array < 10, axis=2)

|

| 113 |

+

mask_image = Image.fromarray(((~is_black) * 255).astype(np.uint8))

|

| 114 |

+

if model_resized:

|

| 115 |

+

mask_image = mask_image.resize(model_image.size, Image.Resampling.NEAREST)

|

| 116 |

+

else:

|

| 117 |

+

gr.Info("No mask provided, using auto-generated mask")

|

| 118 |

+

|

| 119 |

+

return await call_tryon_api(model_image, garment_image, garment_type_int, mask=mask_image, seed=seed)

|

| 120 |

+

|

| 121 |

+

# Event handler functions

|

| 122 |

+

def handle_toggle(toggle_value):

|

| 123 |

+

"""Handle toggle state changes - controls garment input visibility"""

|

| 124 |

+

toggle_label = gr.update(value=toggle_value, label="Try-Off") if toggle_value else gr.update(value=toggle_value, label="Try-On")

|

| 125 |

+

submit_btn_label = gr.update(value="Run Try-Off", elem_id="tryoff-color") if toggle_value else gr.update(value="Run Try-On", elem_id="tryon-color")

|

| 126 |

+

|

| 127 |

+

if toggle_value:

|

| 128 |

+

# Clear the image and disable the component

|

| 129 |

+

return gr.update(value=None, elem_classes=["disabled-image"], interactive=False), toggle_label, submit_btn_label

|

| 130 |

+

else:

|

| 131 |

+

# Re-enable the component without clearing the image

|

| 132 |

+

return gr.update(elem_classes=[], interactive=True), toggle_label, submit_btn_label

|

| 133 |

+

|

| 134 |

+

def set_tryon(garment_img, model_img, output_img, garment_condition):

|

| 135 |

+

garment_update, toggle_label, submit_btn_label = handle_toggle(False)

|

| 136 |

+

return garment_update, toggle_label, submit_btn_label

|

| 137 |

+

|

| 138 |

+

def set_tryoff(model_img, output_img, garment_condition):

|

| 139 |

+

garment_update, toggle_label, submit_btn_label = handle_toggle(True)

|

| 140 |

+

return garment_update, toggle_label, submit_btn_label

|

| 141 |

+

|

| 142 |

+

def garment_sort_key(filename):

|

| 143 |

+

if filename.startswith("upper_"):

|

| 144 |

+

return (0, filename)

|

| 145 |

+

elif filename.startswith("lower_"):

|

| 146 |

+

return (1, filename)

|

| 147 |

+

elif filename.startswith("full_"):

|

| 148 |

+

return (2, filename)

|

| 149 |

+

else:

|

| 150 |

+

return (3, filename)

|

| 151 |

+

|

| 152 |

+

# Get images for examples

|

| 153 |

+

images_path = os.path.join(os.path.dirname(__file__),'images')

|

| 154 |

+

|

| 155 |

+

garment_list = os.listdir(os.path.join(images_path, "garments"))

|

| 156 |

+

garment_list_path = [

|

| 157 |

+

os.path.join(images_path, "garments", cloth)

|

| 158 |

+

for cloth in sorted(garment_list, key=garment_sort_key)

|

| 159 |

+

]

|

| 160 |

+

|

| 161 |

+

people_list = os.listdir(os.path.join(images_path, "persons"))

|

| 162 |

+

people_list_path = [os.path.join(images_path, "persons", human) for human in sorted(people_list)]

|

| 163 |

+

|

| 164 |

+

gr.set_static_paths(paths=[Path.cwd().absolute()/"images"])

|

| 165 |

+

|

| 166 |

+

# Create the Gradio interface

|

| 167 |

+

with gr.Blocks(css_paths="styles.css", theme=gr.themes.Ocean(), title="Voost: Virtual Try-On/Off") as demo:

|

| 168 |

+

with gr.Row():

|

| 169 |

+

gr.HTML("""

|

| 170 |

+

<div class="header-container">

|

| 171 |

+

<div class="logo-container">

|

| 172 |

+

<a href="https://nxn.ai/">

|

| 173 |

+

<picture>

|

| 174 |

+

<source media="(prefers-color-scheme: dark)" srcset="/gradio_api/file=images/dark_mode_logo.png"/>

|

| 175 |

+

<img src='/gradio_api/file=images/nxn_logo_transparent.png' style="height: 120px; width: 150px;"/>

|

| 176 |

+

</picture>

|

| 177 |

+

</a>

|

| 178 |

+

</div>

|

| 179 |

+

<div style="display: flex; flex-direction: column; align-items: center; text-align: center;">

|

| 180 |

+

<div style="font-size: 45px; margin-bottom: 10px;">

|

| 181 |

+

<b>Voost: Virtual Try-On/Off</b>

|

| 182 |

+

</div>

|

| 183 |

+

<div style="display: flex; justify-content: center; align-items: center; text-align: center;">

|

| 184 |

+

<a href="https://arxiv.org/abs/2508.04825">

|

| 185 |

+

<img src='https://img.shields.io/badge/arXiv-2508.04825-red?style=flat&logo=arXiv&logoColor=red' alt='arxiv'>

|

| 186 |

+

</a>

|

| 187 |

+

|

| 188 |

+

<a href='https://nxnai.github.io/Voost/'>

|

| 189 |

+

<img src='https://img.shields.io/badge/Webpage-Project-silver?style=flat&logo=&logoColor=orange' alt='webpage'>

|

| 190 |

+

</a>

|

| 191 |

+

|

| 192 |

+

<a href="https://github.com/nxnai/Voost">

|

| 193 |

+

<img src='https://img.shields.io/badge/GitHub-Repo-blue?style=flat&logo=GitHub' alt='GitHub'>

|

| 194 |

+

</a>

|

| 195 |

+

|

| 196 |

+

<a href="https://github.com/nxnai/Voost/blob/main/LICENSE">

|

| 197 |

+

<img src='https://img.shields.io/badge/License-CC BY--NC--SA--4.0-lightgreen?style=flat&logo=Lisence' alt='License'>

|

| 198 |

+

</a>

|

| 199 |

+

</div>

|

| 200 |

+

<div style="font-size: 14px; color: #666; margin-top: 5px;">

|

| 201 |

+

Website: <a href="https://nxn.ai" target="_blank">https://nxn.ai</a> Inquiries: <a href="mailto:[email protected]">[email protected]</a>

|

| 202 |

+

</div>

|

| 203 |

+

</div>

|

| 204 |

+

</div>

|

| 205 |

+

""")

|

| 206 |

+

|

| 207 |

+

gr.Markdown("---")

|

| 208 |

+

|

| 209 |

+

with gr.Row():

|

| 210 |

+

with gr.Column(scale=1):

|

| 211 |

+

gr.Markdown("<center><h4>Step 1: Select <em>Try-On</em> or <em>Try-Off</em> mode. </h4></center>")

|

| 212 |

+

input_toggle = Toggle(

|

| 213 |

+

label="Try-On",

|

| 214 |

+

value=False,

|

| 215 |

+

interactive=True,

|

| 216 |

+

elem_classes=["button-container"],

|

| 217 |

+

color="rgba(177, 162, 239, .5)",

|

| 218 |

+

elem_id="toggle-modify"

|

| 219 |

+

)

|

| 220 |

+

|

| 221 |

+

with gr.Column(scale=1):

|

| 222 |

+

gr.Markdown("<center><h4>Step 2: Select your desired garment type.</h4></center>")

|

| 223 |

+

garment_condition = gr.Radio(

|

| 224 |

+

choices=["Upper", "Lower", "Full"],

|

| 225 |

+

value="Upper",

|

| 226 |

+

interactive=True,

|

| 227 |

+

elem_classes=["center-item"],

|

| 228 |

+

show_label=False,

|

| 229 |

+

label="Garment Type"

|

| 230 |

+

)

|

| 231 |

+

|

| 232 |

+

with gr.Row():

|

| 233 |

+

with gr.Column(scale=1, elem_id="col-left"):

|

| 234 |

+

gr.Markdown("<center><h4>Step 3: Upload a model image. <br> (Optional) Use the draw tool to create the mask. ⬇️</h4></center>")

|

| 235 |

+

model_image = gr.ImageEditor(

|

| 236 |

+

label="Model Image",

|

| 237 |

+

type="pil",

|

| 238 |

+

height=450,

|

| 239 |

+

width=600,

|

| 240 |

+

interactive=True,

|

| 241 |

+

brush=gr.Brush(

|

| 242 |

+

default_color="rgba(255, 255, 255, 0.5)",

|

| 243 |

+

colors=["rgb(255, 255, 255)"]

|

| 244 |

+

),

|

| 245 |

+

eraser=gr.Eraser(),

|

| 246 |

+

placeholder="Upload an image\n or\n select the draw tool on the left\n to start editing mask"

|

| 247 |

+

)

|

| 248 |

+

|

| 249 |

+

model_examples = gr.Examples(

|

| 250 |

+

examples=people_list_path,

|

| 251 |

+

inputs=[model_image],

|

| 252 |

+

label="Model Examples",

|

| 253 |

+

examples_per_page=12,

|

| 254 |

+

)

|

| 255 |

+

|

| 256 |

+

with gr.Column(scale=1, elem_id="col-mid"):

|

| 257 |

+

gr.Markdown("<center><h4>Step 4: Upload a garment image. ⬇️ <br><br></h4></center>")

|

| 258 |

+

garment_input = gr.Image(

|

| 259 |

+

label="Garment Image",

|

| 260 |

+

type="pil",

|

| 261 |

+

height=450,

|

| 262 |

+

width=350,

|

| 263 |

+

visible=True,

|

| 264 |

+

interactive=True,

|

| 265 |

+

)

|

| 266 |

+

|

| 267 |

+

garment_examples = gr.Examples(

|

| 268 |

+

examples=garment_list_path,

|

| 269 |

+

inputs=[garment_input],

|

| 270 |

+

label="Garment Examples",

|

| 271 |

+

examples_per_page=12

|

| 272 |

+

)

|

| 273 |

+

|

| 274 |

+

with gr.Column(scale=1, elem_id="col-right"):

|

| 275 |

+

gr.Markdown("<center><h4>Step 5: Click the button below to run the model! ⬇️ <br><br></h4></center>")

|

| 276 |

+

output_image = gr.Image(

|

| 277 |

+

format="png",

|

| 278 |

+

label="Output Image",

|

| 279 |

+

type="pil",

|

| 280 |

+

height=450,

|

| 281 |

+

width=550,

|

| 282 |

+

interactive=False,

|

| 283 |

+

)

|

| 284 |

+

|

| 285 |

+

submit_btn = gr.Button(

|

| 286 |

+

value="Run Try-On",

|

| 287 |

+

elem_id="tryon-color"

|

| 288 |

+

)

|

| 289 |

+

seed_input = gr.Slider(

|

| 290 |

+

label="Seed",

|

| 291 |

+

value=1234,

|

| 292 |

+

minimum=0,

|

| 293 |

+

maximum=2**16 - 1, # 2**32 - 1

|

| 294 |

+

step=1,

|

| 295 |

+

interactive=True,

|

| 296 |

+

elem_id="seed-input",

|

| 297 |

+

)

|

| 298 |

+

|

| 299 |

+

gr.HTML("""

|

| 300 |

+

<div style="margin-top: 15px; padding: 10px; background-color: #f8f9fa; border-radius: 8px; border-left: 4px solid #ffc107;">

|

| 301 |

+

<p style="margin: 0; font-size: 16px; color: #856404;">

|

| 302 |

+

<strong>⚠️ Note:</strong> Errors may occur due to high concurrent requests or NSFW content detection. Please try again if needed.

|

| 303 |

+

</p>

|

| 304 |

+

</div>

|

| 305 |

+

""")

|

| 306 |

+

|

| 307 |

+

gr.Markdown("---")

|

| 308 |

+

|

| 309 |

+

with gr.Row():

|

| 310 |

+

tryon_examples = gr.Examples(

|

| 311 |

+

examples=[

|

| 312 |

+

["Upper", "images/examples/tryon/persons/1.jpg", "images/examples/tryon/garments/1.jpg", "images/examples/tryon/outputs/1.webp"],

|

| 313 |

+

["Lower", "images/examples/tryon/persons/2.jpg", "images/examples/tryon/garments/2.jpg", "images/examples/tryon/outputs/2.webp"],

|

| 314 |

+

["Full", "images/examples/tryon/persons/3.jpg", "images/examples/tryon/garments/3.jpg", "images/examples/tryon/outputs/3.webp"],

|

| 315 |

+

],

|

| 316 |

+

inputs=[garment_condition, model_image, garment_input, output_image],

|

| 317 |

+

fn=set_tryon,

|

| 318 |

+

outputs=[garment_input, input_toggle, submit_btn],

|

| 319 |

+

label="Try-on Examples",

|

| 320 |

+

run_on_click=True

|

| 321 |

+

)

|

| 322 |

+

|

| 323 |

+

tryoff_examples = gr.Examples(

|

| 324 |

+

examples=[

|

| 325 |

+

["Upper", "images/examples/tryoff/persons/1.jpg", "images/examples/tryoff/outputs/1.webp"],

|

| 326 |

+

["Lower", "images/examples/tryoff/persons/2.jpg", "images/examples/tryoff/outputs/2.webp"],

|

| 327 |

+

["Full", "images/examples/tryoff/persons/3.jpg", "images/examples/tryoff/outputs/3.webp"],

|

| 328 |

+

],

|

| 329 |

+

inputs=[garment_condition, model_image, output_image],

|

| 330 |

+

fn=set_tryoff,

|

| 331 |

+

outputs=[garment_input, input_toggle, submit_btn],

|

| 332 |

+

label="Try-Off Examples",

|

| 333 |

+

run_on_click=True

|

| 334 |

+

)

|

| 335 |

+

|

| 336 |

+

gr.Markdown("---")

|

| 337 |

+

|

| 338 |

+

gr.HTML("""

|

| 339 |

+

<div class="footer-container">

|

| 340 |

+

<div class="footer-col footer-logo">

|

| 341 |

+

</div>

|

| 342 |

+

<div class="footer-col footer-main">

|

| 343 |

+

<h3>AI Studio Shaping the New Architecture of Fashion Imagery</h3>

|

| 344 |

+

<p>We’re a team of researchers from <b>Stanford</b>, <b>NYU</b>, <b>Seoul National University</b>, and <b>KAIST</b>. At <b>NXN Labs</b>, we’re developing an <b>image-to-image virtual try-on/try-off diffusion model</b>, designed to push the boundaries of digital production in the fashion industry.

|

| 345 |

+

This demo is <b>not the full version</b> of our model - it is based on our recent research work, <a href="https://arxiv.org/abs/2508.04825">Voost</a> - but it reflects the underlying research direction.

|

| 346 |

+

We’re headquartered in <b>San Francisco</b> and <b>Seoul</b>. If you’re a <b>brand or retailer</b> interested in using our full model API, please sign up at <a href="https://nxn.ai" target="_blank">https://nxn.ai</a> with your business name, and we’ll get back to you within 1–2 business days.

|

| 347 |

+

For part-time or full-time research roles, contact <a href="mailto:[email protected]">[email protected]</a>.

|

| 348 |

+

</p>

|

| 349 |

+

<p>©2025 NXN Labs ——— Copyright.</p>

|

| 350 |

+

</div>

|

| 351 |

+

<div class="footer-col footer-credits">

|

| 352 |

+

<h3>Special Thanks to NXN Labs Summer Interns:</h3>

|

| 353 |

+

<p>

|

| 354 |

+

<a href="https://www.linkedin.com/in/james-fu-74a16524b/" target="_blank">James Fu</a>,

|

| 355 |

+

<a href="https://www.linkedin.com/in/wing-lai-7a8987271/" target="_blank">Wing Lai</a>,

|

| 356 |

+

<a href="https://www.linkedin.com/in/stephen-park-53640332b/" target="_blank">Stephen Park</a>

|

| 357 |

+

<br><small>for their valuable contributions to this demo space</small>

|

| 358 |

+

</p>

|

| 359 |

+

</div>

|

| 360 |

+

</div>

|

| 361 |

+

""")

|

| 362 |

+

|

| 363 |

+

# Connect toggle to control garment input visibility

|

| 364 |

+

input_toggle.change(

|

| 365 |

+

fn=handle_toggle,

|

| 366 |

+

inputs=[input_toggle],

|

| 367 |

+

outputs=[garment_input, input_toggle, submit_btn],

|

| 368 |

+

api_name=False

|

| 369 |

+

)

|

| 370 |

+

|

| 371 |

+

submit_btn.click(

|

| 372 |

+

fn=api_helper,

|

| 373 |

+

inputs=[model_image, garment_input, garment_condition, input_toggle, seed_input],

|

| 374 |

+

outputs=[output_image],

|

| 375 |

+

concurrency_limit=7,

|

| 376 |

+

api_name=False

|

| 377 |

+

)

|

| 378 |

+

|

| 379 |

+

|

| 380 |

+

if __name__ == "__main__":

|

| 381 |

+

demo.launch(allowed_paths=["/gradio_api/images/examples"], share=True)

|
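The size-clamping arithmetic in `resize_image_if_needed` above can be sketched on plain integers, without PIL. This is a minimal sketch and not the author's code: `target_size` is a hypothetical name, and only `MAX_DIM`, `MIN_DIM` and the `min`/`max` scale-factor logic are taken from `app.py`.

```python
MAX_DIM = 2048  # same limits as app.py
MIN_DIM = 500


def target_size(width: int, height: int) -> tuple[tuple[int, int], bool]:
    """Return ((new_width, new_height), resized) following the app.py branches.

    Hypothetical helper for illustration; app.py applies the result with
    Image.resize(..., Image.Resampling.LANCZOS).
    """
    if width > MAX_DIM or height > MAX_DIM:
        # Shrink so the longer side fits inside MAX_DIM, keeping aspect ratio.
        scale = min(MAX_DIM / width, MAX_DIM / height)
    elif width < MIN_DIM or height < MIN_DIM:
        # Enlarge so the shorter side reaches MIN_DIM, keeping aspect ratio.
        scale = max(MIN_DIM / width, MIN_DIM / height)
    else:
        return (width, height), False
    return (int(width * scale), int(height * scale)), True


if __name__ == "__main__":
    print(target_size(4096, 2048))  # too large: scaled by 0.5
    print(target_size(250, 400))    # too small: scaled by 2.0
    print(target_size(1000, 1000))  # within bounds: unchanged
```

Because the "too large" branch is checked first, an image that is both wider than `MAX_DIM` and shorter than `MIN_DIM` is only scaled down. The drawn mask is resized to the same final size with nearest-neighbour sampling, which keeps it strictly black and white.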

ADDED (binary image files, stored via Git LFS):

- images/dark_mode_logo.png
- images/examples/tryoff/outputs/1.webp
- images/examples/tryoff/outputs/2.webp
- images/examples/tryoff/outputs/3.webp
- images/examples/tryoff/persons/1.jpg
- images/examples/tryoff/persons/2.jpg
- images/examples/tryoff/persons/3.jpg
- images/examples/tryon/garments/1.jpg
- images/examples/tryon/garments/2.jpg
- images/examples/tryon/garments/3.jpg
- images/examples/tryon/outputs/1.webp
- images/examples/tryon/outputs/2.webp
- images/examples/tryon/outputs/3.webp
- images/examples/tryon/persons/1.jpg
- images/examples/tryon/persons/2.jpg
- images/examples/tryon/persons/3.jpg
- images/garments/01.jpg
- images/garments/02.jpg
- images/garments/03.jpg
- images/garments/04.jpg
- images/garments/full_1.jpg
- images/garments/full_3.jpg
- images/garments/lower_1.jpg
- images/garments/lower_2.png
- images/garments/upper_1.png
- images/garments/upper_2.jpg
- images/garments/upper_3.jpg
- images/garments/upper_5.jpg
- images/nxn_logo_transparent.png
- images/persons/01.jpg
- images/persons/02.jpg
- images/persons/03.jpg
- images/persons/04.webp
- images/persons/05.jpg
- images/persons/06.jpg
- images/persons/07.webp
- images/persons/08.jpg
- images/persons/09.jpg
- images/persons/10.jpg
- images/persons/11.jpg
- images/persons/12.jpg

requirements.txt

ADDED

|

@@ -0,0 +1,4 @@

|

| 1 |

+

gradio

|

| 2 |

+

gradio-toggle

|

| 3 |

+

Pillow

|

| 4 |

+

httpx

|

styles.css

ADDED

|

@@ -0,0 +1,180 @@

|

| 1 |

+

.header-container {

|

| 2 |

+

display: flex !important;

|

| 3 |

+

align-items: center !important;

|

| 4 |

+

justify-content: center !important;

|

| 5 |

+

position: relative !important;

|

| 6 |

+

width: 100% !important;

|

| 7 |

+

padding: 8px 0 !important;

|

| 8 |

+

}

|

| 9 |

+

|

| 10 |

+

.logo-container {

|

| 11 |

+

position: absolute !important;

|

| 12 |

+

left: 3% !important;

|

| 13 |

+

top: 50% !important;

|

| 14 |

+

transform: translateY(-50%) !important;

|

| 15 |

+

}

|

| 16 |

+

|

| 17 |

+

.title-container {

|

| 18 |

+

flex: 1 !important;

|

| 19 |

+

text-align: center !important;

|

| 20 |

+

}

|

| 21 |

+

|

| 22 |

+

.disabled-image {

|

| 23 |

+

opacity: 0.5;

|

| 24 |

+

pointer-events: none;

|

| 25 |

+

filter: grayscale(100%);

|

| 26 |

+

}

|

| 27 |

+

|

| 28 |

+

/* Hide Gradio footer */

|

| 29 |

+

footer {

|

| 30 |

+

display: none !important;

|

| 31 |

+

}

|

| 32 |

+

|

| 33 |

+

.footer {

|

| 34 |

+

display: none !important;

|

| 35 |

+

}

|

| 36 |

+

|

| 37 |

+

.gradio-container .footer {

|

| 38 |

+

display: none !important;

|

| 39 |

+

}

|

| 40 |

+

|

| 41 |

+

.button-container {

|

| 42 |

+

display: flex;

|

| 43 |

+

align-items: center !important;

|

| 44 |

+

justify-content: center;

|

| 45 |

+

gap: 8px;

|

| 46 |

+

}

|

| 47 |

+

|

| 48 |

+

.center-item {

|

| 49 |

+

display: flex;

|

| 50 |

+

align-items: center;

|

| 51 |

+

justify-content: center;

|

| 52 |

+

width: 100%;

|

| 53 |

+

}

|

| 54 |

+

|

| 55 |

+

.button-container .button {

|

| 56 |

+

margin: 0 !important;

|

| 57 |

+

padding: 0.5em 1.2em !important;

|

| 58 |

+

height: 40px !important;

|

| 59 |

+

line-height: 40px !important;

|

| 60 |

+

box-sizing: border-box !important;

|

| 61 |

+

vertical-align: middle !important;

|

| 62 |

+

font-size: 1rem !important;

|

| 63 |

+

}

|

| 64 |

+

|

| 65 |

+

.footer-container {

|

| 66 |

+

display: flex;

|

| 67 |

+

flex-direction: row;

|

| 68 |

+

justify-content: space-between;

|

| 69 |

+

align-items: flex-start;

|

| 70 |

+

width: 100%;

|

| 71 |

+

padding: 15px 0 !important;

|

| 72 |

+

gap: 32px;

|

| 73 |

+

}

|

| 74 |

+

|

| 75 |

+

.footer-col {

|

| 76 |

+

display: flex;

|

| 77 |

+

flex-direction: column;

|

| 78 |

+

align-items: center;

|

| 79 |

+

text-align: center;

|

| 80 |

+

min-width: 0;

|

| 81 |

+

}

|

| 82 |

+

|

| 83 |

+

.footer-logo {

|

| 84 |

+

flex: 1;

|

| 85 |

+

align-items: flex-start;

|

| 86 |

+

text-align: left;

|

| 87 |

+

}

|

| 88 |

+

|

| 89 |

+

.footer-main {

|

| 90 |

+

flex: 3; /* 3x wider than the other columns */

|

| 91 |

+

align-items: center;

|

| 92 |

+

text-align: center;

|

| 93 |

+

}

|

| 94 |

+

|

| 95 |

+

.footer-credits {

|

| 96 |

+

flex: 1;

|

| 97 |

+

align-items: flex-end;

|

| 98 |

+

text-align: right;

|

| 99 |

+

}

|

| 100 |

+

|

| 101 |

+

#tryoff-color {

|

| 102 |

+

background-image: linear-gradient(120deg, rgb(177, 162, 239) 0%, rgb(179, 172, 241) 20%, rgb(181, 182, 243) 30%, rgb(183, 192, 245) 40%, rgb(185, 202, 247) 50%, rgb(175, 212, 239) 60%, rgb(165, 222, 231) 70%, rgb(155, 232, 223) 80%, rgb(145, 235, 215) 90%, rgb(127, 215, 185) 100%);

|

| 103 |

+

}

|

| 104 |

+

|

| 105 |

+

#tryon-color {

|

| 106 |

+

background-image: linear-gradient(120deg, rgb(102, 205, 170) 0%, rgb(124, 215, 180) 15%, rgb(146, 225, 190) 30%, rgb(168, 235, 200) 45%, rgb(190, 235, 220) 60%, rgb(212, 235, 240) 75%, rgb(174, 225, 247) 90%, rgb(135, 206, 250) 100%);

|

| 107 |

+

}

|

| 108 |

+

|

| 109 |

+

#toggle-modify{

|

| 110 |

+

padding: 17.4px;

|

| 111 |

+

}

|

| 112 |

+

|

| 113 |

+

/* Model & Garment Examples images */

|

| 114 |

+

.gallery-item {

|

| 115 |

+

width: 90px !important;

|

| 116 |

+

height: 120px !important;

|

| 117 |

+

display: flex;

|

| 118 |

+

justify-content: center;

|

| 119 |

+

align-items: center;

|

| 120 |

+

}

|

| 121 |

+

|

| 122 |

+

.gallery-item > * {

|

| 123 |

+

width: auto !important;

|

| 124 |

+

height: auto !important;

|

| 125 |

+

max-width: 90px !important;

|

| 126 |

+

max-height: 120px !important;

|

| 127 |

+

border: none !important;

|

| 128 |

+

}

|

| 129 |

+

|

| 130 |

+

@media only screen and (min-width: 768px){

|

| 131 |

+

#col-left {

|

| 132 |

+

margin: 0 auto;

|

| 133 |

+

max-width: 450px;

|

| 134 |

+

}

|

| 135 |

+

#col-mid {

|

| 136 |

+

margin: 0 auto;

|

| 137 |

+

max-width: 450px;

|

| 138 |

+

}

|

| 139 |

+

#col-right {

|

| 140 |

+

margin: 0 auto;

|

| 141 |

+

max-width: 450px;

|

| 142 |

+

}

|

| 143 |

+

}

|

| 144 |

+

|

| 145 |

+

@media only screen and (max-width: 768px){

|

| 146 |

+

#col-left {

|

| 147 |

+

margin: 0 auto;

|

| 148 |

+

max-width: 300px;

|

| 149 |

+

}

|

| 150 |

+

#col-mid {

|

| 151 |

+

margin: 0 auto;

|

| 152 |

+

max-width: 300px;

|

| 153 |

+

}

|

| 154 |

+

#col-right {

|

| 155 |

+

margin: 0 auto;

|

| 156 |

+

max-width: 300px;

|

| 157 |

+

}

|

| 158 |

+

}

|

| 159 |

+

|

| 160 |

+

/* Hide the Garment Type column in Examples */

|

| 161 |

+

.svelte-p5q82i table th:first-child,

|

| 162 |

+

.svelte-p5q82i table td:first-child {

|

| 163 |

+

display: none;

|

| 164 |

+

}

|

| 165 |

+

|

| 166 |

+

/* For Model Image, because for some reason it is separate */

|

| 167 |

+

.container {

|

| 168 |

+

overflow: hidden;

|

| 169 |

+

width: 100% !important;

|

| 170 |

+

height: auto !important;

|

| 171 |

+

max-width: 90px !important;

|

| 172 |

+

max-height: 120px !important;

|

| 173 |

+

}

|

| 174 |

+

|

| 175 |

+

/* Dark mode styles */

|

| 176 |

+

@media (prefers-color-scheme: dark) {

|

| 177 |

+

.toggle-label{

|

| 178 |

+

color:white;

|

| 179 |

+

}

|

| 180 |

+

}

|