Sarthak

commited on

Commit

·

ecfceb8

1

Parent(s):

7bb46ce

initial commit

Browse files- .gitattributes +4 -35

- .gitignore +14 -0

- .python-version +1 -0

- LICENSE +201 -0

- MTEB_evaluate.py +350 -0

- README.md +3 -3

- config.json +13 -0

- distill.py +116 -0

- evaluate.py +422 -0

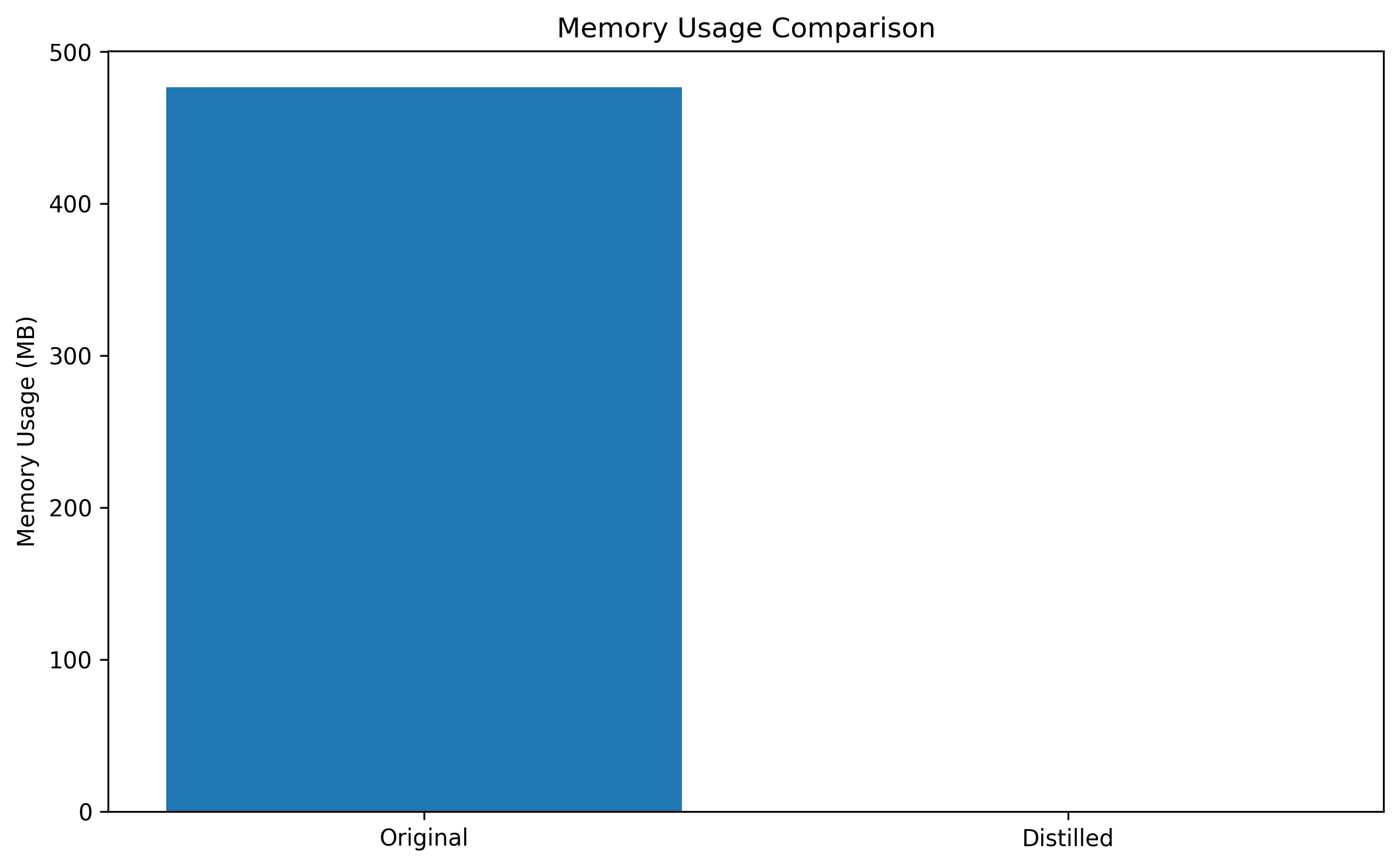

- evaluation/memory_comparison.png +3 -0

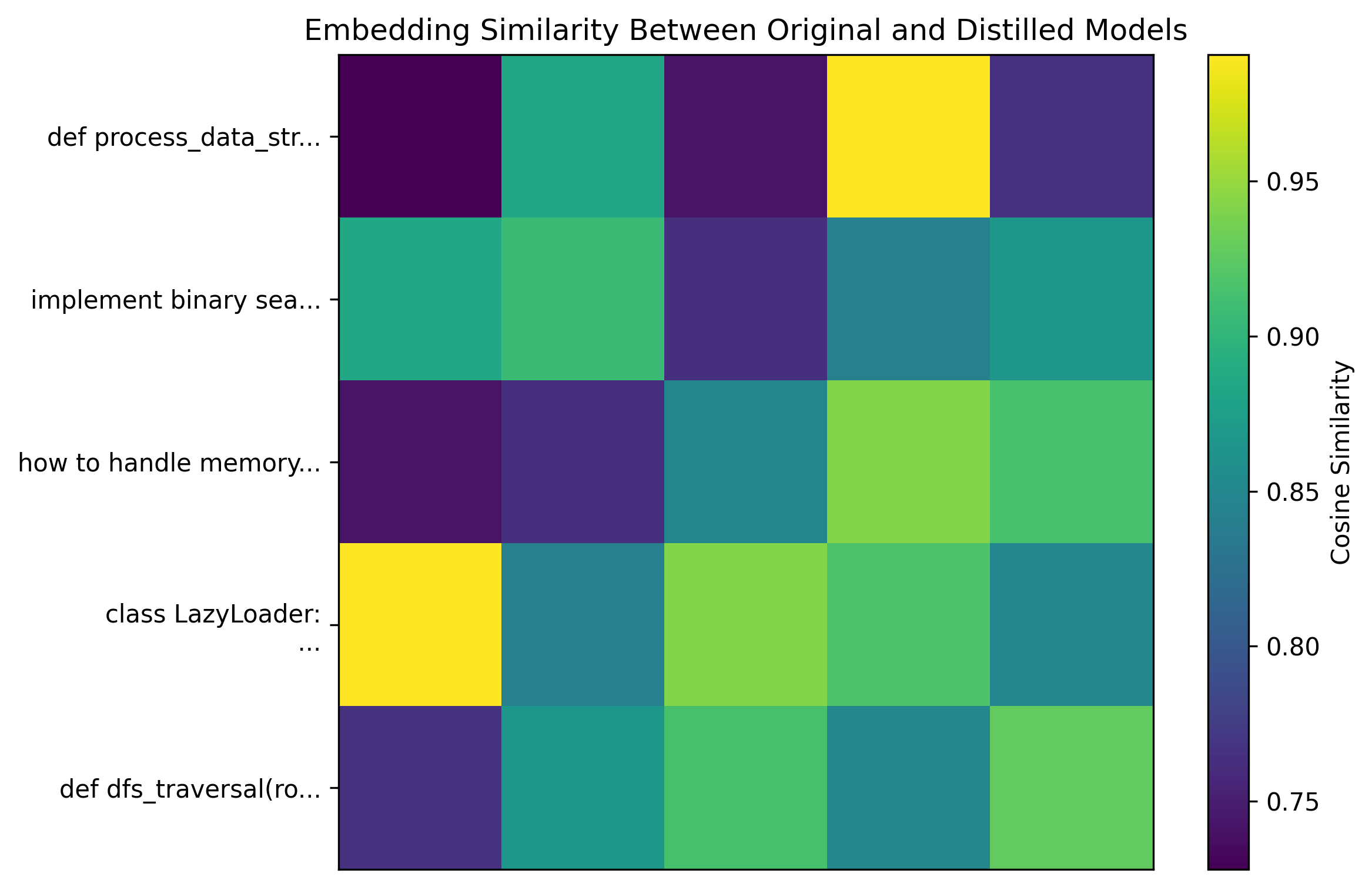

- evaluation/similarity_matrix.png +3 -0

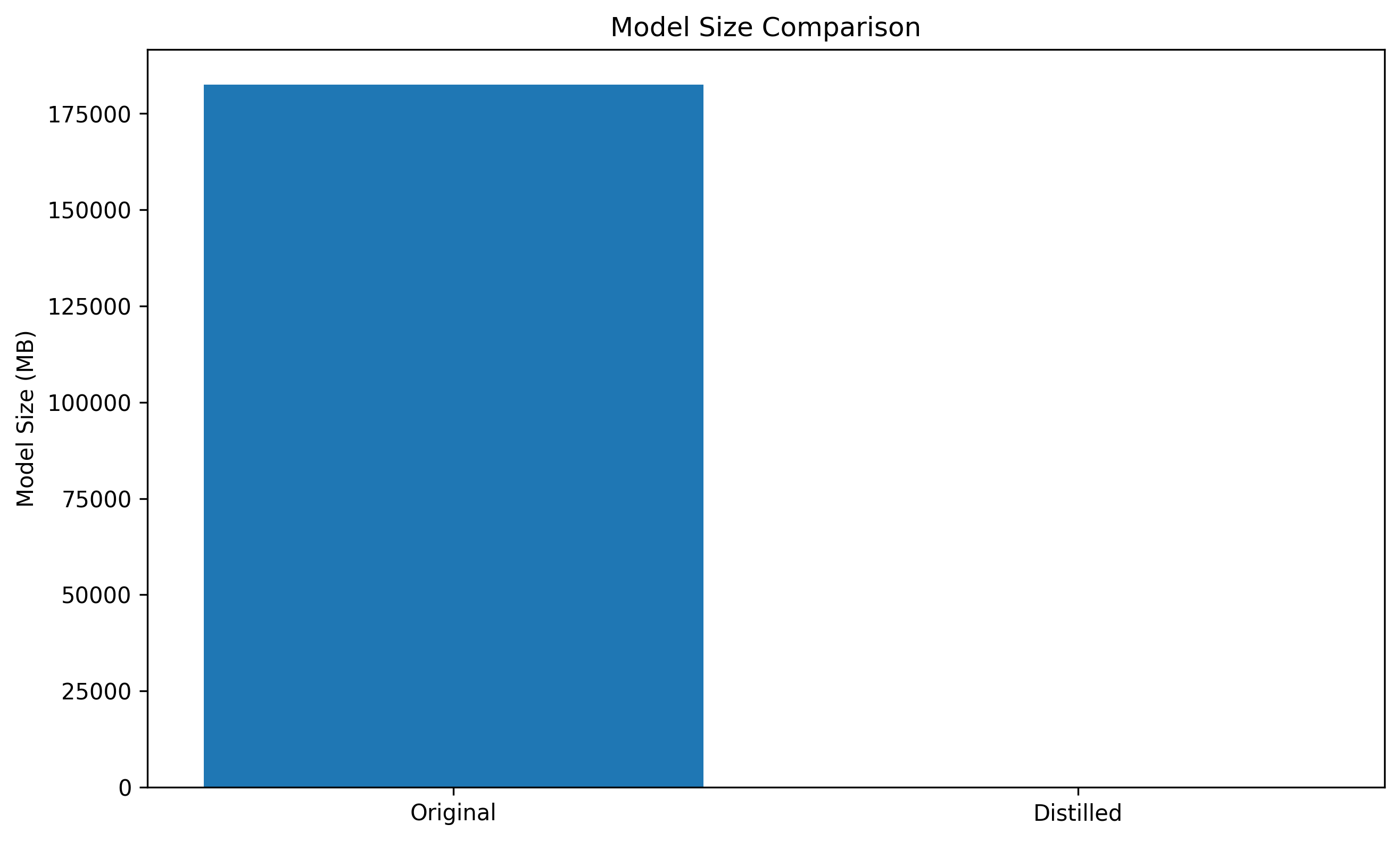

- evaluation/size_comparison.png +3 -0

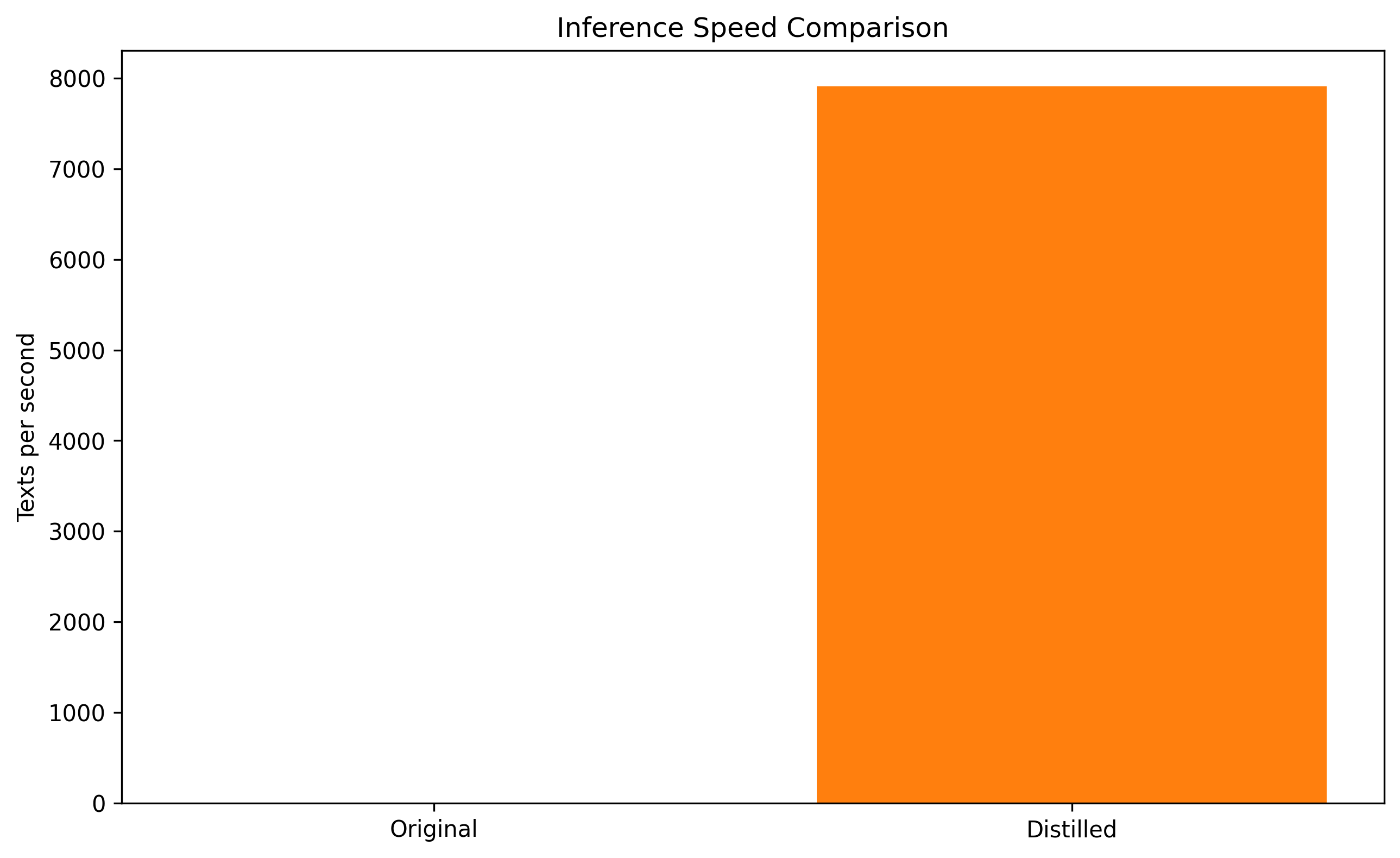

- evaluation/speed_comparison.png +3 -0

- model.safetensors +3 -0

- modules.json +14 -0

- mteb_results/gte-Qwen2-7B-instruct-M2V-Distilled/distilled/AmazonCounterfactualClassification.json +179 -0

- mteb_results/gte-Qwen2-7B-instruct-M2V-Distilled/distilled/Banking77Classification.json +73 -0

- mteb_results/gte-Qwen2-7B-instruct-M2V-Distilled/distilled/CQADupstackProgrammersRetrieval.json +158 -0

- mteb_results/gte-Qwen2-7B-instruct-M2V-Distilled/distilled/STSBenchmark.json +26 -0

- mteb_results/gte-Qwen2-7B-instruct-M2V-Distilled/distilled/SprintDuplicateQuestions.json +58 -0

- mteb_results/gte-Qwen2-7B-instruct-M2V-Distilled/distilled/StackExchangeClustering.json +47 -0

- mteb_results/gte-Qwen2-7B-instruct-M2V-Distilled/distilled/model_meta.json +1 -0

- mteb_results/mteb_parsed_results.json +3 -0

- mteb_results/mteb_raw_results.json +7 -0

- mteb_results/mteb_report.txt +21 -0

- mteb_results/mteb_summary.json +20 -0

- pipeline.skops +3 -0

- pyproject.toml +101 -0

- tokenizer.json +3 -0

- train_code_classification.py +365 -0

- uv.lock +0 -0

.gitattributes

CHANGED

|

@@ -1,37 +1,6 @@

|

|

| 1 |

-

*.7z filter=lfs diff=lfs merge=lfs -text

|

| 2 |

-

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

-

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

-

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

-

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

-

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

-

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

-

*.h5 filter=lfs diff=lfs merge=lfs -text

|

| 9 |

-

*.joblib filter=lfs diff=lfs merge=lfs -text

|

| 10 |

-

*.lfs.* filter=lfs diff=lfs merge=lfs -text

|

| 11 |

-

*.mlmodel filter=lfs diff=lfs merge=lfs -text

|

| 12 |

-

*.model filter=lfs diff=lfs merge=lfs -text

|

| 13 |

-

*.msgpack filter=lfs diff=lfs merge=lfs -text

|

| 14 |

-

*.npy filter=lfs diff=lfs merge=lfs -text

|

| 15 |

-

*.npz filter=lfs diff=lfs merge=lfs -text

|

| 16 |

-

*.onnx filter=lfs diff=lfs merge=lfs -text

|

| 17 |

-

*.ot filter=lfs diff=lfs merge=lfs -text

|

| 18 |

-

*.parquet filter=lfs diff=lfs merge=lfs -text

|

| 19 |

-

*.pb filter=lfs diff=lfs merge=lfs -text

|

| 20 |

-

*.pickle filter=lfs diff=lfs merge=lfs -text

|

| 21 |

-

*.pkl filter=lfs diff=lfs merge=lfs -text

|

| 22 |

-

*.pt filter=lfs diff=lfs merge=lfs -text

|

| 23 |

-

*.pth filter=lfs diff=lfs merge=lfs -text

|

| 24 |

-

*.rar filter=lfs diff=lfs merge=lfs -text

|

| 25 |

-

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 26 |

-

saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 27 |

-

*.tar.* filter=lfs diff=lfs merge=lfs -text

|

| 28 |

-

*.tar filter=lfs diff=lfs merge=lfs -text

|

| 29 |

-

*.tflite filter=lfs diff=lfs merge=lfs -text

|

| 30 |

-

*.tgz filter=lfs diff=lfs merge=lfs -text

|

| 31 |

-

*.wasm filter=lfs diff=lfs merge=lfs -text

|

| 32 |

-

*.xz filter=lfs diff=lfs merge=lfs -text

|

| 33 |

-

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

-

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

-

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 37 |

*.png filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

model.safetensors filter=lfs diff=lfs merge=lfs -text

|

| 3 |

*.png filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

evaluation/** filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

*.skops* filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

*.safetensors filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Python-generated files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[oc]

|

| 4 |

+

build/

|

| 5 |

+

dist/

|

| 6 |

+

wheels/

|

| 7 |

+

*.egg-info

|

| 8 |

+

|

| 9 |

+

# Virtual environments

|

| 10 |

+

.venv

|

| 11 |

+

|

| 12 |

+

# Cache

|

| 13 |

+

.ruff_cache

|

| 14 |

+

.mypy_cache

|

.python-version

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

3.12

|

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

MTEB_evaluate.py

ADDED

|

@@ -0,0 +1,350 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python

|

| 2 |

+

"""

|

| 3 |

+

MTEB Evaluation Script for Distilled Model - Code-Focused Tasks.

|

| 4 |

+

|

| 5 |

+

This script evaluates the distilled gte-Qwen2-7B-instruct model using MTEB

|

| 6 |

+

(Massive Text Embedding Benchmark) with a focus on tasks relevant for code:

|

| 7 |

+

|

| 8 |

+

- Classification: Tests ability to distinguish between different categories (e.g., programming languages)

|

| 9 |

+

- Clustering: Tests ability to group similar code by functionality

|

| 10 |

+

- STS: Tests semantic similarity understanding between code snippets

|

| 11 |

+

- Retrieval: Tests code search and duplicate detection capabilities

|

| 12 |

+

|

| 13 |

+

Features:

|

| 14 |

+

- Incremental evaluation: Skips tasks that already have results in mteb_results/

|

| 15 |

+

- Combines existing and new results automatically

|

| 16 |

+

- Saves results in multiple formats for analysis

|

| 17 |

+

|

| 18 |

+

Usage:

|

| 19 |

+

python MTEB_evaluate.py

|

| 20 |

+

|

| 21 |

+

Configuration:

|

| 22 |

+

- Set EVAL_ALL_TASKS = False to use only CODE_SPECIFIC_TASKS

|

| 23 |

+

- Modify CODE_SPECIFIC_TASKS for granular task selection

|

| 24 |

+

"""

|

| 25 |

+

|

| 26 |

+

import json

|

| 27 |

+

import logging

|

| 28 |

+

import sys

|

| 29 |

+

import time

|

| 30 |

+

from pathlib import Path

|

| 31 |

+

|

| 32 |

+

import mteb

|

| 33 |

+

from model2vec import StaticModel

|

| 34 |

+

from mteb import ModelMeta

|

| 35 |

+

|

| 36 |

+

from evaluation import (

|

| 37 |

+

CustomMTEB,

|

| 38 |

+

get_tasks,

|

| 39 |

+

make_leaderboard,

|

| 40 |

+

parse_mteb_results,

|

| 41 |

+

summarize_results,

|

| 42 |

+

)

|

| 43 |

+

|

| 44 |

+

# =============================================================================

|

| 45 |

+

# CONFIGURATION CONSTANTS

|

| 46 |

+

# =============================================================================

|

| 47 |

+

|

| 48 |

+

# Model Configuration

|

| 49 |

+

MODEL_PATH = "." # Path to the distilled model directory

|

| 50 |

+

MODEL_NAME = "gte-Qwen2-7B-instruct-M2V-Distilled" # Name for the model in results

|

| 51 |

+

|

| 52 |

+

# Evaluation Configuration

|

| 53 |

+

OUTPUT_DIR = "mteb_results" # Directory to save evaluation results

|

| 54 |

+

|

| 55 |

+

EVAL_ALL_TASKS = True

|

| 56 |

+

|

| 57 |

+

# Specific tasks most relevant for code evaluation (focused selection)

|

| 58 |

+

CODE_SPECIFIC_TASKS = [

|

| 59 |

+

# Classification - Programming language/category classification

|

| 60 |

+

"Banking77Classification", # Fine-grained classification (77 classes)

|

| 61 |

+

# Clustering - Code grouping by functionality

|

| 62 |

+

"StackExchangeClustering.v2", # Technical Q&A clustering (most relevant)

|

| 63 |

+

# STS - Code similarity understanding

|

| 64 |

+

"STSBenchmark", # Standard semantic similarity benchmark

|

| 65 |

+

# Retrieval - Code search capabilities

|

| 66 |

+

"CQADupstackProgrammersRetrieval", # Programming Q&A retrieval

|

| 67 |

+

# PairClassification - Duplicate/similar code detection

|

| 68 |

+

"SprintDuplicateQuestions", # Duplicate question detection

|

| 69 |

+

]

|

| 70 |

+

|

| 71 |

+

# Evaluation settings

|

| 72 |

+

EVAL_SPLITS = ["test"] # Dataset splits to evaluate on

|

| 73 |

+

VERBOSITY = 2 # MTEB verbosity level

|

| 74 |

+

|

| 75 |

+

# =============================================================================

|

| 76 |

+

|

| 77 |

+

# Configure logging

|

| 78 |

+

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(name)s - %(levelname)s - %(message)s")

|

| 79 |

+

logger = logging.getLogger(__name__)

|

| 80 |

+

|

| 81 |

+

|

| 82 |

+

def check_existing_results(output_path: Path, tasks: list) -> list:

|

| 83 |

+

"""Check for existing task results and filter out completed tasks."""

|

| 84 |

+

remaining_tasks = []

|

| 85 |

+

completed_tasks = []

|

| 86 |

+

|

| 87 |

+

for task in tasks:

|

| 88 |

+

task_name = task.metadata.name

|

| 89 |

+

# MTEB saves results as {model_name}__{task_name}.json

|

| 90 |

+

result_file = output_path / MODEL_NAME / f"{task_name}.json"

|

| 91 |

+

|

| 92 |

+

if result_file.exists():

|

| 93 |

+

completed_tasks.append(task_name)

|

| 94 |

+

logger.info(f"Skipping {task_name} - results already exist")

|

| 95 |

+

else:

|

| 96 |

+

remaining_tasks.append(task)

|

| 97 |

+

|

| 98 |

+

if completed_tasks:

|

| 99 |

+

logger.info(f"Found existing results for {len(completed_tasks)} tasks: {completed_tasks}")

|

| 100 |

+

|

| 101 |

+

return remaining_tasks

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

def load_existing_parsed_results(output_path: Path) -> dict:

|

| 105 |

+

"""Load existing parsed results if they exist."""

|

| 106 |

+

parsed_results_file = output_path / "mteb_parsed_results.json"

|

| 107 |

+

if parsed_results_file.exists():

|

| 108 |

+

try:

|

| 109 |

+

with parsed_results_file.open("r") as f:

|

| 110 |

+

return json.load(f)

|

| 111 |

+

except (json.JSONDecodeError, OSError) as e:

|

| 112 |

+

logger.warning(f"Could not load existing parsed results: {e}")

|

| 113 |

+

return {}

|

| 114 |

+

|

| 115 |

+

|

| 116 |

+

def load_and_display_existing_results(output_path: Path) -> None:

|

| 117 |

+

"""Load and display existing MTEB results."""

|

| 118 |

+

summary_file = output_path / "mteb_summary.json"

|

| 119 |

+

if summary_file.exists():

|

| 120 |

+

with summary_file.open("r") as f:

|

| 121 |

+

summary = json.load(f)

|

| 122 |

+

|

| 123 |

+

logger.info("=" * 80)

|

| 124 |

+

logger.info("EXISTING MTEB EVALUATION RESULTS:")

|

| 125 |

+

logger.info("=" * 80)

|

| 126 |

+

|

| 127 |

+

stats = summary.get("summary_stats")

|

| 128 |

+

if stats:

|

| 129 |

+

logger.info(f"Total Datasets: {stats.get('total_datasets', 'N/A')}")

|

| 130 |

+

logger.info(f"Average Score: {stats.get('average_score', 0):.4f}")

|

| 131 |

+

logger.info(f"Median Score: {stats.get('median_score', 0):.4f}")

|

| 132 |

+

|

| 133 |

+

logger.info("=" * 80)

|

| 134 |

+

else:

|

| 135 |

+

logger.info("No existing summary found. Individual task results may still exist.")

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

def run_mteb_evaluation() -> None:

|

| 139 |

+

"""Run MTEB evaluation using the evaluation package."""

|

| 140 |

+

output_path = Path(OUTPUT_DIR)

|

| 141 |

+

output_path.mkdir(parents=True, exist_ok=True)

|

| 142 |

+

|

| 143 |

+

logger.info(f"Loading model from {MODEL_PATH}")

|

| 144 |

+

model = StaticModel.from_pretrained(MODEL_PATH)

|

| 145 |

+

logger.info("Model loaded successfully")

|

| 146 |

+

|

| 147 |

+

# Set up model metadata for MTEB

|

| 148 |

+

model.mteb_model_meta = ModelMeta( # type: ignore[attr-defined]

|

| 149 |

+

name=MODEL_NAME, revision="distilled", release_date=None, languages=["eng"]

|

| 150 |

+

)

|

| 151 |

+

|

| 152 |

+

# Get specific code-relevant tasks (focused selection)

|

| 153 |

+

logger.info("Getting focused code-relevant MTEB tasks")

|

| 154 |

+

logger.info(f"Selected specific tasks: {CODE_SPECIFIC_TASKS}")

|

| 155 |

+

|

| 156 |

+

if EVAL_ALL_TASKS:

|

| 157 |

+

all_tasks = get_tasks()

|

| 158 |

+

else:

|

| 159 |

+

all_tasks = [mteb.get_task(task_name, languages=["eng"]) for task_name in CODE_SPECIFIC_TASKS]

|

| 160 |

+

|

| 161 |

+

logger.info(f"Found {len(all_tasks)} total tasks")

|

| 162 |

+

|

| 163 |

+

# Check for existing results and filter out completed tasks

|

| 164 |

+

tasks = check_existing_results(output_path, all_tasks)

|

| 165 |

+

logger.info(f"Will evaluate {len(tasks)} remaining tasks")

|

| 166 |

+

|

| 167 |

+

if not tasks:

|

| 168 |

+

logger.info("No new tasks to evaluate - all tasks already completed!")

|

| 169 |

+

|

| 170 |

+

# Load and display existing results

|

| 171 |

+

logger.info("Loading existing results...")

|

| 172 |

+

try:

|

| 173 |

+

load_and_display_existing_results(output_path)

|

| 174 |

+

except (json.JSONDecodeError, OSError, KeyError) as e:

|

| 175 |

+

logger.warning(f"Could not load existing results: {e}")

|

| 176 |

+

return

|

| 177 |

+

|

| 178 |

+

# Define the CustomMTEB object with the specified tasks

|

| 179 |

+

evaluation = CustomMTEB(tasks=tasks)

|

| 180 |

+

|

| 181 |

+

# Run the evaluation

|

| 182 |

+

logger.info("Starting MTEB evaluation...")

|

| 183 |

+

start_time = time.time()

|

| 184 |

+

|

| 185 |

+

results = evaluation.run(model, eval_splits=EVAL_SPLITS, output_folder=str(output_path), verbosity=VERBOSITY)

|

| 186 |

+

|

| 187 |

+

end_time = time.time()

|

| 188 |

+

evaluation_time = end_time - start_time

|

| 189 |

+

logger.info(f"Evaluation completed in {evaluation_time:.2f} seconds")

|

| 190 |

+

|

| 191 |

+

# Parse the results and summarize them

|

| 192 |

+

logger.info("Parsing and summarizing results...")

|

| 193 |

+

parsed_results = parse_mteb_results(mteb_results=results, model_name=MODEL_NAME)

|

| 194 |

+

|

| 195 |

+

# Load existing results if any and combine them

|

| 196 |

+

existing_results = load_existing_parsed_results(output_path)

|

| 197 |

+

if existing_results:

|

| 198 |

+

logger.info("Combining with existing results...")

|

| 199 |

+

# Convert to dict for merging

|

| 200 |

+

parsed_dict = dict(parsed_results) if hasattr(parsed_results, "items") else {}

|

| 201 |

+

# Simple merge - existing results take precedence to avoid overwriting

|

| 202 |

+

for key, value in existing_results.items():

|

| 203 |

+

if key not in parsed_dict:

|

| 204 |

+

parsed_dict[key] = value

|

| 205 |

+

parsed_results = parsed_dict

|

| 206 |

+

|

| 207 |

+

task_scores = summarize_results(parsed_results)

|

| 208 |

+

|

| 209 |

+

# Save results in different formats

|

| 210 |

+

save_results(output_path, results, parsed_results, task_scores, evaluation_time)

|

| 211 |

+

|

| 212 |

+

# Print the results in a leaderboard format

|

| 213 |

+

logger.info("MTEB Evaluation Results:")

|

| 214 |

+

logger.info("=" * 80)

|

| 215 |

+

leaderboard = make_leaderboard(task_scores) # type: ignore[arg-type]

|

| 216 |

+

logger.info(leaderboard.to_string(index=False))

|

| 217 |

+

logger.info("=" * 80)

|

| 218 |

+

|

| 219 |

+

logger.info(f"Evaluation completed successfully. Results saved to {OUTPUT_DIR}")

|

| 220 |

+

|

| 221 |

+

|

| 222 |

+

def save_results(

|

| 223 |

+

output_path: Path, raw_results: list, parsed_results: dict, task_scores: dict, evaluation_time: float

|

| 224 |

+

) -> None:

|

| 225 |

+

"""Save evaluation results in multiple formats."""

|

| 226 |

+

# Save raw results

|

| 227 |

+

raw_results_file = output_path / "mteb_raw_results.json"

|

| 228 |

+

with raw_results_file.open("w") as f:

|

| 229 |

+

json.dump(raw_results, f, indent=2, default=str)

|

| 230 |

+

logger.info(f"Raw results saved to {raw_results_file}")

|

| 231 |

+

|

| 232 |

+

# Save parsed results

|

| 233 |

+

parsed_results_file = output_path / "mteb_parsed_results.json"

|

| 234 |

+

with parsed_results_file.open("w") as f:

|

| 235 |

+

json.dump(parsed_results, f, indent=2, default=str)

|

| 236 |

+

logger.info(f"Parsed results saved to {parsed_results_file}")

|

| 237 |

+

|

| 238 |

+

# Generate summary statistics

|

| 239 |

+

summary_stats = generate_summary_stats(task_scores)

|

| 240 |

+

|

| 241 |

+

# Save task scores summary

|

| 242 |

+

summary = {

|

| 243 |

+

"model_name": MODEL_NAME,

|

| 244 |

+

"evaluation_time_seconds": evaluation_time,

|

| 245 |

+

"task_scores": task_scores,

|

| 246 |

+

"summary_stats": summary_stats,

|

| 247 |

+

}

|

| 248 |

+

|

| 249 |

+

summary_file = output_path / "mteb_summary.json"

|

| 250 |

+

with summary_file.open("w") as f:

|

| 251 |

+

json.dump(summary, f, indent=2, default=str)

|

| 252 |

+

logger.info(f"Summary saved to {summary_file}")

|

| 253 |

+

|

| 254 |

+

# Save human-readable report

|

| 255 |

+

report_file = output_path / "mteb_report.txt"

|

| 256 |

+

generate_report(output_path, task_scores, summary_stats, evaluation_time)

|

| 257 |

+

logger.info(f"Report saved to {report_file}")

|

| 258 |

+

|

| 259 |

+

|

| 260 |

+

def generate_summary_stats(task_scores: dict) -> dict:

|

| 261 |

+

"""Generate summary statistics from task scores."""

|

| 262 |

+

if not task_scores:

|

| 263 |

+

return {}

|

| 264 |

+

|

| 265 |

+

# Extract all individual dataset scores

|

| 266 |

+

all_scores = []

|

| 267 |

+

for model_data in task_scores.values():

|

| 268 |

+

if isinstance(model_data, dict) and "dataset_scores" in model_data:

|

| 269 |

+

dataset_scores = model_data["dataset_scores"]

|

| 270 |

+

if isinstance(dataset_scores, dict):

|

| 271 |

+

all_scores.extend(

|

| 272 |

+

[

|

| 273 |

+

float(score)

|

| 274 |

+

for score in dataset_scores.values()

|

| 275 |

+

if isinstance(score, int | float) and str(score).lower() != "nan"

|

| 276 |

+

]

|

| 277 |

+

)

|

| 278 |

+

|

| 279 |

+

if not all_scores:

|

| 280 |

+

return {}

|

| 281 |

+

|

| 282 |

+

import numpy as np

|

| 283 |

+

|

| 284 |

+

return {

|

| 285 |

+

"total_datasets": len(all_scores),

|

| 286 |

+

"average_score": float(np.mean(all_scores)),

|

| 287 |

+

"median_score": float(np.median(all_scores)),

|

| 288 |

+

"std_dev": float(np.std(all_scores)),

|

| 289 |

+

"min_score": float(np.min(all_scores)),

|

| 290 |

+

"max_score": float(np.max(all_scores)),

|

| 291 |

+

}

|

| 292 |

+

|

| 293 |

+

|

| 294 |

+

def generate_report(output_path: Path, task_scores: dict, summary_stats: dict, evaluation_time: float) -> None:

|

| 295 |

+

"""Generate human-readable evaluation report."""

|

| 296 |

+

report_file = output_path / "mteb_report.txt"

|

| 297 |

+

|

| 298 |

+

with report_file.open("w") as f:

|

| 299 |

+

f.write("=" * 80 + "\n")

|

| 300 |

+

f.write("MTEB Evaluation Report\n")

|

| 301 |

+

f.write("=" * 80 + "\n\n")

|

| 302 |

+

f.write(f"Model: {MODEL_NAME}\n")

|

| 303 |

+

f.write(f"Model Path: {MODEL_PATH}\n")

|

| 304 |

+

f.write(f"Evaluation Time: {evaluation_time:.2f} seconds\n")

|

| 305 |

+

|

| 306 |

+

# Write summary stats

|

| 307 |

+

if summary_stats:

|

| 308 |

+

f.write(f"Total Datasets: {summary_stats['total_datasets']}\n\n")

|

| 309 |

+

f.write("Summary Statistics:\n")

|

| 310 |

+

f.write(f" Average Score: {summary_stats['average_score']:.4f}\n")

|

| 311 |

+

f.write(f" Median Score: {summary_stats['median_score']:.4f}\n")

|

| 312 |

+

f.write(f" Standard Deviation: {summary_stats['std_dev']:.4f}\n")

|

| 313 |

+

f.write(f" Score Range: {summary_stats['min_score']:.4f} - {summary_stats['max_score']:.4f}\n\n")

|

| 314 |

+

else:

|

| 315 |

+

f.write("Summary Statistics: No valid results found\n\n")

|

| 316 |

+

|

| 317 |

+

# Write leaderboard

|

| 318 |

+

f.write("Detailed Results:\n")

|

| 319 |

+

f.write("-" * 50 + "\n")

|

| 320 |

+

if task_scores:

|

| 321 |

+

leaderboard = make_leaderboard(task_scores) # type: ignore[arg-type]

|

| 322 |

+

f.write(leaderboard.to_string(index=False))

|

| 323 |

+

else:

|

| 324 |

+

f.write("No results available\n")

|

| 325 |

+

|

| 326 |

+

f.write("\n\n" + "=" * 80 + "\n")

|

| 327 |

+

|

| 328 |

+

|

| 329 |

+

def main() -> None:

|

| 330 |

+

"""Main evaluation function."""

|

| 331 |

+

logger.info(f"Starting MTEB evaluation for {MODEL_NAME}")

|

| 332 |

+

logger.info(f"Model path: {MODEL_PATH}")

|

| 333 |

+

logger.info(f"Output directory: {OUTPUT_DIR}")

|

| 334 |

+

logger.info("Running focused MTEB evaluation on code-relevant tasks:")

|

| 335 |

+

logger.info(" - Classification: Programming language classification")

|

| 336 |

+

logger.info(" - Clustering: Code clustering by functionality")

|

| 337 |

+

logger.info(" - STS: Semantic similarity between code snippets")

|

| 338 |

+

logger.info(" - Retrieval: Code search and retrieval")

|

| 339 |

+

|

| 340 |

+

try:

|

| 341 |

+

run_mteb_evaluation()

|

| 342 |

+

logger.info("Evaluation pipeline completed successfully!")

|

| 343 |

+

|

| 344 |

+

except Exception:

|

| 345 |

+

logger.exception("Evaluation failed")

|

| 346 |

+

sys.exit(1)

|

| 347 |

+

|

| 348 |

+

|

| 349 |

+

if __name__ == "__main__":

|

| 350 |

+

main()

|

README.md

CHANGED

|

@@ -1,3 +1,3 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: apache-2.0

|

| 3 |

-

---

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

---

|

config.json

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"model_type": "model2vec",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"StaticModel"

|

| 5 |

+

],

|

| 6 |

+

"tokenizer_name": "Alibaba-NLP/gte-Qwen2-7B-instruct",

|

| 7 |

+

"apply_pca": 256,

|

| 8 |

+

"apply_zipf": null,

|

| 9 |

+

"sif_coefficient": 0.0001,

|

| 10 |

+

"hidden_dim": 256,

|

| 11 |

+

"seq_length": 1000000,

|

| 12 |

+

"normalize": true

|

| 13 |

+

}

|

distill.py

ADDED

|

@@ -0,0 +1,116 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/usr/bin/env python

|

| 2 |

+

"""

|

| 3 |

+

Script to distill Alibaba-NLP/gte-Qwen2-7B-instruct using Model2Vec.

|

| 4 |

+

|

| 5 |

+

This script performs the following operations:

|

| 6 |

+

1. Downloads the Alibaba-NLP/gte-Qwen2-7B-instruct model

|

| 7 |

+

2. Distills it using Model2Vec to create a smaller, faster static model

|

| 8 |

+

3. Saves the distilled model for further use

|

| 9 |

+

"""

|

| 10 |

+

|

| 11 |

+

import logging

|

| 12 |

+

import shutil

|

| 13 |

+

import time

|

| 14 |

+

from pathlib import Path

|

| 15 |

+

|

| 16 |

+

from model2vec.distill import distill

|

| 17 |

+

|

| 18 |

+

# =============================================================================

|

| 19 |

+

# CONFIGURATION CONSTANTS

|

| 20 |

+

# =============================================================================

|

| 21 |

+

|

| 22 |

+

# Model Configuration

|

| 23 |

+

MODEL_NAME = "Alibaba-NLP/gte-Qwen2-7B-instruct" # Model name or path for the source model

|

| 24 |

+

OUTPUT_DIR = "." # Directory to save the distilled model (current directory)

|

| 25 |

+

PCA_DIMS = 256 # Dimensions for PCA reduction (smaller = faster but less accurate)

|

| 26 |

+

|

| 27 |

+

# Hub Configuration

|

| 28 |

+

SAVE_TO_HUB = False # Whether to push the model to HuggingFace Hub

|

| 29 |

+

HUB_MODEL_ID = None # Model ID for HuggingFace Hub (if saving to hub)

|

| 30 |

+

|

| 31 |

+

# Generation Configuration

|

| 32 |

+

SKIP_README = True # Skip generating the README file

|

| 33 |

+

|

| 34 |

+

# =============================================================================

|

| 35 |

+

|

| 36 |

+

# Configure logging

|

| 37 |

+

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(name)s - %(levelname)s - %(message)s")

|

| 38 |

+

logger = logging.getLogger(__name__)

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

def main() -> None:

|

| 42 |

+

"""Run the distillation process for Alibaba-NLP/gte-Qwen2-7B-instruct."""

|

| 43 |

+

# Create output directory if it doesn't exist

|

| 44 |

+

output_dir = Path(OUTPUT_DIR)

|

| 45 |

+

output_dir.mkdir(parents=True, exist_ok=True)

|

| 46 |

+

|

| 47 |

+

logger.info(f"Starting distillation of {MODEL_NAME}")

|

| 48 |

+

logger.info(f"Distilled model will be saved to {output_dir}")

|

| 49 |

+

logger.info(f"Using PCA dimensions: {PCA_DIMS}")

|

| 50 |

+

logger.info(f"Skipping README generation: {SKIP_README}")

|

| 51 |

+

|

| 52 |

+

# Record start time for benchmarking

|

| 53 |

+

start_time = time.time()

|

| 54 |

+

|

| 55 |

+

# Run the distillation

|

| 56 |

+

try:

|

| 57 |

+

logger.info("Starting Model2Vec distillation...")

|

| 58 |

+

m2v_model = distill(

|

| 59 |

+

model_name=MODEL_NAME,

|

| 60 |

+

pca_dims=PCA_DIMS,

|

| 61 |

+

)

|

| 62 |

+

|

| 63 |

+

distill_time = time.time() - start_time

|

| 64 |

+

logger.info(f"Distillation completed in {distill_time:.2f} seconds")

|

| 65 |

+

|

| 66 |

+

# Save the distilled model

|

| 67 |

+

m2v_model.save_pretrained(OUTPUT_DIR)

|

| 68 |

+

logger.info(f"Model saved to {OUTPUT_DIR}")

|

| 69 |

+

|

| 70 |

+

# Remove README.md if it was created and we want to skip it

|

| 71 |

+

if SKIP_README and (output_dir / "README.md").exists():

|

| 72 |

+

(output_dir / "README.md").unlink()

|

| 73 |

+

logger.info("Removed auto-generated README.md")

|

| 74 |

+

|

| 75 |

+

# Get model size information

|

| 76 |

+

model_size_mb = sum(

|

| 77 |

+

f.stat().st_size for f in output_dir.glob("**/*") if f.is_file() and f.name != "README.md"

|

| 78 |

+

) / (1024 * 1024)

|

| 79 |

+

logger.info(f"Distilled model size: {model_size_mb:.2f} MB")

|

| 80 |

+

|

| 81 |

+

# Push to hub if requested

|

| 82 |

+

if SAVE_TO_HUB:

|

| 83 |

+

if HUB_MODEL_ID:

|

| 84 |

+

logger.info(f"Pushing model to HuggingFace Hub as {HUB_MODEL_ID}")

|

| 85 |

+

|

| 86 |

+

# Create a temporary README for Hub upload if needed

|

| 87 |

+

readme_path = output_dir / "README.md"

|

| 88 |

+

had_readme = readme_path.exists()

|

| 89 |

+

|

| 90 |

+

if SKIP_README and had_readme:

|

| 91 |

+

# Backup the README

|

| 92 |

+

shutil.move(readme_path, output_dir / "README.md.bak")

|

| 93 |

+

|

| 94 |

+

# Push to Hub

|

| 95 |

+

m2v_model.push_to_hub(HUB_MODEL_ID)

|

| 96 |

+

|

| 97 |

+

# Restore state

|

| 98 |

+

if SKIP_README:

|

| 99 |

+

if had_readme:

|

| 100 |

+

# Restore the backup

|

| 101 |

+

shutil.move(output_dir / "README.md.bak", readme_path)

|

| 102 |

+

elif (output_dir / "README.md").exists():

|

| 103 |

+

# Remove README created during push_to_hub

|

| 104 |

+

(output_dir / "README.md").unlink()

|

| 105 |

+

else:

|

| 106 |

+

logger.error("HUB_MODEL_ID must be specified when SAVE_TO_HUB is True")

|

| 107 |

+

|

| 108 |

+

logger.info("Distillation process completed successfully!")

|

| 109 |

+

|

| 110 |

+

except Exception:

|

| 111 |

+

logger.exception("Error during distillation")

|

| 112 |

+

raise

|

| 113 |

+

|

| 114 |

+

|

| 115 |

+

if __name__ == "__main__":

|

| 116 |

+

main()

|

evaluate.py

ADDED

|

@@ -0,0 +1,422 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|