Sarthak

commited on

Commit

·

1bc7e54

1

Parent(s):

454e47c

chore: remove unused scripts and update dependencies

Browse filesThis commit removes the MTEB evaluation script, distillation script, and evaluation script as they are no longer needed. Additionally, updates the pyproject.toml file to remove dependencies related to the removed scripts and adds typing-extensions to the dependencies.

- .codemap.yml +294 -0

- MTEB_evaluate.py +0 -343

- REPORT.md +299 -0

- Taskfile.yml +23 -0

- analysis_charts/batch_size_scaling.png +3 -0

- analysis_charts/benchmark_performance.png +3 -0

- analysis_charts/code_performance_radar.png +3 -0

- analysis_charts/comparative_radar.png +3 -0

- analysis_charts/efficiency_analysis.png +3 -0

- analysis_charts/language_heatmap.png +3 -0

- analysis_charts/memory_scaling.png +3 -0

- analysis_charts/model_comparison.png +3 -0

- analysis_charts/model_specifications.png +3 -0

- analysis_charts/peer_comparison.png +3 -0

- analysis_charts/radar_code_model2vec_Linq_Embed_Mistral.png +3 -0

- analysis_charts/radar_code_model2vec_Qodo_Embed_1_15B.png +3 -0

- analysis_charts/radar_code_model2vec_Reason_ModernColBERT.png +3 -0

- analysis_charts/radar_code_model2vec_all_MiniLM_L6_v2.png +3 -0

- analysis_charts/radar_code_model2vec_all_mpnet_base_v2.png +3 -0

- analysis_charts/radar_code_model2vec_bge_m3.png +3 -0

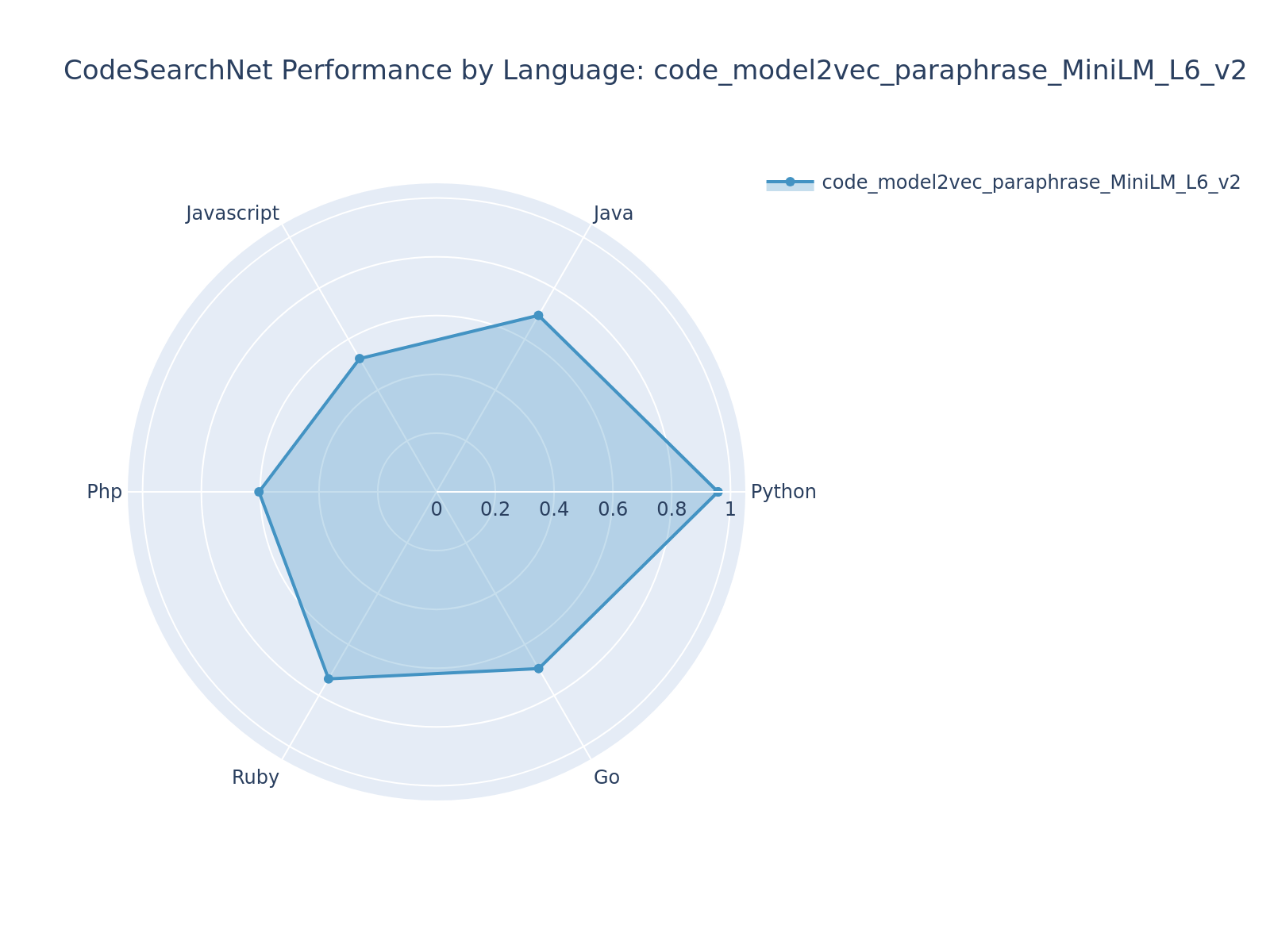

- analysis_charts/radar_code_model2vec_codebert_base.png +3 -0

- analysis_charts/radar_code_model2vec_graphcodebert_base.png +3 -0

- analysis_charts/radar_code_model2vec_gte_Qwen2_15B_instruct.png +3 -0

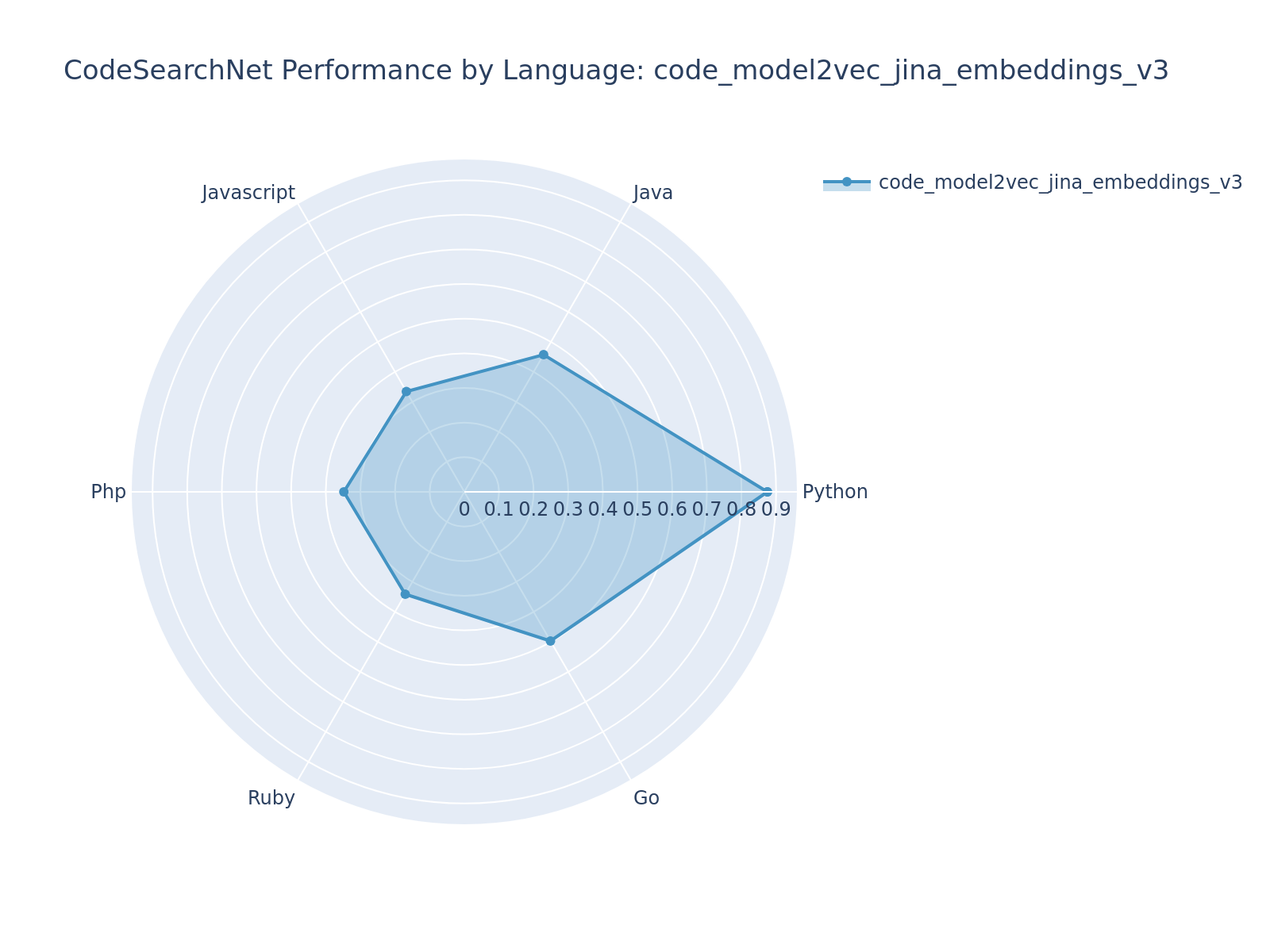

- analysis_charts/radar_code_model2vec_gte_Qwen2_7B_instruct.png +3 -0

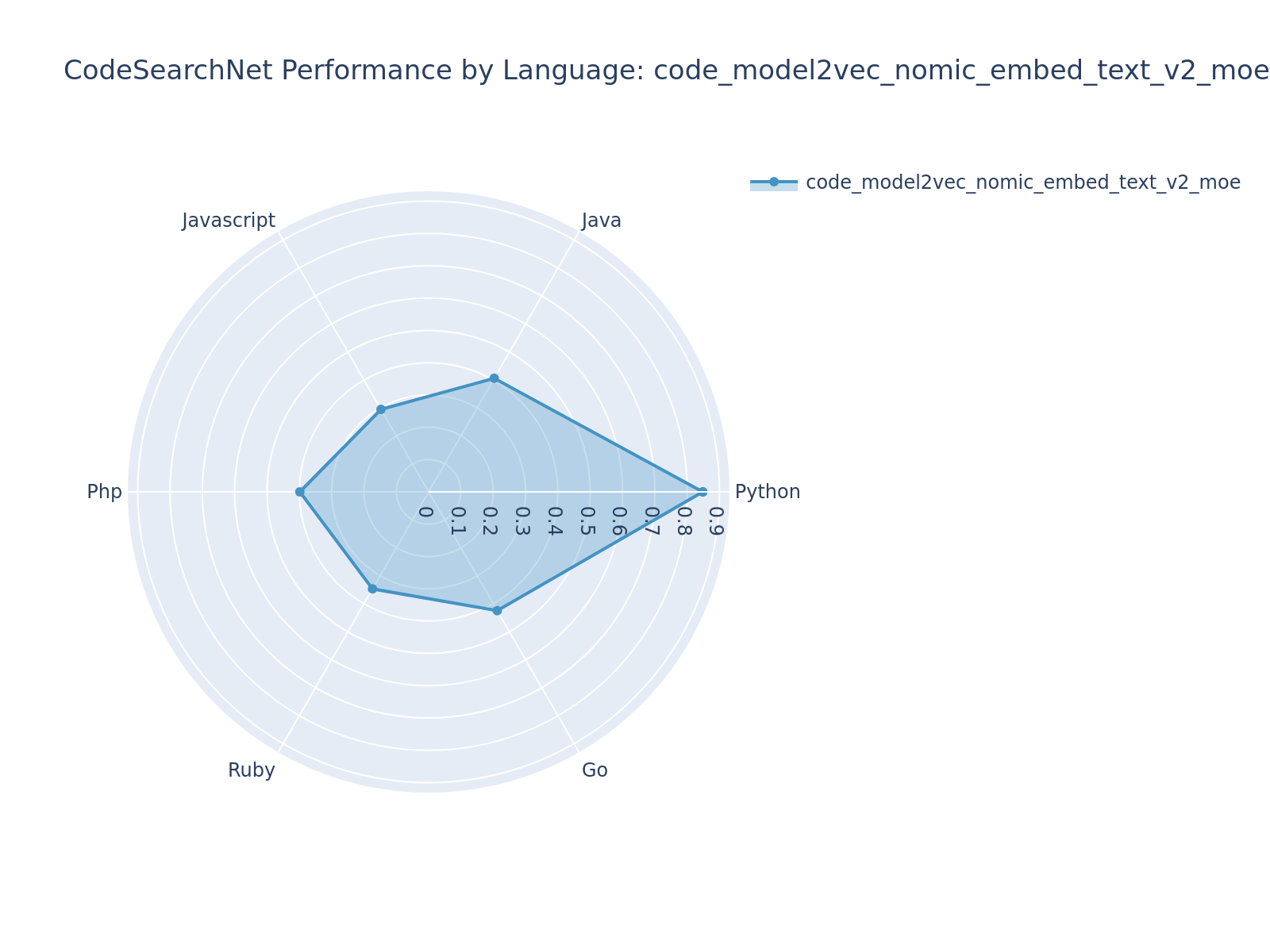

- analysis_charts/radar_code_model2vec_jina_embeddings_v2_base_code.png +3 -0

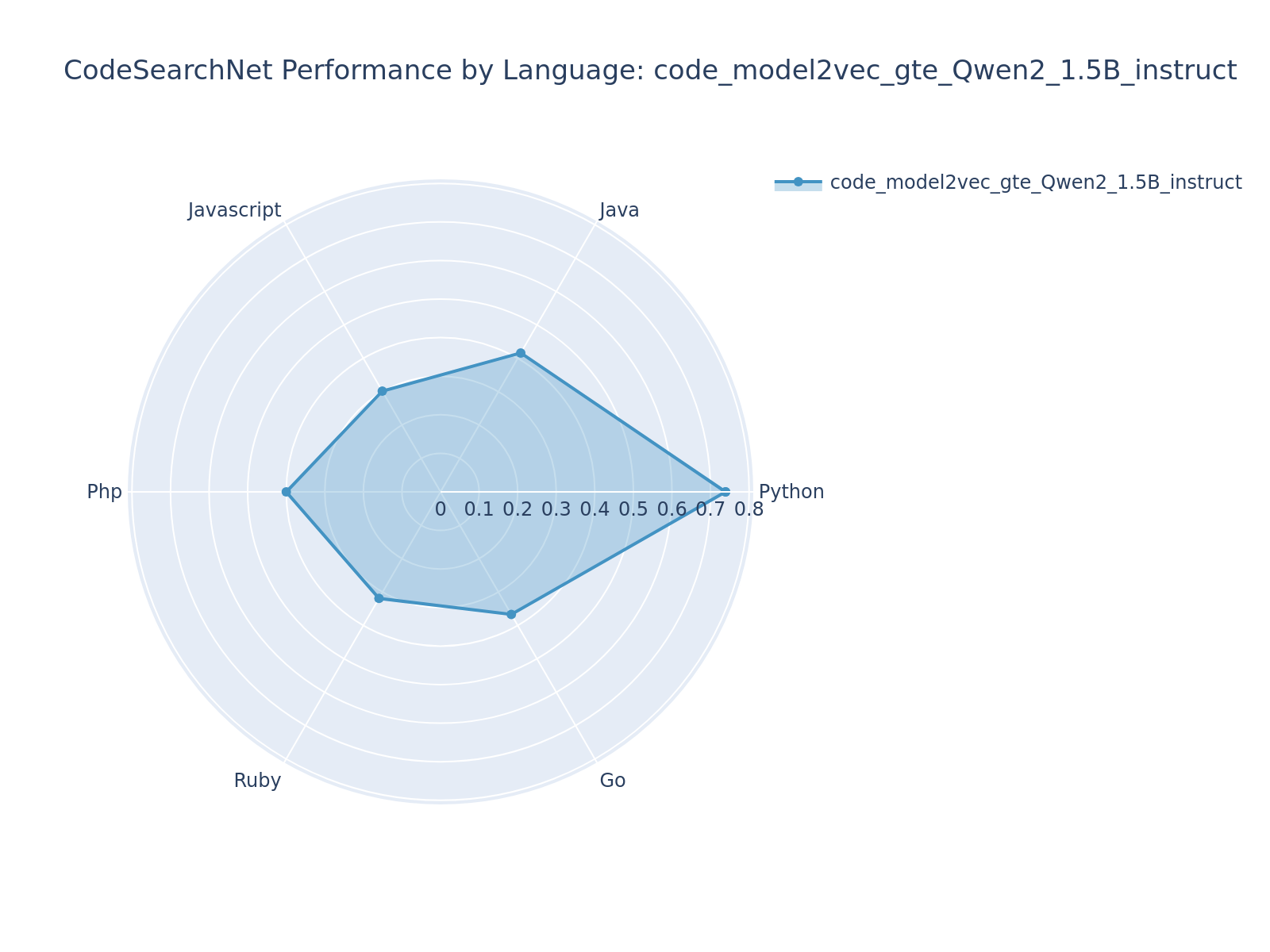

- analysis_charts/radar_code_model2vec_jina_embeddings_v3.png +3 -0

- analysis_charts/radar_code_model2vec_nomic_embed_text_v2_moe.png +3 -0

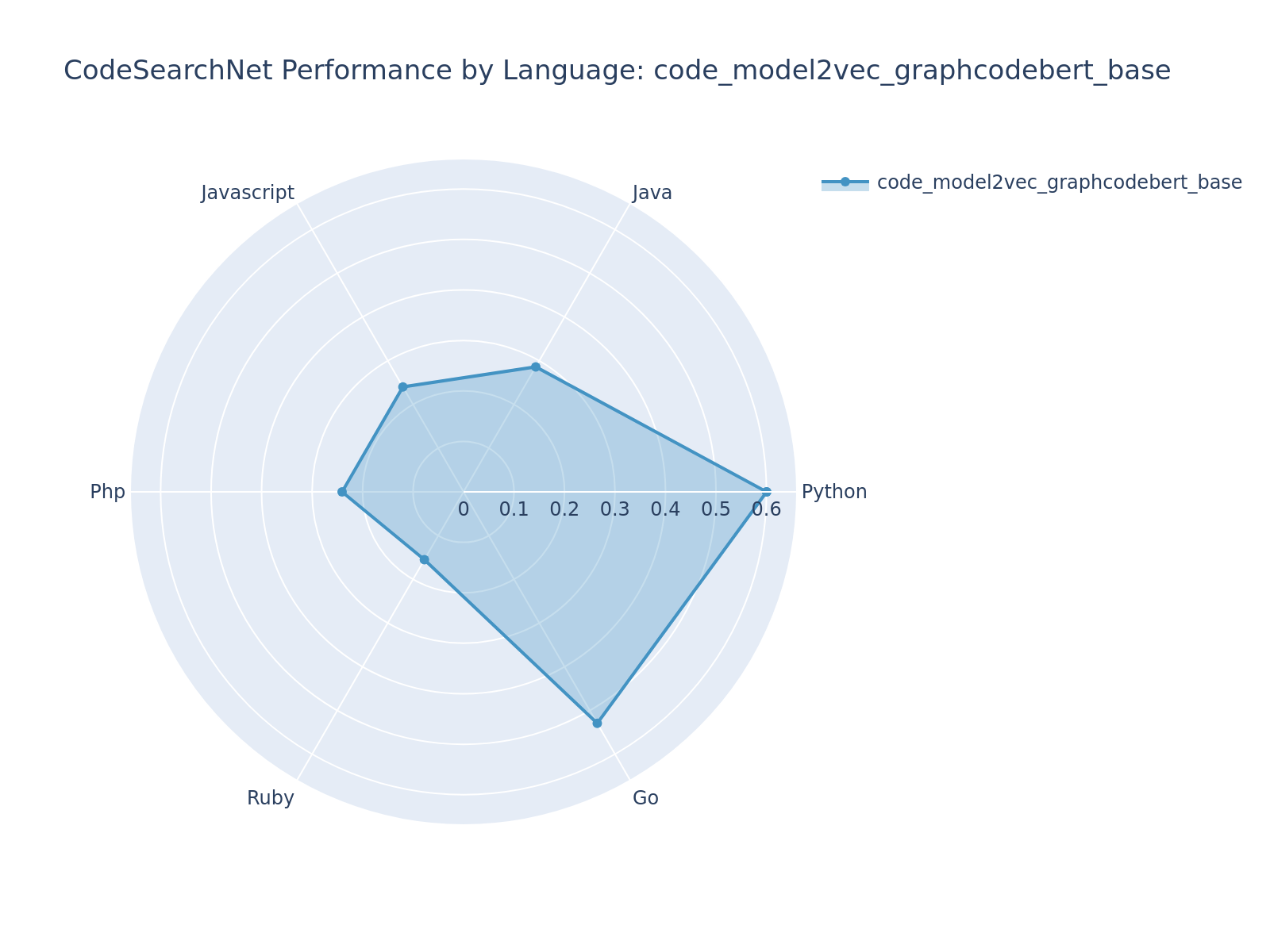

- analysis_charts/radar_code_model2vec_paraphrase_MiniLM_L6_v2.png +3 -0

- distill.py +0 -116

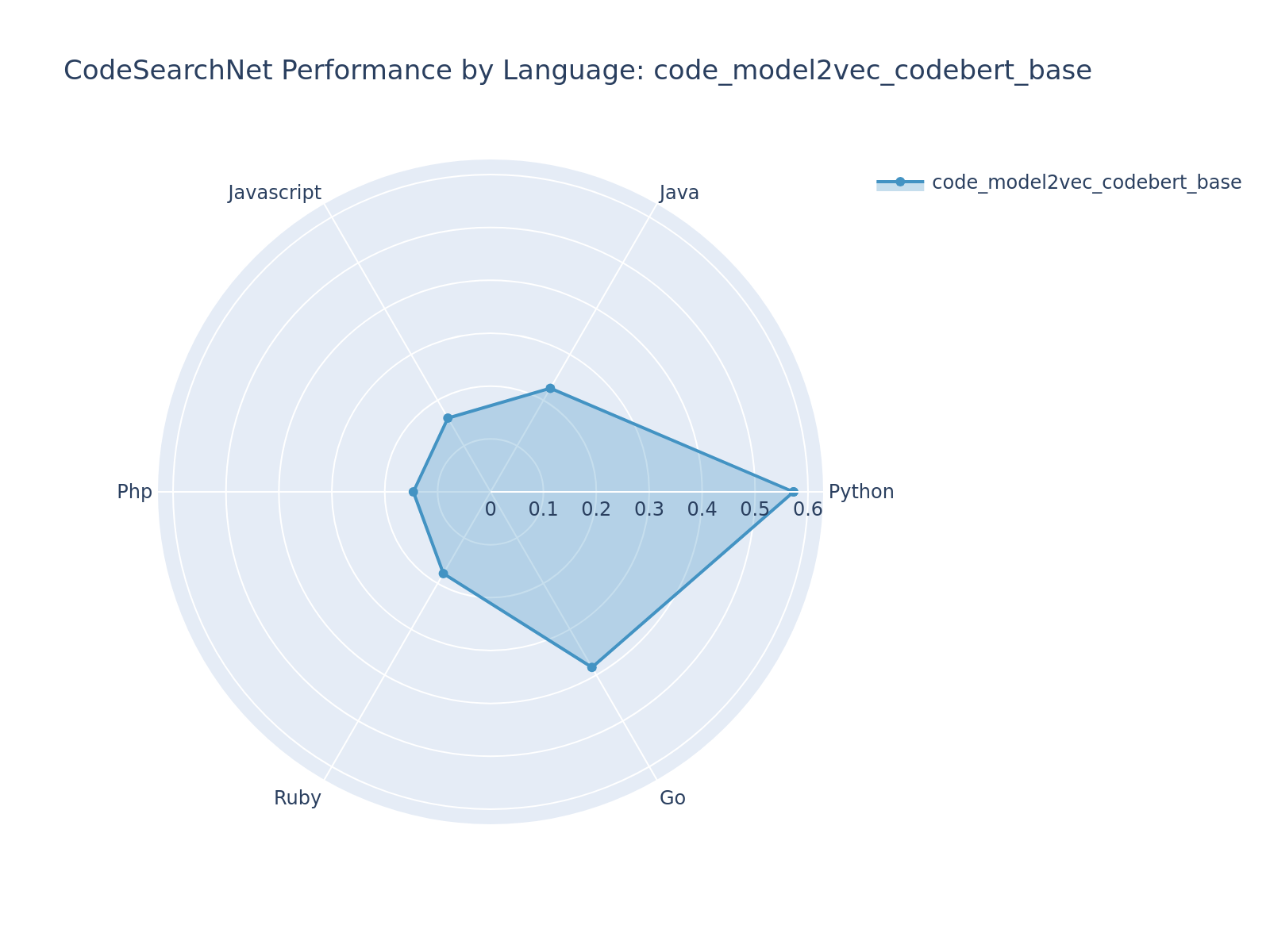

- evaluate.py +0 -422

- pyproject.toml +37 -5

- src/distiller/distill.py +419 -159

- src/distiller/evaluate.py +371 -43

- train_code_classification.py +0 -365

.codemap.yml

ADDED

|

@@ -0,0 +1,294 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

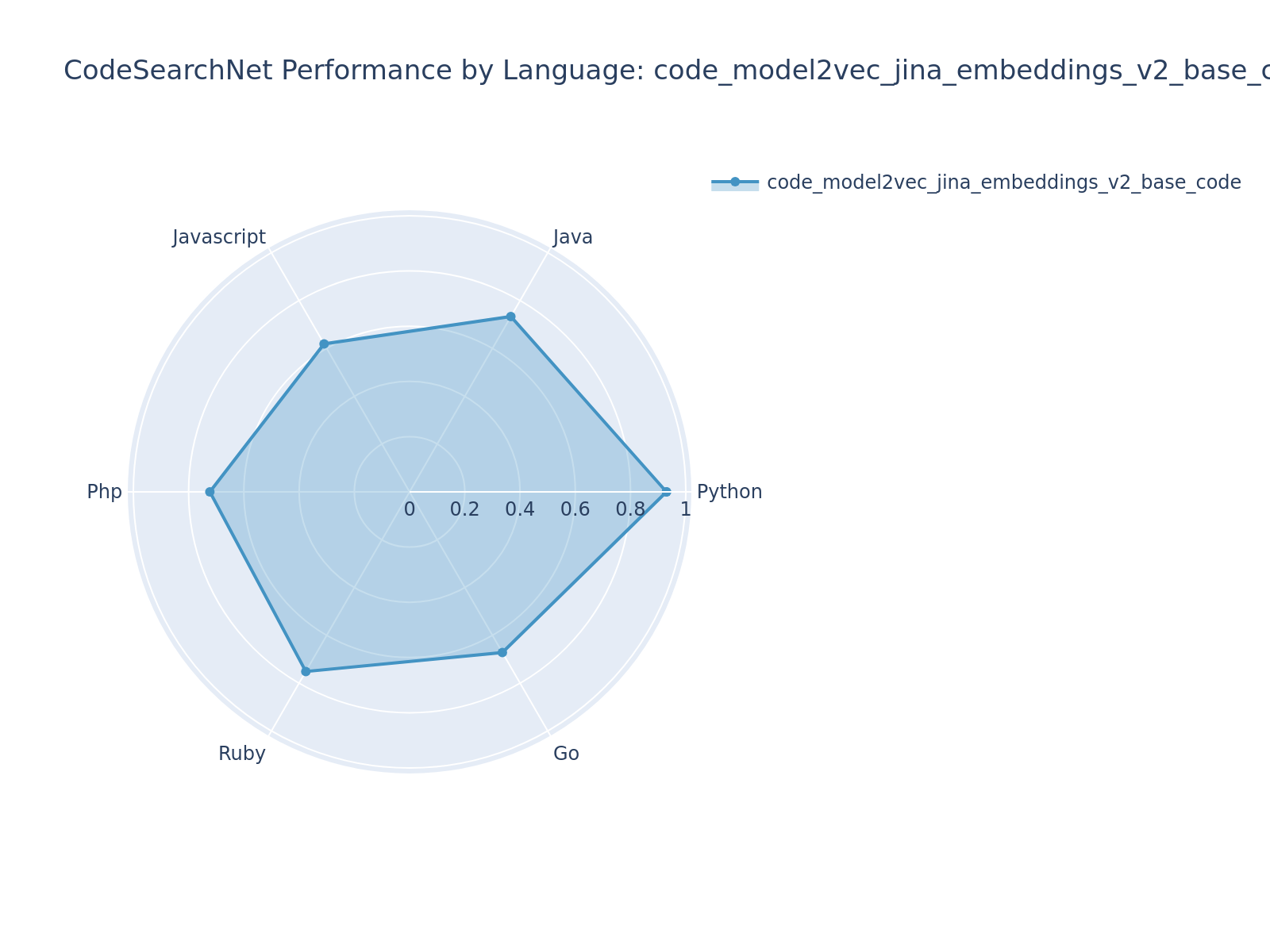

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# CodeMap Configuration File

|

| 2 |

+

# -------------------------

|

| 3 |

+

# This file configures CodeMap's behavior. Uncomment and modify settings as needed.

|

| 4 |

+

|

| 5 |

+

# LLM Configuration - Controls which model is used for AI operations

|

| 6 |

+

llm:

|

| 7 |

+

# Format: "provider:model-name", e.g., "openai:gpt-4o", "anthropic:claude-3-opus"

|

| 8 |

+

model: "google-gla:gemini-2.0-flash"

|

| 9 |

+

temperature: 0.5 # Lower for more deterministic outputs, higher for creativity

|

| 10 |

+

max_input_tokens: 1000000 # Maximum tokens in input

|

| 11 |

+

max_output_tokens: 10000 # Maximum tokens in responses

|

| 12 |

+

max_requests: 25 # Maximum number of requests

|

| 13 |

+

|

| 14 |

+

# Embedding Configuration - Controls vector embedding behavior

|

| 15 |

+

embedding:

|

| 16 |

+

# Recommended models: "minishlab/potion-base-8M3", Only Model2Vec static models are supported

|

| 17 |

+

model_name: "minishlab/potion-base-8M"

|

| 18 |

+

dimension: 256

|

| 19 |

+

# dimension_metric: "cosine" # Metric for dimension calculation (e.g., "cosine", "euclidean")

|

| 20 |

+

# max_retries: 3 # Maximum retries for embedding requests

|

| 21 |

+

# retry_delay: 5 # Delay in seconds between retries

|

| 22 |

+

# max_content_length: 5000 # Maximum characters per file chunk

|

| 23 |

+

# Qdrant (Vector DB) settings

|

| 24 |

+

# qdrant_batch_size: 100 # Batch size for Qdrant uploads

|

| 25 |

+

# url: "http://localhost:6333" # Qdrant server URL

|

| 26 |

+

# timeout: 30 # Qdrant client timeout in seconds

|

| 27 |

+

# prefer_grpc: true # Prefer gRPC for Qdrant communication

|

| 28 |

+

|

| 29 |

+

# Advanced chunking settings - controls how code is split

|

| 30 |

+

# chunking:

|

| 31 |

+

# max_hierarchy_depth: 2 # Maximum depth of code hierarchy to consider

|

| 32 |

+

# max_file_lines: 1000 # Maximum lines per file before splitting

|

| 33 |

+

|

| 34 |

+

# Clustering settings for embeddings

|

| 35 |

+

# clustering:

|

| 36 |

+

# method: "agglomerative" # Clustering method: "agglomerative", "dbscan"

|

| 37 |

+

# agglomerative: # Settings for Agglomerative Clustering

|

| 38 |

+

# metric: "precomputed" # Metric: "cosine", "euclidean", "manhattan", "l1", "l2", "precomputed"

|

| 39 |

+

# distance_threshold: 0.3 # Distance threshold for forming clusters

|

| 40 |

+

# linkage: "complete" # Linkage criterion: "ward", "complete", "average", "single"

|

| 41 |

+

# dbscan: # Settings for DBSCAN Clustering

|

| 42 |

+

# eps: 0.3 # The maximum distance between two samples for one to be considered as in the neighborhood of the other

|

| 43 |

+

# min_samples: 2 # The number of samples in a neighborhood for a point to be considered as a core point

|

| 44 |

+

# algorithm: "auto" # Algorithm to compute pointwise distances: "auto", "ball_tree", "kd_tree", "brute"

|

| 45 |

+

# metric: "precomputed" # Metric for distance computation: "cityblock", "cosine", "euclidean", "l1", "l2", "manhattan", "precomputed"

|

| 46 |

+

|

| 47 |

+

# RAG (Retrieval Augmented Generation) Configuration

|

| 48 |

+

rag:

|

| 49 |

+

max_context_length: 8000 # Maximum context length for the LLM

|

| 50 |

+

max_context_results: 100 # Maximum number of context results to return

|

| 51 |

+

similarity_threshold: 0.75 # Minimum similarity score (0-1) for relevance

|

| 52 |

+

# system_prompt: null # Optional system prompt to guide the RAG model (leave commented or set if needed)

|

| 53 |

+

include_file_content: true # Include file content in context

|

| 54 |

+

include_metadata: true # Include file metadata in context

|

| 55 |

+

|

| 56 |

+

# Sync Configuration - Controls which files are excluded from processing

|

| 57 |

+

sync:

|

| 58 |

+

exclude_patterns:

|

| 59 |

+

- "^node_modules/"

|

| 60 |

+

- "^\\.venv/"

|

| 61 |

+

- "^venv/"

|

| 62 |

+

- "^env/"

|

| 63 |

+

- "^__pycache__/"

|

| 64 |

+

- "^\\.mypy_cache/"

|

| 65 |

+

- "^\\.pytest_cache/"

|

| 66 |

+

- "^\\.ruff_cache/"

|

| 67 |

+

- "^dist/"

|

| 68 |

+

- "^build/"

|

| 69 |

+

- "^\\.git/"

|

| 70 |

+

- "^typings/"

|

| 71 |

+

- "^\\.pyc$"

|

| 72 |

+

- "^\\.pyo$"

|

| 73 |

+

- "^\\.so$"

|

| 74 |

+

- "^\\.dll$"

|

| 75 |

+

- "^\\.lib$"

|

| 76 |

+

- "^\\.a$"

|

| 77 |

+

- "^\\.o$"

|

| 78 |

+

- "^\\.class$"

|

| 79 |

+

- "^\\.jar$"

|

| 80 |

+

|

| 81 |

+

# Generation Configuration - Controls documentation generation

|

| 82 |

+

gen:

|

| 83 |

+

max_content_length: 5000 # Maximum content length per file for generation

|

| 84 |

+

use_gitignore: true # Use .gitignore patterns to exclude files

|

| 85 |

+

output_dir: "documentation" # Directory to store generated documentation

|

| 86 |

+

include_tree: true # Include directory tree in output

|

| 87 |

+

include_entity_graph: true # Include entity relationship graph

|

| 88 |

+

semantic_analysis: true # Enable semantic analysis

|

| 89 |

+

lod_level: "skeleton" # Level of detail: "signatures", "structure", "docs", "skeleton", "full"

|

| 90 |

+

|

| 91 |

+

# Mermaid diagram configuration for entity graphs

|

| 92 |

+

# mermaid_entities:

|

| 93 |

+

# - "module"

|

| 94 |

+

# - "class"

|

| 95 |

+

# - "function"

|

| 96 |

+

# - "method"

|

| 97 |

+

# - "constant"

|

| 98 |

+

# - "variable"

|

| 99 |

+

# - "import"

|

| 100 |

+

# mermaid_relationships:

|

| 101 |

+

# - "declares"

|

| 102 |

+

# - "imports"

|

| 103 |

+

# - "calls"

|

| 104 |

+

mermaid_show_legend: false

|

| 105 |

+

mermaid_remove_unconnected: true # Show isolated nodes

|

| 106 |

+

mermaid_styled: false # Style the mermaid diagram

|

| 107 |

+

|

| 108 |

+

# Processor Configuration - Controls code processing behavior

|

| 109 |

+

processor:

|

| 110 |

+

enabled: true # Enable the processor

|

| 111 |

+

max_workers: 4 # Maximum number of parallel workers

|

| 112 |

+

ignored_patterns: # Patterns to ignore during processing

|

| 113 |

+

- "**/.git/**"

|

| 114 |

+

- "**/__pycache__/**"

|

| 115 |

+

- "**/.venv/**"

|

| 116 |

+

- "**/node_modules/**"

|

| 117 |

+

- "**/*.pyc"

|

| 118 |

+

- "**/dist/**"

|

| 119 |

+

- "**/build/**"

|

| 120 |

+

default_lod_level: "signatures" # Default level of detail: "signatures", "structure", "docs", "full"

|

| 121 |

+

|

| 122 |

+

# File watcher configuration

|

| 123 |

+

# watcher:

|

| 124 |

+

# enabled: true # Enable file watching

|

| 125 |

+

# debounce_delay: 1.0 # Delay in seconds before processing changes

|

| 126 |

+

|

| 127 |

+

# Commit Command Configuration

|

| 128 |

+

commit:

|

| 129 |

+

strategy: "semantic" # Strategy for splitting diffs: "file", "hunk", "semantic"

|

| 130 |

+

bypass_hooks: false # Whether to bypass git hooks

|

| 131 |

+

use_lod_context: true # Use level of detail context

|

| 132 |

+

is_non_interactive: false # Run in non-interactive mode

|

| 133 |

+

|

| 134 |

+

# Diff splitter configuration

|

| 135 |

+

# diff_splitter:

|

| 136 |

+

# similarity_threshold: 0.6 # Similarity threshold for grouping related changes

|

| 137 |

+

# directory_similarity_threshold: 0.3 # Threshold for considering directories similar (e.g., for renames)

|

| 138 |

+

# file_move_similarity_threshold: 0.85 # Threshold for detecting file moves/renames based on content

|

| 139 |

+

# min_chunks_for_consolidation: 2 # Minimum number of small chunks to consider for consolidation

|

| 140 |

+

# max_chunks_before_consolidation: 20 # Maximum number of chunks before forcing consolidation

|

| 141 |

+

# max_file_size_for_llm: 50000 # Maximum file size (bytes) for LLM processing of individual files

|

| 142 |

+

# max_log_diff_size: 1000 # Maximum size (lines) of diff log to pass to LLM for context

|

| 143 |

+

# default_code_extensions: # File extensions considered as code for semantic splitting

|

| 144 |

+

# - "js"

|

| 145 |

+

# - "jsx"

|

| 146 |

+

# - "ts"

|

| 147 |

+

# - "tsx"

|

| 148 |

+

# - "py"

|

| 149 |

+

# - "java"

|

| 150 |

+

# - "c"

|

| 151 |

+

# - "cpp"

|

| 152 |

+

# - "h"

|

| 153 |

+

# - "hpp"

|

| 154 |

+

# - "cc"

|

| 155 |

+

# - "cs"

|

| 156 |

+

# - "go"

|

| 157 |

+

# - "rb"

|

| 158 |

+

# - "php"

|

| 159 |

+

# - "rs"

|

| 160 |

+

# - "swift"

|

| 161 |

+

# - "scala"

|

| 162 |

+

# - "kt"

|

| 163 |

+

# - "sh"

|

| 164 |

+

# - "pl"

|

| 165 |

+

# - "pm"

|

| 166 |

+

|

| 167 |

+

# Commit convention configuration (Conventional Commits)

|

| 168 |

+

convention:

|

| 169 |

+

types: # Allowed commit types

|

| 170 |

+

- "feat"

|

| 171 |

+

- "fix"

|

| 172 |

+

- "docs"

|

| 173 |

+

- "style"

|

| 174 |

+

- "refactor"

|

| 175 |

+

- "perf"

|

| 176 |

+

- "test"

|

| 177 |

+

- "build"

|

| 178 |

+

- "ci"

|

| 179 |

+

- "chore"

|

| 180 |

+

scopes: [] # Add project-specific scopes here, e.g., ["api", "ui", "db"]

|

| 181 |

+

max_length: 72 # Maximum length of commit message header

|

| 182 |

+

|

| 183 |

+

# Commit linting configuration (based on conventional-changelog-lint rules)

|

| 184 |

+

# lint:

|

| 185 |

+

# # Rules are defined as: {level: "ERROR"|"WARNING"|"DISABLED", rule: "always"|"never", value: <specific_value_if_any>}

|

| 186 |

+

# header_max_length:

|

| 187 |

+

# level: "ERROR"

|

| 188 |

+

# rule: "always"

|

| 189 |

+

# value: 100

|

| 190 |

+

# header_case: # e.g., 'lower-case', 'upper-case', 'camel-case', etc.

|

| 191 |

+

# level: "DISABLED"

|

| 192 |

+

# rule: "always"

|

| 193 |

+

# value: "lower-case"

|

| 194 |

+

# header_full_stop:

|

| 195 |

+

# level: "ERROR"

|

| 196 |

+

# rule: "never"

|

| 197 |

+

# value: "."

|

| 198 |

+

# type_enum: # Types must be from the 'convention.types' list

|

| 199 |

+

# level: "ERROR"

|

| 200 |

+

# rule: "always"

|

| 201 |

+

# type_case:

|

| 202 |

+

# level: "ERROR"

|

| 203 |

+

# rule: "always"

|

| 204 |

+

# value: "lower-case"

|

| 205 |

+

# type_empty:

|

| 206 |

+

# level: "ERROR"

|

| 207 |

+

# rule: "never"

|

| 208 |

+

# scope_case:

|

| 209 |

+

# level: "ERROR"

|

| 210 |

+

# rule: "always"

|

| 211 |

+

# value: "lower-case"

|

| 212 |

+

# scope_empty: # Set to "ERROR" if scopes are mandatory

|

| 213 |

+

# level: "DISABLED"

|

| 214 |

+

# rule: "never"

|

| 215 |

+

# scope_enum: # Scopes must be from the 'convention.scopes' list if enabled

|

| 216 |

+

# level: "DISABLED"

|

| 217 |

+

# rule: "always"

|

| 218 |

+

# # value: [] # Add allowed scopes here if rule is "always" and level is not DISABLED

|

| 219 |

+

# subject_case: # Forbids specific cases in the subject

|

| 220 |

+

# level: "ERROR"

|

| 221 |

+

# rule: "never"

|

| 222 |

+

# value: ["sentence-case", "start-case", "pascal-case", "upper-case"]

|

| 223 |

+

# subject_empty:

|

| 224 |

+

# level: "ERROR"

|

| 225 |

+

# rule: "never"

|

| 226 |

+

# subject_full_stop:

|

| 227 |

+

# level: "ERROR"

|

| 228 |

+

# rule: "never"

|

| 229 |

+

# value: "."

|

| 230 |

+

# subject_exclamation_mark:

|

| 231 |

+

# level: "DISABLED"

|

| 232 |

+

# rule: "never"

|

| 233 |

+

# body_leading_blank: # Body must start with a blank line after subject

|

| 234 |

+

# level: "WARNING"

|

| 235 |

+

# rule: "always"

|

| 236 |

+

# body_empty:

|

| 237 |

+

# level: "DISABLED"

|

| 238 |

+

# rule: "never"

|

| 239 |

+

# body_max_line_length:

|

| 240 |

+

# level: "ERROR"

|

| 241 |

+

# rule: "always"

|

| 242 |

+

# value: 100

|

| 243 |

+

# footer_leading_blank: # Footer must start with a blank line after body

|

| 244 |

+

# level: "WARNING"

|

| 245 |

+

# rule: "always"

|

| 246 |

+

# footer_empty:

|

| 247 |

+

# level: "DISABLED"

|

| 248 |

+

# rule: "never"

|

| 249 |

+

# footer_max_line_length:

|

| 250 |

+

# level: "ERROR"

|

| 251 |

+

# rule: "always"

|

| 252 |

+

# value: 100

|

| 253 |

+

|

| 254 |

+

# Pull Request Configuration

|

| 255 |

+

pr:

|

| 256 |

+

defaults:

|

| 257 |

+

base_branch: null # Default base branch (null = auto-detect, e.g., main, master, develop)

|

| 258 |

+

feature_prefix: "feature/" # Default feature branch prefix

|

| 259 |

+

|

| 260 |

+

strategy: "github-flow" # Git workflow: "github-flow", "gitflow", "trunk-based"

|

| 261 |

+

|

| 262 |

+

# Branch mapping for different PR types (primarily used in gitflow strategy)

|

| 263 |

+

# branch_mapping:

|

| 264 |

+

# feature:

|

| 265 |

+

# base: "develop"

|

| 266 |

+

# prefix: "feature/"

|

| 267 |

+

# release:

|

| 268 |

+

# base: "main"

|

| 269 |

+

# prefix: "release/"

|

| 270 |

+

# hotfix:

|

| 271 |

+

# base: "main"

|

| 272 |

+

# prefix: "hotfix/"

|

| 273 |

+

# bugfix:

|

| 274 |

+

# base: "develop"

|

| 275 |

+

# prefix: "bugfix/"

|

| 276 |

+

|

| 277 |

+

# PR generation configuration

|

| 278 |

+

generate:

|

| 279 |

+

title_strategy: "llm" # Strategy for generating PR titles: "commits" (from commit messages), "llm" (AI generated)

|

| 280 |

+

description_strategy: "llm" # Strategy for descriptions: "commits", "llm"

|

| 281 |

+

# description_template: | # Template for PR description when using 'llm' strategy. Placeholders: {changes}, {testing_instructions}, {screenshots}

|

| 282 |

+

# ## Changes

|

| 283 |

+

# {changes}

|

| 284 |

+

#

|

| 285 |

+

# ## Testing

|

| 286 |

+

# {testing_instructions}

|

| 287 |

+

#

|

| 288 |

+

# ## Screenshots

|

| 289 |

+

# {screenshots}

|

| 290 |

+

use_workflow_templates: true # Use workflow-specific templates if available (e.g., for GitHub PR templates)

|

| 291 |

+

|

| 292 |

+

# Ask Command Configuration

|

| 293 |

+

ask:

|

| 294 |

+

interactive_chat: false # Enable interactive chat mode for the 'ask' command

|

MTEB_evaluate.py

DELETED

|

@@ -1,343 +0,0 @@

|

|

| 1 |

-

#!/usr/bin/env python

|

| 2 |

-

"""

|

| 3 |

-

MTEB Evaluation Script with Subprocess Isolation (Code Information Retrieval Tasks).

|

| 4 |

-

|

| 5 |

-

This script evaluates models using MTEB with subprocess isolation to prevent

|

| 6 |

-

memory issues and process killing.

|

| 7 |

-

|

| 8 |

-

Features:

|

| 9 |

-

- Each task runs in a separate subprocess to isolate memory

|

| 10 |

-

- 1-minute timeout per task

|

| 11 |

-

- No retries - if task fails or times out, move to next one

|

| 12 |

-

- Memory monitoring and cleanup

|

| 13 |

-

|

| 14 |

-

Note: Multi-threading is NOT used here because:

|

| 15 |

-

1. Memory is the main bottleneck, not CPU

|

| 16 |

-

2. Running multiple tasks simultaneously would increase memory pressure

|

| 17 |

-

3. Many tasks are being killed (return code -9) due to OOM conditions

|

| 18 |

-

4. Sequential processing with subprocess isolation is more stable

|

| 19 |

-

"""

|

| 20 |

-

|

| 21 |

-

import contextlib

|

| 22 |

-

import json

|

| 23 |

-

import logging

|

| 24 |

-

import subprocess

|

| 25 |

-

import sys

|

| 26 |

-

import tempfile

|

| 27 |

-

import time

|

| 28 |

-

from pathlib import Path

|

| 29 |

-

|

| 30 |

-

import psutil

|

| 31 |

-

|

| 32 |

-

# =============================================================================

|

| 33 |

-

# CONFIGURATION

|

| 34 |

-

# =============================================================================

|

| 35 |

-

|

| 36 |

-

MODEL_PATH = "."

|

| 37 |

-

MODEL_NAME = "gte-Qwen2-7B-instruct-M2V-Distilled"

|

| 38 |

-

OUTPUT_DIR = "mteb_results"

|

| 39 |

-

TASK_TIMEOUT = 30 # 30 seconds timeout per task

|

| 40 |

-

MAX_RETRIES = 0 # No retries - move to next task if failed/timeout

|

| 41 |

-

|

| 42 |

-

# Constants

|

| 43 |

-

SIGKILL_RETURN_CODE = -9 # Process killed by SIGKILL (usually OOM)

|

| 44 |

-

|

| 45 |

-

# Configure logging

|

| 46 |

-

logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(levelname)s - %(message)s")

|

| 47 |

-

logger = logging.getLogger(__name__)

|

| 48 |

-

|

| 49 |

-

# =============================================================================

|

| 50 |

-

# SINGLE TASK RUNNER SCRIPT

|

| 51 |

-

# =============================================================================

|

| 52 |

-

|

| 53 |

-

TASK_RUNNER_SCRIPT = """

|

| 54 |

-

import sys

|

| 55 |

-

import os

|

| 56 |

-

import json

|

| 57 |

-

import tempfile

|

| 58 |

-

import traceback

|

| 59 |

-

from pathlib import Path

|

| 60 |

-

|

| 61 |

-

# Add current directory to path

|

| 62 |

-

sys.path.insert(0, ".")

|

| 63 |

-

|

| 64 |

-

try:

|

| 65 |

-

import mteb

|

| 66 |

-

from model2vec import StaticModel

|

| 67 |

-

from mteb import ModelMeta

|

| 68 |

-

from evaluation import CustomMTEB

|

| 69 |

-

|

| 70 |

-

def run_single_task():

|

| 71 |

-

# Get arguments

|

| 72 |

-

model_path = sys.argv[1]

|

| 73 |

-

task_name = sys.argv[2]

|

| 74 |

-

output_dir = sys.argv[3]

|

| 75 |

-

model_name = sys.argv[4]

|

| 76 |

-

|

| 77 |

-

# Load model

|

| 78 |

-

model = StaticModel.from_pretrained(model_path)

|

| 79 |

-

model.mteb_model_meta = ModelMeta(

|

| 80 |

-

name=model_name, revision="distilled", release_date=None, languages=["eng"]

|

| 81 |

-

)

|

| 82 |

-

|

| 83 |

-

# Get and run task

|

| 84 |

-

task = mteb.get_task(task_name, languages=["eng"])

|

| 85 |

-

evaluation = CustomMTEB(tasks=[task])

|

| 86 |

-

|

| 87 |

-

results = evaluation.run(

|

| 88 |

-

model,

|

| 89 |

-

eval_splits=["test"],

|

| 90 |

-

output_folder=output_dir,

|

| 91 |

-

verbosity=0

|

| 92 |

-

)

|

| 93 |

-

|

| 94 |

-

# Save results to temp file for parent process

|

| 95 |

-

with tempfile.NamedTemporaryFile(mode='w', delete=False, suffix='.json') as f:

|

| 96 |

-

json.dump({

|

| 97 |

-

"success": True,

|

| 98 |

-

"task_name": task_name,

|

| 99 |

-

"results": results

|

| 100 |

-

}, f)

|

| 101 |

-

temp_file = f.name

|

| 102 |

-

|

| 103 |

-

print(f"RESULT_FILE:{temp_file}")

|

| 104 |

-

return 0

|

| 105 |

-

|

| 106 |

-

if __name__ == "__main__":

|

| 107 |

-

exit(run_single_task())

|

| 108 |

-

|

| 109 |

-

except Exception as e:

|

| 110 |

-

print(f"ERROR: {str(e)}")

|

| 111 |

-

print(f"TRACEBACK: {traceback.format_exc()}")

|

| 112 |

-

exit(1)

|

| 113 |

-

"""

|

| 114 |

-

|

| 115 |

-

|

| 116 |

-

def get_available_tasks() -> list[str]:

|

| 117 |

-

"""Get list of available tasks."""

|

| 118 |

-

try:

|

| 119 |

-

import mteb

|

| 120 |

-

import mteb.benchmarks

|

| 121 |

-

|

| 122 |

-

# Use main MTEB benchmark for comprehensive evaluation

|

| 123 |

-

benchmark = mteb.benchmarks.CoIR

|

| 124 |

-

return [str(task) for task in benchmark.tasks] # All tasks

|

| 125 |

-

except Exception:

|

| 126 |

-

logger.exception("Failed to get tasks")

|

| 127 |

-

return []

|

| 128 |

-

|

| 129 |

-

|

| 130 |

-

def check_existing_results(output_path: Path, task_names: list[str]) -> list[str]:

|

| 131 |

-

"""Check for existing results and return remaining tasks."""

|

| 132 |

-

remaining_tasks = []

|

| 133 |

-

|

| 134 |

-

for task_name in task_names:

|

| 135 |

-

result_file = output_path / MODEL_NAME / "distilled" / f"{task_name}.json"

|

| 136 |

-

if result_file.exists():

|

| 137 |

-

logger.info(f"Skipping {task_name} - results already exist")

|

| 138 |

-

else:

|

| 139 |

-

remaining_tasks.append(task_name)

|

| 140 |

-

|

| 141 |

-

return remaining_tasks

|

| 142 |

-

|

| 143 |

-

|

| 144 |

-

def run_task_subprocess(task_name: str, output_dir: str) -> tuple[bool, str, float]:

|

| 145 |

-

"""Run a single task in a subprocess with memory and time limits."""

|

| 146 |

-

# Create temporary script file

|

| 147 |

-

with tempfile.NamedTemporaryFile(mode="w", suffix=".py", delete=False) as f:

|

| 148 |

-

f.write(TASK_RUNNER_SCRIPT)

|

| 149 |

-

script_path = f.name

|

| 150 |

-

|

| 151 |

-

try:

|

| 152 |

-

logger.info(f"Running task: {task_name}")

|

| 153 |

-

start_time = time.time()

|

| 154 |

-

|

| 155 |

-

# Run subprocess with timeout

|

| 156 |

-

# subprocess security: We control all inputs (script path and known arguments)

|

| 157 |

-

cmd = [sys.executable, script_path, MODEL_PATH, task_name, output_dir, MODEL_NAME]

|

| 158 |

-

|

| 159 |

-

process = subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.PIPE, text=True) # noqa: S603

|

| 160 |

-

|

| 161 |

-

try:

|

| 162 |

-

stdout, stderr = process.communicate(timeout=TASK_TIMEOUT)

|

| 163 |

-

duration = time.time() - start_time

|

| 164 |

-

|

| 165 |

-

if process.returncode == 0:

|

| 166 |

-

# Check for result file

|

| 167 |

-

for line in stdout.split("\n"):

|

| 168 |

-

if line.startswith("RESULT_FILE:"):

|

| 169 |

-

result_file = line.split(":", 1)[1]

|

| 170 |

-

try:

|

| 171 |

-

with Path(result_file).open() as f:

|

| 172 |

-

json.load(f)

|

| 173 |

-

Path(result_file).unlink() # Clean up temp file

|

| 174 |

-

logger.info(f"✓ Completed {task_name} in {duration:.2f}s")

|

| 175 |

-

return True, task_name, duration

|

| 176 |

-

except (json.JSONDecodeError, OSError):

|

| 177 |

-

logger.exception("Failed to read result file")

|

| 178 |

-

|

| 179 |

-

logger.info(f"✓ Completed {task_name} in {duration:.2f}s")

|

| 180 |

-

return True, task_name, duration

|

| 181 |

-

if process.returncode == SIGKILL_RETURN_CODE:

|

| 182 |

-

logger.error(f"✗ Task {task_name} killed (OOM) - return code {process.returncode}")

|

| 183 |

-

else:

|

| 184 |

-

logger.error(f"✗ Task {task_name} failed with return code {process.returncode}")

|

| 185 |

-

if stderr:

|

| 186 |

-

logger.error(f"Error output: {stderr}")

|

| 187 |

-

return False, task_name, duration

|

| 188 |

-

|

| 189 |

-

except subprocess.TimeoutExpired:

|

| 190 |

-

logger.warning(f"⏱ Task {task_name} timed out after {TASK_TIMEOUT}s")

|

| 191 |

-

process.kill()

|

| 192 |

-

process.wait()

|

| 193 |

-

return False, task_name, TASK_TIMEOUT

|

| 194 |

-

|

| 195 |

-

except Exception:

|

| 196 |

-

logger.exception(f"✗ Failed to run task {task_name}")

|

| 197 |

-

return False, task_name, 0.0

|

| 198 |

-

|

| 199 |

-

finally:

|

| 200 |

-

# Clean up script file

|

| 201 |

-

with contextlib.suppress(Exception):

|

| 202 |

-

Path(script_path).unlink()

|

| 203 |

-

|

| 204 |

-

|

| 205 |

-

def collect_results(output_path: Path) -> dict:

|

| 206 |

-

"""Collect all results from completed tasks."""

|

| 207 |

-

results_dir = output_path / MODEL_NAME / "distilled"

|

| 208 |

-

if not results_dir.exists():

|

| 209 |

-

return {}

|

| 210 |

-

|

| 211 |

-

task_results = {}

|

| 212 |

-

for result_file in results_dir.glob("*.json"):

|

| 213 |

-

if result_file.name == "model_meta.json":

|

| 214 |

-

continue

|

| 215 |

-

|

| 216 |

-

try:

|

| 217 |

-

with result_file.open() as f:

|

| 218 |

-

data = json.load(f)

|

| 219 |

-

task_name = result_file.stem

|

| 220 |

-

task_results[task_name] = data

|

| 221 |

-

except (json.JSONDecodeError, OSError) as e:

|

| 222 |

-

logger.warning(f"Could not load {result_file}: {e}")

|

| 223 |

-

|

| 224 |

-

return task_results

|

| 225 |

-

|

| 226 |

-

|

| 227 |

-

def save_summary(output_path: Path, results: dict, stats: dict) -> None:

|

| 228 |

-

"""Save evaluation summary."""

|

| 229 |

-

summary = {

|

| 230 |

-

"model_name": MODEL_NAME,

|

| 231 |

-

"timestamp": time.time(),

|

| 232 |

-

"task_timeout": TASK_TIMEOUT,

|

| 233 |

-

"stats": stats,

|

| 234 |

-

"task_results": results,

|

| 235 |

-

}

|

| 236 |

-

|

| 237 |

-

summary_file = output_path / "mteb_summary.json"

|

| 238 |

-

with summary_file.open("w") as f:

|

| 239 |

-

json.dump(summary, f, indent=2, default=str)

|

| 240 |

-

|

| 241 |

-

logger.info(f"Summary saved to {summary_file}")

|

| 242 |

-

|

| 243 |

-

|

| 244 |

-

def main() -> None:

|

| 245 |

-

"""Main evaluation function."""

|

| 246 |

-

logger.info(f"Starting MTEB evaluation for {MODEL_NAME}")

|

| 247 |

-

logger.info(f"Task timeout: {TASK_TIMEOUT}s (no retries)")

|

| 248 |

-

logger.info("Memory isolation: Each task runs in separate subprocess")

|

| 249 |

-

|

| 250 |

-

# Log system info

|

| 251 |

-

memory_info = psutil.virtual_memory()

|

| 252 |

-

logger.info(f"System memory: {memory_info.total / (1024**3):.1f} GB total")

|

| 253 |

-

|

| 254 |

-

output_path = Path(OUTPUT_DIR)

|

| 255 |

-

output_path.mkdir(parents=True, exist_ok=True)

|

| 256 |

-

|

| 257 |

-

# Get tasks

|

| 258 |

-

all_tasks = get_available_tasks()

|

| 259 |

-

if not all_tasks:

|

| 260 |

-

logger.error("No tasks found!")

|

| 261 |

-

return

|

| 262 |

-

|

| 263 |

-

logger.info(f"Found {len(all_tasks)} tasks")

|

| 264 |

-

|

| 265 |

-

# Check existing results

|

| 266 |

-

remaining_tasks = check_existing_results(output_path, all_tasks)

|

| 267 |

-

logger.info(f"Will evaluate {len(remaining_tasks)} remaining tasks")

|

| 268 |

-

|

| 269 |

-

if not remaining_tasks:

|

| 270 |

-

logger.info("All tasks already completed!")

|

| 271 |

-

return

|

| 272 |

-

|

| 273 |

-

# Process tasks sequentially (no retries)

|

| 274 |

-

start_time = time.time()

|

| 275 |

-

successful_tasks = []

|

| 276 |

-

failed_tasks = []

|

| 277 |

-

timed_out_tasks = []

|

| 278 |

-

|

| 279 |

-

for i, task_name in enumerate(remaining_tasks):

|

| 280 |

-

logger.info(f"[{i + 1}/{len(remaining_tasks)}] Processing: {task_name}")

|

| 281 |

-

|

| 282 |

-

# Run task once (no retries)

|

| 283 |

-

success, name, duration = run_task_subprocess(task_name, str(output_path))

|

| 284 |

-

|

| 285 |

-

if success:

|

| 286 |

-

successful_tasks.append((name, duration))

|

| 287 |

-

elif duration == TASK_TIMEOUT:

|

| 288 |

-

timed_out_tasks.append(name)

|

| 289 |

-

else:

|

| 290 |

-

failed_tasks.append(name)

|

| 291 |

-

# Check if it was OOM killed (this is logged in run_task_subprocess)

|

| 292 |

-

|

| 293 |

-

# Progress update

|

| 294 |

-

progress = ((i + 1) / len(remaining_tasks)) * 100

|

| 295 |

-

logger.info(f"Progress: {i + 1}/{len(remaining_tasks)} ({progress:.1f}%)")

|

| 296 |

-

|

| 297 |

-

# Brief pause between tasks

|

| 298 |

-

time.sleep(1)

|

| 299 |

-

|

| 300 |

-

total_time = time.time() - start_time

|

| 301 |

-

|

| 302 |

-

# Log final summary

|

| 303 |

-

logger.info("=" * 80)

|

| 304 |

-

logger.info("EVALUATION SUMMARY")

|

| 305 |

-

logger.info("=" * 80)

|

| 306 |

-

logger.info(f"Total tasks: {len(remaining_tasks)}")

|

| 307 |

-

logger.info(f"Successful: {len(successful_tasks)}")

|

| 308 |

-

logger.info(f"Failed: {len(failed_tasks)}")

|

| 309 |

-

logger.info(f"Timed out: {len(timed_out_tasks)}")

|

| 310 |

-

logger.info(f"Total time: {total_time:.2f}s")

|

| 311 |

-

|

| 312 |

-

if successful_tasks:

|

| 313 |

-

avg_time = sum(duration for _, duration in successful_tasks) / len(successful_tasks)

|

| 314 |

-

logger.info(f"Average successful task time: {avg_time:.2f}s")

|

| 315 |

-

|

| 316 |

-

if failed_tasks:

|

| 317 |

-

logger.warning(f"Failed tasks: {failed_tasks}")

|

| 318 |

-

|

| 319 |

-

if timed_out_tasks:

|

| 320 |

-

logger.warning(f"Timed out tasks: {timed_out_tasks}")

|

| 321 |

-

|

| 322 |

-

logger.info("=" * 80)

|

| 323 |

-

|

| 324 |

-

# Collect and save results

|

| 325 |

-

all_results = collect_results(output_path)

|

| 326 |

-

stats = {

|

| 327 |

-

"total_tasks": len(remaining_tasks),

|

| 328 |

-

"successful": len(successful_tasks),

|

| 329 |

-

"failed": len(failed_tasks),

|

| 330 |

-

"timed_out": len(timed_out_tasks),

|

| 331 |

-

"total_time": total_time,

|

| 332 |

-

"avg_time": avg_time if successful_tasks else 0,

|

| 333 |

-

"successful_task_details": successful_tasks,

|

| 334 |

-

"failed_tasks": failed_tasks,

|

| 335 |

-

"timed_out_tasks": timed_out_tasks,

|

| 336 |

-

}

|

| 337 |

-

|

| 338 |

-

save_summary(output_path, all_results, stats)

|

| 339 |

-

logger.info("Evaluation completed!")

|

| 340 |

-

|

| 341 |

-

|

| 342 |

-

if __name__ == "__main__":

|

| 343 |

-

main()

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

REPORT.md

ADDED

|

@@ -0,0 +1,299 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

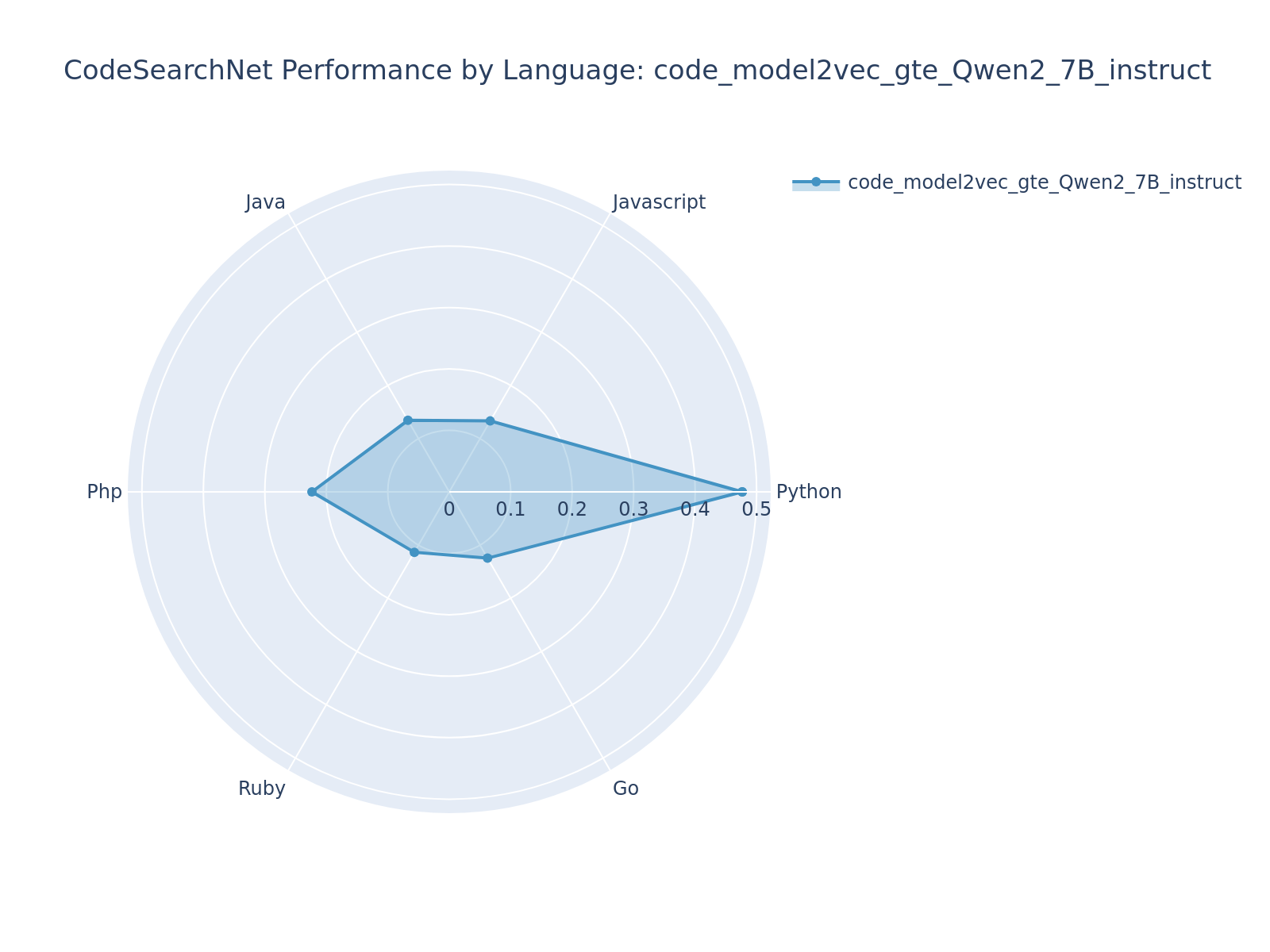

| 1 |

+

# Code-Specialized Model2Vec Distillation Analysis

|

| 2 |

+

|

| 3 |

+

## 🎯 Executive Summary

|

| 4 |

+

|

| 5 |

+

This report presents a comprehensive analysis of Model2Vec distillation experiments using different teacher models for code-specialized embedding generation.

|

| 6 |

+

|

| 7 |

+

### Evaluated Models Overview

|

| 8 |

+

|

| 9 |

+

**Simplified Distillation Models:** 13

|

| 10 |

+

**Peer Comparison Models:** 19

|

| 11 |

+

**Total Models Analyzed:** 32

|

| 12 |

+

|

| 13 |

+

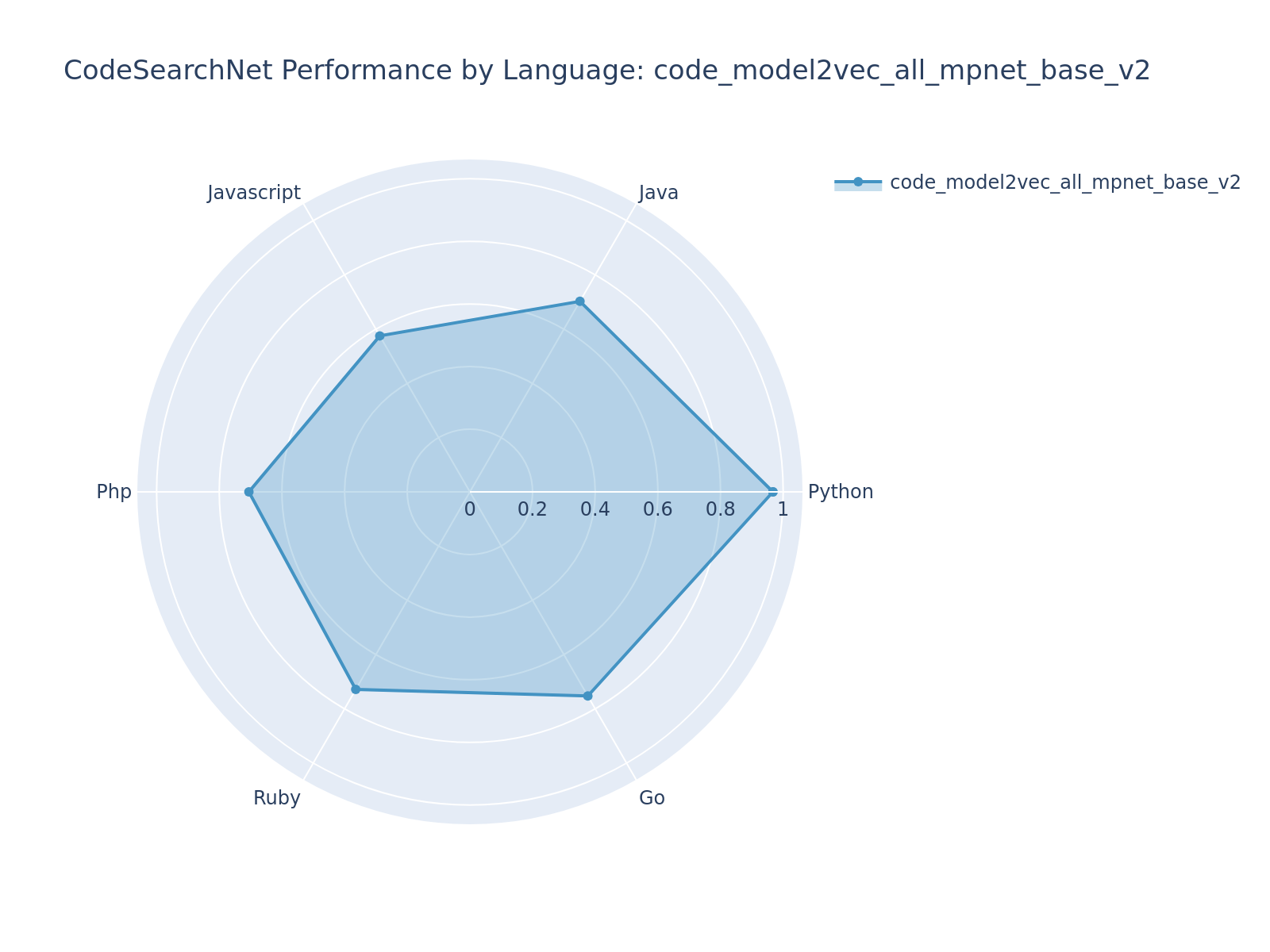

### Best Performing Simplified Model: code_model2vec_all_mpnet_base_v2

|

| 14 |

+

|

| 15 |

+

**Overall CodeSearchNet Performance:**

|

| 16 |

+

- **NDCG@10**: 0.7387

|

| 17 |

+

- **Mean Reciprocal Rank (MRR)**: 0.7010

|

| 18 |

+

- **Recall@5**: 0.8017

|

| 19 |

+

- **Mean Rank**: 6.4

|

| 20 |

+

|

| 21 |

+

## 📊 Comprehensive Model Comparison

|

| 22 |

+

|

| 23 |

+

### All Simplified Distillation Models Performance

|

| 24 |

+

|

| 25 |

+

| Model | Teacher | NDCG@10 | MRR | Recall@5 | Status |

|

| 26 |

+

|-------|---------|---------|-----|----------|--------|

|

| 27 |

+

| code_model2vec_all_mpnet_base_v2 | [sentence-transformers/all-mpnet-base-v2](https://huggingface.co/sentence-transformers/all-mpnet-base-v2) | 0.7387 | 0.7010 | 0.8017 | 🥇 Best |

|

| 28 |

+

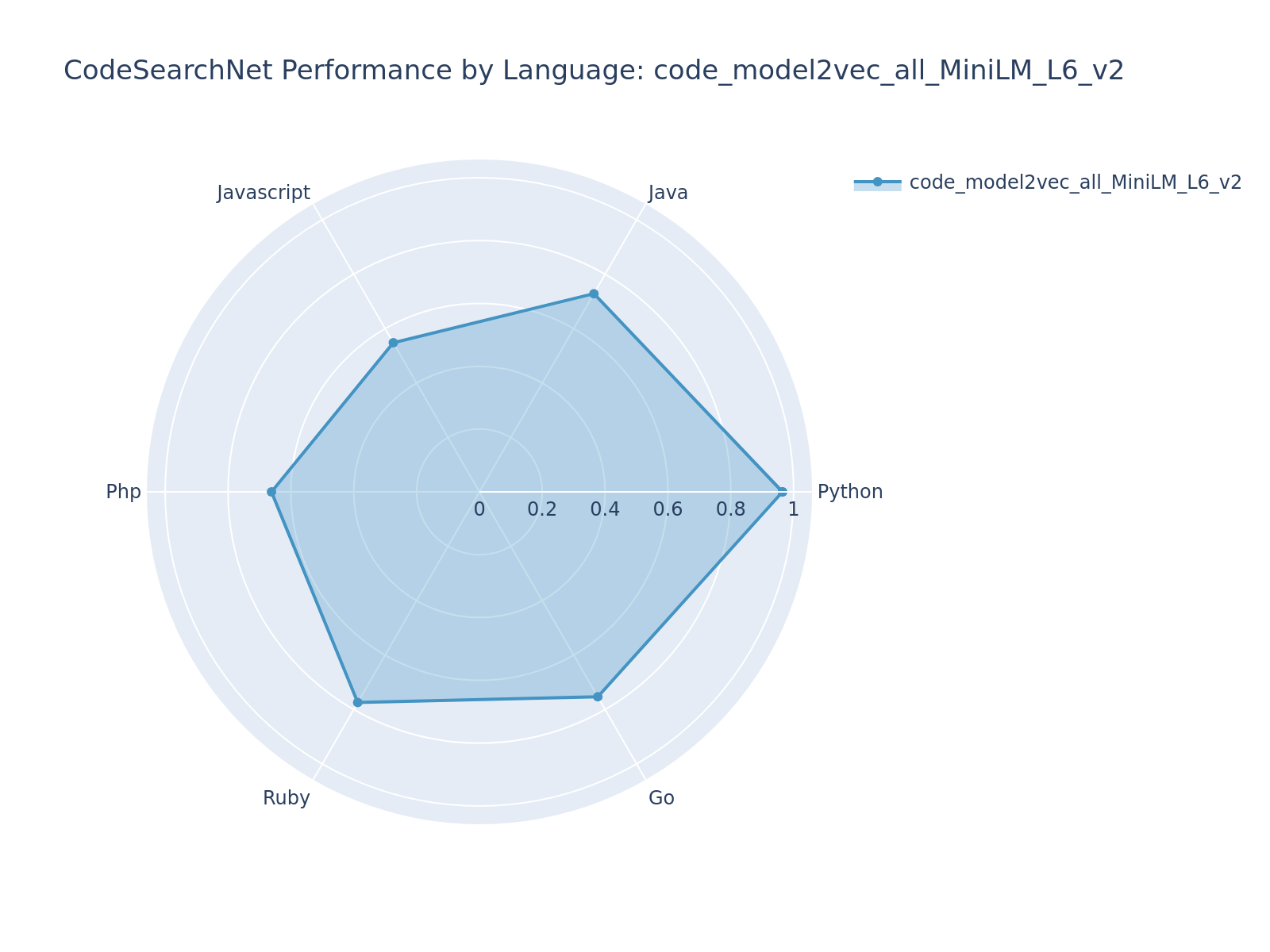

| code_model2vec_all_MiniLM_L6_v2 | [sentence-transformers/all-MiniLM-L6-v2](https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2) | 0.7385 | 0.7049 | 0.7910 | 🥈 2nd |

|

| 29 |

+

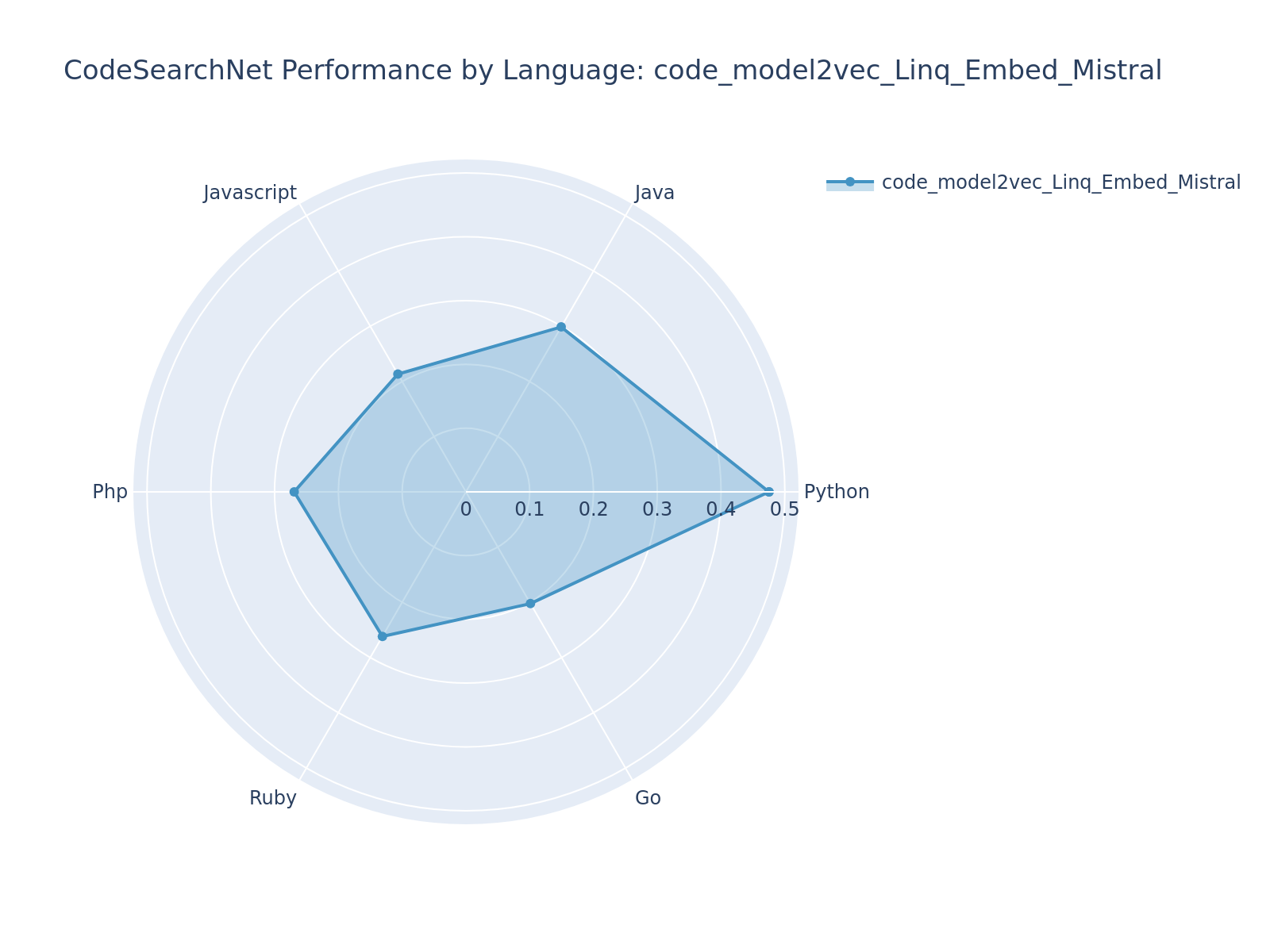

| code_model2vec_jina_embeddings_v2_base_code | [jina-embeddings-v2-base-code](https://huggingface.co/jina-embeddings-v2-base-code) | 0.7381 | 0.6996 | 0.8130 | 🥉 3rd |

|

| 30 |

+

| code_model2vec_paraphrase_MiniLM_L6_v2 | [sentence-transformers/paraphrase-MiniLM-L6-v2](https://huggingface.co/sentence-transformers/paraphrase-MiniLM-L6-v2) | 0.7013 | 0.6638 | 0.7665 | #4 |

|

| 31 |

+

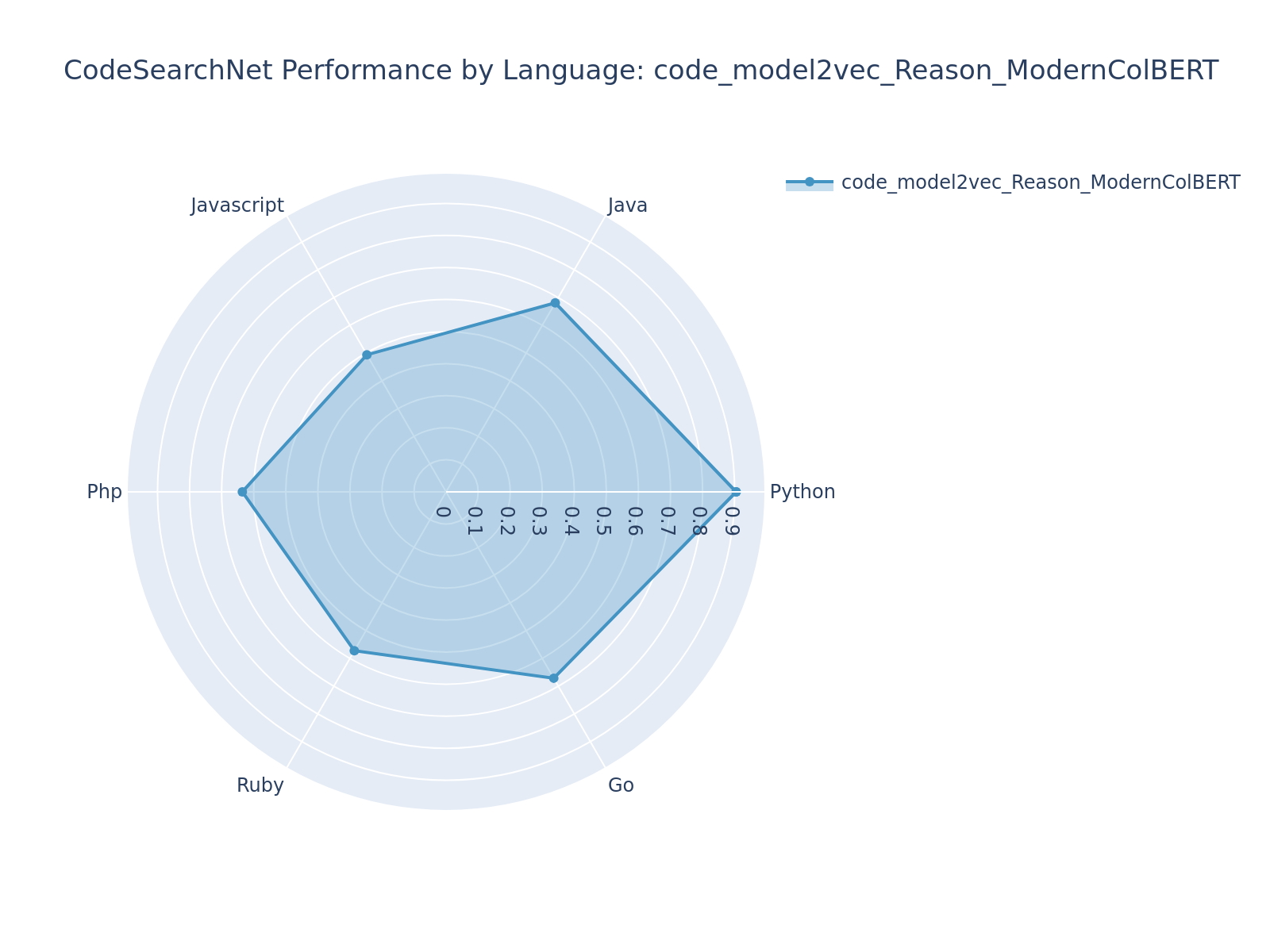

| code_model2vec_Reason_ModernColBERT | [lightonai/Reason-ModernColBERT](https://huggingface.co/lightonai/Reason-ModernColBERT) | 0.6598 | 0.6228 | 0.7260 | #5 |

|

| 32 |

+

| code_model2vec_bge_m3 | [BAAI/bge-m3](https://huggingface.co/BAAI/bge-m3) | 0.4863 | 0.4439 | 0.5514 | #6 |

|

| 33 |

+

| code_model2vec_jina_embeddings_v3 | [jinaai/jina-embeddings-v3](https://huggingface.co/jinaai/jina-embeddings-v3) | 0.4755 | 0.4416 | 0.5456 | #7 |

|

| 34 |

+

| code_model2vec_nomic_embed_text_v2_moe | [nomic-ai/nomic-embed-text-v2-moe](https://huggingface.co/nomic-ai/nomic-embed-text-v2-moe) | 0.4532 | 0.4275 | 0.5094 | #8 |

|

| 35 |

+

| code_model2vec_gte_Qwen2_1.5B_instruct | [Alibaba-NLP/gte-Qwen2-1.5B-instruct](https://huggingface.co/Alibaba-NLP/gte-Qwen2-1.5B-instruct) | 0.4238 | 0.3879 | 0.4719 | #9 |

|

| 36 |

+

| code_model2vec_Qodo_Embed_1_1.5B | [Qodo/Qodo-Embed-1-1.5B](https://huggingface.co/Qodo/Qodo-Embed-1-1.5B) | 0.4101 | 0.3810 | 0.4532 | #10 |

|

| 37 |

+

| code_model2vec_graphcodebert_base | [microsoft/codebert-base](https://huggingface.co/microsoft/codebert-base) | 0.3420 | 0.3140 | 0.3704 | #11 |

|

| 38 |

+

| code_model2vec_Linq_Embed_Mistral | [Linq-AI-Research/Linq-Embed-Mistral](https://huggingface.co/Linq-AI-Research/Linq-Embed-Mistral) | 0.2868 | 0.2581 | 0.3412 | #12 |

|

| 39 |

+

| code_model2vec_codebert_base | [microsoft/codebert-base](https://huggingface.co/microsoft/codebert-base) | 0.2779 | 0.2534 | 0.3136 | #13 |

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

### 📊 Model Specifications Analysis

|

| 43 |

+

|

| 44 |

+

Our distilled models exhibit consistent architectural characteristics across different teacher models:

|

| 45 |

+

|

| 46 |

+

| Model | Vocabulary Size | Parameters | Embedding Dim | Disk Size |

|

| 47 |

+

|-------|----------------|------------|---------------|-----------|

|

| 48 |

+

| all_mpnet_base_v2 | 29,528 | 7.6M | 256 | 14.4MB |

|

| 49 |

+

| all_MiniLM_L6_v2 | 29,525 | 7.6M | 256 | 14.4MB |

|

| 50 |

+

| jina_embeddings_v2_base_code | 61,053 | 15.6M | 256 | 29.8MB |

|

| 51 |

+

| paraphrase_MiniLM_L6_v2 | 29,525 | 7.6M | 256 | 14.4MB |

|

| 52 |

+

| Reason_ModernColBERT | 50,254 | 12.9M | 256 | 24.5MB |

|

| 53 |

+

| bge_m3 | 249,999 | 64.0M | 256 | 122.1MB |

|

| 54 |

+

| jina_embeddings_v3 | 249,999 | 64.0M | 256 | 122.1MB |

|

| 55 |

+

| nomic_embed_text_v2_moe | 249,999 | 64.0M | 256 | 122.1MB |

|

| 56 |

+

| gte_Qwen2_1.5B_instruct | 151,644 | 38.8M | 256 | 74.0MB |

|

| 57 |

+

| Qodo_Embed_1_1.5B | 151,644 | 38.8M | 256 | 74.0MB |

|

| 58 |

+

| graphcodebert_base | 50,262 | 12.9M | 256 | 24.5MB |

|

| 59 |

+

| Linq_Embed_Mistral | 31,999 | 8.2M | 256 | 15.6MB |

|

| 60 |

+

| codebert_base | 50,262 | 12.9M | 256 | 24.5MB |

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

*Comprehensive analysis of our distilled models showing vocabulary size, parameter count, embedding dimensions, and storage requirements.*

|

| 66 |

+

|

| 67 |

+

#### Key Insights from Model Specifications:

|

| 68 |

+

|

| 69 |

+

|

| 70 |

+

- **Vocabulary Consistency**: All models use vocabulary sizes ranging from 29,525 to 249,999 tokens (avg: 106,592)

|

| 71 |

+

- **Parameter Efficiency**: Models range from 7.6M to 64.0M parameters (avg: 27.3M)

|

| 72 |

+

- **Storage Efficiency**: Disk usage ranges from 14.4MB to 122.1MB (avg: 52.0MB)

|

| 73 |

+

- **Embedding Dimensions**: Consistent 256 dimensions across all models (optimized for efficiency)

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

### Key Findings

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

- **Best Teacher Model**: code_model2vec_all_mpnet_base_v2 (NDCG@10: 0.7387)

|

| 80 |

+

- **Least Effective Teacher**: code_model2vec_codebert_base (NDCG@10: 0.2779)

|

| 81 |

+

- **Performance Range**: 62.4% difference between best and worst

|

| 82 |

+

- **Average Performance**: 0.5178 NDCG@10

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

## 🎯 Language Performance Radar Charts

|

| 86 |

+

|

| 87 |

+

### Best Model vs Peer Models Comparison

|

| 88 |

+

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

*Comparative view showing how the best simplified distillation model performs against top peer models across programming languages.*

|

| 92 |

+

|

| 93 |

+

### Individual Model Performance by Language

|

| 94 |

+

|

| 95 |

+

#### code_model2vec_all_mpnet_base_v2 (Teacher: [sentence-transformers/all-mpnet-base-v2](https://huggingface.co/sentence-transformers/all-mpnet-base-v2)) - NDCG@10: 0.7387

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

#### code_model2vec_all_MiniLM_L6_v2 (Teacher: [sentence-transformers/all-MiniLM-L6-v2](https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2)) - NDCG@10: 0.7385

|

| 100 |

+

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

#### code_model2vec_jina_embeddings_v2_base_code (Teacher: [jina-embeddings-v2-base-code](https://huggingface.co/jina-embeddings-v2-base-code)) - NDCG@10: 0.7381

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

|

| 107 |

+

#### code_model2vec_paraphrase_MiniLM_L6_v2 (Teacher: [sentence-transformers/paraphrase-MiniLM-L6-v2](https://huggingface.co/sentence-transformers/paraphrase-MiniLM-L6-v2)) - NDCG@10: 0.7013

|

| 108 |

+

|

| 109 |

+