InstanceGen: Image Generation with Instance-level Instructions

Abstract

The proposed technique combines image-based structural guidance with LLM-based instructions to align generated images with complex, detailed text prompts.

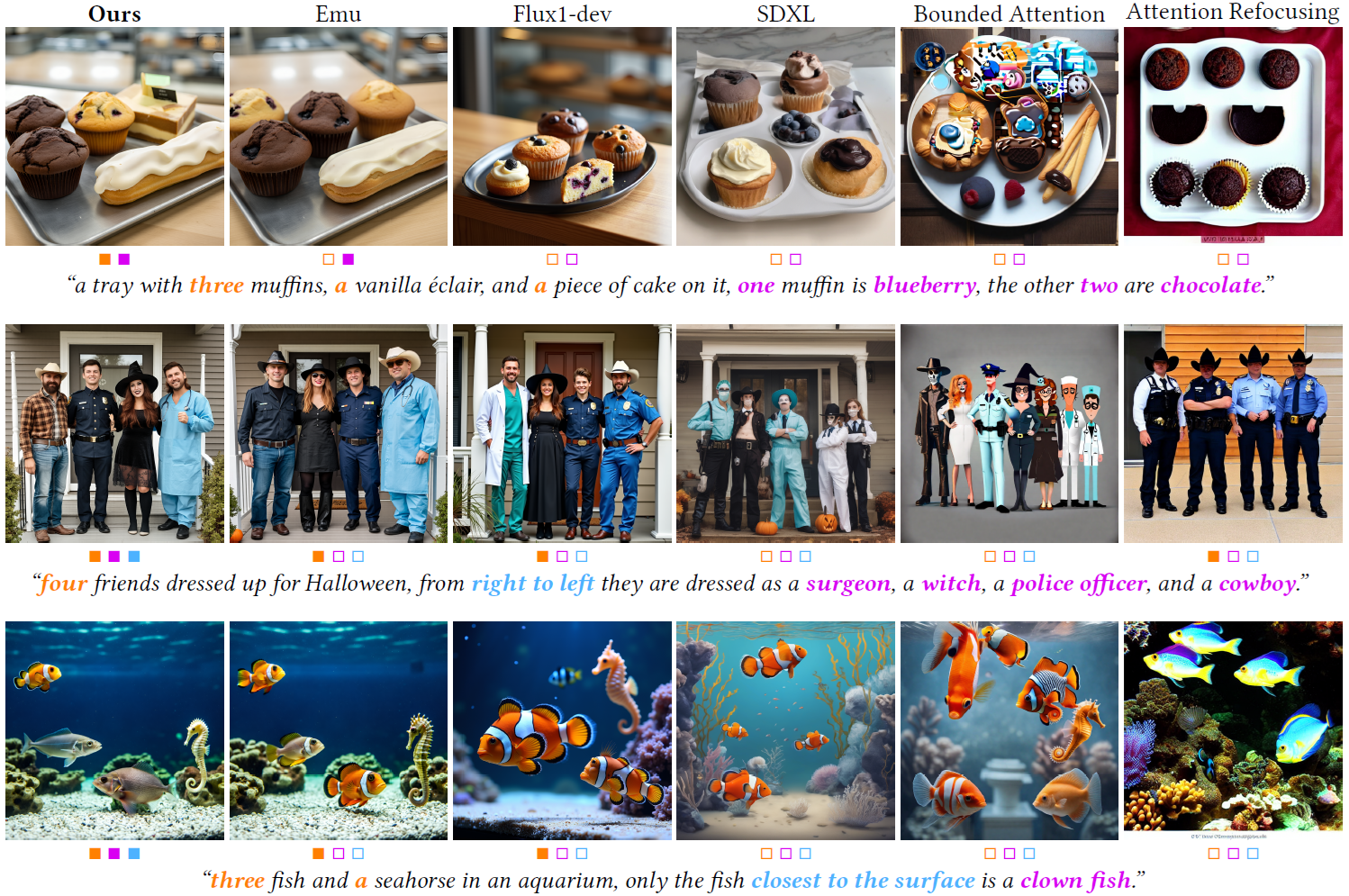

Despite rapid advancements in the capabilities of generative models, pretrained text-to-image models still struggle in capturing the semantics conveyed by complex prompts that compound multiple objects and instance-level attributes. Consequently, we are witnessing growing interests in integrating additional structural constraints, typically in the form of coarse bounding boxes, to better guide the generation process in such challenging cases. In this work, we take the idea of structural guidance a step further by making the observation that contemporary image generation models can directly provide a plausible fine-grained structural initialization. We propose a technique that couples this image-based structural guidance with LLM-based instance-level instructions, yielding output images that adhere to all parts of the text prompt, including object counts, instance-level attributes, and spatial relations between instances.

Community

We introduce InstanceGen, an inference time technique which improves diffusion model's ability to generate images for complex prompts involving multiple objects, instance level attributes and spatial relationships.

Abstract:

Despite rapid advancements in the capabilities of generative models, pretrained text-to-image models still struggle in capturing the semantics conveyed by complex prompts that compound multiple objects and instance-level attributes. Consequently, we are witnessing growing interests in integrating additional structural constraints, typically in the form of coarse bounding boxes, to better guide the generation process in such challenging cases. In this work, we take the idea of structural guidance a step further by making the observation that contemporary image generation models can directly provide a plausible fine-grained structural initialization. We propose a technique that couples this image-based structural guidance with LLM-based instance-level instructions, yielding output images that adhere to all parts of the text prompt, including object counts, instance-level attributes, and spatial relations between instances. Additionally, we contribute CompoundPrompts, a benchmark composed of complex prompts with three difficulty levels in which object instances are progressively compounded with attribute descriptions and spatial relations. Extensive experiments demonstrate that our method significantly surpasses the performance of prior models, particularly over complex multi-object and multi-attribute use cases.

This is an automated message from the Librarian Bot. I found the following papers similar to this paper.

The following papers were recommended by the Semantic Scholar API

- POEM: Precise Object-level Editing via MLLM control (2025)

- LayerCraft: Enhancing Text-to-Image Generation with CoT Reasoning and Layered Object Integration (2025)

- HCMA: Hierarchical Cross-model Alignment for Grounded Text-to-Image Generation (2025)

- Efficient Multi-Instance Generation with Janus-Pro-Dirven Prompt Parsing (2025)

- Progressive Prompt Detailing for Improved Alignment in Text-to-Image Generative Models (2025)

- Lay-Your-Scene: Natural Scene Layout Generation with Diffusion Transformers (2025)

- Improving Editability in Image Generation with Layer-wise Memory (2025)

Please give a thumbs up to this comment if you found it helpful!

If you want recommendations for any Paper on Hugging Face checkout this Space

You can directly ask Librarian Bot for paper recommendations by tagging it in a comment:

@librarian-bot

recommend

Models citing this paper 0

No model linking this paper

Datasets citing this paper 0

No dataset linking this paper

Spaces citing this paper 0

No Space linking this paper