---

license: apache-2.0

tags:

- Flex

base_model:

- ostris/Flex.1-alpha

---

# Flex.1-alpha 512 Redux Adapter

## Overview

This is a [Redux](https://huggingface.co/black-forest-labs/FLUX.1-Redux-dev) adapter trained from scratch on [Flex.1-alpha](https://huggingface.co/ostris/Flex.1-alpha), however, it will also work with [FLUX.1-dev](https://huggingface.co/black-forest-labs/FLUX.1-dev). The main advantages of this adapter over the original are:

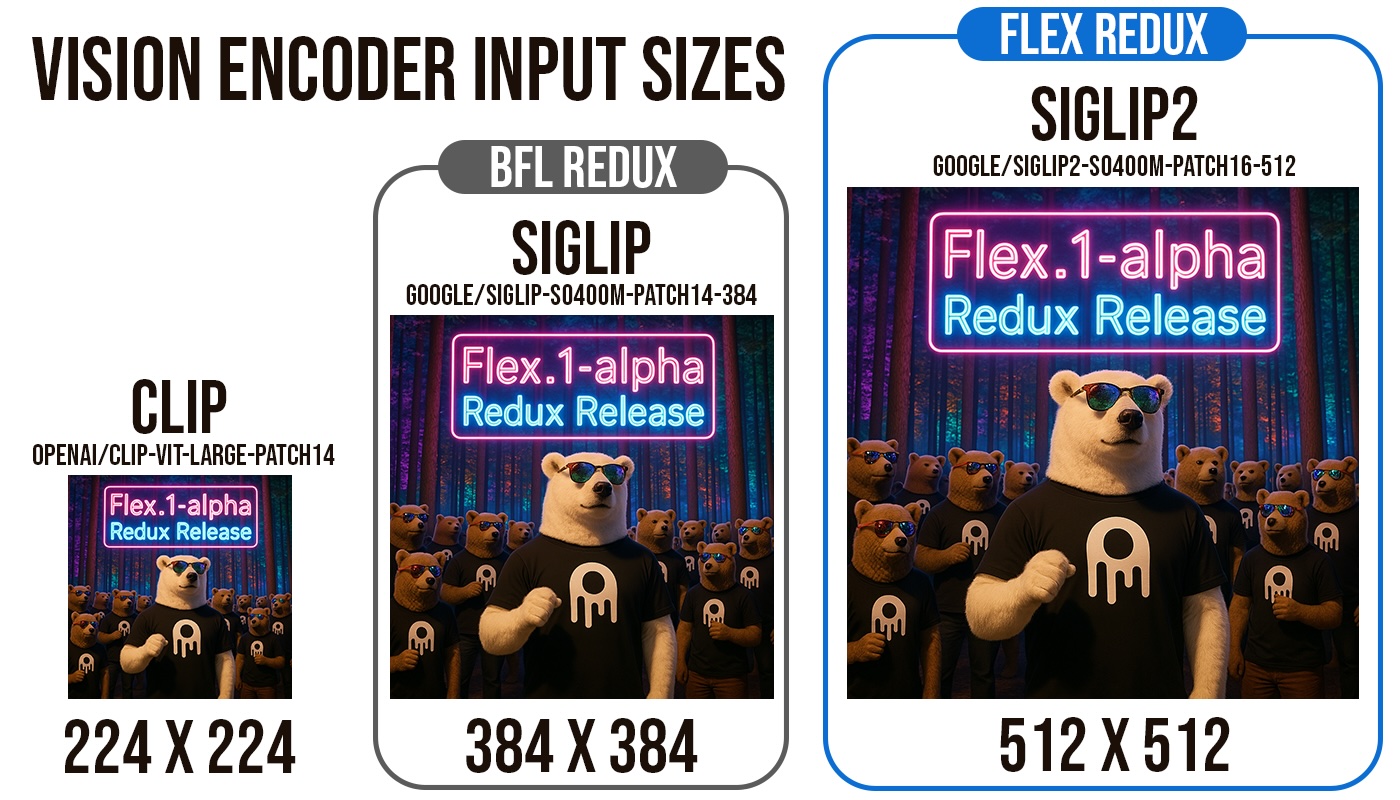

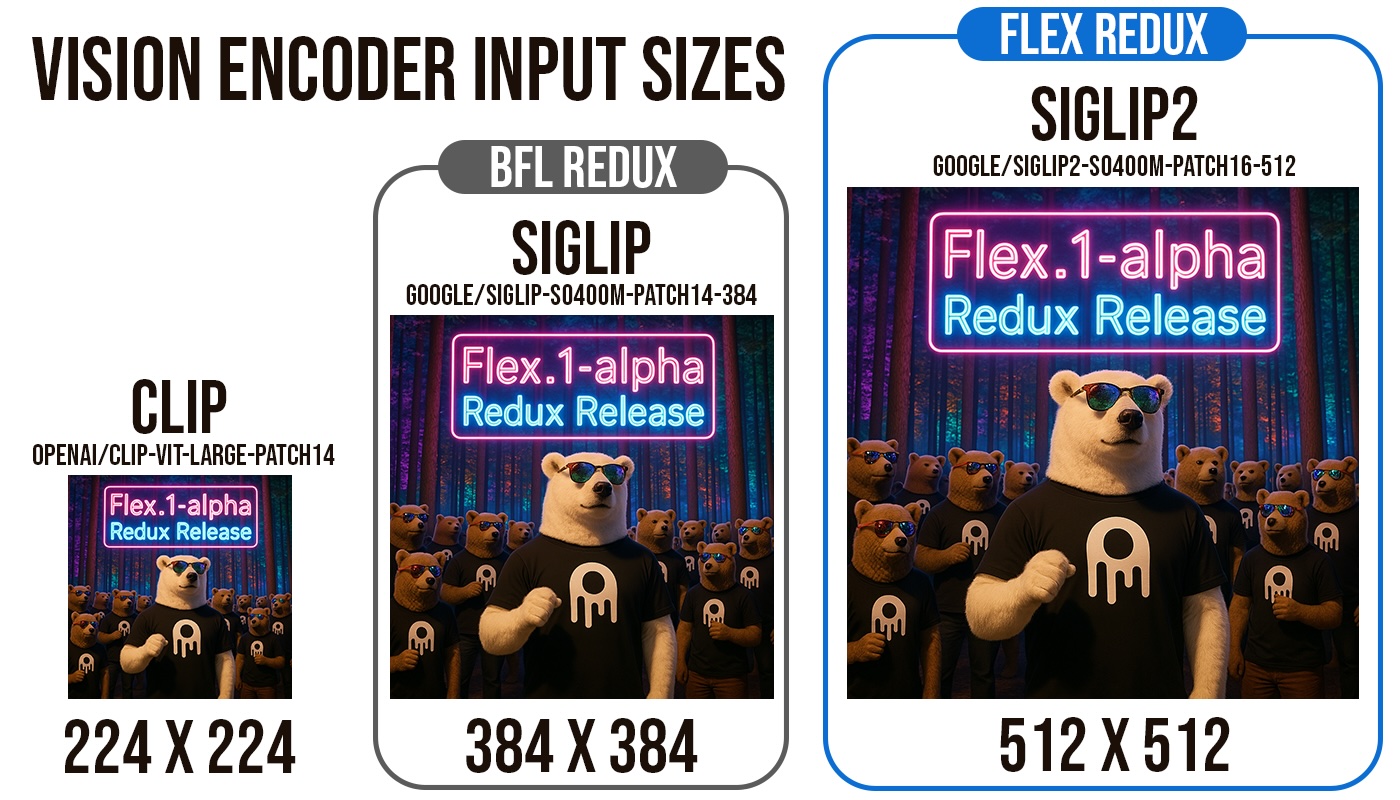

- **Better Vision Encoder**: The original Redux by BFL uses [siglip-so400m-patch14-384](https://huggingface.co/google/siglip-so400m-patch14-384). This adapter uses [siglip2-so400m-patch16-512](https://huggingface.co/google/siglip2-so400m-patch16-512), which is newer and has an increased resolution of 512x512. This allows for better image quality and more detail in the generated images.

- **Apache 2.0 License**: Since this vision encoder was trained from scratch on Flex.1-alpha, it can be licensed under the Apache 2.0 license. This means that you can use it for commercial purposes without any restrictions.

## The Vision Encoder

Flex Redux uses the SigLIP2 vision encoder at a resolution of 512x512, which lets it see finer details in the input images.
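
For a sense of scale, the sketch below counts the patch tokens each encoder produces from one square image, assuming the usual ViT layout of non-overlapping square patches. It only illustrates the encoder side; how the adapter projects or forwards these tokens to the diffusion model is not covered here.

```python
def patch_tokens(image_size: int, patch_size: int) -> int:
    """Number of non-overlapping square patches a ViT-style encoder sees."""
    per_side = image_size // patch_size  # leftover edge pixels, if any, are dropped
    return per_side * per_side


# Original Redux encoder: siglip-so400m-patch14-384
original = patch_tokens(384, 14)  # 27 x 27 grid
# This adapter's encoder: siglip2-so400m-patch16-512
flex = patch_tokens(512, 16)  # 32 x 32 grid

print(original, flex)  # 729 1024
```

So even with larger 16x16 patches, the 512x512 input yields roughly 40% more patch tokens per image than the 384x384 encoder.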

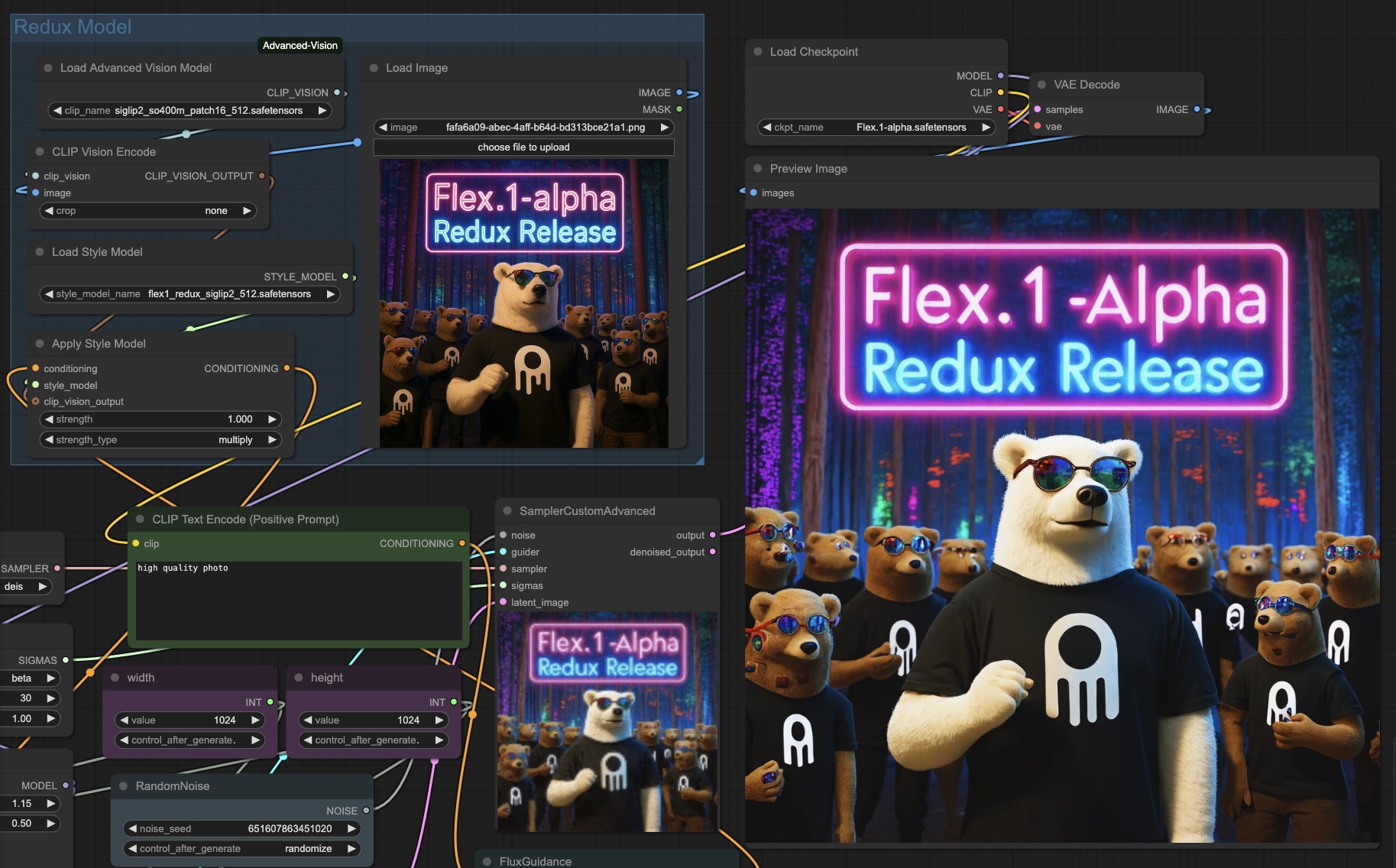

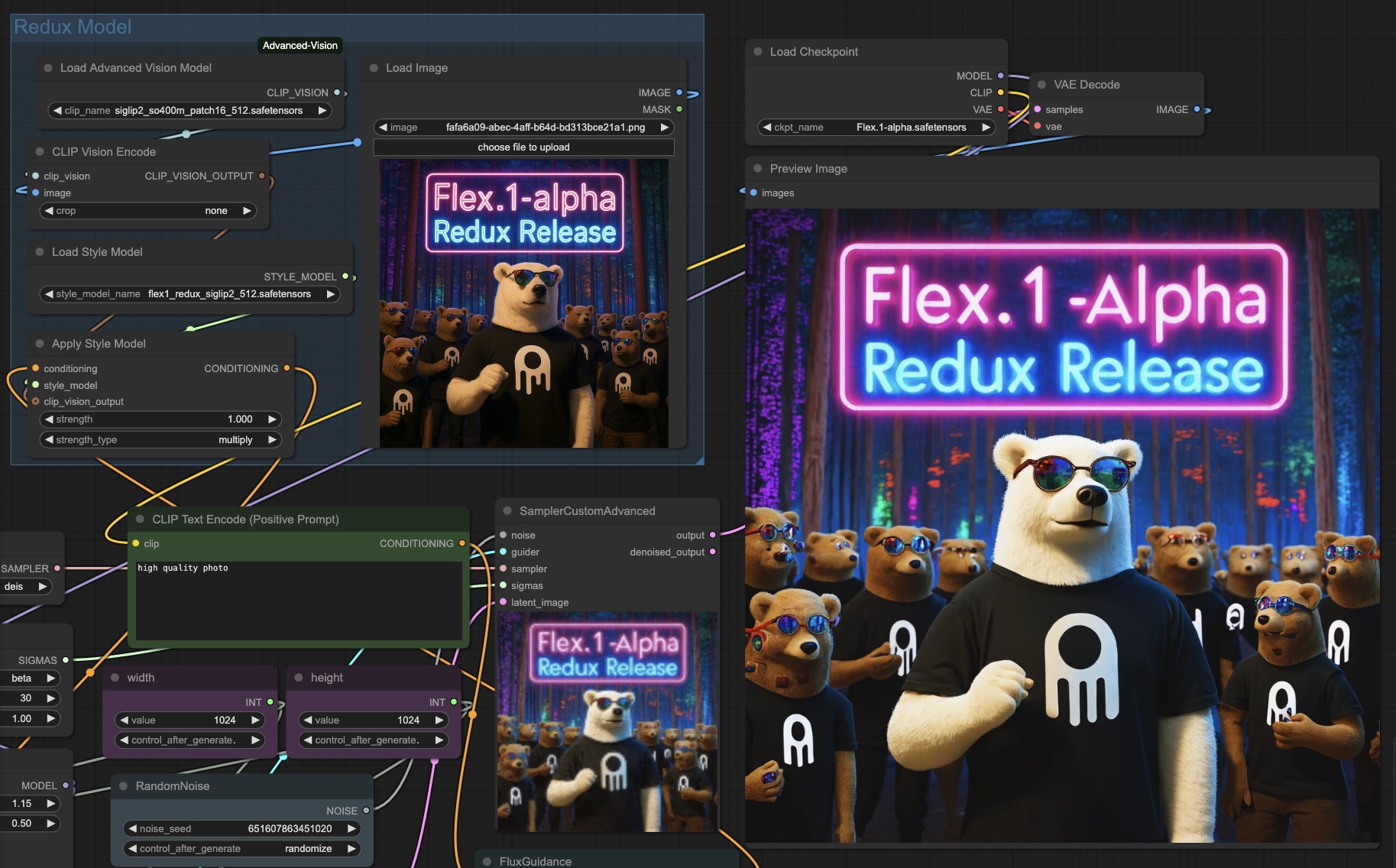

## ComfyUI

To use the adapter in ComfyUI:

- Install the [ComfyUI-Advanced-Vision](https://github.com/ostris/ComfyUI-Advanced-Vision) custom node.
- Download the [SigLIP2 vision encoder](https://huggingface.co/ostris/ComfyUI-Advanced-Vision/blob/main/clip_vision/siglip2_so400m_patch16_512.safetensors) and put it in `ComfyUI/models/clip_vision/`.
- If you don't already have [Flex.1-alpha](https://huggingface.co/ostris/Flex.1-alpha), download [Flex.1-alpha.safetensors](https://huggingface.co/ostris/Flex.1-alpha/blob/main/Flex.1-alpha.safetensors) and put it in `ComfyUI/models/checkpoints/`. You can also use [FLUX.1-dev](https://huggingface.co/black-forest-labs/FLUX.1-dev) if you prefer.
- Put `flex1_redux_siglip2_512.safetensors` from this repo in `ComfyUI/models/style_models/`.

This repo also includes a workflow, `flex-redux-workflow.json`, to get you started.
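
If the workflow fails to find a model, a quick check of the folder layout can help. The sketch below is a convenience script, not part of this repo: the `ComfyUI` root path is a placeholder, the `custom_nodes/ComfyUI-Advanced-Vision` folder name assumes the node was cloned under its default name, and if you use FLUX.1-dev instead of Flex.1-alpha you would swap or drop the checkpoint entry.

```python
from pathlib import Path

# Paths relative to the ComfyUI root, following the setup instructions above.
# Assumption: the custom node was cloned into custom_nodes/ under its
# default repository name.
REQUIRED = [
    "custom_nodes/ComfyUI-Advanced-Vision",
    "models/clip_vision/siglip2_so400m_patch16_512.safetensors",
    "models/checkpoints/Flex.1-alpha.safetensors",
    "models/style_models/flex1_redux_siglip2_512.safetensors",
]


def missing_files(comfy_root: str) -> list[str]:
    """Return every required path that does not exist under comfy_root."""
    root = Path(comfy_root)
    return [rel for rel in REQUIRED if not (root / rel).exists()]


if __name__ == "__main__":
    # Placeholder path: point this at your own ComfyUI install.
    for rel in missing_files("ComfyUI"):
        print("missing:", rel)
```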

## Fine tune it!

This adapter was trained entirely with [AI Toolkit](https://github.com/ostris/ai-toolkit/) on a single RTX 3090 over a few weeks, which means you can fine-tune it, or train your own, on consumer hardware. [Check out the example config](https://github.com/ostris/ai-toolkit/blob/main/config/examples/train_flex_redux.yaml) to get started.

## Support My Work

If you enjoy my work, or use it for commercial purposes, please consider sponsoring me so I can continue to maintain it. Every bit helps!

[Become a sponsor on GitHub](https://github.com/orgs/ostris) or [support me on Patreon](https://www.patreon.com/ostris).

Thank you to all my current supporters!

_Last updated: 2025-04-04_

### GitHub Sponsors

### Patreon Supporters

---
