Update README.md

README.md

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

---

|

| 2 |

-

base_model: Qwen/Qwen2.5-7B

|

| 3 |

language:

|

| 4 |

- en

|

| 5 |

pipeline_tag: text-generation

|

|

@@ -9,55 +9,13 @@ license_link: https://huggingface.co/Qwen/Qwen2.5-Math-7B/blob/main/LICENSE

|

|

| 9 |

---

|

| 10 |

|

| 11 |

|

| 12 |

-

# Qwen2.5-Math-7B

|

| 13 |

|

| 14 |

-

|

| 15 |

-

> <div align="center">

|

| 16 |

-

> <b>

|

| 17 |

-

> 🚨 Qwen2.5-Math mainly supports solving English and Chinese math problems through CoT and TIR. We do not recommend using this series of models for other tasks.

|

| 18 |

-

> </b>

|

| 19 |

-

> </div>

|

| 20 |

-

|

| 21 |

-

## Introduction

|

| 22 |

-

|

| 23 |

-

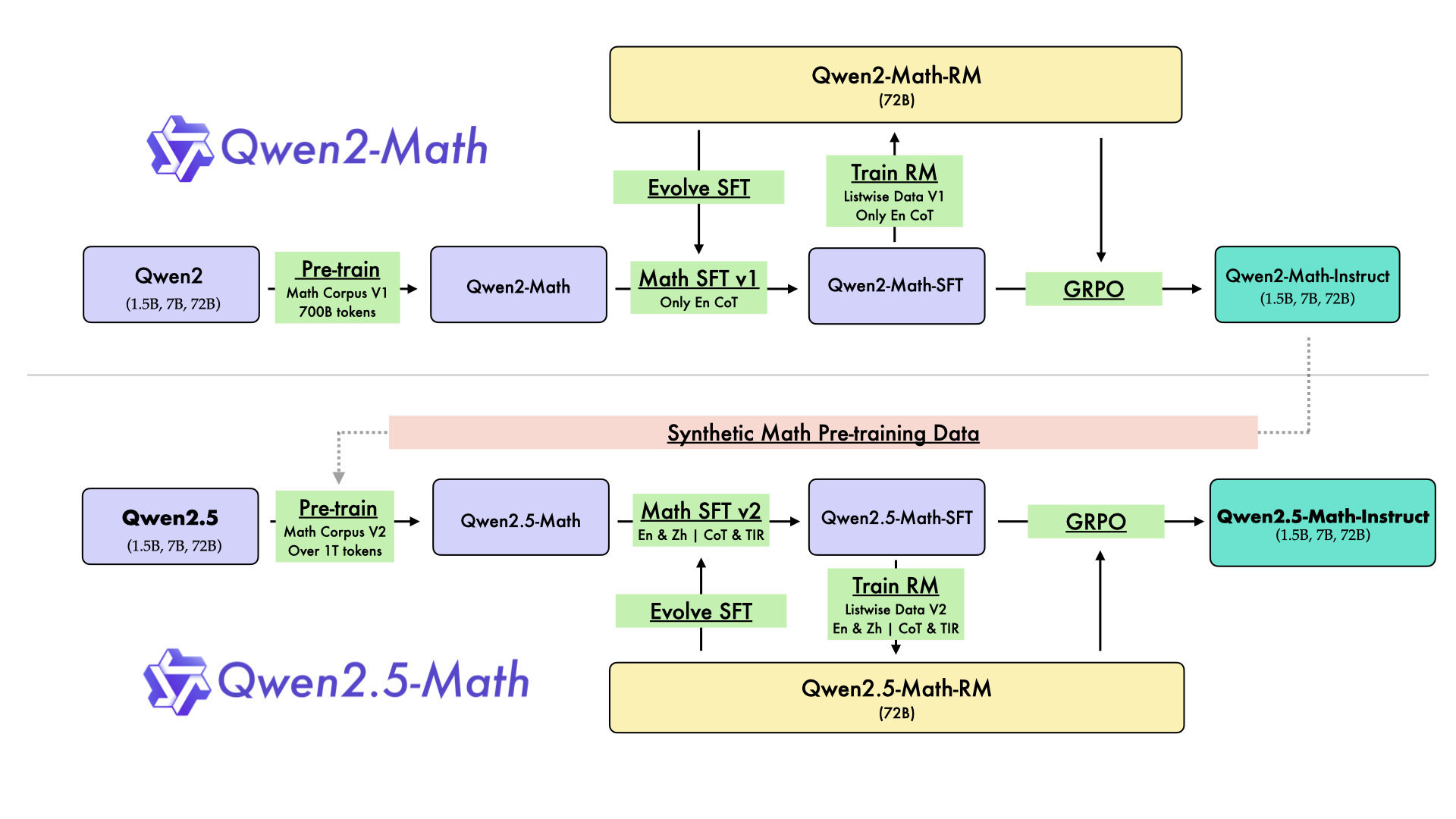

In August 2024, we released the first series of mathematical LLMs - [Qwen2-Math](https://qwenlm.github.io/blog/qwen2-math/) - of our Qwen family. A month later, we have upgraded it and open-sourced **Qwen2.5-Math** series, including base models **Qwen2.5-Math-1.5B/7B/72B**, instruction-tuned models **Qwen2.5-Math-1.5B/7B/72B-Instruct**, and mathematical reward model **Qwen2.5-Math-RM-72B**.

|

| 24 |

-

|

| 25 |

-

Unlike the Qwen2-Math series, which only supports using Chain-of-Thought (CoT) to solve English math problems, the Qwen2.5-Math series is expanded to support using both CoT and Tool-integrated Reasoning (TIR) to solve math problems in both Chinese and English. The Qwen2.5-Math series models have achieved significant performance improvements compared to the Qwen2-Math series models on the Chinese and English mathematics benchmarks with CoT.

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

While CoT plays a vital role in enhancing the reasoning capabilities of LLMs, it faces challenges in achieving computational accuracy and handling complex mathematical or algorithmic reasoning tasks, such as finding the roots of a quadratic equation or computing the eigenvalues of a matrix. TIR can further improve the model's proficiency in precise computation, symbolic manipulation, and algorithmic manipulation. Qwen2.5-Math-1.5B/7B/72B-Instruct achieve 79.7, 85.3, and 87.8 respectively on the MATH benchmark using TIR.

|

| 30 |

-

|

| 31 |

-

## Model Details

|

| 32 |

-

|

| 33 |

-

|

| 34 |

-

For more details, please refer to our [blog post](https://qwenlm.github.io/blog/qwen2.5-math/) and [GitHub repo](https://github.com/QwenLM/Qwen2.5-Math).

|

| 35 |

-

|

| 36 |

-

|

| 37 |

-

## Requirements

|

| 38 |

-

* `transformers>=4.37.0` for Qwen2.5-Math models. The latest version is recommended.

|

| 39 |

-

|

| 40 |

-

> [!Warning]

|

| 41 |

-

> <div align="center">

|

| 42 |

-

> <b>

|

| 43 |

-

> 🚨 This is a must because <code>transformers</code> integrated Qwen2 codes since <code>4.37.0</code>.

|

| 44 |

-

> </b>

|

| 45 |

-

> </div>

|

| 46 |

-

|

| 47 |

-

For requirements on GPU memory and the respective throughput, see similar results of Qwen2 [here](https://qwen.readthedocs.io/en/latest/benchmark/speed_benchmark.html).

|

| 48 |

-

|

| 49 |

-

## Quick Start

|

| 50 |

-

|

| 51 |

-

> [!Important]

|

| 52 |

-

>

|

| 53 |

-

> **Qwen2.5-Math-7B-Instruct** is an instruction model for chatting;

|

| 54 |

-

>

|

| 55 |

-

> **Qwen2.5-Math-7B** is a base model typically used for completion and few-shot inference, serving as a better starting point for fine-tuning.

|

| 56 |

-

>

|

| 57 |

|

| 58 |

## Citation

|

| 59 |

|

| 60 |

-

If you find

|

| 61 |

|

| 62 |

```

|

| 63 |

@article{yang2024qwen25mathtechnicalreportmathematical,

|

|

|

|

| 1 |

---

|

| 2 |

+

base_model: Qwen/Qwen2.5-Math-7B

|

| 3 |

language:

|

| 4 |

- en

|

| 5 |

pipeline_tag: text-generation

|

|

|

|

| 9 |

---

|

| 10 |

|

| 11 |

|

| 12 |

+

# Qwen2.5-Math-7B-RoPE-300k

|

| 13 |

|

| 14 |

+

This model is a variant of [Qwen/Qwen2.5-Math-7B](https://huggingface.co/Qwen/Qwen2.5-Math-7B), whose RoPE base frequency was increased to 300k in order to extend the model's context from 4k to 32k tokens.
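As a rough illustration of why a larger RoPE base helps with longer contexts, the sketch below compares the rotation frequencies for two base values. Only the 300k value comes from this model card. The head dimension of 128 and the original base of 10,000 are assumptions made for the example and should be checked against the model's `config.json`. The helper names are hypothetical.

```python
import math


def rope_inv_freq(base: float, head_dim: int) -> list:
    """Inverse frequencies of the standard RoPE formulation: base ** (-2i / head_dim)."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


def longest_wavelength(base: float, head_dim: int) -> float:
    """Wavelength, in tokens, of the slowest-rotating dimension pair."""
    return 2 * math.pi / rope_inv_freq(base, head_dim)[-1]


HEAD_DIM = 128             # assumption: not stated in this model card
ORIGINAL_BASE = 10_000.0   # assumption: a common RoPE default, not stated here
EXTENDED_BASE = 300_000.0  # from this model card ("increased to 300k")

for name, base in (("original", ORIGINAL_BASE), ("extended", EXTENDED_BASE)):
    wavelength = longest_wavelength(base, HEAD_DIM)
    print(f"{name} base {base:,.0f}: longest wavelength ~ {wavelength:,.0f} tokens")
```

With a larger base, every dimension pair except the first rotates more slowly, so the rotation angles at positions beyond the original 4k window stay closer to the range covered at shorter lengths. This sketch only shows the frequency arithmetic. It does not describe how this checkpoint was produced or evaluated.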

|


| 15 |

|

| 16 |

## Citation

|

| 17 |

|

| 18 |

+

If you find this model useful in your work, please cite the original source:

|

| 19 |

|

| 20 |

```

|

| 21 |

@article{yang2024qwen25mathtechnicalreportmathematical,

|