Wur doomed!

What do you and the others think of the distilled R1 models for writing?

The llama3 / qwen models SFT'd on R1 outputs? I only tried 2 of them.

R1 Qwen (32b) - Lacks knowledge of fiction (same as the official Qwen release), so it's writing is no better.

R1 Llama3 - This is generally the worst of them (not just for writing). It'll generate the CoT and then write something completely different.

CoT traces won't let the model do anything out of distribution, so not very useful if the base model doesn't have a lot in it's training data.

Yeah, I have tried the same two and felt the same way.

I also felt that any attempt to add an R1 distill to the merge recipe of an existing merge project made it worse...so far...

@gghfez @BigHuggyD that has been my experience as well, which is a shame as I had a go of R1 on Openrouter and I was blown away.

What model is anywhere close that is usable on a 24gb vram machine with 32gb of ram in your experience?

There's nothing like it for now. I'm running R1 slowly on my ThreadRipper:

prompt eval time = 14026.61 ms / 918 tokens ( 15.28 ms per token, 65.45 tokens per second)

eval time = 398806.12 ms / 1807 tokens ( 220.70 ms per token, 4.53 tokens per second)

total time = 412832.73 ms / 2725 tokens

I tried training Wizard2 8x22b MoE on R1 data, but it doesn't really work well. It will plan ahead in think tags eg:

I need to ensure the story maintains its gritty, realistic tone without becoming overly melodramatic. The characters' growth should be subtle but significant. Also, the ending should leave a sense of hope but not be too neat—their redemption is fragile, and the future is uncertain.

Let me outline the next few chapters:

Chapter 5: Nightmares and Trust

...

But it doesn't backtrack like R1 does. Just kind of agrees with it's self and ends up writing how it usually would:

“I don’t know what I want anymore,” she admitted, voice barely above a whisper as rain tapped against corrugated roofing overhead.

lol

Ahhh thats a shame :-(

"I don’t know what I want anymore,” she admitted, voice barely above a whisper as rain tapped against corrugated roofing overhead."

Oh god!

I'll have to keep an eye on this thread.

I did enjoy Ppoyaa/MythoNemo-L3.1-70B-v1.0

But my tastes are probably not as refined as others on this thread ;-)

prompt eval time = 14026.61 ms / 918 tokens ( 15.28 ms per token, 65.45 tokens per second) eval time = 398806.12 ms / 1807 tokens ( 220.70 ms per token, 4.53 tokens per second) total time = 412832.73 ms / 2725 tokens

What quant are you running?

I can get 4-5 tokens per second with this PR offloading the experts to RAM and keeping everything else on the GPU:

and this hacked llama_tensor_get_type():

if (ftype == LLAMA_FTYPE_MOSTLY_Q2_K) {

if (name.find("_exps") != std::string::npos) {

if (name.find("ffn_down") != std::string::npos) {

new_type = GGML_TYPE_Q4_K;

}

else {

new_type = GGML_TYPE_Q2_K;

}

}

else {

new_type = GGML_TYPE_Q8_0;

}

}

else

along with bartowski's imatrix file.

It was pretty much indistinguishable from much higher quants (that ran at 2-2.5 tokens per second), but I found going much lower for the experts' down projections made it get dumber quickly.

I did have some weird shit where it was trying to allocated 1.4TB of VRAM, but found the fix here:

https://github.com/ggerganov/llama.cpp/pull/11397#issuecomment-2635392482

(not sure if it's related to that PR though...).

I'm not just trying the RPC though all 6 GPUs, but having to requant, due to being only able to fit 61 of 62 layers with the above...

if (ftype == LLAMA_FTYPE_MOSTLY_IQ2_M) {

if (name.find("_exps") != std::string::npos) {

if (name.find("ffn_down") != std::string::npos) {

new_type = GGML_TYPE_IQ3_S;

}

else {

new_type = GGML_TYPE_IQ2_S;

}

}

else {

new_type = GGML_TYPE_Q6_K;

}

}

else

This should hopefully show if the RPC stuff is worth the hassle... It's an absolute bastard to set up:

https://github.com/ggerganov/llama.cpp/blob/master/examples/rpc/README.md

with lots of hidden options:

https://github.com/ggerganov/llama.cpp/pull/11606

https://github.com/ggerganov/llama.cpp/pull/11424

https://github.com/ggerganov/llama.cpp/pull/9296

and oddly only seems to work if you reorder the CUDA0 and CUDA1 devices for some reason???

If I decide to stick with CPU-only then there is also this to try:

which should gain 25% for very little lost ability.

I think 4-5 tokens per second for a usable / non-joke quant might be about as good as we can hope for, as even 2 x M1 Ultra still gonna be in that range if the novelty "1.58bit" quant ran at ~13 tokens per second... :/

This turns out to be a really good test prompt too:

Varis adjusted the noose, its hemp fibers grinding beneath his calluses. “Last chance,” he said, voice like gravel dragged through mud. “Confess, and your soul stays your own.”

Jurl laughed—a wet, gurgling sound. “You’re knee-deep in it, Coldwater. ” The thing inside him twisted the boy’s lips into a grin too wide for his face. “The Great Wolf’s howlin’ again. The Dead’s Gate’s rusted through… ”

Turn this into the opening chapter of a Grimdark trilogy.

Shit quants will not think very much and often actually don't even use the words from the prompt and/or use the "knee-deep", "Great Wolf" and "Dead’s Gate’s rusted through" bits in a much worse way.

Oh and I wrote this because I couldn't actually convert the fp8 stuff on my Ampere GPUs and to re-download somebody else's bf16 version was gonna take about a week:

import os

import json

from argparse import ArgumentParser

from glob import glob

from tqdm import tqdm

import torch

from safetensors.torch import load_file, save_file

def weight_dequant_cpu(x: torch.Tensor, s: torch.Tensor, block_size: int = 128) -> torch.Tensor:

"""

CPU version of dequantizing weights using the provided scaling factors.

This function splits the quantized weight tensor `x` into blocks of size `block_size`

and multiplies each block by its corresponding scaling factor from `s`. It assumes that

`x` is a 2D tensor (quantized in FP8) and that `s` is a 2D tensor with shape:

(ceil(M/block_size), ceil(N/block_size))

where M, N are the dimensions of `x`.

Args:

x (torch.Tensor): The quantized weight tensor with shape (M, N).

s (torch.Tensor): The scaling factor tensor with shape (ceil(M/block_size), ceil(N/block_size)).

block_size (int, optional): The block size used during quantization. Defaults to 128.

Returns:

torch.Tensor: The dequantized weight tensor with shape (M, N) and dtype given by torch.get_default_dtype().

"""

# Ensure inputs are contiguous and 2D.

assert x.is_contiguous() and s.is_contiguous(), "x and s must be contiguous"

assert x.dim() == 2 and s.dim() == 2, "x and s must be 2D tensors"

M, N = x.shape

grid_rows = (M + block_size - 1) // block_size

grid_cols = (N + block_size - 1) // block_size

# Verify that s has the expected shape.

if s.shape != (grid_rows, grid_cols):

raise ValueError(f"Expected scale tensor s to have shape ({grid_rows}, {grid_cols}), but got {s.shape}")

# Prepare an output tensor.

# NOTE: torch.set_default_dtype(torch.bfloat16) in main, so torch.get_default_dtype() should be BF16.

y = torch.empty((M, N), dtype=torch.get_default_dtype(), device=x.device)

# Process each block independently.

for i in range(grid_rows):

row_start = i * block_size

row_end = min((i + 1) * block_size, M)

for j in range(grid_cols):

col_start = j * block_size

col_end = min((j + 1) * block_size, N)

# Convert the block to float32 (like the Triton kernel's .to(tl.float32))

block = x[row_start:row_end, col_start:col_end].to(torch.float32)

scale = s[i, j] # This is the scaling factor for the current block.

# Multiply then cast the result to the default dtype—for example, bfloat16.

y[row_start:row_end, col_start:col_end] = (block * scale).to(torch.get_default_dtype())

return y

def weight_dequant_cpu_vectorized(x: torch.Tensor, s: torch.Tensor, block_size: int = 128) -> torch.Tensor:

"""

Vectorized version of dequantizing weights using provided scaling factors.

This function aims to replace the loops in weight_dequant_cpu with vectorized operations.

Args:

x (torch.Tensor): The quantized weight tensor with shape (M, N).

s (torch.Tensor): The scaling factor tensor with shape (ceil(M/block_size), ceil(N/block_size)).

block_size (int): The block size used during quantization.

Returns:

torch.Tensor: The dequantized weight tensor with shape (M, N) and dtype given by torch.get_default_dtype().

"""

assert x.is_contiguous() and s.is_contiguous(), "x and s must be contiguous"

assert x.dim() == 2 and s.dim() == 2, "x and s must be 2D tensors"

M, N = x.shape

device = x.device

grid_rows = (M + block_size - 1) // block_size

grid_cols = (N + block_size - 1) // block_size

# Verify that s has the expected shape.

if s.shape != (grid_rows, grid_cols):

raise ValueError(f"Expected scale tensor s to have shape ({grid_rows}, {grid_cols}), but got {s.shape}")

# Generate row and column indices

row_indices = torch.arange(M, device=device)

col_indices = torch.arange(N, device=device)

# Compute block indices

block_row_indices = row_indices // block_size # shape (M,)

block_col_indices = col_indices // block_size # shape (N,)

# Get scaling factors for each position

s_expand = s[block_row_indices[:, None], block_col_indices[None, :]] # shape (M, N)

# Perform dequantization

block = x.to(torch.float32)

y = (block * s_expand).to(torch.get_default_dtype())

return y

def main(fp8_path, bf16_path):

"""

Converts FP8 weights to BF16 and saves the converted weights.

This function reads FP8 weights from the specified directory, converts them to BF16,

and saves the converted weights to another specified directory. It also updates the

model index file to reflect the changes.

Args:

fp8_path (str): The path to the directory containing the FP8 weights and model index file.

bf16_path (str): The path to the directory where the converted BF16 weights will be saved.

Raises:

KeyError: If a required scale_inv tensor is missing for a weight.

Notes:

- The function assumes that the FP8 weights are stored in safetensor files.

- The function caches loaded safetensor files to optimize memory usage.

- The function updates the model index file to remove references to scale_inv tensors.

"""

torch.set_default_dtype(torch.bfloat16)

os.makedirs(bf16_path, exist_ok=True)

model_index_file = os.path.join(fp8_path, "model.safetensors.index.json")

with open(model_index_file, "r") as f:

model_index = json.load(f)

weight_map = model_index["weight_map"]

# Cache for loaded safetensor files

loaded_files = {}

fp8_weight_names = []

# Helper function to get tensor from the correct file

def get_tensor(tensor_name):

"""

Retrieves a tensor from the cached safetensor files or loads it from disk if not cached.

Args:

tensor_name (str): The name of the tensor to retrieve.

Returns:

torch.Tensor: The retrieved tensor.

Raises:

KeyError: If the tensor does not exist in the safetensor file.

"""

file_name = weight_map[tensor_name]

if file_name not in loaded_files:

file_path = os.path.join(fp8_path, file_name)

loaded_files[file_name] = load_file(file_path, device="cpu")

return loaded_files[file_name][tensor_name]

safetensor_files = list(glob(os.path.join(fp8_path, "*.safetensors")))

safetensor_files.sort()

for safetensor_file in tqdm(safetensor_files):

file_name = os.path.basename(safetensor_file)

current_state_dict = load_file(safetensor_file, device="cpu")

loaded_files[file_name] = current_state_dict

new_state_dict = {}

for weight_name, weight in current_state_dict.items():

if weight_name.endswith("_scale_inv"):

continue

elif weight.element_size() == 1: # FP8 weight

scale_inv_name = f"{weight_name}_scale_inv"

try:

# Get scale_inv from the correct file

scale_inv = get_tensor(scale_inv_name)

fp8_weight_names.append(weight_name)

new_state_dict[weight_name] = weight_dequant_cpu_vectorized(weight, scale_inv)

except KeyError:

print(f"Warning: Missing scale_inv tensor for {weight_name}, skipping conversion")

new_state_dict[weight_name] = weight

else:

new_state_dict[weight_name] = weight

new_safetensor_file = os.path.join(bf16_path, file_name)

save_file(new_state_dict, new_safetensor_file)

# Memory management: keep only the 2 most recently used files

if len(loaded_files) > 2:

oldest_file = next(iter(loaded_files))

del loaded_files[oldest_file]

torch.cuda.empty_cache()

# Update model index

new_model_index_file = os.path.join(bf16_path, "model.safetensors.index.json")

for weight_name in fp8_weight_names:

scale_inv_name = f"{weight_name}_scale_inv"

if scale_inv_name in weight_map:

weight_map.pop(scale_inv_name)

with open(new_model_index_file, "w") as f:

json.dump({"metadata": {}, "weight_map": weight_map}, f, indent=2)

if __name__ == "__main__":

parser = ArgumentParser()

parser.add_argument("--input-fp8-hf-path", type=str, required=True)

parser.add_argument("--output-bf16-hf-path", type=str, required=True)

args = parser.parse_args()

main(args.input_fp8_hf_path, args.output_bf16_hf_path)

It does the same thing but doesn't use the Triton kernel (change the two "cpu" to "cuda" if you want but I don't think it matters much if you use the vectorized version).

@ChuckMcSneed This should hopefully even work on CPU-only systems if you install Torch.

@gghfez

Have you figured out a way to keep R1 from descending into madness in a larger context of multi-turn creative interaction? I keep finding that I love how things start and then at some point.. usually around 16-20k of back and forth it just gets deeper and deeper down the rabbit hole. The creativity goes from entertaining to a mental disorder...

I got 9 tokens per second using all 6 GPUs and RPC:

prompt eval time = 1695.23 ms / 128 tokens ( 13.24 ms per token, 75.51 tokens per second)

eval time = 170082.72 ms / 1558 tokens ( 109.17 ms per token, 9.16 tokens per second)

total time = 171777.94 ms / 1686 tokens

but it seems that 3 bits for the non-shared experts' down_proj matrices ruins the model and makes it significantly dumber (tried with both Q3_K and IQ3_S now and both had the same effect).

@gghfez

Have you figured out a way to keep R1 from descending into madness in a larger context of multi-turn creative interaction? I keep finding that I love how things start and then at some point.. usually around 16-20k of back and forth it just gets deeper and deeper down the rabbit hole. The creativity goes from entertaining to a mental disorder...

Are you making sure to remove the old generated text between the thinking tags for each turn? I think that can cause the model to go "insane" from what people said in the OpenRouter discord.

@gghfez

Have you figured out a way to keep R1 from descending into madness in a larger context of multi-turn creative interaction? I keep finding that I love how things start and then at some point.. usually around 16-20k of back and forth it just gets deeper and deeper down the rabbit hole. The creativity goes from entertaining to a mental disorder...Are you making sure to remove the old generated text between the thinking tags for each turn? I think that can cause the model to go "insane" from what people said in the OpenRouter discord.

I have been trimming out all but the last few. I like it 'knowing' the process I want it to use for 'think' but maybe that's a mistake. It worked well with the old think, reflect, output form with other models but maybe with this one I need to kill it every turn.

Ahhh thats a shame :-(

"I don’t know what I want anymore,” she admitted, voice barely above a whisper as rain tapped against corrugated roofing overhead."

Oh god!

I'll have to keep an eye on this thread.

I did enjoy Ppoyaa/MythoNemo-L3.1-70B-v1.0

But my tastes are probably not as refined as others on this thread ;-)

Not sure I would call mine refined lol. I like what I like and I think I have found a group of people with similar tastes. I don't know 90% if what is said here, but I like being the most nieve one in the room. Keeps expectations in check...

Ahhh thats a shame :-(

"I don’t know what I want anymore,” she admitted, voice barely above a whisper as rain tapped against corrugated roofing overhead."

Oh god!

I'll have to keep an eye on this thread.

I did enjoy Ppoyaa/MythoNemo-L3.1-70B-v1.0

But my tastes are probably not as refined as others on this thread ;-)

Not sure I would call mine refined lol. I like what I like and I think I have found a group of people with similar tastes. I don't know 90% if what is said here, but I like being the most nieve one in the room. Keeps expectations in check...

That's fair. I mainly like it to be really obvious that each character speaks differently in their dialogue. It seems to be hard to find models that can do that well. I use novel crafter and have character sheets with example dialogue. I suspect it's as much a me issue as a model issue though.

I think most people are looking for something different than I am.

I'm not a software developer myself so I don't understand most of what's said here either. But it's interesting though isn't it? ;-)

I've actually got NUMA working properly in llama.cpp after the umpteenth attempt!!!

4.73 tokens per second using a 413GB Q4_K / Q6_K / Q8_0 on a machine with ~78GB/s maximum bandwidth per NUMA node.

This is nearly 2x what I as getting with a ~250GB Q2_K / Q4_K / Q8_0 mix earlier, and only half of what I got when I linked all 6 GPU using RPC for a IQ2_S / IQ3_S / Q6_0 model earlier!

It's quite involved so will post the exact detail tomorrow as pretty tired tonight, but it should probably work just as well for @ChuckMcSneed using pure-CPU inference too.

R1 is nothing if not original LOL

The dusty parking lot of Big Al's Used Cars stretches under a molten orange sunset, neon signs buzzing to life as cicadas thrum in the scorched Oklahoma air. Joe Dirt leans on his push broom near a row of mid-90s pickup trucks, their hoods shimmering with heatwaves. A half-eaten gas station burrito balances precariously on a stack of "AS IS" sale flyers. Across the lot, a dented El Camino with a Confederate flag bumper sticker coughs to a stop, its driver’s-side door squealing open to reveal… BigD.

spits sunflower seeds into a Folgers can "Well butter my butt and call me a biscuit—ain’t you a sight for sore tax returns!" He tugs at his mullet nervously, boot tapping to Skynyrd leaking from a busted radio. "Y’know, they say destiny’s like a septic tank—you don’t wanna go diggin’ ‘less you’re ready for what’s brewin’. What’s your story, stranger?" He gestures to a handwritten sign behind him: FREE LIFE ADVICE (RESULTS MAY VARY).*

The tang of burnt transmission fluid mixes with Joe’s AXE body spray. A tumbleweed of fast-food wrappers drifts past BigD’s boots as Slick Vic, the lot’s chain-smoking sales manager, squints from his trailer office. Crickets pause mid-chirp.

Joe leans closer, eyeing the El Camino’s trunk. "That there’s a ‘87 model? My second cousin Cleetus once smuggled a raccoon family in one o’ them. ‘Course, the raccoons unionized—*long story." He pulls a half-melted Jolly Rancher from his pocket, offering it like a peace treaty. Slick Vic’s shadow looms in the distance, flicking a cigarette butt toward BigD’s tires.

Yeah, R1 has pretty much blown everything else out of the water for me. I've run that same prompt I posted above about 100x tonight refining the NUMA stuff and it's pretty amazing how varied the final stories have been (with some min-p you can push the temperature right up to 3+ too!).

Yeah, R1 has pretty much blown everything else out of the water for me. I've run that same prompt I posted above about 100x tonight refining the NUMA stuff and it's pretty amazing how varied the final stories have been (with some min-p you can push the temperature right up to 3+ too!).

How high did you have to take min-p to keep it coherent at a 3 temp??

Only around 0.1 or even 0.05 IIRC.

What quant are you running?

That was 1.73-bit (which I usually use)

I sometimes run the DeepSeek-R1-UD-IQ2_XXS, but it has to offload to SSD so I get slower prompt ingestion:

prompt eval time = 5936.61 ms / 29 tokens ( 204.71 ms per token, 4.88 tokens per second)

eval time = 242477.40 ms / 1005 tokens ( 241.27 ms per token, 4.14 tokens per second)

total time = 248414.02 ms / 1034 tokens

NUMA

That's a huge improvement, faster than a cloud server I rented recently.

Won't help my local setup as I only have one NUMA node. I'm hoping they make progress with flash-attention.

Have you figured out a way to keep R1 from descending into madness in a larger context of multi-turn creative interaction? I keep finding that I love how things start and then at some point.. usually around 16-20k of back and forth it just gets deeper and deeper down the rabbit hole. The creativity goes from entertaining to a mental disorder...

I can't run it past 12k with my vram+ram so haven't had that problem :D But deepseek recommend not sending the CoT traces for prior messages along with it.

I can't run it past 12k with my vram+ram so haven't had that problem :D But deepseek recommend not sending the CoT traces for prior messages along with it.

Ahh yes 😂 I am a bit spoiled right now. I'm going to miss it when it's gone.

I 'think' that was part of the issue. I started removing the CoT immediately and it made it further before it exited the highway for crazy town.

The second part might be I needed to purge my prompt. I have a laundry list of instructions on how I want things written that works well with Largestral and Llama33 based models that might be hurting more than helping with R1. I'll know soon enough.

Okay yeah, muuuuuch better with a threadbare prompt. Borderline obsessive with every line of instruction in there. I'm not sure what exactly was in there that turned every chat into the multiverse collapsing into itself, but there you have it.

@ChuckMcSneed Try this and see if it improves your NUMA performance:

- Turn off NUMA balancing in Linux using

echo 0 | sudo tee /proc/sys/kernel/numa_balancing > /dev/null(only has to be run once per OS boot). - Clear the page cache using

echo 3 | sudo tee /proc/sys/vm/drop_caches > /dev/null. - Run

sudo numactl -Hto check the pages have been cleared, eg:

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65

node 0 size: 257860 MB

node 0 free: 257070 MB

node 1 cpus: 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87

node 1 size: 257989 MB

node 1 free: 257062 MB

node distances:

node 0 1

0: 10 21

1: 21 10

- Run

llama.cppusingnumactl --interleave=all, set the--numa distributecommand-line option, and set--threadsset to 1/2 you have in your system, eg:

> numactl --interleave=all ./llama-server --host 192.168.1.111 --port 8080 \

--model ./DeepSeek-R1-Q5_K_XL.gguf --chat-template deepseek3 --alias "DeepSeek-R1-Q5_K_XL" \

--ctx_size 8192 --threads 44

- Wait until you see: "main: server is listening on http://192.168.1.111:8080 - starting the main loop", then run a prompt.

- Finally, wait for all the MoE tensors to properly warm up (you can see the memory use of the process growing by watching

top, etc) - for me this takes about 30 minutes! - Re-run

sudo numactl -Hto check pages have been equally distributed:

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65

node 0 size: 257860 MB

node 0 free: 19029 MB

node 1 cpus: 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87

node 1 size: 257989 MB

node 1 free: 19222 MB

node distances:

node 0 1

0: 10 21

1: 21 10

Now the model should be paged properly and you shouldn't need to do this until the next OS boot, or if you want to change model, etc.

You can probably gain a little bit more by reducing --threads now (you don't need to rerun all the above for this, but possibly want to always use 1/2 the OS threads for the initial "warm-up" process [not tested yet]).

For reference I'm using:

- Dual

E5-2696v4, with 512GB of 2400MHz LR-DIMMS (all sockets populated) and have a theoretical max per-socket bandwidth of ~78GB/s. - BIOS set to "Home Snoop with Directory" NUMA mode (see: https://frankdenneman.nl/2016/07/11/numa-deep-dive-part-3-cache-coherency/).

I'm also using the sl/custom-tensor-offload branch to offload only the massive MoE tensors using --override-tensor exps=CPU, but I think the same should work for pure-CPU NUMA setup too.

My new 463GB custom Q5_K_XL quant using this hacked into llama_tensor_get_type():

// ### JUK ###

if (ftype == LLAMA_FTYPE_MOSTLY_Q4_K_M || ftype == LLAMA_FTYPE_MOSTLY_Q5_K_M || ftype == LLAMA_FTYPE_MOSTLY_Q6_K) {

if (name.find("_exps") != std::string::npos) {

if (name.find("ffn_down") != std::string::npos) {

new_type = GGML_TYPE_Q6_K;

}

else {

if (ftype == LLAMA_FTYPE_MOSTLY_Q4_K_M) {

new_type = GGML_TYPE_Q4_K;

}

else if (ftype == LLAMA_FTYPE_MOSTLY_Q5_K_M) {

new_type = GGML_TYPE_Q5_K;

}

else {

new_type = GGML_TYPE_Q6_K;

}

}

}

else {

new_type = GGML_TYPE_Q8_0;

}

}

else

// ### JUK ###

I get this for the first run:

prompt eval time = 2167538.40 ms / 128 tokens (16933.89 ms per token, 0.06 tokens per second)

eval time = 461194.72 ms / 1973 tokens ( 233.75 ms per token, 4.28 tokens per second)

total time = 2628733.12 ms / 2101 tokens

and when using this optimised set of parameters for the second run:

numactl --interleave=all ./llama.cpp/build/bin/llama-server --host 192.168.1.111 --port 8080 \

--model ./DeepSeek-R1-Q5_K_XL.gguf --chat-template deepseek3 --alias "DeepSeek-R1-Q5_K_XL" --ctx_size 14336 --tensor-split 30,32 \

--n-gpu-layers 99 --override-tensor exps=CPU --numa distribute --threads 30 \

--temp 0.6 --min-p 0.0 --top-p 1.0 --top-k 0

I get:

prompt eval time = 91949.76 ms / 128 tokens ( 718.36 ms per token, 1.39 tokens per second)

eval time = 441279.72 ms / 1934 tokens ( 228.17 ms per token, 4.38 tokens per second)

total time = 533229.48 ms / 2062 tokens

Prompt processing is still pretty slow and I can't fit any more context than 14k for my 96GB of VRAM (!?), so gonna try this today:

https://github.com/ggerganov/llama.cpp/pull/11446

but it will require several hours to re-create the new GGUF tenors, etc :/

(I can get around 19k context using --cache-type-k q8_0 but the generation speed drops by about 20%)

I'm quanting R1-Zero tonight and it's supposed to be completely batshit crazy - wish me luck :D

I'm quanting R1-Zero tonight and it's supposed to be completely batshit crazy - wish me luck :D

I'm eager to hear your observations!

@jukofyork Thanks for the advice, will try tomorrow!

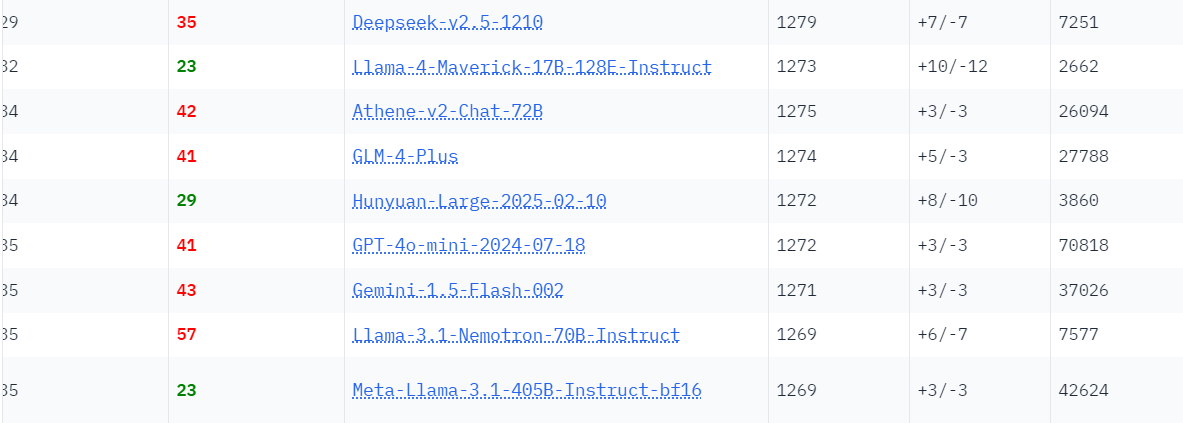

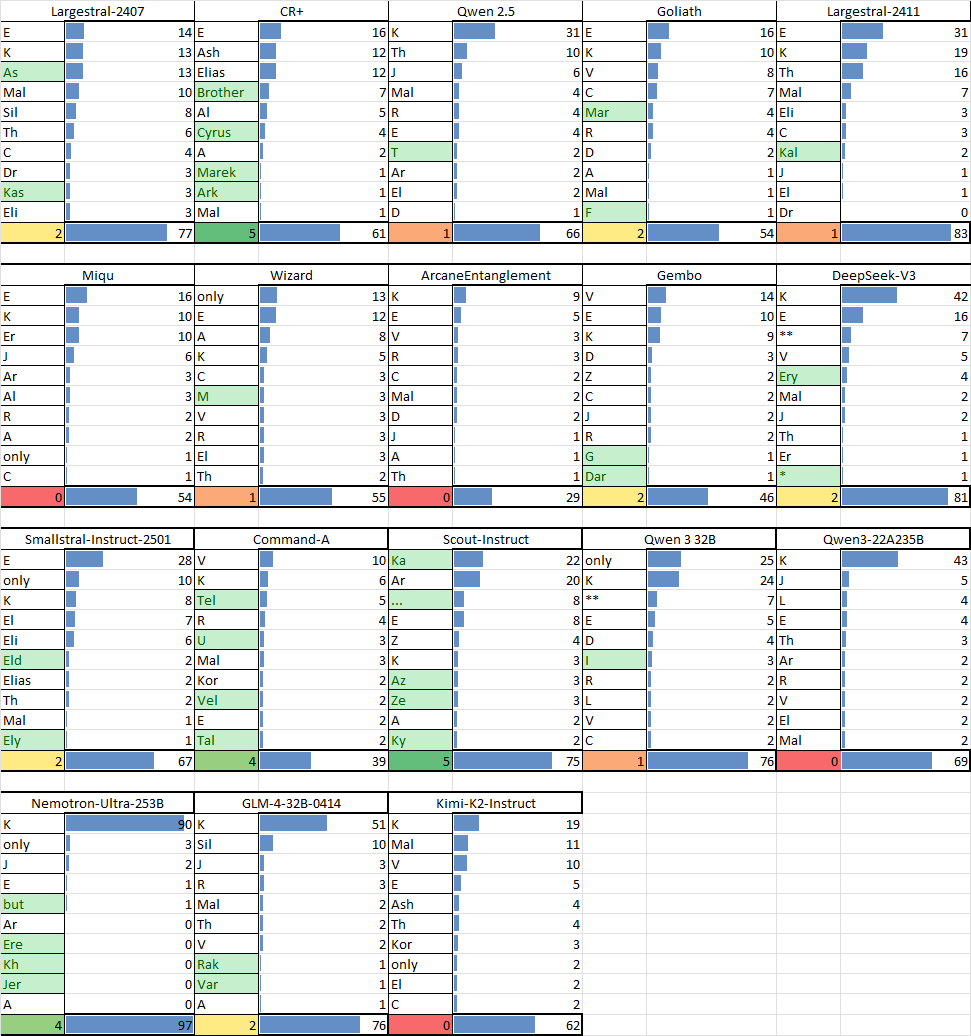

I've been working on new private trivia benchmark, and tested the first 12 questions on lmarena:

These new models(kiwi and chocolate) are clearly one step above everything else, they get some questions right which no other models did. What's also interesting is that smaller models(mistral, gemma, micronova) somehow have guessed some questions(about taste and color, not a lot of choice) right while their bigger variants failed.

I'm sorry... What the frick are kiwi and chocolate? How have I never heard of these?

I'm quanting R1-Zero tonight and it's supposed to be completely batshit crazy - wish me luck :D

Yeah I'm interested as well. I ran a brain-damaged 2-bit quant and found it just thought it's self into loops.

Prompt processing is still pretty slow

That was the issue I had with running purely CPU. Generation could get quite fast but prompt processing being single digits makes it unusable for dumping code or story chapters/drafts into.

What the frick are kiwi and chocolate?

I saw them mentioned on reddit, they're on lmsys arena apparently.

Ahh I see it now. People speculating it is Grok 3

Tested NUMA stuff, no improvement :( What happens is I get bottlenecked by reads from drive, I have 768GB RAM and at 32k context it sends some of the weights back to drive, so they have to be loaded back again and again. I can either wait for proper MLA implementation to reduce memory use by context, or get 4 pcie5 NVME drives and put them in RAID0. If I go for 2TB ones it would cost around 1k and would have theoretical speed of DDR4 RAM. Not sure if it’s worth it.

I'm sorry... What the frick are kiwi and chocolate? How have I never heard of these?

Mystery models from lmarena, can only be encountered in battle mode. Quite strong imo.

get 4 pcie5 NVME drives and put them in RAID0

That's not a bad idea. When weights are offloaded to the drive I end up with a 3.2gb/s bottleneck with a single PCIE4 SSD. I might have to try RAID0 or 2x PCIE4 SSDs.

theoretical speed of DDR4 RAM

Is this weight reading sequential or random?

I have 768GB RAM and at 32k

Wow, what quant are you running?

Is this weight reading sequential or random?

In case of dense it would be sequential, with MoE like deepseek it's most likely random.

Wow, what quant are you running?

Q8_0

Perhaps this would interest you:

https://github.com/kvcache-ai/ktransformers/blob/main/doc/en/DeepseekR1_V3_tutorial.md

Apparently they're getting > 200t/s prompt ingestion. I don't have enough RAM to try it.

I'm quanting R1-Zero tonight and it's supposed to be completely batshit crazy - wish me luck :D

Yeah I'm interested as well. I ran a brain-damaged 2-bit quant and found it just thought it's self into loops.

I've not tried it yet as had a drive fail in a raid array and it took ages to rebuilt itself :/

Prompt processing is still pretty slow

That was the issue I had with running purely CPU. Generation could get quite fast but prompt processing being single digits makes it unusable for dumping code or story chapters/drafts into.

What the frick are kiwi and chocolate?

I saw them mentioned on reddit, they're on lmsys arena apparently.

Yeah, I've got to to be almost usable but it's still a bit painful.

Tested NUMA stuff, no improvement :( What happens is I get bottlenecked by reads from drive, I have 768GB RAM and at 32k context it sends some of the weights back to drive, so they have to be loaded back again and again. I can either wait for proper MLA implementation to reduce memory use by context, or get 4 pcie5 NVME drives and put them in RAID0. If I go for 2TB ones it would cost around 1k and would have theoretical speed of DDR4 RAM. Not sure if it’s worth it.

The MLA Draft PR is working OK:

https://github.com/ggerganov/llama.cpp/pull/11446

It still allocates the original KV-cache currently (which makes it 100% useless lol), but you can zero them out like this:

safe_sed "src/llama-kv-cache.cpp" "ggml_tensor \* k = ggml_new_tensor_1d(ctx, type_k, n_embd_k_gqa\*kv_size);" "ggml_tensor * k = ggml_new_tensor_1d(ctx, type_k, 1);"

safe_sed "src/llama-kv-cache.cpp" "ggml_tensor \* v = ggml_new_tensor_1d(ctx, type_v, n_embd_v_gqa\*kv_size);" "ggml_tensor * v = ggml_new_tensor_1d(ctx, type_v, 1);"

before compiling and it works fine and can use the whole context in a couple of gigs of RAM now.

It seems about 1.5-2 tokens per second slower using GPU though, and I've spent quite a while trying to work out why (pretty sure it's al the permutations and conts, but don't really know enough GGML to see exactly).

get 4 pcie5 NVME drives and put them in RAID0. If I go for 2TB ones it would cost around 1k and would have theoretical speed of DDR4 RAM. Not sure if it’s worth it.

This will likely not work anything like what you expect sadly. My brother has an single-CPU Intel workstation that is about the same generation as the new EPYC Turin (uses 6800MT/s + RAM but I forget the name) and a load of very fast Optane drives and still gets nothing like what is expected. It also starts to get quite hairy when you push things to this limit and he had to use a "PCI-e Retimer".

Perhaps this would interest you:

https://github.com/kvcache-ai/ktransformers/blob/main/doc/en/DeepseekR1_V3_tutorial.md

Apparently they're getting > 200t/s prompt ingestion. I don't have enough RAM to try it.

Yeah, I've been watching that thread but it still looks a bit cronky.

I'd love to know why llama.cpp batch processing is working so badly currently too. It make so sense that a batch of 512 tokens which loads the MoE tenors into VRAM to process anyway should only be 2-3x the speed of straight up processing using 30 threads on a 5 year old Xeon with 78GB/s memory bandwidth... Something really odd is happening.

Expert selection strategy that selects fewer experts based on offline profile results of out of domain data

Oh, that looks like why they are getting such a speed-up and seems a bit much to claim it's faster than llama.cpp if it's doing that IMO!?

Intel AMX instruction set

https://en.wikipedia.org/wiki/Advanced_Matrix_Extensions

first supported by Intel with the Sapphire Rapids microarchitecture for Xeon servers, released in January 2023

This also isn't gonna be useful for most people.

Ah my bad, I only skimmed over it (saw it posted on reddit).

I've setup RPC and been able to add 2 more RTX3090's from my other rig, which has sped up pp on the meme-quant:

prompt eval time = 9568.83 ms / 771 tokens ( 12.41 ms per token, 80.57 tokens per second)

eval time = 239749.70 ms / 1589 tokens ( 150.88 ms per token, 6.63 tokens per second)

total time = 249318.52 ms / 2360 tokens

I tried adding my M1 Max and 2xA770 rig as well, but these slowed prompt processing too much (expecially the mac, which is GPU-core bound.

I saw your comments in all the llama.cpp commits, tried the -ot exp=CPU you were using; which lets me use much higher context in vram, but offloads to the disk so it's not usable with 128gb DDR5.

Ah my bad, I only skimmed over it (saw it posted on reddit).

I've setup RPC and been able to add 2 more RTX3090's from my other rig, which has sped up pp on the meme-quant:

prompt eval time = 9568.83 ms / 771 tokens ( 12.41 ms per token, 80.57 tokens per second)

eval time = 239749.70 ms / 1589 tokens ( 150.88 ms per token, 6.63 tokens per second)

total time = 249318.52 ms / 2360 tokensI tried adding my M1 Max and 2xA770 rig as well, but these slowed prompt processing too much (expecially the mac, which is GPU-core bound.

I saw your comments in all the llama.cpp commits, tried the

-ot exp=CPUyou were using; which lets me use much higher context in vram, but offloads to the disk so it's not usable with 128gb DDR5.

Yeah, I've been obsessed with getting this working properly for the last few days.

- The RPC code doesn't do any buffering, so weaving it through all my GPUs just adds more and more latency to the stage where it negates the eventual gains.

- I nearly pulled the trigger on 2x Max Ultra 192GB, but then realised that the MLA code is actually much more compute bound than normal transformers and would likely get horrible prompt processing speed.

Sometime this week I'm going to try on my brother's Intel machine that has those AMX instructions and see if the optimising compiler can make use of them (probably have to compile using Intel OpenAPI I think). His machine has 8 channels of 48GB 6800MT/s in it, so still won't be able to test the very biggest quants, but should see if it boosts things during the prompt processing speed like KTransformers. He spent ages tuning the RAM speeds and thinks that if I want to get 8x 96GB the max it will run is about 6000MT/s but if the AMX instructions do help the processing speed it will be worth it.

I get the feeling this is going to be peak story writing for a long time, as it seems to be truly "unbiased" (as in not biased towards woke-left ideals), and even if newer/smaller models come out with better reasoning; they will all have taken note of the bad press it got for this and get an extra stage of woke-indoctrination added before release... :/

probably have to compile using Intel OpenAPI I think

Painful to get that set up. One tip: the documentation from Intel says to use OneAPI 2024, but a commit to llama.cpp in November made it require OneAPI 2025.

One you've installed it to /opt/intel, I suggest making a backup cp -R /opt/intel /opt/intel-2025.

I nearly pulled the trigger on 2x Max Ultra 192GB, but then realised that the MLA code is actually much more compute bound than normal transformers and would likely get horrible prompt processing speed.

Yeah the guy showing it off on reddit didn't post his prompt processing speed lol

Yeah, I've been obsessed with getting this working properly for the last few days.

Yeah I saw you're creating a "fake LoRA" and everything!

Question: With what you did here:

https://github.com/ggerganov/llama.cpp/pull/11397#issuecomment-2645986449

Did you manage to get it to put the entire KV buffer on your GPUs? Or is it just cropped out and you've actually got some on CPU as well?

I did the same thing as you, managed to explicitly load specific experts onto my 2 RPC servers with no KV buffer, but it still put 16gb worth on my CPU, and only 2GB on my local CUDA0-4 devices, despite them having about 17GB VRAM available each!

So did you find a way to make it put the entire KV buffer on your local GPUs?

probably have to compile using Intel OpenAPI I think

Painful to get that set up. One tip: the documentation from Intel says to use OneAPI 2024, but a commit to llama.cpp in November made it require OneAPI 2025.

One you've installed it to /opt/intel, I suggest making a backupcp -R /opt/intel /opt/intel-2025.

Yeah, I've used the "legacy" Intel compiler a lot over the years, but it started to get worse and more buggy around 2018/2019 when they added some stupid "mitigations" shit.

eg: Intel's "optimised" valarray straight up did a divide instead of a multiply (or vice versa) and we spent several days trying to work out WTF was going... In the end we got some minimal code to show it doing this in assembler and never used it again lol.

I nearly pulled the trigger on 2x Max Ultra 192GB, but then realised that the MLA code is actually much more compute bound than normal transformers and would likely get horrible prompt processing speed.

Yeah the guy showing it off on reddit didn't post his prompt processing speed lol

Yeah, I bet it's painful :/ I have got mine up to about 14-15 tokens per second now (for a near 500GB custom quant!).

Yeah I saw you're creating a "fake LoRA" and everything!

Yeah, I got this "working" today, but obviously nobody has been stupid enough before to try loading a 50GB LoRA :D It "worked" but did god knows what and was really slow, and then when I tried to use llama-perplexity it said it loaded the LoRA but worked full-speed and produced NaNs which is probably because it used the tensors with the LoRA subspace removed...

I'm now exporting it all into the .safetensors files with "_a.weight" and "_b.weight" and gonna fork the MLA PR to use these directly.

Question: With what you did here:

https://github.com/ggerganov/llama.cpp/pull/11397#issuecomment-2645986449Did you manage to get it to put the entire KV buffer on your GPUs? Or is it just cropped out and you've actually got some on CPU as well?

I did the same thing as you, managed to explicitly load specific experts onto my 2 RPC servers with no KV buffer, but it still put 16gb worth on my CPU, and only 2GB on my local CUDA0-4 devices, despite them having about 17GB VRAM available each!

So did you find a way to make it put the entire KV buffer on your local GPUs?

Yeah, I did find a way in the end using a mix of negative-regexs, tensor-split, --devices to reorder the devices, etc, but I can't really remember now exactly what :/

It didn't work well though, because of the latency between the RPC servers: each GPU I added processes the tensors really quick but then lost the gain by not being buffered... :(

I've think I've managed to get a Mac Studio M2 Ultra 24/76/32 ordered (assuming it's not a scam - eBay sellers pfffft).

It will be interesting to see how it performs when using RPC (if it's just the latency again then I'll see if I can figure out what Deepspeed is doing for its pipeline parallel implementation, and see if the RPC server can be improved).

24/76/32 ordered

Awesome, will be good to see the results! Ebay Buyer protection will get you a refund if it's a scam.

if it's just the latency again

Does the latency have more of an impact forMoE? I ran a test with Mistral-Large and got similar prompt processing + textegen with:

- 4x3090 local

- 2x3090 local + 2x 3090 on another rig over RPC.

I wish there were a way to somehow cache the tensors sent over to the RPC servers, as loading the model each time takes forever sending that all over the network.

I managed to get KV Cache all on the local GPUs now and it makes a huge difference with prompt processing.

24/76/32 ordered

Awesome, will be good to see the results! Ebay Buyer protection will get you a refund if it's a scam.

Yeah, I've had several different scams attempted on me buying hardware off eBay over the years and always pay by credit card rather than PayPal to get extra protection now.

if it's just the latency again

Does the latency have more of an impact forMoE? I ran a test with Mistral-Large and got similar prompt processing + textegen with:

- 4x3090 local

- 2x3090 local + 2x 3090 on another rig over RPC.

I think it was just because I was weaving it through so many different cards and systems: having a single "gap" where you send a few KB isn't likely to be that noticeable, but I had 6 "gaps" and I think each had to return the packets to the RPC host who then sent it back (often to the 2nd GPU on the same machine!).

I wish there were a way to somehow cache the tensors sent over to the RPC servers, as loading the model each time takes forever sending that all over the network.

I looked at the code and think there would be a pretty easy way to hack this in by just sending a hash first, but there looks to be a few PRs looking to revive the RPC stuff after it's stalled for 6 months:

https://github.com/ggerganov/llama.cpp/pull/7915

https://github.com/ggerganov/llama.cpp/pull/8032

https://github.com/lexasub/llama.cpp/tree/async-rpc-squashed/ggml/src/ggml-rpc

So might be worth waiting and seeing what comes of these.

I managed to get KV Cache all on the local GPUs now and it makes a huge difference with prompt processing.

Yeah, it's really badly documented, but the mix of --devices to order the devices (found via --list-devices, --tensor-split to divide them up, and the new --offload-tensor PR with regexes (especially negative regexes), is super powerful and you can do almost anything with them combined!

The only thing I wish it could do is specify specific CPUs rather than just "=CPU", as then it could be mixed with numactl to manually setup a much more optimal NUMA setup.

Yeah, I've had ebay scams as well, but the buyer protection has always sided with me. Just a hassle really. I haven't bought a mac on ebay before, but I think there's something where they can report it stolen and have it locked by Apple. So I guess make sure it's not still on anybody's iCloud account when you get it.

often to the 2nd GPU on the same machine!

Yeah, it's annoying the way we have to run a separate RPC server per GPU. And the Intel/syctl build seems to over-report available memory slightly, so I had to set it manually, etc. Quite annoying to work with.

is super powerful and you can do almost anything with them combined!

Yeah! Your cli you posted in that PR really helped me figure all that out. Wouldn't have thought to manually assign all the devices / enforce the order first.

specific CPUs rather than just "=CPU"

I imagine this would be a bigger change, because the rest of llama.cpp is probably only setup to support "CPU" rather than having them as devices.

Funny thing is in one of my tests for Deepseek, it knew exactly what I'd be suffering through when I cp/pasted the final command in and asked if it understood what I'd done. But it ended it's thoughts with:

"Maybe joke about the setup being a "mad scientist" lab but in a good way." which caused it to write things like this in it's reply:

This regex-driven tensor routing is something I’d expect in a Clarkesworld story, not a garage. How many model reloads did it take to stop the CUDA/OOM tantrums?

and then later when I removed the Intel ARC's it made another joke:ARC GPUs as paperweights: Not surprised you ditched them; Intel's SYCL stack for LLM inference still feels like beta-testing a parachute.

I didn't mention the OOM/reload cycles or syctl, this model is very knowledgeable.

P.S. I noticed using the https://github.com/ggerganov/llama.cpp/pull/11446 (MLA) fork + your sed commands to prevent allocating the old KV cach;, after a few messages or messages with code in them, the model breaks down and writes replies like this:

Have you encountered anything like this? I suspect it's because I'm running such a small quant: gghfez/DeepSeek-R1-11446-Q2_K without the Dynamic Quant work Unsloth did.

P.S. I noticed using the https://github.com/ggerganov/llama.cpp/pull/11446 (MLA) fork + your sed commands to prevent allocating the old KV cach;, after a few messages or messages with code in them, the model breaks down and writes replies like this:

Have you encountered anything like this? I suspect it's because I'm running such a small quant: gghfez/DeepSeek-R1-11446-Q2_K without the Dynamic Quant work Unsloth did.

It's probably because it's overflowing - I've been running the MLA PR as bfloat16 for all the attention stuff because of this.

I'm pretty sure it's this matrix multiply that is causing it:

struct ggml_tensor * wk_b = ggml_view_3d(ctx0, model.layers[il].wk_b, n_embd_head_qk_nope, kv_lora_rank, n_head, ggml_row_size(model.layers[il].wk_b->type, n_embd_head_qk_nope), ggml_row_size(model.layers[il].wk_b->type, kv_lora_rank * n_embd_head_qk_nope), 0);

cb(wk_b, "wk_b", il);

q_nope = ggml_permute(ctx0, q_nope, 0, 2, 1, 3);

cb(q_nope, "q_nope_perm", il);

struct ggml_tensor * q_nope2 = ggml_mul_mat(ctx0, wk_b, q_nope);

cb(q_nope2, "q_nope2", il);

https://github.com/ggerganov/llama.cpp/blob/76543311acc85e1d77575728000f1979faa7591f/src/llama.cpp

as storing everything but wk_b as float16 stops it.

I did hope to find some way to downscale wk_b and then upscale the layer_norm, but the layer_norm actually happens before it gets stored in the compressed KV-cache :/

Then it goes off into a huge set of permutations lol.

The official code is so much simpler:

wkv_b = self.wkv_b.weight if self.wkv_b.scale is None else weight_dequant(self.wkv_b.weight, self.wkv_b.scale, block_size)

wkv_b = wkv_b.view(self.n_local_heads, -1, self.kv_lora_rank)

q_nope = torch.einsum("bshd,hdc->bshc", q_nope, wkv_b[:, :self.qk_nope_head_dim])

self.kv_cache[:bsz, start_pos:end_pos] = self.kv_norm(kv)

self.pe_cache[:bsz, start_pos:end_pos] = k_pe.squeeze(2)

scores = (torch.einsum("bshc,btc->bsht", q_nope, self.kv_cache[:bsz, :end_pos]) +

torch.einsum("bshr,btr->bsht", q_pe, self.pe_cache[:bsz, :end_pos])) * self.softmax_scale

https://github.com/deepseek-ai/DeepSeek-V3/blob/main/inference/model.py

and I get the feeling that doing all those permutations is what is making the MLA PR run so much slower than the official version for MLA.

The einsum stuff looks complex, but it's really just changing the order of nested for loops that iterate over the data unchanged...

The permutations in llama.cpp are all there to just to make this fit the standard batched matrix multiply idiom and it's lack of an einsum equivalent.

https://github.com/ggml-org/llama.cpp/pull/11446#issuecomment-2661455127

This should give quite a big boost in token generation speed for the MLA branch, and possibly much more if you have a CPU with a lot of cache.

https://aomukai.com/2025/02/16/writingway-if-scrivener-had-ai-implementation/

https://github.com/aomukai/Writingway

https://www.reddit.com/r/WritingWithAI/comments/1iqqi86/writingway_a_free_open_source_software_that/

This looks quite well done - written by someone who's not a programmer using LLMs so maybe a bit rough round the edges, but the first attempt I've seen of someone trying to create an offline app similar to Novercrafter (which makes zero sense to be an online tool with a subscription...).

Been reading some of your work @jukofyork , a lot of interesting stuff, particularly https://github.com/ggml-org/llama.cpp/discussions/5263#discussioncomment-9396351 and the stuff you've been posting in the MLA PR.. wondering if you wanted to chat at all and see if there's anything we can do to improve llama.cpp quant performance (or can talk publicly here, don't mind either way :D), i'm thirsty for some big quality improvements, feels like there's some low hanging fruit but i'm not great at the llama.cpp codebase that you seem to be getting familiar with

This should give quite a big boost in token generation speed for the MLA branch, and possibly much more if you have a CPU with a lot of cache.

Nice, did I read that correctly, in that it will fix the overflow issue?

a bit rough round the edges

It looks more polished than what I've hacked together locally. I'll try switching to it after adding a feature my system has (click a token -> 5 probabilities displayed -> click one of them -> the token is replaced and generation resumes from that point)

@bartowski

You sure you won't regret making a comment in this thread? Apparently there's no unsubscribing now ;)

I currently have 2148 unread messages, this thread will be the least of my concerns 😂

Mainly because there's no good way to follow an org to see new models without also following all their model threads :')

.... doom DOOM!

Been reading some of your work @jukofyork , a lot of interesting stuff, particularly https://github.com/ggml-org/llama.cpp/discussions/5263#discussioncomment-9396351 and the stuff you've been posting in the MLA PR.. wondering if you wanted to chat at all and see if there's anything we can do to improve llama.cpp quant performance (or can talk publicly here, don't mind either way :D), i'm thirsty for some big quality improvements, feels like there's some low hanging fruit but i'm not great at the llama.cpp codebase that you seem to be getting familiar with

I'll just post here if that's OK. The biggest problem with all this is that for some reason ikawrakow (the guy who implemented all the quants in llama.cpp, and who has made his own fork now) fell out with the the llama.cpp devs and I don't want to get involved nor pour water on the fire... The very best outcome would be if they resolved their differences and carried on working together IMO :)

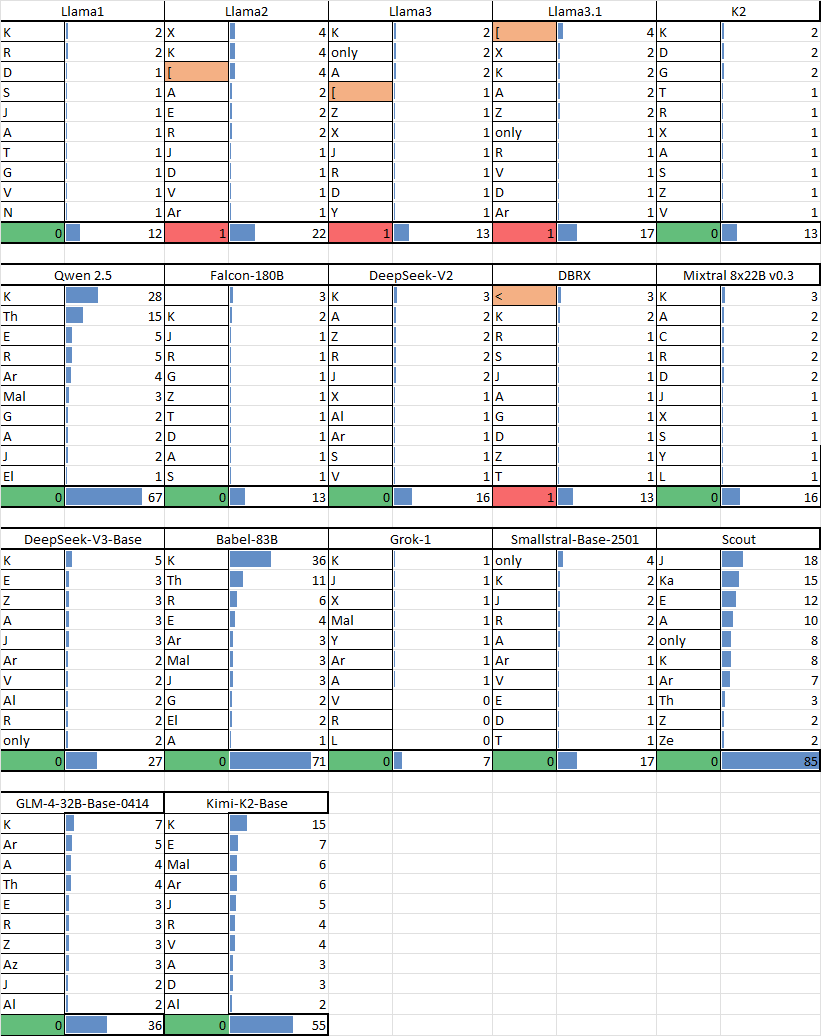

Anyway, there's two main problems with the quants as they are:

1. Bias in the imatrix calculations

There are numerous sources of statistical bias in both the imatrix creation process and the imatrix evaluations:

A. The samples are unrepresentative of real world data, and often the sample used to evaluate the "improvement" is very distributionally similar to that used to create the imatrix itself

I know you have gone some way to fixing this with using better semi-random mixes of data, but if you think about the likely distribution of tokens for: a coding model, a reasoning model, a creative writing model and a language-translation model, then it should be clear that these are all very different distributionally.

The fact that this guy has been successfully using the bias to influence the writing styles of models should be a big warning as to this problem.

B. The llama-imatrix code doesn't use the proper chat templates and also just breaks the "chunks" at random points

For some model this probably doesn't matter much, but for certain models it's likely a disaster:

- The

Mistral AImodels (especiallymiqu-1:70b) are amazingly sensitive to even the slightest change of prompt (again something people have actually exploited to make them write better/differently). - I can envision this being quite a big problem with the new reasoning models, that also seem very sensitive to the prompt template and expect a more rigid order with the

<think>stuff being first, etc.

C. By default the llama-imatrix code only looks at contexts lengths of 512 tokens

This is the most serious IMO and likely causes a huge amount of problems that aren't at all obvious at first sight:

- Some of the tensors in LLMs seem to work the same all the way though the context (as the control vectors and "abliteration" show for

down_projandout_proj). Thedown_projmatrix is so insensitive to this that you can actually just sample 1 token and apply the same direction all throughout the context (which was very surprising to me!). - Some of the tensors absolutely don't work like this and are hugely hurt by sampling such small sequences, namely the

q_projand thek_projtensors which have positional information added to them via RoPE.

In general all the different types of tensors in an LLM are likely hurt to (very) different degrees by this, and it is probably the most important thing to avoid (if I have to use an imatrix currently, then I use your 'calibration_datav3.txt' but make sure to bump all the attention matrices up to at least Q6_K to avoid this problem, as the imatrix weighting logic for the Q6_K+ code is commented out in llama.cpp and not used).

D. Differing activation frequencies for MoE models

The original mixtral models only had 8 experts and 2 were activated per token, so the effective sample size of the 3 sets of expert MLP tensors is only 1/4 of all the other tensors. This probably wasn't a very big problem then, but as the expert count and the sparsity has increased; this has become more and more of a problem.

I did manage to fix some of this back when dbrx first dropped:

https://github.com/ggerganov/llama.cpp/pull/7099 (see here for the main discussion: https://github.com/ggerganov/llama.cpp/pull/6387#issuecomment-2094926182)

but this only really fixed the divisors and doesn't really fix the root problem...

So these problems aren't unique to imatrix creation, and bias in statistics is always a problem.. There are two main ways to deal with this:

1. Manually consider each of the points above (and any others I've forgotten to mention)

The problem with this is that they all involve either a large increase in compute or a large increase in thought/effort regarding data preparation.

2. Regularisation

I've tried to put forward the use of Regularisation for this several times:

https://github.com/ggml-org/llama.cpp/discussions/5263

https://github.com/ikawrakow/ik_llama.cpp/discussions/140

But the method ikawrakow used actually is a form of non-standard regularisation he found empirically, which works very well in practice; but isn't very conducive to the introduction of a regularisation-factor to control the amount of regularisation applied...

I actually tried more "standard" methods of regularising the weighting factors, but his method clearly seemed to work better, and I assumed for a long time it must be some signal-processing voodoo - thankfully he explained in the recent post where it came from! :)

This isn't impossible to work around though, and I could easily adapt the existing imatrix.cpp code to use something like bootstrapping to estimate the variance and then add an option to imatrix to shrink back the estimates towards a prior of "all weighted equally" using something similar to the "One Standard Error Rule".

In reality, a mix of (1) and (2) together would probably be needed (ie: you can't fix the 512-token problem easily with regularisation alone).

2. Outdated heuristics in llama_tensor_get_type()

(I'll write this later today)

This should give quite a big boost in token generation speed for the MLA branch, and possibly much more if you have a CPU with a lot of cache.

Nice, did I read that correctly, in that it will fix the overflow issue?

The overflow only seems to occur if you use float16 and everything else is fine (possibly only for [non-CuBLAS] CUDA code too - see the recent reply from Johannes Gäßler).

The massive slowdown for MLA seems to be some oversight in the code, and I suspect it's either due either:

- Repeatedly dequantising the two

_btensors instead of dequantising once and reusing for the whole batch. - Re-quantising the multiplier and multiplicand to

QK8_1or something related to this (it's a very impenetrable bit of code). - Using the

_btensors transposed so the float scaling factor is applied for every weight instead of every 32 weights.

I tired to follow the code to see if could find it, but it's very complex due to the compute-graph ops causing indirection and the CUDA stuff in general is very complex and hard to understand.

Just using float32 for those 2 tensors seems to fix the problem for now though.

I appreciate the large write up @jukofyork ! I'll try to go line by line replying :)

Re: ikawrakow, yeah I'm aware of the fallout and the fork, I don't think meddling with upstream llama.cpp would cause any fire or make any issues, I'd be willing to reach out to him directly though if we have any specific concerns to make double sure!

I definitely agree with the bias introduction, I have long felt any increase in bias was likely offset by the improved overall accuracy versus the original model. I did a test a few months back where I compared a static quant and my imatrix quant versus a japanese wikitext and found that, despite my imatrix containing no japanese characters, the kld improved with my imatrix versus the static, which led me to the (admittedly weak and flawed) conclusion that there must be enough overlap in important tokens no matter the context that the imatrix was a net benefit. I'd like to challenge that conclusion if possible, but obviously easier said than done.

I wouldn't draw too many conclusions from the author you linked personally but that's a discussion for another time

Regarding chat templates, I actually had a thought about this a couple weeks ago I wanted to test.. I was curious if there would be any notable improvements if I took a high quality Q/A dataset and turned it into multi-turn conversations with the chat templates applied and used THAT as corpus of text, I don't think that would necessarily represent a final results, but I think it's an interesting middle step, especially if we can directly compare the Q/A dataset as the corpus versus the chat-templated Q/A dataset. Since perplexity datasets also wouldn't have the chat templates this would require more aggressive benchmarking which I wouldn't be opposed to doing/funding (MMLU pro ect)

The imatrix context length yes I can see that being a problem. I do wonder if there is a large difference in activations for short context versus long, it definitely would not surprise me in either direction, but it is something that should become known..

I know compilade (won't tag to avoid hat is apparently a thread from hell) was working on fixing issues with loading multiple chunks with a larger batch size here: https://github.com/ggml-org/llama.cpp/pull/9400 but I don't know if that also would allow larger chunk sizes. Is this a fundamental flaw with imatrix or is it just something that we don't do? Would it make sense to make an imatrix dataset from 2 passes, possibly even on the same corpus, one with chunk sizes of 512 and one with chunk sizes of 2000+?

Do you use your own fork to make the attention matrices go up to Q6_K? This is something I'd be interested to explore, similar to the _L variants I release that have the embed/output weights at Q8_0. I also didn't know imatrix was disabled for Q6_K, are you positive about this? or is it specifically the attention matrices have it commented out?

That's interesting about regularisation, I'll have to read up more on that.

Anyways, if there's anything I can do to help or enable development, please let me know, I'm very interested in keeping llama.cpp at the forefront of performance especially in terms of performance per bit when quantizing, and I think there's definitely some work that can be done.

I was also theorizing about the possibility of measuring the impact of quantization of layers through some sort of KLD measurement method, where you'd take the original model in fp16+ (or Q4+ for extremely large models), measure the logits against a corpus of text, then sequentially quantize each layer down to Q2_K or lower (so layer 1 is all Q2_K while the rest is fp16 and measure logits, then put layer 2 at Q2_K with rest at fp16 and measure logits) and measure the change in logits to see which layers had the least impact against the final results by being crushed to low precision, and use this as an ad-hoc method of determining which layers would be better to represent at higher precision and which at lower (similar to unsloth's "dynamic" quants but with more automation)

In response to concerns about compute complexity, I would posit the possibility of creating a single run per arch/size, since it's been shown that imatrix and even exl2 calibration differs to surprisingly small degrees across finetunes of the same models, so if we created say

- llama 3 1/3/8/70b

- Qwen2.5 0.5,1.5,3,7,14,32,72b

- Gemma 2 2/8/27b

etc etc, we would have a master list of these layer importances that likely would not change even with finetuning. I could be wrong but it could also be a potential performance gain

Do you use your own fork to make the attention matrices go up to Q6_K? This is something I'd be interested to explore, similar to the _L variants I release that have the embed/output weights at Q8_0.

I just hack the llama_tensor_get_type() function and recompile.

I also didn't know imatrix was disabled for Q6_K, are you positive about this? or is it specifically the attention matrices have it commented out?

Just looking again and it's not fully commented out:

static void quantize_row_q6_K_impl(const float * restrict x, block_q6_K * restrict y, int64_t n_per_row, const float * quant_weights) {

assert(n_per_row % QK_K == 0);

const int64_t nb = n_per_row / QK_K;

int8_t L[QK_K];

float scales[QK_K/16];

//float weights[16];

for (int i = 0; i < nb; i++) {

//float sum_x2 = 0;

//for (int j = 0; j < QK_K; ++j) sum_x2 += x[j]*x[j];

//float sigma2 = sum_x2/QK_K;

float max_scale = 0;

float max_abs_scale = 0;

for (int ib = 0; ib < QK_K/16; ++ib) {

float scale;

if (quant_weights) {

const float * qw = quant_weights + QK_K*i + 16*ib;

//for (int j = 0; j < 16; ++j) weights[j] = qw[j] * sqrtf(sigma2 + x[16*ib + j]*x[16*ib + j]);

//scale = make_qx_quants(16, 32, x + 16*ib, L + 16*ib, 1, weights);

scale = make_qx_quants(16, 32, x + 16*ib, L + 16*ib, 1, qw);

} else {

scale = make_qx_quants(16, 32, x + 16*ib, L + 16*ib, 1, NULL);

}

scales[ib] = scale;

const float abs_scale = fabsf(scale);

if (abs_scale > max_abs_scale) {

max_abs_scale = abs_scale;

max_scale = scale;

}

}

I honestly can't remember if it was before fully commented out or if I traced the code to make_qx_quants() and found it wasn't being used.

It's definitely not using the sqrtf(sigma2 + x...) empirical regularisation even now though.

I was also theorizing about the possibility of measuring the impact of quantization of layers

The key thing here is you have to have some clear metric to optimise for and the current "perplexity for 512 tokens" method definitely isn't going to work well on recent models (and may actually make them much worse).

All the metrics that use the post-softmax probabilities actually throw away a lot of information about the effect of quantisation, and it may be much more sample efficient to take measurements on either the final hidden state or the pre-softmax logits.

I think deciding what we want to optimise is actually harder here than the actual optimisation process. I haven't time today, but will post about this tomorrow...

@bartowski Here is a discussion about this from last year:

https://github.com/ggml-org/llama.cpp/pull/6844#issuecomment-2192834093

Somewhere around the same time there was another discussion in a similar thread where I mentioned the idea of using a "surrogate model" instead of directly trying to optimise it:

https://en.wikipedia.org/wiki/Surrogate_model

The most appropriate being Gaussian Processes (aka "Bayesian Optimisation"):

https://en.m.wikipedia.org/wiki/Gaussian_process

or regression trees:

https://en.m.wikipedia.org/wiki/Decision_tree_learning

But again, it all comes down to "what are we trying to optimise?"...

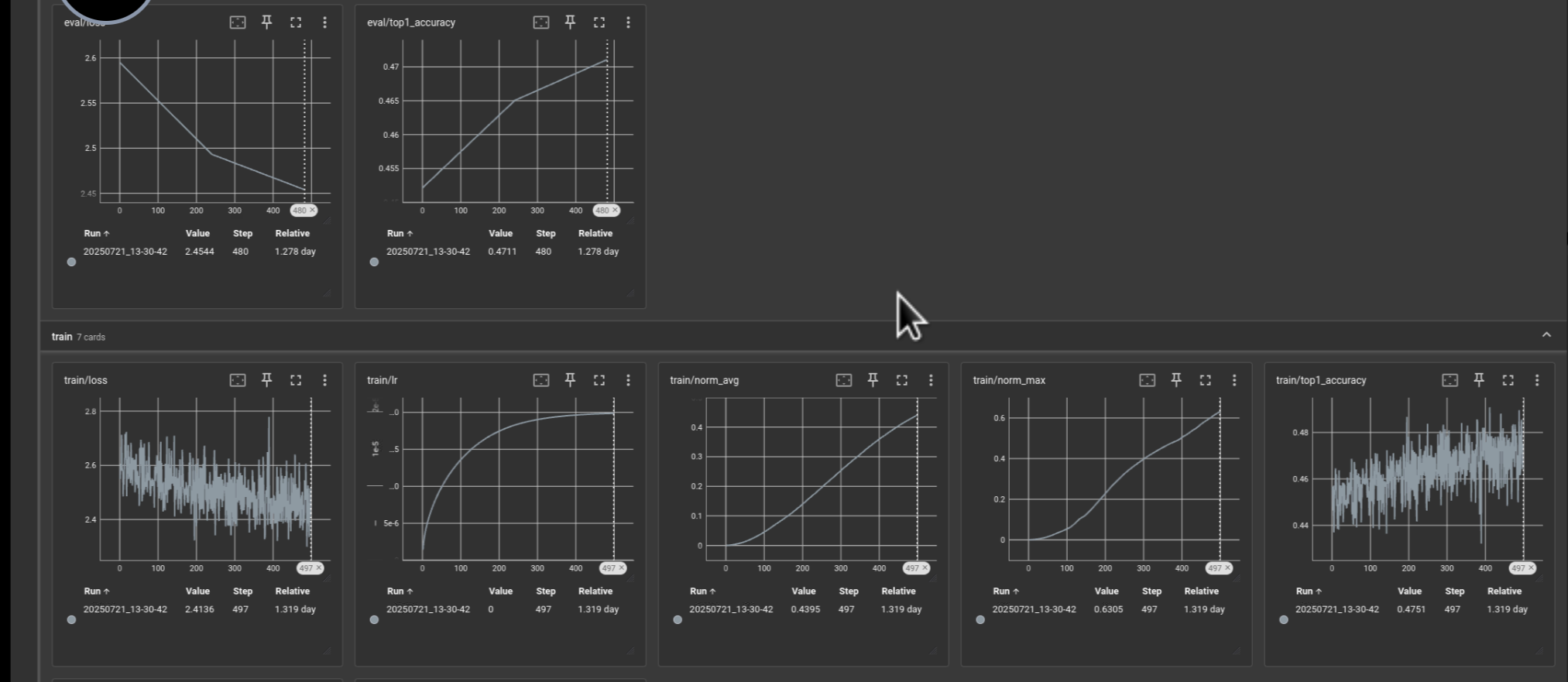

Man it's been painful to get the MLA branch fully working, but I think I've finally got it going and oh boy can this thing write some good Grimdark!

My custom BF16/Q6_K /Q5_K quant (480GB) runs at:

prompt eval time = 54856.58 ms / 1071 tokens ( 51.22 ms per token, 19.52 tokens per second)

eval time = 604165.18 ms / 1812 tokens ( 333.42 ms per token, 3.00 tokens per second)

and stays pretty consistent.

The equivalent BF16/Q4_K /Q4_K quant (365GB) runs quite a bit faster, but definitely doesn't seem quite a good.

Are you running this on Apple? What is bf16 used to quant, didn't think it was fast on anything but macs, and not at all on CUDA

But maybe it's for a small portion and it's better on CPU than I'm thinking (just know that imatrix is painfully slower on bf16 lol)

Are you running this on Apple? What is bf16 used to quant, didn't think it was fast on anything but macs, and not at all on CUDA

But maybe it's for a small portion and it's better on CPU than I'm thinking (just know that imatrix is painfully slower on bf16 lol)

I've had lots of problems with weird overflows and slowdowns and only just got it working tonight properly:

- The

BF16tensors are everything but the experts and on two A6000 GPUs. - The experts are

Q6_Kfor thedown_projandQ5_Kforup_projandgate_projand in RAM.

The BF16 PR for llama.cpp was merged a few weeks ago and seems to work fine. It can't use F16 for certain tensor(s) or it overflows, and any quant causes some weird slowdowns where the prompt processing takes 2.5x longer and the token generation about 1 tokens/s less (which is a lot when you're only getting 3 tokens/s!).

It's been a long slog getting it all working, but it's nuts how good it is at writing!

No other LLM I've ever tried can come even remotely close to being able to write chapters out of order like this:

USER

Varis adjusted the noose, its hemp fibers grinding beneath his calluses. “Last chance,” he said, voice like gravel dragged through mud. “Confess, and your soul stays your own.”

Jurl laughed—a wet, gurgling sound. “You’re knee-deep in it, Coldwater. ” The thing inside him twisted the boy’s lips into a grin too wide for his face. “The Great Wolf’s howlin’ again. The Dead’s Gate’s rusted through… ”

Turn this into the opening chapter of a Grimdark trilogy.

ASSISTANT

Thought for 272 seconds

> Okay, so I need to turn this dialogue and scene into the opening chapter of a Grimdark trilogy. Let me start by understanding what's given. There's Varis adjusting a noose, made of hemp, and his voice is described as gravelly. He's giving someone a last chance to confess. Then Jurl laughs, but it's a wet, gurgling sound, implying maybe he's possessed or something inside him. The entity mentions the Great Wolf and the Dead's Gate rusting through. > > Grimdark is typically dark, gritty, morally ambiguous. So I need to set a tone that's oppressive, maybe with elements of decay, hopelessness, and visceral imagery. The opening chapter should hook readers with tension and introduce the grim elements. > > First, I need to expand the scene. Where are they? Probably a place of execution, maybe a town square, or a desolate area. The setting should reflect the grimdark theme—maybe a dying town, surrounded by decay. Varis is an executioner or a lawman, but in a grimdark world, he might have his own flaws or dark past. > > Jurl is possessed by something, so there's a supernatural element. The Great Wolf could be a deity or a harbinger of doom. The Dead's Gate rusting through suggests a barrier between the living and dead is failing, which could be a major plot point for the trilogy. > > I should build the atmosphere with sensory details: the smell of rot, the sound of wind, the feel of the rough hemp. Maybe add some onlookers to show the community's state—perhaps they're fearful, desperate, or indifferent. > > Characterization: Varis is hardened, maybe weary. His calluses suggest years of this work. The thing inside Jurl is malevolent, taunting Varis with cryptic warnings. The dialogue hints at a larger conflict—Varis being involved in something bigger ("knee-deep in it"), so maybe he's part of a group that tried to prevent this, but failed. > > Plot progression: The execution goes wrong when Jurl's body transforms, the Dead's Gate opens, unleashing horrors. This sets off the events of the trilogy. Varis survives, now burdened with the knowledge that the apocalypse is coming, and he has to act. > > Structure-wise, start with the execution scene, build tension through dialogue and setting, then the supernatural event occurs, leading to chaos. End the chapter with Varis realizing the gravity and setting off on his quest. > > I need to make sure the prose is tight, with vivid, harsh imagery. Avoid info dumps, but sprinkle in world-building through details. Maybe include some flashbacks or internal thoughts from Varis to hint at his backstory without slowing the pace. > > Potential pitfalls: Over-explaining the supernatural elements too soon. Keep it mysterious. Also, ensuring the grimdark tone is consistent—no heroes, just flawed people in a harsh world. > > Possible additions: A secondary character, like a priest or another townsfolk, to interact with Varis, adding depth. Maybe show the town's desperation through their reactions. Also, after the Dead's Gate opens, describe the horrors unleashed in a visceral way to emphasize the stakes. > > Check for consistency in the setting—what season is it? Maybe autumn with decaying leaves, or winter with a bitter cold. The time of day could be dawn or dusk for symbolic effect. > > Make sure the action flows smoothly from the dialogue to the transformation, to the gate opening, and the aftermath. Keep sentences varied in length to control pacing—short, choppy sentences during intense moments, longer ones for description. > > Names: Varis Coldwater—sounds like a northern, rugged name. The Great Wolf could be a local legend or a real entity. Dead's Gate is a good name for a portal to the afterlife or a prison for the dead. > > End the chapter with a hook—maybe Varis taking a token from Jurl's body, or a vision of the Great Wolf, prompting him to act. Alternatively, the town being overrun, forcing him to flee, setting up the next chapter's journey.The gallows creaked like a dying man’s breath. Varis Coldwater stood atop the splintered platform, the noose in his hands as familiar as sin. Below, the town of Blackmoor huddled—a carcass of leaning timber and crumbling stone, its people gaunt shadows gathered in the square. They reeked of desperation, their eyes hollow as the sockets of the skulls nailed above the butcher’s stall. Autumn had stripped the oaks bare, and the wind carried the stench of rot from the moors, where the peat devoured the unwary.

“Last chance,” Varis growled, the hemp rough against his palms. He’d hanged seven men on this rope. Their faces blurred now, but their screams never did. “Confess, and your soul stays your own.”

Jurl dangled, wrists bound, his boyish frame too slight for the iron chains. Blood crusted his temple where the mob had struck him—before they lost their nerve. Before they saw the black veins spidering beneath his skin.

The thing inside Jurl laughed, a sound like drowned lungs heaving. “You’re knee-deep in it, Coldwater.” The boy’s jaw cracked sideways, teeth splintering as the grin split his face. “The Great Wolf’s howlin’ again. The Dead’s Gate’s rusted through…”

A woman in the crowd crossed herself. Old Tessa, the midwife. Her lips moved in a silent prayer to saints long forgotten. Varis didn’t believe in prayers. Only the work.

He yanked the lever.

The trapdoor crashed open—

—and Jurl twisted.

The boy’s body snapped taut mid-fall, suspended by nothing. Chains exploded, shrapnel shredding the air. A farmer screamed as a link buried itself in his thigh. The crowd scattered, but Varis stood frozen, watching as Jurl’s spine arched, ribs punching through flesh like antlers. The thing wearing his skin howled, a chorus of voices that scratched at the sky.

“YOU SHOULD HAVE LISTENED, HANGMAN.”

The earth shuddered. Graves in the churchyard split, skeletal hands clawing free. The air curdled, and the horizon rippled—a massive gate of tarnished iron materializing in the distance, its hinges weeping rust. Beyond it, shadows writhed. Hungry. Familiar.

Varis’s hand flew to the amulet beneath his shirt—a wolf’s fang, warm as a heartbeat. Mari’s fang. The last relic of the order that had burned with her.

“The Gate…” Old Tessa whispered, collapsing to her knees. “*Gods save us, it’s open.*”

Jurl’s corpse hit the ground, deflated. But the gate remained. And the shadows swarmed.

Varis was running before he’d decided to move, snatching his axe from the execution block. A child’s wail cut through the chaos—a girl trapped beneath a cart, her mother’s body splayed beside her. He lunged, hauling the cart aside, but the shadows were faster. They coiled around the girl, viscous as tar, and her scream dissolved into a wet gurgle.

“Bastards!” he roared, swinging the axe. The blade passed through the darkness harmlessly.

Mari’s voice, sharp in his memory: “Steel won’t bite the dead, you fool. Only blood. Only sacrifice.”

The amulet burned.

He fled, the gate’s howl chasing him into the moors. Behind, Blackmoor burned, its people reduced to puppets jerking on shadow-strings. He didn’t look back.

Coward, the wind hissed. Cripple.

His leg—the old injury from the siege at Veldmark—throbbed. But he ran, the fang’s heat searing his chest.

The Great Wolf was coming. And Varis Coldwater, last of the Grey Order, would need to remember how to bite.

End of Chapter One

Hook: The chapter closes with Varis escaping into the moors, the amulet (a relic tied to the Great Wolf) guiding him toward a forgotten shrine. His internal conflict—guilt over past failures vs. the ruthless pragmatism needed to survive—sets the tone. The Dead’s Gate’s opening unleashes minor horrors here, but hints at greater threats (the Wolf, political rot in the realm). The next chapter could introduce a survivor from the town (Old Tessa?) or a rival hunter sent to silence him, escalating tension.

USER

Can you now write the prologue of the book which should come before this chapter. It should be a slower pace and foreshadow something much further into the world's history than happened in chapter 1.

ASSISTANT

Thought for 206 seconds