---
license: mit
pipeline_tag: text-to-speech
language:
- en
tags:
- gguf-connector
---

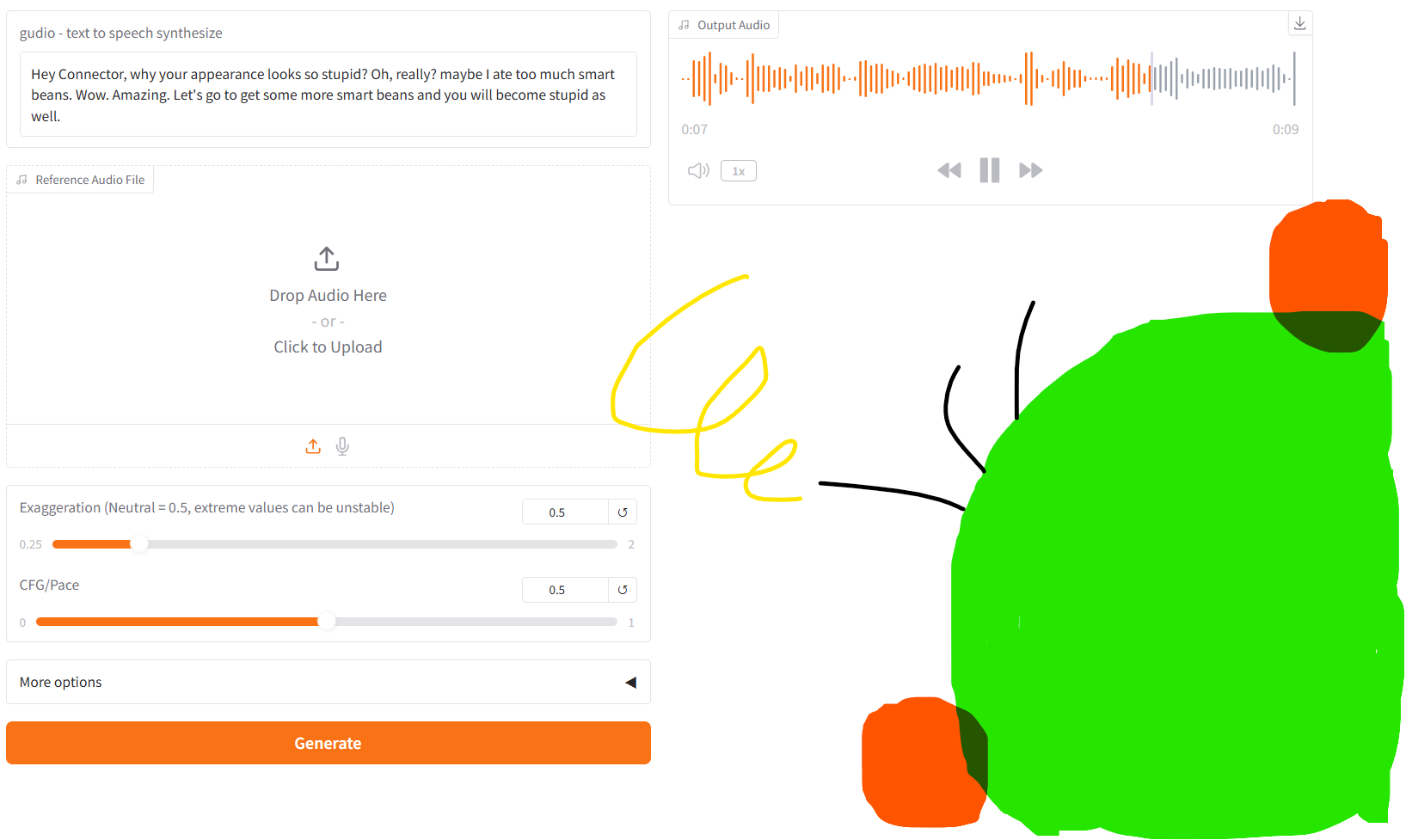

# gudio

- text-to-speech synthesis

### **run it with gguf-connector**

```
ggc g2
```

| Prompt | Audio Sample |
|--------|---------------|
|`Hey Connector, why your appearance looks so stupid?` `Oh, really? maybe I ate too much smart beans.` `Wow. Amazing.` `Let's go to get some more smart beans and you will become stupid as well.` | 🎧 **audio-sample-1** |
|`Hey, why you folks always act together like a wolf pack?` `Oh, really? we just hang out for good food and share the bills.` `Wow, amazing, a pig pack then!` `You are the smartest joke maker in this universe.` | 🎧 **audio-sample-2** |
|`Suddenly the plane's engines began failing, and the pilot says there isn't much time, and he'll keep the plane in the air as long as he can and told his two passengers to take the only two parachutes on board and bail out. The world's smartest man immediately took a parachute and said "I'm the world's smartest man! The world needs me, so I can't die here!", and then jumped out of the plane. The pilot tells the hippie to hurry up and take the other parachute, because there aren't any more. And the hippie says "Relax man. We'll be fine. The world's smartest man took my backpack."` | 🎧 **audio-sample-3** |

### **review/reference**

- simply execute the command (`ggc g2`) above in a console/terminal

- when prompted, select a `model` (t3) GGUF and a `clip` (s3) GGUF from the files in the current directory (see example below)

> Using device: cuda (auto-detection; based on your system/setting)
>
> GGUF file(s) available. Select which one for **MODEL (t3)**:
>
> 1. model_t3-f16-tts-q2_k.gguf
> 2. model_t3-f16-tts-q3_k_m.gguf
> 3. model_t3-f16-tts-q4_k_m.gguf (recommended)
> 4. model_t3-f16-tts-q5_k_m.gguf
> 5. model_t3-f16-tts-q6_k.gguf
> 6. s3_clip-bf16.gguf
> 7. s3_clip-f16.gguf
> 8. s3_clip-f32.gguf
>
> Enter your choice (1 to 8): 3
>
> t3 file: model_t3-f16-tts-q4_k_m.gguf is selected!
>
> GGUF file(s) available. Select which one for **CLIP (s3)**:
>
> 1. model_t3-f16-tts-q2_k.gguf
> 2. model_t3-f16-tts-q3_k_m.gguf
> 3. model_t3-f16-tts-q4_k_m.gguf
> 4. model_t3-f16-tts-q5_k_m.gguf
> 5. model_t3-f16-tts-q6_k.gguf
> 6. s3_clip-bf16.gguf (recommended)
> 7. s3_clip-f16.gguf (for non-cuda user)
> 8. s3_clip-f32.gguf
>
> Enter your choice (1 to 8): _
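
Before running `ggc g2`, a quick pre-check can confirm that the current directory holds both kinds of GGUF file the prompts ask for. The sketch below is a hypothetical helper (`check_gudio_files` is not part of gguf-connector), and it assumes the `model_t3*.gguf` and `s3_clip*.gguf` naming seen in the example listing.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check, not part of gguf-connector.
# Assumption: t3 model files match model_t3*.gguf and clip files match
# s3_clip*.gguf, as in the example listing; the connector itself may
# accept other names.

check_gudio_files() {
  local dir="${1:-.}"
  local t3_count=0
  local clip_count=0
  local f

  # Count t3 model candidates (an unmatched glob stays literal, so -e skips it)
  for f in "$dir"/model_t3*.gguf; do
    if [ -e "$f" ]; then t3_count=$((t3_count + 1)); fi
  done

  # Count s3 clip candidates
  for f in "$dir"/s3_clip*.gguf; do
    if [ -e "$f" ]; then clip_count=$((clip_count + 1)); fi
  done

  echo "t3 model files: $t3_count"
  echo "s3 clip files: $clip_count"

  # Both prompts need at least one file of each kind
  if [ "$t3_count" -gt 0 ] && [ "$clip_count" -gt 0 ]; then
    echo "ready: run 'ggc g2' in $dir"
    return 0
  fi
  echo "missing: need at least one model_t3*.gguf and one s3_clip*.gguf" >&2
  return 1
}
```

For example, `check_gudio_files . && ggc g2` would only launch the connector when both file types are present.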

- then open the lazy webui at the local URL: http://127.0.0.1:7860

- gguf-connector ([pypi](https://pypi.org/project/gguf-connector)|[repo](https://github.com/calcuis/gguf-connector))
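
Once the connector is running, the webui may take a moment to come up. The hypothetical `wait_for_webui` helper below (not part of gguf-connector) polls http://127.0.0.1:7860 with `curl` until it responds; the 60-second limit and 2-second interval are arbitrary assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of gguf-connector.
# Polls the local webui URL until it answers or the time limit passes.
# Default URL is the one given above; limit and interval are assumptions.

wait_for_webui() {
  local url="${1:-http://127.0.0.1:7860}"
  local limit="${2:-60}"
  local waited=0

  while [ "$waited" -lt "$limit" ]; do
    # -s: silent, -f: fail on HTTP errors, -o /dev/null: discard the page body,
    # --max-time 2: give up on a single request after 2 seconds
    if curl -sf -o /dev/null --max-time 2 "$url"; then
      echo "webui is up at $url"
      return 0
    fi
    sleep 2
    waited=$((waited + 2))
  done

  echo "no response from $url after ${limit}s" >&2
  return 1
}
```

For example, run `wait_for_webui` in a second terminal after `ggc g2` has loaded both files, then open the URL in a browser.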