Commit c5694b0 · 1 Parent(s): fd3f5df

Add suggested LICENSE and tags

README.md CHANGED

@@ -1,41 +1,47 @@

---
license: openrail
tags:
- diffusers
- stable-diffusion
- controlnet
---

# Face landmark ControlNet

## ControlNet with Face landmark

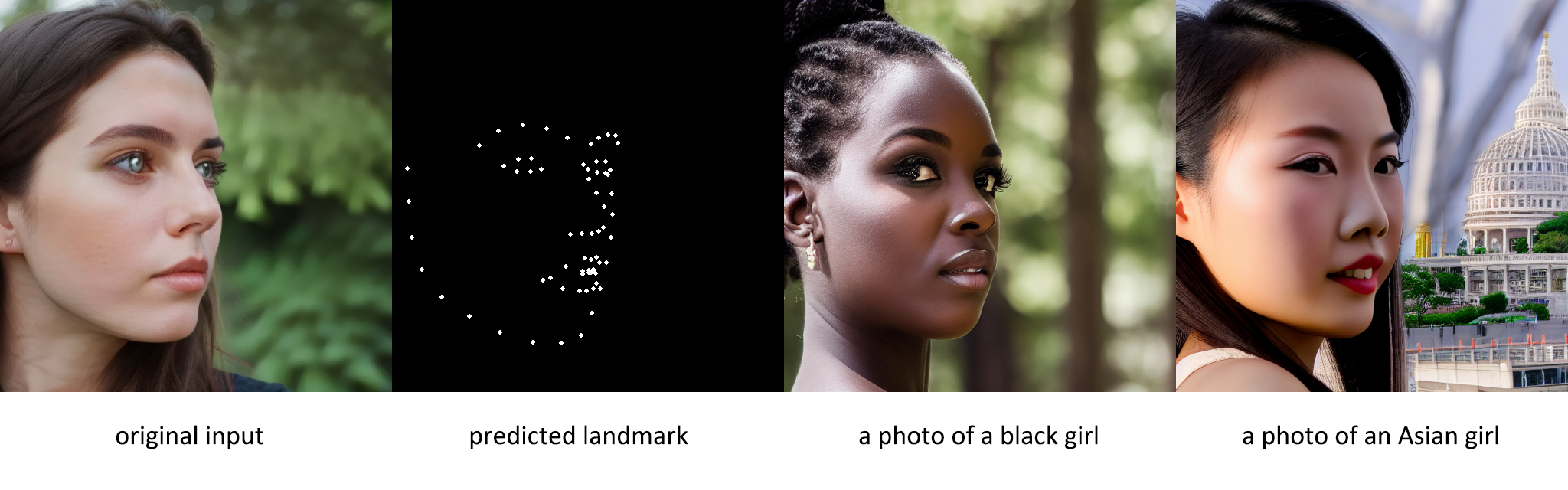

I trained a [ControlNet](https://github.com/lllyasviel/ControlNet) model (the architecture proposed by lllyasviel) on a face dataset. Using facial landmarks as the condition gives finer control over the generated face.

Currently, the base model is Stable Diffusion 1.5 and the face landmark detector is dlib (feel free to replace it with a better detector if you have one). The checkpoint can be found in the `models` folder.

**Create conda environment:**

```sh
# Create and activate the conda environment defined in environment.yaml
conda env create -f environment.yaml
conda activate control

# Download and unpack dlib's 68-point face landmark model
wget http://dlib.net/files/shape_predictor_68_face_landmarks.dat.bz2
bzip2 -d shape_predictor_68_face_landmarks.dat.bz2
```
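
On systems without `wget` or `bzip2` (a stock Windows install, for example), the download-and-unpack step can be approximated with the Python standard library. This is a minimal sketch and not part of the repository: the function names `decompress_bz2` and `fetch_landmark_model` are hypothetical, and only the URL and file name come from the commands above.

```python
import bz2
import shutil
import urllib.request
from pathlib import Path

# URL taken from the wget command above.
MODEL_URL = "http://dlib.net/files/shape_predictor_68_face_landmarks.dat.bz2"


def decompress_bz2(archive: Path) -> Path:
    """Unpack a .bz2 archive next to itself and return the unpacked path.

    Unlike `bzip2 -d`, the archive itself is kept.
    """
    if archive.suffix != ".bz2":
        raise ValueError(f"expected a .bz2 file, got {archive}")
    target = archive.with_suffix("")  # drops only the trailing ".bz2"
    with bz2.open(archive, "rb") as src, open(target, "wb") as dst:
        shutil.copyfileobj(src, dst)
    return target


def fetch_landmark_model(dest_dir: Path = Path(".")) -> Path:
    """Download the 68-point predictor into dest_dir (if missing) and unpack it."""
    archive = dest_dir / MODEL_URL.rsplit("/", 1)[-1]
    if not archive.exists():
        urllib.request.urlretrieve(MODEL_URL, archive)
    return decompress_bz2(archive)
```

Calling `fetch_landmark_model()` from the repository root should leave `shape_predictor_68_face_landmarks.dat` in the current directory, the same place the shell commands put it.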

**Test it with:**

```sh
python gradio_landmark2image.py
```

## Generate faces with identical pose and expression

To create a new face, input an image and extract the facial landmarks from it. These landmarks are then used as the condition for redrawing the face, so the pose and expression of the original are retained.

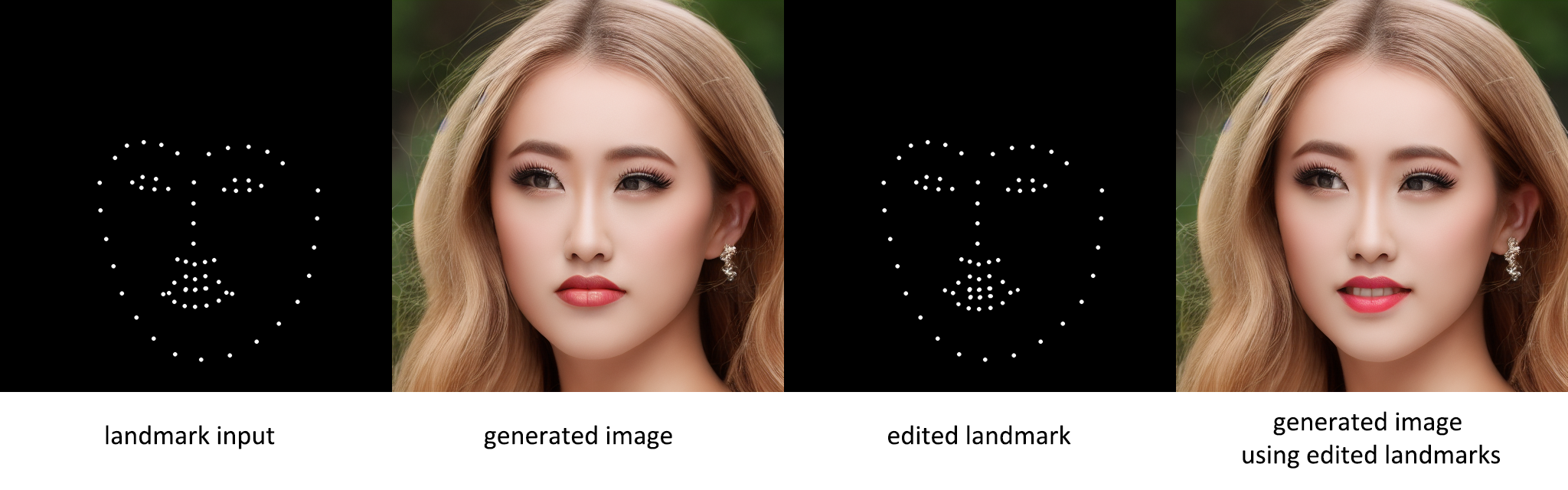
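
The landmark extraction step described in this section can be sketched with dlib's Python bindings. How `gradio_landmark2image.py` actually calls dlib is not shown here, so treat this as an assumption: the helper names `shape_to_points` and `extract_landmarks` are hypothetical, and the default predictor path simply matches the file unpacked during setup.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def shape_to_points(shape) -> List[Point]:
    """Convert a dlib full_object_detection into a list of (x, y) tuples."""
    return [(shape.part(i).x, shape.part(i).y) for i in range(shape.num_parts)]


def extract_landmarks(
    image_path: str,
    predictor_path: str = "shape_predictor_68_face_landmarks.dat",
) -> List[List[Point]]:
    """Return one list of landmark points per face detected in the image."""
    import dlib  # imported lazily so shape_to_points works without dlib installed

    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(predictor_path)
    image = dlib.load_rgb_image(image_path)
    faces = detector(image, 1)  # 1 = upsample the image once to find smaller faces
    return [shape_to_points(predictor(image, face)) for face in faces]
```

Each returned list holds the 68 points for one face. Drawing them onto a blank canvas would give the kind of landmark image a ControlNet can take as its condition.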

## Control the facial expressions and poses of generated images

For each image we generate, we know the prompt and random seed that produced it. Keeping the prompt and seed fixed, we can edit the landmarks to change the facial expression and pose of the generated result.
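
As an illustration of a landmark edit, the sketch below lowers the lower lip to open the mouth. It assumes the 68-point layout of dlib's predictor, where points 55-59 are the lower outer lip and 65-67 the lower inner lip. The function name `open_mouth` and the fixed pixel shift are hypothetical and not taken from the repository.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]

# Index ranges in the 68-point layout (mouth corners 48, 54, 60, 64 stay fixed).
LOWER_OUTER_LIP = range(55, 60)  # points 55-59
LOWER_INNER_LIP = range(65, 68)  # points 65-67


def open_mouth(points: Sequence[Point], pixels: int) -> List[Point]:
    """Return a copy of points with the lower lip shifted down by `pixels`.

    Image y coordinates grow downward, so adding to y moves a point down.
    """
    if len(points) != 68:
        raise ValueError("expected 68 landmark points")
    lower_lip = set(LOWER_OUTER_LIP) | set(LOWER_INNER_LIP)
    return [
        (x, y + pixels) if i in lower_lip else (x, y)
        for i, (x, y) in enumerate(points)
    ]
```

The edited points would then be rendered as the new condition image and passed to the model with the original prompt and seed. A more realistic edit would probably also move the chin and jaw points.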

## Credits

**Thanks to lllyasviel for his amazing work on [https://github.com/lllyasviel/ControlNet](https://github.com/lllyasviel/ControlNet)! This project is totally based on his work.**

**This is just a proof of concept and should not be used for any risky purposes.**
