Upload folder using huggingface_hub

- .gitattributes +1 -0

- README.md +2 -2

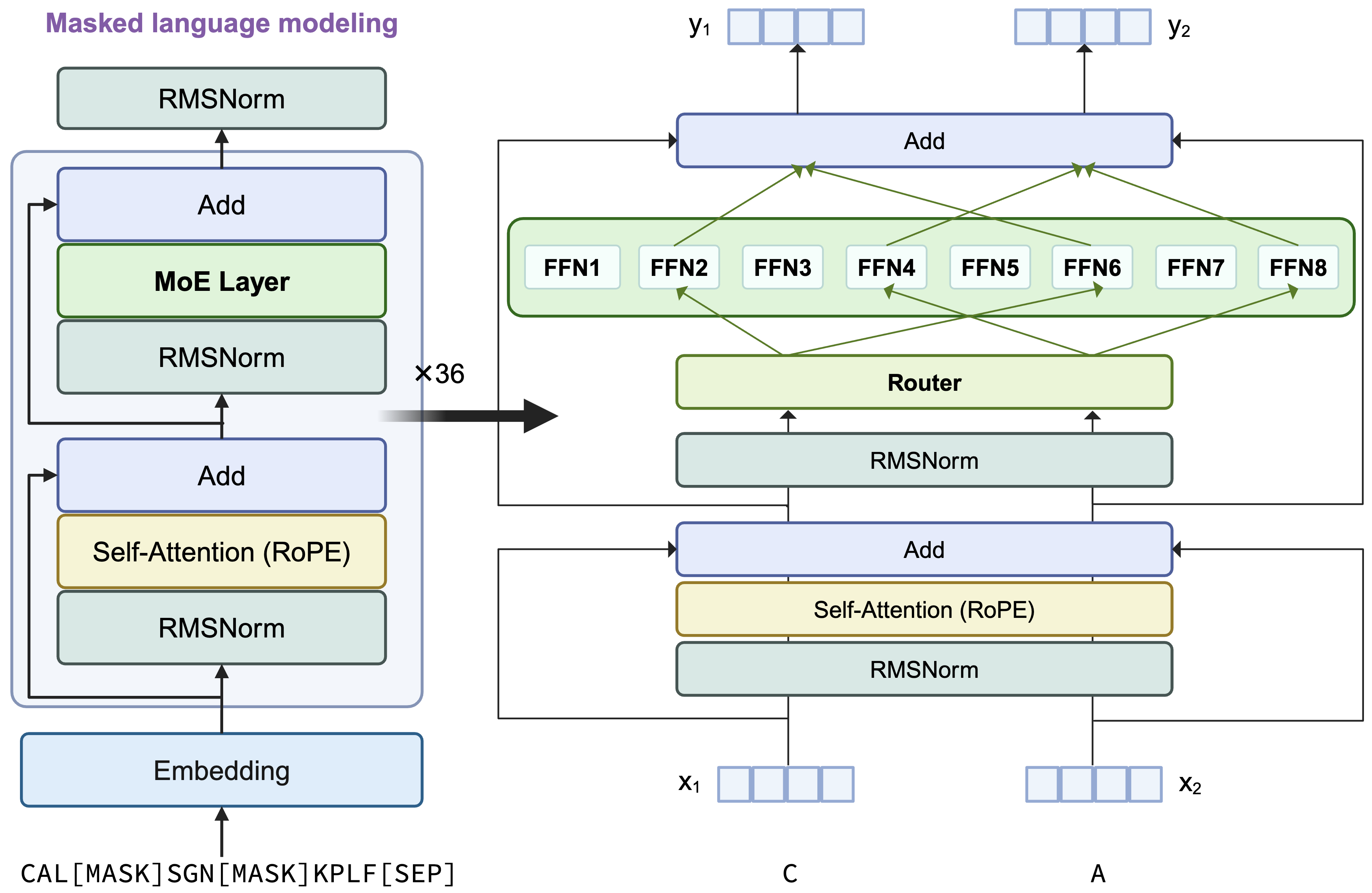

- proteinmoe_architecture.png +3 -0

.gitattributes

CHANGED

    @@ -34,3 +34,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
     *.zst filter=lfs diff=lfs merge=lfs -text
     *tfevents* filter=lfs diff=lfs merge=lfs -text
     inverse_folding.png filter=lfs diff=lfs merge=lfs -text
    +proteinmoe_architecture.png filter=lfs diff=lfs merge=lfs -text
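The added `.gitattributes` line routes `proteinmoe_architecture.png` through Git LFS. As a rough illustration of what these entries mean, the sketch below checks whether a path would be picked up by the slash-free patterns in this hunk. It is not Git's actual matcher: the helper names `lfs_patterns` and `is_lfs_tracked` are hypothetical, and matching the file's basename with `fnmatchcase` only approximates Git's rules for patterns that contain no `/`.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# The four entries visible in the hunk (the new one is last).
GITATTRIBUTES = """\
*.zst filter=lfs diff=lfs merge=lfs -text
*tfevents* filter=lfs diff=lfs merge=lfs -text
inverse_folding.png filter=lfs diff=lfs merge=lfs -text
proteinmoe_architecture.png filter=lfs diff=lfs merge=lfs -text
"""


def lfs_patterns(text):
    """Return the patterns whose attributes include filter=lfs."""
    patterns = []
    for line in text.splitlines():
        parts = line.split()
        # Skip blank lines and comments.
        if not parts or parts[0].startswith("#"):
            continue
        if "filter=lfs" in parts[1:]:
            patterns.append(parts[0])
    return patterns


def is_lfs_tracked(path, patterns):
    """Approximate check: slash-free patterns are matched against the basename.

    Assumption: patterns containing '/' (such as saved_model/**/*) are ignored
    here, because Git anchors them differently.
    """
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, p) for p in patterns if "/" not in p)


patterns = lfs_patterns(GITATTRIBUTES)
print(is_lfs_tracked("proteinmoe_architecture.png", patterns))
print(is_lfs_tracked("README.md", patterns))
```

For an authoritative answer inside a real checkout, `git check-attr filter -- <path>` reports the attribute Git itself resolves.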

README.md

CHANGED

    @@ -66,8 +66,8 @@ We fine-tuned a pretrained masked language model using MSA data by concatenating
     | Sequence length | 2048 | 12800 | 12800 |
     | Per Device Micro Batch Size | 1 | 1 | 1 |
     | Precision | Mixed FP32-FP16 | Mixed FP32-FP16 | Mixed FP32-FP16 |
    -
    -
    +| LR | [5e-6,5e-5] | [1e-6, 1e-5] | 1e-5 |
    +| Num Tokens | 10 billion | 100 billion | 80 billion |
     
     ### Tokenization
     
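The new `Num Tokens` row can be read together with the `Sequence length` row to get a feel for the scale of each run. The sketch below is back-of-the-envelope arithmetic, assuming every training sample is filled to the full sequence length (the README hunk does not confirm this). The column headers are not visible in this hunk, so the three columns are referred to by position only, and `full_sequences` is a hypothetical helper.

```python
# Values copied from the table rows in the README hunk, by column position.
SEQ_LEN = [2048, 12800, 12800]
NUM_TOKENS = [10_000_000_000, 100_000_000_000, 80_000_000_000]


def full_sequences(num_tokens, seq_len):
    """Number of full-length sequences that fit in the token budget.

    Assumption: each sample occupies exactly seq_len tokens.
    """
    return num_tokens // seq_len


for column, (tokens, seq_len) in enumerate(zip(NUM_TOKENS, SEQ_LEN), start=1):
    print(f"column {column}: {full_sequences(tokens, seq_len):,} sequences")
```

With a per-device micro batch size of 1, each of these sequences corresponds to one per-device forward pass; the number of optimizer steps also depends on the device count and gradient accumulation, neither of which appears in this hunk.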

proteinmoe_architecture.png

ADDED

Binary file stored with Git LFS.