End of training

Browse files- README.md +3 -2

- all_results.json +11 -11

- eval_results.json +6 -6

- train_results.json +6 -6

- trainer_state.json +106 -21

- training_eval_loss.png +0 -0

- training_loss.png +0 -0

README.md

CHANGED

|

@@ -4,6 +4,7 @@ license: apache-2.0

|

|

| 4 |

base_model: Qwen/Qwen2.5-32B

|

| 5 |

tags:

|

| 6 |

- llama-factory

|

|

|

|

| 7 |

- generated_from_trainer

|

| 8 |

model-index:

|

| 9 |

- name: pretrain

|

|

@@ -15,9 +16,9 @@ should probably proofread and complete it, then remove this comment. -->

|

|

| 15 |

|

| 16 |

# pretrain

|

| 17 |

|

| 18 |

-

This model is a fine-tuned version of [Qwen/Qwen2.5-32B](https://huggingface.co/Qwen/Qwen2.5-32B) on

|

| 19 |

It achieves the following results on the evaluation set:

|

| 20 |

-

- Loss: 1.

|

| 21 |

|

| 22 |

## Model description

|

| 23 |

|

|

|

|

| 4 |

base_model: Qwen/Qwen2.5-32B

|

| 5 |

tags:

|

| 6 |

- llama-factory

|

| 7 |

+

- full

|

| 8 |

- generated_from_trainer

|

| 9 |

model-index:

|

| 10 |

- name: pretrain

|

|

|

|

| 16 |

|

| 17 |

# pretrain

|

| 18 |

|

| 19 |

+

This model is a fine-tuned version of [Qwen/Qwen2.5-32B](https://huggingface.co/Qwen/Qwen2.5-32B) on the openalex_small dataset.

|

| 20 |

It achieves the following results on the evaluation set:

|

| 21 |

+

- Loss: 1.1904

|

| 22 |

|

| 23 |

## Model description

|

| 24 |

|

all_results.json

CHANGED

|

@@ -1,13 +1,13 @@

|

|

| 1 |

{

|

| 2 |

-

"epoch": 0.

|

| 3 |

-

"eval_loss": 1.

|

| 4 |

-

"eval_runtime":

|

| 5 |

-

"eval_samples_per_second":

|

| 6 |

-

"eval_steps_per_second":

|

| 7 |

-

"perplexity":

|

| 8 |

-

"total_flos":

|

| 9 |

-

"train_loss": 1.

|

| 10 |

-

"train_runtime":

|

| 11 |

-

"train_samples_per_second": 0.

|

| 12 |

-

"train_steps_per_second": 0.

|

| 13 |

}

|

|

|

|

| 1 |

{

|

| 2 |

+

"epoch": 0.9454545454545454,

|

| 3 |

+

"eval_loss": 1.1903643608093262,

|

| 4 |

+

"eval_runtime": 40.1525,

|

| 5 |

+

"eval_samples_per_second": 2.441,

|

| 6 |

+

"eval_steps_per_second": 0.324,

|

| 7 |

+

"perplexity": 3.2882791091731414,

|

| 8 |

+

"total_flos": 45330292801536.0,

|

| 9 |

+

"train_loss": 1.3396454407618597,

|

| 10 |

+

"train_runtime": 2207.3438,

|

| 11 |

+

"train_samples_per_second": 0.398,

|

| 12 |

+

"train_steps_per_second": 0.006

|

| 13 |

}

|

eval_results.json

CHANGED

|

@@ -1,8 +1,8 @@

|

|

| 1 |

{

|

| 2 |

-

"epoch":

|

| 3 |

-

"eval_loss": 1.

|

| 4 |

-

"eval_runtime":

|

| 5 |

-

"eval_samples_per_second":

|

| 6 |

-

"eval_steps_per_second":

|

| 7 |

-

"perplexity":

|

| 8 |

}

|

|

|

|

| 1 |

{

|

| 2 |

+

"epoch": 0.9454545454545454,

|

| 3 |

+

"eval_loss": 1.1903643608093262,

|

| 4 |

+

"eval_runtime": 40.1525,

|

| 5 |

+

"eval_samples_per_second": 2.441,

|

| 6 |

+

"eval_steps_per_second": 0.324,

|

| 7 |

+

"perplexity": 3.2882791091731414

|

| 8 |

}

|

train_results.json

CHANGED

|

@@ -1,8 +1,8 @@

|

|

| 1 |

{

|

| 2 |

-

"epoch": 0.

|

| 3 |

-

"total_flos":

|

| 4 |

-

"train_loss": 1.

|

| 5 |

-

"train_runtime":

|

| 6 |

-

"train_samples_per_second": 0.

|

| 7 |

-

"train_steps_per_second": 0.

|

| 8 |

}

|

|

|

|

| 1 |

{

|

| 2 |

+

"epoch": 0.9454545454545454,

|

| 3 |

+

"total_flos": 45330292801536.0,

|

| 4 |

+

"train_loss": 1.3396454407618597,

|

| 5 |

+

"train_runtime": 2207.3438,

|

| 6 |

+

"train_samples_per_second": 0.398,

|

| 7 |

+

"train_steps_per_second": 0.006

|

| 8 |

}

|

trainer_state.json

CHANGED

|

@@ -1,42 +1,127 @@

|

|

| 1 |

{

|

| 2 |

"best_metric": null,

|

| 3 |

"best_model_checkpoint": null,

|

| 4 |

-

"epoch": 0.

|

| 5 |

-

"eval_steps":

|

| 6 |

-

"global_step":

|

| 7 |

"is_hyper_param_search": false,

|

| 8 |

"is_local_process_zero": true,

|

| 9 |

"is_world_process_zero": true,

|

| 10 |

"log_history": [

|

| 11 |

{

|

| 12 |

-

"epoch": 0.

|

| 13 |

-

"grad_norm": 0.

|

| 14 |

-

"learning_rate":

|

| 15 |

-

"loss": 1.

|

| 16 |

"step": 1

|

| 17 |

},

|

| 18 |

{

|

| 19 |

-

"epoch": 0.

|

| 20 |

-

"grad_norm": 0.

|

| 21 |

-

"learning_rate": 0.

|

| 22 |

-

"loss": 1.

|

| 23 |

"step": 2

|

| 24 |

},

|

| 25 |

{

|

| 26 |

-

"epoch": 0.

|

| 27 |

-

"

|

| 28 |

-

"

|

| 29 |

-

"

|

| 30 |

-

"

|

| 31 |

-

|

| 32 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

}

|

| 34 |

],

|

| 35 |

"logging_steps": 1,

|

| 36 |

-

"max_steps":

|

| 37 |

"num_input_tokens_seen": 0,

|

| 38 |

"num_train_epochs": 1,

|

| 39 |

-

"save_steps":

|

| 40 |

"stateful_callbacks": {

|

| 41 |

"TrainerControl": {

|

| 42 |

"args": {

|

|

@@ -49,7 +134,7 @@

|

|

| 49 |

"attributes": {}

|

| 50 |

}

|

| 51 |

},

|

| 52 |

-

"total_flos":

|

| 53 |

"train_batch_size": 1,

|

| 54 |

"trial_name": null,

|

| 55 |

"trial_params": null

|

|

|

|

| 1 |

{

|

| 2 |

"best_metric": null,

|

| 3 |

"best_model_checkpoint": null,

|

| 4 |

+

"epoch": 0.9454545454545454,

|

| 5 |

+

"eval_steps": 10,

|

| 6 |

+

"global_step": 13,

|

| 7 |

"is_hyper_param_search": false,

|

| 8 |

"is_local_process_zero": true,

|

| 9 |

"is_world_process_zero": true,

|

| 10 |

"log_history": [

|

| 11 |

{

|

| 12 |

+

"epoch": 0.07272727272727272,

|

| 13 |

+

"grad_norm": 0.09205172210931778,

|

| 14 |

+

"learning_rate": 5e-05,

|

| 15 |

+

"loss": 1.2196,

|

| 16 |

"step": 1

|

| 17 |

},

|

| 18 |

{

|

| 19 |

+

"epoch": 0.14545454545454545,

|

| 20 |

+

"grad_norm": 0.08487003296613693,

|

| 21 |

+

"learning_rate": 0.0001,

|

| 22 |

+

"loss": 1.1877,

|

| 23 |

"step": 2

|

| 24 |

},

|

| 25 |

{

|

| 26 |

+

"epoch": 0.21818181818181817,

|

| 27 |

+

"grad_norm": 0.6594376564025879,

|

| 28 |

+

"learning_rate": 9.797464868072488e-05,

|

| 29 |

+

"loss": 1.3252,

|

| 30 |

+

"step": 3

|

| 31 |

+

},

|

| 32 |

+

{

|

| 33 |

+

"epoch": 0.2909090909090909,

|

| 34 |

+

"grad_norm": 1.960582971572876,

|

| 35 |

+

"learning_rate": 9.206267664155907e-05,

|

| 36 |

+

"loss": 2.0162,

|

| 37 |

+

"step": 4

|

| 38 |

+

},

|

| 39 |

+

{

|

| 40 |

+

"epoch": 0.36363636363636365,

|

| 41 |

+

"grad_norm": 1.3530547618865967,

|

| 42 |

+

"learning_rate": 8.274303669726426e-05,

|

| 43 |

+

"loss": 1.5829,

|

| 44 |

+

"step": 5

|

| 45 |

+

},

|

| 46 |

+

{

|

| 47 |

+

"epoch": 0.43636363636363634,

|

| 48 |

+

"grad_norm": 1.9950076341629028,

|

| 49 |

+

"learning_rate": 7.077075065009433e-05,

|

| 50 |

+

"loss": 1.4982,

|

| 51 |

+

"step": 6

|

| 52 |

+

},

|

| 53 |

+

{

|

| 54 |

+

"epoch": 0.509090909090909,

|

| 55 |

+

"grad_norm": 0.38373228907585144,

|

| 56 |

+

"learning_rate": 5.7115741913664264e-05,

|

| 57 |

+

"loss": 1.4252,

|

| 58 |

+

"step": 7

|

| 59 |

+

},

|

| 60 |

+

{

|

| 61 |

+

"epoch": 0.5818181818181818,

|

| 62 |

+

"grad_norm": 0.14959090948104858,

|

| 63 |

+

"learning_rate": 4.288425808633575e-05,

|

| 64 |

+

"loss": 1.1972,

|

| 65 |

+

"step": 8

|

| 66 |

+

},

|

| 67 |

+

{

|

| 68 |

+

"epoch": 0.6545454545454545,

|

| 69 |

+

"grad_norm": 0.10391217470169067,

|

| 70 |

+

"learning_rate": 2.9229249349905684e-05,

|

| 71 |

+

"loss": 1.2054,

|

| 72 |

+

"step": 9

|

| 73 |

+

},

|

| 74 |

+

{

|

| 75 |

+

"epoch": 0.7272727272727273,

|

| 76 |

+

"grad_norm": 0.0690321996808052,

|

| 77 |

+

"learning_rate": 1.725696330273575e-05,

|

| 78 |

+

"loss": 1.168,

|

| 79 |

+

"step": 10

|

| 80 |

+

},

|

| 81 |

+

{

|

| 82 |

+

"epoch": 0.7272727272727273,

|

| 83 |

+

"eval_loss": 1.1994872093200684,

|

| 84 |

+

"eval_runtime": 40.5127,

|

| 85 |

+

"eval_samples_per_second": 2.419,

|

| 86 |

+

"eval_steps_per_second": 0.321,

|

| 87 |

+

"step": 10

|

| 88 |

+

},

|

| 89 |

+

{

|

| 90 |

+

"epoch": 0.8,

|

| 91 |

+

"grad_norm": 0.0676456168293953,

|

| 92 |

+

"learning_rate": 7.937323358440935e-06,

|

| 93 |

+

"loss": 1.1932,

|

| 94 |

+

"step": 11

|

| 95 |

+

},

|

| 96 |

+

{

|

| 97 |

+

"epoch": 0.8727272727272727,

|

| 98 |

+

"grad_norm": 0.061955519020557404,

|

| 99 |

+

"learning_rate": 2.0253513192751373e-06,

|

| 100 |

+

"loss": 1.2116,

|

| 101 |

+

"step": 12

|

| 102 |

+

},

|

| 103 |

+

{

|

| 104 |

+

"epoch": 0.9454545454545454,

|

| 105 |

+

"grad_norm": 0.059567924588918686,

|

| 106 |

+

"learning_rate": 0.0,

|

| 107 |

+

"loss": 1.1849,

|

| 108 |

+

"step": 13

|

| 109 |

+

},

|

| 110 |

+

{

|

| 111 |

+

"epoch": 0.9454545454545454,

|

| 112 |

+

"step": 13,

|

| 113 |

+

"total_flos": 45330292801536.0,

|

| 114 |

+

"train_loss": 1.3396454407618597,

|

| 115 |

+

"train_runtime": 2207.3438,

|

| 116 |

+

"train_samples_per_second": 0.398,

|

| 117 |

+

"train_steps_per_second": 0.006

|

| 118 |

}

|

| 119 |

],

|

| 120 |

"logging_steps": 1,

|

| 121 |

+

"max_steps": 13,

|

| 122 |

"num_input_tokens_seen": 0,

|

| 123 |

"num_train_epochs": 1,

|

| 124 |

+

"save_steps": 100,

|

| 125 |

"stateful_callbacks": {

|

| 126 |

"TrainerControl": {

|

| 127 |

"args": {

|

|

|

|

| 134 |

"attributes": {}

|

| 135 |

}

|

| 136 |

},

|

| 137 |

+

"total_flos": 45330292801536.0,

|

| 138 |

"train_batch_size": 1,

|

| 139 |

"trial_name": null,

|

| 140 |

"trial_params": null

|

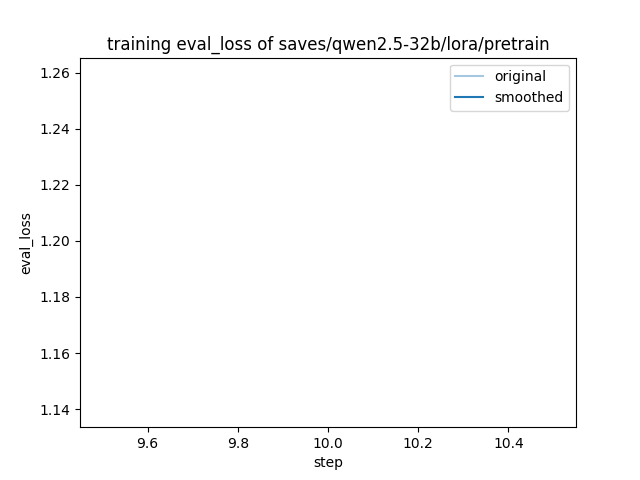

training_eval_loss.png

CHANGED

|

|

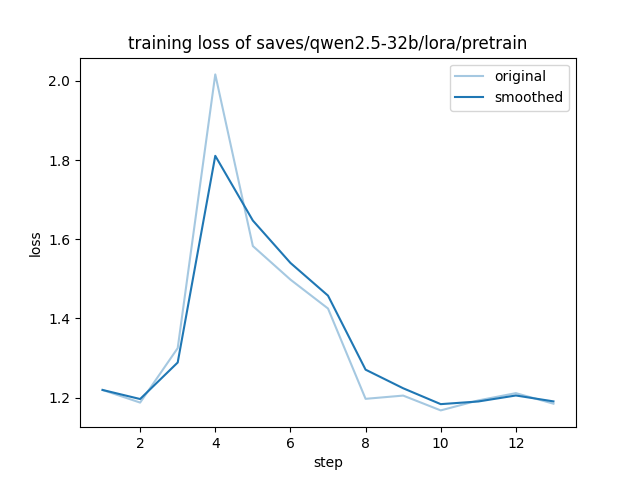

training_loss.png

CHANGED

|

|