Datasets:

Update README.md

Browse files

README.md

CHANGED

|

@@ -23,4 +23,118 @@ configs:

|

|

| 23 |

data_files:

|

| 24 |

- split: train

|

| 25 |

path: data/train-*

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 26 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 23 |

data_files:

|

| 24 |

- split: train

|

| 25 |

path: data/train-*

|

| 26 |

+

license: cc

|

| 27 |

+

task_categories:

|

| 28 |

+

- feature-extraction

|

| 29 |

+

tags:

|

| 30 |

+

- climate

|

| 31 |

+

- geology

|

| 32 |

+

size_categories:

|

| 33 |

+

- n<1K

|

| 34 |

---

|

| 35 |

+

|

| 36 |

+

# WildfireSimMaps

|

| 37 |

+

|

| 38 |

+

## Description

|

| 39 |

+

|

| 40 |

+

This is a dataset containing real-world map data for wildfire simulations.

|

| 41 |

+

The data is in the form of 2D maps with the following features:

|

| 42 |

+

|

| 43 |

+

- `name`: The name of the map data.

|

| 44 |

+

- `shape`: The shape of the area, in pixels.

|

| 45 |

+

- `canopy`: The canopy cover in the area, in percentage.

|

| 46 |

+

- `density`: The density of the area, in percentage.

|

| 47 |

+

- `slope`: The slope of the area, in degrees.

|

| 48 |

+

|

| 49 |

+

## Quick Start

|

| 50 |

+

|

| 51 |

+

Install the package using pip:

|

| 52 |

+

|

| 53 |

+

```bash

|

| 54 |

+

pip install datasets

|

| 55 |

+

```

|

| 56 |

+

|

| 57 |

+

Then you can use the dataset as follows with **NumPy**:

|

| 58 |

+

|

| 59 |

+

```python

|

| 60 |

+

import numpy as np

|

| 61 |

+

from datasets import load_dataset

|

| 62 |

+

|

| 63 |

+

# Load the dataset

|

| 64 |

+

ds = load_dataset("xiazeyu/WildfireSimMaps", split="train")

|

| 65 |

+

ds = ds.with_format("numpy")

|

| 66 |

+

|

| 67 |

+

def preprocess_function(examples):

|

| 68 |

+

# Reshape arrays based on the 'shape' field

|

| 69 |

+

examples['density'] = [d.reshape(sh) for d, sh in zip(examples['density'], examples['shape'])]

|

| 70 |

+

examples['slope'] = [s.reshape(sh) for s, sh in zip(examples['slope'], examples['shape'])]

|

| 71 |

+

examples['canopy'] = [c.reshape(sh) for c, sh in zip(examples['canopy'], examples['shape'])]

|

| 72 |

+

|

| 73 |

+

return examples

|

| 74 |

+

|

| 75 |

+

ds = ds.map(preprocess_function, batched=True, batch_size=None) # Adjust batch_size as needed

|

| 76 |

+

|

| 77 |

+

print(ds[0])

|

| 78 |

+

```

|

| 79 |

+

|

| 80 |

+

To use the dataset with **PyTorch**, you can use the following code:

|

| 81 |

+

|

| 82 |

+

```python

|

| 83 |

+

import torch

|

| 84 |

+

from datasets import load_dataset

|

| 85 |

+

|

| 86 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 87 |

+

|

| 88 |

+

# Load the dataset

|

| 89 |

+

ds = load_dataset("xiazeyu/WildfireSimMaps", split="train")

|

| 90 |

+

ds = ds.with_format("torch", device=device)

|

| 91 |

+

|

| 92 |

+

def preprocess_function(examples):

|

| 93 |

+

# Reshape arrays based on the 'shape' field

|

| 94 |

+

examples['density'] = [d.reshape(sh.tolist()) for d, sh in zip(examples['density'], examples['shape'])]

|

| 95 |

+

examples['slope'] = [s.reshape(sh.tolist()) for s, sh in zip(examples['slope'], examples['shape'])]

|

| 96 |

+

examples['canopy'] = [c.reshape(sh.tolist()) for c, sh in zip(examples['canopy'], examples['shape'])]

|

| 97 |

+

|

| 98 |

+

return examples

|

| 99 |

+

|

| 100 |

+

ds = ds.map(preprocess_function, batched=True, batch_size=None) # Adjust batch_size as needed

|

| 101 |

+

|

| 102 |

+

print(ds[0])

|

| 103 |

+

```

|

| 104 |

+

|

| 105 |

+

## Next Steps

|

| 106 |

+

|

| 107 |

+

In order to make practical use of this dataset, you may perform the following tasks:

|

| 108 |

+

|

| 109 |

+

- scale or normalize the data to fit your model's requirements

|

| 110 |

+

- reshape the data to fit your model's input shape

|

| 111 |

+

- stack the data into a single tensor if needed

|

| 112 |

+

- perform data augmentation if needed

|

| 113 |

+

- split the data into training, validation, and test sets

|

| 114 |

+

|

| 115 |

+

In general, you can use the dataset as you would use any other dataset in your pipeline.

|

| 116 |

+

|

| 117 |

+

And the most important thing is to have fun and learn from the data!

|

| 118 |

+

|

| 119 |

+

## Visualization

|

| 120 |

+

|

| 121 |

+

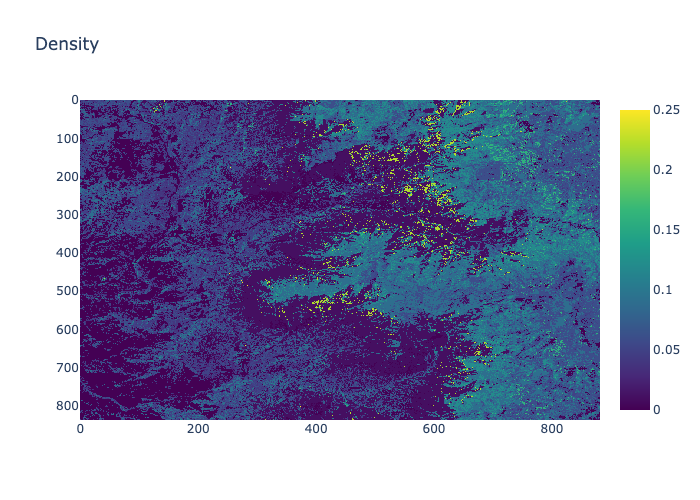

Density

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

Canopy

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

Slope

|

| 130 |

+

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

## License

|

| 134 |

+

|

| 135 |

+

The dataset is licensed under the CC BY-NC 4.0 License.

|

| 136 |

+

|

| 137 |

+

## Contact

|

| 138 |

+

|

| 139 |

+

- Zeyu Xia - [email protected]

|

| 140 |

+

- Sibo Cheng - [email protected]

|