---

annotations_creators:

- expert-generated

language:

- en

size_categories:

- n<1K

source_datasets:

- extended

task_categories:

- feature-extraction

- text-to-video

pretty_name: AVS-Spot

tags:

- co-speech gestures

- gesture-spotting

- video-understanding

- multimodal-learning

---

# Dataset Card for AVS-Spot Benchmark

This dataset is associated with the paper: "Understanding Co-Speech Gestures in-the-wild"

- 📝 ArXiv: https://arxiv.org/abs/2503.22668

- 🌐 Project page: https://www.robots.ox.ac.uk/~vgg/research/jegal

- 💻 Code: https://github.com/Sindhu-Hegde/jegal

We present **JEGAL**, a **J**oint **E**mbedding space for **G**estures, **A**udio and **L**anguage. Our semantic gesture representations can be used to perform multiple downstream tasks such as cross-modal retrieval, spotting gestured words, and identifying who is speaking solely using gestures.

## 📋 Table of Contents

- [Dataset Card for AVS-Spot Benchmark](#dataset-card-for-avs-spot-benchmark)

- [📋 Table of Contents](#📋-table-of-contents)

- [📚 What is the AVS-Spot Benchmark?](#📚-what-is-the-avs-spot-benchmark)

- [Summary](#summary)

- [Download instructions](#download-instructions)

- [📝 Dataset Structure](#📝-dataset-structure)

- [Data Fields](#data-fields)

- [Data Instances](#data-instances)

- [📦 Dataset Curation](#📦-dataset-curation)

- [Statistics](#statistics)

- [🔖 Citation](#🔖-citation)

- [🙏 Acknowledgements](#🙏-acknowledgements)

## 📚 What is the AVS-Spot Benchmark?

### Summary

AVS-Spot is a benchmark for evaluating the task of **gestured word-spotting**. It contains **500 videos**, sampled from the AVSpeech official test dataset. Each video contains at least one clearly gestured word, annotated as the "target word". Additionally, we provide other annotations, including the text phrase, word boundaries, and speech-stress labels for each sample.

**Task:** Given a target word and an input gesture video with its transcript or speech, the goal is to localize where the target word occurs in the video based on gestures.

**Other tasks:** The benchmark can also be used for evaluating tasks such as gesture recognition, cross-modal gestured word retrieval, and gesture segmentation.
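
As a rough illustration of the word-spotting task, the sketch below picks the word whose frame span has the highest mean score and compares it with the annotated target word. The per-frame `scores` list, the function name `spot_word`, the inclusive `end_frame`, and the mean-pooling rule are all assumptions made for illustration; they are not taken from the paper or the JEGAL code, and this is not necessarily the metric used there.

```python
from typing import List, Sequence, Tuple

WordBoundary = Tuple[str, int, int]  # (word, start_frame, end_frame)


def spot_word(scores: Sequence[float], word_boundaries: List[WordBoundary]) -> WordBoundary:
    """Return the word whose frame span has the highest mean score.

    `scores` is a hypothetical per-frame relevance score for the target word
    (one value per video frame). `end_frame` is assumed to be inclusive.
    """
    def mean_score(boundary: WordBoundary) -> float:
        _, start, end = boundary
        span = scores[start:end + 1]
        # Spans that fall outside the score range can never win.
        return sum(span) / len(span) if len(span) > 0 else float("-inf")

    return max(word_boundaries, key=mean_score)


# Toy example: 10 frames, with the scores peaking over frames 4 to 6.
boundaries = [("app", 0, 2), ("is", 3, 3), ("beautiful", 4, 6), ("it", 8, 9)]
scores = [0.1, 0.1, 0.2, 0.3, 0.9, 0.8, 0.9, 0.2, 0.1, 0.1]

predicted = spot_word(scores, boundaries)
is_correct = predicted[0] == "beautiful"
```

A prediction counts as correct here when the selected word matches the target word; other scoring rules (for example, overlap between predicted and annotated frame ranges) would be equally plausible.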

Some examples from the dataset are shown below. Note: the green highlight box in the video is for visualization purposes only. The actual dataset does not contain these boxes; instead, we provide the target word's start and end frames as part of the annotations.

### Download instructions

Run the following snippet to load the annotation csv file:

```python

from datasets import load_dataset

# Load the dataset csv file with annotations

dataset = load_dataset("sindhuhegde/avs-spot")

```

The csv file contains the video-ids and annotations.

Please refer to the [preprocessing scripts](https://github.com/Sindhu-Hegde/jegal/tree/main/dataset) to prepare the AVS-Spot benchmark.

Once the dataset is downloaded and pre-processed, the structure of the folders will be as follows:

```

video_root (path of the downloaded videos)

├── *.mp4 (videos)

```

```

preprocessed_root (path of the pre-processed videos)

├── list of video-ids

│ ├── *.avi (extracted person track video for each sample)

│ ├── *.wav (extracted person track audio for each sample)

```

```

merge_dir (path of the merged videos)

├── *.mp4 (target-speaker videos with audio)

```
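
Given the `preprocessed_root` layout above, a small helper can collect the video and audio tracks per video-id folder. This is a minimal sketch: the function name `list_tracks` is hypothetical, and it assumes that each `.avi` track has a `.wav` file with the same stem in the same folder, which the tree above suggests but does not state.

```python
from pathlib import Path
from typing import Dict, List, Tuple


def list_tracks(preprocessed_root: str) -> Dict[str, List[Tuple[Path, Path]]]:
    """Map each video-id folder to its (.avi, .wav) track pairs."""
    tracks: Dict[str, List[Tuple[Path, Path]]] = {}
    for video_dir in sorted(Path(preprocessed_root).iterdir()):
        if not video_dir.is_dir():
            continue
        pairs = []
        for avi in sorted(video_dir.glob("*.avi")):
            # Assumption: the audio track shares the video track's stem.
            wav = avi.with_suffix(".wav")
            if wav.exists():
                pairs.append((avi, wav))
        tracks[video_dir.name] = pairs
    return tracks
```

Tracks without a matching audio file are skipped rather than raising an error, which makes it easy to spot incomplete pre-processing by comparing counts.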

## 📝 Dataset Structure

### Data Fields

- `video_id`: YouTube video ID

- `start_time`: Start time (in seconds)

- `end_time`: End time (in seconds)

- `filename`: Filename along with the target-speaker crop number (obtained after pre-processing)

- `num_frames`: Number of frames in the video after pre-processing

- `phrase`: Text transcript of the video

- `target_word`: Target word (the word to be spotted)

- `target_word_boundary`: Word boundary of the target word. Format: [target-word, start_frame, end_frame]

- `word_boundaries`: Word boundaries for all the words in the video. Format: [[word-1, start_frame, end_frame], [word-2, start_frame, end_frame], ..., [word-n, start_frame, end_frame]]

- `stress_label`: Binary label indicating whether the target-word has been stressed in the corresponding speech

### Data Instances

Each instance in the dataset contains the above fields. An example instance is shown below.

```json

{

"video_id": "jnsuH9_qYyA",

"start_time": 26.562700,

"end_time": 29.802700,

"filename": "jnsuH9_qYyA_26.562700-29.802700/00000",

"num_frames": 83,

"phrase": "app is beautiful it just is streamlined it",

"target_word": "beautiful",

"target_word_boundary": "['beautiful', 21, 37]",

"word_boundaries": "[['app', 0, 11], ['is', 12, 13], ['beautiful', 21, 37], ['it', 45, 47], ['just', 48, 53], ['is', 60, 63], ['streamlined', 65, 81], ['it', 82, 83]]",

"stress_label": 1

}

```
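
In the instance above, `target_word_boundary` and `word_boundaries` appear as strings holding Python-style list literals. Assuming the loaded rows have the same string form (inferred from this example, not confirmed), they can be parsed with the standard library's `ast.literal_eval`. The helper name `parse_boundaries` is illustrative, and the row below is a shortened copy of the example.

```python
import ast

# Shortened copy of the example instance (string-valued boundary fields).
row = {
    "num_frames": 83,
    "target_word": "beautiful",
    "target_word_boundary": "['beautiful', 21, 37]",
    "word_boundaries": "[['app', 0, 11], ['is', 12, 13], ['beautiful', 21, 37]]",
}


def parse_boundaries(row: dict) -> dict:
    """Return a copy of `row` with the boundary strings parsed into lists."""
    parsed = dict(row)
    parsed["target_word_boundary"] = ast.literal_eval(row["target_word_boundary"])
    parsed["word_boundaries"] = ast.literal_eval(row["word_boundaries"])
    return parsed


parsed = parse_boundaries(row)
word, start_frame, end_frame = parsed["target_word_boundary"]
```

`ast.literal_eval` only evaluates literals, so it is a safer choice than `eval` for annotation strings read from a file.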

See the [AVS-Spot dataset viewer](https://huggingface.co/datasets/sindhuhegde/avs-spot/viewer) to explore more examples.

## 📦 Dataset Curation

AVS-Spot is a dataset of video clips in which a specific word is distinctly gestured. We begin with the full English test set from the [AVSpeech dataset](https://looking-to-listen.github.io/avspeech/) and extract word-aligned transcripts using the WhisperX ASR model. Short phrases containing 4 to 12 words are then selected, ensuring that the clips exhibit distinct gesture movements. We then manually review the clips and annotate each with a `target_word` that is visibly gestured. This process results in 500 curated clips, each containing a well-defined gestured word. The manual annotation keeps label noise low, enabling a reliable evaluation of the gesture spotting task. Additionally, we provide binary stress/emphasis labels (`stress_label`) for the target words, capturing key gesture-related cues.
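
The phrase-length step of this pipeline can be sketched as follows. This is only an illustration of the 4 to 12 word criterion: the function name `has_valid_length` and the whitespace-based word count are assumptions, and the actual curation scripts may count words differently.

```python
def has_valid_length(phrase: str, min_words: int = 4, max_words: int = 12) -> bool:
    """Check whether a phrase has between min_words and max_words words."""
    num_words = len(phrase.split())  # assumed: words are whitespace-separated
    return min_words <= num_words <= max_words


phrases = [
    "app is beautiful it just is streamlined it",  # 8 words, kept
    "hello there",                                  # 2 words, dropped
]
kept = [p for p in phrases if has_valid_length(p)]
```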

Summarized dataset information is given below:

- Source: [AVSpeech](https://looking-to-listen.github.io/avspeech/)

- Language: English

- Modalities: Video, audio, text

- Labels: Target-word, word-boundaries, speech-stress binary label

- Task: Gestured word spotting

### Statistics

| Dataset | Split | # Hours | # Speakers | Avg. clip duration (s) | # Videos |

|:--------:|:-----:|:-------:|:-----------:|:-----------------:|:--------:|

| AVS-Spot | test | 0.38 | 391 | 2.73 | 500 |
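
As a quick consistency check on the table, the total duration follows from the number of videos and the average clip duration, assuming the average is given in seconds (the unit is not stated explicitly):

```python
num_videos = 500
avg_clip_seconds = 2.73  # assumed unit: seconds

# Total duration in hours, rounded to two decimals like the table entry.
total_hours = round(num_videos * avg_clip_seconds / 3600, 2)
```

This gives 0.38 hours, matching the `# Hours` column.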

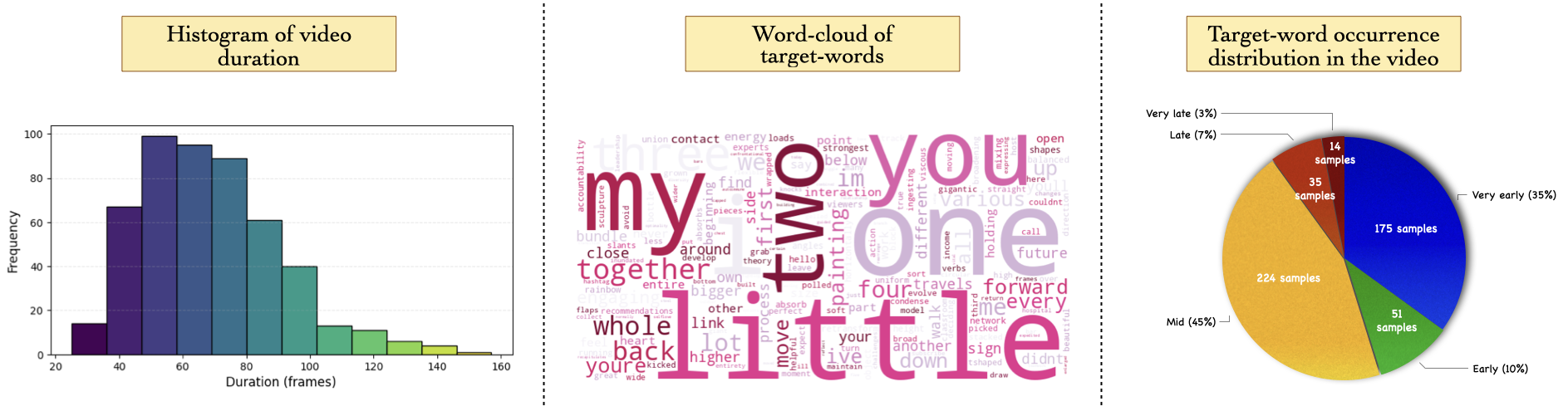

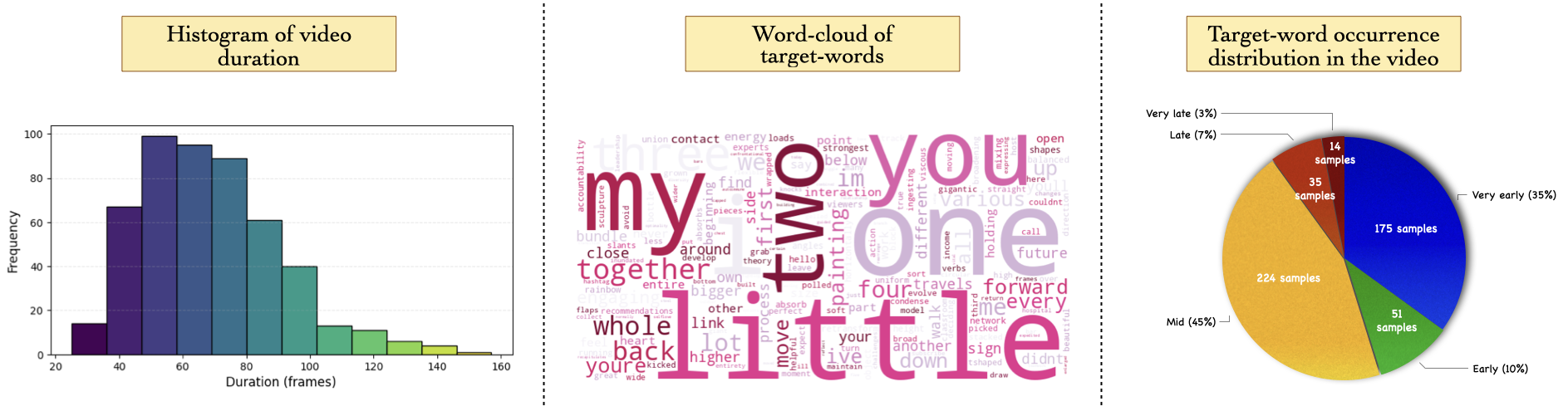

Below, we show some additional statistics for the dataset: (i) Duration of videos in terms of number of frames, (ii) Wordcloud of most gestured words in the dataset, illustrating the diversity of the different words present, and (iii) The distribution of target-word occurences in the video.

## 🔖 Citation

If you find this dataset helpful, please consider starring ⭐ the repository and citing our work.

```bibtex

@article{Hegde_ArXiv_2025,

title={Understanding Co-speech Gestures in-the-wild},

author={Hegde, Sindhu and Prajwal, K R and Kwon, Taein and Zisserman, Andrew},

journal={arXiv},

year={2025}

}

```

## 🙏 Acknowledgements

The authors would like to thank Piyush Bagad, Ragav Sachdeva, and Jaesung Hugh for their valuable discussions. They also extend their thanks to David Pinto for setting up the data annotation tool and to Ashish Thandavan for his support with the infrastructure. This research is funded by EPSRC Programme Grant VisualAI EP/T028572/1 and a Royal Society Research Professorship RP\R1\191132.