---

tags:

- robotics

- grasping

- simulation

- nvidia

task_categories:

- other

license: "cc-by-4.0"

---

# GraspGen: Scaling Sim2Real Grasping

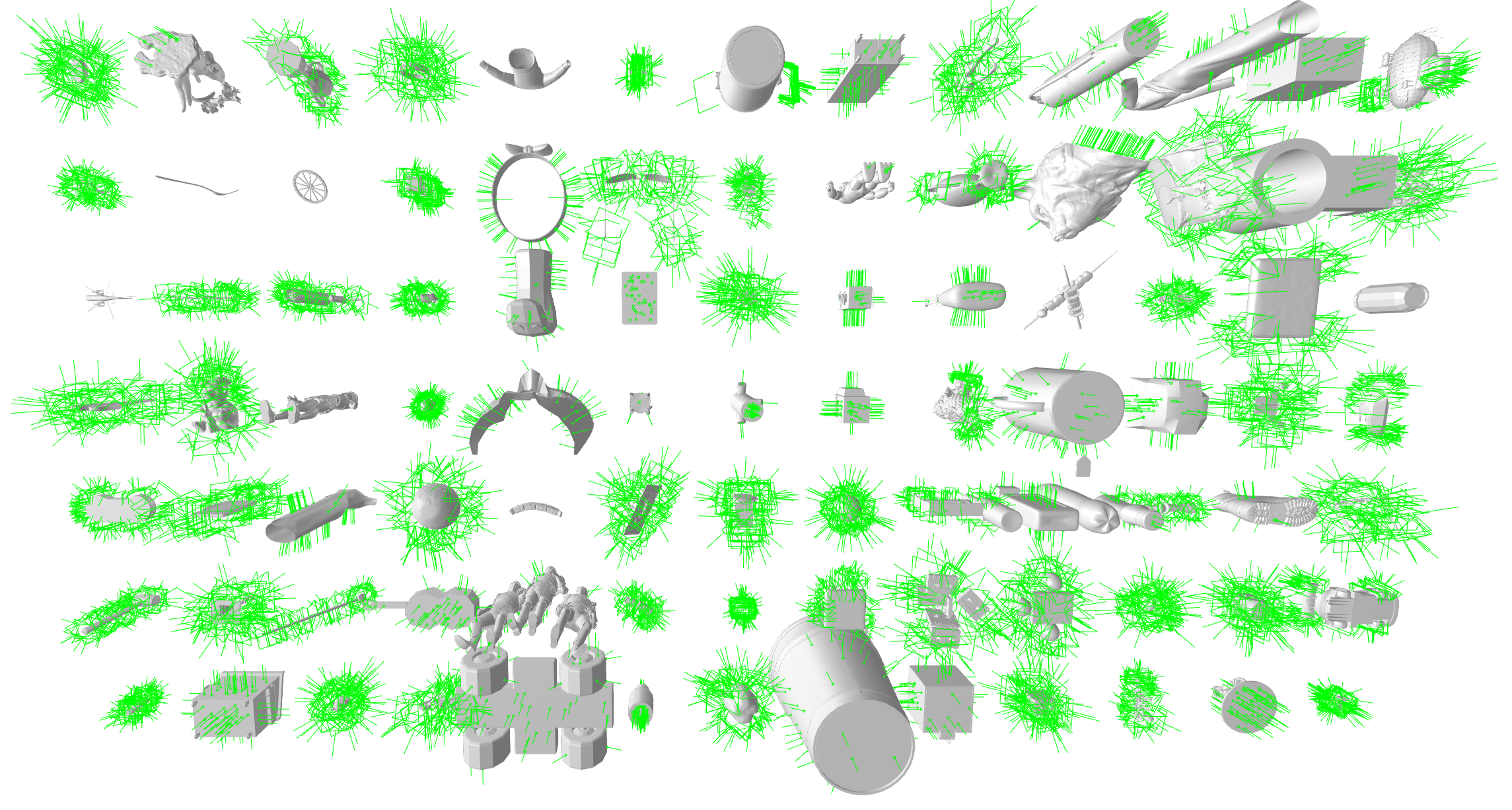

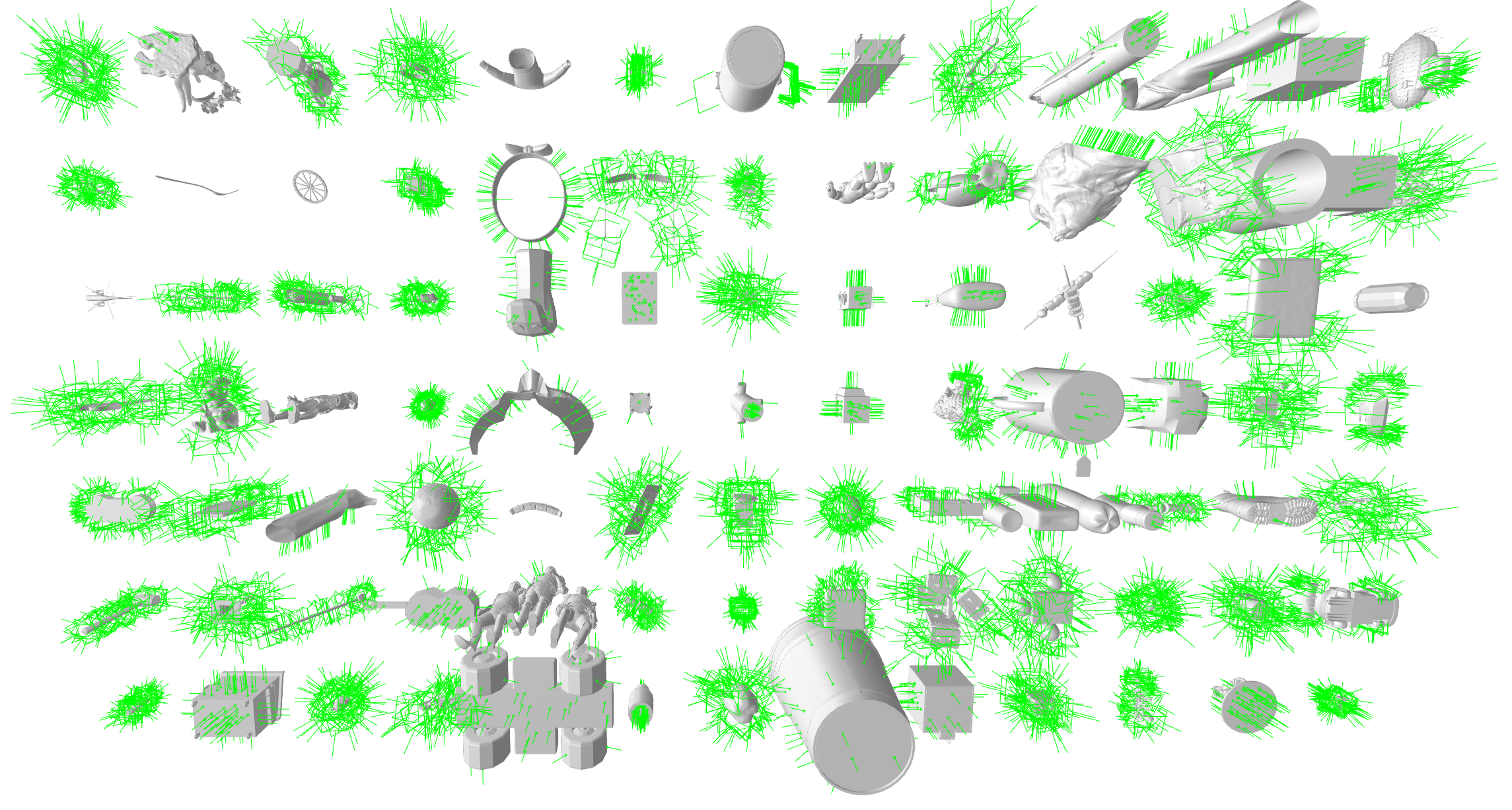

GraspGen is a large-scale simulated grasp dataset for multiple robot embodiments and grippers.

We release over 57 million grasps, computed for a subset of 8515 objects from the [Objaverse XL](https://objaverse.allenai.org/) (LVIS) dataset. These grasps are specific to three grippers: the Franka Panda, the Robotiq-2f-140 industrial gripper, and a single-contact suction gripper (30 mm radius).

## Dataset Format

The dataset is released in the [WebDataset](https://github.com/webdataset/webdataset) format. The folder structure of the dataset is as follows:

```
grasp_data/
    franka/shard_{0-7}.tar
    robotiq2f140/shard_{0-7}.tar
    suction/shard_{0-7}.tar
splits/
    franka/{train/valid}_scenes.json
    robotiq2f140/{train/valid}_scenes.json
    suction/{train/valid}_scenes.json
```
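Since each shard is a plain tar archive, its JSON files can be inspected with the Python standard library alone. The snippet below is a minimal sketch, not the loader used by the authors: the member name `demo_uuid.json` and the in-memory demo shard are hypothetical stand-ins so the example runs without the real data.

```python
import io
import json
import tarfile


def iter_json_samples(tar_file):
    """Yield (member_name, parsed_dict) for every .json member of an open tarfile."""
    for member in tar_file:
        if member.isfile() and member.name.endswith(".json"):
            with tar_file.extractfile(member) as handle:
                yield member.name, json.load(handle)


def build_demo_shard():
    """Build a tiny in-memory tar standing in for a real shard (hypothetical content)."""
    identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
    payload = json.dumps(
        {
            "object": {"scale": 0.5},
            "grasps": {"object_in_gripper": [True], "transforms": [identity]},
        }
    ).encode("utf-8")
    buffer = io.BytesIO()
    with tarfile.open(fileobj=buffer, mode="w") as tar:
        info = tarfile.TarInfo(name="demo_uuid.json")  # hypothetical member name
        info.size = len(payload)
        tar.addfile(info, io.BytesIO(payload))
    buffer.seek(0)
    return buffer


# Read the demo shard back and collect its samples by member name.
with tarfile.open(fileobj=build_demo_shard(), mode="r") as shard:
    samples = dict(iter_json_samples(shard))

print(sorted(samples))
```

For a downloaded shard, the same generator should work with `tarfile.open(path_to_shard)`, where `path_to_shard` points at one of the `.tar` files listed above.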

We release train/validation splits along with the grasp dataset. The splits are made randomly by object instance.

Each JSON file in a shard contains the following data as a Python dictionary. Note that `num_grasps=2000` per object.

```
'object'/
    'scale' # This is the scale of the asset, float
'grasps'/
    'object_in_gripper' # boolean mask indicating grasp success, [num_grasps X 1]
    'transforms' # Pose of the gripper in homogeneous matrices, [num_grasps X 4 X 4]
```
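As an illustration of how these fields fit together, the sketch below keeps only the grasps whose success flag is set and reads the translation of each remaining pose. It assumes the dictionary has already been parsed into nested Python lists, and that the matrices follow the usual homogeneous convention with the translation in the last column. The helper names and the toy sample are hypothetical.

```python
def successful_grasp_poses(sample):
    """Return the 4x4 poses whose 'object_in_gripper' flag is true."""
    grasps = sample["grasps"]
    kept = []
    for flag, pose in zip(grasps["object_in_gripper"], grasps["transforms"]):
        # The mask is documented as [num_grasps X 1], so a flag may arrive as [True].
        if isinstance(flag, (list, tuple)):
            flag = flag[0]
        if flag:
            kept.append(pose)
    return kept


def grasp_position(pose):
    """Translation of a homogeneous 4x4 matrix (last column, first three rows)."""
    return [pose[row][3] for row in range(3)]


def make_pose(x, y, z):
    """Toy pose with identity rotation, used only to build the demo sample."""
    return [[1.0, 0, 0, x], [0, 1.0, 0, y], [0, 0, 1.0, z], [0, 0, 0, 1.0]]


# Hypothetical sample with three grasps, two of them successful.
sample = {
    "object": {"scale": 0.5},
    "grasps": {
        "object_in_gripper": [[True], [False], [True]],
        "transforms": [make_pose(0.1, 0, 0), make_pose(0, 0.2, 0), make_pose(0, 0, 0.3)],
    },
}

good = successful_grasp_poses(sample)
positions = [grasp_position(pose) for pose in good]
print(len(good), positions)
```

With real data, the same masking is more conveniently done with NumPy boolean indexing; plain lists are used here only to keep the sketch dependency-free.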

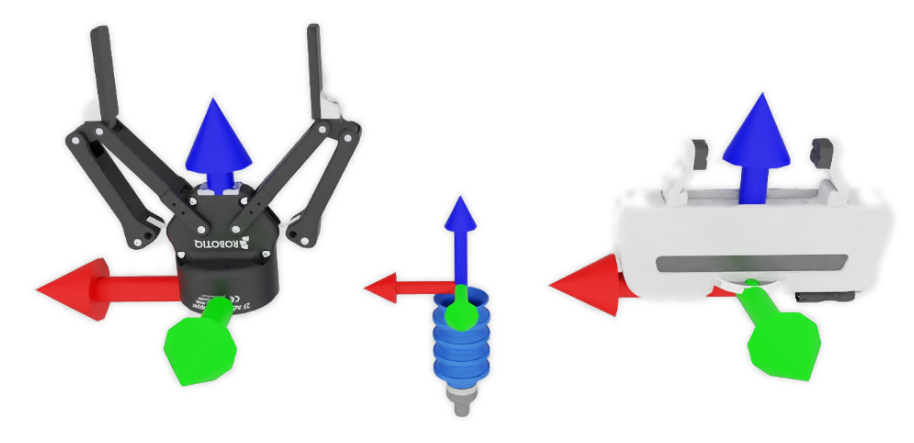

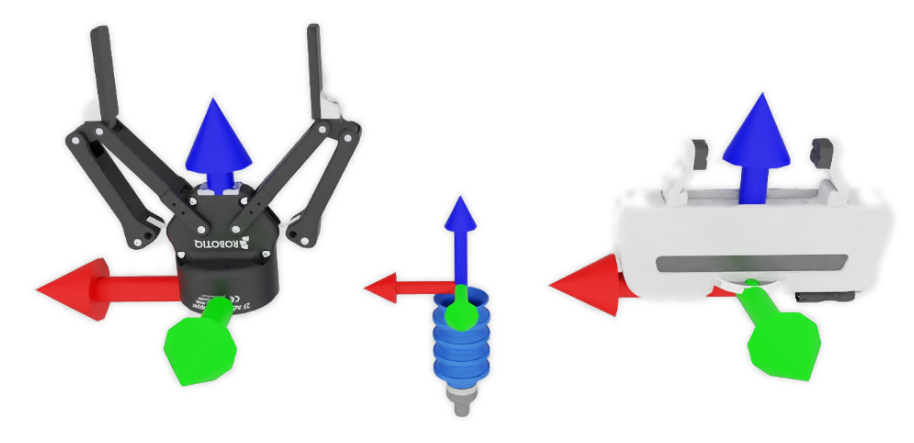

The coordinate frame convention for the three grippers are provided below:

## Visualizing the dataset

We provide minimal, standalone scripts for visualizing this dataset. See the header of [visualize_dataset.py](scripts/visualize_dataset.py) for installation instructions.

Before running any of the visualization scripts, remember to start meshcat-server in a separate terminal:

```shell
meshcat-server
```

To visualize a single object from the dataset, alongside its grasps:

```shell
cd scripts/ && python visualize_dataset.py --dataset_path /path/to/dataset --object_uuid {object_uuid} --object_file /path/to/mesh --gripper_name {choose from: franka, suction, robotiq2f140}
```

To sequentially visualize a list of objects with their grasps:

```shell
cd scripts/ && python visualize_dataset.py --dataset_path /path/to/dataset --uuid_list {path to a splits.json file} --uuid_object_paths_file {path to a json file mapping uuid to absolute path of meshes} --gripper_name {choose from: franka, suction, robotiq2f140}
```

## Objaverse dataset

Please download the Objaverse XL (LVIS) objects separately. See the helper script [download_objaverse.py](scripts/download_objaverse.py) for instructions and usage.

Note that running this script autogenerates a file that maps from `UUID` to the asset mesh path, which you can pass in as input `uuid_object_paths_file` to the `visualize_dataset.py` script.
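Before launching the visualizer on a long list of objects, it can help to check that every mesh path in the mapping file exists on disk. The sketch below assumes the mapping is a flat JSON object of the form `{uuid: mesh_path}`. The file names, the `.glb` extension, and the helper functions are hypothetical, and the demo writes its own temporary mapping so it runs without the real assets.

```python
import json
import os
import tempfile


def load_uuid_to_mesh(mapping_path):
    """Load a JSON file assumed to map each UUID to a mesh path."""
    with open(mapping_path, "r", encoding="utf-8") as handle:
        return json.load(handle)


def missing_meshes(uuid_to_mesh):
    """Return the sorted UUIDs whose mesh path does not exist on disk."""
    return sorted(
        uuid for uuid, path in uuid_to_mesh.items() if not os.path.isfile(path)
    )


with tempfile.TemporaryDirectory() as tmp_dir:
    # One mesh file that exists and one that does not (both hypothetical).
    mesh_path = os.path.join(tmp_dir, "present.glb")
    open(mesh_path, "wb").close()
    mapping_path = os.path.join(tmp_dir, "uuid_to_mesh.json")
    with open(mapping_path, "w", encoding="utf-8") as handle:
        json.dump(
            {
                "uuid_present": mesh_path,
                "uuid_missing": os.path.join(tmp_dir, "absent.glb"),
            },
            handle,
        )

    mapping = load_uuid_to_mesh(mapping_path)
    missing = missing_meshes(mapping)

print(missing)
```

If the file generated by `download_objaverse.py` uses a different layout, only `load_uuid_to_mesh` would need to change.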

## License

Copyright © 2025, NVIDIA Corporation & affiliates. All rights reserved.

Both the dataset and the visualization code are released under a CC-BY 4.0 [License](LICENSE_DATASET).

For business inquiries, please submit the form [NVIDIA Research Licensing](https://www.nvidia.com/en-us/research/inquiries/).

## Contact

Please reach out to [Adithya Murali](http://adithyamurali.com) (admurali@nvidia.com) and [Clemens Eppner](https://clemense.github.io/) (ceppner@nvidia.com) for further enquiries.
