Upload 144 files

- 0c9f8747-6faf-4fe5-a686-3b383e795875.png +3 -0

- 0cc0d307-bdc2-4968-a76f-2abad53632d5.png +3 -0

- 0da82c1e-1f60-4155-ba7a-ea6b1716706d.png +3 -0

- 0e21ed76-03de-4d81-a9d5-bed6133d480c.png +3 -0

- 10ad7fd5-f8ed-4ce6-b2fc-2ee0ff2ec2da.png +3 -0

- 18d90f28-2ca6-4d47-8f61-4ec1fe51663b.png +3 -0

- 26c509d1-3f53-46e6-93a9-56386bdf134a.png +3 -0

- 290e2e83-cd4f-48c0-8495-d778a8f7dcae.png +3 -0

- 2bcbbf14-535b-44f7-9181-361009094ded.png +3 -0

- 2c1966b4-6884-4f01-bfa1-c3315d9e602a.png +3 -0

- 2dda52c7-e651-42b3-a4d7-e5c851d069e8.png +3 -0

- 32a12477-96aa-4e54-8481-4bf7bb14b5c5.png +3 -0

- 33520d1f-4cea-4f6b-9b2f-5fa8c10a7f74.png +3 -0

- 349596c1-3a7d-4ff4-a4fc-f73cbb9efc96.png +3 -0

- 38eee642-aaba-4c8f-9deb-dcc38b93aa9e.png +3 -0

- 3a6b3ea9-4d75-4c12-be83-ab785f6467c0.png +3 -0

- 3f7d8e6a-d8a9-4345-ba88-d2c16d6c0e33.png +3 -0

- 40f0fb53-667e-4862-afb1-9b5dec3d3e8f.png +3 -0

- 46f5e525-39aa-4f73-be5b-7d473875d44f.png +3 -0

- 498fd963-8652-49f9-b5e0-ce7d5b456779.png +3 -0

- 4cf1dd3e-f26c-4d30-b57c-720a0eaba96c.png +3 -0

- 56c43818-b08c-40c0-9020-fcd0bf0360e6.png +3 -0

- 6a6b603e-5a0a-4d17-9ad3-3e4c90f3ea32.png +3 -0

- 7591c83b-c6b6-4bd4-9af0-b727942431c1.png +3 -0

- 8ab6a8f3-a4e2-4e36-8b83-c19528cbcef5.png +3 -0

- 8ec90e4a-0b0a-4575-b71d-62f4656ecdff.png +3 -0

- 9a41f973-ab10-4193-a35a-766867ecf2e9.png +3 -0

- 9c23fe20-747d-4d30-ad44-1cdfc8a42588.png +3 -0

- a0bdfdce-1e37-4964-ba2d-b239aade67c2.png +3 -0

- bb433170-5ae3-4c9b-9d4a-da4a83806506.png +3 -0

- bc0b95d5-4f66-4497-8b0e-c47a7b6207d4.png +3 -0

- d0fb8690-f472-45e2-9147-e9c944d9104f.png +3 -0

- d62bf0f8-d896-4a3d-9e93-f2b3720883c2.png +3 -0

- d6d231c0-9c0b-4dbc-8ecf-0de6f70c071c.png +3 -0

- da552b13-cf0c-4c0d-88ac-7f228e43d866.png +3 -0

- df9d8c0c-5bb0-4945-909f-0d2773f184d0.png +3 -0

- ec0df12b-72a3-4d94-838e-b46e8a66661e.png +3 -0

- ecea1dcb-6f05-4a49-b169-9ef0480fa543.png +3 -0

- f17d818f-a580-4389-9600-cf315f113fdc.png +3 -0

- f438116f-9cf4-439b-a79d-b4a49ea84d76.png +3 -0

- ff904238-3810-4381-a1c1-3b03f949ee82.png +3 -0

- metadata.jsonl +41 -0
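Each PNG above is paired with one record in `metadata.jsonl`. The record's `file_name` field names the image, and its `code` field holds the source text the image shows. This is the layout that the `save_data` helper in metadata line 115 appends. The following is a hypothetical consistency check and is not part of this upload. It assumes the images and `metadata.jsonl` sit in one directory (`save_data` writes to `data`), and the helper names `load_records` and `check_dataset` are illustrative. It also groups records whose `code` text is identical, because several records in this diff carry the same snippet (for example, metadata lines 102 to 105).

```python
import json
from collections import defaultdict
from pathlib import Path


def load_records(metadata_path: Path) -> list[dict]:
    """Read one JSON object per non-empty line of a JSON Lines file."""
    records = []
    with metadata_path.open(encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:
                records.append(json.loads(line))
    return records


def check_dataset(data_dir: Path) -> dict:
    """Hypothetical helper: cross-check *.png files against metadata.jsonl."""
    records = load_records(data_dir / "metadata.jsonl")
    listed = {r["file_name"] for r in records}
    on_disk = {p.name for p in data_dir.glob("*.png")}

    # Group image names by their exact code text to spot repeated snippets.
    by_code = defaultdict(list)
    for r in records:
        by_code[r["code"]].append(r["file_name"])

    return {
        "images_without_record": sorted(on_disk - listed),
        "records_without_image": sorted(listed - on_disk),
        "duplicate_code_groups": [sorted(v) for v in by_code.values() if len(v) > 1],
    }
```

Usage, assuming the `data` directory that `save_data` writes to: `report = check_dataset(Path("data"))`. The check compares names only and does not open the images.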

metadata.jsonl

CHANGED

@@ -100,3 +100,44 @@

| 100 |

{"file_name": "fc9c5d3e-fc14-48a9-9a81-f2e691fd45ee.png", "code": "import json\r\n\r\nfrom flask import request, Flask\r\n\r\nfrom ..config.logger_config import app_logger\r\nfrom .rate_limiter import request_is_rate_limited\r\nfrom redis import Redis\r\nfrom datetime import timedelta\r\nfrom http import HTTPStatus\r\n\r\nr = Redis(host='localhost', port=6379, db=0)\r\n\r\n\r\ndef log_post_request():\r\n request_data = {\r\n \"method\": request.method,\r\n \"url root\": request.url_root,\r\n \"user agent\": request.user_agent,\r\n \"scheme\": request.scheme,\r\n \"remote address\": request.remote_addr,\r\n \"headers\": request.headers,\r\n }\r\n if request.args:\r\n request_data[\"args\"] = request.args\r\n if request.form:\r\n request_data[\"data\"] = request.form\r\n else:\r\n request_data[\"data\"] = request.json\r\n if request.cookies:\r\n request_data[\"cookies\"] = request.cookies\r\n if request.files:\r\n request_data[\"image\"] = {\r\n \"filename\": request.files[\"Image\"].filename,\r\n \"content type\": request.files[\"Image\"].content_type,\r\n \"size\": len(request.files[\"Image\"].read()) // 1000,\r\n }\r\n app_logger.info(str(request_data))"}

| 101 |

{"file_name": "35b643c4-f349-475f-ad54-561e0f89c228.png", "code": "def log_get_request():\r\n request_data = {\r\n \"method\": request.method,\r\n \"url root\": request.url_root,\r\n \"user agent\": request.user_agent,\r\n \"scheme\": request.scheme,\r\n \"remote address\": request.remote_addr,\r\n \"headers\": request.headers,\r\n \"route\": request.endpoint,\r\n \"base url\": request.base_url,\r\n \"url\": request.url,\r\n }\r\n if request.args:\r\n request_data[\"args\"] = request.args\r\n if request.cookies:\r\n request_data[\"cookies\"] = request.cookies\r\n app_logger.info(str(request_data))\r\n\r\n\r\ndef get_response(response):\r\n response_data = {\r\n \"status\": response.status,\r\n \"status code\": response.status_code,\r\n \"response\": json.loads(response.data),\r\n }\r\n app_logger.info(str(response_data))"}

|

| 102 |

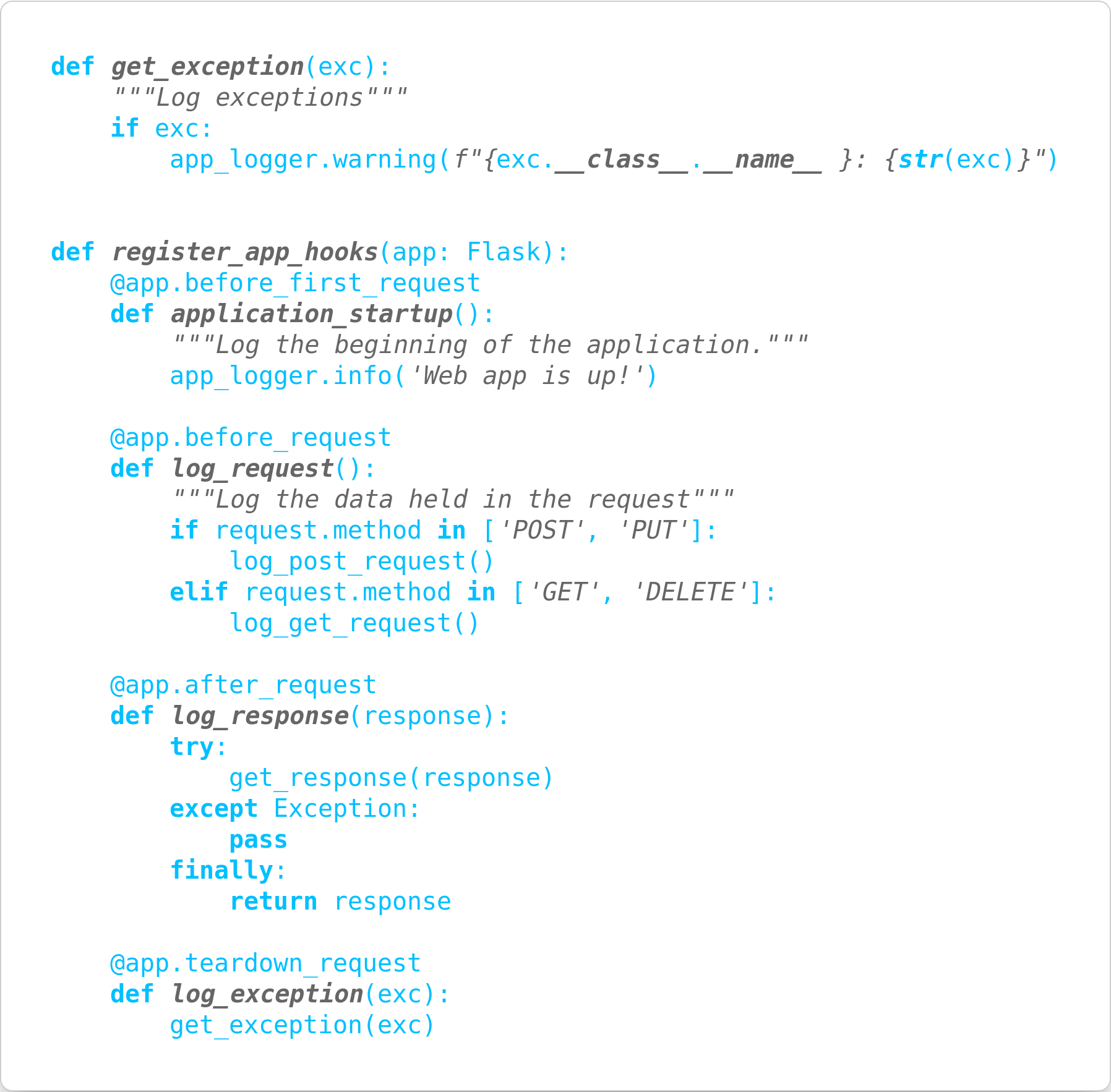

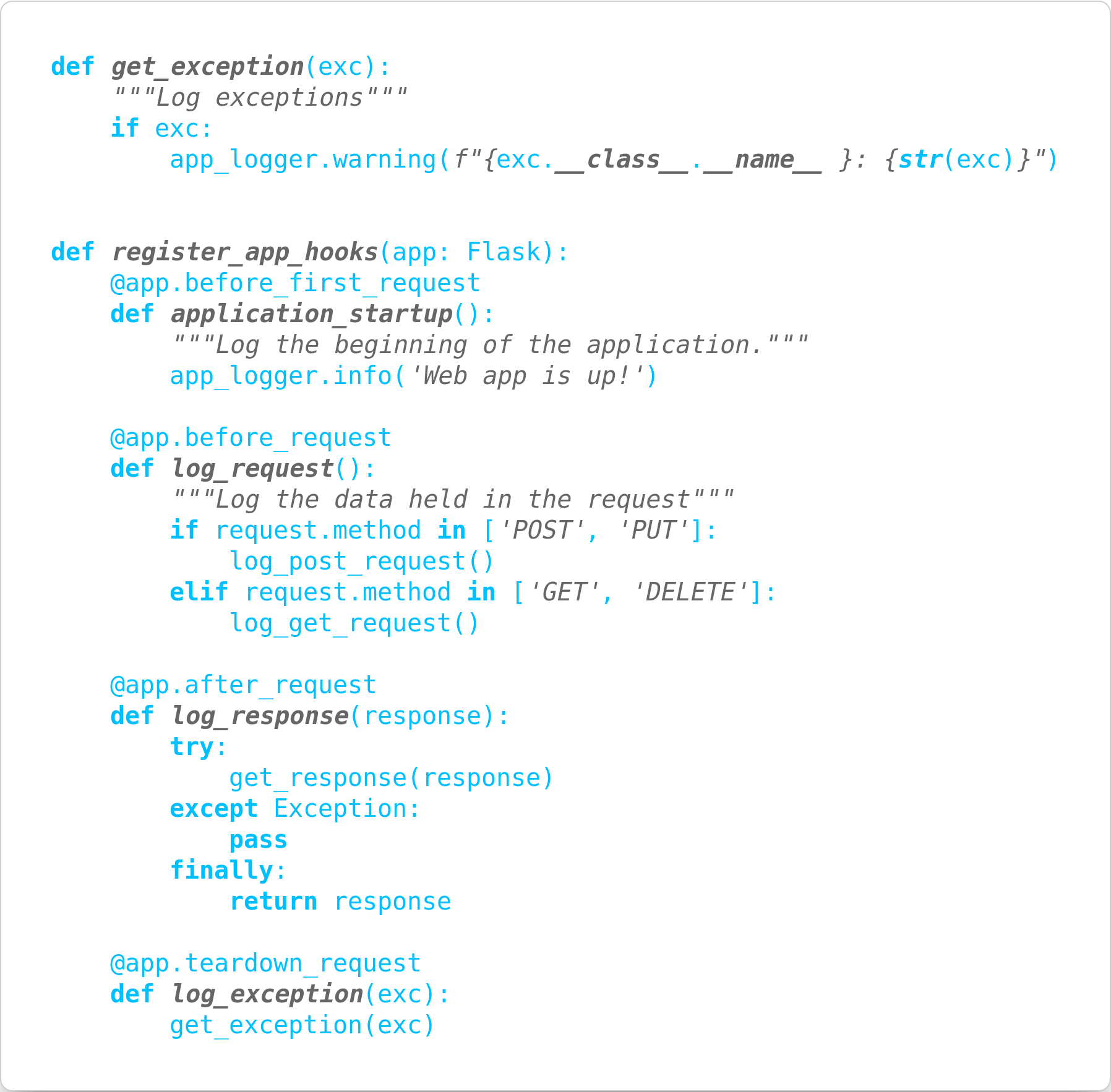

{"file_name": "24644e99-3f0c-4b4b-89f2-21e867c2bb93.png", "code": "def get_exception(exc):\r\n \"\"\"Log exceptions\"\"\"\r\n if exc:\r\n app_logger.warning(f\"{exc.__class__.__name__ }: {str(exc)}\")\r\n \r\n \r\ndef register_app_hooks(app: Flask):\r\n @app.before_first_request\r\n def application_startup():\r\n \"\"\"Log the beginning of the application.\"\"\"\r\n app_logger.info('Web app is up!')\r\n\r\n @app.before_request\r\n def log_request():\r\n \"\"\"Log the data held in the request\"\"\"\r\n if request.method in ['POST', 'PUT']:\r\n log_post_request()\r\n elif request.method in ['GET', 'DELETE']:\r\n log_get_request()\r\n\r\n @app.after_request\r\n def log_response(response):\r\n try:\r\n get_response(response)\r\n except Exception:\r\n pass\r\n finally:\r\n return response\r\n\r\n @app.teardown_request\r\n def log_exception(exc):\r\n get_exception(exc)"}

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 100 |

{"file_name": "fc9c5d3e-fc14-48a9-9a81-f2e691fd45ee.png", "code": "import json\r\n\r\nfrom flask import request, Flask\r\n\r\nfrom ..config.logger_config import app_logger\r\nfrom .rate_limiter import request_is_rate_limited\r\nfrom redis import Redis\r\nfrom datetime import timedelta\r\nfrom http import HTTPStatus\r\n\r\nr = Redis(host='localhost', port=6379, db=0)\r\n\r\n\r\ndef log_post_request():\r\n request_data = {\r\n \"method\": request.method,\r\n \"url root\": request.url_root,\r\n \"user agent\": request.user_agent,\r\n \"scheme\": request.scheme,\r\n \"remote address\": request.remote_addr,\r\n \"headers\": request.headers,\r\n }\r\n if request.args:\r\n request_data[\"args\"] = request.args\r\n if request.form:\r\n request_data[\"data\"] = request.form\r\n else:\r\n request_data[\"data\"] = request.json\r\n if request.cookies:\r\n request_data[\"cookies\"] = request.cookies\r\n if request.files:\r\n request_data[\"image\"] = {\r\n \"filename\": request.files[\"Image\"].filename,\r\n \"content type\": request.files[\"Image\"].content_type,\r\n \"size\": len(request.files[\"Image\"].read()) // 1000,\r\n }\r\n app_logger.info(str(request_data))"}

|

| 101 |

{"file_name": "35b643c4-f349-475f-ad54-561e0f89c228.png", "code": "def log_get_request():\r\n request_data = {\r\n \"method\": request.method,\r\n \"url root\": request.url_root,\r\n \"user agent\": request.user_agent,\r\n \"scheme\": request.scheme,\r\n \"remote address\": request.remote_addr,\r\n \"headers\": request.headers,\r\n \"route\": request.endpoint,\r\n \"base url\": request.base_url,\r\n \"url\": request.url,\r\n }\r\n if request.args:\r\n request_data[\"args\"] = request.args\r\n if request.cookies:\r\n request_data[\"cookies\"] = request.cookies\r\n app_logger.info(str(request_data))\r\n\r\n\r\ndef get_response(response):\r\n response_data = {\r\n \"status\": response.status,\r\n \"status code\": response.status_code,\r\n \"response\": json.loads(response.data),\r\n }\r\n app_logger.info(str(response_data))"}

|

| 102 |

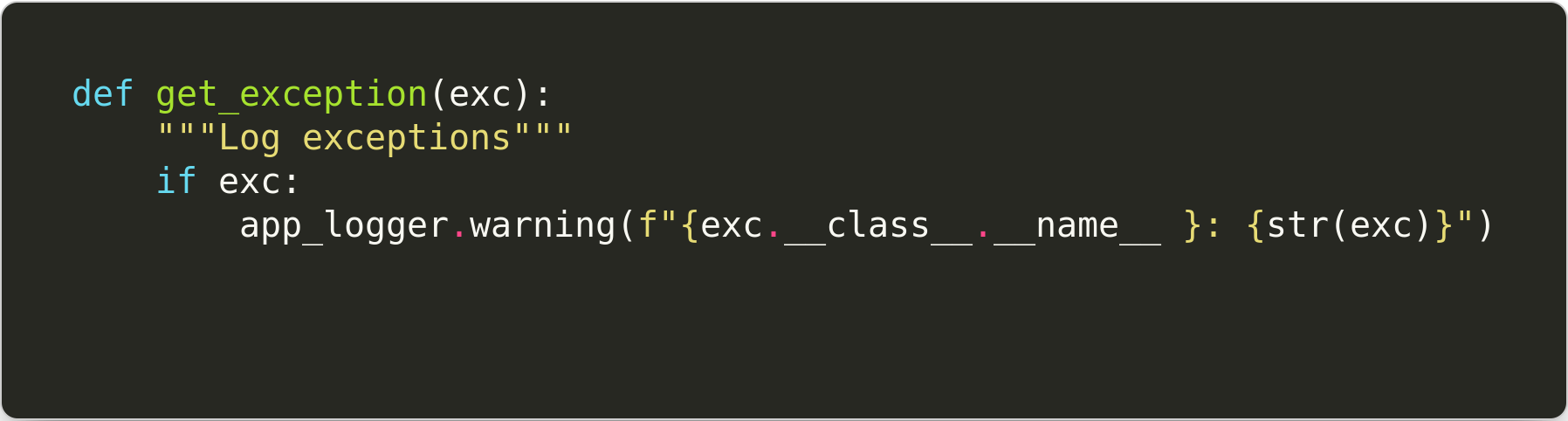

{"file_name": "24644e99-3f0c-4b4b-89f2-21e867c2bb93.png", "code": "def get_exception(exc):\r\n \"\"\"Log exceptions\"\"\"\r\n if exc:\r\n app_logger.warning(f\"{exc.__class__.__name__ }: {str(exc)}\")\r\n \r\n \r\ndef register_app_hooks(app: Flask):\r\n @app.before_first_request\r\n def application_startup():\r\n \"\"\"Log the beginning of the application.\"\"\"\r\n app_logger.info('Web app is up!')\r\n\r\n @app.before_request\r\n def log_request():\r\n \"\"\"Log the data held in the request\"\"\"\r\n if request.method in ['POST', 'PUT']:\r\n log_post_request()\r\n elif request.method in ['GET', 'DELETE']:\r\n log_get_request()\r\n\r\n @app.after_request\r\n def log_response(response):\r\n try:\r\n get_response(response)\r\n except Exception:\r\n pass\r\n finally:\r\n return response\r\n\r\n @app.teardown_request\r\n def log_exception(exc):\r\n get_exception(exc)"}

|

| 103 |

+

{"file_name": "2dda52c7-e651-42b3-a4d7-e5c851d069e8.png", "code": "def get_exception(exc):\r\n \"\"\"Log exceptions\"\"\"\r\n if exc:\r\n app_logger.warning(f\"{exc.__class__.__name__ }: {str(exc)}\")\r\n \r\n \r\ndef register_app_hooks(app: Flask):\r\n @app.before_first_request\r\n def application_startup():\r\n \"\"\"Log the beginning of the application.\"\"\"\r\n app_logger.info('Web app is up!')\r\n\r\n @app.before_request\r\n def log_request():\r\n \"\"\"Log the data held in the request\"\"\"\r\n if request.method in ['POST', 'PUT']:\r\n log_post_request()\r\n elif request.method in ['GET', 'DELETE']:\r\n log_get_request()\r\n\r\n @app.after_request\r\n def log_response(response):\r\n try:\r\n get_response(response)\r\n except Exception:\r\n pass\r\n finally:\r\n return response\r\n\r\n @app.teardown_request\r\n def log_exception(exc):\r\n get_exception(exc)"}

| 104 |

+

{"file_name": "32a12477-96aa-4e54-8481-4bf7bb14b5c5.png", "code": "def get_exception(exc):\r\n \"\"\"Log exceptions\"\"\"\r\n if exc:\r\n app_logger.warning(f\"{exc.__class__.__name__ }: {str(exc)}\")\r\n \r\n \r\ndef register_app_hooks(app: Flask):\r\n @app.before_first_request\r\n def application_startup():\r\n \"\"\"Log the beginning of the application.\"\"\"\r\n app_logger.info('Web app is up!')\r\n\r\n @app.before_request\r\n def log_request():\r\n \"\"\"Log the data held in the request\"\"\"\r\n if request.method in ['POST', 'PUT']:\r\n log_post_request()\r\n elif request.method in ['GET', 'DELETE']:\r\n log_get_request()\r\n\r\n @app.after_request\r\n def log_response(response):\r\n try:\r\n get_response(response)\r\n except Exception:\r\n pass\r\n finally:\r\n return response\r\n\r\n @app.teardown_request\r\n def log_exception(exc):\r\n get_exception(exc)"}

| 105 |

+

{"file_name": "2bcbbf14-535b-44f7-9181-361009094ded.png", "code": "def get_exception(exc):\r\n \"\"\"Log exceptions\"\"\"\r\n if exc:\r\n app_logger.warning(f\"{exc.__class__.__name__ }: {str(exc)}\")\r\n \r\n \r\ndef register_app_hooks(app: Flask):\r\n @app.before_first_request\r\n def application_startup():\r\n \"\"\"Log the beginning of the application.\"\"\"\r\n app_logger.info('Web app is up!')\r\n\r\n @app.before_request\r\n def log_request():\r\n \"\"\"Log the data held in the request\"\"\"\r\n if request.method in ['POST', 'PUT']:\r\n log_post_request()\r\n elif request.method in ['GET', 'DELETE']:\r\n log_get_request()\r\n\r\n @app.after_request\r\n def log_response(response):\r\n try:\r\n get_response(response)\r\n except Exception:\r\n pass\r\n finally:\r\n return response\r\n\r\n @app.teardown_request\r\n def log_exception(exc):\r\n get_exception(exc)"}

| 106 |

+

{"file_name": "498fd963-8652-49f9-b5e0-ce7d5b456779.png", "code": "\r\ndef get_exception(exc):\r\n \"\"\"Log exceptions\"\"\"\r\n if exc:\r\n app_logger.warning(f\"{exc.__class__.__name__ }: {str(exc)}\")\r\n"}

|

| 107 |

+

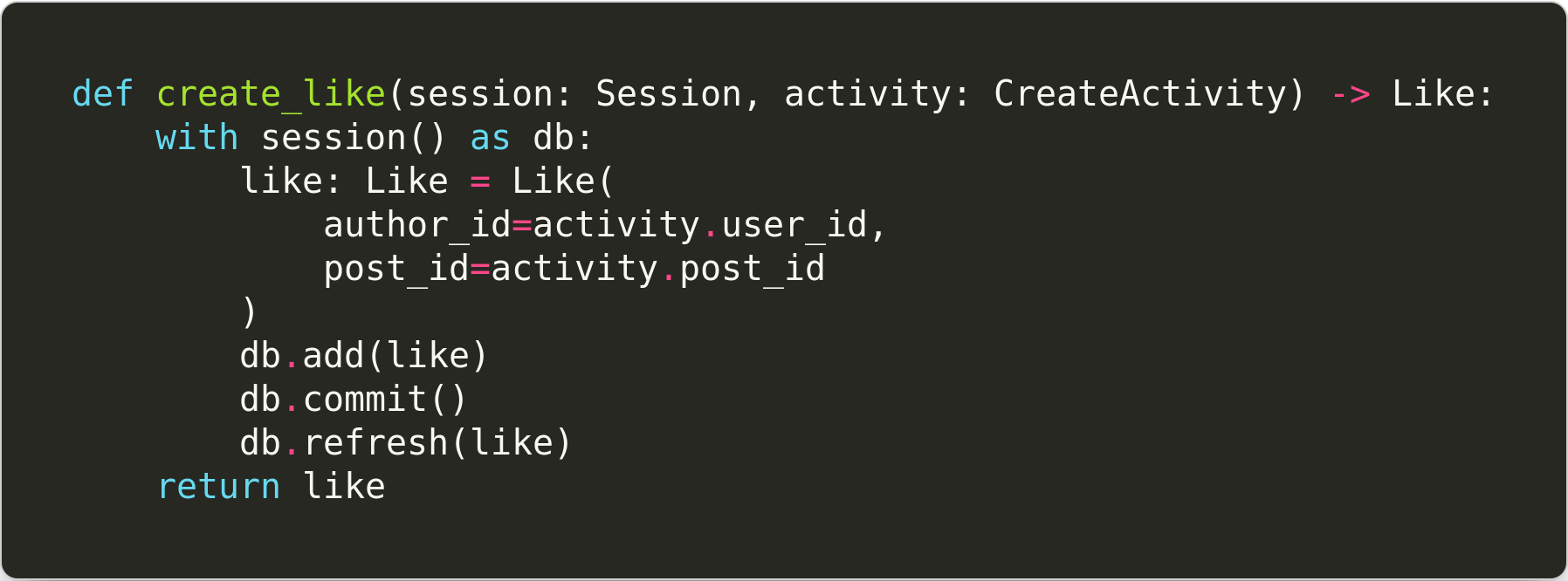

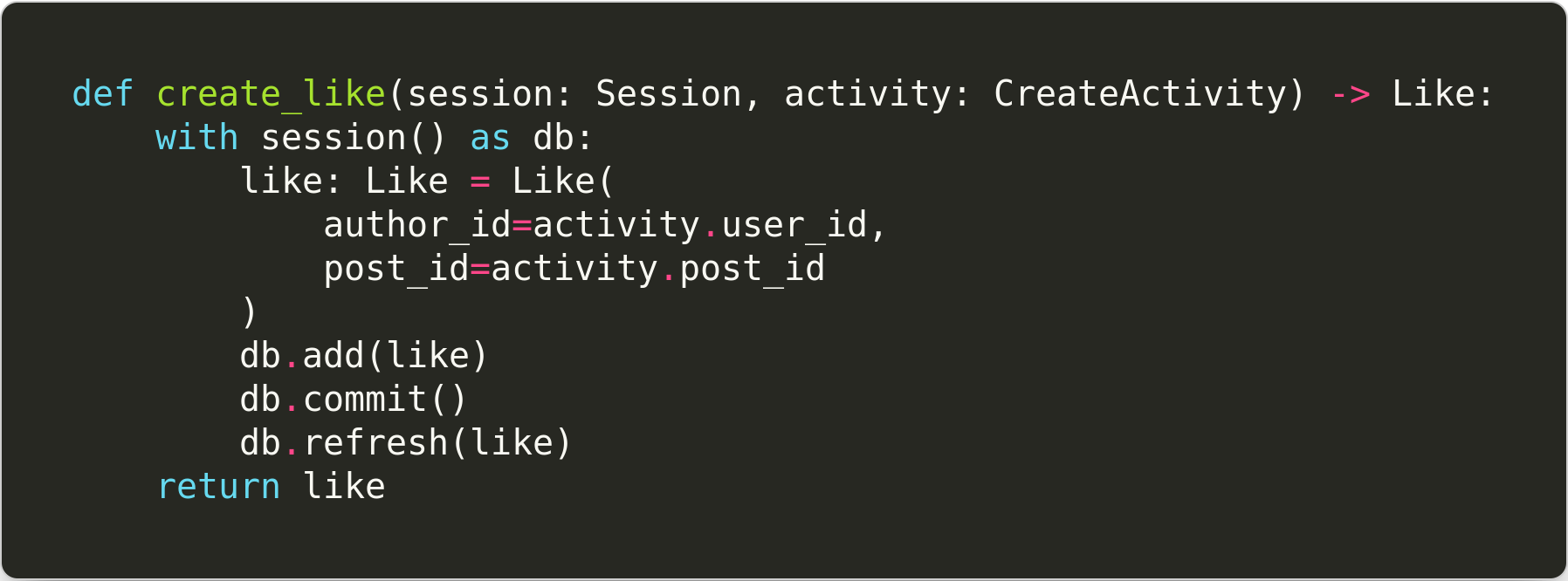

{"file_name": "4cf1dd3e-f26c-4d30-b57c-720a0eaba96c.png", "code": "\r\ndef create_like(session: Session, activity: CreateActivity) -> Like:\r\n with session() as db:\r\n like: Like = Like(\r\n author_id=activity.user_id,\r\n post_id=activity.post_id\r\n )\r\n db.add(like)\r\n db.commit()\r\n db.refresh(like)\r\n return like\r\n"}

| 108 |

+

{"file_name": "6a6b603e-5a0a-4d17-9ad3-3e4c90f3ea32.png", "code": "\r\ndef create_like(session: Session, activity: CreateActivity) -> Like:\r\n with session() as db:\r\n like: Like = Like(\r\n author_id=activity.user_id,\r\n post_id=activity.post_id\r\n )\r\n db.add(like)\r\n db.commit()\r\n db.refresh(like)\r\n return like\r\n"}

| 109 |

+

{"file_name": "8ec90e4a-0b0a-4575-b71d-62f4656ecdff.png", "code": "\r\n# @app.before_request\r\n # def rate_limit_request():\r\n # if request_is_rate_limited(r, 'admin', 10, timedelta(seconds=60)):\r\n # return {'Error': 'You have exceeded the allowed requests'}, HTTPStatus.TOO_MANY_REQUESTS\r\n"}

|

| 110 |

+

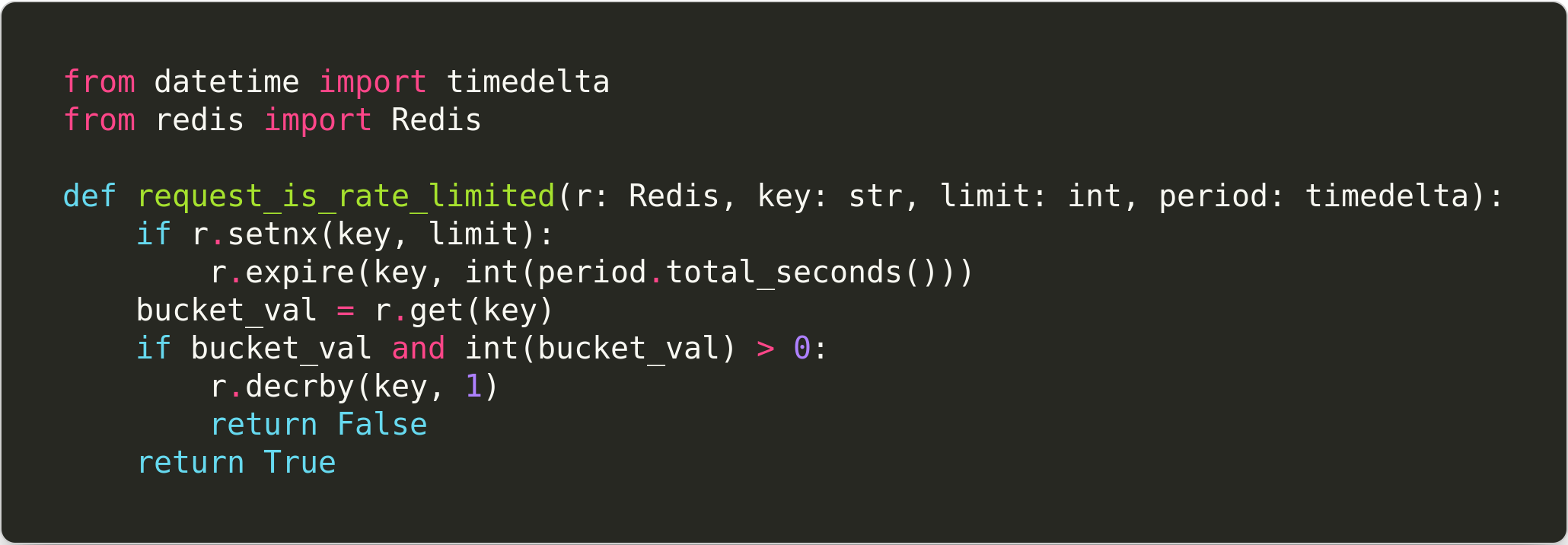

{"file_name": "bc0b95d5-4f66-4497-8b0e-c47a7b6207d4.png", "code": "\r\nfrom datetime import timedelta\r\nfrom redis import Redis\r\n\r\ndef request_is_rate_limited(r: Redis, key: str, limit: int, period: timedelta):\r\n if r.setnx(key, limit):\r\n r.expire(key, int(period.total_seconds()))\r\n bucket_val = r.get(key)\r\n if bucket_val and int(bucket_val) > 0:\r\n r.decrby(key, 1)\r\n return False\r\n return True\r\n"}

| 111 |

+

{"file_name": "0e21ed76-03de-4d81-a9d5-bed6133d480c.png", "code": "\r\nclass DirectoryIterator:\r\n def __init__(self, config: Config):\r\n self.config: Config = config\r\n self.queue: deque[str] = deque(self.config.path)\r\n\r\n def __iter__(self) -> Iterator:\r\n return self\r\n\r\n def __next__(self) -> list[str]:\r\n files: list[str] = list()\r\n if self.queue:\r\n for _ in range(len(self.queue)):\r\n root_dir: str = self.queue.popleft()\r\n if root_dir.split('/')[-1] in self.config.directories_ignore:\r\n continue\r\n entries: list[str] = listdir(root_dir)\r\n for entry in entries:\r\n entry_path: str = path.join(root_dir, entry)\r\n if path.isfile(entry_path):\r\n if (\r\n entry_path not in self.config.files_ignore\r\n and entry.split('.')[-1] == 'py'\r\n ):\r\n files.append(entry_path)\r\n elif entry not in self.config.directories_ignore:\r\n self.queue.append(entry_path)\r\n return files\r\n else:\r\n raise StopIteration()\r\n"}

|

| 112 |

+

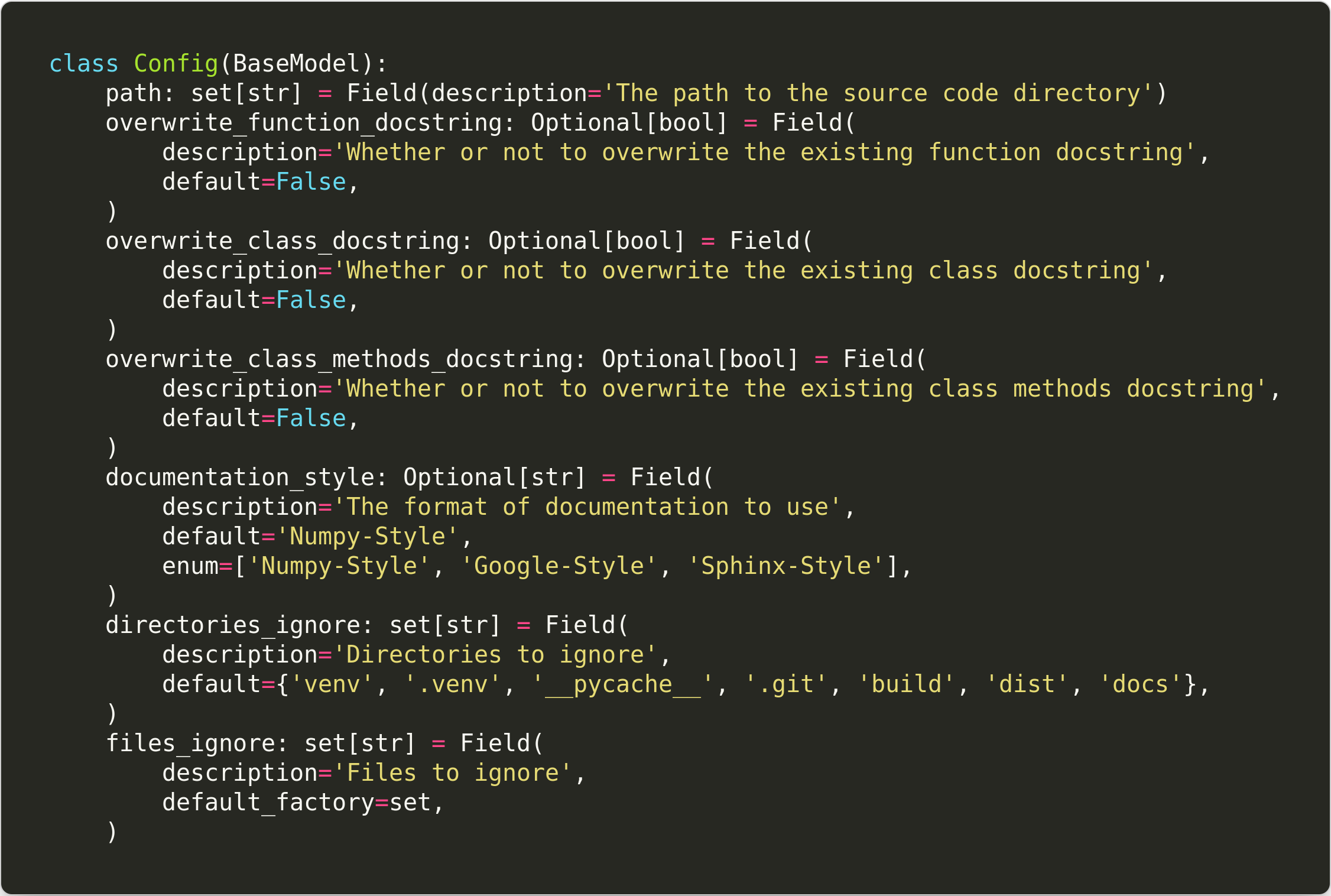

{"file_name": "46f5e525-39aa-4f73-be5b-7d473875d44f.png", "code": "\r\nclass Config(BaseModel):\r\n path: set[str] = Field(description='The path to the source code directory')\r\n overwrite_function_docstring: Optional[bool] = Field(\r\n description='Whether or not to overwrite the existing function docstring',\r\n default=False,\r\n )\r\n overwrite_class_docstring: Optional[bool] = Field(\r\n description='Whether or not to overwrite the existing class docstring',\r\n default=False,\r\n )\r\n overwrite_class_methods_docstring: Optional[bool] = Field(\r\n description='Whether or not to overwrite the existing class methods docstring',\r\n default=False,\r\n )\r\n documentation_style: Optional[str] = Field(\r\n description='The format of documentation to use',\r\n default='Numpy-Style',\r\n enum=['Numpy-Style', 'Google-Style', 'Sphinx-Style'],\r\n )\r\n directories_ignore: set[str] = Field(\r\n description='Directories to ignore',\r\n default={'venv', '.venv', '__pycache__', '.git', 'build', 'dist', 'docs'},\r\n )\r\n files_ignore: set[str] = Field(\r\n description='Files to ignore',\r\n default_factory=set,\r\n )\r\n"}

|

| 113 |

+

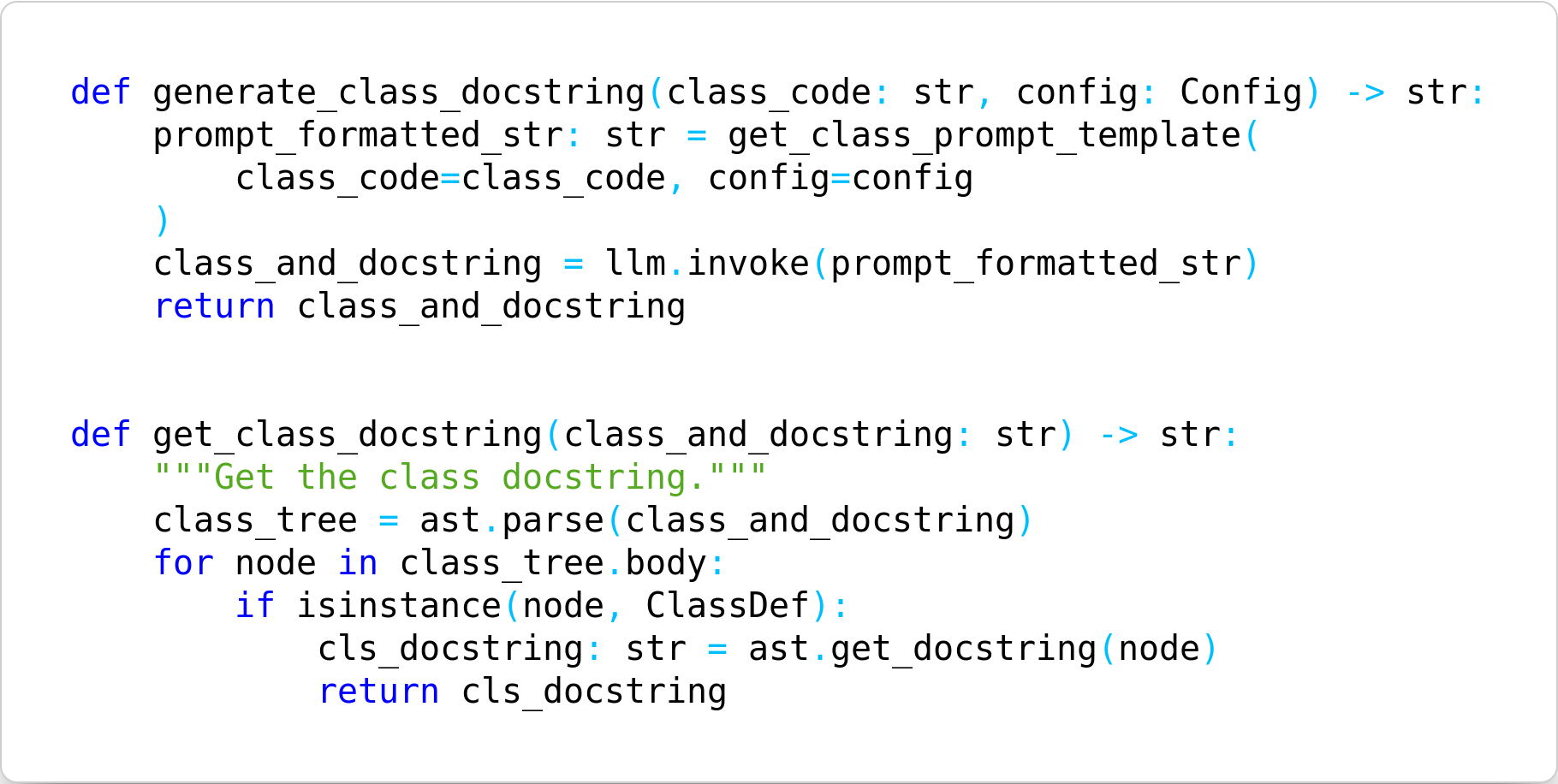

{"file_name": "bb433170-5ae3-4c9b-9d4a-da4a83806506.png", "code": "def generate_class_docstring(class_code: str, config: Config) -> str:\r\n prompt_formatted_str: str = get_class_prompt_template(\r\n class_code=class_code, config=config\r\n )\r\n class_and_docstring = llm.invoke(prompt_formatted_str)\r\n return class_and_docstring\r\n\r\n\r\ndef get_class_docstring(class_and_docstring: str) -> str:\r\n \"\"\"Get the class docstring.\"\"\"\r\n class_tree = ast.parse(class_and_docstring)\r\n for node in class_tree.body:\r\n if isinstance(node, ClassDef):\r\n cls_docstring: str = ast.get_docstring(node)\r\n return cls_docstring"}

|

| 114 |

+

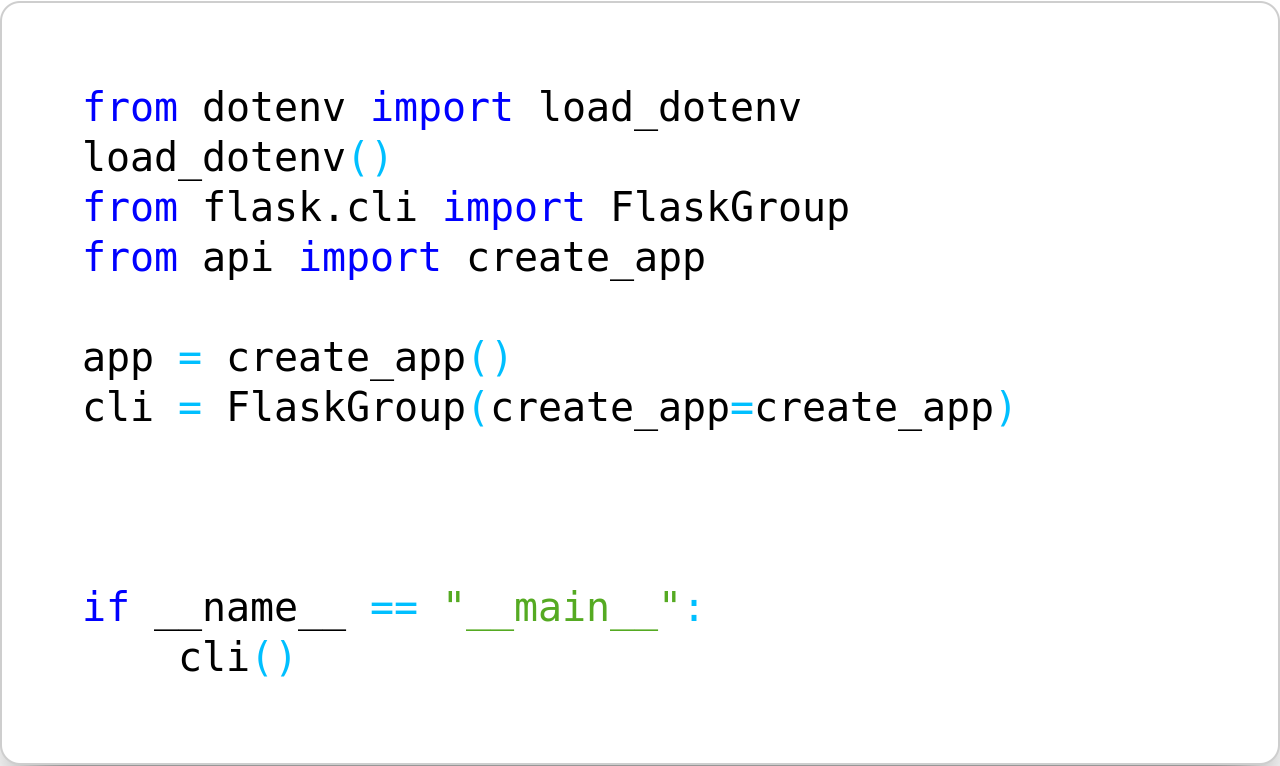

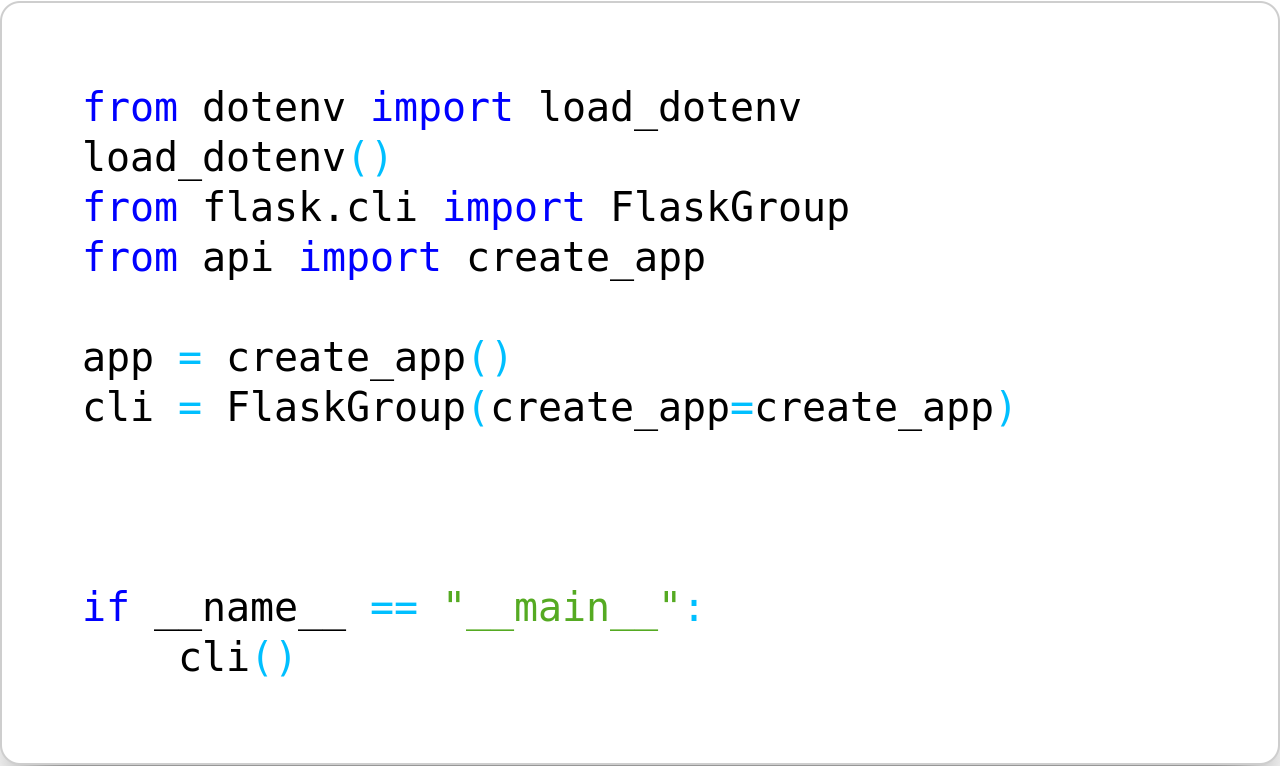

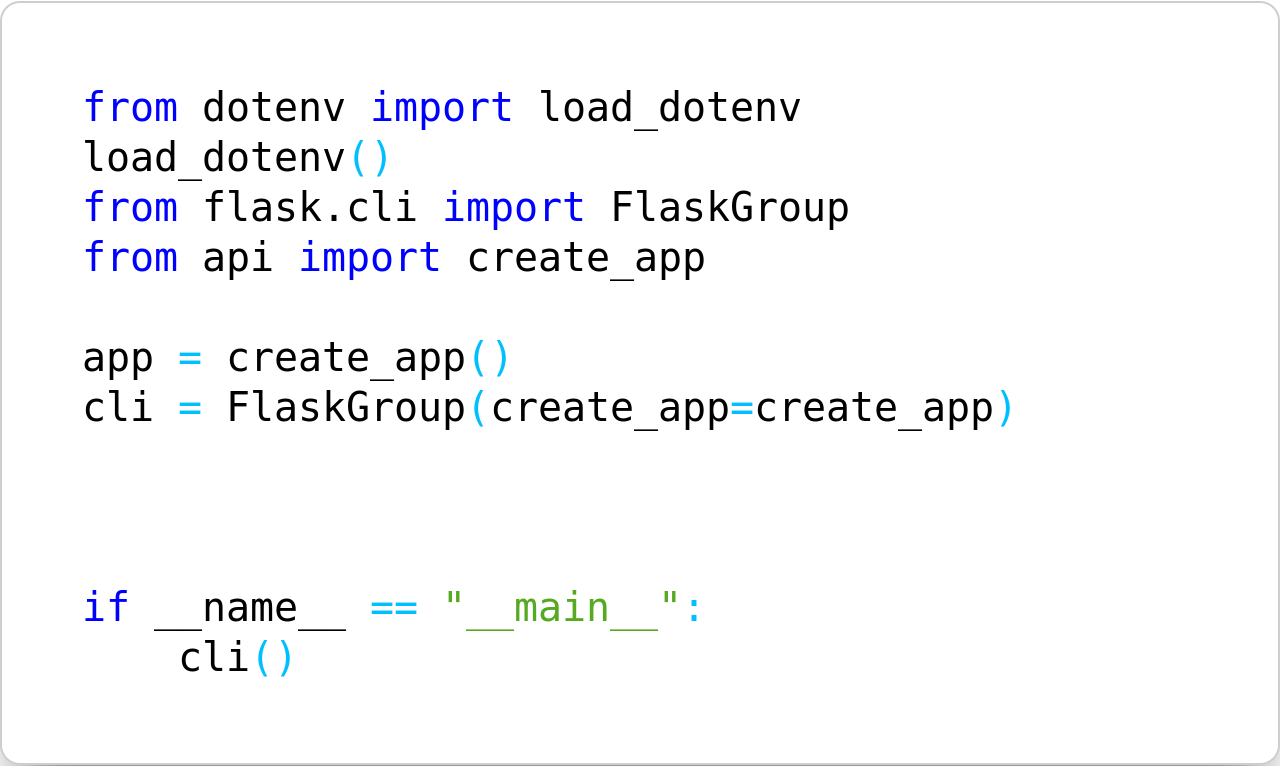

{"file_name": "f17d818f-a580-4389-9600-cf315f113fdc.png", "code": "from dotenv import load_dotenv\r\nload_dotenv()\r\nfrom flask.cli import FlaskGroup\r\nfrom api import create_app\r\n\r\napp = create_app()\r\ncli = FlaskGroup(create_app=create_app)\r\n\r\n\r\n\r\nif __name__ == \"__main__\":\r\n cli()"}

|

| 115 |

+

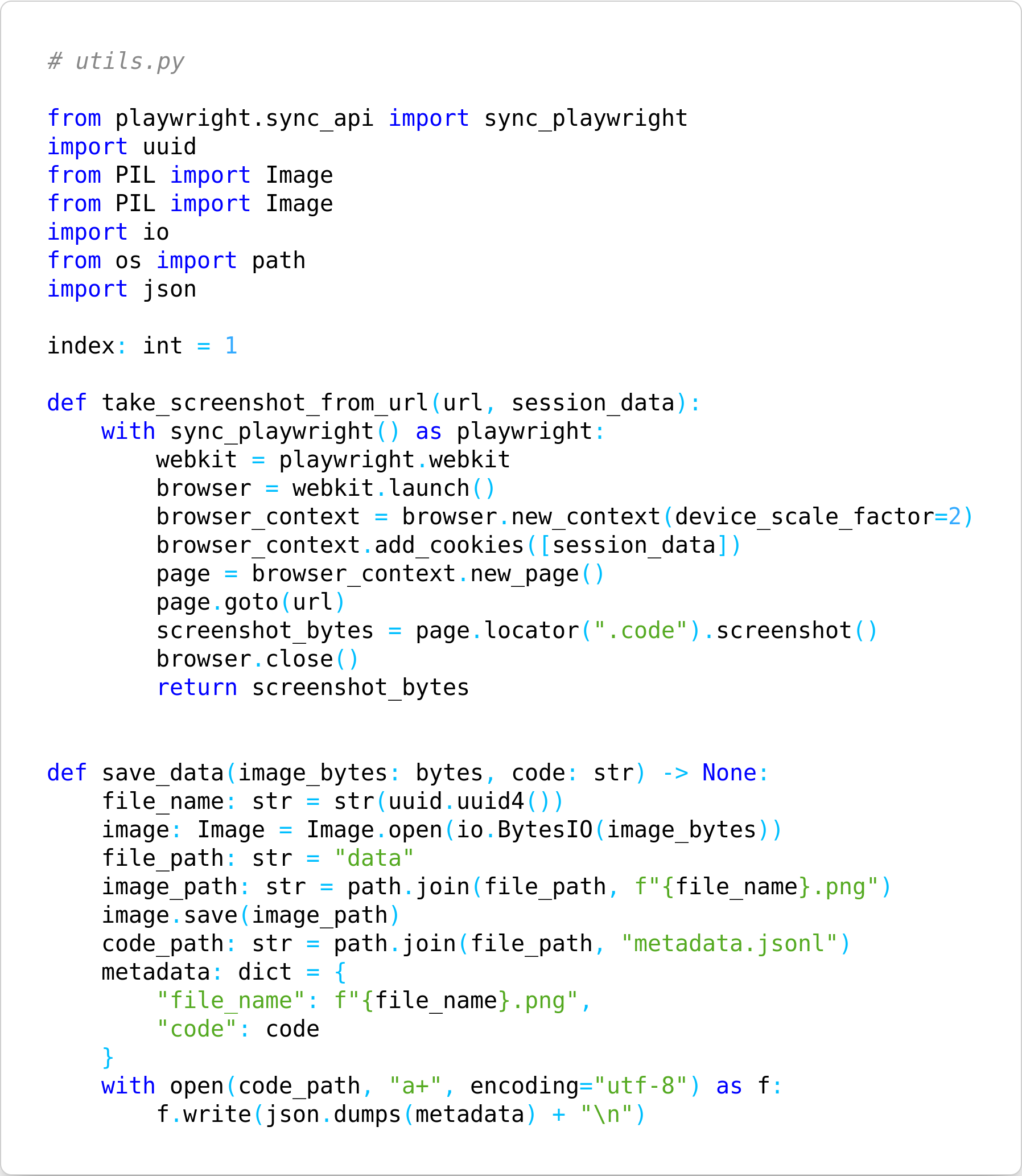

{"file_name": "18d90f28-2ca6-4d47-8f61-4ec1fe51663b.png", "code": "# utils.py\r\n\r\nfrom playwright.sync_api import sync_playwright\r\nimport uuid\r\nfrom PIL import Image\r\nfrom PIL import Image\r\nimport io\r\nfrom os import path\r\nimport json\r\n\r\nindex: int = 1\r\n\r\ndef take_screenshot_from_url(url, session_data):\r\n with sync_playwright() as playwright:\r\n webkit = playwright.webkit\r\n browser = webkit.launch()\r\n browser_context = browser.new_context(device_scale_factor=2)\r\n browser_context.add_cookies([session_data])\r\n page = browser_context.new_page()\r\n page.goto(url)\r\n screenshot_bytes = page.locator(\".code\").screenshot()\r\n browser.close()\r\n return screenshot_bytes\r\n \r\n \r\ndef save_data(image_bytes: bytes, code: str) -> None:\r\n file_name: str = str(uuid.uuid4())\r\n image: Image = Image.open(io.BytesIO(image_bytes))\r\n file_path: str = \"data\"\r\n image_path: str = path.join(file_path, f\"{file_name}.png\")\r\n image.save(image_path)\r\n code_path: str = path.join(file_path, \"metadata.jsonl\")\r\n metadata: dict = {\r\n \"file_name\": f\"{file_name}.png\",\r\n \"code\": code\r\n }\r\n with open(code_path, \"a+\", encoding=\"utf-8\") as f:\r\n f.write(json.dumps(metadata) + \"\\n\")"}

|

| 116 |

+

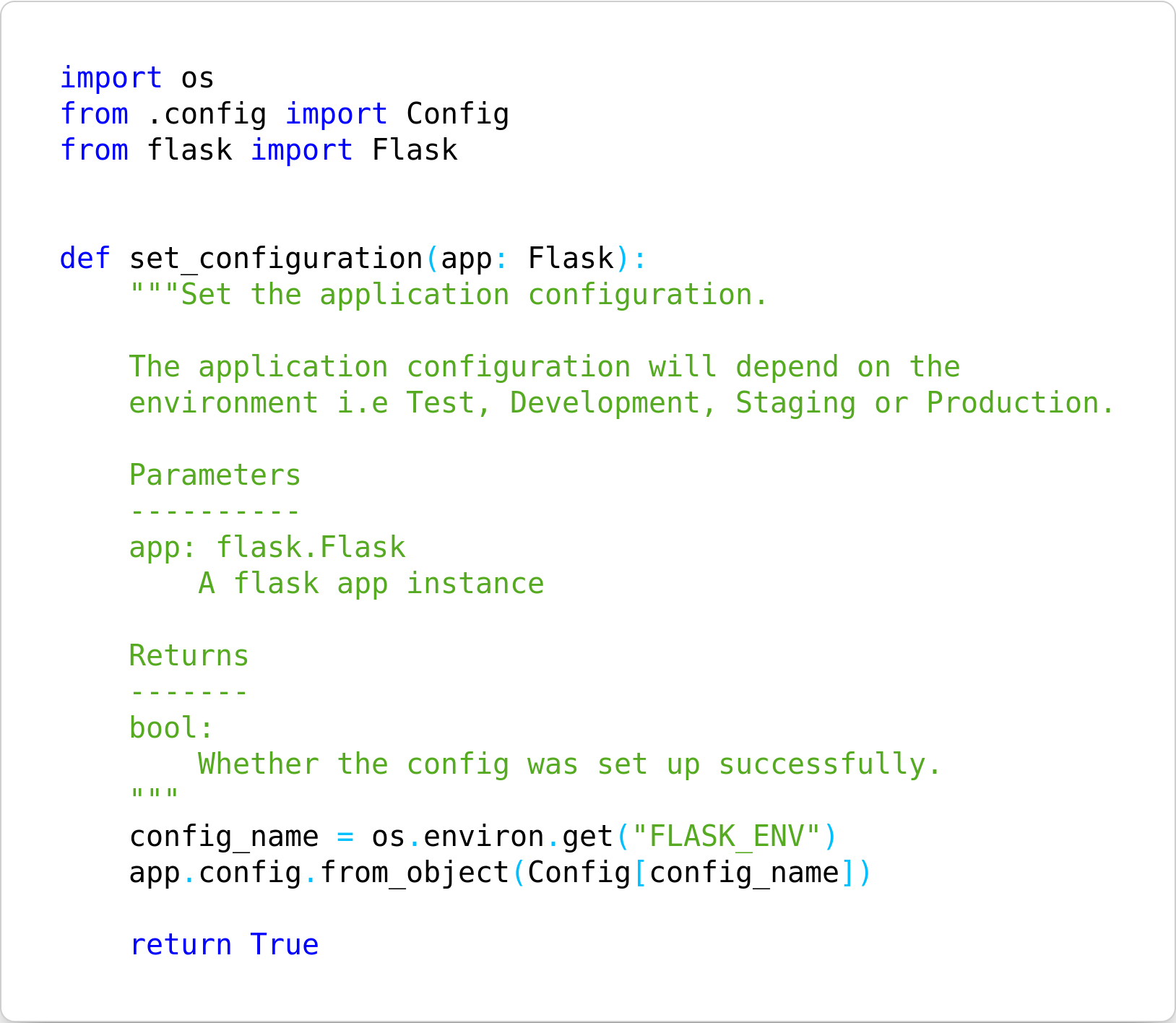

{"file_name": "a0bdfdce-1e37-4964-ba2d-b239aade67c2.png", "code": "import os\r\nfrom .config import Config\r\nfrom flask import Flask\r\n\r\n\r\ndef set_configuration(app: Flask):\r\n \"\"\"Set the application configuration.\r\n\r\n The application configuration will depend on the\r\n environment i.e Test, Development, Staging or Production.\r\n\r\n Parameters\r\n ----------\r\n app: flask.Flask\r\n A flask app instance\r\n\r\n Returns\r\n -------\r\n bool:\r\n Whether the config was set up successfully.\r\n \"\"\"\r\n config_name = os.environ.get(\"FLASK_ENV\")\r\n app.config.from_object(Config[config_name])\r\n\r\n return True"}

|

| 117 |

+

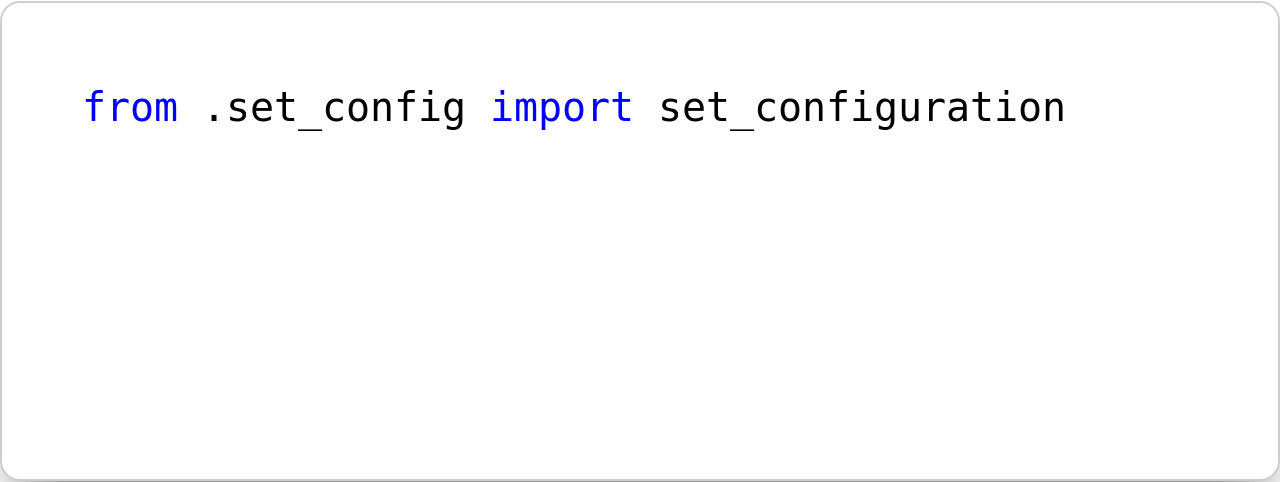

{"file_name": "ecea1dcb-6f05-4a49-b169-9ef0480fa543.png", "code": "from .set_config import set_configuration"}

| 118 |

+

{"file_name": "0da82c1e-1f60-4155-ba7a-ea6b1716706d.png", "code": "\"\"\"This module declares the app configuration.\r\n\r\nThe classes include:\r\n\r\nBaseConfig:\r\n Has all the configurations shared by all the environments.\r\n\r\n\"\"\"\r\nimport os\r\n\r\nfrom dotenv import load_dotenv\r\n\r\nload_dotenv()\r\n\r\n\r\nclass BaseConfig:\r\n \"\"\"Base configuration.\"\"\"\r\n\r\n DEBUG = True\r\n TESTING = False\r\n SECRET_KEY = os.environ.get(\r\n \"SECRET_KEY\", \"df0331cefc6c2b9a5d0208a726a5d1c0fd37324feba25506\"\r\n )\r\n\r\n\r\nclass DevelopmentConfig(BaseConfig):\r\n \"\"\"Development confuguration.\"\"\"\r\n\r\n DEBUG = True\r\n TESTING = False\r\n SECRET_KEY = os.environ.get(\r\n \"SECRET_KEY\", \"df0331cefc6c2b9a5d0208a726a5d1c0fd37324feba25506\"\r\n )\r\n\r\n\r\nclass TestingConfig(BaseConfig):\r\n \"\"\"Testing configuration.\"\"\"\r\n\r\n TESTING = True\r\n SECRET_KEY = os.environ.get(\"SECRET_KEY\", \"secret-key\")\r\n"}

|

| 119 |

+

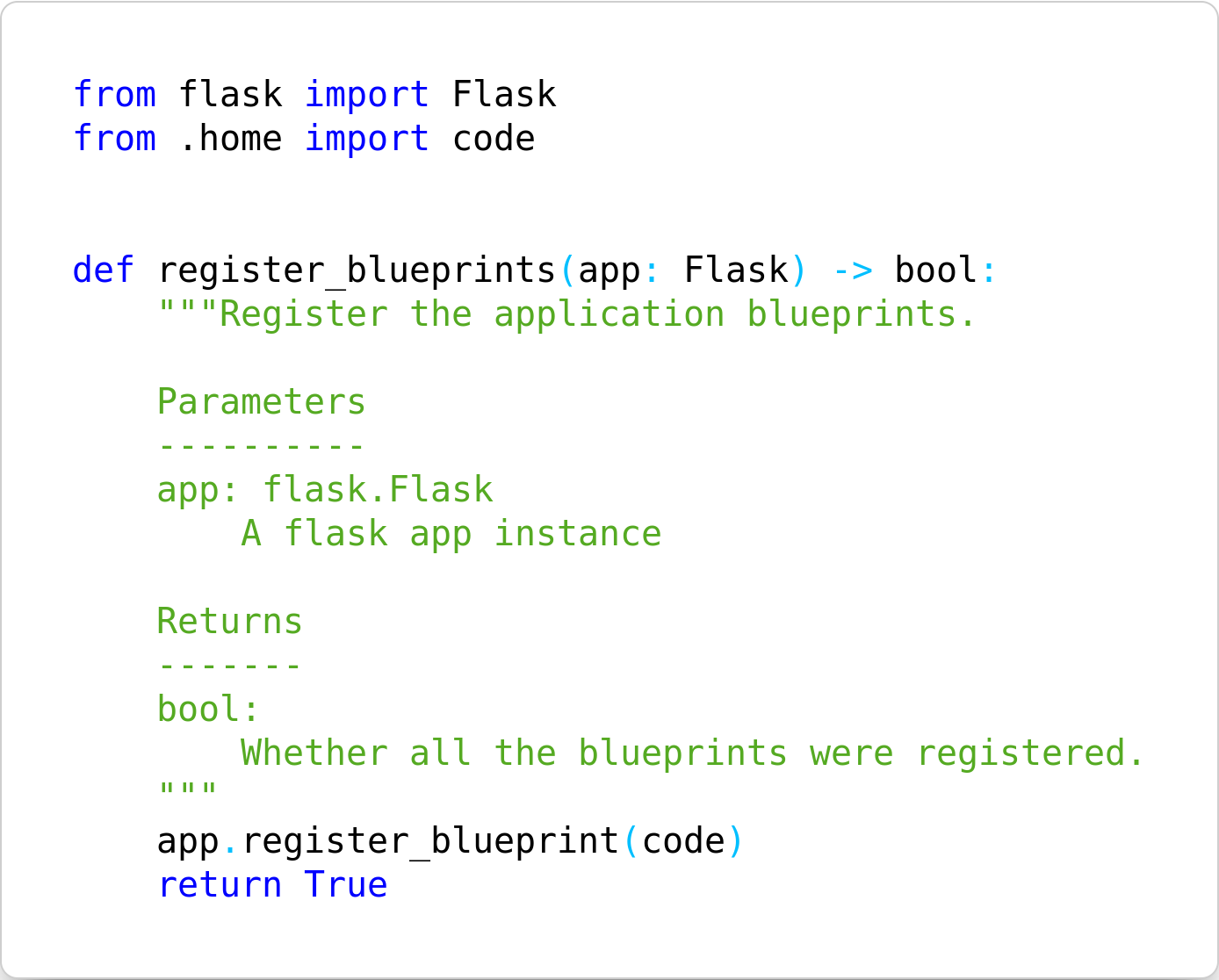

{"file_name": "3a6b3ea9-4d75-4c12-be83-ab785f6467c0.png", "code": "from flask import Flask\r\nfrom .home import code\r\n\r\n\r\ndef register_blueprints(app: Flask) -> bool:\r\n \"\"\"Register the application blueprints.\r\n\r\n Parameters\r\n ----------\r\n app: flask.Flask\r\n A flask app instance\r\n\r\n Returns\r\n -------\r\n bool:\r\n Whether all the blueprints were registered.\r\n \"\"\"\r\n app.register_blueprint(code)\r\n return True"}

| 120 |

+

{"file_name": "10ad7fd5-f8ed-4ce6-b2fc-2ee0ff2ec2da.png", "code": "from .register_blueprints import register_blueprints"}

|

| 121 |

+

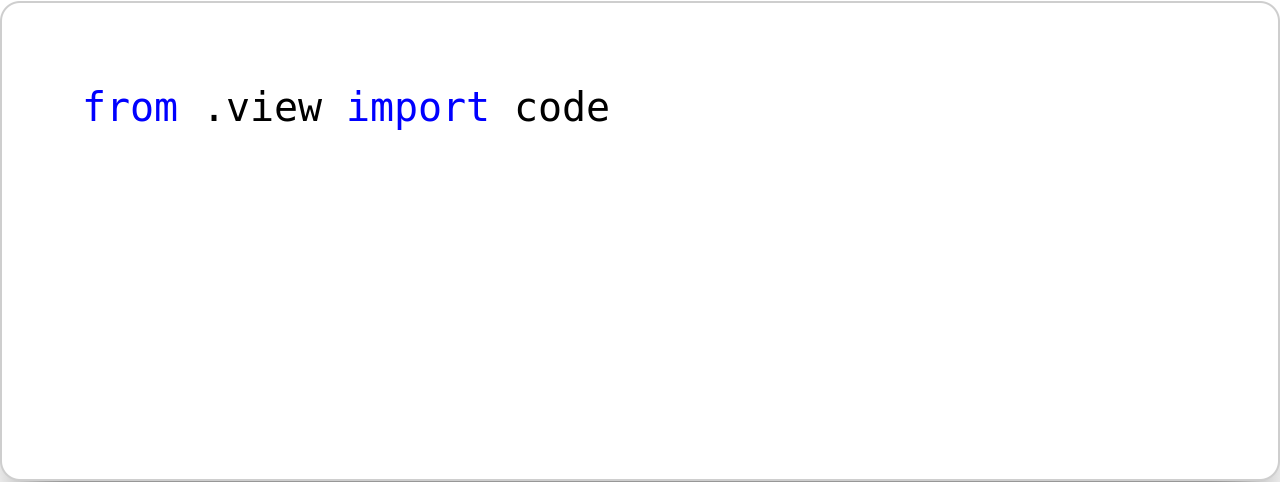

{"file_name": "d0fb8690-f472-45e2-9147-e9c944d9104f.png", "code": "from .view import code"}

|

| 122 |

+

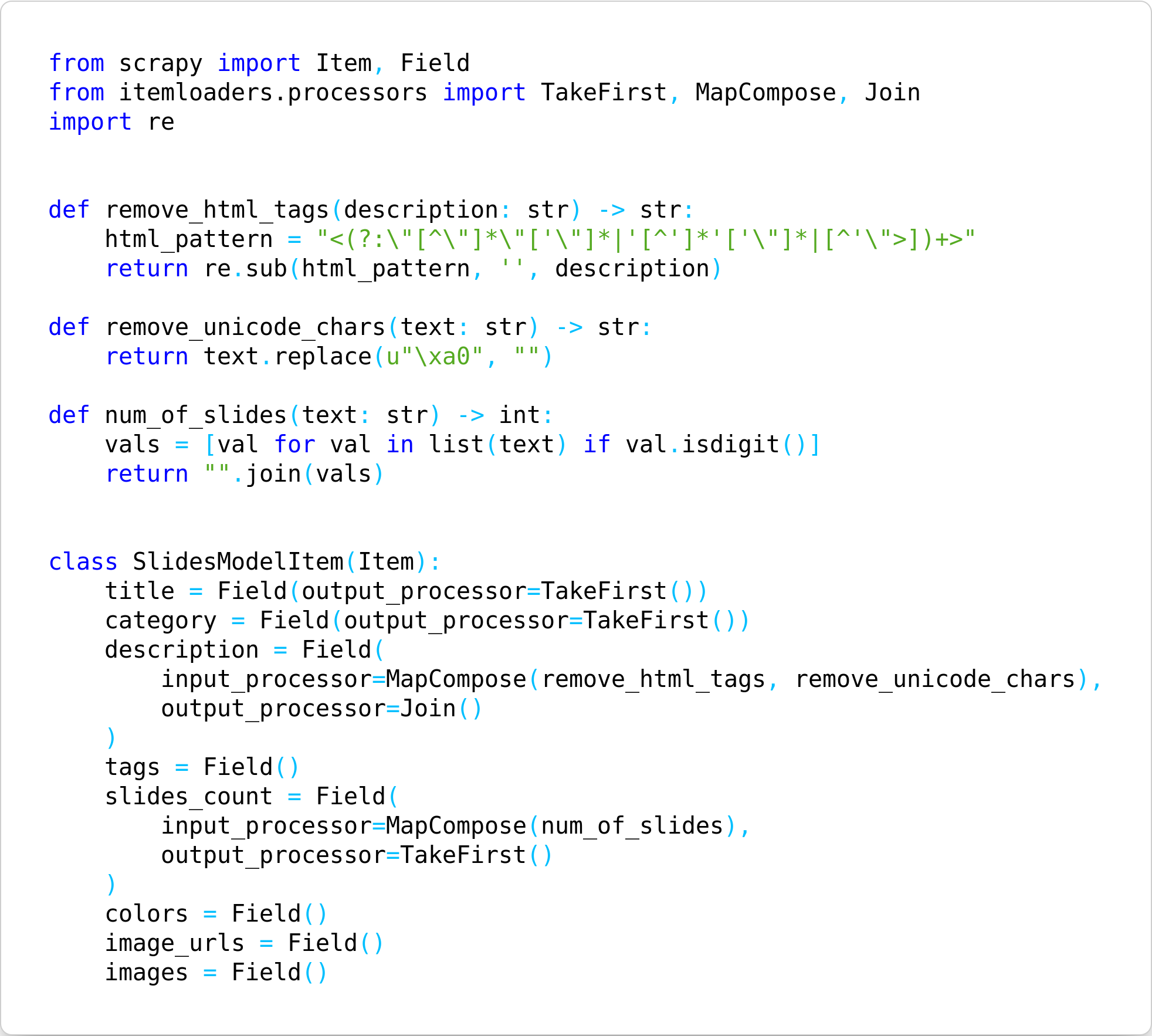

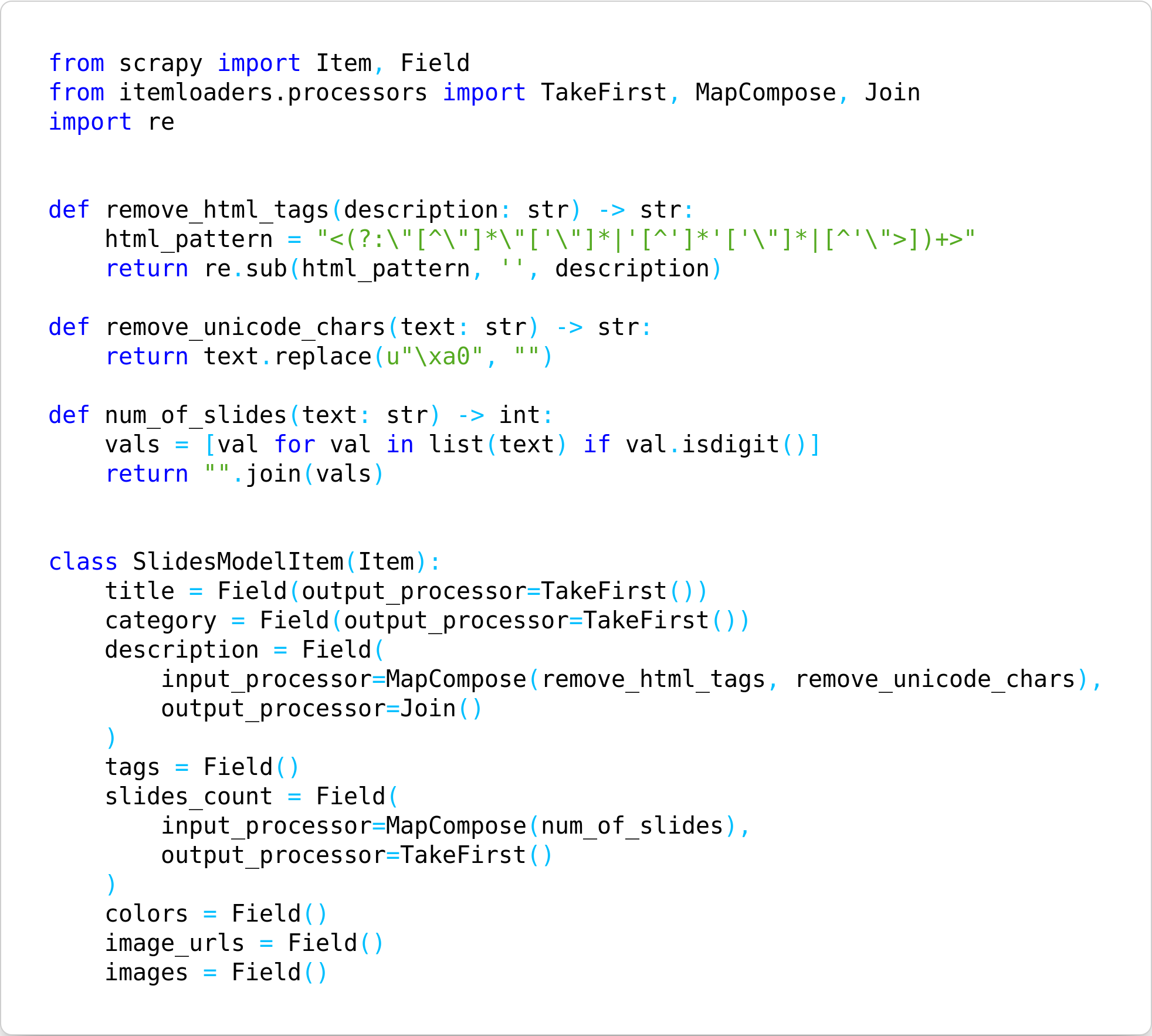

{"file_name": "0cc0d307-bdc2-4968-a76f-2abad53632d5.png", "code": "from scrapy import Item, Field\r\nfrom itemloaders.processors import TakeFirst, MapCompose, Join\r\nimport re\r\n\r\n\r\ndef remove_html_tags(description: str) -> str:\r\n html_pattern = \"<(?:\\\"[^\\\"]*\\\"['\\\"]*|'[^']*'['\\\"]*|[^'\\\">])+>\" \r\n return re.sub(html_pattern, '', description)\r\n\r\ndef remove_unicode_chars(text: str) -> str:\r\n return text.replace(u\"\\xa0\", \"\")\r\n\r\ndef num_of_slides(text: str) -> int:\r\n vals = [val for val in list(text) if val.isdigit()]\r\n return \"\".join(vals)\r\n\r\n\r\nclass SlidesModelItem(Item):\r\n title = Field(output_processor=TakeFirst())\r\n category = Field(output_processor=TakeFirst())\r\n description = Field(\r\n input_processor=MapCompose(remove_html_tags, remove_unicode_chars),\r\n output_processor=Join()\r\n )\r\n tags = Field()\r\n slides_count = Field(\r\n input_processor=MapCompose(num_of_slides),\r\n output_processor=TakeFirst()\r\n )\r\n colors = Field()\r\n image_urls = Field()\r\n images = Field()\r\n"}

|

| 123 |

+

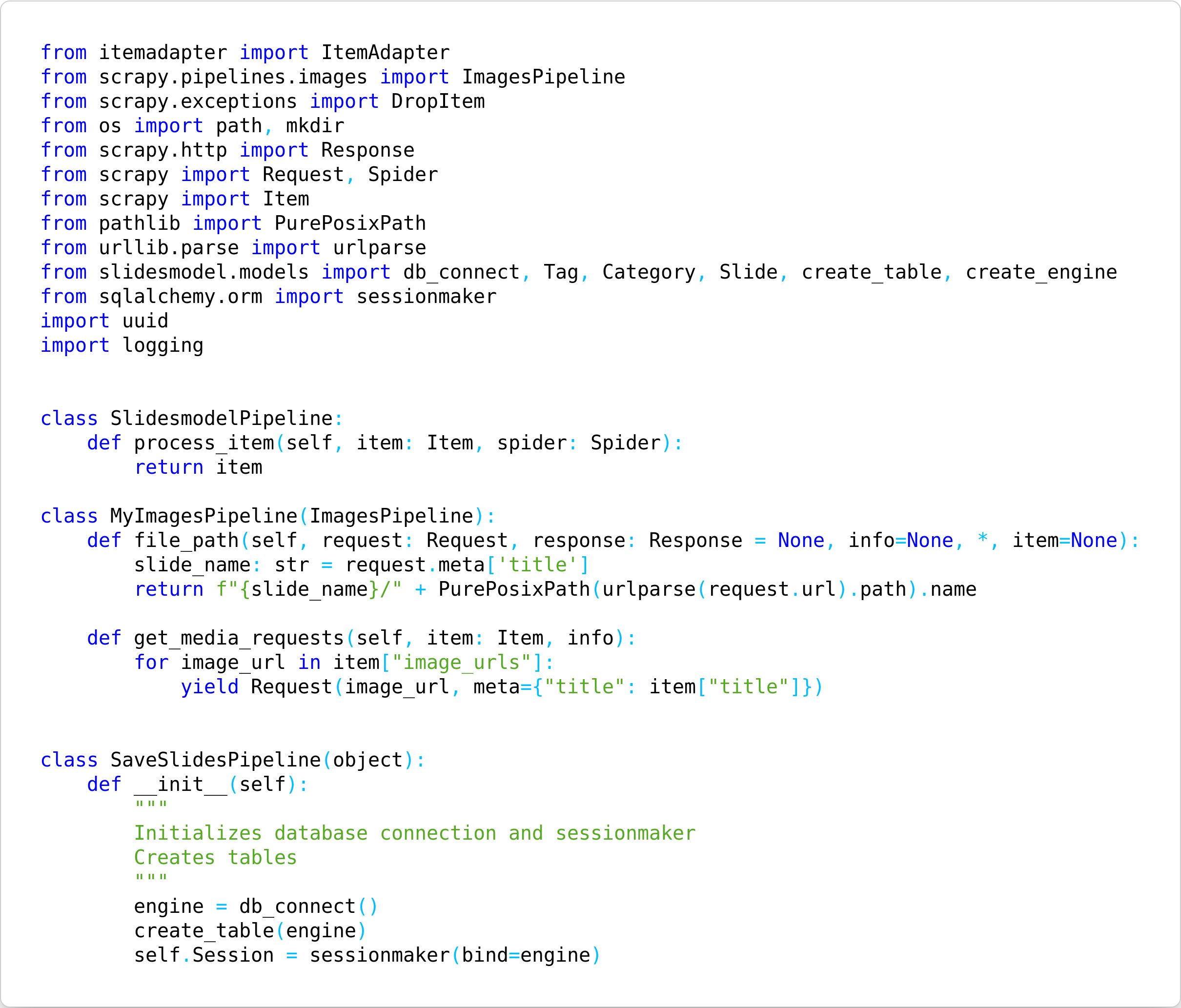

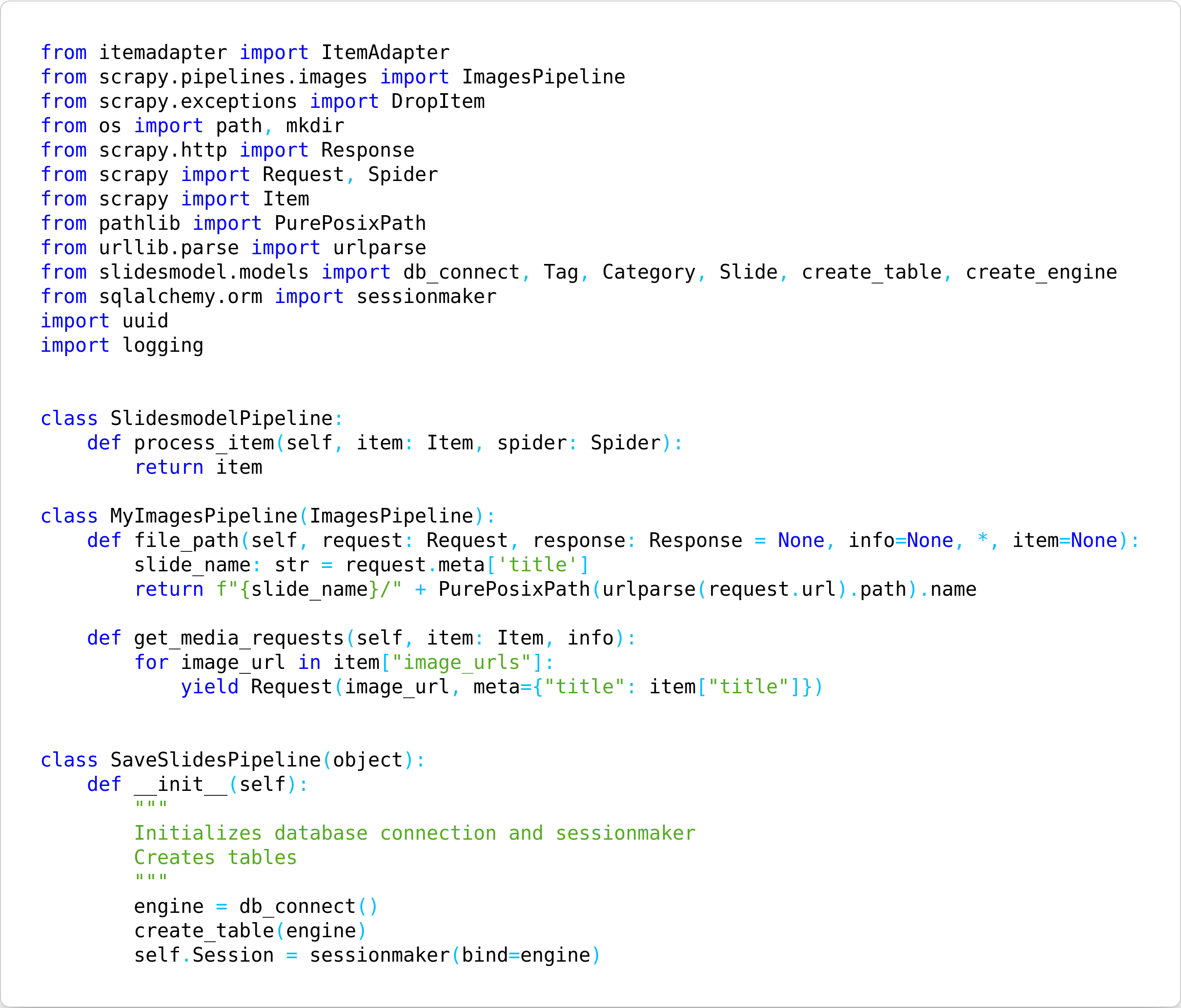

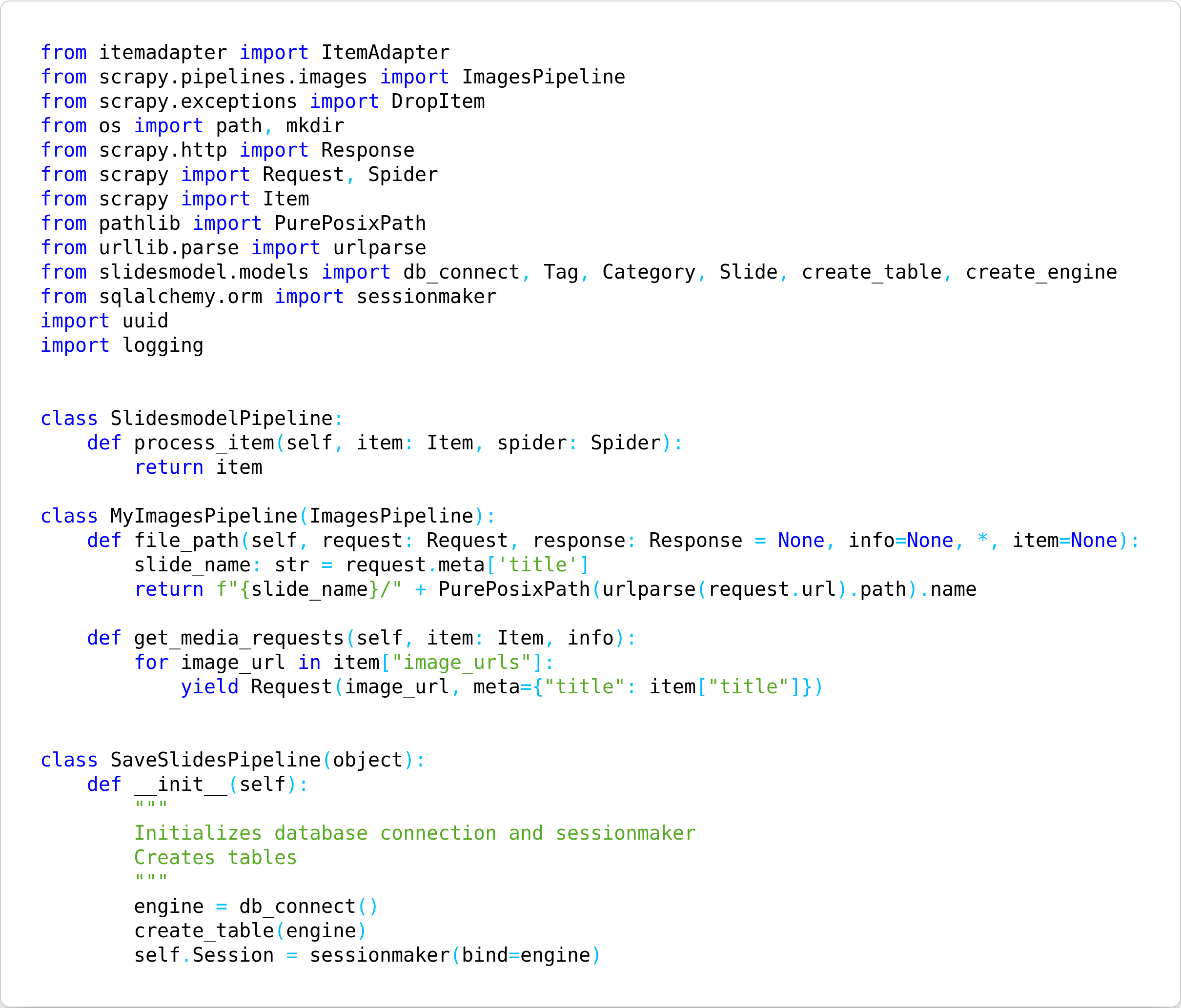

{"file_name": "9a41f973-ab10-4193-a35a-766867ecf2e9.png", "code": "from itemadapter import ItemAdapter\r\nfrom scrapy.pipelines.images import ImagesPipeline\r\nfrom scrapy.exceptions import DropItem\r\nfrom os import path, mkdir\r\nfrom scrapy.http import Response\r\nfrom scrapy import Request, Spider\r\nfrom scrapy import Item\r\nfrom pathlib import PurePosixPath\r\nfrom urllib.parse import urlparse\r\nfrom slidesmodel.models import db_connect, Tag, Category, Slide, create_table, create_engine\r\nfrom sqlalchemy.orm import sessionmaker\r\nimport uuid\r\nimport logging\r\n\r\n\r\nclass SlidesmodelPipeline:\r\n def process_item(self, item: Item, spider: Spider):\r\n return item\r\n \r\nclass MyImagesPipeline(ImagesPipeline):\r\n def file_path(self, request: Request, response: Response = None, info=None, *, item=None):\r\n slide_name: str = request.meta['title']\r\n return f\"{slide_name}/\" + PurePosixPath(urlparse(request.url).path).name\r\n \r\n def get_media_requests(self, item: Item, info):\r\n for image_url in item[\"image_urls\"]:\r\n yield Request(image_url, meta={\"title\": item[\"title\"]})\r\n \r\n\r\nclass SaveSlidesPipeline(object):\r\n def __init__(self):\r\n \"\"\"\r\n Initializes database connection and sessionmaker\r\n Creates tables\r\n \"\"\"\r\n engine = db_connect()\r\n create_table(engine)\r\n self.Session = sessionmaker(bind=engine)\r\n\r\n\r\n"}

| 124 |

+

{"file_name": "d6d231c0-9c0b-4dbc-8ecf-0de6f70c071c.png", "code": "from scrapy import Item, Field\r\nfrom itemloaders.processors import TakeFirst, MapCompose, Join\r\nimport re\r\n\r\n\r\ndef remove_html_tags(description: str) -> str:\r\n html_pattern = \"<(?:\\\"[^\\\"]*\\\"['\\\"]*|'[^']*'['\\\"]*|[^'\\\">])+>\" \r\n return re.sub(html_pattern, '', description)\r\n\r\ndef remove_unicode_chars(text: str) -> str:\r\n return text.replace(u\"\\xa0\", \"\")\r\n\r\ndef num_of_slides(text: str) -> int:\r\n vals = [val for val in list(text) if val.isdigit()]\r\n return \"\".join(vals)\r\n\r\n\r\nclass SlidesModelItem(Item):\r\n title = Field(output_processor=TakeFirst())\r\n category = Field(output_processor=TakeFirst())\r\n description = Field(\r\n input_processor=MapCompose(remove_html_tags, remove_unicode_chars),\r\n output_processor=Join()\r\n )\r\n tags = Field()\r\n slides_count = Field(\r\n input_processor=MapCompose(num_of_slides),\r\n output_processor=TakeFirst()\r\n )\r\n colors = Field()\r\n image_urls = Field()\r\n images = Field()\r\n"}

| 125 |

+

{"file_name": "33520d1f-4cea-4f6b-9b2f-5fa8c10a7f74.png", "code": "from itemadapter import ItemAdapter\r\nfrom scrapy.pipelines.images import ImagesPipeline\r\nfrom scrapy.exceptions import DropItem\r\nfrom os import path, mkdir\r\nfrom scrapy.http import Response\r\nfrom scrapy import Request, Spider\r\nfrom scrapy import Item\r\nfrom pathlib import PurePosixPath\r\nfrom urllib.parse import urlparse\r\nfrom slidesmodel.models import db_connect, Tag, Category, Slide, create_table, create_engine\r\nfrom sqlalchemy.orm import sessionmaker\r\nimport uuid\r\nimport logging\r\n\r\n\r\nclass SlidesmodelPipeline:\r\n def process_item(self, item: Item, spider: Spider):\r\n return item\r\n \r\nclass MyImagesPipeline(ImagesPipeline):\r\n def file_path(self, request: Request, response: Response = None, info=None, *, item=None):\r\n slide_name: str = request.meta['title']\r\n return f\"{slide_name}/\" + PurePosixPath(urlparse(request.url).path).name\r\n \r\n def get_media_requests(self, item: Item, info):\r\n for image_url in item[\"image_urls\"]:\r\n yield Request(image_url, meta={\"title\": item[\"title\"]})\r\n \r\n\r\nclass SaveSlidesPipeline(object):\r\n def __init__(self):\r\n \"\"\"\r\n Initializes database connection and sessionmaker\r\n Creates tables\r\n \"\"\"\r\n engine = db_connect()\r\n create_table(engine)\r\n self.Session = sessionmaker(bind=engine)\r\n\r\n\r\n"}

| 126 |

+

{"file_name": "2c1966b4-6884-4f01-bfa1-c3315d9e602a.png", "code": "from scrapy import Item, Field\r\nfrom itemloaders.processors import TakeFirst, MapCompose, Join\r\nimport re\r\n\r\n\r\ndef remove_html_tags(description: str) -> str:\r\n html_pattern = \"<(?:\\\"[^\\\"]*\\\"['\\\"]*|'[^']*'['\\\"]*|[^'\\\">])+>\" \r\n return re.sub(html_pattern, '', description)\r\n\r\ndef remove_unicode_chars(text: str) -> str:\r\n return text.replace(u\"\\xa0\", \"\")\r\n\r\ndef num_of_slides(text: str) -> int:\r\n vals = [val for val in list(text) if val.isdigit()]\r\n return \"\".join(vals)\r\n\r\n\r\nclass SlidesModelItem(Item):\r\n title = Field(output_processor=TakeFirst())\r\n category = Field(output_processor=TakeFirst())\r\n description = Field(\r\n input_processor=MapCompose(remove_html_tags, remove_unicode_chars),\r\n output_processor=Join()\r\n )\r\n tags = Field()\r\n slides_count = Field(\r\n input_processor=MapCompose(num_of_slides),\r\n output_processor=TakeFirst()\r\n )\r\n colors = Field()\r\n image_urls = Field()\r\n images = Field()\r\n"}

| 127 |

+

{"file_name": "40f0fb53-667e-4862-afb1-9b5dec3d3e8f.png", "code": "from itemadapter import ItemAdapter\r\nfrom scrapy.pipelines.images import ImagesPipeline\r\nfrom scrapy.exceptions import DropItem\r\nfrom os import path, mkdir\r\nfrom scrapy.http import Response\r\nfrom scrapy import Request, Spider\r\nfrom scrapy import Item\r\nfrom pathlib import PurePosixPath\r\nfrom urllib.parse import urlparse\r\nfrom slidesmodel.models import db_connect, Tag, Category, Slide, create_table, create_engine\r\nfrom sqlalchemy.orm import sessionmaker\r\nimport uuid\r\nimport logging\r\n\r\n\r\nclass SlidesmodelPipeline:\r\n def process_item(self, item: Item, spider: Spider):\r\n return item\r\n \r\nclass MyImagesPipeline(ImagesPipeline):\r\n def file_path(self, request: Request, response: Response = None, info=None, *, item=None):\r\n slide_name: str = request.meta['title']\r\n return f\"{slide_name}/\" + PurePosixPath(urlparse(request.url).path).name\r\n \r\n def get_media_requests(self, item: Item, info):\r\n for image_url in item[\"image_urls\"]:\r\n yield Request(image_url, meta={\"title\": item[\"title\"]})\r\n \r\n\r\nclass SaveSlidesPipeline(object):\r\n def __init__(self):\r\n \"\"\"\r\n Initializes database connection and sessionmaker\r\n Creates tables\r\n \"\"\"\r\n engine = db_connect()\r\n create_table(engine)\r\n self.Session = sessionmaker(bind=engine)\r\n\r\n\r\n"}

|

| 128 |

+

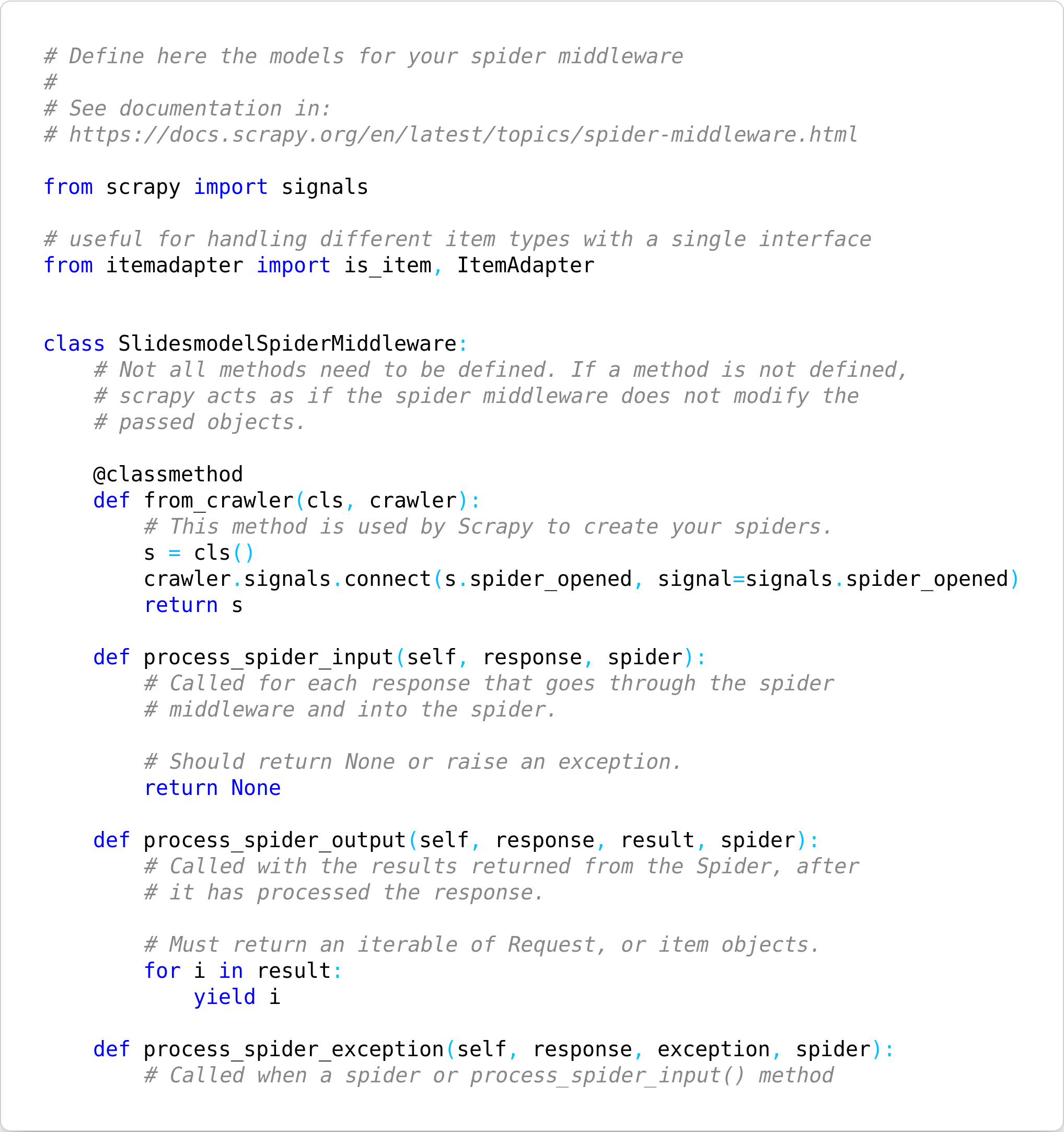

{"file_name": "38eee642-aaba-4c8f-9deb-dcc38b93aa9e.png", "code": "# Define here the models for your spider middleware\r\n#\r\n# See documentation in:\r\n# https://docs.scrapy.org/en/latest/topics/spider-middleware.html\r\n\r\nfrom scrapy import signals\r\n\r\n# useful for handling different item types with a single interface\r\nfrom itemadapter import is_item, ItemAdapter\r\n\r\n\r\nclass SlidesmodelSpiderMiddleware:\r\n # Not all methods need to be defined. If a method is not defined,\r\n # scrapy acts as if the spider middleware does not modify the\r\n # passed objects.\r\n\r\n @classmethod\r\n def from_crawler(cls, crawler):\r\n # This method is used by Scrapy to create your spiders.\r\n s = cls()\r\n crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)\r\n return s\r\n\r\n def process_spider_input(self, response, spider):\r\n # Called for each response that goes through the spider\r\n # middleware and into the spider.\r\n\r\n # Should return None or raise an exception.\r\n return None\r\n\r\n def process_spider_output(self, response, result, spider):\r\n # Called with the results returned from the Spider, after\r\n # it has processed the response.\r\n\r\n # Must return an iterable of Request, or item objects.\r\n for i in result:\r\n yield i\r\n\r\n def process_spider_exception(self, response, exception, spider):\r\n # Called when a spider or process_spider_input() method\r\n"}

|

| 129 |

+

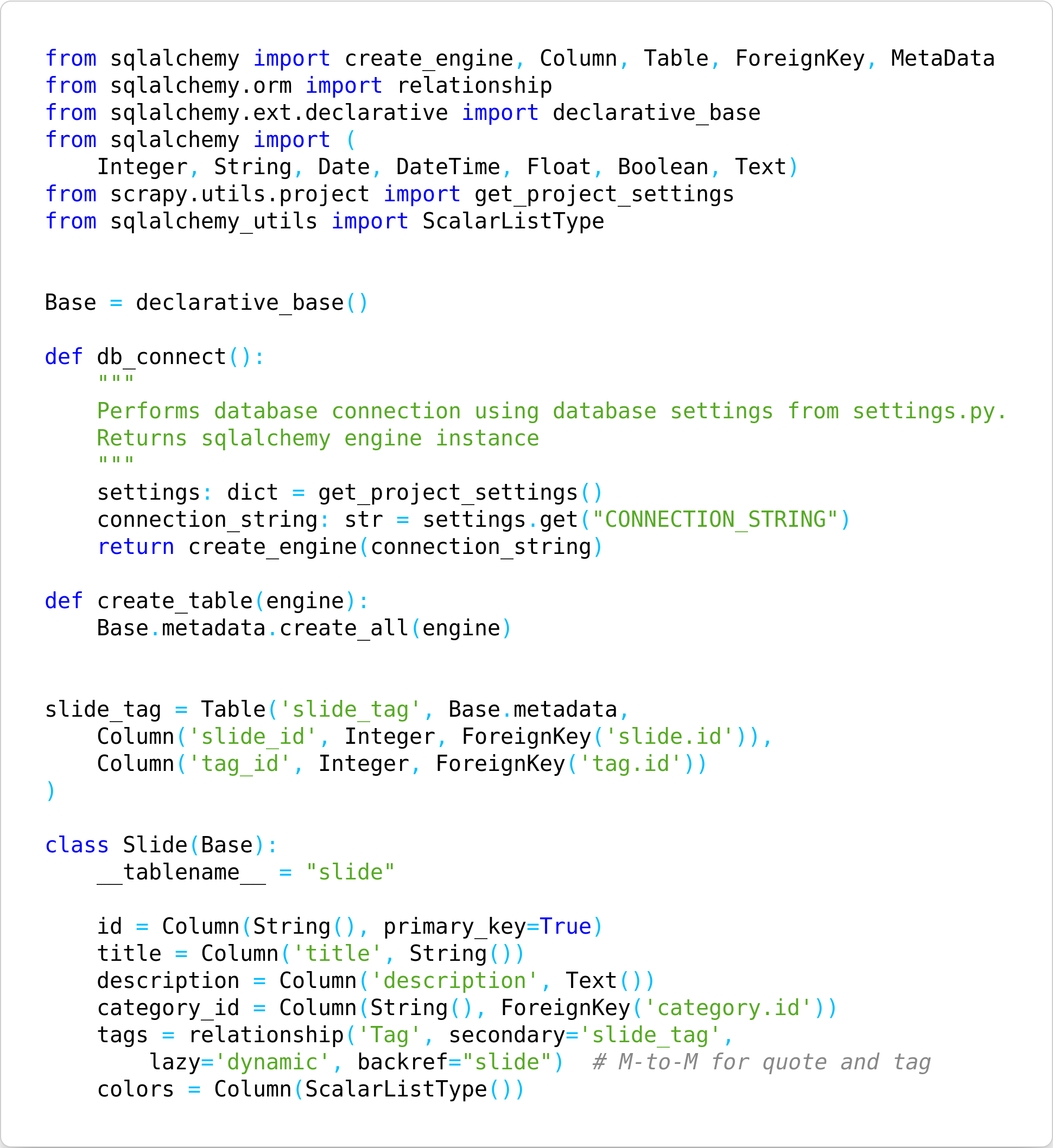

{"file_name": "26c509d1-3f53-46e6-93a9-56386bdf134a.png", "code": "\r\nfrom sqlalchemy import create_engine, Column, Table, ForeignKey, MetaData\r\nfrom sqlalchemy.orm import relationship\r\nfrom sqlalchemy.ext.declarative import declarative_base\r\nfrom sqlalchemy import (\r\n Integer, String, Date, DateTime, Float, Boolean, Text)\r\nfrom scrapy.utils.project import get_project_settings\r\nfrom sqlalchemy_utils import ScalarListType\r\n\r\n\r\nBase = declarative_base()\r\n\r\ndef db_connect():\r\n \"\"\"\r\n Performs database connection using database settings from settings.py.\r\n Returns sqlalchemy engine instance\r\n \"\"\"\r\n settings: dict = get_project_settings()\r\n connection_string: str = settings.get(\"CONNECTION_STRING\")\r\n return create_engine(connection_string)\r\n\r\ndef create_table(engine):\r\n Base.metadata.create_all(engine)\r\n \r\n \r\n# slide_id must match the type of Slide.id, which is a String primary key\r\nslide_tag = Table('slide_tag', Base.metadata,\r\n Column('slide_id', String(), ForeignKey('slide.id')),\r\n Column('tag_id', Integer, ForeignKey('tag.id'))\r\n)\r\n\r\nclass Slide(Base):\r\n __tablename__ = \"slide\"\r\n\r\n id = Column(String(), primary_key=True)\r\n title = Column('title', String())\r\n description = Column('description', Text())\r\n category_id = Column(String(), ForeignKey('category.id'))\r\n tags = relationship('Tag', secondary='slide_tag',\r\n lazy='dynamic', backref=\"slide\") # M-to-M for slide and tag\r\n colors = Column(ScalarListType())\r\n"}
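
The `slide_tag` table is a plain association table. Each row links one slide to one tag, which makes `Slide.tags` many-to-many. The sketch below expresses the same layout in raw SQL with the standard-library `sqlite3` module instead of SQLAlchemy. It illustrates the table shape; it is not the project's ORM code.

Several details are assumptions:

* The `tag` columns and the sample rows are invented, because the `Tag` model is cut off in this record.
* The composite primary key on `slide_tag` is an addition that stops the same pair from being stored twice.

The foreign-key columns should share the type of the keys they reference. `Slide.id` is a `String()`, so `slide_id` is `TEXT` here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE slide (id TEXT PRIMARY KEY, title TEXT);
    -- Hypothetical tag table: the real Tag model is not shown in the record.
    CREATE TABLE tag (id INTEGER PRIMARY KEY, name TEXT UNIQUE);
    -- Association table: one row per (slide, tag) pair.
    CREATE TABLE slide_tag (
        slide_id TEXT REFERENCES slide(id),
        tag_id INTEGER REFERENCES tag(id),
        PRIMARY KEY (slide_id, tag_id)
    );
    """
)
conn.executemany("INSERT INTO slide VALUES (?, ?)", [("s1", "Process Flow"), ("s2", "Timeline")])
conn.executemany("INSERT INTO tag (id, name) VALUES (?, ?)", [(1, "process"), (2, "business")])
conn.executemany("INSERT INTO slide_tag VALUES (?, ?)", [("s1", 1), ("s1", 2), ("s2", 2)])


def tags_for(slide_id: str) -> list[str]:
    """Roughly what Slide.tags returns: join tag through the association table."""
    rows = conn.execute(
        """
        SELECT tag.name FROM tag
        JOIN slide_tag ON slide_tag.tag_id = tag.id
        WHERE slide_tag.slide_id = ?
        ORDER BY tag.name
        """,
        (slide_id,),
    )
    return [name for (name,) in rows]


def slides_for(tag_name: str) -> list[str]:
    """The reverse direction, comparable to the `slide` backref on Tag."""
    rows = conn.execute(
        """
        SELECT slide.title FROM slide
        JOIN slide_tag ON slide_tag.slide_id = slide.id
        JOIN tag ON tag.id = slide_tag.tag_id
        WHERE tag.name = ?
        ORDER BY slide.title
        """,
        (tag_name,),
    )
    return [title for (title,) in rows]


print(tags_for("s1"))
print(slides_for("business"))
```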


{"file_name": "d62bf0f8-d896-4a3d-9e93-f2b3720883c2.png", "code": "# Scrapy settings for slidesmodel project\r\n#\r\n# For simplicity, this file contains only settings considered important or\r\n# commonly used. You can find more settings consulting the documentation:\r\n#\r\n# https://docs.scrapy.org/en/latest/topics/settings.html\r\n# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html\r\n# https://docs.scrapy.org/en/latest/topics/spider-middleware.html\r\n\r\nBOT_NAME = \"slidesmodel\"\r\n\r\nSPIDER_MODULES = [\"slidesmodel.spiders\"]\r\nNEWSPIDER_MODULE = \"slidesmodel.spiders\"\r\n\r\n\r\n# Crawl responsibly by identifying yourself (and your website) on the user-agent\r\n#USER_AGENT = \"slidesmodel (+http://www.yourdomain.com)\"\r\n\r\n# Obey robots.txt rules\r\nROBOTSTXT_OBEY = False\r\n\r\n# Configure maximum concurrent requests performed by Scrapy (default: 16)\r\n#CONCURRENT_REQUESTS = 32\r\n\r\n# Configure a delay for requests for the same website (default: 0)\r\n# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay\r\n# See also autothrottle settings and docs\r\n#DOWNLOAD_DELAY = 3\r\n# The download delay setting will honor only one of:\r\n#CONCURRENT_REQUESTS_PER_DOMAIN = 16\r\n#CONCURRENT_REQUESTS_PER_IP = 16\r\n\r\n# Disable cookies (enabled by default)\r\n#COOKIES_ENABLED = False\r\n\r\n# Disable Telnet Console (enabled by default)\r\n#TELNETCONSOLE_ENABLED = False\r\n\r\n# Override the default request headers:\r\n#DEFAULT_REQUEST_HEADERS = {\r\n"}


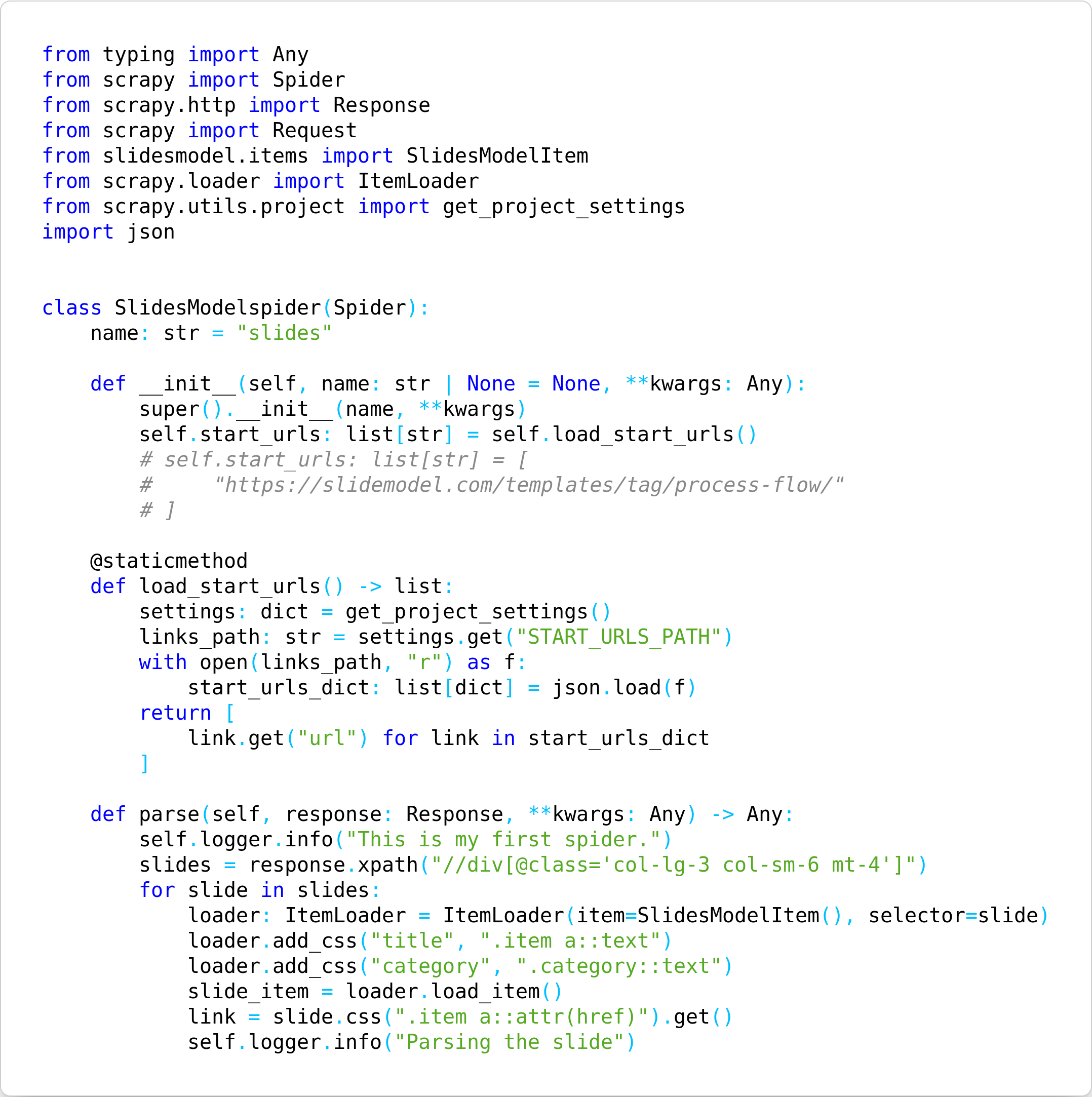

{"file_name": "349596c1-3a7d-4ff4-a4fc-f73cbb9efc96.png", "code": "from typing import Any\r\nfrom scrapy import Spider\r\nfrom scrapy.http import Response\r\nfrom scrapy import Request\r\nfrom slidesmodel.items import SlidesModelItem\r\nfrom scrapy.loader import ItemLoader\r\nfrom scrapy.utils.project import get_project_settings\r\nimport json\r\n\r\n\r\nclass SlidesModelspider(Spider):\r\n name: str = \"slides\"\r\n \r\n def __init__(self, name: str | None = None, **kwargs: Any):\r\n super().__init__(name, **kwargs)\r\n self.start_urls: list[str] = self.load_start_urls()\r\n # self.start_urls: list[str] = [\r\n # \"https://slidemodel.com/templates/tag/process-flow/\"\r\n # ]\r\n \r\n @staticmethod\r\n def load_start_urls() -> list:\r\n settings: dict = get_project_settings()\r\n links_path: str = settings.get(\"START_URLS_PATH\")\r\n with open(links_path, \"r\") as f:\r\n start_urls_dict: list[dict] = json.load(f)\r\n return [\r\n link.get(\"url\") for link in start_urls_dict\r\n ]\r\n \r\n def parse(self, response: Response, **kwargs: Any) -> Any:\r\n self.logger.info(\"This is my first spider.\")\r\n slides = response.xpath(\"//div[@class='col-lg-3 col-sm-6 mt-4']\")\r\n for slide in slides:\r\n loader: ItemLoader = ItemLoader(item=SlidesModelItem(), selector=slide)\r\n loader.add_css(\"title\", \".item a::text\")\r\n loader.add_css(\"category\", \".category::text\")\r\n slide_item = loader.load_item()\r\n link = slide.css(\".item a::attr(href)\").get()\r\n self.logger.info(\"Parsing the slide\")\r\n"}
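
`load_start_urls` reads a JSON file whose path comes from the `START_URLS_PATH` setting, and it keeps each entry's `url` field. That shape appears to match what the `links-extractor` spider yields (`{"url": ..., "text": ...}` objects) when its output is exported as a JSON list, for example with `scrapy crawl links-extractor -O links.json`. Treat that pairing as an assumption.

The sketch keeps the same logic but takes the path as a parameter, so it can be tried outside a Scrapy project. It also skips entries without a `url` rather than returning `None` for them. The file contents are made up.

```python
import json
import tempfile
from pathlib import Path


def load_start_urls(links_path: str) -> list[str]:
    """Read a JSON list of {"url": ..., "text": ...} objects and return the URLs."""
    with open(links_path, "r", encoding="utf-8") as f:
        entries: list[dict] = json.load(f)
    # Unlike the original comprehension, drop entries that have no "url".
    return [entry["url"] for entry in entries if entry.get("url")]


# Made-up export, shaped like the links-extractor spider's items.
sample = [
    {"url": "https://slidemodel.com/templates/tag/process-flow/", "text": "Process Flow"},
    {"text": "entry without a url"},
]
with tempfile.TemporaryDirectory() as tmp:
    links_file = Path(tmp) / "links.json"
    links_file.write_text(json.dumps(sample), encoding="utf-8")
    urls = load_start_urls(str(links_file))

print(urls)
```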


{"file_name": "df9d8c0c-5bb0-4945-909f-0d2773f184d0.png", "code": "# This package will contain the spiders of your Scrapy project\r\n#\r\n# Please refer to the documentation for information on how to create and manage\r\n# your spiders.\r\n"}


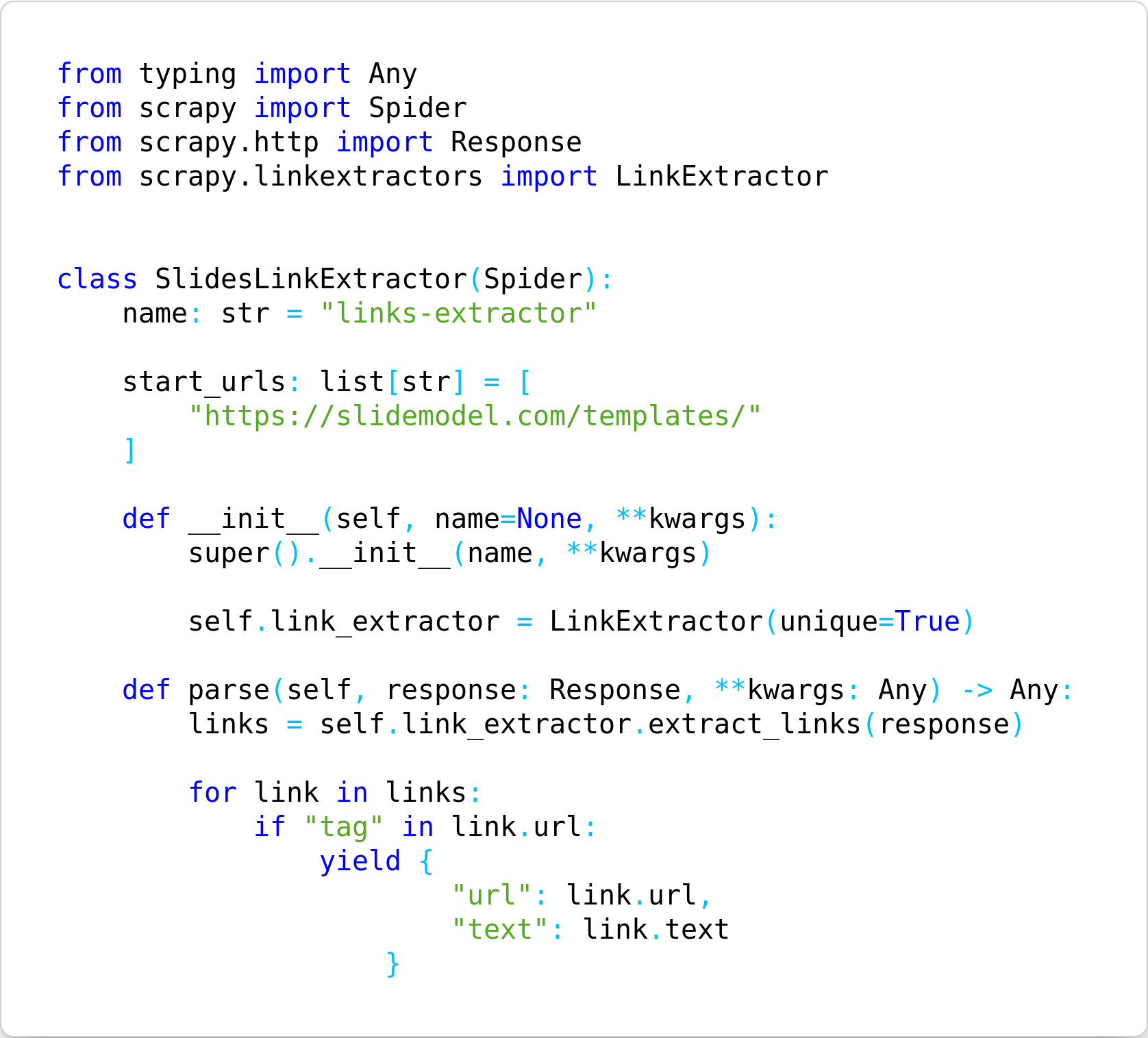

{"file_name": "290e2e83-cd4f-48c0-8495-d778a8f7dcae.png", "code": "from typing import Any\r\nfrom scrapy import Spider\r\nfrom scrapy.http import Response\r\nfrom scrapy.linkextractors import LinkExtractor \r\n\r\n\r\nclass SlidesLinkExtractor(Spider):\r\n name: str = \"links-extractor\"\r\n \r\n start_urls: list[str] = [\r\n \"https://slidemodel.com/templates/\"\r\n ]\r\n \r\n def __init__(self, name=None, **kwargs): \r\n super().__init__(name, **kwargs) \r\n \r\n self.link_extractor = LinkExtractor(unique=True) \r\n \r\n def parse(self, response: Response, **kwargs: Any) -> Any: \r\n links = self.link_extractor.extract_links(response) \r\n \r\n for link in links: \r\n if \"tag\" in link.url:\r\n yield {\r\n \"url\": link.url, \r\n \"text\": link.text\r\n }"}
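
The `links-extractor` spider keeps every link whose URL contains the substring `"tag"`. That test also matches unrelated paths, such as a hypothetical `/templates/vintage-slides/`. A stricter filter checks for a whole `tag` path segment instead.

The sketch below shows only that filtering step in plain Python, using made-up URLs apart from the tag page already named in the project. In the spider, `is_tag_page` (a hypothetical helper) would replace the `if "tag" in link.url` check.

```python
from urllib.parse import urlparse


def is_tag_page(url: str) -> bool:
    """True when 'tag' is a whole path segment, e.g. /templates/tag/process-flow/."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return "tag" in segments


urls = [
    "https://slidemodel.com/templates/tag/process-flow/",
    "https://slidemodel.com/templates/vintage-slides/",  # hypothetical; contains "tag" as a substring
    "https://slidemodel.com/templates/",
]
print([u for u in urls if "tag" in u])       # substring test used by the spider
print([u for u in urls if is_tag_page(u)])   # path-segment test
```

Scrapy's `LinkExtractor` also accepts an `allow` regular expression (for example `allow=r"/tag/"`). That option would move the same filtering into the extractor itself.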


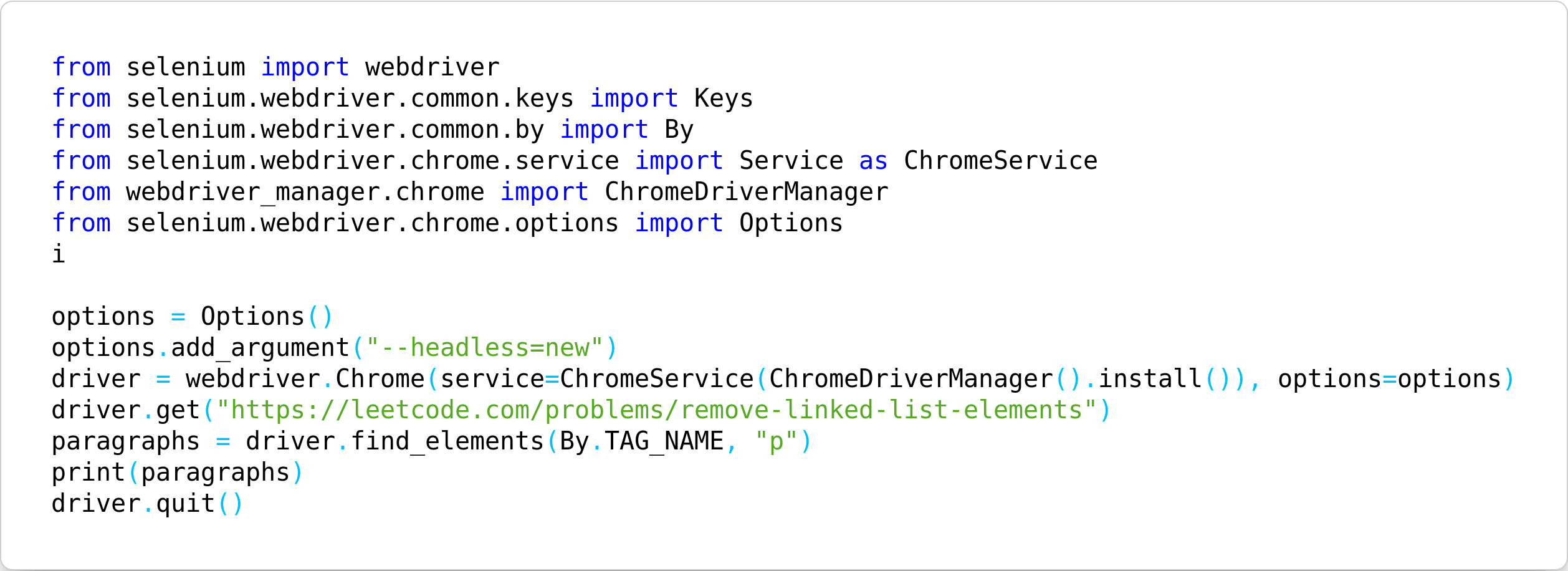

{"file_name": "ec0df12b-72a3-4d94-838e-b46e8a66661e.png", "code": "from selenium import webdriver\r\nfrom selenium.webdriver.common.keys import Keys\r\nfrom selenium.webdriver.common.by import By\r\nfrom selenium.webdriver.chrome.service import Service as ChromeService\r\nfrom webdriver_manager.chrome import ChromeDriverManager\r\nfrom selenium.webdriver.chrome.options import Options\r\n\r\n\r\noptions = Options()\r\noptions.add_argument(\"--headless=new\")\r\ndriver = webdriver.Chrome(service=ChromeService(ChromeDriverManager().install()), options=options)\r\ndriver.get(\"https://leetcode.com/problems/remove-linked-list-elements\")\r\nparagraphs = driver.find_elements(By.TAG_NAME, \"p\")\r\nprint(paragraphs)\r\ndriver.quit()"}


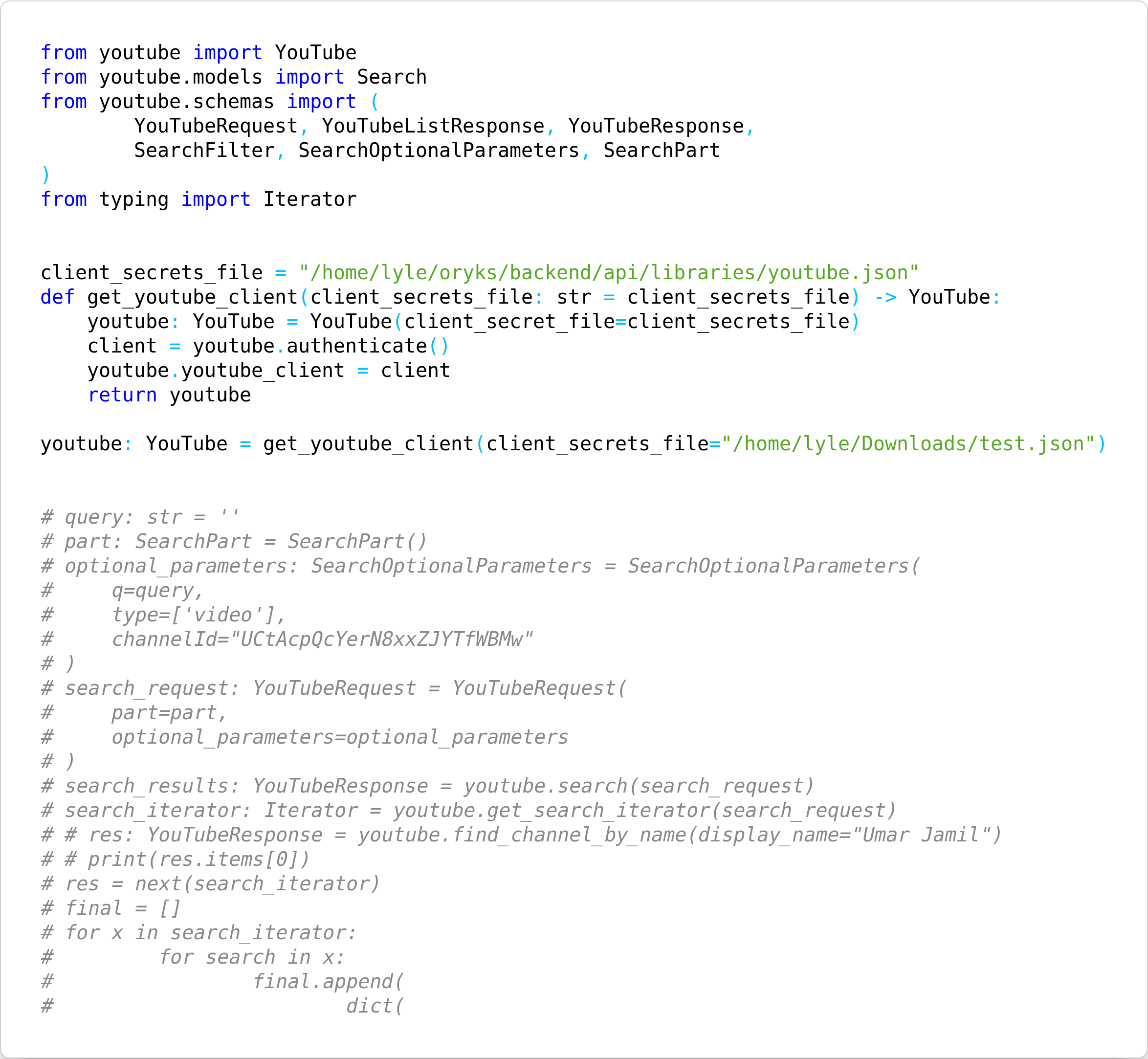

{"file_name": "da552b13-cf0c-4c0d-88ac-7f228e43d866.png", "code": "from youtube import YouTube\r\nfrom youtube.models import Search\r\nfrom youtube.schemas import (\r\n YouTubeRequest, YouTubeListResponse, YouTubeResponse,\r\n SearchFilter, SearchOptionalParameters, SearchPart\r\n)\r\nfrom typing import Iterator\r\n\r\n\r\nclient_secrets_file = \"/home/lyle/oryks/backend/api/libraries/youtube.json\"\r\ndef get_youtube_client(client_secrets_file: str = client_secrets_file) -> YouTube:\r\n youtube: YouTube = YouTube(client_secret_file=client_secrets_file)\r\n client = youtube.authenticate()\r\n youtube.youtube_client = client\r\n return youtube\r\n\r\nyoutube: YouTube = get_youtube_client(client_secrets_file=\"/home/lyle/Downloads/test.json\")\r\n\r\n\r\n# query: str = ''\r\n# part: SearchPart = SearchPart()\r\n# optional_parameters: SearchOptionalParameters = SearchOptionalParameters(\r\n# q=query,\r\n# type=['video'],\r\n# channelId=\"UCtAcpQcYerN8xxZJYTfWBMw\"\r\n# )\r\n# search_request: YouTubeRequest = YouTubeRequest(\r\n# part=part, \r\n# optional_parameters=optional_parameters\r\n# )\r\n# search_results: YouTubeResponse = youtube.search(search_request)\r\n# search_iterator: Iterator = youtube.get_search_iterator(search_request)\r\n# # res: YouTubeResponse = youtube.find_channel_by_name(display_name=\"Umar Jamil\")\r\n# # print(res.items[0])\r\n# res = next(search_iterator)\r\n# final = []\r\n# for x in search_iterator:\r\n# for search in x:\r\n# final.append(\r\n# dict(\r\n"}


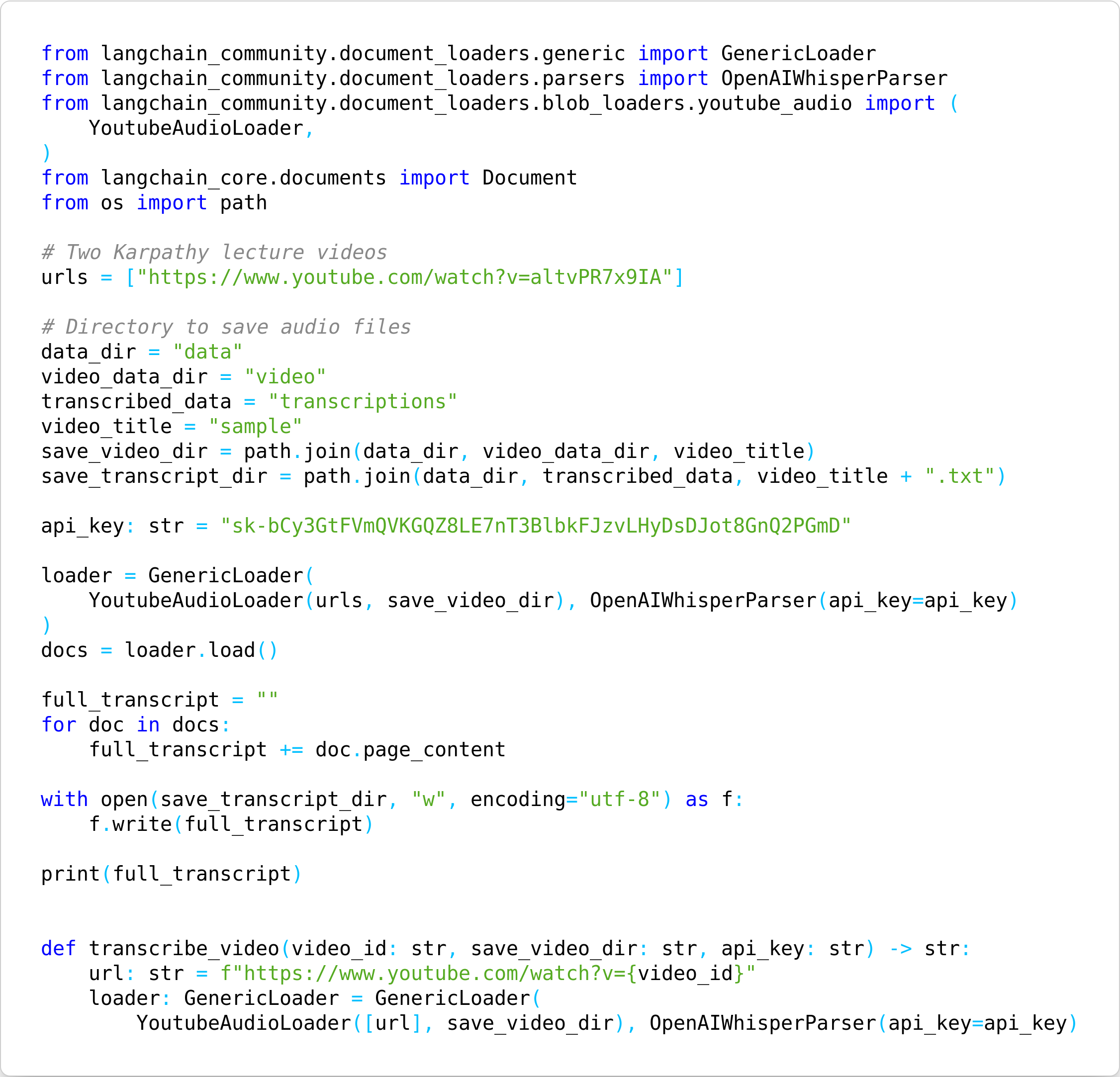

{"file_name": "3f7d8e6a-d8a9-4345-ba88-d2c16d6c0e33.png", "code": "from langchain_community.document_loaders.generic import GenericLoader\r\nfrom langchain_community.document_loaders.parsers import OpenAIWhisperParser\r\nfrom langchain_community.document_loaders.blob_loaders.youtube_audio import (\r\n YoutubeAudioLoader,\r\n)\r\nfrom langchain_core.documents import Document\r\nfrom os import path, environ\r\n\r\n# Video to transcribe\r\nurls = [\"https://www.youtube.com/watch?v=altvPR7x9IA\"]\r\n\r\n# Directory to save audio files\r\ndata_dir = \"data\"\r\nvideo_data_dir = \"video\"\r\ntranscribed_data = \"transcriptions\"\r\nvideo_title = \"sample\"\r\nsave_video_dir = path.join(data_dir, video_data_dir, video_title)\r\nsave_transcript_dir = path.join(data_dir, transcribed_data, video_title + \".txt\")\r\n\r\n# Read the key from the environment instead of hard-coding a secret\r\napi_key: str = environ[\"OPENAI_API_KEY\"]\r\n\r\nloader = GenericLoader(\r\n YoutubeAudioLoader(urls, save_video_dir), OpenAIWhisperParser(api_key=api_key)\r\n)\r\ndocs = loader.load()\r\n\r\nfull_transcript = \"\"\r\nfor doc in docs:\r\n full_transcript += doc.page_content\r\n\r\nwith open(save_transcript_dir, \"w\", encoding=\"utf-8\") as f:\r\n f.write(full_transcript)\r\n\r\nprint(full_transcript)\r\n\r\n\r\ndef transcribe_video(video_id: str, save_video_dir: str, api_key: str) -> str:\r\n url: str = f\"https://www.youtube.com/watch?v={video_id}\"\r\n loader: GenericLoader = GenericLoader(\r\n YoutubeAudioLoader([url], save_video_dir), OpenAIWhisperParser(api_key=api_key)\r\n"}


{"file_name": "56c43818-b08c-40c0-9020-fcd0bf0360e6.png", "code": "from dotenv import load_dotenv\r\nload_dotenv()\r\nfrom flask.cli import FlaskGroup\r\nfrom api import create_app\r\n\r\napp = create_app()\r\ncli = FlaskGroup(create_app=create_app)\r\n\r\n\r\n\r\nif __name__ == \"__main__\":\r\n cli()"}


{"file_name": "9c23fe20-747d-4d30-ad44-1cdfc8a42588.png", "code": "from dotenv import load_dotenv\r\nload_dotenv()\r\nfrom flask.cli import FlaskGroup\r\nfrom api import create_app\r\n\r\napp = create_app()\r\ncli = FlaskGroup(create_app=create_app)\r\n\r\n\r\n\r\nif __name__ == \"__main__\":\r\n cli()"}


{"file_name": "0c9f8747-6faf-4fe5-a686-3b383e795875.png", "code": "import whisper\r\n\r\nmodel = whisper.load_model(\"medium.en\")\r\nresult = model.transcribe(\"code.wav\")\r\nprint(result[\"text\"])"}


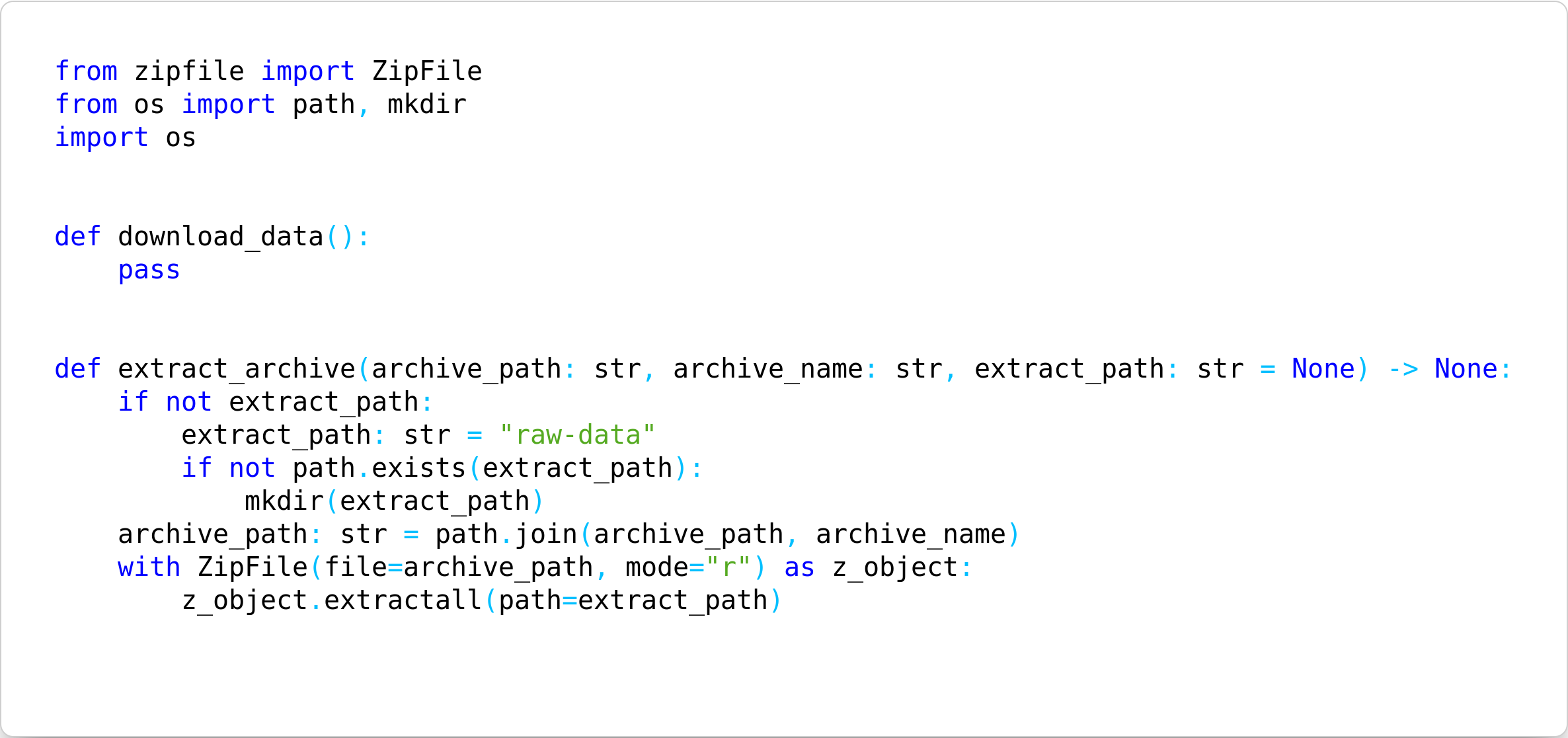

{"file_name": "ff904238-3810-4381-a1c1-3b03f949ee82.png", "code": "from zipfile import ZipFile\r\nfrom os import path, mkdir\r\nimport os\r\n\r\n\r\ndef download_data():\r\n pass\r\n\r\n\r\ndef extract_archive(archive_path: str, archive_name: str, extract_path: str = None) -> None:\r\n if not extract_path:\r\n extract_path: str = \"raw-data\"\r\n if not path.exists(extract_path):\r\n mkdir(extract_path)\r\n archive_path: str = path.join(archive_path, archive_name)\r\n with ZipFile(file=archive_path, mode=\"r\") as z_object:\r\n z_object.extractall(path=extract_path)\r\n \r\n "}
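
`extract_archive` has two small problems:

* It creates the target folder with `mkdir`, which raises `FileNotFoundError` if a parent directory is missing.
* It annotates `extract_path` as `str` while defaulting it to `None`.

The variant below keeps the same call signature and switches to `os.makedirs(..., exist_ok=True)` and `Optional[str]`. To exercise it without the real dataset, the round trip builds a throwaway archive in a temporary directory.

```python
import os
import tempfile
from typing import Optional
from zipfile import ZipFile


def extract_archive(archive_path: str, archive_name: str, extract_path: Optional[str] = None) -> None:
    """Extract <archive_path>/<archive_name> into extract_path (default: 'raw-data')."""
    extract_path = extract_path or "raw-data"
    # Creates missing parent folders too, and does nothing if the folder already exists.
    os.makedirs(extract_path, exist_ok=True)
    with ZipFile(os.path.join(archive_path, archive_name), mode="r") as z_object:
        z_object.extractall(path=extract_path)


with tempfile.TemporaryDirectory() as tmp:
    # Build a tiny archive to stand in for the downloaded dataset.
    with ZipFile(os.path.join(tmp, "archive.zip"), mode="w") as z:
        z.writestr("captions.txt", "image,caption\n")
    target = os.path.join(tmp, "nested", "raw-data")  # parent 'nested' does not exist yet
    extract_archive(tmp, "archive.zip", target)
    extracted = sorted(os.listdir(target))

print(extracted)
```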


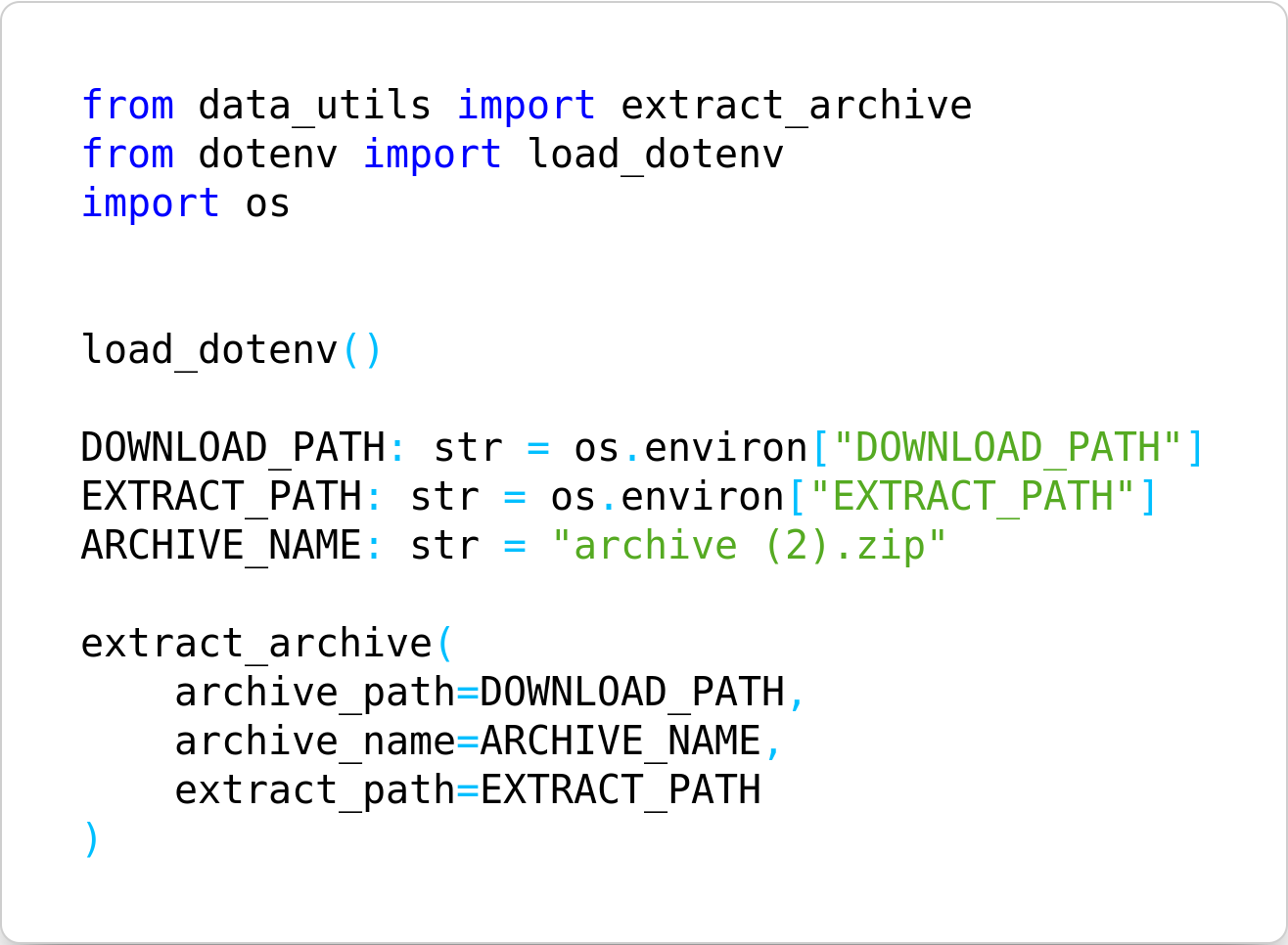

{"file_name": "7591c83b-c6b6-4bd4-9af0-b727942431c1.png", "code": "from data_utils import extract_archive\r\nfrom dotenv import load_dotenv\r\nimport os\r\n\r\n\r\nload_dotenv()\r\n\r\nDOWNLOAD_PATH: str = os.environ[\"DOWNLOAD_PATH\"]\r\nEXTRACT_PATH: str = os.environ[\"EXTRACT_PATH\"]\r\nARCHIVE_NAME: str = \"archive (2).zip\"\r\n\r\nextract_archive(\r\n archive_path=DOWNLOAD_PATH, \r\n archive_name=ARCHIVE_NAME, \r\n extract_path=EXTRACT_PATH\r\n)\r\n\r\n"}
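
This script reads `DOWNLOAD_PATH` and `EXTRACT_PATH` with `os.environ[...]`, so a missing entry in `.env` surfaces as a bare `KeyError`. A small helper can turn that into a clearer message. The helper name `require_env` is hypothetical, and the values set below stand in for what `load_dotenv()` would normally load.

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable, or fail with a message naming the missing or empty key."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"{name} is not set; add it to .env or export it before running")
    return value


# Stand-ins for values load_dotenv() would normally read from .env.
os.environ["DOWNLOAD_PATH"] = "downloads"
os.environ.pop("EXTRACT_PATH", None)

print(require_env("DOWNLOAD_PATH"))
try:
    require_env("EXTRACT_PATH")
except RuntimeError as err:
    print(err)
```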


{"file_name": "f438116f-9cf4-439b-a79d-b4a49ea84d76.png", "code": "import torch\r\nimport torchvision.transforms as transforms\r\nfrom PIL import Image\r\n\r\n\r\ndef print_examples(model, device, dataset):\r\n transform = transforms.Compose(\r\n [\r\n transforms.Resize((299, 299)),\r\n transforms.ToTensor(),\r\n transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),\r\n ]\r\n )\r\n\r\n model.eval()\r\n test_img1 = transform(Image.open(\"test_examples/dog.jpg\").convert(\"RGB\")).unsqueeze(\r\n 0\r\n )\r\n print(\"Example 1 CORRECT: Dog on a beach by the ocean\")\r\n print(\r\n \"Example 1 OUTPUT: \"\r\n + \" \".join(model.caption_image(test_img1.to(device), dataset.vocabulary))\r\n )\r\n test_img2 = transform(\r\n Image.open(\"test_examples/child.jpg\").convert(\"RGB\")\r\n ).unsqueeze(0)\r\n print(\"Example 2 CORRECT: Child holding red frisbee outdoors\")\r\n print(\r\n \"Example 2 OUTPUT: \"\r\n + \" \".join(model.caption_image(test_img2.to(device), dataset.vocabulary))\r\n )\r\n test_img3 = transform(Image.open(\"test_examples/bus.png\").convert(\"RGB\")).unsqueeze(\r\n 0\r\n )\r\n print(\"Example 3 CORRECT: Bus driving by parked cars\")\r\n print(\r\n \"Example 3 OUTPUT: \"\r\n + \" \".join(model.caption_image(test_img3.to(device), dataset.vocabulary))\r\n )\r\n test_img4 = transform(\r\n"}
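
`transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))` computes `(x - mean) / std` for each channel. For 8-bit images, `ToTensor()` has already scaled pixels to `[0, 1]`, so every channel ends up in `[-1, 1]`. The pure-Python sketch below reproduces that arithmetic on single pixel values, without torch, to make the mapping concrete. It is not torchvision's implementation.

```python
def to_tensor_value(pixel: int) -> float:
    """ToTensor scales 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return pixel / 255


def normalize(value: float, mean: float = 0.5, std: float = 0.5) -> float:
    """Normalize computes (value - mean) / std for each channel."""
    return (value - mean) / std


for pixel in (0, 255):
    print(pixel, normalize(to_tensor_value(pixel)))
```

With mean and std both 0.5, black maps to -1.0 and white to 1.0. A caption produced at inference time therefore depends on using the same normalization as training, which `print_examples` does.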


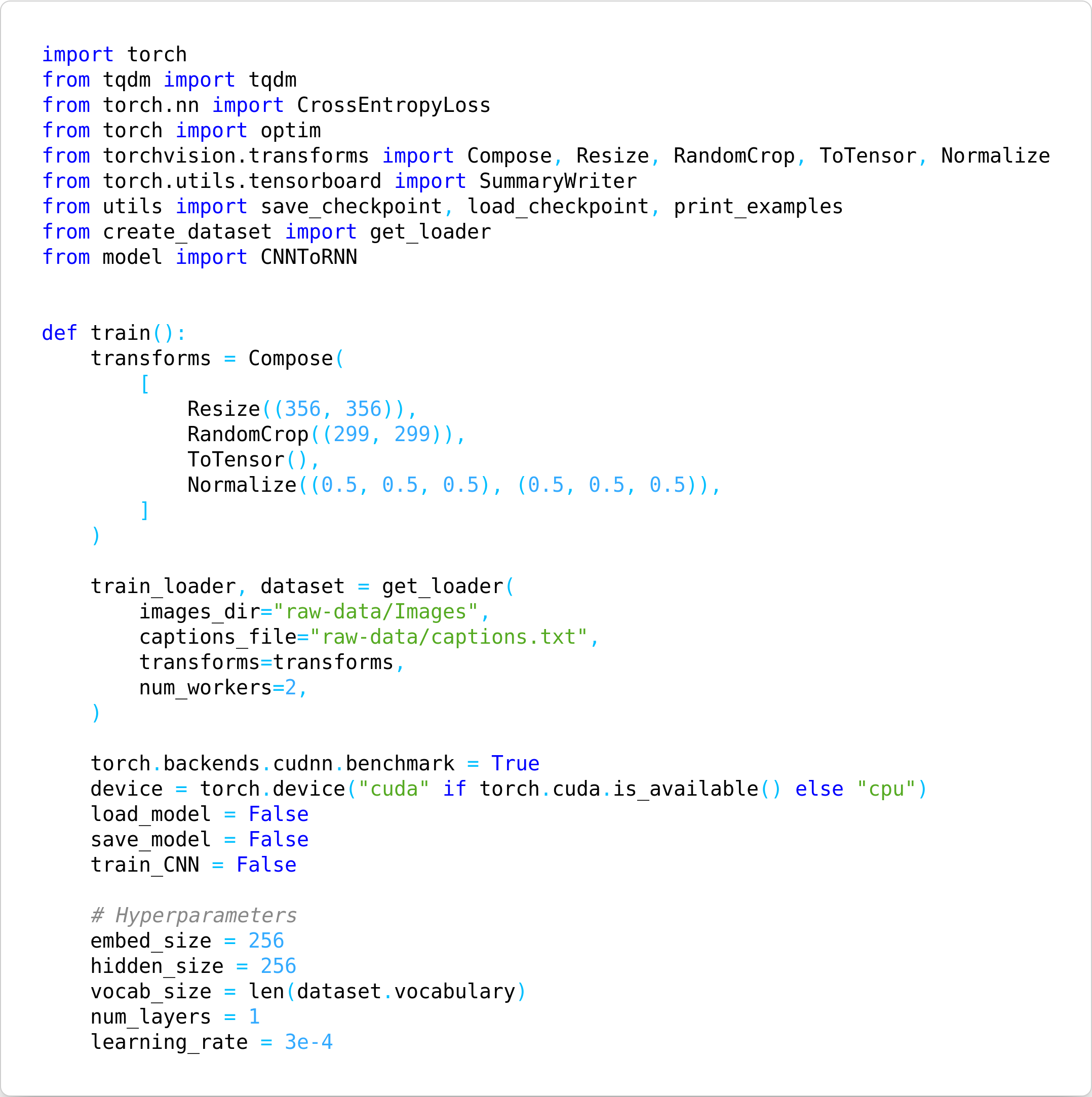

{"file_name": "8ab6a8f3-a4e2-4e36-8b83-c19528cbcef5.png", "code": "import torch\r\nfrom tqdm import tqdm\r\nfrom torch.nn import CrossEntropyLoss\r\nfrom torch import optim\r\nfrom torchvision.transforms import Compose, Resize, RandomCrop, ToTensor, Normalize\r\nfrom torch.utils.tensorboard import SummaryWriter\r\nfrom utils import save_checkpoint, load_checkpoint, print_examples\r\nfrom create_dataset import get_loader\r\nfrom model import CNNToRNN\r\n\r\n\r\ndef train():\r\n transforms = Compose(\r\n [\r\n Resize((356, 356)),\r\n RandomCrop((299, 299)),\r\n ToTensor(),\r\n Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),\r\n ]\r\n )\r\n\r\n train_loader, dataset = get_loader(\r\n images_dir=\"raw-data/Images\",\r\n captions_file=\"raw-data/captions.txt\",\r\n transforms=transforms,\r\n num_workers=2,\r\n )\r\n\r\n torch.backends.cudnn.benchmark = True\r\n device = torch.device(\"cuda\" if torch.cuda.is_available() else \"cpu\")\r\n load_model = False\r\n save_model = False\r\n train_CNN = False\r\n\r\n # Hyperparameters\r\n embed_size = 256\r\n hidden_size = 256\r\n vocab_size = len(dataset.vocabulary)\r\n num_layers = 1\r\n learning_rate = 3e-4\r\n"}
