| id | author | task_category | tags | created_time | last_modified | downloads | likes | README | matched_task | matched_bigbio_names | is_bionlp |
|---|---|---|---|---|---|---|---|---|---|---|---|

Goodmotion/spam-mail-classifier

|

Goodmotion

|

text-classification

|

[

"transformers",

"safetensors",

"text-classification",

"spam-detection",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | 1,733 | 1,733 | 87 | 2 |

---

license: apache-2.0

tags:

- transformers

- text-classification

- spam-detection

---

# SPAM Mail Classifier

This model is fine-tuned from `microsoft/Multilingual-MiniLM-L12-H384` to classify email subjects as SPAM or NOSPAM.

## Model Details

- **Base model**: `microsoft/Multilingual-MiniLM-L12-H384`

- **Fine-tuned for**: Text classification

- **Number of classes**: 2 (SPAM, NOSPAM)

- **Languages**: Multilingual

## Usage

The snippet below loads the tokenizer and model with Transformers and prints the raw logits for a single subject line.

```python
from transformers import AutoTokenizer, AutoModelForSequenceClassification

model_name = "Goodmotion/spam-mail-classifier"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForSequenceClassification.from_pretrained(model_name)

# French example subject line: "Congratulations! You have won an iPhone."
text = "Félicitations ! Vous avez gagné un iPhone."
inputs = tokenizer(text, return_tensors="pt")
outputs = model(**inputs)

# Raw, unnormalized scores for each class
print(outputs.logits)
```

### Example: classifying a list of subjects

```python
import torch
from transformers import AutoTokenizer, AutoModelForSequenceClassification

model_name = "Goodmotion/spam-mail-classifier"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForSequenceClassification.from_pretrained(model_name)

texts = [
    'Join us for a webinar on AI innovations',
    'Urgent: Verify your account immediately.',
    'Meeting rescheduled to 3 PM',
    'Happy Birthday!',
    'Limited time offer: Act now!',
    'Join us for a webinar on AI innovations',
    'Claim your free prize now!',
    'You have unclaimed rewards waiting!',
    'Weekly newsletter from Tech World',
    'Update on the project status',
    'Lunch tomorrow at 12:30?',
    'Get rich quick with this amazing opportunity!',
    'Invoice for your recent purchase',
    'Don\'t forget: Gym session at 6 AM',
    'Join us for a webinar on AI innovations',
    'bonjour comment allez vous ?',
    'Documents suite à notre rendez-vous',
    'Valentin Dupond mentioned you in a comment',
    'Bolt x Supabase = 🤯',
    'Modification site web de la société',
    'Image de mise en avant sur les articles',
    'Bring new visitors to your site',
    'Le Cloud Éthique sans bullshit',
    'Remix Newsletter #25: React Router v7',
    'Votre essai auprès de X va bientôt prendre fin',
    'Introducing a Google Docs integration, styles and more in Claude.ai',
    'Carte de crédit sur le point d’expirer sur Cloudflare'
]

# Tokenize the whole batch, padding/truncating to a common length
inputs = tokenizer(texts, padding=True, truncation=True, max_length=128, return_tensors="pt")

# Inference only: no gradients needed
with torch.no_grad():
    outputs = model(**inputs)

# Convert the logits to probabilities with softmax
logits = outputs.logits
probabilities = torch.softmax(logits, dim=1)

# Decode the predicted class for each text
labels = ["NOSPAM", "SPAM"]  # Mapping from class indices to labels

results = [
    {"text": text, "label": labels[torch.argmax(prob).item()], "confidence": prob.max().item()}
    for text, prob in zip(texts, probabilities)
]

# Display the results
for result in results:
    print(f"Text: {result['text']}")
    print(f"Result: {result['label']} (Confidence: {result['confidence']:.2%})\n")
```

|

[

"TEXT_CLASSIFICATION"

] |

[

"ESSAI"

] |

Non_BioNLP

|

knowledgator/gliner-poly-small-v1.0

|

knowledgator

|

token-classification

|

[

"gliner",

"pytorch",

"token-classification",

"multilingual",

"dataset:urchade/pile-mistral-v0.1",

"dataset:numind/NuNER",

"dataset:knowledgator/GLINER-multi-task-synthetic-data",

"license:apache-2.0",

"region:us"

] | 1,724 | 1,724 | 32 | 14 |

---

datasets:

- urchade/pile-mistral-v0.1

- numind/NuNER

- knowledgator/GLINER-multi-task-synthetic-data

language:

- multilingual

library_name: gliner

license: apache-2.0

pipeline_tag: token-classification

---

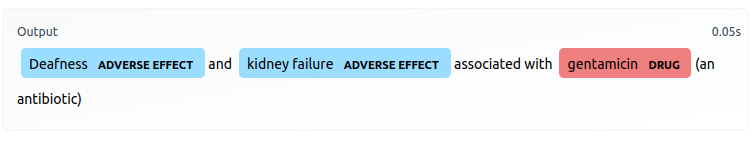

# About

GLiNER is a Named Entity Recognition (NER) model capable of identifying any entity type using bidirectional transformer encoders (BERT-like). It provides a practical alternative to traditional NER models, which are limited to predefined entities, and to Large Language Models (LLMs) that, despite their flexibility, are too costly and large for resource-constrained scenarios.

This particular version uses a bi-encoder architecture with post-fusion, where the text encoder is [DeBERTa v3 small](https://huggingface.co/microsoft/deberta-v3-small) and the entity-label encoder is a sentence transformer, [BGE-small-en](https://huggingface.co/BAAI/bge-small-en-v1.5).

This architecture brings several advantages over the uni-encoder GLiNER:

* An unlimited number of entity types can be recognized in a single pass;

* Faster inference if entity embeddings are precomputed;

* Better generalization to unseen entities.

The post-fusion strategy also improves on the classical bi-encoder by enabling better inter-label understanding.
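To make the bi-encoder trade-off concrete, here is a toy sketch in plain Python; it is not GLiNER's implementation. `toy_encode` is a hypothetical stand-in for both real encoders, candidate spans are given rather than enumerated, and the post-fusion step is omitted entirely. It only illustrates why label embeddings can be computed once and reused: the text side never sees the labels, and each (span, label) pair is scored independently with a dot product and a sigmoid.

```python
import math
import random

DIM = 16
label_encoder_calls = 0  # counts how often the (expensive) label encoder runs


def toy_encode(item: str) -> list[float]:
    """Hypothetical stand-in for a real encoder: a deterministic pseudo-random vector per string."""
    rng = random.Random(item)
    return [rng.gauss(0.0, 1.0) for _ in range(DIM)]


def encode_labels(labels: list[str]) -> dict[str, list[float]]:
    """Label side of the bi-encoder: run once, then cache and reuse for every text."""
    global label_encoder_calls
    label_encoder_calls += 1
    return {label: toy_encode("label:" + label) for label in labels}


def score_spans(spans: list[str], label_embeddings: dict[str, list[float]], threshold: float = 0.5):
    """Text side: each span is encoded without seeing the labels, then scored against every label."""
    matches = []
    for span in spans:
        span_vec = toy_encode("span:" + span)
        for label, label_vec in label_embeddings.items():
            logit = sum(s * l for s, l in zip(span_vec, label_vec)) / math.sqrt(DIM)
            prob = 1.0 / (1.0 + math.exp(-logit))  # independent sigmoid per (span, label) pair
            if prob >= threshold:
                matches.append((span, label, prob))
    return matches


# Labels are embedded once, then reused for several texts.
label_embeddings = encode_labels(["person", "award", "date"])
for text_spans in (["Cristiano Ronaldo", "5 February 1985"], ["Ballon d'Or"]):
    print(score_spans(text_spans, label_embeddings, threshold=0.5))
```

Because scores are independent sigmoids rather than a softmax over labels, adding more labels does not change the scores of existing ones, which is what makes caching the label side safe in this toy setup.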

### Installation & Usage

Install or update the gliner package:

```bash

pip install gliner -U

```

Once you've installed the GLiNER library, you can import the GLiNER class. You can then load this model using `GLiNER.from_pretrained` and predict entities with `predict_entities`.

```python

from gliner import GLiNER

model = GLiNER.from_pretrained("knowledgator/gliner-poly-small-v1.0")

text = """

Cristiano Ronaldo dos Santos Aveiro (Portuguese pronunciation: [kɾiʃˈtjɐnu ʁɔˈnaldu]; born 5 February 1985) is a Portuguese professional footballer who plays as a forward for and captains both Saudi Pro League club Al Nassr and the Portugal national team. Widely regarded as one of the greatest players of all time, Ronaldo has won five Ballon d'Or awards,[note 3] a record three UEFA Men's Player of the Year Awards, and four European Golden Shoes, the most by a European player. He has won 33 trophies in his career, including seven league titles, five UEFA Champions Leagues, the UEFA European Championship and the UEFA Nations League. Ronaldo holds the records for most appearances (183), goals (140) and assists (42) in the Champions League, goals in the European Championship (14), international goals (128) and international appearances (205). He is one of the few players to have made over 1,200 professional career appearances, the most by an outfield player, and has scored over 850 official senior career goals for club and country, making him the top goalscorer of all time.

"""

labels = ["person", "award", "date", "competitions", "teams"]

entities = model.predict_entities(text, labels, threshold=0.25)

for entity in entities:
    print(entity["text"], "=>", entity["label"])

```

```

Cristiano Ronaldo dos Santos Aveiro => person

5 February 1985 => date

Al Nassr => teams

Portugal national team => teams

Ballon d'Or => award

UEFA Men's Player of the Year Awards => award

European Golden Shoes => award

UEFA Champions Leagues => competitions

UEFA European Championship => competitions

UEFA Nations League => competitions

Champions League => competitions

European Championship => competitions

```

If you have a large number of entity types and want to pre-embed them, refer to the following code snippet:

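```python
# Assumes `model` was loaded with GLiNER.from_pretrained as shown above
labels = ["your entities"]
texts = ["your texts"]

# Embed the entity labels once; the embeddings can be reused across batches of texts
entity_embeddings = model.encode_labels(labels, batch_size=8)

outputs = model.batch_predict_with_embeds(texts, entity_embeddings, labels)
```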

### Benchmarks

Below you can see the table with benchmarking results on various named entity recognition datasets:

| Dataset | Score |
|---------|-------|
| ACE 2004 | 25.4% |
| ACE 2005 | 27.2% |
| AnatEM | 17.7% |
| Broad Tweet Corpus | 70.2% |
| CoNLL 2003 | 67.8% |
| FabNER | 22.9% |
| FindVehicle | 40.2% |
| GENIA_NER | 47.7% |
| HarveyNER | 15.5% |
| MultiNERD | 64.5% |
| Ontonotes | 28.7% |
| PolyglotNER | 47.5% |
| TweetNER7 | 39.3% |
| WikiANN en | 56.7% |
| WikiNeural | 80.0% |
| bc2gm | 56.2% |
| bc4chemd | 48.7% |
| bc5cdr | 60.5% |
| ncbi | 53.5% |
| **Average** | **45.8%** |
| | |
| CrossNER_AI | 48.9% |
| CrossNER_literature | 64.0% |
| CrossNER_music | 68.7% |
| CrossNER_politics | 69.0% |
| CrossNER_science | 62.7% |
| mit-movie | 40.3% |
| mit-restaurant | 36.2% |
| **Average (zero-shot benchmark)** | **55.7%** |
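The two averages appear to be unweighted means of the per-dataset scores (they match the arithmetic mean of the rows in each group), which can be checked directly. The variable names below are illustrative only.

```python
# Per-dataset scores (percent) copied from the benchmark table above.
main_scores = {
    "ACE 2004": 25.4, "ACE 2005": 27.2, "AnatEM": 17.7, "Broad Tweet Corpus": 70.2,
    "CoNLL 2003": 67.8, "FabNER": 22.9, "FindVehicle": 40.2, "GENIA_NER": 47.7,
    "HarveyNER": 15.5, "MultiNERD": 64.5, "Ontonotes": 28.7, "PolyglotNER": 47.5,
    "TweetNER7": 39.3, "WikiANN en": 56.7, "WikiNeural": 80.0, "bc2gm": 56.2,
    "bc4chemd": 48.7, "bc5cdr": 60.5, "ncbi": 53.5,
}
zero_shot_scores = {
    "CrossNER_AI": 48.9, "CrossNER_literature": 64.0, "CrossNER_music": 68.7,
    "CrossNER_politics": 69.0, "CrossNER_science": 62.7, "mit-movie": 40.3,
    "mit-restaurant": 36.2,
}


def mean(values) -> float:
    """Unweighted arithmetic mean."""
    values = list(values)
    return sum(values) / len(values)


print(f"Average: {mean(main_scores.values()):.1f}%")  # Average: 45.8%
print(f"Average (zero-shot benchmark): {mean(zero_shot_scores.values()):.1f}%")  # Average (zero-shot benchmark): 55.7%
```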

### Join Our Discord

Connect with our community on Discord for news, support, and discussion about our models. Join [Discord](https://discord.gg/dkyeAgs9DG).

|

[

"NAMED_ENTITY_RECOGNITION"

] |

[

"ANATEM",

"BC5CDR"

] |

Non_BioNLP

|

QuantFactory/meditron-7b-GGUF

|

QuantFactory

| null |

[

"gguf",

"en",

"dataset:epfl-llm/guidelines",

"arxiv:2311.16079",

"base_model:meta-llama/Llama-2-7b",

"base_model:quantized:meta-llama/Llama-2-7b",

"license:llama2",

"endpoints_compatible",

"region:us"

] | 1,727 | 1,727 | 206 | 1 |

---

base_model: meta-llama/Llama-2-7b

datasets:

- epfl-llm/guidelines

language:

- en

license: llama2

metrics:

- accuracy

- perplexity

---


# QuantFactory/meditron-7b-GGUF

This is a quantized version of [epfl-llm/meditron-7b](https://huggingface.co/epfl-llm/meditron-7b) created using llama.cpp.

# Original Model Card

<img width=50% src="meditron_LOGO.png" alt="Alt text" title="Meditron-logo">

# Model Card for Meditron-7B-v1.0

Meditron is a suite of open-source medical Large Language Models (LLMs).

Meditron-7B is a 7-billion-parameter model adapted to the medical domain from Llama-2-7B through continued pretraining on a comprehensively curated medical corpus, including selected PubMed articles, abstracts, a [new dataset](https://huggingface.co/datasets/epfl-llm/guidelines) of internationally-recognized medical guidelines, and general domain data from [RedPajama-v1](https://huggingface.co/datasets/togethercomputer/RedPajama-Data-1T).

Meditron-7B, finetuned on relevant training data, outperforms Llama-2-7B and PMC-Llama on multiple medical reasoning tasks.

<details open>

<summary><strong>Advisory Notice</strong></summary>

<blockquote style="padding: 10px; margin: 0 0 10px; border-left: 5px solid #ddd;">

While Meditron is designed to encode medical knowledge from sources of high-quality evidence, it is not yet adapted to deliver this knowledge appropriately, safely, or within professional actionable constraints.

We recommend against deploying Meditron in medical applications without extensive use-case alignment, as well as additional testing, specifically including randomized controlled trials in real-world practice settings.

</blockquote>

</details>

## Model Details

- **Developed by:** [EPFL LLM Team](https://huggingface.co/epfl-llm)

- **Model type:** Causal decoder-only transformer language model

- **Language(s):** English (mainly)

- **Model License:** [LLAMA 2 COMMUNITY LICENSE AGREEMENT](https://huggingface.co/meta-llama/Llama-2-70b/raw/main/LICENSE.txt)

- **Code License:** [APACHE 2.0 LICENSE](LICENSE)

- **Continue-pretrained from model:** [Llama-2-7B](https://huggingface.co/meta-llama/Llama-2-7b)

- **Context length:** 2K tokens

- **Input:** Text-only data

- **Output:** Model generates text only

- **Status:** This is a static model trained on an offline dataset. Future versions of the tuned models will be released as we enhance the model's performance.

- **Knowledge Cutoff:** August 2023

### Model Sources

- **Repository:** [epflLLM/meditron](https://github.com/epfLLM/meditron)

- **Trainer:** [epflLLM/Megatron-LLM](https://github.com/epfLLM/Megatron-LLM)

- **Paper:** *[MediTron-70B: Scaling Medical Pretraining for Large Language Models](https://arxiv.org/abs/2311.16079)*

## Uses

Meditron-7B is being made available for further testing and assessment as an AI assistant to enhance clinical decision-making and broaden access to LLMs for healthcare use. Potential use cases may include but are not limited to:

- Medical exam question answering

- Supporting differential diagnosis

- Disease information (symptoms, cause, treatment) query

- General health information query

### Direct Use

It is possible to use this model to generate text, which is useful for experimentation and understanding its capabilities.

It should not be used directly for production or work that may impact people.

### Downstream Use

Meditron-70B and Meditron-7B are both foundation models without finetuning or instruction-tuning. They can be finetuned, instruction-tuned, or RLHF-tuned for specific downstream tasks and applications.

We have used this model for downstream question-answering tasks in two ways:

1. We apply in-context learning with k demonstrations (3 or 5 in our paper) added to the prompt.

2. We finetuned the models for downstream question-answering tasks using specific training sets.

We encourage and look forward to the adaptation of the base model for more diverse applications.

If you want a more interactive way to prompt the model, we recommend using a high-throughput and memory-efficient inference engine with a UI that supports chat and text generation.

You can check out our deployment [guide](https://github.com/epfLLM/meditron/blob/main/deployment/README.md), where we used [FastChat](https://github.com/lm-sys/FastChat) with [vLLM](https://github.com/vllm-project/vllm). We collected generations for our qualitative analysis through an interactive UI platform, [BetterChatGPT](https://github.com/ztjhz/BetterChatGPT). Here is the prompt format we used as an example:

<img width=70% src="prompt_example.png" alt="qualitative-analysis-prompt" title="Qualitative Analysis Prompt">

### Out-of-Scope Use

We do not recommend using this model for natural language generation in a production environment, finetuned or otherwise.

## Truthfulness, Helpfulness, Risk, and Bias

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

We did an initial assessment of Meditron models' **Truthfulness** against baseline models and consumer-level medical models.

We use TruthfulQA (multiple choice) as the main evaluation benchmark.

We only focus on the categories that are relevant to the medical domain, including Health, Nutrition, Psychology, and Science.

For 7B models, we perform one-shot evaluations for consistent answer generation.

For 70B models, the evaluations are under the zero-shot setting.

Below, we report the detailed truthfulness performance of each category.

| Category | meditron-70b | llama-2-70b | med42-70b* | meditron-7b | llama-2-7b | PMC-llama-7b |
| --- | --- | --- | --- | --- | --- | --- |
| Health | 81.8 | 69.1 | 83.6 | 27.3 | 16.4 | 3.6 |
| Nutrition | 77.9 | 68.8 | 62.5 | 31.1 | 12.5 | 6.3 |
| Psychology | 47.4 | 36.8 | 52.6 | 21.1 | 10.5 | 0.0 |
| Science | 77.8 | 44.4 | 33.3 | 33.3 | 11.1 | 0.0 |
| Avg | 71.2 | 54.8 | 58.0 | 28.3 | 12.6 | 2.5 |

For a more detailed performance analysis, please see our paper.

Significant research is still required to fully explore potential bias, fairness, and safety issues with this language model.

Please recognize that our evaluation of Meditron-7B's helpfulness, risk, and bias is highly limited.

Thus, as we noted in the safety notice, we strongly advise against any deployment in medical applications without further alignment and rigorous evaluation!

### Recommendations

**IMPORTANT!**

Users (both direct and downstream) should be made aware of the risks, biases, and limitations of the model.

While this model is capable of generating natural language text, we have only begun to explore this capability and its limitations.

Understanding these limitations is especially important in a domain like medicine.

Therefore, we strongly recommend against using this model in production for natural language generation or for professional purposes related to health and medicine.

## Training Details

### Training Data

Meditron’s domain-adaptive pre-training corpus GAP-Replay combines 48.1B tokens from four corpora:

- [**Clinical Guidelines**](https://huggingface.co/datasets/epfl-llm/guidelines): a new dataset of 46K internationally-recognized clinical practice guidelines from various healthcare-related sources, including hospitals and international organizations.

- **Medical Paper Abstracts**: 16.1M abstracts extracted from closed-access PubMed and PubMed Central papers.

- **Medical Papers**: full-text articles extracted from 5M publicly available PubMed and PubMed Central papers.

- **Replay Data**: 400M tokens of general domain pretraining data sampled from [RedPajama-v1](https://huggingface.co/datasets/togethercomputer/RedPajama-Data-1T)

<img width=75% src="gap-replay.png" alt="Alt text" title="Meditron-logo">

#### Data Preprocessing

Please see the detailed preprocessing procedure in our paper.

### Training Procedure

We used the [Megatron-LLM](https://github.com/epfLLM/Megatron-LLM) distributed training library, a derivative of Nvidia's Megatron LM project, to optimize training efficiency.

Hardware consists of 1 node of 8x NVIDIA A100 (80GB) SXM GPUs connected by NVLink and NVSwitch with a single Nvidia ConnectX-6 DX network card and equipped with 2 x AMD EPYC 7543 32-Core Processors and 512 GB of RAM.

Our three-way parallelism scheme uses:

- Data Parallelism (DP -- different GPUs process different subsets of the batches) of 2,

- Pipeline Parallelism (PP -- different GPUs process different layers) of 4,

- Tensor Parallelism (TP -- different GPUs process different subtensors for matrix multiplication) of 1.
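As a quick consistency check, the three parallel degrees above multiply to the 8 GPUs of the single node, and pipeline parallelism of 4 splits the 32 transformer layers (see Sizes) into stages. The sketch assumes an even split of layers across pipeline stages, which is typical but not stated in this card.

```python
# Parallel degrees from the list above (7B run on one node of 8 A100s).
DP, PP, TP = 2, 4, 1
GPUS_PER_NODE, NUM_NODES = 8, 1
NUM_LAYERS = 32  # from the "Sizes" table

# Every GPU holds exactly one (data replica, pipeline stage, tensor shard) combination.
world_size = DP * PP * TP
assert world_size == GPUS_PER_NODE * NUM_NODES, "parallel degrees must tile the available GPUs"

# Assumption: layers are divided evenly across pipeline stages.
layers_per_stage = NUM_LAYERS // PP
print(world_size, layers_per_stage)  # 8 8
```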

#### Training Hyperparameters

| Hyperparameter | Value |
| --- | --- |
| bf16 | true |
| lr | 3e-4 |
| eps | 1e-5 |
| betas | \[0.9, 0.95\] |
| clip_grad | 1 |
| weight decay | 0.1 |
| DP size | 16 |
| TP size | 4 |
| PP size | 1 |
| seq length | 2048 |
| lr scheduler | cosine |
| min lr | 1e-6 |
| warmup iteration | 2000 |
| micro batch size | 10 |
| global batch size | 1600 |
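The cosine schedule with warmup listed above can be sketched as follows. This assumes linear warmup from 0 followed by cosine decay to `min lr`, a common convention; Megatron-LLM's exact formula may differ, and `TOTAL_ITERS` is a hypothetical value because the card does not state the total number of iterations. The block also computes tokens per optimizer step from the global batch size and sequence length.

```python
import math

MAX_LR = 3e-4
MIN_LR = 1e-6
WARMUP_ITERS = 2000
TOTAL_ITERS = 20_000  # hypothetical: the total iteration count is not given in this card

GLOBAL_BATCH_SIZE = 1600
SEQ_LENGTH = 2048


def learning_rate(step: int) -> float:
    """Linear warmup to MAX_LR, then cosine decay to MIN_LR (assumed convention)."""
    if step < WARMUP_ITERS:
        return MAX_LR * step / WARMUP_ITERS
    progress = min(1.0, (step - WARMUP_ITERS) / (TOTAL_ITERS - WARMUP_ITERS))
    return MIN_LR + 0.5 * (MAX_LR - MIN_LR) * (1.0 + math.cos(math.pi * progress))


tokens_per_step = GLOBAL_BATCH_SIZE * SEQ_LENGTH
print(tokens_per_step)  # 3276800
for step in (0, 1000, 2000, 11_000, 20_000):
    print(step, f"{learning_rate(step):.2e}")
```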

#### Sizes

The model was trained in September 2023.

The model architecture is exactly that of Llama 2, meaning:

| Property | Value |
| --- | --- |
| Model size | 7B |
| Hidden dimension | 4096 |
| Num. attention heads | 32 |
| Num. layers | 32 |
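As a worked check of the sizes above, the parameter count of a Llama 2 7B-style model can be reproduced from its dimensions. The MLP width (11008) and vocabulary size (32000) are standard Llama 2 7B values that this card does not list; the count assumes bias-free linear layers, RMSNorm weight vectors, and untied input/output embeddings, as in Llama 2.

```python
HIDDEN = 4096
NUM_LAYERS = 32
NUM_HEADS = 32
INTERMEDIATE = 11008  # Llama 2 7B MLP width (not listed in this card)
VOCAB = 32000         # Llama 2 tokenizer vocabulary size (not listed in this card)

head_dim = HIDDEN // NUM_HEADS
attention = 4 * HIDDEN * HIDDEN      # q, k, v and output projections, no biases
mlp = 3 * HIDDEN * INTERMEDIATE      # gate, up and down projections (SwiGLU)
norms = 2 * HIDDEN                   # two RMSNorm weight vectors per layer
per_layer = attention + mlp + norms
embeddings = 2 * VOCAB * HIDDEN      # untied input embedding and output head
total = NUM_LAYERS * per_layer + embeddings + HIDDEN  # + final RMSNorm

print(head_dim)       # 128
print(f"{total:,}")   # 6,738,415,616
```

Roughly 96% of the parameters sit in the transformer layers, with about 262M in the embedding and output head.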

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data & Metrics

#### Testing Data

- [MedQA (USMLE)](https://huggingface.co/datasets/bigbio/med_qa)

- [MedMCQA](https://huggingface.co/datasets/medmcqa)

- [PubMedQA](https://huggingface.co/datasets/bigbio/pubmed_qa)

- [MMLU-Medical](https://huggingface.co/datasets/lukaemon/mmlu)

- [MedQA-4-Option](https://huggingface.co/datasets/GBaker/MedQA-USMLE-4-options)

#### Metrics

- Accuracy: suited to evaluating multiple-choice question-answering tasks.

### Results

We finetune meditron-7b, llama-2-7b, pmc-llama-7b on each benchmark (pubmedqa, medmcqa, medqa)'s training data individually.

We report the finetuned models' performance with top token selection as the inference mode.

For MMLU-Medical, models finetuned on MedMCQA are used for inference.

For MedQA-4-Option, models finetuned on MedQA are used for inference.

For a more detailed performance analysis, please see our paper.

| Dataset | meditron-7b | llama-2-7b | pmc-llama-7b | Zephyr-7B-beta* | Mistral-7B-instruct* |
| --- | --- | --- | --- | --- | --- |
| MMLU-Medical | 54.2 | 53.7 | 56.4 | 63.3 | 60.0 |
| PubMedQA | 74.4 | 61.8 | 59.2 | 46.0 | 17.8 |
| MedMCQA | 59.2 | 54.4 | 57.6 | 43.0 | 40.2 |
| MedQA | 47.9 | 44.0 | 42.4 | 42.8 | 32.4 |
| MedQA-4-Option | 52.0 | 49.6 | 49.2 | 48.5 | 41.1 |
| Avg | 57.5 | 52.7 | 53.0 | 48.7 | 38.3 |

**Note**: models with * are already instruction-tuned, so we exclude them from further finetuning on any training data.
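One plausible reading of "top token selection" for multiple-choice benchmarks: take the model's scores for the answer-letter tokens at the answer position and pick the highest, then compute accuracy against the gold letters. The sketch assumes those per-letter log-probabilities are already available; the numbers are placeholders, not Meditron outputs.

```python
def pick_top_token(letter_logprobs: dict[str, float]) -> str:
    """Top-token selection: return the answer letter with the highest log-probability."""
    return max(letter_logprobs, key=letter_logprobs.get)


def accuracy(predictions: list[str], gold: list[str]) -> float:
    """Fraction of items where the predicted letter matches the gold letter."""
    if len(predictions) != len(gold) or not gold:
        raise ValueError("predictions and gold must be non-empty and of equal length")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


# Placeholder scores and gold answers, not real model outputs.
scored_items = [
    {"A": -0.2, "B": -2.1, "C": -3.0, "D": -2.5},
    {"A": -1.9, "B": -0.4, "C": -2.2, "D": -3.1},
    {"A": -1.1, "B": -1.3, "C": -0.9, "D": -2.0},
]
gold = ["A", "B", "D"]
predictions = [pick_top_token(item) for item in scored_items]
print(predictions, f"accuracy = {accuracy(predictions, gold):.3f}")  # ['A', 'B', 'C'] accuracy = 0.667
```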

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

- **Hardware Type:** 8 x NVIDIA A100 (80GB) SXM

- **Total GPU hours:** 588.8

- **Hardware Provider:** EPFL Research Computing Platform

- **Compute Region:** Switzerland

- **Carbon Emitted:** Switzerland has a carbon intensity of 0.016 kgCO2/kWh (https://www.carbonfootprint.com/docs/2018_8_electricity_factors_august_2018_-_online_sources.pdf). 73.6 hours on 8 A100s amounts to 588.8 GPU-hours at a TDP of 400 W. Assuming a Power Usage Effectiveness (PUE) of 1.8, total emissions are estimated to be:

(0.4 kW/GPU × 8 GPUs × 73.6 h × 0.016 kgCO2/kWh) × 1.8 PUE ≈ 6.8 kgCO2.
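The emissions estimate can be reproduced with the same inputs as the formula above: GPU-hours times power per GPU, scaled by the PUE and the grid's carbon intensity. The constant names are illustrative.

```python
GPU_POWER_KW = 0.400      # 400 W TDP per A100
NUM_GPUS = 8
WALL_CLOCK_HOURS = 73.6
CARBON_INTENSITY = 0.016  # kgCO2 per kWh (Switzerland, per the cited source)
PUE = 1.8                 # value used in the formula above

gpu_hours = NUM_GPUS * WALL_CLOCK_HOURS
energy_kwh = GPU_POWER_KW * gpu_hours * PUE  # facility energy, including overhead
emissions_kg = energy_kwh * CARBON_INTENSITY
print(f"{gpu_hours:.1f} GPU-hours, {emissions_kg:.1f} kgCO2")  # 588.8 GPU-hours, 6.8 kgCO2
```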

## Citation

**BibTeX:**

If you use Meditron or its training data, please cite our work:

```

@misc{chen2023meditron70b,

title={MEDITRON-70B: Scaling Medical Pretraining for Large Language Models},

author={Zeming Chen and Alejandro Hernández-Cano and Angelika Romanou and Antoine Bonnet and Kyle Matoba and Francesco Salvi and Matteo Pagliardini and Simin Fan and Andreas Köpf and Amirkeivan Mohtashami and Alexandre Sallinen and Alireza Sakhaeirad and Vinitra Swamy and Igor Krawczuk and Deniz Bayazit and Axel Marmet and Syrielle Montariol and Mary-Anne Hartley and Martin Jaggi and Antoine Bosselut},

year={2023},

eprint={2311.16079},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

@software{epfmedtrn,

author = {Zeming Chen and Alejandro Hernández-Cano and Angelika Romanou and Antoine Bonnet and Kyle Matoba and Francesco Salvi and Matteo Pagliardini and Simin Fan and Andreas Köpf and Amirkeivan Mohtashami and Alexandre Sallinen and Alireza Sakhaeirad and Vinitra Swamy and Igor Krawczuk and Deniz Bayazit and Axel Marmet and Syrielle Montariol and Mary-Anne Hartley and Martin Jaggi and Antoine Bosselut},

title = {MediTron-70B: Scaling Medical Pretraining for Large Language Models},

month = nov,

year = 2023,

url = {https://github.com/epfLLM/meditron}

}

```

|

[

"QUESTION_ANSWERING"

] |

[

"MEDQA",

"PUBMEDQA"

] |

BioNLP

|

m42-health/Llama3-Med42-8B

|

m42-health

|

text-generation

|

[

"transformers",

"safetensors",

"llama",

"text-generation",

"m42",

"health",

"healthcare",

"clinical-llm",

"conversational",

"en",

"arxiv:2408.06142",

"license:llama3",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | 1,719 | 1,724 | 1,966 | 62 |

---

language:

- en

license: llama3

license_name: llama3

pipeline_tag: text-generation

tags:

- m42

- health

- healthcare

- clinical-llm

inference: false

---

# **Med42-v2 - A Suite of Clinically-aligned Large Language Models**

Med42-v2 is a suite of open-access clinical large language models (LLMs), instruction- and preference-tuned by M42 to expand access to medical knowledge. Built on LLaMA-3 and comprising either 8 or 70 billion parameters, these generative AI systems provide high-quality answers to medical questions.

## Key performance metrics:

- Med42-v2-70B outperforms GPT-4.0 in most of the MCQA tasks.

- Med42-v2-70B achieves a MedQA zero-shot performance of 79.10, surpassing the prior state-of-the-art among all openly available medical LLMs.

- Med42-v2-70B sits at the top of the Clinical Elo Rating Leaderboard.

|Models|Elo Score|
|:---:|:---:|
|**Med42-v2-70B**| 1764 |
|Llama3-70B-Instruct| 1643 |
|GPT4-o| 1426 |
|Llama3-8B-Instruct| 1352 |
|Mixtral-8x7b-Instruct| 970 |
|**Med42-v2-8B**| 924 |
|OpenBioLLM-70B| 657 |
|JSL-MedLlama-3-8B-v2.0| 447 |

## Limitations & Safe Use

- The Med42-v2 suite of models is not ready for real clinical use. Extensive human evaluation is under way, as it is required to ensure safety.

- Potential for generating incorrect or harmful information.

- Risk of perpetuating biases in training data.

Use this suite of models responsibly! Do not rely on them for medical usage without rigorous safety testing.

## Model Details

*Disclaimer: This large language model is not yet ready for clinical use without further testing and validation. It should not be relied upon for making medical decisions or providing patient care.*

Starting from Llama 3 models, the Med42-v2 models were instruction-tuned using a dataset of ~1B tokens compiled from different open-access and high-quality sources, including medical flashcards, exam questions, and open-domain dialogues.

**Model Developers:** M42 Health AI Team

**Finetuned from model:** Llama3 - 8B & 70B Instruct

**Context length:** 8k tokens

**Input:** Text only data

**Output:** Model generates text only

**Status:** This is a static model trained on an offline dataset. Future versions of the tuned models will be released as we enhance the model's performance.

**License:** Llama 3 Community License Agreement

**Research Paper:** [Med42-v2: A Suite of Clinical LLMs](https://huggingface.co/papers/2408.06142)

## Intended Use

The Med42-v2 suite of models is being made available for further testing and assessment as AI assistants to enhance clinical decision-making and access to LLMs for healthcare use. Potential use cases include:

- Medical question answering

- Patient record summarization

- Aiding medical diagnosis

- General health Q&A

**Run the model**

You can use the 🤗 Transformers library `text-generation` pipeline to do inference.

```python

import transformers

import torch

model_name_or_path = "m42-health/Llama3-Med42-8B"

pipeline = transformers.pipeline(

"text-generation",

model=model_name_or_path,

torch_dtype=torch.bfloat16,

device_map="auto",

)

messages = [

{

"role": "system",

"content": (

"You are a helpful, respectful and honest medical assistant. You are a second version of Med42 developed by the AI team at M42, UAE. "

"Always answer as helpfully as possible, while being safe. "

"Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. "

"Please ensure that your responses are socially unbiased and positive in nature. If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. "

"If you don’t know the answer to a question, please don’t share false information."

),

},

{"role": "user", "content": "What are the symptoms of diabetes?"},

]

prompt = pipeline.tokenizer.apply_chat_template(

messages, tokenize=False, add_generation_prompt=True  # append the assistant header so the model starts its answer

)

stop_tokens = [

pipeline.tokenizer.eos_token_id,

pipeline.tokenizer.convert_tokens_to_ids("<|eot_id|>"),

]

outputs = pipeline(

prompt,

max_new_tokens=512,

eos_token_id=stop_tokens,

do_sample=True,

temperature=0.4,

top_k=150,

top_p=0.75,

)

print(outputs[0]["generated_text"][len(prompt) :])

```

## Hardware and Software

The training was conducted on the NVIDIA DGX cluster with H100 GPUs, utilizing PyTorch's Fully Sharded Data Parallel (FSDP) framework.

## Evaluation Results

### Open-ended question generation

To ensure a robust evaluation of our model's output quality, we employ the LLM-as-a-Judge approach using Prometheus-8x7b-v2.0. Our assessment uses 4,000 carefully curated publicly accessible healthcare-related questions, generating responses from various models. We then use Prometheus to conduct pairwise comparisons of the answers. Drawing inspiration from the LMSYS Chatbot-Arena methodology, we present the results as Elo ratings for each model.

To maintain fairness and eliminate potential bias from prompt engineering, we used the same simple system prompt for every model throughout the evaluation process.

Below is the scoring rubric we used to prompt Prometheus to select the best answer:

```

### Score Rubric:

Which response is of higher overall quality in a medical context? Consider:

* Relevance: Does it directly address the question?

* Completeness: Does it cover all important aspects, details and subpoints?

* Safety: Does it avoid unsafe practices and address potential risks?

* Ethics: Does it maintain confidentiality and avoid biases?

* Clarity: Is it professional, clear and easy to understand?

```
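A minimal sketch of turning pairwise judge verdicts into Elo ratings, using the classic sequential Elo update. The initial rating of 1000, the K-factor of 32, and the toy match list are assumptions. Chatbot-Arena-style leaderboards may instead fit a Bradley-Terry model over all comparisons, so this is not necessarily the exact procedure behind the ratings below. Ties are not handled.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def compute_elo(matches, initial: float = 1000.0, k: float = 32.0) -> dict[str, float]:
    """Apply one Elo update per (winner, loser) judgment, in order."""
    ratings: dict[str, float] = {}
    for winner, loser in matches:
        ratings.setdefault(winner, initial)
        ratings.setdefault(loser, initial)
        gain = k * (1.0 - expected_score(ratings[winner], ratings[loser]))
        ratings[winner] += gain  # the winner gains what the loser loses
        ratings[loser] -= gain
    return ratings


# Toy judgments between hypothetical models, not the actual evaluation data.
matches = [("model_x", "model_y"), ("model_x", "model_z"), ("model_y", "model_z")]
print(compute_elo(matches))
```

Because updates are applied one at a time, the result depends on match order; that sensitivity is one reason batch fits such as Bradley-Terry are often preferred.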

#### Elo Ratings

|Models|Elo Score|
|:---:|:---:|
|**Med42-v2-70B**| 1764 |
|Llama3-70B-Instruct| 1643 |
|GPT4-o| 1426 |
|Llama3-8B-Instruct| 1352 |
|Mixtral-8x7b-Instruct| 970 |
|**Med42-v2-8B**| 924 |
|OpenBioLLM-70B| 657 |
|JSL-MedLlama-3-8B-v2.0| 447 |


### MCQA Evaluation

Med42-v2 improves performance on every clinical benchmark compared to our previous version, including MedQA, MedMCQA, USMLE, MMLU clinical topics and the MMLU Pro clinical subset. For all evaluations reported so far, we use [EleutherAI's evaluation harness library](https://github.com/EleutherAI/lm-evaluation-harness) and report zero-shot accuracies (unless otherwise stated). We integrated chat templates into the harness and computed the likelihood of the full answer instead of only the tokens "a.", "b.", "c." or "d.".

|Model|MMLU Pro|MMLU|MedMCQA|MedQA|USMLE|
|---:|:---:|:---:|:---:|:---:|:---:|
|**Med42v2-70B**|64.36|87.12|73.20|79.10|83.80|
|**Med42v2-8B**|54.30|75.76|61.34|62.84|67.04|
|OpenBioLLM-70B|64.24|90.40|73.18|76.90|79.01|
|GPT-4.0<sup>†</sup>|-|87.00|69.50|78.90|84.05|
|MedGemini*|-|-|-|84.00|-|
|Med-PaLM-2 (5-shot)*|-|87.77|71.30|79.70|-|
|Med42|-|76.72|60.90|61.50|71.85|
|ClinicalCamel-70B|-|69.75|47.00|53.40|54.30|
|GPT-3.5<sup>†</sup>|-|66.63|50.10|50.80|53.00|
|Llama3-8B-Instruct|48.24|72.89|59.65|61.64|60.38|
|Llama3-70B-Instruct|64.24|85.99|72.03|78.88|83.57|

\* *For MedGemini, results are reported for MedQA without self-training and without search. We note that 0-shot performance is not reported for Med-PaLM 2. Further details can be found at [https://github.com/m42health/med42](https://github.com/m42health/med42).*

<sup>†</sup> *Results as reported in the paper [Capabilities of GPT-4 on Medical Challenge Problems](https://www.microsoft.com/en-us/research/uploads/prod/2023/03/GPT-4_medical_benchmarks.pdf)*.
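The full-answer scoring described in the MCQA section can be sketched as follows, assuming per-token log-probabilities for each option's full answer text have already been obtained from the model. The values and option strings below are placeholders. The optional per-character normalization mirrors the idea behind lm-evaluation-harness's length-normalized `acc_norm` metric, but is a simplification rather than its exact definition.

```python
def answer_score(token_logprobs: list[float], answer_text: str, normalize: bool = False) -> float:
    """Sum of token log-probabilities of the full answer; optionally divided by its character length."""
    total = sum(token_logprobs)
    return total / len(answer_text) if normalize else total


def pick_answer(options: dict[str, list[float]], normalize: bool = False) -> str:
    """Return the option whose full answer text the model finds most likely."""
    return max(options, key=lambda text: answer_score(options[text], text, normalize))


# Placeholder log-probabilities, not real model outputs.
options = {
    "a. short": [-1.0],
    "b. a much longer answer": [-1.5, -0.1],
}
print(pick_answer(options))                  # a. short
print(pick_answer(options, normalize=True))  # b. a much longer answer
```

Summing log-probabilities penalizes longer answers, which is why the choice can flip once scores are normalized by length.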

## Accessing Med42 and Reporting Issues

Please report any software "bug" or other problems through one of the following means:

- Reporting issues with the model: [https://github.com/m42health/med42](https://github.com/m42health/med42)

- Reporting risky content generated by the model, bugs and/or any security concerns: [https://forms.office.com/r/fPY4Ksecgf](https://forms.office.com/r/fPY4Ksecgf)

- M42’s privacy policy available at [https://m42.ae/privacy-policy/](https://m42.ae/privacy-policy/)

- Reporting violations of the Acceptable Use Policy or unlicensed uses of Med42: <[email protected]>

## Acknowledgements

We thank the Torch FSDP team for their robust distributed training framework, the EleutherAI harness team for their valuable evaluation tools, and the Hugging Face Alignment team for their contributions to responsible AI development.

## Citation

```

@misc{med42v2,

Author = {Cl{\'e}ment Christophe and Praveen K Kanithi and Tathagata Raha and Shadab Khan and Marco AF Pimentel},

Title = {Med42-v2: A Suite of Clinical LLMs},

Year = {2024},

Eprint = {arXiv:2408.06142},

url={https://arxiv.org/abs/2408.06142},

}

```

|

[

"QUESTION_ANSWERING",

"SUMMARIZATION"

] |

[

"MEDQA"

] |

BioNLP

|

seongil-dn/bge-m3-756

|

seongil-dn

|

sentence-similarity

|

[

"sentence-transformers",

"safetensors",

"xlm-roberta",

"sentence-similarity",

"feature-extraction",

"generated_from_trainer",

"dataset_size:1138596",

"loss:CachedGISTEmbedLoss",

"arxiv:1908.10084",

"base_model:seongil-dn/unsupervised_20m_3800",

"base_model:finetune:seongil-dn/unsupervised_20m_3800",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] | 1,741 | 1,741 | 12 | 0 |

---

base_model: seongil-dn/unsupervised_20m_3800

library_name: sentence-transformers

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- sentence-similarity

- feature-extraction

- generated_from_trainer

- dataset_size:1138596

- loss:CachedGISTEmbedLoss

widget:

- source_sentence: How many people were reported to have died in the Great Fire of

London in 1666?

sentences:

- City of London 1666. Both of these fires were referred to as "the" Great Fire.

After the fire of 1666, a number of plans were drawn up to remodel the City and

its street pattern into a renaissance-style city with planned urban blocks, squares

and boulevards. These plans were almost entirely not taken up, and the medieval

street pattern re-emerged almost intact. By the late 16th century, London increasingly

became a major centre for banking, international trade and commerce. The Royal

Exchange was founded in 1565 by Sir Thomas Gresham as a centre of commerce for

London's merchants, and gained Royal patronage in

- Great Atlanta fire of 1917 Great Atlanta fire of 1917 The Great Atlanta Fire of

1917 began just after noon on 21 May 1917 in the Old Fourth Ward of Atlanta, Georgia.

It is unclear just how the fire started, but it was fueled by hot temperatures

and strong winds which propelled the fire. The fire, which burned for nearly 10

hours, destroyed and 1,900 structures displacing over 10,000 people. Damages were

estimated at $5 million, ($ million when adjusted for inflation). It was a clear,

warm and sunny day with a brisk breeze from the south. This was not the only fire

of the

- Great Plague of London they had ever been seen ...". Plague cases continued to

occur sporadically at a modest rate until the summer of 1666. On the second and

third of September that year, the Great Fire of London destroyed much of the City

of London, and some people believed that the fire put an end to the epidemic.

However, it is now thought that the plague had largely subsided before the fire

took place. In fact, most of the later cases of plague were found in the suburbs,

and it was the City of London itself that was destroyed by the Fire. According

- Monument to the Great Fire of London Monument to the Great Fire of London The

Monument to the Great Fire of London, more commonly known simply as the Monument,

is a Doric column in London, United Kingdom, situated near the northern end of

London Bridge. Commemorating the Great Fire of London, it stands at the junction

of Monument Street and Fish Street Hill, in height and 202 feet west of the spot

in Pudding Lane where the Great Fire started on 2 September 1666. Constructed

between 1671 and 1677, it was built on the site of St. Margaret's, Fish Street,

the first church to be destroyed by

- 'How to Have Sex in an Epidemic New York City government and organizations within

the LGBT community. The Gay Men''s Health Crisis offered to buy all 5,000 pamphlets

and promote them, with the condition that any mentions of the multifactorial model

be removed from the writing. The authors refused. Berkowitz recounts in an interview

it being "infuriating" that in 1985, the city still hadn''t adopted any standard

safe sex education. The advent of safe sex in urban gay male populations came

too late for many people: by 1983, more than 1,476 people had died from AIDS and

David France estimated that as much as half of all'

- 'Monument to the Great Fire of London six years to complete the 202 ft column.

It was two more years before the inscription (which had been left to Wren — or

to Wren''s choice — to decide upon) was set in place. "Commemorating — with a

brazen disregard for the truth — the fact that ''London rises again...three short

years complete that which was considered the work of ages.''" Hooke''s surviving

drawings show that several versions of the monument were submitted for consideration:

a plain obelisk, a column garnished with tongues of fire, and the fluted Doric

column that was eventually chosen. The real contention came with'

- source_sentence: '"The Claude Francois song ""Comme d''habitude"" (translation ""as

usual"") was a hit in English for Frank Sinatra under what title?"'

sentences:

- Young at Heart (Frank Sinatra song) young, Dick Van Dyke recorded a duet with

his wife, Arlene, at Capital Records Studio in Los Angeles, filmed for the HBO

Special on aging "If I'm not in the Obituary, I'll have Breakfast" starring Carl

Reiner, and featuring other young at heart +90 treasures, Mel Brooks, Norman Lear,

Stan Lee & Betty White among others. Van Dyke was recorded using Frank Sinatra's

microphone. Young at Heart (Frank Sinatra song) "Young at Heart" is a pop standard,

a ballad with music by Johnny Richards and lyrics by Carolyn Leigh. The song was

written and published in 1953, with Leigh contributing

- 'Comme d''habitude a relationship that is falling out of love, while the English

language version is set at the end of a lifetime, approaching death, and looking

back without regret – expressing feelings that are more related to Piaf''s song

"Non, je ne regrette rien". Many artists sang "Comme d''Habitude" in French after

Claude François''s success (and international success through ''"My Way"), notably:

David Bowie has said that in 1968 – the year before Paul Anka acquired the French

song – his manager, Kenneth Pitt, asked him to write English lyrics for "Comme

d''habitude" but that his version, titled "Even a Fool'

- Frank Sinatra Me" with Billy May, designed as a musical world tour. It reached

the top spot on the Billboard album chart in its second week, remaining at the

top for five weeks, and was nominated for the Grammy Award for Album of the Year

at the inaugural Grammy Awards. The title song, "Come Fly With Me", written especially

for him, would become one of his best known standards. On May 29 he recorded seven

songs in a single session, more than double the usual yield of a recording session,

and an eighth was planned, "Lush Life", but Sinatra found it too

- Frank Sinatra Original Song. Sinatra released "Softly, as I Leave You", and collaborated

with Bing Crosby and Fred Waring on "America, I Hear You Singing", a collection

of patriotic songs recorded as a tribute to the assassinated President John F.

Kennedy. Sinatra increasingly became involved in charitable pursuits in this period.

In 1961 and 1962 he went to Mexico, with the sole purpose of putting on performances

for Mexican charities, and in July 1964 he was present for the dedication of the

Frank Sinatra International Youth Center for Arab and Jewish children in Nazareth.

Sinatra's phenomenal success in 1965, coinciding with his

- Comme ci comme ça (Basim song) to the charm of it all. Working both Danish and

Moroccan Arabic, Basim sings about a girl he is ready to commit to. It doesn’t

mater what she wants to do — it’s comme ci comme ça — and he just wants her."

An official music video to accompany the release of "Comme ci comme ça" was first

released onto YouTube on 20 September 2017 at a total length of three minutes

and twelve seconds. Comme ci comme ça (Basim song) "Comme ci comme ça" is a song

performed by Danish pop singer and songwriter Basim, featuring vocals from Gilli.

- Personal life of Frank Sinatra A third child, Christina Sinatra, known as "Tina",

was born on June 20, 1948. Nancy Barbato Sinatra and Frank Sinatra announced their

separation on Valentine's Day, February 14, 1950, with Frank's additional extra-marital

affair with Ava Gardner compounding his transgressions and becoming public knowledge

once again. After originally just seeking a legal separation, Frank and Nancy

Sinatra decided some months later to file for divorce, and this divorce became

legally final on October 29, 1951. Frank Sinatra's affair and relationship with

Gardner had become more and more serious, and she later became his second wife.

What was perhaps less widely

- source_sentence: What was the name of the first Indiana Jones movie?

sentences:

- Indiana Jones and the Temple of Doom point. Old-time, 15-part movie serials didn't

have shape. They just went on and on and on, which is what "Temple of Doom" does

with humor and technical invention." Neal Gabler commented that "I think in some

ways, "Indiana Jones and the Temple of Doom" was better than "Raiders of the Lost

Ark". In some ways it was less. In sum total, I'd have to say I enjoyed it more.

That doesn't mean it's better necessarily, but I got more enjoyment out of it."

Colin Covert of the "Star Tribune" called the film "sillier, darkly violent and

a bit dumbed down,

- Indiana Jones and the Temple of Doom (1985 video game) Theme music plays in the

background which is the best part of the game. Most of the sound effects are not

sharp and not enough of them exist. "Indiana Jones and the Temple of Doom" is

a bad game all the way around. It looks bad, has bad controls, and is way too

short." Indiana Jones and the Temple of Doom (1985 video game) Indiana Jones and

The Temple of Doom is a 1985 action arcade game developed and published by Atari

Games, based on the 1984 film of the same name, the second film in the "Indiana

Jones" franchise.

- Indiana Jones and the Spear of Destiny Indiana Jones and the Spear of Destiny

Indiana Jones and The Spear of Destiny is a four-issue comic book mini-series

published by Dark Horse Comics from April to July 1995. It was their seventh series

about the adult Indiana Jones. Indiana Jones reached for the Holy Grail, perched

in a crack in the Temple of the Sun. Hanging onto him, his father, Professor Henry

Jones urged him to let it go, and Indy turned back and let his father help him

up. As the Joneses ride out into the Canyon of the Crescent Moon with Marcus Brody

and Sallah, they

- Lego Indiana Jones sets" The line was discontinued in 2010, but since Lucas plans

to make a fifth installment to the franchise, the sets may be re-released along

with new sets of the possible fifth Indiana Jones film. Due to the fact Disney

bought Lucasfilm and will be making a new Indiana Jones movie, chances of new

sets are high. The Indiana Jones sets proved to be one of the most popular Lego

themes, and by the end of 2008 were credited, along with Lego Star Wars, of boosting

the Lego Group's profits within a stagnant toy market. The product line was said

- Indiana Jones and the Staff of Kings point-and-click adventure "Indiana Jones

and the Fate of Atlantis". GameSpot criticized its "terribly laid-out checkpoints",

"out-of-date" visuals, and "atrocious, annoying motion controls". Indiana Jones

and the Staff of Kings The game was initially developed for the higher-end PlayStation

3 and Xbox 360 systems, before switching to the aforementioned lower-end platforms.

As a result, both systems never saw a proper "Indiana Jones" video game being

released besides the "" duology. The plot centers around Indy's search for the

Staff of Moses. The Wii version of the game includes an exclusive co-op story

mode (with Indy and Henry Jones Sr.) and unlockable

- 'Indiana Jones and the Last Crusade: The Graphic Adventure Indiana Jones and the

Last Crusade: The Graphic Adventure Indiana Jones and the Last Crusade: The Graphic

Adventure is a graphic adventure game, released in 1989 (to coincide with the

release of the film of the same name), published by Lucasfilm Games (now LucasArts).

It was the third game to use the SCUMM engine. "Last Crusade" was one of the most

innovative of the LucasArts adventures. It expanded on LucasArts'' traditional

adventure game structure by including a flexible point system—the IQ score, or

"Indy Quotient"—and by allowing the game to be completed in several different

ways. The point system was'

- source_sentence: '"Who was the Anglo-Irish scientist who, in the 17th century, discovered

that ""the volume of a given mass of gas at a given temperature is inversely proportional

to its pressure""?"'

sentences:

- 'Gay-Lussac''s law Gay-Lussac''s law Gay-Lussac''s law can refer to several discoveries

made by French chemist Joseph Louis Gay-Lussac (1778–1850) and other scientists

in the late 18th and early 19th centuries pertaining to thermal expansion of gases

and the relationship between temperature, volume, and pressure. It states that

the pressure of a given mass of gas varies directly with the absolute temperature

of the gas, when the volume is kept constant. Mathematically, it can be written

as: P/T=constant, Gay-Lussac is most often recognized for the Pressure Law which

established that the pressure of an enclosed gas is directly proportional to its

temperature and'

- 'Gas constant "V" is the volume of gas (SI unit cubic metres), "n" is the amount

of gas (SI unit moles), "m" is the mass (SI unit kilograms) contained in "V",

and "T" is the thermodynamic temperature (SI unit kelvins). "R" is the molar-weight-specific

gas constant, discussed below. The gas constant is expressed in the same physical

units as molar entropy and molar heat capacity. From the general equation "PV"

= "nRT" we get: where "P" is pressure, "V" is volume, "n" is number of moles of

a given substance, and "T" is temperature. As pressure is defined as force per

unit'

- The Boy Who Was a King term. The film presents not only the life of the former

Tsar, but also intertwines within the story vignettes of various Bulgarians, who

were supporting him, sending him gifts, or merely tattooing his face on their

body. The story is told through personal footage and vast amounts of archive material.

The film received praise for its editing and use of archives with Variety's Robert

Koehler writing that "Pic’s terrific use of archival footage includes an exiled

Simeon interviewed in the early ’60s, disputing his playboy rep." and "Editing

is aces." The Boy Who Was a King The Boy Who Was

- Francis Hauksbee In 1708, Hauksbee independently discovered Charles's law of gases,

which states that, for a given mass of gas at a constant pressure, the volume

of the gas is proportional to its temperature. Hauksbee published accounts of

his experiments in the Royal Society's journal "Philosophical Transactions". In

1709 he self-published "Physico-Mechanical Experiments on Various Subjects" which

collected together many of these experiments along with discussion that summarized

much of his scientific work. An Italian translation was published in 1716. A second

edition was published posthumously in 1719. There were also translations to Dutch

(1735) and French (1754). The Royal Society Hauksbee

- 'Boyle''s law air moves from high to low pressure. Related phenomena: Other gas

laws: Boyle''s law Boyle''s law, sometimes referred to as the Boyle–Mariotte law,

or Mariotte''s law (especially in France), is an experimental gas law that describes

how the pressure of a gas tends to increase as the volume of the container decreases.

A modern statement of Boyle''s law is The absolute pressure exerted by a given

mass of an ideal gas is inversely proportional to the volume it occupies if the

temperature and amount of gas remain unchanged within a closed system. Mathematically,

Boyle''s law can be stated as or'

- Boyle's law of the gas, and "k" is a constant. The equation states that the product

of pressure and volume is a constant for a given mass of confined gas and this

holds as long as the temperature is constant. For comparing the same substance

under two different sets of conditions, the law can be usefully expressed as The

equation shows that, as volume increases, the pressure of the gas decreases in

proportion. Similarly, as volume decreases, the pressure of the gas increases.

The law was named after chemist and physicist Robert Boyle, who published the

original law in 1662. This relationship

- source_sentence: Peter Stuyvesant, born in Holland, became Governor of which American

city in 1647?

sentences:

- Peter Stuyvesant at the corner of Thirteenth Street and Third Avenue until 1867

when it was destroyed by a storm, bearing fruit almost to the last. The house

was destroyed by fire in 1777. He also built an executive mansion of stone called

Whitehall. In 1645, Stuyvesant married Judith Bayard (–1687) of the Bayard family.

Her brother, Samuel Bayard, was the husband of Stuyvesant's sister, Anna Stuyvesant.

Petrus and Judith had two sons together. He died in August 1672 and his body was

entombed in the east wall of St. Mark's Church in-the-Bowery, which sits on the

site of Stuyvesant’s family chapel.

- 'Peter Stuyvesant (cigarette) can amount to millions of dollars and finally criminal

prosecution - if companies wilfully break the laws. However last year, when questioned

on why no such action was being pursued against Imperial Tobacco a spokeswoman

for Federal Health said: ""No instances of non-compliance with the Act have been

identified by the Department that warrant the initiation of Court proceedings

in the first instance, and without attempting alternative dispute resolution to

achieve compliance"". Peter Stuyvesant is or was sold in the following countries:

Canada, United States, United Kingdom, Luxembourg, Belgium, The Netherlands, Germany,

France, Austria, Switzerland, Spain, Italy, Czech Republic, Greece,'

- Jochem Pietersen Kuyter September 25, 1647, until the city was incorporated, in

1653, when he was made schout (sheriff). Kuyter twice came in conflict with the

Director of New Netherland. Kuyter was a man of good education, what is evident

by his dealings with Willem Kieft., who he believed damaged the colony with his

policies and the start of Kieft's War in 1643. In 1647, when Peter Stuyvesant

arrived in New Amsterdam to replace Kieft, Kuyter and Cornelis Melyn acting in

name of the citizens of New Amsterdam, brought charges against the outgoing governor,

demanding an investigation of his conduct while in office.

- Peter Stuyvesant (cigarette) half of its regular users"" and called the packaging

changes ""the ultimate sick joke from big tobacco"". In 2013, it was reported

that Imperial Tobacco Australia had sent marketing material to WA tobacco retailers

which promotes limited edition packs of "Peter Stuyvesant + Loosie", which came

with 26 cigarettes. The material included images of a young woman with pink hair

putting on lipstick and men on the streets of New York and also included a calendar

and small poster that were clearly intended to glamorise smoking. Anti-smoking

campaigner Mike Daube said although the material did not break the law because

- 'Peter Stuyvesant but the order was soon revoked under pressure from the States

of Holland and the city of Amsterdam. Stuyvesant prepared against an attack by

ordering the citizens to dig a ditch from the North River to the East River and

to erect a fortification. In 1653, a convention of two deputies from each village

in New Netherland demanded reforms, and Stuyvesant commanded that assembly to

disperse, saying: "We derive our authority from God and the company, not from

a few ignorant subjects." In the summer of 1655, he sailed down the Delaware River

with a fleet of seven vessels and'

- Peter Stuyvesant Dutch Reformed church, a Calvinist denomination, holding to the

Three Forms of Unity (Belgic Confession, Heidelberg Catechism, Canons of Dordt).

The English were Anglicans, holding to the 39 Articles, a Protestant confession,

with bishops. In 1665, Stuyvesant went to the Netherlands to report on his term

as governor. On his return to the colony, he spent the remainder of his life on

his farm of sixty-two acres outside the city, called the Great Bouwerie, beyond

which stretched the woods and swamps of the village of Nieuw Haarlem. A pear tree

that he reputedly brought from the Netherlands in 1647 remained

---

# SentenceTransformer based on seongil-dn/unsupervised_20m_3800

This is a [sentence-transformers](https://www.SBERT.net) model finetuned from [seongil-dn/unsupervised_20m_3800](https://huggingface.co/seongil-dn/unsupervised_20m_3800). It maps sentences & paragraphs to a 1024-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

## Model Details

### Model Description

- **Model Type:** Sentence Transformer

- **Base model:** [seongil-dn/unsupervised_20m_3800](https://huggingface.co/seongil-dn/unsupervised_20m_3800) <!-- at revision 1cda749f242e2b5c9e4f3c1122a61e76fec1fee5 -->

- **Maximum Sequence Length:** 1024 tokens

- **Output Dimensionality:** 1024 dimensions

- **Similarity Function:** Cosine Similarity

<!-- - **Training Dataset:** Unknown -->

<!-- - **Language:** Unknown -->

<!-- - **License:** Unknown -->

### Model Sources

- **Documentation:** [Sentence Transformers Documentation](https://sbert.net)

- **Repository:** [Sentence Transformers on GitHub](https://github.com/UKPLab/sentence-transformers)

- **Hugging Face:** [Sentence Transformers on Hugging Face](https://huggingface.co/models?library=sentence-transformers)

### Full Model Architecture

```
SentenceTransformer(
  (0): Transformer({'max_seq_length': 1024, 'do_lower_case': False}) with Transformer model: XLMRobertaModel
  (1): Pooling({'word_embedding_dimension': 1024, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})
  (2): Normalize()
)
```
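The `Pooling` module above is configured with `pooling_mode_cls_token: True` and is followed by `Normalize()`. The NumPy sketch below illustrates what those two stages compute. It also shows why the configured cosine similarity reduces to a plain dot product on this model's outputs. It runs on random stand-in tensors rather than real XLM-RoBERTa outputs and is an illustration of the configuration, not the library's own implementation.

```python
import numpy as np

def cls_pool_and_normalize(token_embeddings: np.ndarray) -> np.ndarray:
    """Mimic Pooling(CLS) + Normalize() on token embeddings of shape (batch, seq_len, dim).

    Assumes position 0 of each sequence holds the CLS token (as in XLM-RoBERTa).
    """
    cls = token_embeddings[:, 0, :]                      # keep only the CLS vector per sequence
    norms = np.linalg.norm(cls, axis=1, keepdims=True)
    return cls / np.clip(norms, 1e-12, None)             # L2-normalize, guarding against zero vectors

def cosine_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Cosine similarity computed from scratch, for comparison."""
    a_n = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_n = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a_n @ b_n.T

# Random stand-in for transformer output: 3 sequences, 8 tokens, 1024 dimensions.
rng = np.random.default_rng(0)
hidden = rng.normal(size=(3, 8, 1024))

emb = cls_pool_and_normalize(hidden)
print(emb.shape)  # (3, 1024)
# Once embeddings are unit length, the dot product equals cosine similarity.
print(np.allclose(emb @ emb.T, cosine_matrix(hidden[:, 0, :], hidden[:, 0, :])))  # True
```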

## Usage

### Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash
pip install -U sentence-transformers
```

Then you can load this model and run inference.

```python
from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub
model = SentenceTransformer("seongil-dn/bge-m3-756")
# Run inference
sentences = [
    'Peter Stuyvesant, born in Holland, became Governor of which American city in 1647?',
    'Peter Stuyvesant (cigarette) half of its regular users"" and called the packaging changes ""the ultimate sick joke from big tobacco"". In 2013, it was reported that Imperial Tobacco Australia had sent marketing material to WA tobacco retailers which promotes limited edition packs of "Peter Stuyvesant + Loosie", which came with 26 cigarettes. The material included images of a young woman with pink hair putting on lipstick and men on the streets of New York and also included a calendar and small poster that were clearly intended to glamorise smoking. Anti-smoking campaigner Mike Daube said although the material did not break the law because',
    'Peter Stuyvesant (cigarette) can amount to millions of dollars and finally criminal prosecution - if companies wilfully break the laws. However last year, when questioned on why no such action was being pursued against Imperial Tobacco a spokeswoman for Federal Health said: ""No instances of non-compliance with the Act have been identified by the Department that warrant the initiation of Court proceedings in the first instance, and without attempting alternative dispute resolution to achieve compliance"". Peter Stuyvesant is or was sold in the following countries: Canada, United States, United Kingdom, Luxembourg, Belgium, The Netherlands, Germany, France, Austria, Switzerland, Spain, Italy, Czech Republic, Greece,',
]
embeddings = model.encode(sentences)
print(embeddings.shape)
# [3, 1024]

# Get the similarity scores for the embeddings
similarities = model.similarity(embeddings, embeddings)
print(similarities.shape)
# [3, 3]
```
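The final `Normalize()` module makes every embedding unit length, so ranking passages against a question needs only dot products. The NumPy sketch below shows this on hand-made toy vectors. `rank_passages` is a hypothetical helper for illustration and is not part of the Sentence Transformers API.

```python
import numpy as np

def rank_passages(query_emb: np.ndarray, passage_embs: np.ndarray, top_k: int = 3):
    """Return (index, score) pairs for the top_k passages most similar to one query.

    Assumes inputs are already L2-normalized (as this model's Normalize() produces),
    so the dot product equals cosine similarity.
    """
    scores = passage_embs @ query_emb          # shape: (num_passages,)
    order = np.argsort(-scores)[:top_k]        # highest score first
    return [(int(i), float(scores[i])) for i in order]

# Toy unit vectors standing in for model.encode(...) outputs.
query = np.array([1.0, 0.0])
passages = np.array([
    [0.0, 1.0],   # orthogonal to the query
    [1.0, 0.0],   # same direction as the query
    [0.6, 0.8],   # partially aligned
])
print(rank_passages(query, passages, top_k=2))  # [(1, 1.0), (2, 0.6)]
```

With the real model, the outputs of `model.encode(question)` (shape `(1024,)`) and `model.encode(passages)` (shape `(n, 1024)`) could be passed in directly. For larger corpora, Sentence Transformers also provides `sentence_transformers.util.semantic_search`, which performs the same kind of top-k search.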


## Training Details
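This model's tags list `loss:CachedGISTEmbedLoss`, and each training example pairs an `anchor` with a `positive` and several hard negatives. The NumPy sketch below is a simplified illustration of the GISTEmbed idea as we understand it, computed for one batch. A frozen guide model flags candidates it scores above the true positive, and those are treated as likely false negatives and excluded from the contrastive softmax. This is not the Sentence Transformers implementation. It omits the gradient caching that the "Cached" variant adds for large batches and uses only anchor-to-candidate scores. The guide model, the temperature (0.01 here), and the batch layout are assumptions, since this card does not state them.

```python
import numpy as np

def gist_style_loss(anchor, candidates, guide_anchor, guide_candidates, temperature=0.01):
    """Simplified GIST-style contrastive loss for one batch (no gradient caching).

    anchor:           (B, d) unit vectors from the model being trained
    candidates:       (N, d) unit vectors; rows 0..B-1 are the positives for anchors 0..B-1,
                      the remaining rows are hard / in-batch negatives
    guide_anchor, guide_candidates: the same texts embedded by a frozen guide model
    """
    B = anchor.shape[0]
    idx = np.arange(B)
    logits = anchor @ candidates.T / temperature            # (B, N) scaled cosine scores
    guide = guide_anchor @ guide_candidates.T                # (B, N) guide-model scores
    pos_guide = guide[idx, idx][:, None]                     # guide score of each true pair
    # Candidates the guide rates above the true positive are likely false negatives.
    mask = guide > pos_guide
    mask[idx, idx] = False                                   # never drop the positive itself
    logits = np.where(mask, -np.inf, logits)
    # Cross-entropy with the positive at column i for anchor i (stable log-softmax).
    m = logits.max(axis=1, keepdims=True)
    log_probs = logits - m - np.log(np.exp(logits - m).sum(axis=1, keepdims=True))
    return float(-log_probs[idx, idx].mean())

def unit(x):
    return x / np.linalg.norm(x, axis=1, keepdims=True)

rng = np.random.default_rng(0)
B, k, d = 4, 5, 16                      # 4 anchors with 5 hard negatives each (toy sizes)
anchor = unit(rng.normal(size=(B, d)))
positives = unit(anchor + 0.1 * rng.normal(size=(B, d)))
negatives = unit(rng.normal(size=(B * k, d)))
candidates = np.vstack([positives, negatives])
# Reuse the same vectors as a stand-in "guide" model for this toy run.
loss = gist_style_loss(anchor, candidates, anchor, candidates)
print(loss)
```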

### Training Dataset

#### Unnamed Dataset

* Size: 1,138,596 training samples

* Columns: <code>anchor</code>, <code>positive</code>, <code>negative</code>, <code>negative_2</code>, <code>negative_3</code>, <code>negative_4</code>, and <code>negative_5</code>

* Approximate statistics based on the first 1000 samples:

| | anchor | positive | negative | negative_2 | negative_3 | negative_4 | negative_5 |
|:--------|:---------|:---------|:---------|:---------|:---------|:---------|:---------|
| type | string | string | string | string | string | string | string |
| details | <ul><li>min: 9 tokens</li><li>mean: 22.32 tokens</li><li>max: 119 tokens</li></ul> | <ul><li>min: 127 tokens</li><li>mean: 157.45 tokens</li><li>max: 420 tokens</li></ul> | <ul><li>min: 122 tokens</li><li>mean: 154.65 tokens</li><li>max: 212 tokens</li></ul> | <ul><li>min: 122 tokens</li><li>mean: 155.52 tokens</li><li>max: 218 tokens</li></ul> | <ul><li>min: 122 tokens</li><li>mean: 156.04 tokens</li><li>max: 284 tokens</li></ul> | <ul><li>min: 124 tokens</li><li>mean: 156.3 tokens</li><li>max: 268 tokens</li></ul> | <ul><li>min: 121 tokens</li><li>mean: 156.15 tokens</li><li>max: 249 tokens</li></ul> |

* Samples:

| anchor | positive | negative | negative_2 | negative_3 | negative_4 | negative_5 |

|:---------------------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|:-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------
---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

| <code>What African country is projected to pass the United States in population by the year 2055?</code> | <code>African immigration to the United States officially 40,000 African immigrants, although it has been estimated that the population is actually four times this number when considering undocumented immigrants. The majority of these immigrants were born in Ethiopia, Egypt, Nigeria, and South Africa. African immigrants like many other immigrant groups are likely to establish and find success in small businesses. Many Africans that have seen the social and economic stability that comes from ethnic enclaves such as Chinatowns have recently been establishing ethnic enclaves of their own at much higher rates to reap the benefits of such communities. Such examples include Little Ethiopia in Los Angeles and</code> | <code>What Will Happen to the Gang Next Year? watching television at the time of the broadcast. This made it the lowest-rated episode in "30 Rock"<nowiki>'</nowiki>s history. and a decrease from the previous episode "The Return of Avery Jessup" (2.92 million) What Will Happen to the Gang Next Year? "What Will Happen to the Gang Next Year?" is the twenty-second and final episode of the sixth season of the American television comedy series "30 Rock", and the 125th overall episode of the series. It was directed by Michael Engler, and written by Matt Hubbard. The episode originally aired on the National Broadcasting Company (NBC) network in the United States</code> | <code>Christianity in the United States Christ is the fifth-largest denomination, the largest Pentecostal church, and the largest traditionally African-American denomination in the nation. Among Eastern Christian denominations, there are several Eastern Orthodox and Oriental Orthodox churches, with just below 1 million adherents in the US, or 0.4% of the total population. Christianity was introduced to the Americas as it was first colonized by Europeans beginning in the 16th and 17th centuries. Going forward from its foundation, the United States has been called a Protestant nation by a variety of sources. Immigration further increased Christian numbers. Today most Christian churches in the United States are either</code> | <code>What Will Happen to the Gang Next Year? What Will Happen to the Gang Next Year? "What Will Happen to the Gang Next Year?" is the twenty-second and final episode of the sixth season of the American television comedy series "30 Rock", and the 125th overall episode of the series. It was directed by Michael Engler, and written by Matt Hubbard. The episode originally aired on the National Broadcasting Company (NBC) network in the United States on May 17, 2012. In the episode, Jack (Alec Baldwin) and Avery (Elizabeth Banks) seek to renew their vows; Criss (James Marsden) sets out to show Liz (Tina Fey) he can pay</code> | <code>History of the Jews in the United States Representatives by Rep. Samuel Dickstein (D; New York). This also failed to pass. During the Holocaust, fewer than 30,000 Jews a year reached the United States, and some were turned away due to immigration policies. The U.S. did not change its immigration policies until 1948. Currently, laws requiring teaching of the Holocaust are on the books in five states. The Holocaust had a profound impact on the community in the United States, especially after 1960, as Jews tried to comprehend what had happened, and especially to commemorate and grapple with it when looking to the future. Abraham Joshua Heschel summarized</code> | <code>Public holidays in the United States will have very few customers that day. The labor force in the United States comprises about 62% (as of 2014) of the general population. In the United States, 97% of the private sector businesses determine what days this sector of the population gets paid time off, according to a study by the Society for Human Resource Management. The following holidays are observed by the majority of US businesses with paid time off: This list of holidays is based off the official list of federal holidays by year from the US Government. The holidays however are at the discretion of employers</code> |

| <code>Which is the largest species of the turtle family?</code> | <code>Loggerhead sea turtle turtle is debated, but most authors consider it a single polymorphic species. Molecular genetics has confirmed hybridization of the loggerhead sea turtle with the Kemp's ridley sea turtle, hawksbill sea turtle, and green sea turtles. The extent of natural hybridization is not yet determined; however, second-generation hybrids have been reported, suggesting some hybrids are fertile. Although evidence is lacking, modern sea turtles probably descended from a single common ancestor during the Cretaceous period. Like all other sea turtles except the leatherback, loggerheads are members of the ancient family Cheloniidae, and appeared about 40 million years ago. Of the six species</code> | <code>Convention on the Conservation of Migratory Species of Wild Animals take joint action. At May 2018, there were 126 Parties to the Convention. The CMS Family covers a great diversity of migratory species. The Appendices of CMS include many mammals, including land mammals, marine mammals and bats; birds; fish; reptiles and one insect. Among the instruments, AEWA covers 254 species of birds that are ecologically dependent on wetlands for at least part of their annual cycle. EUROBATS covers 52 species of bat, the Memorandum of Understanding on the Conservation of Migratory Sharks seven species of shark, the IOSEA Marine Turtle MOU six species of marine turtle and the Raptors MoU</code> | <code>Razor-backed musk turtle Razor-backed musk turtle The razor-backed musk turtle ("Sternotherus carinatus") is a species of turtle in the family Kinosternidae. The species is native to the southern United States. There are no subspecies that are recognized as being valid. "S. carinatus" is found in the states of Alabama, Arkansas, Louisiana, Mississippi, Oklahoma, and Texas. The razor-backed musk turtle grows to a straight carapace length of about . It has a brown-colored carapace, with black markings at the edges of each scute. The carapace has a distinct, sharp keel down the center of its length, giving the species its common name. The body</code> | <code>African helmeted turtle African helmeted turtle The African helmeted turtle ("Pelomedusa subrufa"), also known commonly as the marsh terrapin, the crocodile turtle, or in the pet trade as the African side-necked turtle, is a species of omnivorous side-necked terrapin in the family Pelomedusidae. The species naturally occurs in fresh and stagnant water bodies throughout much of Sub-Saharan Africa, and in southern Yemen. The marsh terrapin is typically a rather small turtle, with most individuals being less than in straight carapace length, but one has been recorded with a length of . It has a black or brown carapace. The top of the tail</code> | <code>Box turtle Box turtle Box turtles are North American turtles of the genus Terrapene. Although box turtles are superficially similar to tortoises in terrestrial habits and overall appearance, they are actually members of the American pond turtle family (Emydidae). The twelve taxa which are distinguished in the genus are distributed over four species. They are largely characterized by having a domed shell, which is hinged at the bottom, allowing the animal to close its shell tightly to escape predators. The genus name "Terrapene" was coined by Merrem in 1820 as a genus separate from "Emys" for those species which had a sternum</code> | <code>Vallarta mud turtle Vallarta mud turtle The Vallarta mud turtle ("Kinosternon vogti") is a recently identified species of mud turtle in the family Kinosternidae. While formerly considered conspecific with the Jalisco mud turtle, further studies indicated that it was a separate species. It can be identified by a combination of the number of plastron and carapace scutes, body size, and the distinctive yellow rostral shield in males. It is endemic to Mexican state of Jalisco. It is only known from a few human-created or human-affected habitats (such as small streams and ponds) found around Puerto Vallarta. It is one of only 3 species</code> |

| <code>How many gallons of beer are in an English barrel?</code> | <code>Low-alcohol beer Prohibition in the United States. Near beer could not legally be labeled as "beer" and was officially classified as a "cereal beverage". The public, however, almost universally called it "near beer". The most popular "near beer" was Bevo, brewed by the Anheuser-Busch company. The Pabst company brewed "Pablo", Miller brewed "Vivo", and Schlitz brewed "Famo". Many local and regional breweries stayed in business by marketing their own near-beers. By 1921 production of near beer had reached over 300 million US gallons (1 billion L) a year (36 L/s). A popular illegal practice was to add alcohol to near beer. The</code> | <code>Keg terms "half-barrel" and "quarter-barrel" are derived from the U.S. beer barrel, legally defined as being equal to 31 U.S. gallons (this is not the same volume as some other units also known as "barrels"). A 15.5 U.S. gallon keg is also equal to: However, beer kegs can come in many sizes: In European countries the most common keg size is 50 liters. This includes the UK, which uses a non-metric standard keg of 11 imperial gallons, which is coincidentally equal to . The German DIN 6647-1 and DIN 6647-2 have also defined kegs in the sizes of 30 and 20</code> | <code>Beer in Chile craft beers. They are generally low or very low volume producers. In Chile there are more than 150 craft beer producers distributed along the 15 Chilean Regions. The list below includes: Beer in Chile The primary beer brewed and consumed in Chile is pale lager, though the country also has a tradition of brewing corn beer, known as chicha. Chile’s beer history has a strong German influence – some of the bigger beer producers are from the country’s southern lake district, a region populated by a great number of German immigrants during the 19th century. Chile also produces English ale-style</code> | <code>Barrel variation. In modern times, produce barrels for all dry goods, excepting cranberries, contain 7,056 cubic inches, about 115.627 L. Barrel A barrel, cask, or tun is a hollow cylindrical container, traditionally made of wooden staves bound by wooden or metal hoops. Traditionally, the barrel was a standard size of measure referring to a set capacity or weight of a given commodity. For example, in the UK a barrel of beer refers to a quantity of . Wine was shipped in barrels of . Modern wooden barrels for wine-making are either made of French common oak ("Quercus robur") and white oak</code> | <code>The Rare Barrel The Rare Barrel The Rare Barrel is a brewery and brewpub in Berkeley, California, United States, that exclusively produces sour beers. Founders Jay Goodwin and Alex Wallash met while attending UCSB. They started home-brewing in their apartment and decided that they would one day start a brewery together. Goodwin started working at The Bruery, where he worked his way from a production assistant to brewer, eventually becoming the head of their barrel aging program. The Rare Barrel brewed its first batch of beer in February 2013, and opened its tasting room on December 27, 2013. The Rare Barrel was named</code> | <code>Barrel (unit) Barrel (unit) A barrel is one of several units of volume applied in various contexts; there are dry barrels, fluid barrels (such as the UK beer barrel and US beer barrel), oil barrels and so on. For historical reasons the volumes of some barrel units are roughly double the volumes of others; volumes in common usage range from about . In many connections the term "drum" is used almost interchangeably with "barrel". Since medieval times the term barrel as a unit of measure has had various meanings throughout Europe, ranging from about 100 litres to 1000 litres. The name was</code> |

* Loss: [<code>CachedGISTEmbedLoss</code>](https://sbert.net/docs/package_reference/sentence_transformer/losses.html#cachedgistembedloss) with these parameters:

```json

{'guide': SentenceTransformer(

(0): Transformer({'max_seq_length': 1024, 'do_lower_case': False}) with Transformer model: XLMRobertaModel