---
license: agpl-3.0
tags:
- image
- keras
- myology
- biology
- histology
- muscle
- cells
- fibers
- myopathy
- SDH
- myoquant
- classification
- mitochondria
datasets:
- corentinm7/MyoQuant-SDH-Data
metrics:
- accuracy
library_name: keras
model-index:
- name: MyoQuant-SDH-Resnet50V2
  results:
  - task:
      type: image-classification
      name: Image Classification
    dataset:
      type: corentinm7/MyoQuant-SDH-Data
      name: MyoQuant SDH Data
      split: test
    metrics:
    - type: accuracy
      value: 0.927
      name: Test Accuracy
    - type: hyperml/balanced_accuracy
      value: 0.912
      name: Test Balanced Accuracy
---

**Update 10-06-2025: The model has been re-trained and made compatible with Keras 3.**

## Model description

This is the model card for the SDH Model used by the [MyoQuant](https://github.com/lambda-science/MyoQuant) tool.

## Intended uses & limitations

It is intended to allow people to use, improve and verify the reproducibility of our MyoQuant tool. The SDH model classifies SDH-stained muscle fibers according to whether they show an abnormal mitochondrial profile.

## Training and evaluation data

It was trained on the [corentinm7/MyoQuant-SDH-Data](https://huggingface.co/datasets/corentinm7/MyoQuant-SDH-Data) dataset, available on the Hugging Face Dataset Hub.

## Training procedure

This model was trained using the ResNet50V2 model architecture in Keras.

All images have been resized to 256x256.
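The `Resizing` layer in the model code uses `crop_to_aspect_ratio=True`, so a non-square image is center-cropped to a square before being scaled to 256x256 instead of being stretched. A minimal plain-Python sketch of that crop arithmetic follows, assuming the "largest centered window with the target aspect ratio" behavior described in the Keras `Resizing` documentation; `center_crop_box` is a hypothetical helper, not part of Keras or MyoQuant, and Keras's exact rounding may differ.

```python
def center_crop_box(height, width, target_height=256, target_width=256):
    """Return (top, left, crop_height, crop_width) of the largest centered
    window matching the target aspect ratio (hypothetical helper that mirrors
    the idea behind Keras' crop_to_aspect_ratio=True)."""
    # Height matching the target aspect ratio at full width, and vice versa;
    # whichever fits inside the image is kept.
    crop_height = min(height, int(width * target_height / target_width))
    crop_width = min(width, int(height * target_width / target_height))
    # Center the window: leftover pixels are split between both sides.
    top = (height - crop_height) // 2
    left = (width - crop_width) // 2
    return top, left, crop_height, crop_width


# A 300x400 (H x W) input: the central 300x300 window is kept, 50 px trimmed per side.
print(center_crop_box(300, 400))  # (0, 50, 300, 300)
```

The cropped window is then resized (bilinearly, in the model) to 256x256, so fiber shapes are not distorted by the resize.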

Data augmentation was included as layers before ResNet50V2.

Full model code:

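```python
import keras
from keras.applications import ResNet50V2

NUM_CLASSES = 2  # len(config.SUB_FOLDERS) in the original training code

# Data augmentation and preprocessing, applied inside the model.
# The Random* layers are only active during training; at inference they pass
# images through unchanged, while Resizing and Rescaling always apply.
data_augmentation = keras.Sequential([
    keras.Input(shape=(None, None, 3)),  # RGB images of any size, values 0-255
    keras.layers.RandomBrightness(factor=0.2),
    keras.layers.RandomContrast(factor=0.2),
    keras.layers.RandomFlip("horizontal_and_vertical"),
    keras.layers.RandomRotation(0.3, fill_mode="constant"),
    keras.layers.RandomZoom(0.2, 0.2, fill_mode="constant"),
    keras.layers.RandomTranslation(0.2, 0.2, fill_mode="constant"),
    keras.layers.Resizing(256, 256, interpolation="bilinear", crop_to_aspect_ratio=True),
    keras.layers.Rescaling(scale=1.0 / 127.5, offset=-1),  # map [0, 255] to [-1, 1]
])

# ResNet50V2 backbone pre-trained on ImageNet, global average pooling, softmax head
model = keras.Sequential()
model.add(data_augmentation)
model.add(
    ResNet50V2(
        include_top=False,
        weights="imagenet",
        input_shape=(256, 256, 3),
        pooling="avg",
    )
)
model.add(keras.layers.Flatten())  # no-op after average pooling; matches "flatten" in the summary
model.add(keras.layers.Dense(NUM_CLASSES, activation="softmax"))

model.summary()
```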

```
_________________________________________________________________
 Layer (type)                 Output Shape              Param #
=================================================================
 sequential (Sequential)      (None, 256, 256, 3)       0
 resnet50v2 (Functional)      (None, 2048)              23564800
 flatten (Flatten)            (None, 2048)              0
 dense (Dense)                (None, 2)                 4098
=================================================================
Total params: 23,568,898 (89.91 MB)
Trainable params: 23,523,458 (89.73 MB)
Non-trainable params: 45,440 (177.50 KB)
_________________________________________________________________
```

We used a ResNet50V2 pre-trained on ImageNet as a starting point and trained the model with early stopping using a patience of 20 epochs (i.e. if the validation loss does not improve for 20 epochs, training stops and the weights are rolled back to the epoch with the lowest validation loss).
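The early-stopping rule can be illustrated with a plain-Python sketch. In Keras this setting would typically be expressed as `keras.callbacks.EarlyStopping(monitor="val_loss", patience=20, restore_best_weights=True)`; we assume that matches the configuration described, but the training notebook is the authoritative source. The `early_stopping` helper and the toy loss curve below are hypothetical.

```python
def early_stopping(val_losses, patience=20):
    """Return (stop_epoch, best_epoch) for a list of per-epoch validation losses.

    Training stops once `patience` consecutive epochs pass without a new
    lowest validation loss; the weights from `best_epoch` are then restored.
    Epochs are 0-indexed. If the rule never triggers, stop_epoch is the last epoch.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Hypothetical curve: the loss improves for 5 epochs, then plateaus at 0.5.
losses = [0.9, 0.7, 0.6, 0.55, 0.4] + [0.5] * 30
print(early_stopping(losses, patience=20))  # (24, 4)
```

Here training halts at epoch 24 (20 epochs without improvement) and the weights from epoch 4, the lowest validation loss, are kept.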

Class imbalance was handled with the `class_weight` argument during training. The weight of each class `c` was computed as `(1 / n_c) * (N / 2)`, where `n_c` is the number of training images in class `c`, `N` is the total number of training images and 2 is the number of classes. In our case this gives: `{0: 0.6593016912165849, 1: 2.069349315068493}`
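The class-weight formula can be checked in plain Python. The per-class image counts used below (1,833 for class 0 and 584 for class 1) are an assumption: they are the smallest integer counts that reproduce the published weights, not figures taken from the dataset card. `compute_class_weights` is a hypothetical helper.

```python
def compute_class_weights(class_counts):
    """weight_c = (1 / n_c) * (N / n_classes), where N is the total image count."""
    total = sum(class_counts.values())
    n_classes = len(class_counts)  # 2 for the SDH model
    return {c: (1 / n) * (total / n_classes) for c, n in class_counts.items()}


# Assumed training counts (inferred from the published weights, not from the dataset card).
weights = compute_class_weights({0: 1833, 1: 584})
print(weights)  # approximately {0: 0.6593, 1: 2.0693}

# This dict is the format Keras accepts in model.fit(..., class_weight=weights).
```

This is the same "balanced" heuristic that scikit-learn's `compute_class_weight` implements: the minority class gets a weight above 1 so that both classes contribute comparably to the loss.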

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: Adam

- Learning Rate Schedule: `ReduceLROnPlateau(monitor='val_loss', factor=0.2, patience=5, min_lr=1e-7)` with START_LR = 1e-5 and MIN_LR = 1e-7

- Loss Function: SparseCategoricalCrossentropy

- Metric: Accuracy
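With these settings, `ReduceLROnPlateau` multiplies the learning rate by 0.2 each time the validation loss stops improving for 5 epochs, and never goes below `1e-7`. The plain-Python sketch below (a hypothetical `lr_schedule` helper, not Keras code) enumerates the learning rates the schedule can reach, showing that at most three reductions are possible before the floor is hit.

```python
def lr_schedule(start_lr=1e-5, factor=0.2, min_lr=1e-7):
    """List every learning rate ReduceLROnPlateau can reach, assuming it
    multiplies by `factor` and clamps at `min_lr` (as the Keras callback does)."""
    rates = [start_lr]
    while rates[-1] > min_lr:
        # One plateau-triggered reduction, clamped to the minimum.
        rates.append(max(rates[-1] * factor, min_lr))
    return rates


print(lr_schedule())  # approximately [1e-05, 2e-06, 4e-07, 1e-07]
```

The third reduction would give 8e-8, which is clamped to MIN_LR = 1e-7; after that, further plateaus no longer change the learning rate.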

For more details, see the associated training notebook.

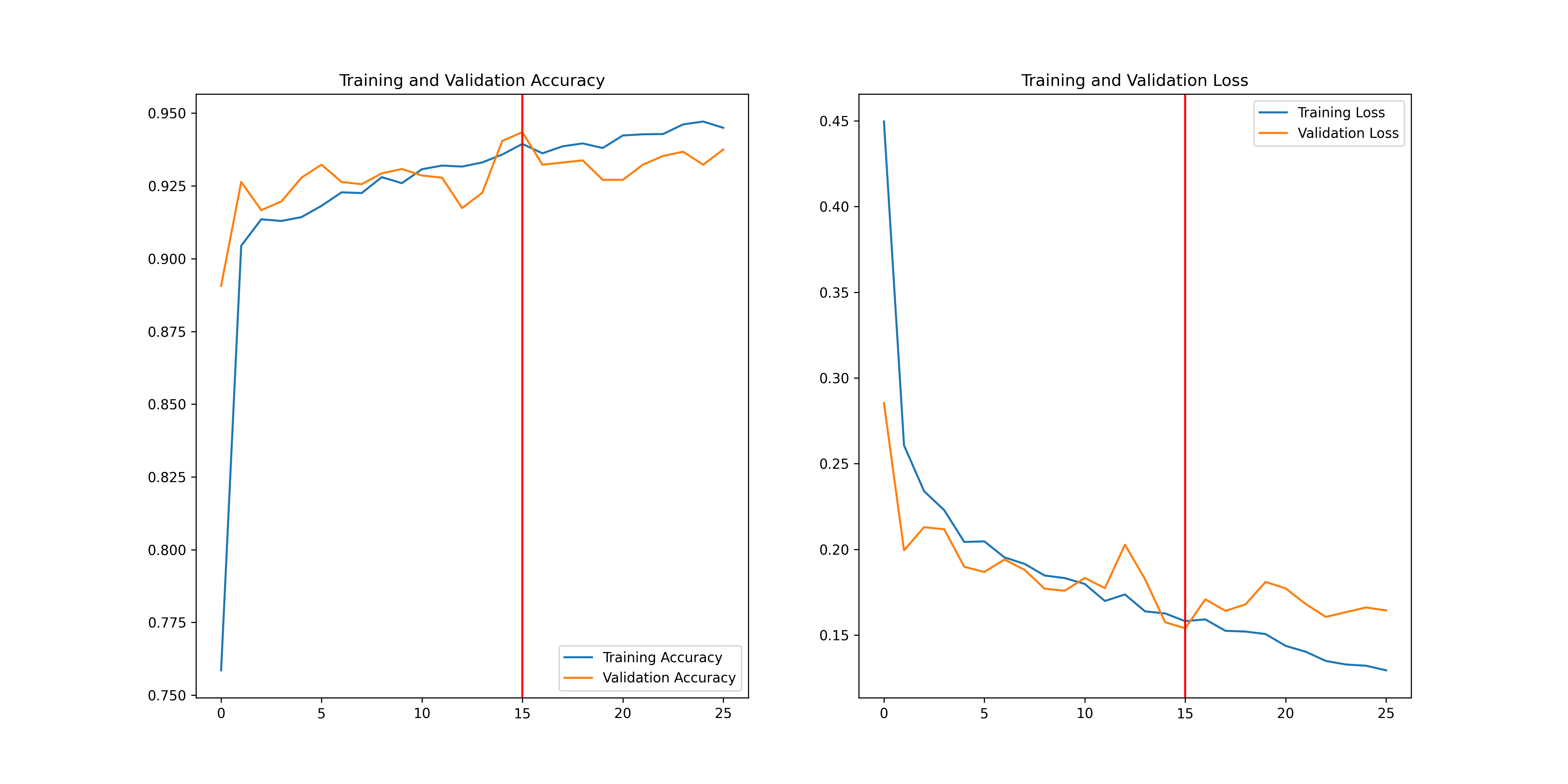

## Training Curve

Full training results are avaliable on `Weights and Biases`

here: [https://wandb.ai/lambda-science/myoquant-sdh](https://wandb.ai/lambda-science/myoquant-sdh)

Plot of the accuracy vs epoch and loss vs epoch for training and validation set.

## Test Results

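Results for the accuracy and balanced accuracy metrics on the test split of the [corentinm7/MyoQuant-SDH-Data](https://huggingface.co/datasets/corentinm7/MyoQuant-SDH-Data) dataset. In the log, the first reported value is the test accuracy and the second is the test balanced accuracy.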

```
105/105 - 22s - 207ms/step - accuracy: 0.9276 - loss: 0.1678
Test data results:
0.9276354908943176
105/105 ━━━━━━━━━━━━━━━━━━━━ 20s 168ms/step
Test data results:
0.9128566397981572
```
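Balanced accuracy is the mean of the per-class recalls, so on an imbalanced test set it gives the minority class the same weight as the majority class. A minimal plain-Python sketch with hypothetical toy labels (not the MyoQuant test set):

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall (correct predictions / actual members of the class)."""
    recalls = []
    for c in sorted(set(y_true)):
        predictions_for_class = [p for t, p in zip(y_true, y_pred) if t == c]
        recalls.append(sum(p == c for p in predictions_for_class) / len(predictions_for_class))
    return sum(recalls) / len(recalls)


# Hypothetical toy labels: 8 images of class 0, 2 of class 1.
y_true = [0] * 8 + [1] * 2
y_pred = [0] * 8 + [0, 1]  # one class-1 image misclassified as class 0

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy)                           # 0.9
print(balanced_accuracy(y_true, y_pred))  # 0.75
```

Plain accuracy stays high (0.9) because the majority class is classified perfectly, while balanced accuracy (0.75) exposes the 50% recall on the minority class. scikit-learn's `balanced_accuracy_score` computes the same quantity with its default settings.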

## How to Import the Model

With Keras 3 / TensorFlow 2.19 or later:

```python
import keras

model = keras.saving.load_model("hf://corentinm7/MyoQuant-SDH-Model")
```

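The resizing and rescaling layers are already built into the model. You simply need to provide your input images as NumPy float32 arrays with pixel values in the 0-255 range, in RGB (3 channels), of any height and width (shape `(None, None, 3)` per image), with a leading batch dimension when calling the model.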
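A minimal sketch of preparing one image for `model.predict`, assuming the image has already been loaded as an RGB NumPy array (for example with Pillow or scikit-image). `to_model_batch` is a hypothetical helper; only the dtype, value range and channel layout come from the description above.

```python
import numpy as np


def to_model_batch(image):
    """Convert one RGB image (H, W, 3), values 0-255, into a float32 batch (1, H, W, 3).

    No resizing or rescaling is done here: the model's own Resizing and
    Rescaling layers take care of that.
    """
    image = np.asarray(image)
    if image.ndim != 3 or image.shape[-1] != 3:
        raise ValueError(f"expected an RGB image of shape (H, W, 3), got {image.shape}")
    # Keep the 0-255 range; only change the dtype and add a batch axis.
    return image.astype(np.float32)[np.newaxis, ...]


# A random 300x400 RGB image standing in for a real SDH-stained image.
rgb = np.random.randint(0, 256, size=(300, 400, 3), dtype=np.uint8)
batch = to_model_batch(rgb)
print(batch.shape, batch.dtype)  # (1, 300, 400, 3) float32

# With the model loaded as shown above (requires Keras 3 and network access):
# probabilities = model.predict(batch)  # shape (1, 2): one softmax score per class
```

Images of different sizes cannot be stacked into one NumPy batch, so this sketch handles one image at a time.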

## The Team Behind this Model

**The creator, uploader and main maintainer of this model, associated dataset and MyoQuant is:**

- **[Corentin Meyer, PhD in Biomedical AI](https://cmeyer.fr) Email: Github: [@lambda-science](https://github.com/lambda-science)**

Special thanks to the experts who created the data for the dataset and spent so much time counting cells:

- **Quentin GIRAUD, PhD Student** @ [Department Translational Medicine, IGBMC, CNRS UMR 7104](https://www.igbmc.fr/en/recherche/teams/pathophysiology-of-neuromuscular-diseases), 1 rue Laurent Fries, 67404 Illkirch, France

- **Charlotte GINESTE, Post-Doc** @ [Department Translational Medicine, IGBMC, CNRS UMR 7104](https://www.igbmc.fr/en/recherche/teams/pathophysiology-of-neuromuscular-diseases), 1 rue Laurent Fries, 67404 Illkirch, France

Last but not least, thanks to Bertrand Vernay, who was at the origin of this project:

- **Bertrand VERNAY, Platform Leader** @ [Light Microscopy Facility, IGBMC, CNRS UMR 7104](https://www.igbmc.fr/en/plateformes-technologiques/photonic-microscopy), 1 rue Laurent Fries, 67404 Illkirch, France

## Partners

MyoQuant-SDH-Model was born from the collaboration between the [CSTB Team @ ICube](https://cstb.icube.unistra.fr/en/index.php/Home) led by Julie D. Thompson, the [Morphological Unit of the Institute of Myology of Paris](https://www.institut-myologie.org/en/recherche-2/neuromuscular-investigation-center/morphological-unit/) led by Teresinha Evangelista, the [imagery platform MyoImage of Center of Research in Myology](https://recherche-myologie.fr/technologies/myoimage/) led by Bruno Cadot, [the photonic microscopy platform of the IGBMC](https://www.igbmc.fr/en/plateformes-technologiques/photonic-microscopy) led by Bertrand Vernay and the [Pathophysiology of neuromuscular diseases team @ IGBMC](https://www.igbmc.fr/en/igbmc/a-propos-de-ligbmc/directory/jocelyn-laporte) led by Jocelyn Laporte.