Initial upload of custom Hindi LM v1

Browse files- README.md +190 -0

- config.json +23 -0

- hindi_embeddings.py +730 -0

- hindi_language_model.py +547 -0

- model.safetensors +3 -0

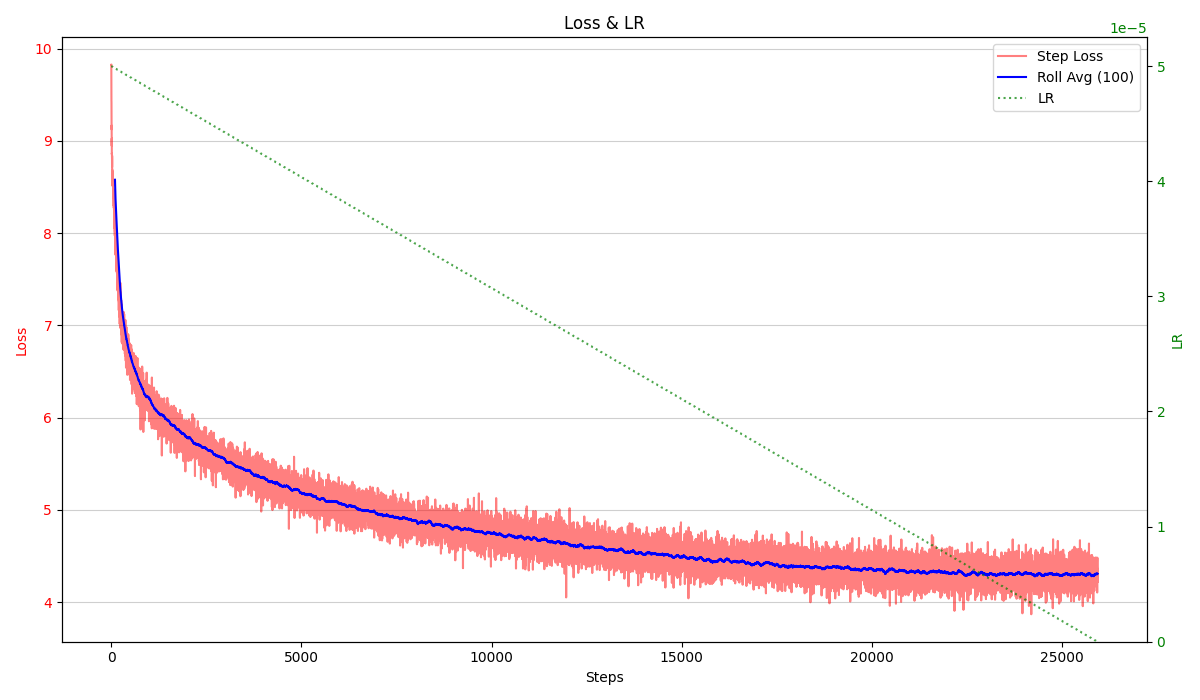

- step_loss_lr.png +0 -0

- tokenizer.model +3 -0

- tokenizer_config.json +9 -0

README.md

ADDED

@@ -0,0 +1,190 @@

---
license: mit # Or choose another appropriate license: https://huggingface.co/docs/hub/repositories-licenses
language: hi
tags:
- hindi
- text-generation
- causal-lm
- custom-model
# Add more specific tags if applicable, e.g., based on training data domain
pipeline_tag: text-generation
# Specify model size if known from config

---

# Hindi Causal Language Model (convaiinnovations/hindi-foundational-model-base)

This repository contains a custom-trained Hindi Causal Language Model.

## Model Description

* **Architecture:** Custom Transformer (12 layers, hidden=768, 16 heads, ffn=3072, act=swiglu, norm=rmsnorm) based on the `HindiCausalLM` class. It uses modified attention mechanisms and Hindi-specific optimizations: multi-resolution attention to capture both character-level and word-level patterns, morphology-aware feed-forward layers, and script-mix processing for Hindi-English code-mixing.
* **Language:** Hindi (hi)
* **Training Data:** The model was trained on a diverse corpus of 2.7 million high-quality Hindi text samples from multiple sources including IITB Parallel Corpus (1.2M sentences), Samanantar (750K samples), Oscar Hindi (450K sentences), CC-100 Hindi (300K sentences), Hindi Wikipedia (150K articles), Hindi news articles (100K pieces), XNLI Hindi (50K premise-hypothesis pairs), IndicGLUE (30K samples), and Hindi literature (5K passages).
* **Tokenizer:** SentencePiece trained on Hindi text (`tokenizer.model`). Vocab size: 16000.
* **Model Details:** The number of training epochs is unknown, and further training details (batch size, learning rate) are not documented.
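The hyperparameters listed in the model description fix a few derived sizes. The following is a minimal sketch, assuming only the values stated in this model card (the variable names are illustrative and not taken from the repository code), that works out the per-head width and the size of the token embedding table:

```python
# Hyperparameters as stated in the model card.
hidden_size = 768
num_attention_heads = 16
vocab_size = 16000

# Each attention head works on an equal slice of the hidden vector,
# so the hidden size must divide evenly by the number of heads.
assert hidden_size % num_attention_heads == 0
head_dim = hidden_size // num_attention_heads

# The token embedding table has one row of hidden_size values per vocabulary entry.
embedding_params = vocab_size * hidden_size

print(f"head_dim = {head_dim}")
print(f"token embedding parameters = {embedding_params:,}")
```

This covers only quantities that follow directly from the stated numbers; a full parameter count would depend on details of `HindiCausalLM` that the model card does not spell out.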

## How to Use

**⚠️ Important:** This model uses custom Python classes (`HindiCausalLM`, `HindiCausalLMConfig`, `SentencePieceTokenizerWrapper`) which are **not** part of the standard Hugging Face `transformers` library. To use this model, you **must** have the Python files defining these classes (e.g., `hindi_language_model.py`, `hindi_embeddings.py`) available in your Python environment.
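Before running the loading script, it can help to confirm that the two custom modules are importable. This is a minimal sketch, assuming the files keep the names given in the note on custom classes; the helper `missing_modules` is hypothetical and not part of this repository:

```python
import importlib.util
import sys


def missing_modules(names, extra_dir=None):
    """Return the module names from `names` that Python cannot locate."""
    # Optionally make a directory holding the .py files importable.
    if extra_dir is not None and extra_dir not in sys.path:
        sys.path.insert(0, extra_dir)
    return [name for name in names if importlib.util.find_spec(name) is None]


required = ["hindi_language_model", "hindi_embeddings"]
missing = missing_modules(required)
if missing:
    print("Not importable:", ", ".join(missing))
else:
    print("All custom modules found.")
```

Passing `extra_dir` (for example, the folder the repository files were downloaded to) adds that folder to `sys.path` before the check, which is one way to satisfy the "available in your Python environment" requirement without installing anything.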

```python
import os
import json
import torch
import numpy as np
from huggingface_hub import hf_hub_download

# --- ENSURE THESE CLASSES ARE DEFINED OR IMPORTED ---
# You MUST have hindi_language_model.py and hindi_embeddings.py available
try:
    from hindi_language_model import HindiCausalLM, HindiCausalLMConfig
    from hindi_embeddings import SentencePieceTokenizerWrapper
    print("Custom classes imported successfully.")
except ImportError as e:
    # Nothing below can work without the real classes, so stop here.
    raise SystemExit(
        "ERROR: Cannot import custom classes. Please place hindi_language_model.py "
        "and hindi_embeddings.py in your working directory or Python path."
    ) from e

# --- Configuration ---
repo_id = "convaiinnovations/hindi-foundational-model-base"
# model_dir = "./downloaded_model"  # Example download location
# os.makedirs(model_dir, exist_ok=True)
# Use current directory if preferred
model_dir = "."

# --- Download Files ---
print(f"Downloading files for {repo_id} to '{os.path.abspath(model_dir)}'...")
try:
    config_path = hf_hub_download(
        repo_id=repo_id, filename="config.json",
        local_dir=model_dir, local_dir_use_symlinks=False)
    tokenizer_path = hf_hub_download(
        repo_id=repo_id, filename="tokenizer.model",
        local_dir=model_dir, local_dir_use_symlinks=False)
    # Try safetensors first, then bin
    using_safetensors = True
    try:
        weights_path = hf_hub_download(
            repo_id=repo_id, filename="model.safetensors",
            local_dir=model_dir, local_dir_use_symlinks=False)
    except Exception:  # More specific: from huggingface_hub.utils import EntryNotFoundError
        try:
            weights_path = hf_hub_download(
                repo_id=repo_id, filename="pytorch_model.bin",
                local_dir=model_dir, local_dir_use_symlinks=False)
            using_safetensors = False
        except Exception as e_inner:
            raise FileNotFoundError(
                f"Could not download weights (.safetensors or .bin): {e_inner}") from e_inner
except Exception as e:
    raise RuntimeError(f"Failed to download files from Hub: {e}") from e
print("Files downloaded.")

# --- Load Components ---
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")

try:
    # 1. Load Tokenizer
    tokenizer = SentencePieceTokenizerWrapper(tokenizer_path)  # Constructor takes the model path

    # 2. Load Config
    with open(config_path, 'r', encoding='utf-8') as f:
        config_dict = json.load(f)
    if hasattr(HindiCausalLMConfig, 'from_dict'):
        config = HindiCausalLMConfig.from_dict(config_dict)
    else:
        config = HindiCausalLMConfig(**config_dict)  # Assumes __init__ takes kwargs

    # 3. Load Model
    model = HindiCausalLM(config)  # Instantiate model
    if using_safetensors:
        from safetensors.torch import load_file
        state_dict = load_file(weights_path, device="cpu")
    else:
        state_dict = torch.load(weights_path, map_location="cpu")
    model.load_state_dict(state_dict, strict=True)  # Use strict=True after training
    del state_dict
    model.to(device)
    model.eval()
    print("Model and tokenizer loaded successfully.")

except Exception as e:
    print(f"ERROR: Failed loading model components: {e}")
    # Add more specific error handling if needed
    raise SystemExit(1)

# --- Example Inference ---
prompt = "भारत की संस्कृति"  # Example prompt
max_new_tokens = 60  # Generate N new tokens
temperature = 0.7
top_k = 50
seed = 42

print(f"\nGenerating text for prompt: '{prompt}'...")

torch.manual_seed(seed)
np.random.seed(seed)
if device.type == 'cuda':
    torch.cuda.manual_seed_all(seed)

try:
    # Encoding (use the correct method from your wrapper)
    if callable(tokenizer):
        encoded = tokenizer(prompt, return_tensors=None)
        input_ids = encoded.get('input_ids')
    elif hasattr(tokenizer, 'sp_model') and hasattr(tokenizer.sp_model, 'EncodeAsIds'):
        input_ids = tokenizer.sp_model.EncodeAsIds(prompt)
    else:
        raise AttributeError("Tokenizer lacks encoding method.")
    assert input_ids, "Encoding failed"

    bos_id = getattr(tokenizer, 'bos_token_id', 1)
    eos_id = getattr(tokenizer, 'eos_token_id', 2)
    if bos_id is not None:
        input_ids = [bos_id] + input_ids

    input_tensor = torch.tensor([input_ids], dtype=torch.long, device=device)
    generated_ids = input_tensor

    with torch.no_grad():
        for _ in range(max_new_tokens):
            outputs = model(input_ids=generated_ids)
            # Access logits
            if isinstance(outputs, dict) and 'logits' in outputs:
                logits = outputs['logits']
            elif hasattr(outputs, 'logits'):
                logits = outputs.logits
            else:
                raise TypeError("Model output format error.")

            next_token_logits = logits[:, -1, :]
            # Sampling
            if temperature > 0:
                scaled_logits = next_token_logits / temperature
            else:
                scaled_logits = next_token_logits
            if top_k > 0:
                # Never ask for more candidates than the vocabulary holds
                k = min(top_k, scaled_logits.size(-1))
                kth_vals, _ = torch.topk(scaled_logits, k=k)
                scaled_logits[scaled_logits < kth_vals[:, -1].unsqueeze(-1)] = -float("Inf")
            probs = torch.softmax(scaled_logits, dim=-1)
            next_token_id = torch.multinomial(probs, num_samples=1)
            generated_ids = torch.cat([generated_ids, next_token_id], dim=1)
            # Check EOS
            if eos_id is not None and next_token_id.item() == eos_id:
                break

    # Decoding
    output_ids = generated_ids[0].cpu().tolist()
    # Remove special tokens
    if bos_id and output_ids and output_ids[0] == bos_id:
        output_ids = output_ids[1:]
    if eos_id and output_ids and output_ids[-1] == eos_id:
        output_ids = output_ids[:-1]

    # Use appropriate decode method
    if hasattr(tokenizer, 'sp_model') and hasattr(tokenizer.sp_model, 'DecodeIds'):
        generated_text = tokenizer.sp_model.DecodeIds(output_ids)
    elif hasattr(tokenizer, 'decode'):
        generated_text = tokenizer.decode(output_ids)
    else:
        raise AttributeError("Tokenizer lacks decoding method.")

    print("\n--- Generated Text ---")
    # output_ids starts with the prompt tokens, so the decoded text already contains the prompt
    print(generated_text)
    print("----------------------")

except Exception as e:
    print(f"\nERROR during example inference: {e}")
```
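The sampling step inside the generation loop combines temperature scaling with top-k filtering. The sketch below restates that step on a plain Python list so it can be run without PyTorch; `sample_top_k` is a hypothetical helper written for illustration, not a function from this repository.

```python
import math
import random


def sample_top_k(logits, temperature=0.7, top_k=50, rng=random):
    """Sample one index from `logits` using temperature and top-k filtering."""
    # A temperature below 1 sharpens the distribution; skip scaling if it is not positive.
    scaled = [x / temperature for x in logits] if temperature > 0 else list(logits)

    # Keep only the k highest-scoring indices (all of them if top_k <= 0).
    k = len(scaled) if top_k <= 0 else min(top_k, len(scaled))
    keep = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:k]

    # Softmax over the kept logits, shifted by the max for numerical stability.
    peak = max(scaled[i] for i in keep)
    weights = [math.exp(scaled[i] - peak) for i in keep]

    # Draw one index in proportion to its softmax weight.
    return rng.choices(keep, weights=weights, k=1)[0]


random.seed(42)
toy_logits = [2.0, 0.5, -1.0, 3.0]
print(sample_top_k(toy_logits, top_k=1))  # top_k=1 always picks the largest logit
```

One difference from the tensor version in the script: that code keeps every logit tied with the k-th largest value, while this sketch keeps exactly `k` candidates.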


## Limitations and Biases

This model was trained on a diverse corpus of Hindi text from sources including IITB Parallel Corpus, Samanantar, Oscar Hindi, CC-100 Hindi, Hindi Wikipedia, news articles, XNLI Hindi, IndicGLUE, and Hindi literature. As such, it may reflect biases present in that data, including potential cultural, gender, or regional biases found in these source materials.

The model's performance is limited by its architecture (12 layers, hidden=768, 16 heads, ffn=3072, act=swiglu, norm=rmsnorm) and the size of the training dataset.

It may generate repetitive, nonsensical, or factually incorrect text.

The model uses a weighted pooling strategy with sensitivity to Hindi's SOV structure, but may still struggle with complex semantic relationships in longer texts.

As noted in the DeepRAG research paper, the model may have particular difficulties with cultural concepts that lack direct English translations, idiomatic expressions specific to Hindi, and formal/informal speech distinctions.

Please use this model responsibly.

Model trained using custom scripts.

config.json

ADDED

@@ -0,0 +1,23 @@

{
  "vocab_size": 16000,
  "hidden_size": 768,
  "num_hidden_layers": 12,
  "num_attention_heads": 16,
  "intermediate_size": 3072,
  "hidden_dropout_prob": 0.1,
  "attention_probs_dropout_prob": 0.1,
  "max_position_embeddings": 512,
  "layer_norm_eps": 1e-12,
  "pad_token_id": 0,
  "bos_token_id": 1,
  "eos_token_id": 2,
  "tie_word_embeddings": true,
  "activation_function": "swiglu",
  "normalization_layer": "rmsnorm",
  "positional_encoding_type": "rope",
  "unk_token_id": 3,
  "architectures": [
    "HindiCausalLM"
  ],
  "model_type": "hindi_causal_lm"
}

hindi_embeddings.py

ADDED

@@ -0,0 +1,730 @@

import os
import torch
import json
import numpy as np
from torch import nn
from torch.nn import functional as F
import sentencepiece as spm
from sklearn.metrics.pairwise import cosine_similarity
from tqdm import tqdm
import matplotlib.pyplot as plt
from sklearn.manifold import TSNE

# Tokenizer wrapper class
class SentencePieceTokenizerWrapper:
    def __init__(self, sp_model_path):
        self.sp_model = spm.SentencePieceProcessor()
        self.sp_model.Load(sp_model_path)
        self.vocab_size = self.sp_model.GetPieceSize()

        # Special token IDs from tokenizer training
        self.pad_token_id = 0
        self.bos_token_id = 1
        self.eos_token_id = 2
        self.unk_token_id = 3

        # Set special tokens
        self.pad_token = "<pad>"
        self.bos_token = "<s>"
        self.eos_token = "</s>"
        self.unk_token = "<unk>"
        self.mask_token = "<mask>"

    def __call__(self, text, padding=False, truncation=False, max_length=None, return_tensors=None):
        # Handle both string and list inputs
        if isinstance(text, str):
            # Encode a single string
            ids = self.sp_model.EncodeAsIds(text)

            # Handle truncation
            if truncation and max_length and len(ids) > max_length:
                ids = ids[:max_length]

            attention_mask = [1] * len(ids)

            # Handle padding
            if padding and max_length:
                padding_length = max(0, max_length - len(ids))
                ids = ids + [self.pad_token_id] * padding_length
                attention_mask = attention_mask + [0] * padding_length

            result = {
                'input_ids': ids,
                'attention_mask': attention_mask
            }

            # Convert to tensors if requested (adds a batch dimension of 1)
            if return_tensors == 'pt':
                result = {k: torch.tensor([v]) for k, v in result.items()}

            return result

        # Process a batch of texts
        batch_encoded = [self.sp_model.EncodeAsIds(t) for t in text]

        # Apply truncation if needed
        if truncation and max_length:
            batch_encoded = [ids[:max_length] for ids in batch_encoded]

        # Create attention masks
        batch_attention_mask = [[1] * len(ids) for ids in batch_encoded]

        # Apply padding if needed
        if padding:
            if max_length:
                max_len = max_length
            else:
                max_len = max(len(ids) for ids in batch_encoded)

            # Pad sequences to max_len
            batch_encoded = [ids + [self.pad_token_id] * (max_len - len(ids)) for ids in batch_encoded]
            batch_attention_mask = [mask + [0] * (max_len - len(mask)) for mask in batch_attention_mask]

        result = {
            'input_ids': batch_encoded,
            'attention_mask': batch_attention_mask
        }

        # Convert to tensors if requested
        if return_tensors == 'pt':
            result = {k: torch.tensor(v) for k, v in result.items()}

        return result

# Model architecture components
class MultiHeadAttention(nn.Module):
    """Multi-headed attention mechanism"""
    def __init__(self, config):
        super().__init__()
        self.num_attention_heads = config["num_attention_heads"]
        self.attention_head_size = config["hidden_size"] // config["num_attention_heads"]
        self.all_head_size = self.num_attention_heads * self.attention_head_size
        # Dropout rate for attention probabilities, read from config instead of hard-coding 0.1
        self.attention_dropout_prob = config["attention_probs_dropout_prob"]

        # Query, Key, Value projections
        self.query = nn.Linear(config["hidden_size"], self.all_head_size)
        self.key = nn.Linear(config["hidden_size"], self.all_head_size)
        self.value = nn.Linear(config["hidden_size"], self.all_head_size)

        # Output projection
        self.output = nn.Sequential(
            nn.Linear(self.all_head_size, config["hidden_size"]),
            nn.Dropout(config["attention_probs_dropout_prob"])
        )

        # Simplified relative position bias
        self.max_position_embeddings = config["max_position_embeddings"]
        self.relative_attention_bias = nn.Embedding(
            2 * config["max_position_embeddings"] - 1,
            config["num_attention_heads"]
        )

    def transpose_for_scores(self, x):
        # [batch, seq_len, all_head_size] -> [batch, num_heads, seq_len, head_size]
        new_shape = x.size()[:-1] + (self.num_attention_heads, self.attention_head_size)
        x = x.view(*new_shape)
        return x.permute(0, 2, 1, 3)

    def forward(self, hidden_states, attention_mask=None):
        batch_size, seq_length = hidden_states.size()[:2]

        # Project inputs to queries, keys, and values
        query_layer = self.transpose_for_scores(self.query(hidden_states))
        key_layer = self.transpose_for_scores(self.key(hidden_states))
        value_layer = self.transpose_for_scores(self.value(hidden_states))

        # Take the dot product between query and key to get the raw attention scores
        attention_scores = torch.matmul(query_layer, key_layer.transpose(-1, -2))

        # Generate relative position matrix
        position_ids = torch.arange(seq_length, dtype=torch.long, device=hidden_states.device)
        relative_position = position_ids.unsqueeze(1) - position_ids.unsqueeze(0)  # [seq_len, seq_len]
        # Shift values to be >= 0
        relative_position = relative_position + self.max_position_embeddings - 1
        # Ensure indices are within bounds
        relative_position = torch.clamp(relative_position, 0, 2 * self.max_position_embeddings - 2)

        # Get relative position embeddings [seq_len, seq_len, num_heads]
        rel_attn_bias = self.relative_attention_bias(relative_position)

        # Reshape to add to attention heads [1, num_heads, seq_len, seq_len]
        rel_attn_bias = rel_attn_bias.permute(2, 0, 1).unsqueeze(0)

        # Add to attention scores; the leading 1 broadcasts over the batch
        attention_scores = attention_scores + rel_attn_bias

        # Scale attention scores
        attention_scores = attention_scores / (self.attention_head_size ** 0.5)

        # Apply attention mask
        if attention_mask is not None:
            attention_scores = attention_scores + attention_mask

        # Normalize the attention scores to probabilities
        attention_probs = F.softmax(attention_scores, dim=-1)

        # Apply dropout
        attention_probs = F.dropout(attention_probs, p=self.attention_dropout_prob, training=self.training)

        # Apply attention to values
        context_layer = torch.matmul(attention_probs, value_layer)

        # Reshape back to [batch_size, seq_length, hidden_size]
        context_layer = context_layer.permute(0, 2, 1, 3).contiguous()
        new_shape = context_layer.size()[:-2] + (self.all_head_size,)
        context_layer = context_layer.view(*new_shape)

        # Final output projection
        output = self.output(context_layer)

        return output

class EnhancedTransformerLayer(nn.Module):
    """Advanced transformer layer with pre-layer norm and enhanced attention"""
    def __init__(self, config):
        super().__init__()
        self.attention_pre_norm = nn.LayerNorm(config["hidden_size"], eps=config["layer_norm_eps"])
        self.attention = MultiHeadAttention(config)

        self.ffn_pre_norm = nn.LayerNorm(config["hidden_size"], eps=config["layer_norm_eps"])

        # Feed-forward network
        self.ffn = nn.Sequential(
            nn.Linear(config["hidden_size"], config["intermediate_size"]),
            nn.GELU(),
            nn.Dropout(config["hidden_dropout_prob"]),
            nn.Linear(config["intermediate_size"], config["hidden_size"]),
            nn.Dropout(config["hidden_dropout_prob"])
        )

    def forward(self, hidden_states, attention_mask=None):
        # Pre-layer norm for attention
        attn_norm_hidden = self.attention_pre_norm(hidden_states)

        # Self-attention
        attention_output = self.attention(attn_norm_hidden, attention_mask)

        # Residual connection
        hidden_states = hidden_states + attention_output

        # Pre-layer norm for feed-forward
        ffn_norm_hidden = self.ffn_pre_norm(hidden_states)

        # Feed-forward
        ffn_output = self.ffn(ffn_norm_hidden)

        # Residual connection
        hidden_states = hidden_states + ffn_output

        return hidden_states

class AdvancedTransformerModel(nn.Module):
    """Advanced Transformer model for inference"""

    def __init__(self, config):
        super().__init__()
        self.config = config

        # Embeddings
        self.word_embeddings = nn.Embedding(
            config["vocab_size"],
            config["hidden_size"],
            padding_idx=config["pad_token_id"]
        )

        # Position embeddings
        self.position_embeddings = nn.Embedding(config["max_position_embeddings"], config["hidden_size"])

        # Embedding dropout
        self.embedding_dropout = nn.Dropout(config["hidden_dropout_prob"])

        # Transformer layers
        self.layers = nn.ModuleList([
            EnhancedTransformerLayer(config) for _ in range(config["num_hidden_layers"])
        ])

        # Final layer norm
        self.final_layer_norm = nn.LayerNorm(config["hidden_size"], eps=config["layer_norm_eps"])

    def forward(self, input_ids, attention_mask=None):
        input_shape = input_ids.size()
        batch_size, seq_length = input_shape

        # Get position ids
        position_ids = torch.arange(seq_length, dtype=torch.long, device=input_ids.device)
        position_ids = position_ids.unsqueeze(0).expand(batch_size, -1)

        # Get embeddings
        word_embeds = self.word_embeddings(input_ids)
        position_embeds = self.position_embeddings(position_ids)

        # Sum embeddings
        embeddings = word_embeds + position_embeds

        # Apply dropout
        embeddings = self.embedding_dropout(embeddings)

        # Default attention mask
        if attention_mask is None:
            attention_mask = torch.ones(input_shape, device=input_ids.device)

        # Extended attention mask for transformer layers (1 for tokens to attend to, 0 for masked tokens)

|

| 272 |

+

extended_attention_mask = attention_mask.unsqueeze(1).unsqueeze(2)

|

| 273 |

+

extended_attention_mask = (1.0 - extended_attention_mask) * -10000.0

|

| 274 |

+

|

| 275 |

+

# Apply transformer layers

|

| 276 |

+

hidden_states = embeddings

|

| 277 |

+

for layer in self.layers:

|

| 278 |

+

hidden_states = layer(hidden_states, extended_attention_mask)

|

| 279 |

+

|

| 280 |

+

# Final layer norm

|

| 281 |

+

hidden_states = self.final_layer_norm(hidden_states)

|

| 282 |

+

|

| 283 |

+

return hidden_states
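The mask arithmetic in `forward` is easier to follow on a small example. The sketch below reproduces the same two steps in NumPy (used here only for portability, where the model itself uses PyTorch) and shows the effect on a softmax over uniform scores. The array names and shapes are illustrative assumptions, and it presumes the attention module adds the mask to its scores before the softmax, which is not shown in this part of the diff.

```python
import numpy as np

# 1 = real token, 0 = padding; shape (batch, seq_len)
attention_mask = np.array([[1, 1, 1, 0],
                           [1, 1, 0, 0]], dtype=np.float32)

# Same steps as unsqueeze(1).unsqueeze(2): shape (batch, 1, 1, seq_len),
# so the mask broadcasts over heads and query positions.
extended = attention_mask[:, None, None, :]
additive = (1.0 - extended) * -10000.0  # 0.0 where kept, -10000.0 where masked

# Uniform attention scores of shape (batch, heads, query, key) for illustration.
scores = np.zeros((2, 1, 4, 4), dtype=np.float32)
masked_scores = scores + additive

# Numerically stable softmax over the key axis.
shifted = masked_scores - masked_scores.max(axis=-1, keepdims=True)
probs = np.exp(shifted)
probs /= probs.sum(axis=-1, keepdims=True)
```

After the softmax, padded key positions receive a weight of effectively zero and the remaining weight is shared among the real tokens.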


class AdvancedPooling(nn.Module):
    """Advanced pooling module supporting multiple pooling strategies"""
    def __init__(self, config):
        super().__init__()
        self.pooling_mode = config["pooling_mode"]  # 'mean', 'max', 'cls', 'attention', 'weighted'
        self.hidden_size = config["hidden_size"]

        # For attention pooling
        if self.pooling_mode == 'attention':
            self.attention_weights = nn.Linear(config["hidden_size"], 1)

        # For weighted pooling
        elif self.pooling_mode == 'weighted':
            self.weight_layer = nn.Linear(config["hidden_size"], 1)

    def forward(self, token_embeddings, attention_mask=None):
        if attention_mask is None:
            attention_mask = torch.ones_like(token_embeddings[:, :, 0])

        mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

        if self.pooling_mode == 'cls':
            # Use [CLS] token (first token)
            pooled = token_embeddings[:, 0]

        elif self.pooling_mode == 'max':
            # Max pooling
            token_embeddings = token_embeddings.clone()
            # Set padding tokens to large negative value to exclude them from max
            token_embeddings[mask_expanded == 0] = -1e9
            pooled = torch.max(token_embeddings, dim=1)[0]

        elif self.pooling_mode == 'attention':
            # Attention pooling
            weights = self.attention_weights(token_embeddings).squeeze(-1)
            # Mask out padding tokens
            weights = weights.masked_fill(attention_mask == 0, -1e9)
            weights = F.softmax(weights, dim=1).unsqueeze(-1)
            pooled = torch.sum(token_embeddings * weights, dim=1)

        elif self.pooling_mode == 'weighted':
            # Weighted average pooling
            weights = torch.sigmoid(self.weight_layer(token_embeddings)).squeeze(-1)
            # Apply mask
            weights = weights * attention_mask
            # Normalize weights
            sum_weights = torch.sum(weights, dim=1, keepdim=True)
            sum_weights = torch.clamp(sum_weights, min=1e-9)
            weights = weights / sum_weights
            # Apply weights
            pooled = torch.sum(token_embeddings * weights.unsqueeze(-1), dim=1)

        else:  # Default to mean pooling
            # Mean pooling
            sum_embeddings = torch.sum(token_embeddings * mask_expanded, dim=1)
            sum_mask = torch.clamp(mask_expanded.sum(1), min=1e-9)
            pooled = sum_embeddings / sum_mask

        # L2 normalize
        pooled = F.normalize(pooled, p=2, dim=1)

        return pooled
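As a worked example of the default branch, the sketch below applies masked mean pooling followed by L2 normalization to one tiny sequence. It mirrors the arithmetic of `AdvancedPooling` in NumPy rather than PyTorch, and the input values are made up purely so the result can be checked by hand.

```python
import numpy as np

# One sequence of three tokens with hidden size 2; the last token is padding.
token_embeddings = np.array([[[1.0, 2.0],
                              [3.0, 4.0],
                              [100.0, 100.0]]])  # (batch, seq_len, hidden)
attention_mask = np.array([[1, 1, 0]])           # (batch, seq_len)

# Broadcast the mask over the hidden dimension.
mask_expanded = attention_mask[..., None].astype(np.float64)

# Sum only the real tokens, then divide by how many there are.
sum_embeddings = (token_embeddings * mask_expanded).sum(axis=1)
sum_mask = np.clip(mask_expanded.sum(axis=1), 1e-9, None)
mean_pooled = sum_embeddings / sum_mask          # [[2.0, 3.0]]

# L2-normalize so that dot products between outputs are cosine similarities.
pooled = mean_pooled / np.linalg.norm(mean_pooled, axis=1, keepdims=True)
```

The padding token's large values never reach the mean, which is the point of multiplying by the mask before summing.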


class SentenceEmbeddingModel(nn.Module):
    """Complete sentence embedding model for inference"""
    def __init__(self, config):
        super(SentenceEmbeddingModel, self).__init__()
        self.config = config

        # Create transformer model
        self.transformer = AdvancedTransformerModel(config)

        # Create pooling module
        self.pooling = AdvancedPooling(config)

        # Build projection module if needed
        if "projection_dim" in config and config["projection_dim"] > 0:
            self.use_projection = True
            self.projection = nn.Sequential(
                nn.Linear(config["hidden_size"], config["hidden_size"]),
                nn.GELU(),
                nn.Linear(config["hidden_size"], config["projection_dim"]),
                nn.LayerNorm(config["projection_dim"], eps=config["layer_norm_eps"])
            )
        else:
            self.use_projection = False

    def forward(self, input_ids, attention_mask=None):
        # Get token embeddings from transformer
        token_embeddings = self.transformer(input_ids, attention_mask)

        # Pool token embeddings
        pooled_output = self.pooling(token_embeddings, attention_mask)

        # Apply projection if enabled
        if self.use_projection:
            pooled_output = self.projection(pooled_output)
            pooled_output = F.normalize(pooled_output, p=2, dim=1)

        return pooled_output


class HindiEmbedder:
    def __init__(self, model_path="/home/ubuntu/output/hindi-embeddings-custom-tokenizer/final"):
        """
        Initialize the Hindi sentence embedder.

        Args:
            model_path: Path to the model directory
        """
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        print(f"Using device: {self.device}")

        # Load tokenizer - look for it in the model directory
        tokenizer_path = os.path.join(model_path, "tokenizer.model")

        if not os.path.exists(tokenizer_path):
            raise FileNotFoundError(f"Could not find tokenizer at {tokenizer_path}")

        self.tokenizer = SentencePieceTokenizerWrapper(tokenizer_path)
        print(f"Loaded tokenizer from {tokenizer_path} with vocabulary size: {self.tokenizer.vocab_size}")

        # Load model config
        config_path = os.path.join(model_path, "config.json")
        with open(config_path, "r") as f:
            self.config = json.load(f)
        print(f"Loaded model config with hidden_size={self.config['hidden_size']}")

        # Load model
        model_pt_path = os.path.join(model_path, "embedding_model.pt")

        try:
            # weights_only=False is required on PyTorch 2.6+, where the default became True;
            # versions that predate the argument raise TypeError, so fall back without it
            try:
                checkpoint = torch.load(model_pt_path, map_location=self.device, weights_only=False)
                print("Loaded model using PyTorch 2.6+ style loading")
            except TypeError:
                checkpoint = torch.load(model_pt_path, map_location=self.device)
                print("Loaded model using older PyTorch style loading")

            # Create model
            self.model = SentenceEmbeddingModel(self.config)

            # Load state dict (either wrapped in a training checkpoint or bare)
            if "model_state_dict" in checkpoint:
                state_dict = checkpoint["model_state_dict"]
            else:
                state_dict = checkpoint

            missing_keys, unexpected_keys = self.model.load_state_dict(state_dict, strict=False)
            print(f"Loaded model with {len(missing_keys)} missing keys and {len(unexpected_keys)} unexpected keys")

            # Move to device
            self.model.to(self.device)
            self.model.eval()
            print("Model loaded successfully and placed in evaluation mode")

        except Exception as e:
            print(f"Error loading model: {e}")
            # Chain the original exception so the full traceback is preserved
            raise RuntimeError(f"Failed to load the model: {e}") from e
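Since the loader calls `load_state_dict(..., strict=False)`, a checkpoint whose parameter names do not match the model still loads without raising, and the only signal is the pair of key counts it prints. The sketch below illustrates the two pieces of logic involved, using plain dictionaries in place of real tensors. The helper names `extract_state_dict` and `diff_keys` are hypothetical and are not part of the repository.

```python
def extract_state_dict(checkpoint):
    """Return the weights mapping from a wrapped or bare checkpoint."""
    if isinstance(checkpoint, dict) and "model_state_dict" in checkpoint:
        return checkpoint["model_state_dict"]
    return checkpoint


def diff_keys(expected_keys, state_dict):
    """Mimic what a non-strict load reports: (missing, unexpected) key lists."""
    expected = set(expected_keys)
    loaded = set(state_dict)
    missing = sorted(expected - loaded)      # model parameters left at their initial values
    unexpected = sorted(loaded - expected)   # checkpoint entries that were ignored
    return missing, unexpected


# Plain integers stand in for tensors in this illustration.
wrapped = {"epoch": 3, "model_state_dict": {"a.weight": 1, "b.bias": 2}}
bare = {"a.weight": 1, "b.bias": 2}

same_weights = extract_state_dict(wrapped) == extract_state_dict(bare)
missing, unexpected = diff_keys(["a.weight", "c.weight"], extract_state_dict(wrapped))
```

A non-zero number of missing keys means part of the model is running with randomly initialized weights, so it may be worth treating that as an error rather than only printing it.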


    def encode(self, sentences, batch_size=32, normalize=True):
        """
        Encode sentences to embeddings.

        Args:
            sentences: A string or list of strings to encode
            batch_size: Batch size for encoding
            normalize: Whether to L2-normalize the embeddings

        Returns:
            Numpy array of embeddings with one row per sentence
        """
        # Handle single sentence
        if isinstance(sentences, str):
            sentences = [sentences]

        all_embeddings = []

        # Process in batches
        with torch.no_grad():
            for i in range(0, len(sentences), batch_size):
                batch = sentences[i:i+batch_size]

                # Tokenize
                inputs = self.tokenizer(
                    batch,
                    padding=True,
                    truncation=True,
                    max_length=self.config.get("max_position_embeddings", 128),
                    return_tensors="pt"
                )

                # Move to device
                input_ids = inputs["input_ids"].to(self.device)
                attention_mask = inputs["attention_mask"].to(self.device)

                # Get embeddings
                embeddings = self.model(input_ids, attention_mask)

                # Move to CPU and convert to numpy
                all_embeddings.append(embeddings.cpu().numpy())

        # Concatenate all embeddings
        all_embeddings = np.vstack(all_embeddings)

        # Normalize if requested; clip the norms to avoid dividing by zero
        if normalize:
            norms = np.linalg.norm(all_embeddings, axis=1, keepdims=True)
            all_embeddings = all_embeddings / np.clip(norms, 1e-12, None)

        return all_embeddings
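Two details of `encode` are worth seeing in isolation: how the `range(0, len(sentences), batch_size)` loop slices the input, and how row-wise L2 normalization behaves when a row has zero norm. The sketch below is a standalone illustration in NumPy. The helpers `iter_batches` and `l2_normalize` and the `eps` value are assumptions made for this example, not names from the repository.

```python
import numpy as np


def iter_batches(items, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def l2_normalize(vectors, eps=1e-12):
    """Scale each row to unit length, leaving all-zero rows as zeros."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / np.maximum(norms, eps)


batches = list(iter_batches(["a", "b", "c", "d", "e"], batch_size=2))

vectors = np.array([[3.0, 4.0],
                    [0.0, 0.0]])
unit = l2_normalize(vectors)
```

The last batch is simply shorter than the others, and guarding the denominator keeps an all-zero row from turning into NaN values.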


    def compute_similarity(self, texts1, texts2=None):
        """
        Compute cosine similarity between texts.

        Args:
            texts1: First set of texts
            texts2: Second set of texts. If None, compute similarity matrix within texts1.

        Returns:
            A square similarity matrix if texts2 is None; a scalar for a single
            pair; a 1-D array of pairwise scores when both inputs have the same
            length; otherwise the full len(texts1) x len(texts2) matrix.
        """
        # Convert single strings to lists for consistent handling
        if isinstance(texts1, str):
            texts1 = [texts1]

        if texts2 is not None and isinstance(texts2, str):
            texts2 = [texts2]

        embeddings1 = self.encode(texts1)

        if texts2 is None:
            # Compute similarity matrix within texts1
            similarities = cosine_similarity(embeddings1)
            return similarities
        else:
            # Compute similarity between texts1 and texts2
            embeddings2 = self.encode(texts2)

            if len(texts1) == len(texts2):
                # Compute pairwise similarity when the number of texts match
                similarities = np.array([
                    cosine_similarity([e1], [e2])[0][0]
                    for e1, e2 in zip(embeddings1, embeddings2)
                ])

                # If there's just one pair, return a scalar
                if len(similarities) == 1:
                    return similarities[0]
                return similarities
            else:
                # Return full similarity matrix
                return cosine_similarity(embeddings1, embeddings2)
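The pairwise and full-matrix results returned by `compute_similarity` are closely related: the pairwise scores are the diagonal of the full matrix. The sketch below shows this with plain NumPy in place of the `cosine_similarity` call used above. The helper names and the toy vectors are assumptions chosen so the numbers can be verified by hand.

```python
import numpy as np


def cosine_matrix(a, b):
    """All-pairs cosine similarity: result[i, j] compares a[i] with b[j]."""
    a_unit = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_unit = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a_unit @ b_unit.T


def cosine_pairwise(a, b):
    """Row-aligned cosine similarity: result[i] compares a[i] with b[i]."""
    a_unit = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_unit = b / np.linalg.norm(b, axis=1, keepdims=True)
    return np.sum(a_unit * b_unit, axis=1)


a = np.array([[1.0, 0.0], [0.0, 2.0]])
b = np.array([[2.0, 0.0], [3.0, 0.0]])

full = cosine_matrix(a, b)      # shape (2, 2)
pairs = cosine_pairwise(a, b)   # shape (2,)
```

A vectorized row-wise product like this would give the same values as the list comprehension in the method while avoiding one function call per pair.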


    def search(self, query, documents, top_k=5):
        """
        Search for similar documents to a query.

        Args:
            query: The query text
            documents: List of documents to search
            top_k: Number of top results to return

        Returns:
            List of dictionaries with document and score
        """
        # Get embeddings