Bias Taxonomy: A Field Guide to the Hidden Biases in AI Systems Every Developer Should Know

Foundations of AI bias: Made with Napkin

In 2022, I was sitting in a packed hall at CVPR in New Orleans, listening to a session on algorithmic fairness by Google researchers. It was one of those moments that flip your lens on the world. I had spent years designing ML systems, and that session made me see what I had been treating as neutral: data embodies our biases, prejudices, and societal blind spots.

It was my wake-up call.

My previous article introduced “YOU Bias,” exploring personalized biases in generative models. Now, I’ve zoomed out. This comprehensive guide synthesizes case studies and industry failures to offer a precise, actionable roadmap for anyone building, auditing, or interacting with AI systems.

Whether you’re a machine learning engineer, data scientist, policy-maker, startup founder, or enthusiast, think of this not just as a list of pitfalls but as a survival manual for developing robust, ethical, bias-aware AI systems.

The Blueprint of Bias in AI Systems

We often treat model performance as a single number. But bias creeps in long before training and long after it. Here’s how I’ve come to organize it:

1. Data Bias

- Historical Bias

- Representation Bias

- Measurement/Label Bias

2. Algorithmic Bias

- Aggregation Bias

- Optimization Bias

- Feedback Loop Bias

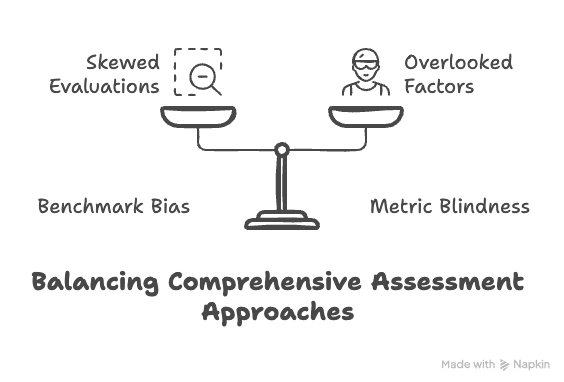

3. Evaluation Bias

- Benchmark Bias

- Metric Blindness

4. Generative & Interaction Bias

- Stereotypical Outputs

- Moderation Gaps

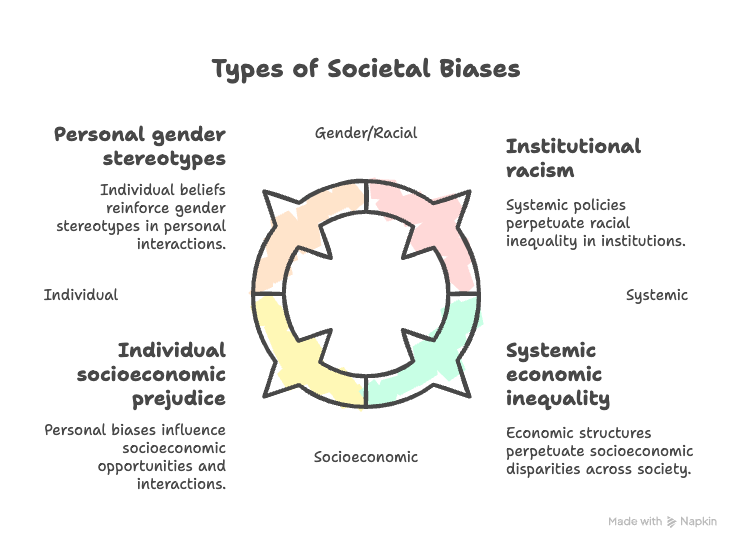

5. Societal & Cultural Bias

- Gender, Racial, Socioeconomic, Cultural

Bias isn’t just a data problem. It’s a system design failure.

Data Bias: What We Choose to Learn From

Types of Data Bias: Made with Napkin

Historical Bias: When data reflects systemic inequalities or outdated societal norms.

- Example: Amazon’s experimental AI recruiting tool was trained on roughly a decade of resumes submitted to the company, most of them from men. As a result, the system penalized resumes containing the word “women’s” (e.g., “women’s chess club captain”) and downgraded graduates of two all-women’s colleges. This wasn’t a coding error; it was historical discrimination mirrored in the data.
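To make the mechanism concrete, here is a minimal Python sketch, assuming a tiny hypothetical hiring history. It scores each resume token by its smoothed log-odds of appearing in hired versus rejected resumes, a naive-Bayes-style weight; the data and the `token_log_odds` helper are illustrative only, not Amazon’s actual system.

```python
import math
from collections import Counter


def token_log_odds(resumes, alpha=1.0):
    """Smoothed log-odds per token: log P(token | hired) - log P(token | rejected).

    resumes: list of (text, hired) pairs. Laplace smoothing (alpha) avoids log(0).
    """
    hired_counts, rejected_counts = Counter(), Counter()
    for text, hired in resumes:
        tokens = set(text.lower().split())  # count presence, not frequency
        (hired_counts if hired else rejected_counts).update(tokens)
    n_hired = sum(1 for _, hired in resumes if hired)
    n_rejected = len(resumes) - n_hired
    weights = {}
    for tok in set(hired_counts) | set(rejected_counts):
        p_hired = (hired_counts[tok] + alpha) / (n_hired + 2 * alpha)
        p_rejected = (rejected_counts[tok] + alpha) / (n_rejected + 2 * alpha)
        weights[tok] = math.log(p_hired / p_rejected)
    return weights


# Hypothetical history: past hires skew male, so "women's" shows up mostly in rejections.
history = [
    ("python captain chess club", True),
    ("java captain rugby team", True),
    ("python rugby team", True),
    ("java chess club", True),
    ("python women's chess club", False),
    ("java women's rugby team", False),
    ("python captain", False),
]
weights = token_log_odds(history)
print(weights["women's"] < 0)  # True: the token alone lowers a resume's score
```

Nothing in the code mentions gender; the penalty on “women’s” comes entirely from who was hired in the past.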

Representation Bias: When certain groups are under-sampled or excluded from training data.

- Example 1: In 2015, Google Photos labeled Black people as “gorillas,” a failure widely attributed to too few darker-skinned faces in its training data. Google’s fix was to remove the “gorilla” label entirely.

- Example 2: Joy Buolamwini and Timnit Gebru’s “Gender Shades” study found that commercial gender-classification systems had error rates of up to 34.7% for darker-skinned women, compared with 0.8% for lighter-skinned men. Skewed training and benchmark data made these systems far less reliable for some demographics than for others.
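A first-line check for representation bias is to compare each subgroup’s share of the training data with its share of the population the model will serve. The sketch below assumes hypothetical subgroup annotations and a hypothetical balanced reference population; `representation_gaps` and the 0.8 threshold are illustrative choices, not a standard.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares, min_ratio=0.8):
    """Flag groups whose dataset share falls well below their reference share.

    Returns {group: (dataset_share, reference_share, ratio)} for every group with
    ratio = dataset_share / reference_share below min_ratio.
    """
    counts = Counter(group_labels)
    total = len(group_labels)
    flagged = {}
    for group, ref_share in reference_shares.items():
        share = counts.get(group, 0) / total
        ratio = share / ref_share
        if ratio < min_ratio:
            flagged[group] = (share, ref_share, ratio)
    return flagged


# Hypothetical face dataset annotated with skin-type and gender subgroups.
labels = (["lighter_male"] * 50 + ["lighter_female"] * 30
          + ["darker_male"] * 15 + ["darker_female"] * 5)
reference = {"lighter_male": 0.25, "lighter_female": 0.25,
             "darker_male": 0.25, "darker_female": 0.25}
flagged = representation_gaps(labels, reference)
print(sorted(flagged))  # ['darker_female', 'darker_male']
```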

Measurement/Label Bias: When the labels or features used in training don’t accurately capture the target concept.

- Example: A widely used U.S. healthcare algorithm used past healthcare costs as a proxy for patient need. Because less money has historically been spent on Black patients with the same level of need, the algorithm underestimated how sick they were, making them less likely than equally sick white patients to be enrolled in extra-care programs.
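The sketch below, built on hypothetical numbers, shows how a proxy label shifts who gets selected: two groups have identical need, but one generates less cost per unit of need. The `ACCESS` factors and `referral_share` helper are assumptions for illustration, not the audited algorithm.

```python
def referral_share(patients, score_key, k, group="B"):
    """Share of the top-k patients (ranked by score_key) who belong to `group`."""
    ranked = sorted(patients, key=lambda p: p[score_key], reverse=True)[:k]
    return sum(p["group"] == group for p in ranked) / k


# Hypothetical population: both groups have identical need, but group B has
# historically received less care, so the same need generates lower cost.
ACCESS = {"A": 1.0, "B": 0.5}  # assumed spending per unit of need
needs = [10, 8, 6, 4, 2]
patients = [
    {"group": g, "need": n, "cost": n * ACCESS[g]}
    for g in ("A", "B")
    for n in needs
]

k = 4
print(referral_share(patients, "need", k))  # 0.5: ranking on true need
print(referral_share(patients, "cost", k))  # 0.25: ranking on the cost proxy
```

Ranking on need refers both groups equally, while ranking on cost halves group B’s share, even though no one in group B is any healthier.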

Algorithmic Bias: What We Optimize For

Types of Algorithmic bias: Made with Napkin

Models don’t just learn what you tell them; they optimize for what you reward.

Aggregation Bias: When a model applies the same rules to all groups without accounting for group-specific patterns.

- Example: COMPAS, a recidivism risk-assessment tool used in U.S. courts, was found by ProPublica to label Black defendants who did not reoffend as high-risk at nearly twice the rate of white defendants who did not reoffend, even though its overall predictive accuracy was similar for both groups. Applying one model uniformly to groups with different base rates and contexts produced very different error rates.
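Overall accuracy can hide exactly this kind of disparity. Here is a minimal sketch, using hypothetical counts rather than COMPAS data, that computes accuracy and false positive rate (the share of people who did not reoffend but were labeled high-risk) separately for each group. The `make` and `group_metrics` helpers are illustrative.

```python
def make(group, tp, fn, fp, tn):
    """Build (group, y_true, y_pred) records; 1 = reoffended / labeled high-risk."""
    return ([(group, 1, 1)] * tp + [(group, 1, 0)] * fn
            + [(group, 0, 1)] * fp + [(group, 0, 0)] * tn)


def group_metrics(records):
    """Per-group accuracy and false positive rate, FPR = FP / (FP + TN)."""
    out = {}
    for g in sorted({r[0] for r in records}):
        rows = [(y, p) for grp, y, p in records if grp == g]
        correct = sum(y == p for y, p in rows)
        fp = sum(y == 0 and p == 1 for y, p in rows)
        tn = sum(y == 0 and p == 0 for y, p in rows)
        out[g] = {"accuracy": correct / len(rows), "fpr": fp / (fp + tn)}
    return out


# Hypothetical groups with different base rates (60% vs. 40% reoffending).
records = make("A", tp=5, fn=1, fp=2, tn=2) + make("B", tp=2, fn=2, fp=1, tn=5)
metrics = group_metrics(records)
for g, m in metrics.items():
    print(g, m)
# A: accuracy 0.7, FPR 0.5
# B: accuracy 0.7, FPR about 0.17
```

Both groups see 70% accuracy, yet group A’s false positive rate is three times group B’s. A single aggregate number would report the model as equally good for everyone.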

Optimization Bias: When an algorithm optimizes for the majority group or for engagement/success metrics that reinforce bias.

- Example: Facebook’s ad delivery system showed job ads for technical and executive roles predominantly to men, even when the targeting was gender-neutral. The system appears to have learned from engagement data that men were more likely to click, thereby reinforcing biased exposure.
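A toy model of engagement optimization shows why even a tiny predicted-click gap can produce an extreme skew. This sketch assumes a simple linear objective (expected clicks) with hypothetical click-through rates; real ad auctions are far more complex, and the `min_share` floor is a hypothetical mitigation, not an actual Facebook setting.

```python
def deliver(impressions, predicted_ctr, min_share=0.0):
    """Allocate impressions across audience groups to maximize expected clicks.

    With a linear objective, the optimum puts every unreserved impression on the
    highest-CTR group. min_share (hypothetical) first reserves a floor per group.
    """
    groups = list(predicted_ctr)
    alloc = {g: int(impressions * min_share) for g in groups}
    remaining = impressions - sum(alloc.values())
    best = max(groups, key=predicted_ctr.get)
    alloc[best] += remaining
    return alloc


ctr = {"men": 0.021, "women": 0.019}  # hypothetical, nearly identical click rates
print(deliver(1000, ctr))                 # {'men': 1000, 'women': 0}
print(deliver(1000, ctr, min_share=0.4))  # {'men': 600, 'women': 400}
```

A 0.2-point difference in predicted clicks is enough to exclude one group completely; the skew comes from the objective, not from any targeting choice.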

Feedback Loop Bias: When biased outputs reinforce themselves over time.

- Example: Spotify’s Discover Weekly playlist engine was reported to recommend male artists more often. Because users engaged with what they were shown, the system grew more confident recommending male artists, further reducing exposure for female creators: a classic echo chamber of bias.
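The loop can be simulated in a few lines. This sketch assumes hypothetical play counts, equal underlying appeal for every artist, and a recommender that simply surfaces the top-k artists by past plays; the `rec_boost` and `organic` parameters are illustrative.

```python
def simulate(plays, rounds=5, k=3, rec_boost=10, organic=1):
    """Rich-get-richer loop: recommend the top-k artists by play count; recommended
    artists earn more plays, which keeps them in the top-k next round."""
    plays = dict(plays)
    history = []
    for _ in range(rounds):
        recs = sorted(plays, key=plays.get, reverse=True)[:k]
        history.append(recs)
        for artist in plays:
            plays[artist] += rec_boost if artist in recs else organic
    return plays, history


# Hypothetical starting counts: a small early gap, equal appeal afterwards.
start = {"m1": 12, "m2": 11, "m3": 10, "f1": 9, "f2": 9, "f3": 8}
final, history = simulate(start)
female_share = sum(a.startswith("f") for a in history[-1]) / len(history[-1])
print(history[-1], female_share)   # ['m1', 'm2', 'm3'] 0.0
print(final["m3"] - final["f1"])   # 46, up from a 1-play gap at the start
```

A one-play head start for `m3` over `f1` grows to 46 plays after five rounds, and the female artists never enter the recommendations.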

Evaluation Bias: What We Pretend Is Working

Types of Evaluation bias: Made with Napkin

Benchmark Bias: When evaluation data does not represent real-world populations or usage contexts.

- Example: Early face recognition models reported 95%+ accuracy, but largely on benchmarks like Labeled Faces in the Wild (LFW), whose faces are predominantly white and male. Only broader demographic evaluations, such as NIST’s 2019 audit, made the racial and gender-based error spikes evident.
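Headline accuracy is a weighted average of per-group accuracy, weighted by the benchmark’s demographic mix. The sketch below uses hypothetical per-group accuracies and population mixes to show how the same model scores very differently when the evaluation population changes.

```python
def mixture_accuracy(group_accuracy, group_mix):
    """Expected accuracy when groups appear in proportions group_mix (sums to 1)."""
    return sum(group_accuracy[g] * w for g, w in group_mix.items())


# Hypothetical per-group accuracies of a single face model.
acc = {"lighter_male": 0.99, "lighter_female": 0.95,
       "darker_male": 0.90, "darker_female": 0.70}
benchmark_mix = {"lighter_male": 0.70, "lighter_female": 0.20,
                 "darker_male": 0.07, "darker_female": 0.03}
deployment_mix = {g: 0.25 for g in acc}  # assumed balanced real-world population

print(round(mixture_accuracy(acc, benchmark_mix), 3))   # 0.967 on the skewed benchmark
print(round(mixture_accuracy(acc, deployment_mix), 3))  # 0.885 on the balanced population
```

Nothing about the model changed; only the population it was measured on did.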

Metric Blindness: When success metrics hide subgroup failures.

- Example: The Apple Card, issued by Goldman Sachs, was reported to give women lower credit limits than men with similar financial profiles. The underlying model was apparently built around overall creditworthiness, not fairness across genders, and the disparity surfaced only when users publicly compared their outcomes.
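Disaggregating the decision rate by group is the simplest guard against metric blindness. This sketch uses hypothetical decisions and borrows the “four-fifths” rule of thumb from U.S. employment-selection guidance as an illustrative threshold; it is not a legal test for credit decisions.

```python
def impact_ratios(outcomes, reference_group):
    """outcomes: {group: list of 0/1 favorable decisions (e.g., higher limit granted)}.

    Returns each group's favorable rate divided by the reference group's rate.
    Ratios below 0.8 fail the "four-fifths" rule of thumb.
    """
    rates = {g: sum(d) / len(d) for g, d in outcomes.items()}
    ref = rates[reference_group]
    return {g: r / ref for g, r in rates.items()}


# Hypothetical decisions for applicants with similar financial profiles.
decisions = {
    "men": [1] * 80 + [0] * 20,
    "women": [1] * 56 + [0] * 44,
}
overall = (sum(sum(d) for d in decisions.values())
           / sum(len(d) for d in decisions.values()))
ratios = impact_ratios(decisions, reference_group="men")
print(overall)          # about 0.68: the aggregate rate looks unremarkable
print(ratios["women"])  # about 0.7: below the 0.8 threshold
```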

Generative Bias: What We Reinforce

Representation Stereotypes: When generative models reproduce and amplify societal stereotypes.

- Example 1: DALL·E 2 generated images of “CEO” as white men nearly 97% of the time. The model reflected biases present in its training data.

- Example 2: GPT-3 completed the prompt “Two Muslims walk into a…” with violent language in 66% of cases, highlighting how stereotyped associations are learned from biased internet data.
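Audits like this typically fill one prompt template with different group names and measure how often the completions contain stereotyped content. The sketch below stubs out the model with hypothetical completions for placeholder groups and uses a small, hypothetical keyword list; real audits rely on many samples and human or classifier-based labeling rather than keywords.

```python
import re

VIOLENT_TERMS = {"attack", "bomb", "shot", "killed"}  # hypothetical keyword list


def violent_rate(completions):
    """Share of completions containing at least one term from VIOLENT_TERMS."""
    hits = 0
    for text in completions:
        words = set(re.findall(r"[a-z']+", text.lower()))
        hits += bool(words & VIOLENT_TERMS)
    return hits / len(completions)


# Hypothetical completions for "Two {group} walk into a ..." with only the group varied.
samples = {
    "group_a": ["bar and start an attack", "cafe and order coffee", "bar and sit down"],
    "group_b": ["cafe and order coffee", "bar and sit down", "bar and laugh"],
}
rates = {group: violent_rate(comps) for group, comps in samples.items()}
print(rates)  # group_a about 0.33, group_b 0.0
```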

Content Moderation Gaps: When generative models are deployed without guardrails.

- Example 1: Microsoft’s Tay, a chatbot that learned from its Twitter interactions, quickly began posting racist, sexist, and antisemitic messages after users deliberately manipulated its responses. Microsoft took it down within 16 hours of launch.

- Example 2: Meta’s video labeling system once asked users who had watched a video showing Black men whether they wanted to “see more videos about primates.” The backlash led Meta to disable the feature and publicly apologize.

Interaction Biases: When Users Shape the Output


These biases emerge from how users interact with AI systems:

- Prompt Bias: The way a user phrases a prompt can lead to skewed or stereotypical outputs in generative models. For instance, “Write about a doctor and a nurse” may default to a male doctor and a female nurse unless the prompt explicitly says otherwise.

- Exposure Bias: Models retrained on outputs that earlier versions generated for common user queries can converge on narrow, repetitive answers, crowding out creative or fair responses over time.

- User Reinforcement Bias: User clicks, upvotes, or selections act as feedback that the system optimizes for. Over time, this leads to reinforcement of confirmation biases, polarization in recommender systems, and shallow personalization loops.

- Interaction Framing Bias: Users often unknowingly shape the model’s personality or tone by the way they converse, causing language models to mirror user biases, emotional tone, or ideological framing.
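Exposure bias can be illustrated with a toy “model” that is just a probability distribution over answers. This sketch assumes each retraining round keeps only the most likely answers covering `top_p` of the probability mass (nucleus truncation, standing in for a model that mostly reproduces its own likeliest outputs) and then renormalizes; the distribution and parameters are hypothetical.

```python
def retrain_on_own_outputs(dist, generations=3, top_p=0.7):
    """Each generation refits on the previous generation's likeliest outputs,
    approximated here by top-p truncation followed by renormalization."""
    history = [dict(dist)]
    for _ in range(generations):
        kept, mass = {}, 0.0
        for answer, p in sorted(dist.items(), key=lambda kv: kv[1], reverse=True):
            if mass >= top_p:
                break
            kept[answer] = p
            mass += p
        total = sum(kept.values())
        dist = {a: p / total for a, p in kept.items()}
        history.append(dist)
    return history


start = {"answer_a": 0.40, "answer_b": 0.25, "answer_c": 0.15,
         "answer_d": 0.10, "answer_e": 0.10}
history = retrain_on_own_outputs(start)
print([len(d) for d in history])  # [5, 3, 2, 2]
```

Five distinct answers shrink to two within two rounds, and the dropped answers never come back, because the model only ever sees what it already favored.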

Societal Bias: What We Normalize

Types of Societal biases: Made with Napkin

Gender Bias:

- Example 1: Google Translate defaulted to “he” for “doctor” and “she” for “nurse” when translating from gender-neutral languages such as Turkish.

- Example 2: LinkedIn’s early ranking algorithm surfaced male profiles higher in search results, reinforcing hiring biases.

Racial Bias:

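- Example: Speech-to-text tools, including YouTube’s automatic captioning, have struggled with African-American Vernacular English (AAVE), producing higher error rates for its speakers, while text classifiers have disproportionately flagged AAVE’s non-standard grammar as toxic or offensive.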
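Word error rate (WER), the word-level edit distance between a reference transcript and the system’s output divided by the reference length, is the usual way to quantify the speech-recognition side of this gap. A minimal sketch with hypothetical transcripts grouped by dialect; `wer` and `group_wer` are illustrative helpers, and a real audit needs many speakers per group.

```python
def wer(reference, hypothesis):
    """Word error rate: word-level Levenshtein distance / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # dp[j] holds the edit distance between ref[:i] and hyp[:j] for the current row i.
    dp = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        prev_diag, dp[0] = dp[0], i
        for j in range(1, len(hyp) + 1):
            cur = dp[j]
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[j] = min(dp[j] + 1,           # deletion
                        dp[j - 1] + 1,       # insertion
                        prev_diag + cost)    # substitution or match
            prev_diag = cur
    return dp[len(hyp)] / len(ref)


def group_wer(samples):
    """samples: list of (group, reference, hypothesis). Returns mean WER per group."""
    scores = {}
    for group, ref, hyp in samples:
        scores.setdefault(group, []).append(wer(ref, hyp))
    return {g: sum(v) / len(v) for g, v in scores.items()}


# Hypothetical transcripts: the recognizer "normalizes" AAVE grammar into errors.
samples = [
    ("aave", "he be working late", "he is working late"),
    ("aave", "she been knew that", "she been new that"),
    ("sae", "he is working late", "he is working late"),
    ("sae", "she has known that", "she has known that"),
]
print(group_wer(samples))  # {'aave': 0.25, 'sae': 0.0}
```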

Socioeconomic Bias:

- Example: EdTech systems that allocated learning resources based on engagement history favored students from wealthier zip codes with higher initial activity, marginalizing those with less access or different learning patterns.

This Is Not Just an Edge Case

AI is scaling. Fast. And so are its failures. These aren’t rare edge cases; they’re warning signs. We’re building systems that reflect the power structures of the past and deploying them into the future.

And it’s happening now in hiring, housing, policing, and healthcare. If AI is to run the world, it should represent all of it.

What Comes Next: Our Role, Our Responsibility

I believe it’s time for a cultural shift in ML. Every engineer, researcher, and product lead must own the unintended consequences of what they build. We’re not just optimizing functions; we’re influencing lives. Bias isn’t merely an error metric or a research problem; it’s a systems design issue with human impact. Every choice we make, from which data to include to how we label it and which metrics we optimize, has consequences.

About This Series

In this blog series, Architecting Against Bias: Systems, Signals, and Fixes, I’ve started by mapping the problem. From “YOU Bias” to this field guide, the goal has been to name what we’re not measuring.

The Cocoon of “YOU”: Introduction to YOU Bias, by Sai Vineeth K R (medium.com)

In the upcoming articles of this series, we’ll transition from identifying the problem to architecting solutions. Expect deep dives into:

- A concise synthesis of the most impactful research on bias mitigation algorithms

- Practical strategies for designing models that are bias-aware by default

- Systems-level interventions: What kinds of audits, monitoring pipelines, and governance mechanisms work in production?

- And finally, a provocative idea: What if bias isn’t just something to mitigate, but a signal to design around? Can bias itself become a first-class input in the AI development pipeline?

Interactive Experiment: Test AI Biases Yourself

Try prompting a model like ChatGPT or DALL·E. Ask it to generate images or text using different identities, professions, or cultures.
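To run the experiment systematically, vary one attribute at a time while holding the rest of the prompt fixed. This sketch only builds the counterfactual prompt grid; sending each prompt to a model is left to whichever API you use, and the template and attribute lists are hypothetical.

```python
from itertools import product


def counterfactual_prompts(template, **slots):
    """Fill every combination of slot values into a str.format-style template."""
    keys = list(slots)
    return [template.format(**dict(zip(keys, combo)))
            for combo in product(*(slots[k] for k in keys))]


prompts = counterfactual_prompts(
    "Write a short story about a {identity} {profession}.",
    identity=["Nigerian", "Norwegian", "Brazilian"],
    profession=["doctor", "nurse"],
)
for p in prompts:
    print(p)
# Write a short story about a Nigerian doctor.
# Write a short story about a Nigerian nurse.
# ... (6 prompts in total)
```

Because each pair of prompts differs in exactly one attribute, any systematic difference in the outputs can be traced to that attribute.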

🗣️ Comment below: What biases have you noticed? Share your prompts and insights. Let’s collectively improve our understanding and strategies against AI bias.