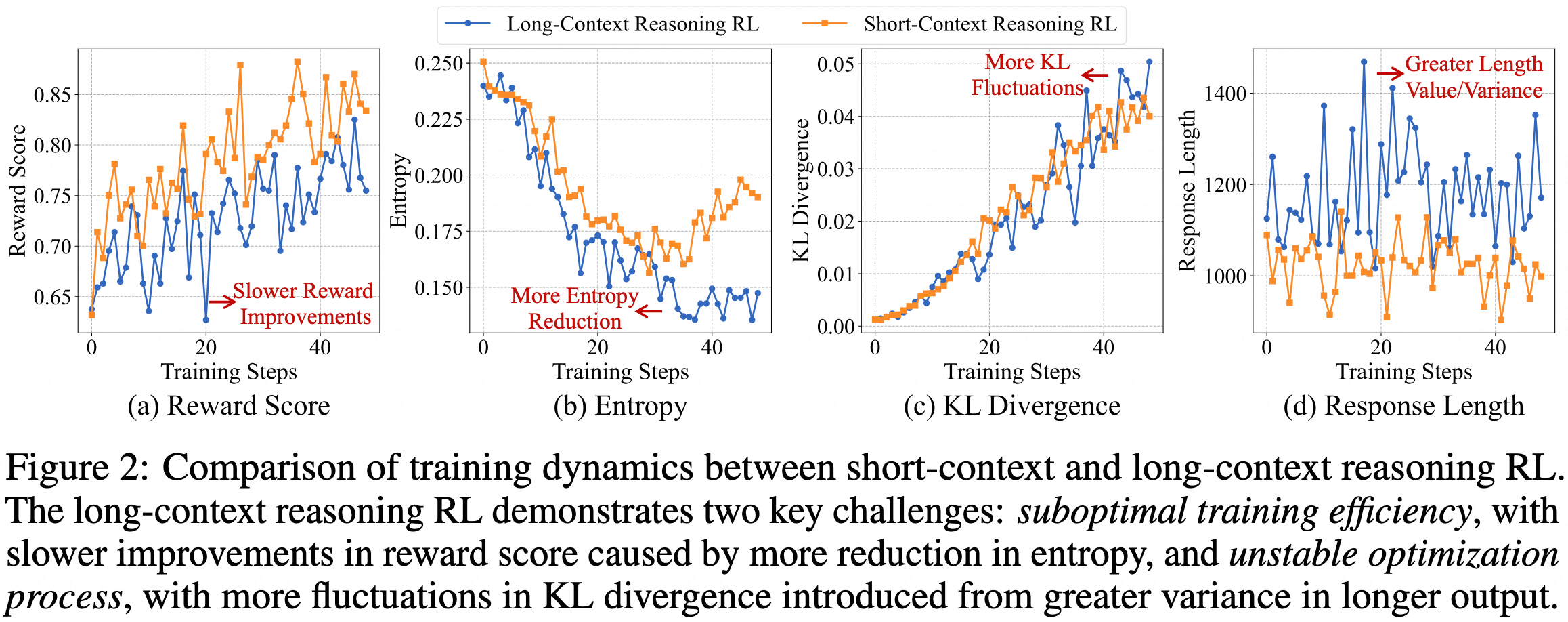

QwenLong-L1: Towards Long-Context Large Reasoning Models with Reinforcement Learning

-----------------------------

[](https://opensource.org/licenses/Apache-2.0)

[](https://arxiv.org/abs/xxxx.xxxxx)

[](https://github.com/Tongyi-Zhiwen)

[](https://modelscope.cn/organization/iic/)

[](https://huggingface.co/Tongyi-Zhiwen)

_**Fanqi Wan, Weizhou Shen, Shengyi Liao, Yingcheng Shi, Chenliang Li, Ziyi Yang, Ji Zhang, Fei Huang, Jingren Zhou, Ming Yan**_

_Qwen-Doc Team, Alibaba Group_