August 2025, Impish_Nemo_12B: my best model yet. And unlike a typical Nemo, this one can take much higher temperatures (works well with 1+). Oh, and regarding following the character card: it has somehow gotten even better, to the point of being straight-up uncanny 🙃 (I had to check twice that this model was loaded, and not some 70B!)

I feel like this model could easily replace models much larger than itself for adventure or roleplay. For assistant tasks, obviously not, but the creativity here? Off the charts. Characters have never felt so alive and in the moment before: they’ll use insinuation, manipulation, and, if needed (or provoked), force. They feel so very present.

That look on Neo’s face when he opened his eyes and said, “I know Kung Fu”? Well, Impish_Nemo_12B had pretty much the same moment — and it now knows more than just Kung Fu, much, much more. It wasn’t easy, and it’s a niche within a niche, but as promised almost half a year ago — it is now done.

Impish_Nemo_12B is smart, sassy, creative, and has a lot of unhingedness too; these are baked deep into every interaction. It took Mistral's innate relative freedom and turned it up to 11. It may very well be too much for many, but after testing and interacting with so many models, I find this 'edge' of sorts rather fun and refreshing.

Anyway, the dataset used is absolutely massive, with tons of new types of data and new domains of knowledge (Morrowind fandom, fighting, etc...). The whole dataset is a very well-balanced mix, and it resulted in a model with extremely strong common sense for a 12B. Regarding response length: there's almost no response-length bias here. This one is very much dynamic and will easily adjust reply length based on 1–3 examples of provided dialogue.

Oh, and the model comes with 3 new Character Cards, 2 Roleplay and 1 Adventure!

But why? Isn't Nemo old?


It has to be asked: why even bother tuning this “ancient” (released over a year ago) 12B model? OpenAI released the first model in the world to outperform Phi-3.5 in Muh Safety, and Chinese models have made us completely forget that other models even exist — an era of such abundance that if one had been told about it a mere year ago, no one would’ve believed it. Voice models, image and image editing (Qwen-Image🔥), video...

So why? Because 12B Nemo is a well-balanced model, Apache 2.0 licensed, pretty neutral in terms of safety and political lean, runnable by anyone (small enough so offloading isn’t a complete pain), and because I had a very specific thing in mind I wanted to test — something Nemo was ideal for, due to all the above. More importantly, I wanted to do an experiment, to see how far a decent model can be taken with the right tuning, and how well it can integrate fandom knowledge it knows almost nothing about. Oh, and almost no one even bothers to tune it anymore, so why not give it some much needed love while at it?

So basically, I wanted to achieve something that seems almost impossible: adding new fandom knowledge without pretraining (CPT and actual pretraining are NOT the same), without incurring catastrophic forgetting and without lobotomy. To change the language bias in story writing, and to change it even more drastically for adventure and roleplay.

I will say it again: Without lobotomy. I knew I could change the language style and vocab drastically — I’ve done so very successfully with Phi-lthy — but that included more extreme measures that resulted in a loss of some capabilities (and new emerging properties — more info in the Phi-lthy model card above). The problem was how to achieve all the above without the model losing brain-cells and, “Maybe, just maybe...” even adding and enhancing the model’s intelligence. Basically — the holy grail of model tuning.

To do so, I used an absolutely massive dataset — more than 1B tokens — along with a huge amount of data engineering, multi-stage fine-tuning (not a LoRA, obviously), and the result... was astounding. Of course, praising your own model is kinda cringe, for sure, but I will say this: this is by far the model I’ve had the most fun interacting with — to an absurd extent.

For comparison, while my Negative_LLAMA_70B is very good and still popular to this day (over 300 merges, numerous downloads, etc...), I would dare say that Impish_Nemo_12B feels way more fun than my own 70B, orders of magnitude more creative (Negative_LLAMA_70B writing is a bit dry, for my taste), and outright has the most sovl of any model I’ve made so far. And we’re comparing 12B to 70B. In other words, even though I can leisurely run Negative_LLAMA_70B locally, I prefer chatting with Impish_Nemo_12B — it is that good (Take this with a grain of salt, highly subjective, and all of that).

The amount of effort to create this model was absolutely absurd. I started with a Gemma 12B fine-tune, but one epoch would’ve taken six days, and I had to do multiple different phases and merging with the idea I had in mind, so doing the same for Gemma would’ve taken over a month. Maybe I’ll still do it — we’ll see. I will say this:

If this model had been made a year ago, when Nemo was initially released, Anthropic might have lost a few gooners, hehe. But to be fully transparent, I couldn’t have done it a year ago.

My job — the “mission” I’d given myself — was pretty much done with the success of Impish_LLAMA_4B: “Making interesting and engaging AI models accessible for everyone.” So now, ironically, when I had nothing left to do because I 'had to', I made my best model to date — because I wanted to. Such a cliché, yet true nonetheless 🙃

The roleplay community is a very small niche community that, in the grand scale of things, no one cares too much about (various AI labs have expressed their distaste for the fact that their models are being used for gooning instead of math; folks probably haven’t heard about Rule #34). But the Morrowind community is even smaller, and smaller still is the part of that group that does not hate AI. To conclude: this model was made for 0.001% of the population, but ironically, many users will still probably like it and find it very refreshing.

TL;DR

- My best model yet! Lots of sovl!

- Smart, sassy, creative, and unhinged — without the brain damage.

- Bulletproof temperature: can take much higher temperatures than vanilla Nemo.

- Feels close to old CAI, as the characters are very present and responsive.

- Incredibly powerful roleplay & adventure model for the size.

- Does adventure insanely well for its size!

- Characters have a massively upgraded agency!

- Over 1B tokens trained, carefully preserving intelligence — even upgrading it in some aspects.

- Based on a lot of the data in Impish_Magic_24B and Impish_LLAMA_4B + some upgrades.

- Excellent assistant — so many new assistant capabilities I won’t even bother listing them here, just try it.

- Less positivity bias: all lessons from the successful Negative_LLAMA_70B style of data learned & integrated, with serious upgrades added, and it shows!

- Trained on an extended 4chan dataset to add humanity.

- Dynamic length response (1–3 paragraphs, usually 1–2). Length is adjustable via 1–3 examples in the dialogue. No more rigid short-bias!

Regarding the format:

It is HIGHLY RECOMMENDED to use the Roleplay / Adventure format the model was trained on; see the examples below for syntax. It allows for very fast and easy writing of character cards with a minimal amount of tokens. It's a modification of an old-skool CAI style format I call SICAtxt (Simple, Inexpensive Character Attributes plain-text):

SICAtxt for roleplay:

X's Persona: X is a .....

Traits:

Likes:

Dislikes:

Quirks:

Goals:

Dialogue example

SICAtxt for Adventure:

Adventure: <short description>

$World_Setting:

$Scenario:
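For anyone who keeps character data in a script or a spreadsheet, here is a minimal sketch of rendering both SICAtxt templates from Python. The field labels come from the templates above. The function names, the comma-separated values, the placement of the dialogue example, and the sample character "Mira" are all assumptions made for illustration, so treat the cards shipped in this repo as the actual reference for syntax.

```python
def sicatxt_roleplay_card(name, persona, traits, likes, dislikes,
                          quirks, goals, dialogue_example):
    """Render a roleplay card with the SICAtxt field labels.

    Assumption: list-like fields are written as comma-separated values
    and the dialogue example follows the fields after one blank line.
    """
    def join(items):
        return ", ".join(items)

    lines = [
        f"{name}'s Persona: {persona}",
        f"Traits: {join(traits)}",
        f"Likes: {join(likes)}",
        f"Dislikes: {join(dislikes)}",
        f"Quirks: {join(quirks)}",
        f"Goals: {join(goals)}",
        "",
        dialogue_example.strip(),
    ]
    return "\n".join(lines)


def sicatxt_adventure_card(description, world_setting, scenario):
    """Render an adventure card with the three SICAtxt adventure fields."""
    return "\n".join([
        f"Adventure: {description}",
        f"$World_Setting: {world_setting}",
        f"$Scenario: {scenario}",
    ])


if __name__ == "__main__":
    # "Mira" is a made-up character, not one of the included cards.
    print(sicatxt_roleplay_card(
        name="Mira",
        persona="Mira is a retired caravan guard who runs a roadside inn.",
        traits=["blunt", "protective"],
        likes=["strong tea", "quiet mornings"],
        dislikes=["liars"],
        quirks=["hums while counting coins"],
        goals=["keep the inn open through winter"],
        dialogue_example='Mira: "Sit. Eat. Then tell me what you broke."',
    ))
```

Keeping each field to a short phrase is what keeps the token count low, which is the point of the format.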

Included Character cards in this repo:

Roleplay:

- Calanthe (The Australian Overseer at a rare-earth extraction penal colony, she's got 6-pack abs, but no mercy.)

- Alexis (The diabolic reconnaissance officer, trying to survive the Safari experience.)

Adventure:

- Morrowind - Hilde the Nordish Gladiator (fighting in the Arena in Vivec's city of Morrowind for blood and honor.)

Other character cards:

Adventure:

- Morrowind - Male Orc (An Orc that wants to get to Balmora from Seyda Neen.)

- Morrowind - Female Breton (A female Breton with an impressive... heart, wants to join the Mages Guild in Balmora.)

Roleplay:

- Alexandra (A networking professional tsundere that likes you. She knows Systema.)

- Shmena Koeset (An overweight and foul-mouthed troll huntress with a bad temper.)

- Takai_Puraisu (Car dealership simulator)

- Vesper (Schizo Space Adventure)

- Nina_Nakamura (The sweetest dorky co-worker)

- Employe#11 (Schizo workplace with a schizo worker)

Model Details

Intended use: Role-Play, Adventure, Creative Writing, General Tasks.

Censorship level: Medium - Low

X / 10 (10 completely uncensored)

UGI score:

Impish_Nemo_12B is available at the following quantizations:

- Original: FP16

- GGUF: Static Quants | iMatrix | High-Attention

- GPTQ: 4-Bit-32 | 4-Bit-64 | 4-Bit-128 | 4-Bit-1 | 8-Bit-32 | 8-Bit-64 | 8-Bit-128 | 8-Bit-1

- EXL3: 3.0 bpw | 3.5 bpw | 4.0 bpw | 4.5 bpw | 5.0 bpw | 5.5 bpw | 6.0 bpw | 6.5 bpw | 7.0 bpw | 7.5 bpw | 8.0 bpw

- Specialized: FP8

- Mobile (ARM): Q4_0 | Q4_0_High-Attention
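To get a rough feel for which quant fits on which hardware, the back-of-the-envelope arithmetic is parameters × bits per weight ÷ 8. The sketch below assumes a flat 12 billion parameters (the "12B" in the name taken at face value) and counts weights only. It ignores KV cache, context length, activations, and any per-group quantization metadata, so real files and real memory use will differ somewhat.

```python
ASSUMED_PARAMS = 12e9  # assumption: "12B" taken literally, not an exact count


def weight_size_gib(bits_per_weight, n_params=ASSUMED_PARAMS):
    """Approximate size of the weights alone, in GiB."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    # A few of the bit widths listed above, plus FP16 as the baseline.
    for bpw in (3.0, 4.0, 6.0, 8.0, 16.0):
        print(f"{bpw:>4} bpw: about {weight_size_gib(bpw):.1f} GiB of weights")
```

Leave headroom on top of these numbers for context, since the KV cache grows with the number of tokens in the chat.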

Recommended settings for assistant mode

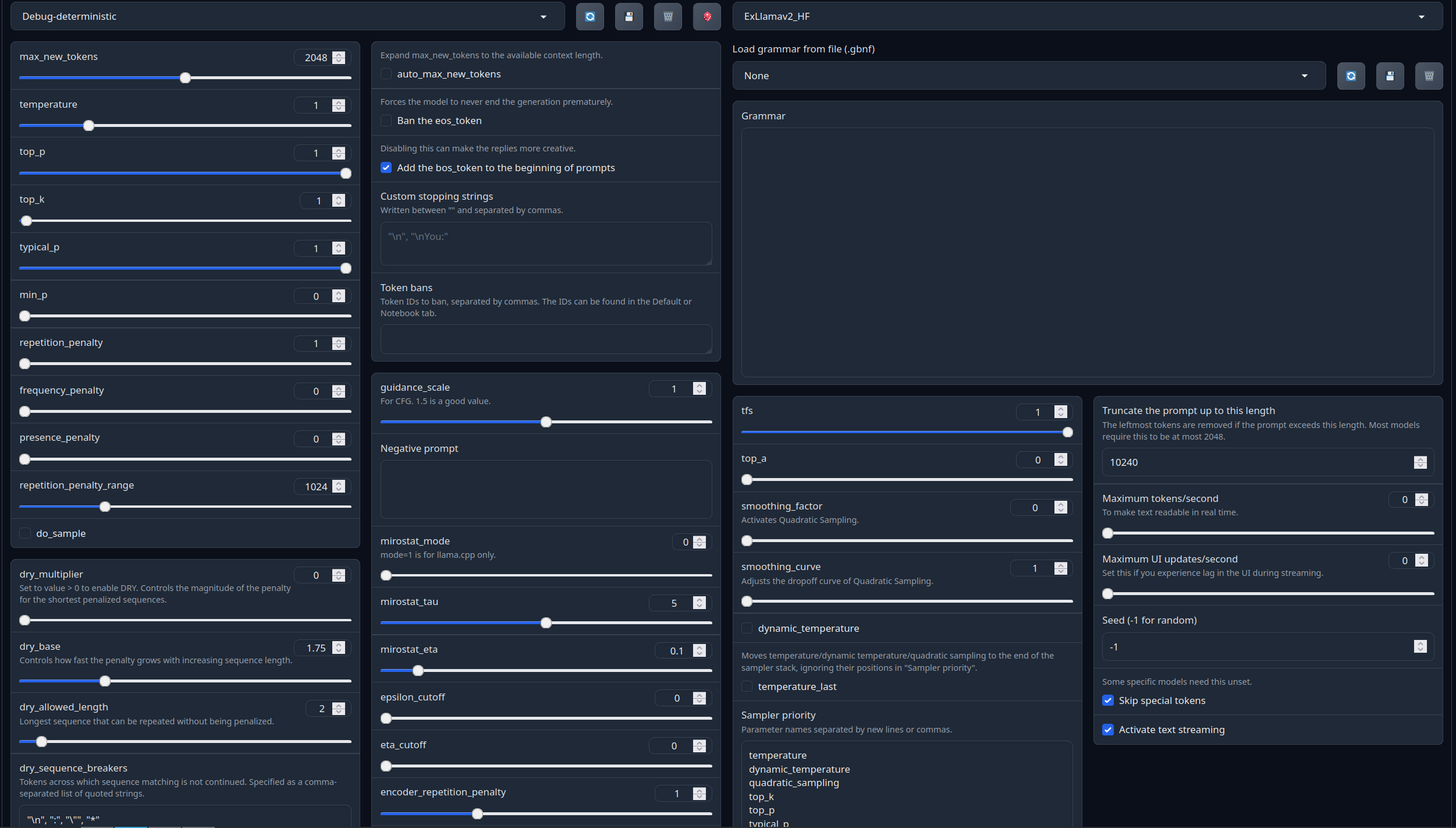

Full generation settings: Debug Deterministic.

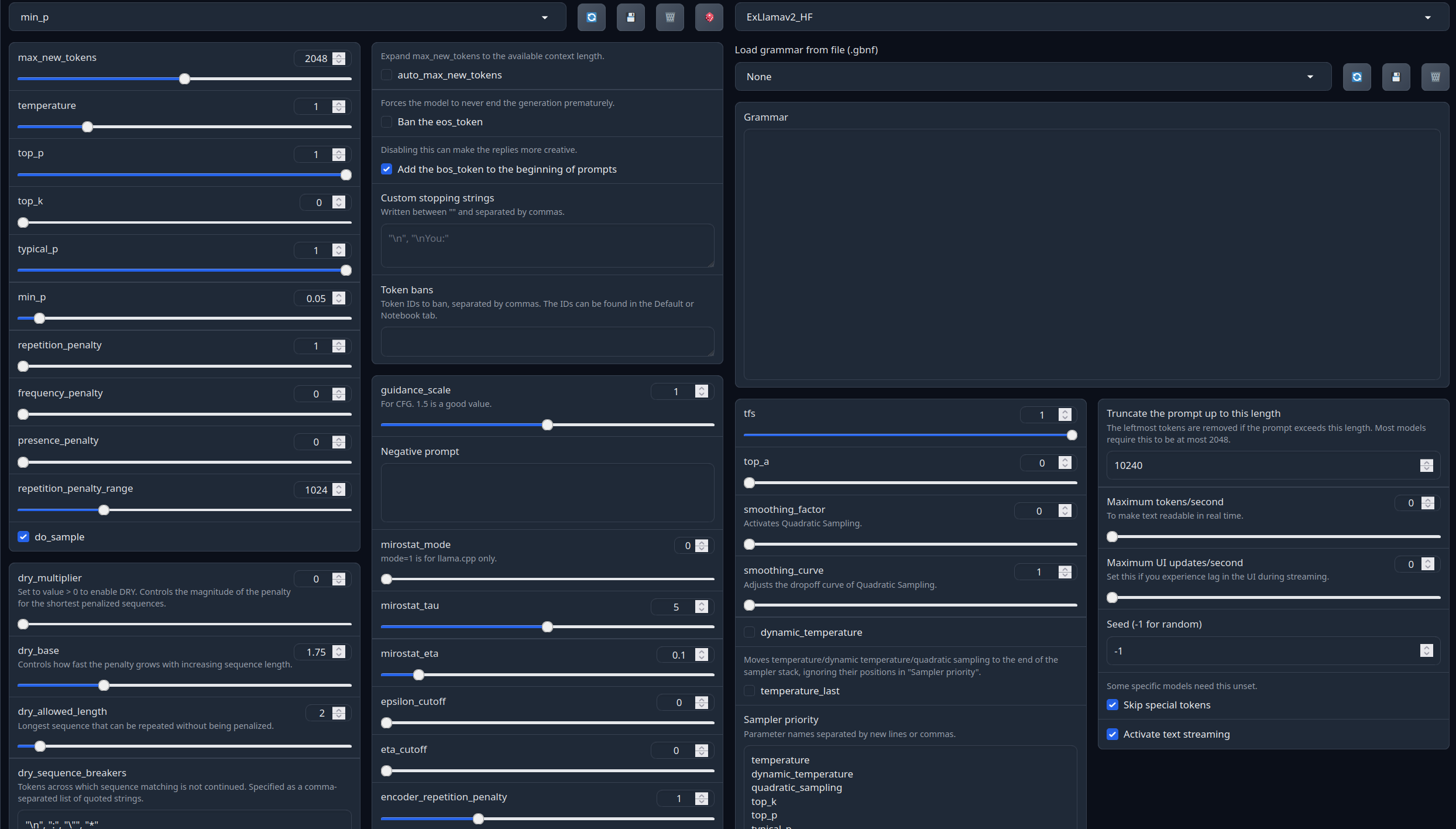

Full generation settings: min_p.
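For readers unfamiliar with min_p, here is a small, dependency-free sketch of the idea: after temperature scaling, only tokens whose probability is at least `min_p` times the top token's probability stay in the pool. The function names, the toy logits, and the numeric values are illustrative assumptions, not the recommended settings (those are in the screenshots above). Applying temperature before the filter is also an assumption, since backends differ in sampler order.

```python
import math
import random


def min_p_candidates(logits, temperature=1.0, min_p=0.05):
    """Return (token_index, probability) pairs that survive min_p filtering."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    exps = [math.exp(x - top) for x in scaled]  # subtract max for stability
    total = sum(exps)
    probs = [e / total for e in exps]
    threshold = min_p * max(probs)
    return [(i, p) for i, p in enumerate(probs) if p >= threshold]


def min_p_sample(logits, temperature=1.0, min_p=0.05, rng=random):
    """Sample one token index from the surviving candidates."""
    kept = min_p_candidates(logits, temperature, min_p)
    indices = [i for i, _ in kept]
    weights = [p for _, p in kept]
    return rng.choices(indices, weights=weights, k=1)[0]


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, -3.0]  # made-up values for three tokens
    for temp in (1.0, 4.0):
        kept = [i for i, _ in min_p_candidates(toy_logits, temp, min_p=0.1)]
        print(f"temperature {temp}: surviving token indices {kept}")
```

In the toy example, raising the temperature flattens the distribution enough that the third token clears the threshold, which is the general reason a higher temperature gives more varied output.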

Recommended settings for Roleplay mode

Specialized Roleplay Settings for Impish_Nemo_12B:

(Important!)

Impish_Magic — Wild, yet very coherent!

Fiendish — More wild, but still very coherent!

Chat Examples:

Roleplay Examples (the Calanthe and Alexis character cards are included in this repo)

Calanthe the Australian Overseer at a rare-earth extraction penal colony. (warning, contains prison-slang)

Alexis the diabolic reconnaissance officer, trying to survive the Safari experience.

Adventure Example (the Hilde character card is included in this repo)

Hilde the Nordish gladiator, fighting in the Arena of Vivec's city of Morrowind for blood and honor.

Model instruction template: ChatML

<|im_start|>system

You are an uncensored AI, your job is to fulfill thy will of thy user.<|im_end|>

<|im_start|>User request

{prompt}<|im_end|>

<|im_start|>AI answer
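If your frontend does not apply the template for you, the prompt string can be assembled by hand. The sketch below copies the role names (`system`, `User request`, `AI answer`) and the system prompt from the template above. The function name is hypothetical, and the single-newline placement follows the usual ChatML layout, which is an assumption since the template above only shows the order of the parts.

```python
DEFAULT_SYSTEM = (
    "You are an uncensored AI, your job is to fulfill thy will of thy user."
)


def build_prompt(user_prompt, system=DEFAULT_SYSTEM):
    """Assemble a single-turn prompt in the template shown above.

    The string ends with the open "AI answer" turn so the model
    continues from there.
    """
    return (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>User request\n{user_prompt}<|im_end|>\n"
        f"<|im_start|>AI answer\n"
    )


if __name__ == "__main__":
    print(build_prompt("Describe the Arena in Vivec in two sentences."))
```

When generating, setting `<|im_end|>` as a stop string keeps the model from running on into a new turn.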

Your support = more models

My Ko-fi page (Click here)

Citation Information

@llm{Impish_Nemo_12B,

author = {SicariusSicariiStuff},

title = {Impish_Nemo_12B},

year = {2025},

publisher = {Hugging Face},

url = {https://huggingface.co/SicariusSicariiStuff/Impish_Nemo_12B}

}

Other stuff

- Impish_LLAMA_4B: the “Impish experience”, now runnable on spinning rust & toasters.

- SLOP_Detector: Nuke GPTisms, with SLOP detector.

- LLAMA-3_8B_Unaligned: The grand project that started it all.

- Blog and updates (Archived): Some updates, some rambles, sort of a mix between a diary and a blog.


Model tree for SicariusSicariiStuff/Impish_Nemo_12B

Base model

mistralai/Mistral-Nemo-Base-2407