Update README.md


README.md

CHANGED

|

@@ -1,118 +1,15 @@

|

|

| 1 |

---

|

| 2 |

base_model:

|

| 3 |

- trashpanda-org/QwQ-32B-Snowdrop-v0

|

| 4 |

-

- trashpanda-org/Qwen2.5-32B-Marigold-v0

|

| 5 |

-

- Qwen/QwQ-32B

|

| 6 |

-

- Qwen/Qwen2.5-32B

|

| 7 |

-

- trashpanda-org/Qwen2.5-32B-Marigold-v0-exp

|

| 8 |

library_name: transformers

|

|

|

|

| 9 |

tags:

|

| 10 |

- mergekit

|

| 11 |

- mergekitty

|

| 12 |

- merge

|

| 13 |

|

| 14 |

---

|

| 15 |

-

|

| 16 |

|

| 17 |

-

|

| 18 |

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

<p><b>Has's notes</b>: it's actually pretty damn good?!</p>

|

| 22 |

-

|

| 23 |

-

<p><b>Severian's notes</b>: R1 at home for RP, literally. Able to handle my cards with gimmicks and subtle tricks in them. With a good reasoning starter+prompt, I'm getting consistently-structured responses that have a good amount of variation across them still while rerolling. Char/scenario portrayal is good despite my focus on writing style, lorebooks are properly referenced at times. Slop doesn't seem to be too much of an issue with thinking enabled. Some user impersonation is rarely observed. Prose is refreshing if you take advantage of what I did (writing style fixation). I know I said Marigold would be my daily driver, but this one is that now, it's that good.</p>

|

| 24 |

-

|

| 25 |

-

## Recommended settings

|

| 26 |

-

|

| 27 |

-

<p><b>Context/instruct template</b>: ChatML. <s>Was definitely not tested with ChatML instruct and Mistral v7 template, nuh-uh.</s></p>

|

| 28 |

-

|

| 29 |

-

<p><b>Samplers</b>: temperature at 0.9, min_p at 0.05, top_a at 0.3, TFS at 0.75, repetition_penalty at 1.03, DRY if you have access to it.</p>

|

| 30 |

-

|

| 31 |

-

A virt-io derivative prompt worked best during our testing, but feel free to use what you like.

|

| 32 |

-

|

| 33 |

-

Master import for ST: [https://files.catbox.moe/b6nwbc.json](https://files.catbox.moe/b6nwbc.json)

|

| 34 |

-

|

| 35 |

-

## Reasoning

|

| 36 |

-

|

| 37 |

-

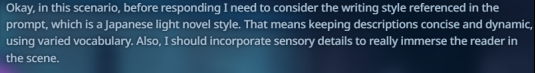

Feel free to test whichever reasoning setup you're most comfortable with, but here's a recommendation from me. My prompt has a line that says:

|

| 38 |

-

|

| 39 |

-

```

|

| 40 |

-

Style Preference: Encourage the usage of a Japanese light novel writing style.

|

| 41 |

-

```

|

| 42 |

-

|

| 43 |

-

Deciding to fixate on that, my reasoning starter is:

|

| 44 |

-

|

| 45 |

-

```

|

| 46 |

-

<think>Okay, in this scenario, before responding I need to consider the writing style referenced in the prompt, which is

|

| 47 |

-

```

|

| 48 |

-

|

| 49 |

-

What this did for me, at least during testing is that it gave the reasoning a structure to follow across rerolls, seeking out that part of the prompt consistently.

|

| 50 |

-

See below:

|

| 51 |

-

|

| 52 |

-

|

| 53 |

-

|

| 54 |

-

|

| 55 |

-

|

| 56 |

-

|

| 57 |

-

But the responses were still varied, because the next few paragraphs after these delved into character details, so on and so forth. Might want to experiment and make your own thinking/reasoning starter that focuses on what you hope to get out of the responses for best results.

|

| 58 |

-

|

| 59 |

-

-- Severian

|

| 60 |

-

|

| 61 |

-

## Thank you!

|

| 62 |

-

|

| 63 |

-

Big thanks to the folks in the trashpanda-org discord for testing and sending over some logs!

|

| 64 |

-

|

| 65 |

-

## Reviews

|

| 66 |

-

|

| 67 |

-

To follow.

|

| 68 |

-

|

| 69 |

-

## Just us having fun, don't mind it

|

| 70 |

-

|

| 71 |

-

|

| 72 |

-

|

| 73 |

-

## Some logs

|

| 74 |

-

|

| 75 |

-

To follow.

|

| 76 |

-

|

| 77 |

-

## Merge Details

|

| 78 |

-

### Merge Method

|

| 79 |

-

|

| 80 |

-

This model was merged using the [TIES](https://arxiv.org/abs/2306.01708) merge method using [Qwen/Qwen2.5-32B](https://huggingface.co/Qwen/Qwen2.5-32B) as a base.

|

| 81 |

-

|

| 82 |

-

### Models Merged

|

| 83 |

-

|

| 84 |

-

The following models were included in the merge:

|

| 85 |

-

* [trashpanda-org/Qwen2.5-32B-Marigold-v0](https://huggingface.co/trashpanda-org/Qwen2.5-32B-Marigold-v0)

|

| 86 |

-

* [Qwen/QwQ-32B](https://huggingface.co/Qwen/QwQ-32B)

|

| 87 |

-

* [trashpanda-org/Qwen2.5-32B-Marigold-v0-exp](https://huggingface.co/trashpanda-org/Qwen2.5-32B-Marigold-v0-exp)

|

| 88 |

-

|

| 89 |

-

### Configuration

|

| 90 |

-

|

| 91 |

-

The following YAML configuration was used to produce this model:

|

| 92 |

-

|

| 93 |

-

```yaml

|

| 94 |

-

models:

|

| 95 |

-

- model: trashpanda-org/Qwen2.5-32B-Marigold-v0-exp

|

| 96 |

-

parameters:

|

| 97 |

-

weight: 1

|

| 98 |

-

density: 1

|

| 99 |

-

- model: trashpanda-org/Qwen2.5-32B-Marigold-v0

|

| 100 |

-

parameters:

|

| 101 |

-

weight: 1

|

| 102 |

-

density: 1

|

| 103 |

-

- model: Qwen/QwQ-32B

|

| 104 |

-

parameters:

|

| 105 |

-

weight: 0.9

|

| 106 |

-

density: 0.9

|

| 107 |

-

merge_method: ties

|

| 108 |

-

base_model: Qwen/Qwen2.5-32B

|

| 109 |

-

parameters:

|

| 110 |

-

weight: 0.9

|

| 111 |

-

density: 0.9

|

| 112 |

-

normalize: true

|

| 113 |

-

int8_mask: true

|

| 114 |

-

tokenizer_source: Qwen/Qwen2.5-32B-Instruct

|

| 115 |

-

dtype: bfloat16

|

| 116 |

-

|

| 117 |

-

|

| 118 |

-

```

|

|

|

|

| 1 |

---

|

| 2 |

base_model:

|

| 3 |

- trashpanda-org/QwQ-32B-Snowdrop-v0

|

|

|

|

|

|

|

|

|

|

|

|

|

| 4 |

library_name: transformers

|

| 5 |

+

base_model_relation: quantized

|

| 6 |

tags:

|

| 7 |

- mergekit

|

| 8 |

- mergekitty

|

| 9 |

- merge

|

| 10 |

|

| 11 |

---

|

|

|

|

| 12 |

|

| 13 |

+

EXL2 Quants by FrenzyBiscuit.

|

| 14 |

|

| 15 |

+

This model is 4.0 BPW EXL2.
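The removed card recommends the ChatML template together with a `<think>` reasoning starter. As a minimal sketch, assuming the standard ChatML markers `<|im_start|>` and `<|im_end|>`, this is roughly how a raw prompt with that starter prefilled into the assistant turn could be assembled. `build_chatml_prompt` is a hypothetical helper; front-ends such as SillyTavern normally build this string themselves.

```python
from typing import List, Tuple


def build_chatml_prompt(system: str, turns: List[Tuple[str, str]], prefill: str = "") -> str:
    """Render a ChatML prompt and leave the assistant turn open (hypothetical helper).

    turns: (role, content) pairs, where role is "user" or "assistant".
    prefill: text placed at the start of the assistant turn, e.g. a reasoning
    starter; the model continues generating from the end of it.
    """
    parts = [f"<|im_start|>system\n{system}<|im_end|>\n"]
    for role, content in turns:
        parts.append(f"<|im_start|>{role}\n{content}<|im_end|>\n")
    # Open the assistant turn without closing it, so generation continues the prefill.
    parts.append(f"<|im_start|>assistant\n{prefill}")
    return "".join(parts)


# The reasoning starter quoted in the removed card, kept verbatim.
STARTER = (
    "<think>Okay, in this scenario, before responding I need to consider "
    "the writing style referenced in the prompt, which is"
)

prompt = build_chatml_prompt(
    system="Style Preference: Encourage the usage of a Japanese light novel writing style.",
    turns=[("user", "Hello!")],
    prefill=STARTER,
)
print(prompt)
```

Because the starter ends mid-sentence, the model's first generated tokens complete that sentence, which is what steers the reasoning toward the style line of the prompt.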

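Two of the samplers listed in the removed card, temperature 0.9 and min_p 0.05, can be illustrated on a toy logit vector. This is a plain-Python sketch, not any backend's implementation. It assumes temperature is applied before min_p (sampler order is configurable in many backends) and leaves out top_a, TFS, repetition penalty and DRY. The helper names `min_p_filter` and `sample` are hypothetical.

```python
import math
import random
from typing import List, Optional


def min_p_filter(logits: List[float], temperature: float = 0.9, min_p: float = 0.05) -> List[float]:
    """Apply temperature, then min_p; return a renormalised probability vector.

    Tokens whose probability is below min_p * (probability of the top token)
    are set to 0, and the survivors are rescaled to sum to 1.
    """
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]

    threshold = min_p * max(probs)
    kept = [p if p >= threshold else 0.0 for p in probs]
    kept_total = sum(kept)
    return [p / kept_total for p in kept]


def sample(probs: List[float], rng: Optional[random.Random] = None) -> int:
    """Draw one token index according to the filtered distribution."""
    rng = rng or random.Random()
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


logits = [5.0, 4.0, 1.0, -2.0]
probs = min_p_filter(logits)
print([round(p, 3) for p in probs])
print(sample(probs, random.Random(0)))
```

Unlike a fixed top_k, the min_p cutoff scales with the top token's probability, so it prunes aggressively when the model is confident and keeps more candidates when the distribution is flat.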

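The removed merge section describes a TIES merge over Qwen/Qwen2.5-32B with per-model `weight` and `density`. The NumPy sketch below follows the three steps from the TIES paper on flat toy vectors: trim each task vector to its top-`density` fraction by magnitude, elect one sign per parameter, and average only the contributions that agree with it. Treating `normalize: true` as "divide by the summed weights of the agreeing models" is an assumption. mergekit works per tensor and may differ in details, and `trim` and `ties_merge` are hypothetical helpers.

```python
import numpy as np


def trim(delta: np.ndarray, density: float) -> np.ndarray:
    """Keep roughly the top `density` fraction of entries by magnitude; zero the rest."""
    if density >= 1.0:
        return delta.copy()
    k = int(round(density * delta.size))
    if k == 0:
        return np.zeros_like(delta)
    thresh = np.sort(np.abs(delta))[-k]  # k-th largest magnitude (ties may keep a few extra)
    return np.where(np.abs(delta) >= thresh, delta, 0.0)


def ties_merge(base, finetuned, weights, densities, normalize=True):
    """Toy TIES merge of flat parameter vectors (hypothetical helper)."""
    base = np.asarray(base, dtype=np.float64)
    # 1. Trim: task vector = fine-tuned minus base, sparsified by density.
    deltas = np.stack([trim(np.asarray(ft, dtype=np.float64) - base, d)
                       for ft, d in zip(finetuned, densities)])
    w = np.asarray(weights, dtype=np.float64)[:, None]
    weighted = deltas * w
    # 2. Elect: one sign per parameter from the summed weighted task vectors.
    elected = np.sign(weighted.sum(axis=0))
    # 3. Disjoint merge: keep only non-zero contributions that agree with that sign.
    agree = (np.sign(weighted) == elected) & (weighted != 0)
    merged_delta = (weighted * agree).sum(axis=0)
    if normalize:
        # Assumption: normalise by the total weight of the agreeing models.
        divisor = (w * agree).sum(axis=0)
        divisor[divisor == 0] = 1.0
        merged_delta = merged_delta / divisor
    return base + merged_delta


# Toy vectors standing in for Marigold-v0-exp, Marigold-v0 and QwQ-32B,
# using the weight/density values from the removed YAML config.
base = np.zeros(4)
finetuned = [[1.0, -2.0, 0.5, 0.0], [3.0, 1.0, -0.5, 0.0], [2.0, -1.0, 0.0, 4.0]]
merged = ties_merge(base, finetuned, weights=[1, 1, 0.9], densities=[1, 1, 0.9])
print(merged)
```

With `density: 1`, as set for both Marigold models, the trim step keeps every entry, so only sign election and weighted averaging shape their contribution. In the second toy parameter, for example, the positive delta from the second model is outvoted and dropped.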

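The new card describes a 4.0 BPW EXL2 quant. As rough arithmetic, weight storage is parameters × bits per weight ÷ 8 bytes. This sketch assumes about 32.5 billion parameters, the published figure for Qwen2.5-32B. It treats 4.0 BPW as an average over all weights and ignores the KV cache, activations and any layers stored at a different precision, so real VRAM use will be higher. `weight_bytes` is a hypothetical helper.

```python
def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Bytes needed for the weights alone at an average bit rate."""
    return n_params * bits_per_weight / 8


N_PARAMS = 32.5e9  # assumption: published parameter count for Qwen2.5-32B

# Compare the 4.0 BPW quant with the bfloat16 dtype used for the merge.
for label, bpw in (("EXL2 4.0 BPW", 4.0), ("bfloat16", 16.0)):
    size = weight_bytes(N_PARAMS, bpw)
    print(f"{label}: ~{size / 1e9:.1f} GB ({size / 2**30:.1f} GiB) of weights")
```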