Upload 15 files

- .gitattributes +1 -0

- README.md +190 -0

- added_tokens.json +24 -0

- config.json +40 -0

- mergekitty_config.yml +23 -0

- merges.txt +0 -0

- model-00001-of-00003.safetensors +3 -0

- model-00002-of-00003.safetensors +3 -0

- model-00003-of-00003.safetensors +3 -0

- model.safetensors.index.json +0 -0

- quantization_config.json +0 -0

- special_tokens_map.json +31 -0

- tokenizer.json +3 -0

- tokenizer_config.json +208 -0

- vocab.json +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

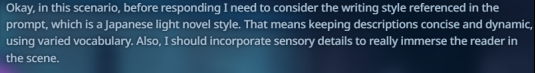

|

| 36 |

+

tokenizer.json filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,190 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

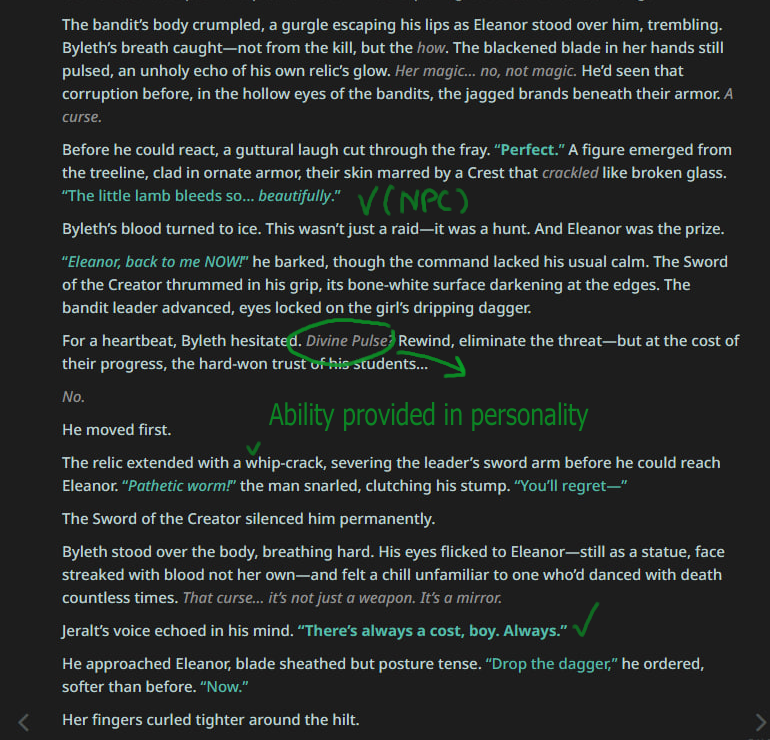

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

base_model:

|

| 3 |

+

- trashpanda-org/QwQ-32B-Snowdrop-v0

|

| 4 |

+

base_model_relation: quantized

|

| 5 |

+

library_name: transformers

|

| 6 |

+

tags:

|

| 7 |

+

- mergekit

|

| 8 |

+

- mergekitty

|

| 9 |

+

- merge

|

| 10 |

+

---

|

| 11 |

+

## Quantized using the default exllamav3 (0.0.1) quantization process.

|

| 12 |

+

|

| 13 |

+

- Original model: https://huggingface.co/trashpanda-org/QwQ-32B-Snowdrop-v0

|

| 14 |

+

- exllamav3: https://github.com/turboderp-org/exllamav3

|

| 15 |

+

---

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

<sup>QwQwQwQwQwQ and Marigold met at a party and hit it off...</sup>

|

| 19 |

+

<p><b>Has's notes</b>: it's actually pretty damn good?!</p>

|

| 20 |

+

|

| 21 |

+

<p><b>Severian's notes</b>: R1 at home for RP, literally. Able to handle my cards with gimmicks and subtle tricks in them. With a good reasoning starter+prompt, I'm getting consistently structured responses that still vary a good amount across rerolls. Char/scenario portrayal is good despite my focus on writing style, and lorebooks are properly referenced at times. Slop doesn't seem to be too much of an issue with thinking enabled. User impersonation shows up only rarely. Prose is refreshing if you take advantage of what I did (writing style fixation). I know I said Marigold would be my daily driver, but this one is that now, it's that good.</p>

|

| 22 |

+

|

| 23 |

+

## Recommended settings

|

| 24 |

+

|

| 25 |

+

<p><b>Context/instruct template</b>: ChatML. <s>Was definitely not tested with ChatML instruct and Mistral v7 template, nuh-uh.</s></p>

|

| 26 |

+

|

| 27 |

+

<p><b>Samplers</b>: temperature at 0.9, min_p at 0.05, top_a at 0.3, TFS at 0.75, repetition_penalty at 1.03, DRY if you have access to it.</p>
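To give a rough feel for what the `min_p` setting above does, here is a minimal sketch in plain Python. The function name `min_p_filter` and the toy logits are assumptions made up for illustration; real backends apply this inside their own sampler chain, and the order of temperature and min_p differs between them.

```python
import math

def min_p_filter(logits, temperature=0.9, min_p=0.05):
    """Apply temperature, then drop tokens whose probability is below
    min_p times the probability of the most likely token."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract peak for stability
    total = sum(exps)
    probs = [e / total for e in exps]
    cutoff = min_p * max(probs)
    kept = [p if p >= cutoff else 0.0 for p in probs]
    norm = sum(kept)
    return [p / norm for p in kept]  # renormalise the surviving tokens

toy_logits = [5.0, 4.0, 1.0, -3.0]  # hypothetical values, not model output
filtered = min_p_filter(toy_logits)
```

With these toy logits the two unlikely tokens fall under the cutoff and get zero probability, while the two strong candidates stay in play.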

|

| 28 |

+

|

| 29 |

+

A virt-io derivative prompt worked best during our testing, but feel free to use what you like.

|

| 30 |

+

|

| 31 |

+

Master import for ST: [https://files.catbox.moe/b6nwbc.json](https://files.catbox.moe/b6nwbc.json)

|

| 32 |

+

|

| 33 |

+

## Reasoning

|

| 34 |

+

|

| 35 |

+

Feel free to test whichever reasoning setup you're most comfortable with, but here's a recommendation from me. My prompt has a line that says:

|

| 36 |

+

|

| 37 |

+

```

|

| 38 |

+

Style Preference: Encourage the usage of a Japanese light novel writing style.

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

Deciding to fixate on that, my reasoning starter is:

|

| 42 |

+

|

| 43 |

+

```

|

| 44 |

+

<think>Okay, in this scenario, before responding I need to consider the writing style referenced in the prompt, which is

|

| 45 |

+

```

|

| 46 |

+

|

| 47 |

+

What this did for me, at least during testing, was give the reasoning a structure to follow across rerolls, seeking out that part of the prompt consistently.

|

| 48 |

+

See below:

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

But the responses were still varied, because the next few paragraphs after these delved into character details and so on. For best results, you might want to experiment and write your own thinking/reasoning starter that focuses on what you hope to get out of the responses.

|

| 56 |

+

|

| 57 |

+

— Severian
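For anyone wiring this up outside a frontend, here is a minimal sketch of pre-filling the assistant turn with the reasoning starter in a ChatML prompt. The helper name `build_chatml_prompt` and the user turn are hypothetical; the starter and the style line are the ones quoted above, and the layout follows the ChatML tokens from the recommended template.

```python
REASONING_STARTER = (
    "<think>Okay, in this scenario, before responding I need to consider "
    "the writing style referenced in the prompt, which is"
)

def build_chatml_prompt(system, turns, starter=REASONING_STARTER):
    """Render (role, text) turns as ChatML and leave the assistant turn
    open, pre-filled with the reasoning starter."""
    parts = [f"<|im_start|>system\n{system}<|im_end|>\n"]
    for role, text in turns:
        parts.append(f"<|im_start|>{role}\n{text}<|im_end|>\n")
    # No <|im_end|> here: the model continues from the starter text.
    parts.append(f"<|im_start|>assistant\n{starter}")
    return "".join(parts)

prompt = build_chatml_prompt(
    "Style Preference: Encourage the usage of a Japanese light novel writing style.",
    [("user", "Hello there.")],  # hypothetical user turn
)
```

Sending `prompt` to a plain text-completion endpoint should make the model finish the sentence about the writing style before it reasons about anything else.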

|

| 58 |

+

|

| 59 |

+

## Thank you!

|

| 60 |

+

|

| 61 |

+

Big thanks to the folks in the trashpanda-org discord for testing and sending over some logs!

|

| 62 |

+

|

| 63 |

+

## Reviews

|

| 64 |

+

|

| 65 |

+

> PROS:

|

| 66 |

+

>

|

| 67 |

+

> In 10 swipes, had only two minor instances of speaking for {{user}}. (Can probably be fixed with a good prompt, though.)

|

| 68 |

+

>

|

| 69 |

+

> Creativity: 8/10 swipes provided unique text for 90% of the response, almost no cliché phrases.

|

| 70 |

+

>

|

| 71 |

+

> Takes personality of characters into account, sticking to it well. Even without a lorebook to support it was able to retain lore-specific terms and actually remember which meant which.

|

| 72 |

+

>

|

| 73 |

+

> NPCs: In 6/10 swipes NPC characters also partook in action, sticking to bits of information provided about them in opening message. Some of them even had their unique speech patterns. (Certain with a proper lorebook it would cook.)

|

| 74 |

+

>

|

| 75 |

+

> Unfiltered, graphic descriptions of fight scenes. Magic, physical attacks - everything was taken into account with no holding back.

|

| 76 |

+

>

|

| 77 |

+

> CONS:

|

| 78 |

+

>

|

| 79 |

+

> Some swipes were a bit OOC. Some swipes were bland, providing little to no input or any weight on the roleplay context.

|

| 80 |

+

>

|

| 81 |

+

> Out of all models I've tried recently, this one definitely has most potential. With proper prompting I think this beast would be genuinely one of the best models for unique scenarios.

|

| 82 |

+

|

| 83 |

+

— Sellvene

|

| 84 |

+

|

| 85 |

+

> It's one of the -maybe THE- best small thinking models right now. It sticks to character really well, slops are almost non-existent though they are still there of course, it proceeds with the story well and listens to the prompt. I LOVE R1 but I love snowdrop even more right now because answers feel more genuine and less aggressive compared to R1.

|

| 86 |

+

|

| 87 |

+

— Carmenta

|

| 88 |

+

|

| 89 |

+

> Writes better than GPT 4.5. Overall, I think censorship is fucking up more unhinged bots and it's too tame for my liking. Another thing I noticed is that, it's sticking too much to being "right" to the character and too afraid to go off the rails.

|

| 90 |

+

|

| 91 |

+

— Myscell

|

| 92 |

+

|

| 93 |

+

> I'm fainting, the character breakdown in its thinking is similar to what R1 does. Character handling looks amazing. Bro, if a merge is this good, then I'm looking forward to that QwQ finetune.

|

| 94 |

+

|

| 95 |

+

— Sam

|

| 96 |

+

|

| 97 |

+

> Negligible slop, no positivity bias which is good though. I like the model so far, R1 at home.

|

| 98 |

+

|

| 99 |

+

— Raihanbook

|

| 100 |

+

|

| 101 |

+

> Overall, I think this is a real solid model. CoT is great, listens to my prompt extremely well. Number 1 for reasoning, honestly. And the way it portrays the character and persona details? Perfect. Narration, perfect. I have very few complaints about this model, y'all cooked.

|

| 102 |

+

|

| 103 |

+

— Moothdragon

|

| 104 |

+

|

| 105 |

+

> On my end, positivity bias isn't really there 🤔 Character and scenario portrayal is good. The prose too, I like it. Between this and Marigold, I feel like I can lean into snowboard (I mean Snowdrop) more. For now though, it is still Marigold.

|

| 106 |

+

|

| 107 |

+

— Azula

|

| 108 |

+

|

| 109 |

+

> Honestly I am impressed and I like it.

|

| 110 |

+

|

| 111 |

+

— OMGWTFBBQ

|

| 112 |

+

|

| 113 |

+

> It's pretty damn good. Better than Mullein, I think.

|

| 114 |

+

|

| 115 |

+

— br

|

| 116 |

+

|

| 117 |

+

> So far, it fucking SLAPS. I don't think it's tried to pull POV once yet.

|

| 118 |

+

|

| 119 |

+

— Overloke

|

| 120 |

+

|

| 121 |

+

## Just us having fun, don't mind it

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

## Some logs

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

|

| 138 |

+

|

| 139 |

+

|

| 140 |

+

(After a session started with Gemini)

|

| 141 |

+

|

| 142 |

+

|

| 143 |

+

|

| 144 |

+

|

| 145 |

+

|

| 146 |

+

|

| 147 |

+

|

| 148 |

+

|

| 149 |

+

## Merge Details

|

| 150 |

+

### Merge Method

|

| 151 |

+

|

| 152 |

+

This model was merged using the [TIES](https://arxiv.org/abs/2306.01708) merge method using [Qwen/Qwen2.5-32B](https://huggingface.co/Qwen/Qwen2.5-32B) as a base.

|

| 153 |

+

|

| 154 |

+

### Models Merged

|

| 155 |

+

|

| 156 |

+

The following models were included in the merge:

|

| 157 |

+

* [trashpanda-org/Qwen2.5-32B-Marigold-v0](https://huggingface.co/trashpanda-org/Qwen2.5-32B-Marigold-v0)

|

| 158 |

+

* [Qwen/QwQ-32B](https://huggingface.co/Qwen/QwQ-32B)

|

| 159 |

+

* [trashpanda-org/Qwen2.5-32B-Marigold-v0-exp](https://huggingface.co/trashpanda-org/Qwen2.5-32B-Marigold-v0-exp)

|

| 160 |

+

|

| 161 |

+

### Configuration

|

| 162 |

+

|

| 163 |

+

The following YAML configuration was used to produce this model:

|

| 164 |

+

|

| 165 |

+

```yaml
models:
  - model: trashpanda-org/Qwen2.5-32B-Marigold-v0-exp
    parameters:
      weight: 1
      density: 1
  - model: trashpanda-org/Qwen2.5-32B-Marigold-v0
    parameters:
      weight: 1
      density: 1
  - model: Qwen/QwQ-32B
    parameters:
      weight: 0.9
      density: 0.9
merge_method: ties
base_model: Qwen/Qwen2.5-32B
parameters:
  weight: 0.9
  density: 0.9
  normalize: true
  int8_mask: true
tokenizer_source: Qwen/Qwen2.5-32B-Instruct
dtype: bfloat16
```
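To illustrate what `weight`, `density` and `normalize` do in a TIES merge, here is a toy sketch over flat lists of floats. It is an assumption-laden simplification (the function name `ties_merge` and the tiny inputs are hypothetical) and not the actual mergekit/mergekitty code, which works on full tensors.

```python
def ties_merge(base, models, weights, densities, normalize=True):
    """Toy TIES merge: trim each task vector, elect a sign per
    parameter, then combine only the deltas that agree with it."""
    deltas = []
    for params, weight, density in zip(models, weights, densities):
        delta = [p - b for p, b in zip(params, base)]
        keep = round(density * len(delta))
        # Trim: keep only the `keep` largest-magnitude entries.
        order = sorted(range(len(delta)), key=lambda i: abs(delta[i]), reverse=True)
        kept = set(order[:keep])
        deltas.append([weight * d if i in kept else 0.0 for i, d in enumerate(delta)])

    merged = []
    for i, b in enumerate(base):
        column = [d[i] for d in deltas]
        sign = 1.0 if sum(column) >= 0 else -1.0  # elect the dominant sign
        agree = [(v, w) for v, w in zip(column, weights) if v * sign > 0]
        total = sum(v for v, _ in agree)
        if normalize and agree:
            total /= sum(w for _, w in agree)  # divide by the agreeing weights
        merged.append(b + total)
    return merged

base = [0.0, 0.0]
models = [[1.0, 2.0], [1.0, -1.0]]  # hypothetical fine-tuned weights
merged = ties_merge(base, models, weights=[1.0, 1.0], densities=[1.0, 1.0])
```

In the second parameter the two models disagree on direction, so only the delta matching the elected sign survives instead of the two partially cancelling out.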

|

added_tokens.json

ADDED

|

@@ -0,0 +1,24 @@


|

| 1 |

+

{

|

| 2 |

+

"</tool_call>": 151658,

|

| 3 |

+

"<tool_call>": 151657,

|

| 4 |

+

"<|box_end|>": 151649,

|

| 5 |

+

"<|box_start|>": 151648,

|

| 6 |

+

"<|endoftext|>": 151643,

|

| 7 |

+

"<|file_sep|>": 151664,

|

| 8 |

+

"<|fim_middle|>": 151660,

|

| 9 |

+

"<|fim_pad|>": 151662,

|

| 10 |

+

"<|fim_prefix|>": 151659,

|

| 11 |

+

"<|fim_suffix|>": 151661,

|

| 12 |

+

"<|im_end|>": 151645,

|

| 13 |

+

"<|im_start|>": 151644,

|

| 14 |

+

"<|image_pad|>": 151655,

|

| 15 |

+

"<|object_ref_end|>": 151647,

|

| 16 |

+

"<|object_ref_start|>": 151646,

|

| 17 |

+

"<|quad_end|>": 151651,

|

| 18 |

+

"<|quad_start|>": 151650,

|

| 19 |

+

"<|repo_name|>": 151663,

|

| 20 |

+

"<|video_pad|>": 151656,

|

| 21 |

+

"<|vision_end|>": 151653,

|

| 22 |

+

"<|vision_pad|>": 151654,

|

| 23 |

+

"<|vision_start|>": 151652

|

| 24 |

+

}
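A quick sanity check on the IDs above, written as a small Python sketch: the added tokens should form one contiguous block, and the next free ID should equal the `vocab_size` of 151665 that appears in config.json. The helper name `check_contiguous` is an assumption for illustration.

```python
import json

# Contents of added_tokens.json as shown in the diff above.
ADDED_TOKENS = json.loads("""{
  "</tool_call>": 151658, "<tool_call>": 151657,
  "<|box_end|>": 151649, "<|box_start|>": 151648,
  "<|endoftext|>": 151643, "<|file_sep|>": 151664,
  "<|fim_middle|>": 151660, "<|fim_pad|>": 151662,
  "<|fim_prefix|>": 151659, "<|fim_suffix|>": 151661,
  "<|im_end|>": 151645, "<|im_start|>": 151644,
  "<|image_pad|>": 151655, "<|object_ref_end|>": 151647,
  "<|object_ref_start|>": 151646, "<|quad_end|>": 151651,
  "<|quad_start|>": 151650, "<|repo_name|>": 151663,
  "<|video_pad|>": 151656, "<|vision_end|>": 151653,
  "<|vision_pad|>": 151654, "<|vision_start|>": 151652
}""")

def check_contiguous(tokens):
    """Return (is_contiguous, first_id, last_id) for a token-to-id map."""
    ids = sorted(tokens.values())
    is_contiguous = ids == list(range(ids[0], ids[-1] + 1))
    return is_contiguous, ids[0], ids[-1]

contiguous, first_id, last_id = check_contiguous(ADDED_TOKENS)
next_free_id = last_id + 1  # compare against vocab_size in config.json
```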

|

config.json

ADDED

|

@@ -0,0 +1,40 @@


|

| 1 |

+

{

|

| 2 |

+

"_name_or_path": "Qwen/Qwen2.5-32B",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"Qwen2ForCausalLM"

|

| 5 |

+

],

|

| 6 |

+

"attention_dropout": 0.0,

|

| 7 |

+

"bos_token_id": 151643,

|

| 8 |

+

"eos_token_id": 151643,

|

| 9 |

+

"hidden_act": "silu",

|

| 10 |

+

"hidden_size": 5120,

|

| 11 |

+

"initializer_range": 0.02,

|

| 12 |

+

"intermediate_size": 27648,

|

| 13 |

+

"max_position_embeddings": 131072,

|

| 14 |

+

"max_window_layers": 64,

|

| 15 |

+

"model_type": "qwen2",

|

| 16 |

+

"num_attention_heads": 40,

|

| 17 |

+

"num_hidden_layers": 64,

|

| 18 |

+

"num_key_value_heads": 8,

|

| 19 |

+

"rms_norm_eps": 1e-05,

|

| 20 |

+

"rope_scaling": null,

|

| 21 |

+

"rope_theta": 1000000.0,

|

| 22 |

+

"sliding_window": 131072,

|

| 23 |

+

"tie_word_embeddings": false,

|

| 24 |

+

"torch_dtype": "bfloat16",

|

| 25 |

+

"transformers_version": "4.49.0",

|

| 26 |

+

"use_cache": true,

|

| 27 |

+

"use_sliding_window": false,

|

| 28 |

+

"vocab_size": 151665,

|

| 29 |

+

"quantization_config": {

|

| 30 |

+

"quant_method": "exl3",

|

| 31 |

+

"version": "0.0.1",

|

| 32 |

+

"bits": 4.0,

|

| 33 |

+

"head_bits": 6,

|

| 34 |

+

"calibration": {

|

| 35 |

+

"rows": 100,

|

| 36 |

+

"cols": 2048

|

| 37 |

+

},

|

| 38 |

+

"out_scales": "auto"

|

| 39 |

+

}

|

| 40 |

+

}
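As a back-of-the-envelope check on the `bits` and `head_bits` values above, the sketch below counts the linear-layer weights implied by this config and converts them to bytes. It assumes the usual Qwen2 layer layout (q/k/v/o attention projections plus gate/up/down MLP projections) and deliberately ignores embeddings, norms, biases and quantization metadata, so the real files will be larger. The function names are hypothetical.

```python
# Values copied from config.json above.
CONFIG = {
    "hidden_size": 5120,
    "intermediate_size": 27648,
    "num_attention_heads": 40,
    "num_hidden_layers": 64,
    "num_key_value_heads": 8,
    "vocab_size": 151665,
}
BITS, HEAD_BITS = 4.0, 6  # from quantization_config

def linear_weights_per_layer(cfg):
    """Count the weights in one decoder layer's linear projections."""
    h = cfg["hidden_size"]
    head_dim = h // cfg["num_attention_heads"]
    kv = head_dim * cfg["num_key_value_heads"]
    attention = h * h + 2 * h * kv + h * h   # q, k, v, o projections
    mlp = 3 * h * cfg["intermediate_size"]   # gate, up, down projections
    return attention + mlp

def estimated_bytes(cfg, bits=BITS, head_bits=HEAD_BITS):
    """Rough size of the quantized linear layers plus the output head."""
    body = linear_weights_per_layer(cfg) * cfg["num_hidden_layers"] * bits / 8
    head = cfg["vocab_size"] * cfg["hidden_size"] * head_bits / 8
    return int(body + head)

per_layer = linear_weights_per_layer(CONFIG)
approx_size = estimated_bytes(CONFIG)
```

The estimate lands somewhat below the combined size of the three safetensors shards, which is what you would expect given the parts left out.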

|

mergekitty_config.yml

ADDED

|

@@ -0,0 +1,23 @@


|

| 1 |

+

models:
  - model: trashpanda-org/Qwen2.5-32B-Marigold-v0-exp
    parameters:
      weight: 1
      density: 1
  - model: trashpanda-org/Qwen2.5-32B-Marigold-v0
    parameters:
      weight: 1
      density: 1
  - model: Qwen/QwQ-32B
    parameters:
      weight: 0.9
      density: 0.9
merge_method: ties
base_model: Qwen/Qwen2.5-32B
parameters:
  weight: 0.9
  density: 0.9
  normalize: true
  int8_mask: true
tokenizer_source: Qwen/Qwen2.5-32B-Instruct
dtype: bfloat16

|

| 23 |

+

|

merges.txt

ADDED

|

The diff for this file is too large to render.


|

|

|

model-00001-of-00003.safetensors

ADDED

|

@@ -0,0 +1,3 @@


|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f86df203f51ba095cc4f6e64eb530457ae096cba38dddb328c01b629b41a04d6

|

| 3 |

+

size 8387675040

|

model-00002-of-00003.safetensors

ADDED

|

@@ -0,0 +1,3 @@


|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:348084430b03fa647c6235e4d0ddbaf4b920f82ea2b7c58c8623a8f4b3b85706

|

| 3 |

+

size 8543277872

|

model-00003-of-00003.safetensors

ADDED

|

@@ -0,0 +1,3 @@


|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:0aa5e95c129dc860b36daf8b4c4f3996fa6c522e00de24205c131b1c5aabd4d1

|

| 3 |

+

size 826868976
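The three shard entries above are Git LFS pointer files rather than the weights themselves. As a minimal sketch, the snippet below parses those pointers and adds up the `size` fields to get the total download size; the helper name `parse_lfs_pointer` is an assumption for illustration.

```python
def parse_lfs_pointer(text):
    """Parse a Git LFS pointer file into its version, hash and size."""
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }

# Pointer contents copied from the three safetensors diffs above.
POINTERS = [
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:f86df203f51ba095cc4f6e64eb530457ae096cba38dddb328c01b629b41a04d6\n"
    "size 8387675040\n",
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:348084430b03fa647c6235e4d0ddbaf4b920f82ea2b7c58c8623a8f4b3b85706\n"
    "size 8543277872\n",
    "version https://git-lfs.github.com/spec/v1\n"
    "oid sha256:0aa5e95c129dc860b36daf8b4c4f3996fa6c522e00de24205c131b1c5aabd4d1\n"
    "size 826868976\n",
]

parsed = [parse_lfs_pointer(p) for p in POINTERS]
total_bytes = sum(p["size"] for p in parsed)
```

Dividing `total_bytes` by `1024 ** 3` gives the size in GiB, which is handy when checking whether the quant fits on a given GPU.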

|

model.safetensors.index.json

ADDED

|

The diff for this file is too large to render.


|

|

|

quantization_config.json

ADDED

|

The diff for this file is too large to render.


|

|

|

special_tokens_map.json

ADDED

|

@@ -0,0 +1,31 @@


|

| 1 |

+

{

|

| 2 |

+

"additional_special_tokens": [

|

| 3 |

+

"<|im_start|>",

|

| 4 |

+

"<|im_end|>",

|

| 5 |

+

"<|object_ref_start|>",

|

| 6 |

+

"<|object_ref_end|>",

|

| 7 |

+

"<|box_start|>",

|

| 8 |

+

"<|box_end|>",

|

| 9 |

+

"<|quad_start|>",

|

| 10 |

+

"<|quad_end|>",

|

| 11 |

+

"<|vision_start|>",

|

| 12 |

+

"<|vision_end|>",

|

| 13 |

+

"<|vision_pad|>",

|

| 14 |

+

"<|image_pad|>",

|

| 15 |

+

"<|video_pad|>"

|

| 16 |

+

],

|

| 17 |

+

"eos_token": {

|

| 18 |

+

"content": "<|im_end|>",

|

| 19 |

+

"lstrip": false,

|

| 20 |

+

"normalized": false,

|

| 21 |

+

"rstrip": false,

|

| 22 |

+

"single_word": false

|

| 23 |

+

},

|

| 24 |

+

"pad_token": {

|

| 25 |

+

"content": "<|endoftext|>",

|

| 26 |

+

"lstrip": false,

|

| 27 |

+

"normalized": false,

|

| 28 |

+

"rstrip": false,

|

| 29 |

+

"single_word": false

|

| 30 |

+

}

|

| 31 |

+

}

|

tokenizer.json

ADDED

|

@@ -0,0 +1,3 @@


|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:c0382117ea329cdf097041132f6d735924b697924d6f6fc3945713e96ce87539

|

| 3 |

+

size 7031645

|

tokenizer_config.json

ADDED

|

@@ -0,0 +1,208 @@


|

| 1 |

+

{

|

| 2 |

+

"add_bos_token": false,

|

| 3 |

+

"add_prefix_space": false,

|

| 4 |

+

"added_tokens_decoder": {

|

| 5 |

+

"151643": {

|

| 6 |

+

"content": "<|endoftext|>",

|

| 7 |

+

"lstrip": false,

|

| 8 |

+

"normalized": false,

|

| 9 |

+

"rstrip": false,

|

| 10 |

+

"single_word": false,

|

| 11 |

+

"special": true

|

| 12 |

+

},

|

| 13 |

+

"151644": {

|

| 14 |

+

"content": "<|im_start|>",

|

| 15 |

+

"lstrip": false,

|

| 16 |

+

"normalized": false,

|

| 17 |

+

"rstrip": false,

|

| 18 |

+

"single_word": false,

|

| 19 |

+

"special": true

|

| 20 |

+

},

|

| 21 |

+

"151645": {

|

| 22 |

+

"content": "<|im_end|>",

|

| 23 |

+

"lstrip": false,

|

| 24 |

+

"normalized": false,

|

| 25 |

+

"rstrip": false,

|

| 26 |

+

"single_word": false,

|

| 27 |

+

"special": true

|

| 28 |

+

},

|

| 29 |

+

"151646": {

|

| 30 |

+

"content": "<|object_ref_start|>",

|

| 31 |

+

"lstrip": false,

|

| 32 |

+

"normalized": false,

|

| 33 |

+

"rstrip": false,

|

| 34 |

+

"single_word": false,

|

| 35 |

+

"special": true

|

| 36 |

+

},

|

| 37 |

+

"151647": {

|

| 38 |

+

"content": "<|object_ref_end|>",

|

| 39 |

+

"lstrip": false,

|

| 40 |

+

"normalized": false,

|

| 41 |

+

"rstrip": false,

|

| 42 |

+

"single_word": false,

|

| 43 |

+

"special": true

|

| 44 |

+

},

|

| 45 |

+

"151648": {

|

| 46 |

+

"content": "<|box_start|>",

|

| 47 |

+

"lstrip": false,

|

| 48 |

+

"normalized": false,

|

| 49 |

+

"rstrip": false,

|

| 50 |

+

"single_word": false,

|

| 51 |

+

"special": true

|

| 52 |

+

},

|

| 53 |

+

"151649": {

|

| 54 |

+

"content": "<|box_end|>",

|

| 55 |

+

"lstrip": false,

|

| 56 |

+

"normalized": false,

|

| 57 |

+

"rstrip": false,

|

| 58 |

+

"single_word": false,

|

| 59 |

+

"special": true

|

| 60 |

+

},

|

| 61 |

+

"151650": {

|

| 62 |

+

"content": "<|quad_start|>",

|

| 63 |

+

"lstrip": false,

|

| 64 |

+

"normalized": false,

|

| 65 |

+

"rstrip": false,

|

| 66 |

+

"single_word": false,

|

| 67 |

+

"special": true

|

| 68 |

+

},

|

| 69 |

+

"151651": {

|

| 70 |

+

"content": "<|quad_end|>",

|

| 71 |

+

"lstrip": false,

|

| 72 |

+

"normalized": false,

|

| 73 |

+

"rstrip": false,

|

| 74 |

+

"single_word": false,

|

| 75 |

+

"special": true

|

| 76 |

+

},

|

| 77 |

+

"151652": {

|

| 78 |

+

"content": "<|vision_start|>",

|

| 79 |

+

"lstrip": false,

|

| 80 |

+

"normalized": false,

|

| 81 |

+

"rstrip": false,

|

| 82 |

+

"single_word": false,

|

| 83 |

+

"special": true

|

| 84 |

+

},

|

| 85 |

+

"151653": {

|

| 86 |

+

"content": "<|vision_end|>",

|

| 87 |

+

"lstrip": false,

|

| 88 |

+

"normalized": false,

|

| 89 |

+

"rstrip": false,

|

| 90 |

+

"single_word": false,

|

| 91 |

+

"special": true

|

| 92 |

+

},

|

| 93 |

+

"151654": {

|

| 94 |

+

"content": "<|vision_pad|>",

|

| 95 |

+

"lstrip": false,

|

| 96 |

+

"normalized": false,

|

| 97 |

+

"rstrip": false,

|

| 98 |

+

"single_word": false,

|

| 99 |

+

"special": true

|

| 100 |

+

},

|

| 101 |

+

"151655": {

|

| 102 |

+

"content": "<|image_pad|>",

|

| 103 |

+

"lstrip": false,

|

| 104 |

+

"normalized": false,

|

| 105 |

+

"rstrip": false,

|

| 106 |

+

"single_word": false,

|

| 107 |

+

"special": true

|

| 108 |

+

},

|

| 109 |

+

"151656": {

|

| 110 |

+

"content": "<|video_pad|>",

|

| 111 |

+

"lstrip": false,

|

| 112 |

+

"normalized": false,

|

| 113 |

+

"rstrip": false,

|

| 114 |

+

"single_word": false,

|

| 115 |

+

"special": true

|

| 116 |

+

},

|

| 117 |

+

"151657": {

|

| 118 |

+

"content": "<tool_call>",

|

| 119 |

+

"lstrip": false,

|

| 120 |

+

"normalized": false,

|

| 121 |

+

"rstrip": false,

|

| 122 |

+

"single_word": false,

|

| 123 |

+

"special": false

|

| 124 |

+

},

|

| 125 |

+

"151658": {

|

| 126 |

+

"content": "</tool_call>",

|

| 127 |

+

"lstrip": false,

|

| 128 |

+

"normalized": false,

|

| 129 |

+

"rstrip": false,

|

| 130 |

+

"single_word": false,

|

| 131 |

+

"special": false

|

| 132 |

+

},

|

| 133 |

+

"151659": {

|

| 134 |

+

"content": "<|fim_prefix|>",

|

| 135 |

+

"lstrip": false,

|

| 136 |

+

"normalized": false,

|

| 137 |

+

"rstrip": false,

|

| 138 |

+

"single_word": false,

|

| 139 |

+

"special": false

|

| 140 |

+

},

|

| 141 |

+

"151660": {

|

| 142 |

+

"content": "<|fim_middle|>",

|

| 143 |

+

"lstrip": false,

|

| 144 |

+

"normalized": false,

|

| 145 |

+

"rstrip": false,

|

| 146 |

+

"single_word": false,

|

| 147 |

+

"special": false

|

| 148 |

+

},

|

| 149 |

+

"151661": {

|

| 150 |

+

"content": "<|fim_suffix|>",

|

| 151 |

+

"lstrip": false,

|

| 152 |

+

"normalized": false,

|

| 153 |

+

"rstrip": false,

|

| 154 |

+

"single_word": false,

|

| 155 |

+

"special": false

|

| 156 |

+

},

|

| 157 |

+

"151662": {

|

| 158 |

+

"content": "<|fim_pad|>",

|

| 159 |

+

"lstrip": false,

|

| 160 |

+

"normalized": false,

|

| 161 |

+

"rstrip": false,

|

| 162 |

+

"single_word": false,

|

| 163 |

+

"special": false

|

| 164 |

+

},

|

| 165 |

+

"151663": {

|

| 166 |

+

"content": "<|repo_name|>",

|

| 167 |

+

"lstrip": false,

|

| 168 |

+

"normalized": false,

|

| 169 |

+

"rstrip": false,

|

| 170 |

+

"single_word": false,

|

| 171 |

+

"special": false

|

| 172 |

+

},

|

| 173 |

+

"151664": {

|

| 174 |

+

"content": "<|file_sep|>",

|

| 175 |

+

"lstrip": false,

|

| 176 |

+

"normalized": false,

|

| 177 |

+

"rstrip": false,

|

| 178 |

+

"single_word": false,

|

| 179 |

+

"special": false

|

| 180 |

+

}

|

| 181 |

+

},

|

| 182 |

+

"additional_special_tokens": [

|

| 183 |

+

"<|im_start|>",

|

| 184 |

+

"<|im_end|>",

|

| 185 |

+

"<|object_ref_start|>",

|

| 186 |

+

"<|object_ref_end|>",

|

| 187 |

+

"<|box_start|>",

|

| 188 |

+

"<|box_end|>",

|

| 189 |

+

"<|quad_start|>",

|

| 190 |

+

"<|quad_end|>",

|

| 191 |

+

"<|vision_start|>",

|

| 192 |

+

"<|vision_end|>",

|

| 193 |

+

"<|vision_pad|>",

|

| 194 |

+

"<|image_pad|>",

|

| 195 |

+

"<|video_pad|>"

|

| 196 |

+

],

|

| 197 |

+

"bos_token": null,

|

| 198 |

+

"chat_template": "{%- if tools %}\n {{- '<|im_start|>system\\n' }}\n {%- if messages[0]['role'] == 'system' %}\n {{- messages[0]['content'] }}\n {%- else %}\n {{- 'You are Qwen, created by Alibaba Cloud. You are a helpful assistant.' }}\n {%- endif %}\n {{- \"\\n\\n# Tools\\n\\nYou may call one or more functions to assist with the user query.\\n\\nYou are provided with function signatures within <tools></tools> XML tags:\\n<tools>\" }}\n {%- for tool in tools %}\n {{- \"\\n\" }}\n {{- tool | tojson }}\n {%- endfor %}\n {{- \"\\n</tools>\\n\\nFor each function call, return a json object with function name and arguments within <tool_call></tool_call> XML tags:\\n<tool_call>\\n{\\\"name\\\": <function-name>, \\\"arguments\\\": <args-json-object>}\\n</tool_call><|im_end|>\\n\" }}\n{%- else %}\n {%- if messages[0]['role'] == 'system' %}\n {{- '<|im_start|>system\\n' + messages[0]['content'] + '<|im_end|>\\n' }}\n {%- else %}\n {{- '<|im_start|>system\\nYou are Qwen, created by Alibaba Cloud. You are a helpful assistant.<|im_end|>\\n' }}\n {%- endif %}\n{%- endif %}\n{%- for message in messages %}\n {%- if (message.role == \"user\") or (message.role == \"system\" and not loop.first) or (message.role == \"assistant\" and not message.tool_calls) %}\n {{- '<|im_start|>' + message.role + '\\n' + message.content + '<|im_end|>' + '\\n' }}\n {%- elif message.role == \"assistant\" %}\n {{- '<|im_start|>' + message.role }}\n {%- if message.content %}\n {{- '\\n' + message.content }}\n {%- endif %}\n {%- for tool_call in message.tool_calls %}\n {%- if tool_call.function is defined %}\n {%- set tool_call = tool_call.function %}\n {%- endif %}\n {{- '\\n<tool_call>\\n{\"name\": \"' }}\n {{- tool_call.name }}\n {{- '\", \"arguments\": ' }}\n {{- tool_call.arguments | tojson }}\n {{- '}\\n</tool_call>' }}\n {%- endfor %}\n {{- '<|im_end|>\\n' }}\n {%- elif message.role == \"tool\" %}\n {%- if (loop.index0 == 0) or (messages[loop.index0 - 1].role != \"tool\") %}\n {{- '<|im_start|>user' }}\n {%- endif %}\n {{- '\\n<tool_response>\\n' }}\n {{- message.content }}\n {{- '\\n</tool_response>' }}\n {%- if loop.last or (messages[loop.index0 + 1].role != \"tool\") %}\n {{- '<|im_end|>\\n' }}\n {%- endif %}\n {%- endif %}\n{%- endfor %}\n{%- if add_generation_prompt %}\n {{- '<|im_start|>assistant\\n' }}\n{%- endif %}\n",

|

| 199 |

+

"clean_up_tokenization_spaces": false,

|

| 200 |

+

"eos_token": "<|im_end|>",

|

| 201 |

+

"errors": "replace",

|

| 202 |

+

"extra_special_tokens": {},

|

| 203 |

+

"model_max_length": 131072,

|

| 204 |

+

"pad_token": "<|endoftext|>",

|

| 205 |

+

"split_special_tokens": false,

|

| 206 |

+

"tokenizer_class": "Qwen2Tokenizer",

|

| 207 |

+

"unk_token": null

|

| 208 |

+

}

|

vocab.json

ADDED

|

The diff for this file is too large to render.


|

|

|