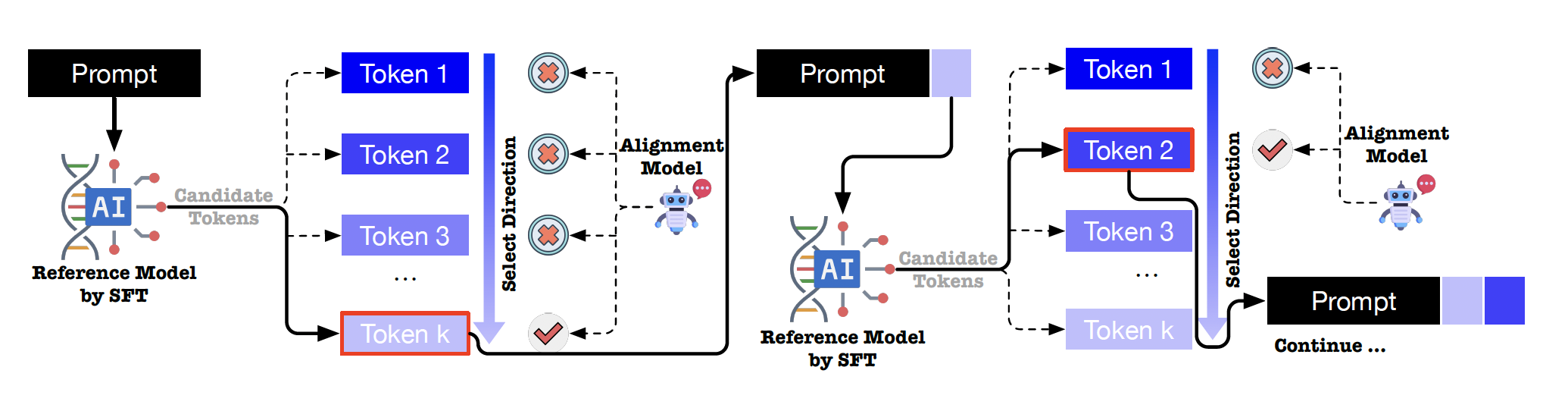

Performance improvements of MARA across PKUSafeRLHF, BeaverTails, and HarmfulQA datasets. Each entry shows the percentage improvement in preference rate achieved by applying MARA compared to using the original LLM alone.

Compatibility analysis of MARA, in which an alignment model trained with one LLM is aggregated with other inference LLMs. Each cell shows the percentage improvement in preference rate of our algorithm over the upstream model, i.e., the inference model used alone.

Performance comparison of MARA against RLHF, DPO, and Aligner, measured by percentage improvement in preference rate.
