Upload folder using huggingface_hub

- .ipynb_checkpoints/Granite3.2-2B-NF4-lora-FP16-Evaluation_Results-checkpoint.json +34 -0

- Granite3.2-2B-NF4-lora-FP16-Evaluation_Results.json +34 -0

- Granite3.2-2B-NF4-lora-FP16-Inference_Curve.png +0 -0

- Granite3.2-2B-NF4-lora-FP16-Latency_Histogram.png +0 -0

- Granite3.2-2B-NF4-lora-FP16-Memory_Histogram.png +0 -0

- Granite3.2-2B-NF4-lora-FP16-Memory_Usage_Curve.png +0 -0

.ipynb_checkpoints/Granite3.2-2B-NF4-lora-FP16-Evaluation_Results-checkpoint.json

ADDED

@@ -0,0 +1,34 @@
+{
+    "eval_loss:": 0.6800438622995038,
+    "perplexity:": 1.9739643129455233,
+    "performance_metrics:": {
+        "accuracy:": 0.9993324432576769,
+        "precision:": 1.0,
+        "recall:": 1.0,
+        "f1:": 1.0,
+        "bleu:": 0.9608755860087081,
+        "rouge:": {
+            "rouge1": 0.9785245035961412,
+            "rouge2": 0.9783954249371395,
+            "rougeL": 0.9785245035961412
+        },
+        "semantic_similarity_avg:": 0.9975973963737488
+    },
+    "mauve:": 0.8818151996410524,
+    "inference_performance:": {
+        "min_latency_ms": 105.39054870605469,
+        "max_latency_ms": 4774.695873260498,
+        "lower_quartile_ms": 108.52676630020142,
+        "median_latency_ms": 112.74242401123047,
+        "upper_quartile_ms": 2542.6074862480164,
+        "avg_latency_ms": 1218.470693907846,
+        "min_memory_gb": 0.13478469848632812,
+        "max_memory_gb": 0.13478469848632812,
+        "lower_quartile_gb": 0.13478469848632812,
+        "median_memory_gb": 0.13478469848632812,
+        "upper_quartile_gb": 0.13478469848632812,
+        "avg_memory_gb": 0.13478469848632812,
+        "model_load_memory_gb": 1.5763826370239258,
+        "avg_inference_memory_gb": 0.13478469848632812
+    }
+}
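The reported `perplexity:` value appears to be the exponential of `eval_loss:`. The sketch below checks that relationship using the two numbers from the file. It assumes the loss is a mean cross-entropy in nats, which the commit itself does not state.

```python
import math

# Values copied from the added JSON file.
eval_loss = 0.6800438622995038
reported_perplexity = 1.9739643129455233

# Assumption: eval_loss is a mean cross-entropy in nats,
# so perplexity would be exp(eval_loss).
recomputed_perplexity = math.exp(eval_loss)

# Compare with a loose tolerance to allow for floating-point rounding.
is_consistent = math.isclose(recomputed_perplexity, reported_perplexity, rel_tol=1e-6)
print(recomputed_perplexity, is_consistent)
```

If the check holds, the two fields carry the same information and only one of them needs to be tracked when comparing runs.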

Granite3.2-2B-NF4-lora-FP16-Evaluation_Results.json

ADDED

@@ -0,0 +1,34 @@
+{
+    "eval_loss:": 0.6800438622995038,
+    "perplexity:": 1.9739643129455233,
+    "performance_metrics:": {
+        "accuracy:": 0.9993324432576769,
+        "precision:": 1.0,
+        "recall:": 1.0,
+        "f1:": 1.0,
+        "bleu:": 0.9608755860087081,
+        "rouge:": {
+            "rouge1": 0.9785245035961412,
+            "rouge2": 0.9783954249371395,
+            "rougeL": 0.9785245035961412
+        },
+        "semantic_similarity_avg:": 0.9975973963737488
+    },
+    "mauve:": 0.8818151996410524,
+    "inference_performance:": {
+        "min_latency_ms": 105.39054870605469,
+        "max_latency_ms": 4774.695873260498,
+        "lower_quartile_ms": 108.52676630020142,
+        "median_latency_ms": 112.74242401123047,
+        "upper_quartile_ms": 2542.6074862480164,
+        "avg_latency_ms": 1218.470693907846,
+        "min_memory_gb": 0.13478469848632812,
+        "max_memory_gb": 0.13478469848632812,
+        "lower_quartile_gb": 0.13478469848632812,
+        "median_memory_gb": 0.13478469848632812,
+        "upper_quartile_gb": 0.13478469848632812,
+        "avg_memory_gb": 0.13478469848632812,
+        "model_load_memory_gb": 1.5763826370239258,
+        "avg_inference_memory_gb": 0.13478469848632812
+    }
+}
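Several top-level and nested keys in this file end with a colon inside the string (for example `"eval_loss:"`), so a consumer has to either use those exact names or normalize them. The sketch below parses a short excerpt of the file, strips the trailing colons with a hypothetical helper `strip_colons` (not part of the commit), and compares mean and median latency. The excerpt is embedded as a string so the example does not depend on a local file path.

```python
import json

# Excerpt of the added file; keys and values copied from the diff.
raw = """
{
  "eval_loss:": 0.6800438622995038,
  "inference_performance:": {
    "median_latency_ms": 112.74242401123047,
    "upper_quartile_ms": 2542.6074862480164,
    "avg_latency_ms": 1218.470693907846
  }
}
"""

def strip_colons(node):
    """Recursively drop a trailing ':' from dict keys (hypothetical helper)."""
    if isinstance(node, dict):
        return {key.rstrip(":"): strip_colons(value) for key, value in node.items()}
    return node

results = strip_colons(json.loads(raw))
perf = results["inference_performance"]

# A mean far above the median indicates a right-skewed latency distribution.
mean_to_median = perf["avg_latency_ms"] / perf["median_latency_ms"]
print(f"mean/median latency ratio: {mean_to_median:.1f}")
```

With these numbers the mean is roughly an order of magnitude above the median, and the upper quartile sits far above the median as well, which suggests that a sizeable share of requests is much slower than the typical one. The latency histogram added in this commit is the place to confirm that.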

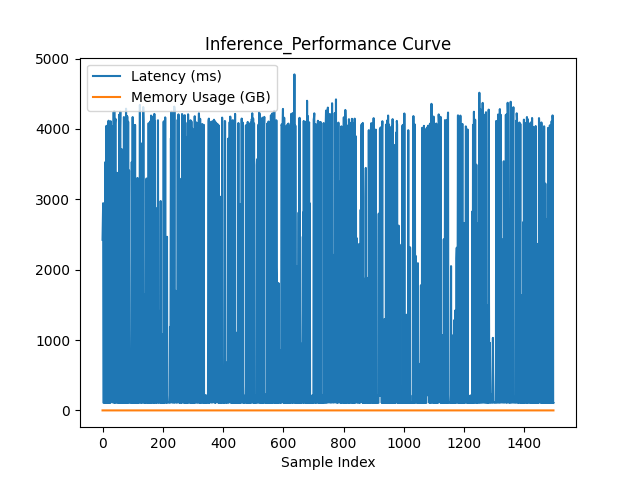

Granite3.2-2B-NF4-lora-FP16-Inference_Curve.png

ADDED


Granite3.2-2B-NF4-lora-FP16-Latency_Histogram.png

ADDED


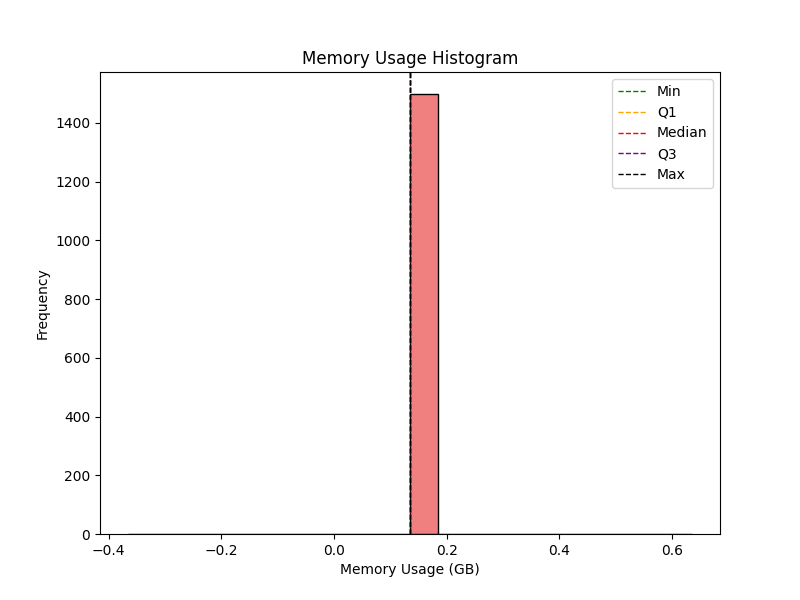

Granite3.2-2B-NF4-lora-FP16-Memory_Histogram.png

ADDED


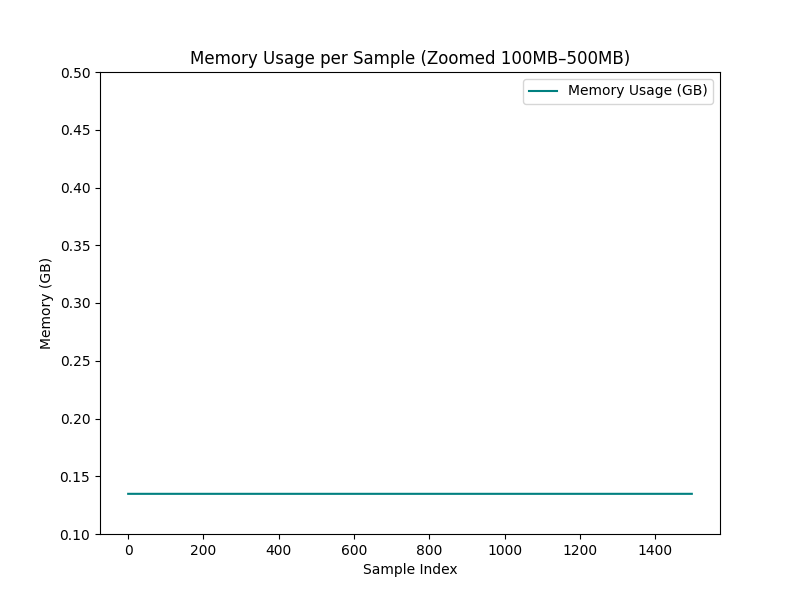

Granite3.2-2B-NF4-lora-FP16-Memory_Usage_Curve.png

ADDED
