Upload folder using huggingface_hub

- .ipynb_checkpoints/Granite3.2-2B-FP16-lora-FP16-Evaluation_Results-checkpoint.json +32 -0

- Granite3.2-2B-FP16-lora-FP16-Evaluation_Results.json +34 -0

- Granite3.2-2B-FP16-lora-FP16-Inference_Curve.png +0 -0

- Granite3.2-2B-FP16-lora-FP16-Latency_Histogram.png +0 -0

- Granite3.2-2B-FP16-lora-FP16-Memory_Histogram.png +0 -0

- Granite3.2-2B-FP16-lora-FP16-Memory_Usage_Curve.png +0 -0

.ipynb_checkpoints/Granite3.2-2B-FP16-lora-FP16-Evaluation_Results-checkpoint.json

ADDED

@@ -0,0 +1,32 @@
+{
+    "eval_loss": 0.628613162081992,
+    "perplexity": 1.8750084425057905,
+    "performance_metrics": {
+        "accuracy:": 0.9993324432576769,
+        "precision:": 1.0,
+        "recall:": 1.0,
+        "f1:": 1.0,
+        "bleu:": 0.9588057481855817,
+        "rouge:": {
+            "rouge1": 0.9774806284001962,
+            "rouge2": 0.9773617811911263,
+            "rougeL": 0.9774806284001962
+        },
+        "semantic_similarity_avg:": 0.9974465370178223
+    },
+    "mauve": 0.8804768654231001,
+    "inference_performance": {
+        "min_latency_ms": 59.4637393951416,
+        "max_latency_ms": 3621.332883834839,
+        "lower_quartile_ms": 61.145663261413574,
+        "median_latency_ms": 62.72470951080322,
+        "upper_quartile_ms": 2128.1622648239136,
+        "avg_latency_ms": 899.8617341266932,
+        "min_memory_mb": 0.0,
+        "max_memory_mb": 0.00439453125,
+        "lower_quartile_mb": 0.0,
+        "median_memory_mb": 0.0,
+        "upper_quartile_mb": 0.0,
+        "avg_memory_mb": 2.9335989652870494e-06
+    }
+}
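The `eval_loss` and `perplexity` values in the JSON above appear to be related by perplexity = exp(loss). The following is a minimal sketch that checks this, assuming `eval_loss` is a mean per-token cross-entropy in nats; the source does not state how either number was computed, so treat the relationship as an observation about the reported values rather than a description of the evaluation script.

```python
import math

# Values copied from the Evaluation_Results JSON in this commit.
eval_loss = 0.628613162081992
reported_perplexity = 1.8750084425057905

# Assumption: eval_loss is a mean cross-entropy in nats, so that
# perplexity = exp(eval_loss). The commit itself does not confirm this.
perplexity = math.exp(eval_loss)

# Compare with a relative tolerance rather than exact float equality.
matches = math.isclose(perplexity, reported_perplexity, rel_tol=1e-6)
print(f"recomputed perplexity: {perplexity:.6f}, matches reported: {matches}")
```

A check like this is a cheap way to confirm that the two fields were produced by the same run before comparing results across files.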

Granite3.2-2B-FP16-lora-FP16-Evaluation_Results.json

ADDED

@@ -0,0 +1,34 @@
+{
+    "eval_loss:": 0.628613162081992,
+    "perplexity:": 1.8750084425057905,
+    "performance_metrics:": {
+        "accuracy:": 0.9986648865153538,
+        "precision:": 1.0,
+        "recall:": 1.0,
+        "f1:": 1.0,
+        "bleu:": 0.9576095377330995,
+        "rouge:": {
+            "rouge1": 0.9765448807677461,
+            "rouge2": 0.9763919443226166,
+            "rougeL": 0.9765410442347443
+        },
+        "semantic_similarity_avg:": 0.9966857433319092
+    },
+    "mauve:": 0.8804768654231001,
+    "inference_performance:": {
+        "min_latency_ms": 67.85345077514648,
+        "max_latency_ms": 4228.219270706177,
+        "lower_quartile_ms": 70.30397653579712,
+        "median_latency_ms": 74.25427436828613,
+        "upper_quartile_ms": 2400.713324546814,
+        "avg_latency_ms": 999.433975830893,
+        "min_memory_gb": 0.13478469848632812,
+        "max_memory_gb": 0.13478469848632812,
+        "lower_quartile_gb": 0.13478469848632812,
+        "median_memory_gb": 0.13478469848632812,
+        "upper_quartile_gb": 0.13478469848632812,
+        "avg_memory_gb": 0.13478469848632812,
+        "model_load_memory_gb": 7.857240676879883,
+        "avg_inference_memory_gb": 0.13478469848632812
+    }
+}
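Several keys in this file end in a literal colon (for example `"accuracy:"` and `"inference_performance:"`), so a lookup such as `results["performance_metrics"]` would raise `KeyError`. The sketch below shows one way a consumer might normalize the keys after loading. The helper name `strip_key_colons` is hypothetical, and it assumes the trailing colons are unintended; the embedded JSON is a small excerpt of the values above, not the full file.

```python
import json


def strip_key_colons(node):
    """Recursively drop trailing ':' characters from every dict key.

    Hypothetical helper, assuming the colons in the key names are
    unintended. Non-dict, non-list values are returned unchanged.
    """
    if isinstance(node, dict):
        return {key.rstrip(":"): strip_key_colons(value) for key, value in node.items()}
    if isinstance(node, list):
        return [strip_key_colons(item) for item in node]
    return node


# Excerpt of the Evaluation_Results JSON from this commit.
raw = json.loads("""
{
    "eval_loss:": 0.628613162081992,
    "performance_metrics:": {
        "accuracy:": 0.9986648865153538,
        "rouge:": {"rouge1": 0.9765448807677461}
    },
    "inference_performance:": {
        "median_latency_ms": 74.25427436828613,
        "avg_latency_ms": 999.433975830893
    }
}
""")

clean = strip_key_colons(raw)
print(sorted(clean))
print(clean["performance_metrics"]["accuracy"])
```

Normalizing on read leaves the committed file untouched, which keeps it comparable with other result files that use the same key names.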

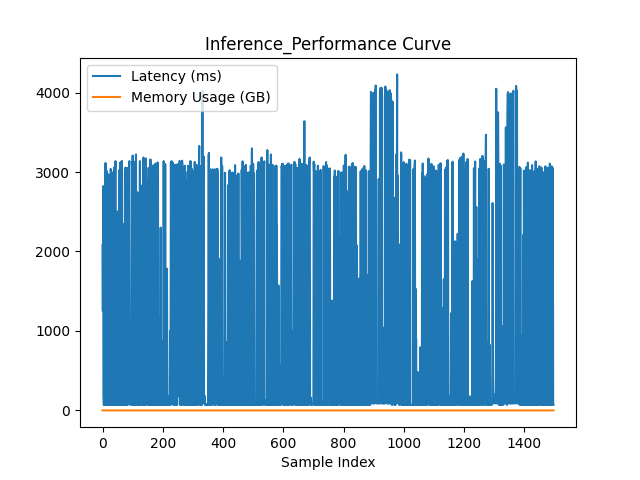

Granite3.2-2B-FP16-lora-FP16-Inference_Curve.png

ADDED


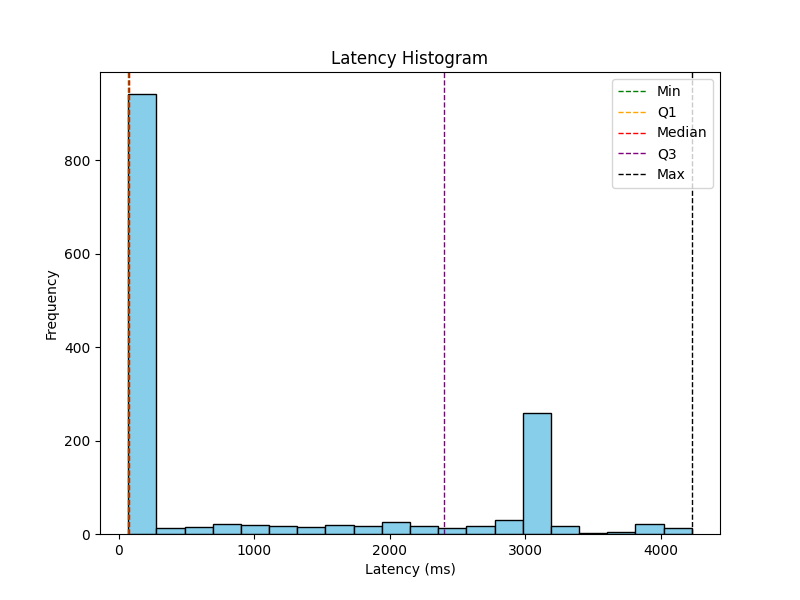

Granite3.2-2B-FP16-lora-FP16-Latency_Histogram.png

ADDED


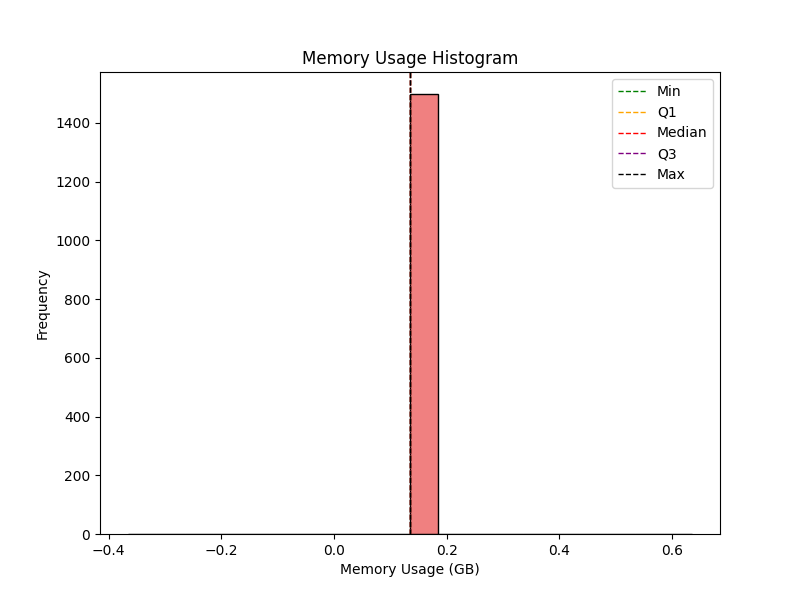

Granite3.2-2B-FP16-lora-FP16-Memory_Histogram.png

ADDED


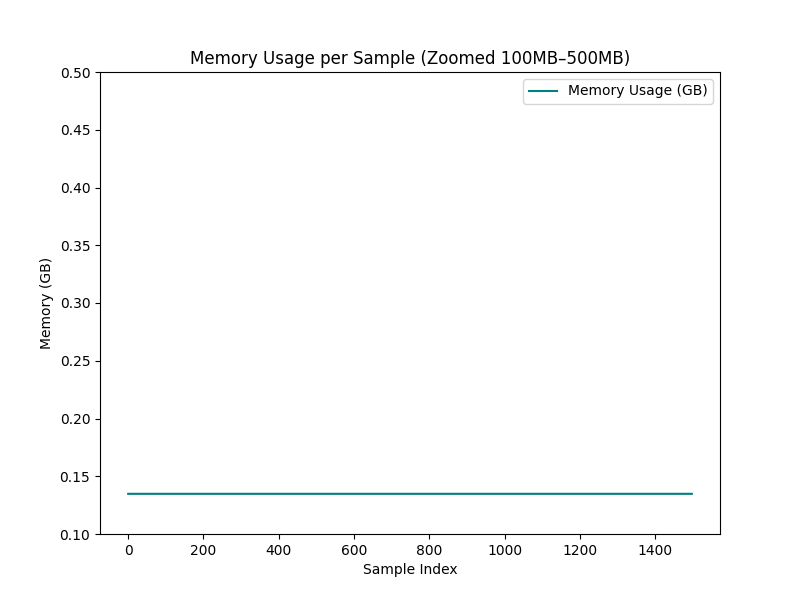

Granite3.2-2B-FP16-lora-FP16-Memory_Usage_Curve.png

ADDED
