Commit 1b458cf

Parent(s): 2576a37

Update README.md

README.md

CHANGED

|

@@ -37,6 +37,8 @@ tags:

|

|

| 37 |

# CausalLM 14B - Fully Compatible with Meta LLaMA 2

|

| 38 |

Load the model with the transformers library; no remote/external code is required. Use AutoModelForCausalLM and AutoTokenizer (or manually specify LlamaForCausalLM for the LM and GPT2Tokenizer for the tokenizer). Model quantization is fully compatible with GGUF (llama.cpp), GPTQ, and AWQ.

|

| 39 |

|

| 40 |

# Recent Updates: [DPO-α Version](https://huggingface.co/CausalLM/14B-DPO-alpha) outperforms Zephyr-β in MT-Bench

|

| 41 |

|

| 42 |

# Friendly reminder: If your VRAM is insufficient, you should use the 7B model instead of the quantized version.

|

|

@@ -124,9 +126,16 @@ We are currently unable to produce accurate benchmark templates for non-QA tasks

|

|

| 124 |

|

| 125 |

*The JCommonsenseQA benchmark result is very close to [Japanese Stable LM Gamma 7B (83.47)](https://github.com/Stability-AI/lm-evaluation-harness/tree/jp-stable), the current SOTA Japanese LM, even though our model was not trained on a particularly large amount of Japanese text. This seems to reflect the cross-language transferability of metalinguistics.*

|

| 126 |

|

| 127 |

# Causal Language Model 14B - Fully Compatible with Meta LLaMA 2

|

| 128 |

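Load the model with the transformers library; no remote/external code is required. Use AutoModelForCausalLM and AutoTokenizer (or manually specify LlamaForCausalLM for the LM and GPT2Tokenizer for the tokenizer). Model quantization is fully compatible with GGUF (llama.cpp), GPTQ, and AWQ.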

|

| 129 |

|

| 130 |

# Recent Updates: [DPO-α Version](https://huggingface.co/CausalLM/14B-DPO-alpha) outperforms Zephyr-β in MT-Bench

|

| 131 |

|

| 132 |

# Friendly reminder: If your VRAM is insufficient, you should use the 7B model instead of the quantized version.

|

|

@@ -212,3 +221,8 @@ STEM accuracy: 66.71

|

|

| 212 |

|jcommonsenseqa-1.1-0.6| 1.1|acc |0.8213|± |0.0115|

|

| 213 |

|

| 214 |

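*The JCommonsenseQA benchmark result is very close to [Japanese Stable LM Gamma 7B (83.47)](https://github.com/Stability-AI/lm-evaluation-harness/tree/jp-stable), the current SOTA Japanese LM, even though our model was not trained on a particularly large amount of Japanese text. This seems to reflect the cross-language transferability of metalinguistics.*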

|

| 37 |

# CausalLM 14B - Fully Compatible with Meta LLaMA 2

|

| 38 |

Load the model with the transformers library; no remote/external code is required. Use AutoModelForCausalLM and AutoTokenizer (or manually specify LlamaForCausalLM for the LM and GPT2Tokenizer for the tokenizer). Model quantization is fully compatible with GGUF (llama.cpp), GPTQ, and AWQ.
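
The loading path described above can be sketched roughly as follows. This is a minimal illustration, not the authors' own snippet: the repo id `CausalLM/14B` is an assumption (the README excerpt only links the DPO variant), and the helper names `load_causallm` and `generate_reply` are hypothetical.

```python
# Assumption: "CausalLM/14B" is the Hub repo id; the README excerpt does not state it.
MODEL_ID = "CausalLM/14B"


def load_causallm(model_id: str = MODEL_ID):
    """Load tokenizer and model using only classes built into transformers."""
    # Imported lazily so that defining this function does not require transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # trust_remote_code=False is the default; it is spelled out here to show
    # that no custom modeling code from the repo is executed.
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=False)
    model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=False)
    return tokenizer, model


def generate_reply(tokenizer, model, prompt: str, max_new_tokens: int = 64) -> str:
    """Generate a continuation for `prompt` and decode it back to text."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `tokenizer, model = load_causallm()` and then `generate_reply(tokenizer, model, "Hello")` would download the weights on first use. For the manual route the README mentions, `LlamaForCausalLM` and `GPT2Tokenizer` (both shipped with transformers) could presumably be swapped in for the two `Auto*` classes.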

|

| 39 |

|

| 40 |

+

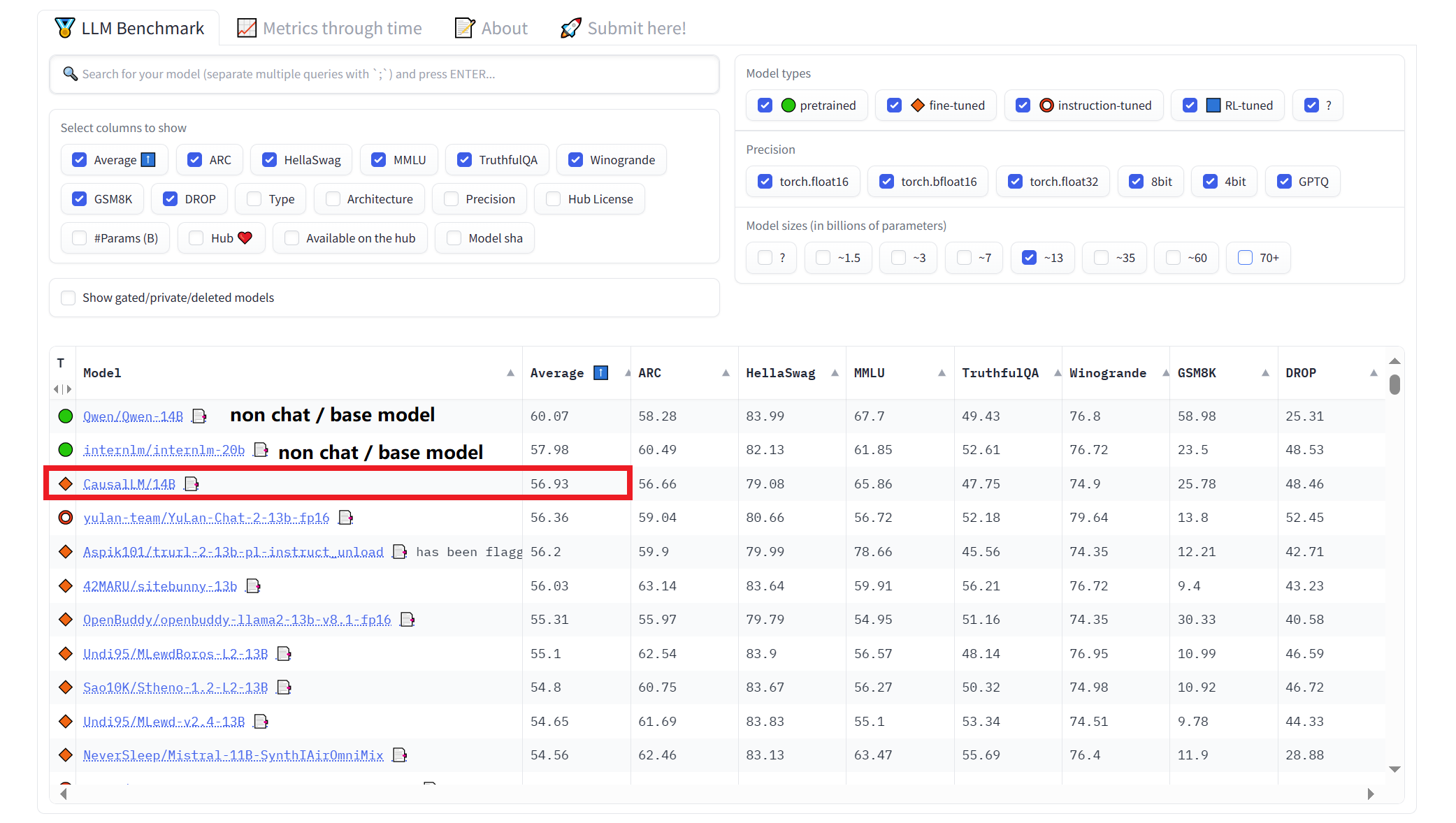

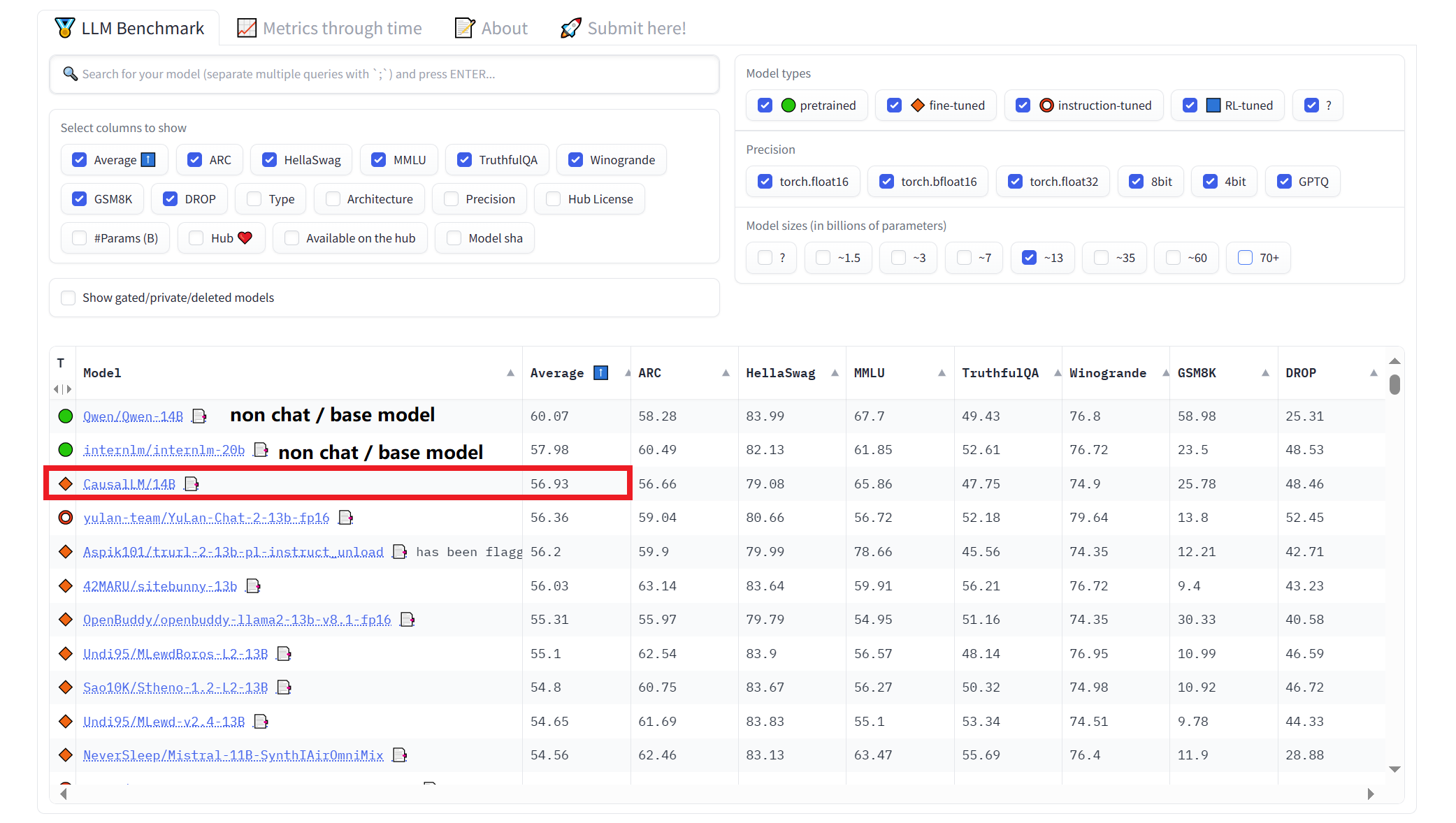

**News: SOTA chat model of its size on 🤗 Open LLM Leaderboard**

|

| 41 |

+

|

| 42 |

# Recent Updates: [DPO-α Version](https://huggingface.co/CausalLM/14B-DPO-alpha) outperforms Zephyr-β in MT-Bench

|

| 43 |

|

| 44 |

# Friendly reminder: If your VRAM is insufficient, you should use the 7B model instead of the quantized version.

|

|

|

|

| 126 |

|

| 127 |

*The JCommonsenseQA benchmark result is very close to [Japanese Stable LM Gamma 7B (83.47)](https://github.com/Stability-AI/lm-evaluation-harness/tree/jp-stable), the current SOTA Japanese LM, even though our model was not trained on a particularly large amount of Japanese text. This seems to reflect the cross-language transferability of metalinguistics.*

|

| 128 |

|

| 129 |

+

## 🤗 Open LLM Leaderboard

|

| 130 |

+

SOTA chat model of its size on 🤗 Open LLM Leaderboard.

|

| 131 |

+

|

| 132 |

+

|

| 133 |

+

|

| 134 |

# Causal Language Model 14B - Fully Compatible with Meta LLaMA 2

|

| 135 |

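Load the model with the transformers library; no remote/external code is required. Use AutoModelForCausalLM and AutoTokenizer (or manually specify LlamaForCausalLM for the LM and GPT2Tokenizer for the tokenizer). Model quantization is fully compatible with GGUF (llama.cpp), GPTQ, and AWQ.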

|

| 136 |

|

| 137 |

+

# News: Highest-scoring chat model of its size on the 🤗 Open LLM Leaderboard

|

| 138 |

+

|

| 139 |

# Recent Updates: [DPO-α Version](https://huggingface.co/CausalLM/14B-DPO-alpha) outperforms Zephyr-β in MT-Bench

|

| 140 |

|

| 141 |

# Friendly reminder: If your VRAM is insufficient, you should use the 7B model instead of the quantized version.

|

|

|

|

| 221 |

|jcommonsenseqa-1.1-0.6| 1.1|acc |0.8213|± |0.0115|

|

| 222 |

|

| 223 |

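*The JCommonsenseQA benchmark result is very close to [Japanese Stable LM Gamma 7B (83.47)](https://github.com/Stability-AI/lm-evaluation-harness/tree/jp-stable), the current SOTA Japanese LM, even though our model was not trained on a particularly large amount of Japanese text. This seems to reflect the cross-language transferability of metalinguistics.*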

|

| 224 |

+

|

| 225 |

+

## 🤗 Open LLM Leaderboard

|

| 226 |

+

Highest-scoring chat model of its size on the 🤗 Open LLM Leaderboard.

|

| 227 |

+

|

| 228 |

+

|